PyChatAI: Enhancing Python Programming Skills—An Empirical Study of a Smart Learning System

Abstract

1. Introduction

2. Motivation and Research Objectives

2.1. Research Objectives

- Evaluate the effectiveness of PyChatAI in improving programming skills among novice learners.

- Identify factors influencing students’ acceptance of smart learning systems using the Technology Acceptance Model (TAM3).

- Explore the role of AI-driven feedback and real-time support in facilitating personalised learning.

- Assess the potential of culturally adapted AI tools in supporting digital education goals, particularly in Saudi Arabia.

2.2. Research Questions

- Does PyChatAI significantly improve students’ programming skills across key dimensions (e.g., code writing and problem-solving)?

- How do students perceive the usefulness and ease of use of PyChatAI?

- What is the impact of the bilingual and proactive features of PyChatAI on user engagement and learning outcomes?

- Can PyChatAI be effectively integrated into existing programming courses in a way that aligns with educational objectives in Saudi Arabia?

3. Literature Review

3.1. Existing Research and Tools

- Pyo focuses on introductory Python programming. It offers real-time chatbot support to explain syntax, concepts, and debugging strategies [22]. While useful, it lacks deep personalisation and automated code assessment.

- Python-Bot and Revision-Bot support programming practice, revision, and exam preparation. These tools provide interactive feedback and automatic assessments of code quality, syntax, and logic. They also integrate with Learning Management Systems (LMSs) to track student progress [21,33]. However, their reliance on predefined question banks limits their adaptability to individual learning trajectories.

- KIAAA serves as an AI-driven intelligent tutoring system (ITS) with a focus on automation-related programming tasks. It offers customised learning paths and supports 3D simulation-based interactive execution [28].

- VoiceBots employ voice-based technology to support multimedia programming education, particularly in HTML and CSS [29]. While they enhance accessibility and interactivity, they lack deep integration with core programming instruction.

3.2. Broader Educational Context

3.3. Theoretical Frameworks

3.4. Gaps in Research

- Lack of Proactive Engagement: Most existing tools—including ChatGPT and Python-Bot—are reactive in nature, responding only when prompted by the user. They do not proactively detect or intervene when students encounter difficulties during coding tasks.

- Limited Adaptability: Tools such as ChatGPT provide static responses that do not adjust to a learner’s skill level or learning trajectory. While systems such as KIAAA offer dynamic difficulty adjustment, they target automation-related programming rather than general introductory programming education [28].

4. Methodology

4.1. Introduction to Methodology

4.2. Participants and Setting

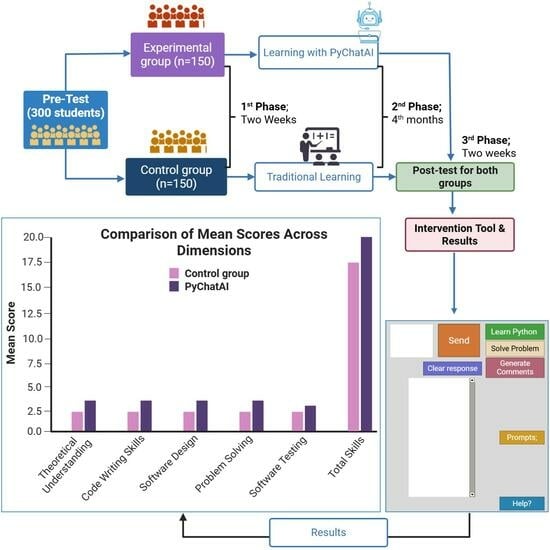

4.3. Experimental Design and Evaluation

4.3.1. The Structure of the Solomon Four-Group Design

4.3.2. Explanation of Design Components

- Pre-test: Measures participants’ baseline programming knowledge and skills prior to the intervention. This establishes a reference point and helps assess whether initial proficiency influences the effectiveness of PyChatAI.

- Intervention (use of PyChatAI): Represents the experimental condition, in which students interacted with PyChatAI during programming sessions to receive real-time assistance, debugging support, and contextual code feedback.

- Post-test: Administered after the intervention period to evaluate changes in programming performance, enabling a comparative analysis across all four groups.

4.3.3. Purpose of Research Design

- Effect of the Intervention: By comparing outcomes between the experimental groups (who used PyChatAI) and the control groups (who did not), this study quantitatively assessed the direct impact of PyChatAI on students’ computer programming skills. This comparison provides evidence on the efficacy of the application in enhancing programming competencies.

- Effect of Pre-testing: Including both pre-tested and non-pre-tested groups controls for potential testing effects on post-test performance. This allowed the study to examine whether exposure to the pre-test itself contributed to improvements in computer programming skills, independent of the intervention.
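To make these two comparisons concrete, the following Python sketch contrasts post-test cell means across the four groups of the Solomon design (two control and two experimental groups, one of each pair pre-tested). The group names, the `solomon_effects` function, and the toy scores are hypothetical, and the calculation is a descriptive contrast of means, not the statistical procedure reported in this study; a full analysis would typically use a 2 × 2 ANOVA with significance tests.

```python
from __future__ import annotations

from statistics import mean

# Factor levels per group, following the Solomon four-group layout:
# (received the pre-test?, used PyChatAI?)
GROUPS = {
    "control_1": (False, False),
    "control_2": (True, False),
    "experimental_1": (True, True),
    "experimental_2": (False, True),
}


def solomon_effects(post_scores: dict[str, list[float]]) -> dict[str, float]:
    """Contrast post-test cell means across the 2 x 2 Solomon layout.

    Descriptive sketch on cell means only; no significance testing.
    """
    cell = {group: mean(post_scores[group]) for group in GROUPS}

    def level_mean(factor: int, level: bool) -> float:
        # Average the cell means of every group at the given factor level.
        return mean(cell[g] for g, levels in GROUPS.items() if levels[factor] == level)

    return {
        # Intervention effect: groups that used PyChatAI vs. groups that did not.
        "intervention": level_mean(1, True) - level_mean(1, False),
        # Testing effect: pre-tested groups vs. non-pre-tested groups.
        "pretest": level_mean(0, True) - level_mean(0, False),
        # Interaction: does the intervention effect change when a pre-test is given?
        "interaction": (cell["experimental_1"] - cell["control_2"])
        - (cell["experimental_2"] - cell["control_1"]),
    }


if __name__ == "__main__":
    # Hypothetical post-test scores, for illustration only.
    scores = {
        "control_1": [10, 12],
        "control_2": [12, 12],
        "experimental_1": [20, 22],
        "experimental_2": [19, 21],
    }
    print(solomon_effects(scores))
```

A non-zero interaction term would indicate that taking the pre-test changed how much students benefited from PyChatAI, which is the sensitisation effect the Solomon design is meant to expose.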

4.3.4. Research Process Flowchart

4.4. System Development and Technical Implementation

- Programming Language: Python 3.6+.

- User Interface (UI): Developed using Tkinter for graphical interface design.

- AI Integration: Utilises the OpenAI API to generate context-specific programming responses.

- System Requirements:

  - Operating Systems: Windows, macOS, or Linux.

  - Dependencies: Tkinter, the OpenAI API, and Pyperclip for clipboard operations.
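The section does not show how PyChatAI's requests to the OpenAI API are assembled. As a minimal sketch, assuming the chat-style message format (a list of `role`/`content` dictionaries) used by OpenAI's chat completion endpoints, a Python-only system prompt and a language instruction could be combined with the student's question as follows. `SYSTEM_TEMPLATE`, `LANGUAGE_NAMES`, and `build_messages` are hypothetical names, and the prompt text is illustrative rather than PyChatAI's actual template.

```python
from typing import Dict, List, Optional

# Hypothetical system prompt; PyChatAI's actual templates are not published here.
SYSTEM_TEMPLATE = (
    "You are a tutor for an introductory Python programming course. "
    "Answer only questions about Python programming. "
    "Explain concepts step by step and prefer hints over complete solutions. "
    "Reply in {language}."
)

# Language codes supported by the bilingual interface.
LANGUAGE_NAMES = {"en": "English", "ar": "Arabic"}


def build_messages(
    question: str,
    language: str = "en",
    history: Optional[List[Dict[str, str]]] = None,
) -> List[Dict[str, str]]:
    """Assemble a chat-style message list for one student question."""
    if language not in LANGUAGE_NAMES:
        raise ValueError(f"unsupported language code: {language!r}")
    messages = [
        {"role": "system", "content": SYSTEM_TEMPLATE.format(language=LANGUAGE_NAMES[language])}
    ]
    # Earlier turns, already in role/content form, give the model conversational context.
    messages.extend(history or [])
    messages.append({"role": "user", "content": question.strip()})
    return messages


if __name__ == "__main__":
    for message in build_messages("What does range(3) return?", language="ar"):
        print(message["role"], "->", message["content"])
```

With the official `openai` Python package (version 1.x), the resulting list could be passed as `client.chat.completions.create(model=..., messages=build_messages(question, "ar"))`; the model name is deliberately left unspecified. Keeping prompt assembly in a pure function makes it testable without network access.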

4.4.1. Technical Contribution of PyChatAI

- Custom Prompt Engineering: PyChatAI uses domain-optimised prompt templates that scaffold learning based on student inputs; these templates are designed to reduce hallucinations and keep responses pedagogically aligned with the Python course content.

- No Custom Dataset Training: The system does not train its own models; it uses pre-trained OpenAI models. Its behaviour is instead tailored at runtime through controlled prompt engineering and contextual filtering, without modifying the underlying models.

- Adaptive Layer Built on OpenAI API: PyChatAI adds a real-time logic layer that monitors engagement patterns (e.g., frequent syntax errors and repeated queries) and offers tailored scaffolding. This adaptivity goes beyond simple request–response models.

- Arabic/English Multilingual Interface: The chatbot dynamically detects the input language and adjusts UI elements, error messages, and responses accordingly.

- Debugging Assistant: Analyses common logic and syntax errors using pattern matching and proactively suggests improvements based on the detected coding issues.
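The adaptive layer and debugging assistant are described only at a high level. The sketch below illustrates one way such monitoring could work, assuming that syntax errors are detected with Python's built-in `ast.parse` and that the scaffolding level escalates when the same error recurs. `EngagementMonitor`, `HINT_LEVELS`, and the escalation threshold are hypothetical; the sketch covers syntax errors only, since logic errors cannot be found by parsing alone.

```python
import ast
from collections import Counter
from typing import Optional

# Hypothetical scaffolding ladder, from lightest to heaviest support.
HINT_LEVELS = ["nudge", "targeted hint", "worked example"]


class EngagementMonitor:
    """Track one student's repeated syntax errors and choose a scaffolding level."""

    def __init__(self, escalate_after: int = 2) -> None:
        # Occurrences of the same error allowed at each level before escalating.
        self.escalate_after = escalate_after
        self.error_counts: Counter = Counter()

    def check(self, source: str) -> Optional[dict]:
        """Return feedback for the first syntax error in `source`, or None if it parses."""
        try:
            ast.parse(source)
        except SyntaxError as err:
            # The parser message (e.g. "expected ':'") serves as a crude error category;
            # its wording varies between Python versions.
            kind = err.msg
            self.error_counts[kind] += 1
            repeats = self.error_counts[kind]
            # Every `escalate_after` repeats moves one step up the ladder, capped at the top.
            level = min((repeats - 1) // self.escalate_after, len(HINT_LEVELS) - 1)
            return {
                "line": err.lineno,
                "message": kind,
                "repeats": repeats,
                "hint_level": HINT_LEVELS[level],
            }
        return None


if __name__ == "__main__":
    monitor = EngagementMonitor()
    for attempt in ["if x\n    pass\n", "if x\n    pass\n", "if x\n    pass\n", "x = 1\n"]:
        print(monitor.check(attempt))
```

Keying the counter on the parser's message is a simplification; because `SyntaxError` wording differs between Python versions, a production system would more likely map errors to its own categories.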

4.4.2. Innovation Compared to Existing Tools

4.4.3. Pedagogical Framework

4.5. PyChatAI System Features and Development Phases

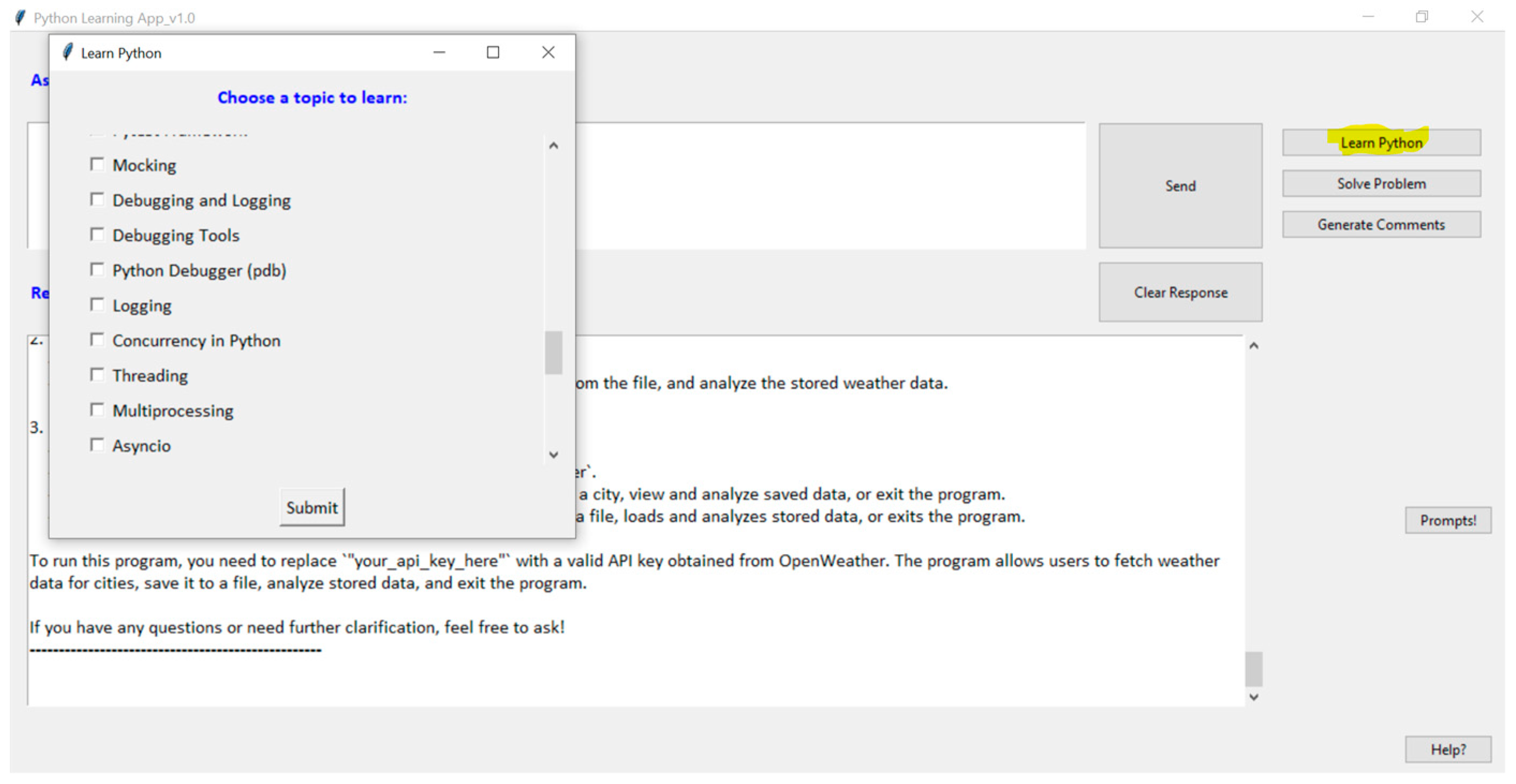

- Phase 1: Core Functionalities

- Live Question Assistance: Users can submit Python-related questions and receive instant responses. Contextual filters ensure all responses remain specific to Python programming.

- Multilingual Support: The system supports both English and Arabic, making it accessible to a diverse learner base. The interface and chatbot dynamically adjust to the selected language.

- Phase 2: Enhanced Features

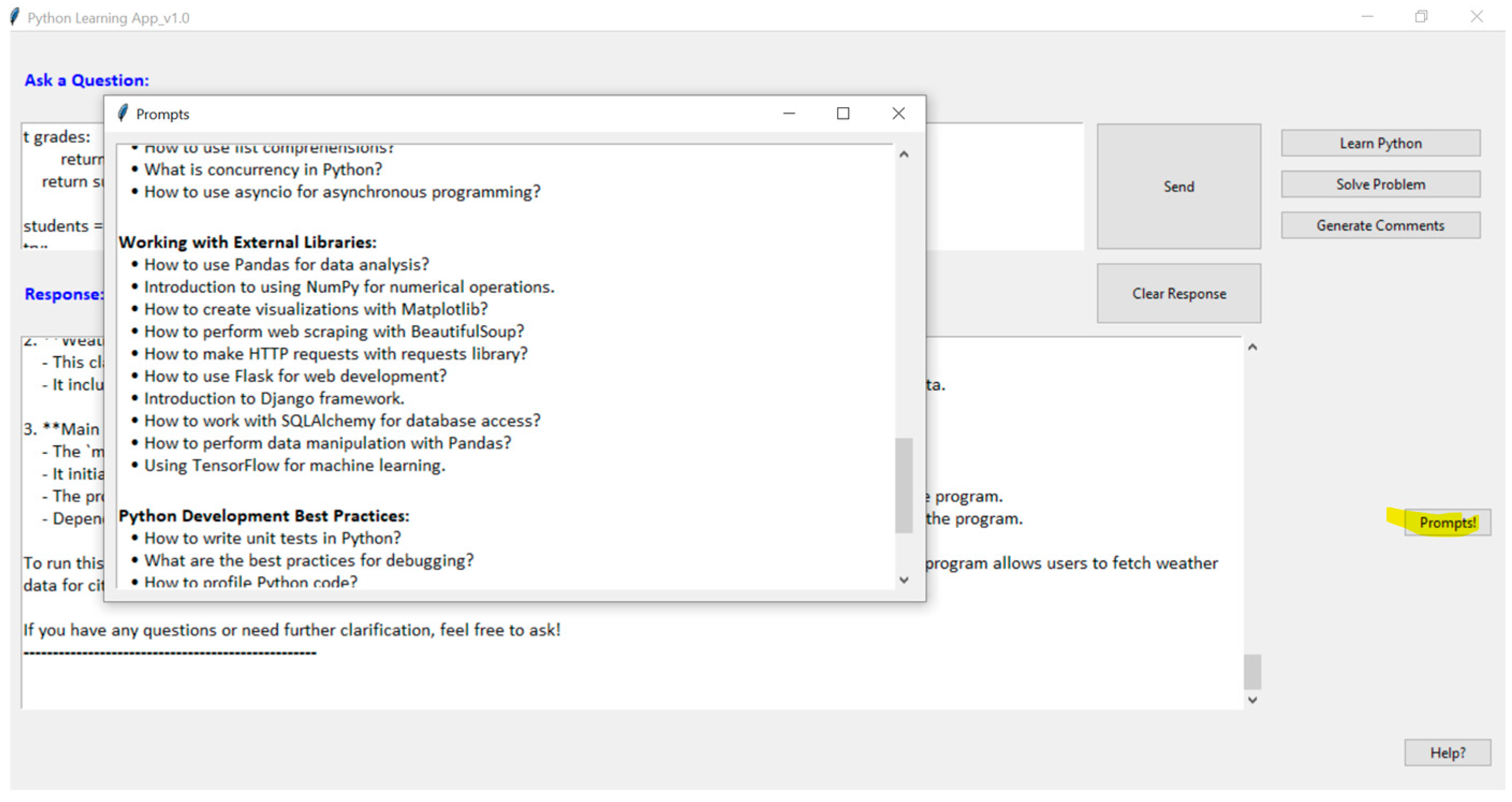

- Predefined Learning Prompts: interactive prompts guide users through fundamental Python concepts, such as loops, conditionals, and functions (Figure 5).

- Problem Description Form: students describe their coding challenges, and PyChatAI generates a basic logic framework to aid problem-solving.

- Interactive Debugging Assistance: the tool detects syntax errors and provides corrective suggestions in real-time (Figure 3).

- Code Analysis and Feedback: students can submit code to receive a fully commented version, helping them understand coding best practices and improve code readability (Figure 4).
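For the Multilingual Support feature, one simple approach (an assumption, not PyChatAI's documented method) is to count Arabic-script and Latin letters in the user's input and switch the interface strings and text direction accordingly. The Unicode ranges, the `UI_STRINGS` entries, and the function names below are hypothetical.

```python
import re

# Arabic (U+0600-U+06FF) and Arabic Supplement (U+0750-U+077F) blocks vs. basic Latin letters.
ARABIC_CHARS = re.compile(r"[\u0600-\u06FF\u0750-\u077F]")
LATIN_LETTERS = re.compile(r"[A-Za-z]")

# Hypothetical interface strings for each supported language.
UI_STRINGS = {
    "en": {"send": "Send", "placeholder": "Ask a Python question..."},
    "ar": {"send": "إرسال", "placeholder": "اطرح سؤالاً عن بايثون..."},
}


def detect_language(text: str) -> str:
    """Return "ar" when Arabic-script characters outnumber Latin letters, else "en"."""
    arabic = len(ARABIC_CHARS.findall(text))
    latin = len(LATIN_LETTERS.findall(text))
    return "ar" if arabic > latin else "en"


def ui_for(text: str) -> dict:
    """Choose interface strings and text direction for the detected language."""
    lang = detect_language(text)
    return {"lang": lang, "direction": "rtl" if lang == "ar" else "ltr", **UI_STRINGS[lang]}


if __name__ == "__main__":
    print(ui_for("How do I write a for loop?"))
    print(ui_for("كيف أكتب حلقة for؟"))
```

Because questions often mix Arabic prose with Latin-script Python identifiers, a letter count can misclassify code-heavy input; letting the user override the detected language, as the selectable interface language already allows, avoids this.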

4.6. Data Collection and Analysis

- Quantitative Data Collection:

  - Pre-tests and Post-tests: Programming assessments administered before and after the intervention to measure changes in coding skills and problem-solving abilities.

  - Technology Acceptance Model (TAM3) Questionnaire: To investigate factors influencing student acceptance of PyChatAI, this study incorporates a TAM3-based questionnaire, which measures key constructs such as perceived usefulness, perceived ease of use, and behavioural intention to continue using the application.

- Qualitative Data Collection:

  - Faculty Interviews: To gain a comprehensive understanding of the educational value of PyChatAI, faculty perspectives were gathered through structured interviews. These interviews focused on the effectiveness of PyChatAI in enhancing programming instruction, its integration into the curriculum, and potential areas for improvement.
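TAM3 constructs are usually measured with several Likert-scale items each, and a respondent's construct score is the mean of those items. The sketch below assumes a 1 to 5 scale and a hypothetical item-to-construct mapping (`PU1`, `PEOU1`, and so on); the study's actual items and scale are not listed in this section.

```python
from statistics import mean

# Hypothetical item-to-construct mapping for a TAM3-style questionnaire.
CONSTRUCTS = {
    "perceived_usefulness": ["PU1", "PU2", "PU3"],
    "perceived_ease_of_use": ["PEOU1", "PEOU2", "PEOU3"],
    "behavioural_intention": ["BI1", "BI2"],
}


def construct_scores(response: dict, scale: tuple = (1, 5)) -> dict:
    """Average one respondent's Likert items into a score per construct."""
    low, high = scale
    scores = {}
    for construct, items in CONSTRUCTS.items():
        values = [response[item] for item in items]
        # Reject answers outside the assumed scale rather than silently averaging them.
        if any(not low <= value <= high for value in values):
            raise ValueError(f"{construct}: answer outside the {low}-{high} scale")
        scores[construct] = mean(values)
    return scores


def cohort_means(responses: list) -> dict:
    """Mean construct score across all respondents."""
    per_respondent = [construct_scores(r) for r in responses]
    return {c: mean(s[c] for s in per_respondent) for c in CONSTRUCTS}


if __name__ == "__main__":
    demo = {"PU1": 5, "PU2": 4, "PU3": 3, "PEOU1": 4, "PEOU2": 4, "PEOU3": 4, "BI1": 5, "BI2": 5}
    print(construct_scores(demo))
```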

5. Results

5.1. Pre-Test: Equivalence of Study Groups

- For theoretical understanding, the control group (M = 2.17, SD = 1.14) and the experimental group (M = 2.26, SD = 1.56) showed no significant difference, t(298) = 0.593, p = 0.554, indicating comparable baseline knowledge between the two groups.

- Similarly, for code-writing skills, the control group (M = 1.98, SD = 1.13) and the experimental group (M = 1.82, SD = 1.08) did not differ significantly, t(298) = 1.252, p = 0.212, suggesting similar starting levels for this skill.

- In software design, no significant difference was observed between the control group (M = 1.77, SD = 1.13) and the experimental group (M = 1.56, SD = 1.21), t(298) = 1.534, p = 0.126, consistent with similar baseline levels before the intervention.

- For problem-solving skills, both groups had identical mean scores (M = 1.51; SD = 1.02 for the control group and 1.28 for the experimental group), with no significant difference, t(298) = 0.001, p = 0.999, further supporting the comparability of the groups.

- In software testing and debugging, the experimental group scored slightly higher (M = 2.05, SD = 1.42) than the control group (M = 1.83, SD = 1.11), but this difference was not statistically significant, t(298) = 1.539, p = 0.062.

- Finally, for total programming skills, the control group (M = 9.25, SD = 2.79) and the experimental group (M = 9.20, SD = 3.85) showed no significant difference, t(298) = 0.12, p = 0.904, consistent with comparable overall baseline proficiency across all measured skills.
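The pre-test comparisons are independent-samples t-tests with df = 298, which matches Student's pooled-variance test for two groups of 150. The sketch below recomputes t from the summary statistics in the pre-test table; it is a reader's check, not the authors' analysis script, and `pooled_t_test` is a hypothetical name. It approximates the two-tailed p-value with the normal distribution (Python's `statistics.NormalDist`), which is close to the exact t-distribution value at this many degrees of freedom.

```python
from math import sqrt
from statistics import NormalDist


def pooled_t_test(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t-test from summary statistics.

    Returns (t, df, p). The two-tailed p uses a normal approximation, which is
    close to the exact t-distribution value when df is large (here, 298).
    """
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m1 - m2) / se
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, p


# Code-writing pre-test row: control (M = 1.98, SD = 1.13) vs. experimental (M = 1.82, SD = 1.08).
t, df, p = pooled_t_test(1.98, 1.13, 150, 1.82, 1.08, 150)
print(f"t({df}) = {t:.3f}, p ~ {p:.3f}")
```

For the code-writing row this gives t ≈ 1.25 and p ≈ 0.21, in line with the reported t(298) = 1.252 and p = 0.212; small discrepancies reflect rounding of the published means and standard deviations.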

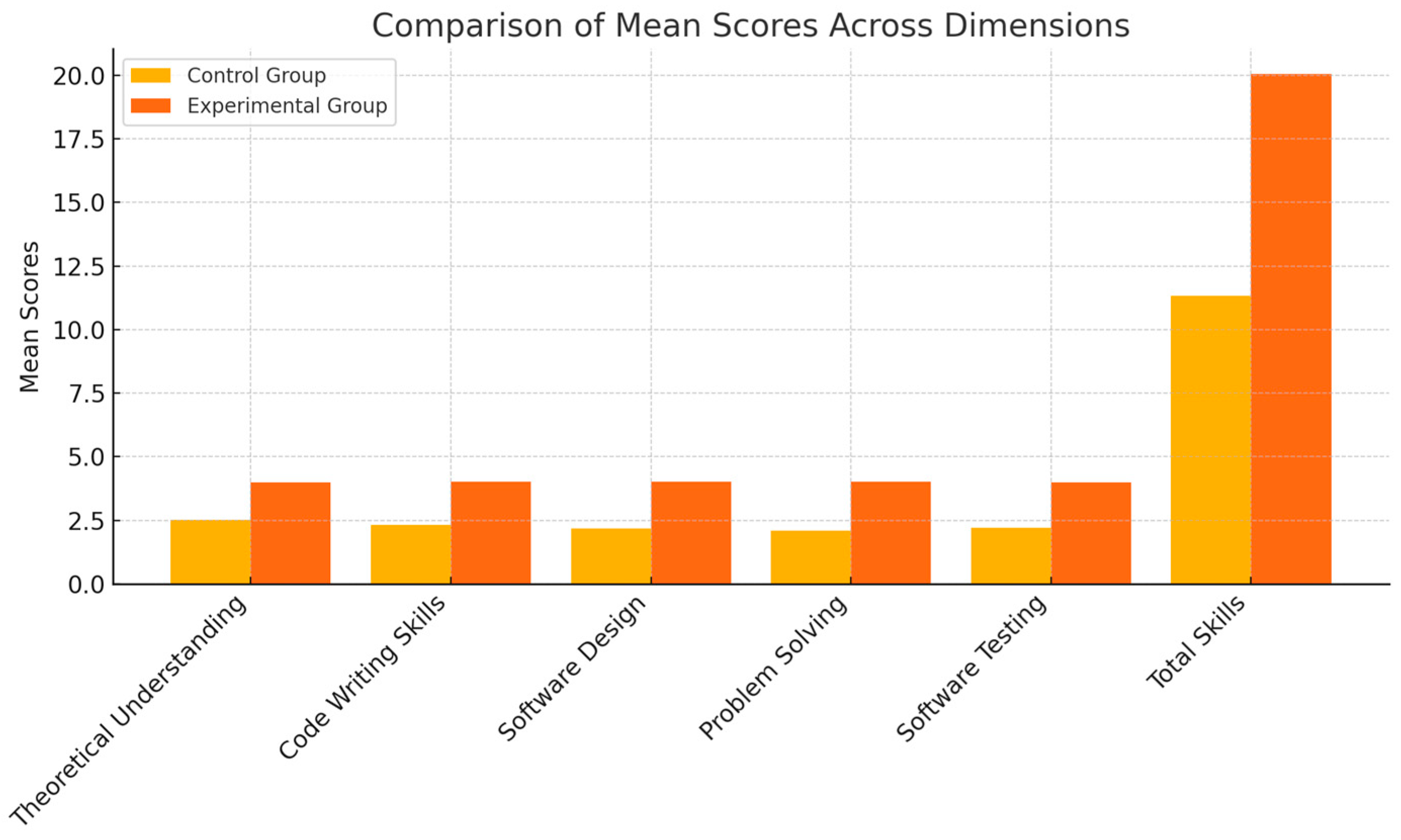

5.2. Post-Test Results: Impact of PyChatAI

- In theoretical understanding, the experimental group (M = 3.99, SD = 1.05) scored higher than the control group (M = 2.51, SD = 1.01), t(298) = 12.402, p < 0.001, indicating a significant improvement due to the intervention.

- In code-writing skills, the experimental group (M = 4.02, SD = 1.01) scored substantially higher than the control group (M = 2.31, SD = 1.01), t(298) = 14.719, p < 0.001.

- In software design, the experimental group (M = 4.02, SD = 1.17) outperformed the control group (M = 2.19, SD = 0.89), t(298) = 15.211, p < 0.001.

- In problem-solving, the experimental group (M = 4.03, SD = 1.21) significantly exceeded the control group (M = 2.09, SD = 0.99), t(298) = 15.096, p < 0.001.

- In software testing, the experimental group (M = 4.00, SD = 1.12) scored higher than the control group (M = 2.22, SD = 0.90), t(298) = 15.120, p < 0.001.

- The total programming skill score showed a marked improvement in the experimental group (M = 20.05, SD = 4.40) compared with the control group (M = 11.33, SD = 2.53), t(298) = 21.068, p < 0.001, indicating a significant overall effect of the PyChatAI application on programming skills.
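The t-values above establish statistical significance but not the size of the effect. A standard complement is Cohen's d, the mean difference divided by the pooled standard deviation. The sketch below computes it from the post-test table's summary statistics; `cohens_d` is a hypothetical helper, and d is not reported in this section.

```python
from math import sqrt


def cohens_d(mean_exp, sd_exp, n_exp, mean_ctl, sd_ctl, n_ctl):
    """Standardised mean difference using the pooled standard deviation."""
    pooled_sd = sqrt(
        ((n_exp - 1) * sd_exp ** 2 + (n_ctl - 1) * sd_ctl ** 2) / (n_exp + n_ctl - 2)
    )
    return (mean_exp - mean_ctl) / pooled_sd


# Post-test total-skills row: experimental vs. control (n = 150 each, as tabulated).
d_total = cohens_d(20.05, 4.40, 150, 11.33, 2.53, 150)
print(f"d = {d_total:.2f}")
```

For the total-skills row this gives d ≈ 2.43, a back-of-envelope figure from rounded table values rather than a result reported by the study.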

6. Discussion

6.1. Interpretation of Pre-Test Results

6.2. Impact of PyChatAI on Computer Programming Skills

6.3. Implications for Educational Practice

7. Conclusions

- Examine the system’s applicability across diverse learner populations and institutions.

- Test PyChatAI in other programming languages and advanced computing topics.

- Explore long-term retention and the sustained impact of AI-assisted learning.

- Investigate how integrating real-time performance analytics and reinforcement learning could further personalise the learning experience.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Alharthi, F. Challenges related to computer programming education in Saudi Arabia. Proc. Eng. 2024, 6, 37–44.

2. Alothman, M.; Robertson, J.; Michaelson, G. Computer usage and attitudes among Saudi Arabian undergraduate students. Comput. Educ. 2017, 110, 127–142.

3. Alanazi, A.; Li, A.; Soh, B. Effects on Saudi Female Student Learning Experiences in a Programming Subject Using Mobile Devices: An Empirical Study. Int. J. Interact. Mob. Technol. 2023, 17, 44–58.

4. Alasmari, O.A.; Singer, J.; Ada, M.B. Do current online coding tutorial systems address novice programmer difficulties? In Proceedings of the 15th International Conference on Education Technology and Computers, Barcelona, Spain, 26–28 September 2023; pp. 242–248.

5. Zhong, X.; Zhan, Z. An intelligent tutoring system for programming education based on informative tutoring feedback: System development, algorithm design, and empirical study. Interact. Technol. Smart Educ. 2024, ahead-of-print.

6. Abdulla, S.; Ismail, S.; Fawzy, Y.; Elhag, A. Using ChatGPT in Teaching Computer Programming and Studying Its Impact on Students Performance. Electron. J. E-Learn. 2024, 22, 66–81.

7. Mekthanavanh, V.; Meksavanh, B.; Bouddy, S. ChatGPT Enhances Programming Skills of Computer Engineering Students. Souphanouvong Univ. J. Multidiscip. Res. Dev. 2024, 10, 1–9.

8. Stone, I. Investigating the Use of ChatGPT to Support the Learning of Python Programming Among Upper Secondary School Students: A Design-Based Research Study. In Proceedings of the 2024 Conference on United Kingdom & Ireland Computing Education Research, Manchester, UK, 5–6 September 2024; p. 1.

9. Maguire, J.; Cutts, Q. Supporting the Computing Science Education Research Community with Rolling Reviews. In Proceedings of the United Kingdom & Ireland Computing Education Research Conference, Glasgow, UK, 3–4 September 2020; ACM: New York, NY, USA, 2020.

10. Van Der Stuyf, R.R. Scaffolding as a teaching strategy. Adolesc. Learn. Dev. 2002, 52, 5–18.

11. Yilmaz, R.; Yilmaz, F.G.K. The effect of generative artificial intelligence (AI)-based tool use on students’ computational thinking skills, programming self-efficacy and motivation. Comput. Educ. Artif. Intell. 2023, 4, 100147.

12. Rahman, M.; Watanobe, Y. ChatGPT for education and research: Opportunities, threats, and strategies. Appl. Sci. 2023, 13, 5783.

13. Silva, C.A.G.D.; Ramos, F.N.; De Moraes, R.V.; Santos, E.L.D. ChatGPT: Challenges and benefits in software programming for higher education. Sustainability 2024, 16, 1245.

14. Tick, A. Exploring ChatGPT’s Potential and Concerns in Higher Education. In Proceedings of the 2024 IEEE 22nd Jubilee International Symposium on Intelligent Systems and Informatics (SISY), Pula, Croatia, 19–21 September 2024.

15. Wieser, M.; Schöffmann, K.; Stefanics, D.; Bollin, A.; Pasterk, S. Investigating the role of ChatGPT in supporting text-based programming education for students and teachers. In Proceedings of the International Conference on Informatics in Schools: Situation, Evolution, and Perspectives, Lausanne, Switzerland, 23–25 October 2023; Springer Nature: Cham, Switzerland, 2023; pp. 40–53.

16. Ma, B.; Chen, L.; Konomi, S.I. Enhancing programming education with ChatGPT: A case study on student perceptions and interactions in a Python course. In Proceedings of the International Conference on Artificial Intelligence in Education, Recife, Brazil, 8–12 July 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 113–126.

17. Jalon, J.B.; Chua, G.A.; Torres, M.D.L. ChatGPT as a learning assistant: Its impact on students learning and experiences. Int. J. Educ. Math. Sci. Technol. 2024, 12, 1603–1619.

18. Akçapınar, G.; Sidan, E. AI chatbots in programming education: Guiding success or encouraging plagiarism. Discov. Artif. Intell. 2024, 4, 87.

19. Sandu, R.; Gide, E.; Elkhodr, M. The role and impact of ChatGPT in educational practices: Insights from an Australian higher education case study. Discov. Educ. 2024, 3, 71.

20. Haindl, P.; Weinberger, G. Does ChatGPT help novice programmers write better code? Results from static code analysis. IEEE Access 2024, 12, 114146–114156.

21. Okonkwo, C.W.; Ade-Ibijola, A. Python-bot: A chatbot for teaching Python programming. Eng. Lett. 2020, 29, 25.

22. Carreira, G.; Silva, L.; Mendes, A.J.; Oliveira, H.G. Pyo, a chatbot assistant for introductory programming students. In Proceedings of the 2022 International Symposium on Computers in Education (SIIE), Coimbra, Portugal, 17–19 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

23. Abolnejadian, M.; Alipour, S.; Taeb, K. Leveraging ChatGPT for adaptive learning through personalized prompt-based instruction: A CS1 education case study. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 1–8.

24. Popovici, M.-D. ChatGPT in the classroom. Exploring its potential and limitations in a functional programming course. Int. J. Hum. Comput. Interact. 2024, 40, 7743–7754.

25. Vukojičić, M.; Krstić, J. ChatGPT in programming education: ChatGPT as a programming assistant. InspirED Teach. Voice 2023, 2023, 7–13.

26. Xue, Y.; Chen, H.; Bai, G.R.; Tairas, R.; Huang, Y. Does ChatGPT help with introductory programming? An experiment of students using ChatGPT in CS1. In Proceedings of the 46th International Conference on Software Engineering: Software Engineering Education and Training, Lisbon, Portugal, 14–20 April 2024; pp. 331–341.

27. Güner, H.; Er, E.; Akçapinar, G.; Khalil, M. From chalkboards to AI-powered learning. Educ. Technol. Soc. 2024, 27, 386–404.

28. Eilermann, S.; Wehmeier, L.; Niggemann, O.; Deuter, A. KIAAA: An AI assistant for teaching programming in the field of automation. In Proceedings of the 2023 IEEE 21st International Conference on Industrial Informatics (INDIN), Lemgo, Germany, 18–20 July 2023; pp. 1–7.

29. Essel, H.B.; Vlachopoulos, D.; Nunoo-Mensah, H.; Amankwa, J.O. Exploring the impact of VoiceBots on multimedia programming education among Ghanaian university students. Br. J. Educ. Technol. 2025, 56, 276–295.

30. Daniel, G.; Cabot, J.; Deruelle, L.; Derras, M. Xatkit: A multimodal low-code chatbot development framework. IEEE Access 2020, 8, 15332–15346.

31. Daniel, G.; Cabot, J. The software challenges of building smart chatbots. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering: Companion Proceedings (ICSE-Companion), Madrid, Spain, 25–28 May 2021; pp. 324–325.

32. Daniel, G.; Cabot, J. Applying model-driven engineering to the domain of chatbots: The Xatkit experience. Sci. Comput. Program. 2024, 232, 103032.

33. Okonkwo, C.W.; Ade-Ibijola, A. Revision-Bot: A Chatbot for Studying Past Questions in Introductory Programming. IAENG Int. J. Comput. Sci. 2022, 49, 644.

34. Bassner, P.; Frankford, E.; Krusche, S. Iris: An AI-driven virtual tutor for computer science education. In Proceedings of the 2024 on Innovation and Technology in Computer Science Education, Milan, Italy, 8–10 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; Volume 1, pp. 394–400.

35. Daud, S.H.M.; Teo, N.H.I.; Zain, N.H.M. Ejava chatbot for learning programming language: A post-pandemic alternative virtual tutor. Int. J. 2020, 8, 3290–3298.

36. Chrysafiadi, K.; Virvou, M. PerFuSIT: Personalized Fuzzy Logic Strategies for Intelligent Tutoring of Programming. Electronics 2024, 13, 4827.

37. Biňas, M.; Pietriková, E. Impact of virtual assistant on programming novices’ performance, behavior and motivation. Acta Electrotech. Inform. 2022, 22, 30–36.

| Feature | ChatGPT | KIAAA | Pyo | VoiceBots | Xatkit |
|---|---|---|---|---|---|
| Year | 2023, 2024, 2025 | 2023 | 2022 | 2024 | 2020, 2021, 2024 |
| Primary Purpose | General AI assistant for various tasks, including programming. | AI-driven Intelligent Tutoring System (ITS) for automation programming education. | AI-based assistant for Python programming support. | Enhances multimedia programming education through VoiceBot technology. | AI-powered programming learning environment. |
| AI Model Used | GPT-4 (Natural Language Processing, NLP). | Help-DKT (AI cognitive model for student progress tracking). | Built on Rasa (Conversational AI framework). | IBM Watson AI Services. | NLP-based machine learning models. |
| Specialisation in Programming | Not specific to programming education. | Focused on automation-related programming. | Specialises in introductory Python programming. | Emphasises HTML and CSS in multimedia programming. | Supports multiple languages, including Python, Java, and C++. |
| Response to Coding Queries | Provides general programming explanations and debugging assistance. | Generates custom programming tasks and dynamically adapts to student responses. | Explains Python syntax, concepts, and debugging strategies. | Responds to multimedia programming inquiries in real time. | Provides immediate feedback, debugging support, and adaptive learning. |
| Real-Time Interaction | No direct code execution. | Supports interactive execution in a 3D simulation environment. | Offers real-time programming assistance via chatbot. | Provides instant feedback through WhatsApp integration. | Delivers live chatbot responses with adaptive learning. |
| Adaptability to Student Level | Static responses, no adaptation to user expertise. | Monitors and adjusts difficulty dynamically based on student progress. | Provides guidance based on errors but lacks deep personalisation. | Customisable responses based on student engagement and feedback. | Adapts difficulty based on learning patterns. |
| Automated Code Evaluation | No real-time assessment. | AI-powered evaluation with automated feedback. | Identifies syntax errors and offers hints via multiple-choice prompts. | Offers automated responses but lacks code execution analysis. | Performs syntax validation, logic analysis, and efficiency checks. |
| Simulation & Hands-on Learning | No simulation capabilities. | 3D virtual learning environment for practical coding tasks. | Encourages logical code structuring through guided exercises. | Provides interactive learning experiences in multimedia programming. | Supports hands-on coding exercises and problem-solving scenarios. |
| Integration with Educational Systems | No direct LMS or IDE support. | Compatible with IDEs and automation software. | Embedded in an online Python learning platform. | Integrated with WhatsApp and Learning Management Systems (LMS). | Supports LMS integration, classroom analytics, and API connectivity. |
| Assessment & Feedback | Provides only text-based explanations, no evaluation metrics. | AI-driven real-time feedback with automated assessment. | Offers hints, explanations, and solutions for debugging exercises. | Provides real-time feedback with additional learning resources. | Automated grading, performance tracking, and skill improvement recommendations. |
| Ref | [11,14,20,24,25,26,27] | [28] | [22] | [29] | [30,31,32] |

| Feature | Revision-Bot | Python-Bot | Iris | e-JAVA Chatbot | PerFuSIT |
|---|---|---|---|---|---|
| Year | 2022 | 2020 | 2024 | 2020 | 2024 |
| Primary Purpose | AI-based chatbot for personalised programming revision and learning support. | AI-based chatbot for programming practice and revision. | AI tutor in Artemis for personalised coding help. | Virtual tutor for learning Java programming. | Adaptive learning, personalised tutoring. |
| AI Model Used | SnatchBot API with NLP and AIML (Artificial Intelligence Markup Language). | SnatchBot API with NLP and AIML. | GPT-3.5-Turbo with advanced prompting. | Rule-based chatbot with text-matching techniques. | Fuzzy logic, machine learning. |
| Specialisation in Programming | Python-focused, designed for exam practice and interactive problem-solving. | Python-focused, designed for exam practice and interactive problem-solving. | CS1 programming exercises, no direct solutions. | Focused on Java, particularly control structures. | Python, Java, C++. |
| Response to Coding Queries | AI-driven question-answer chatbot with a predefined question bank. | AI-driven question-answer chatbot with a predefined question bank. | Hints, counter-questions, and explanations. | Provides instant responses using pre-defined patterns. | Real-time feedback, debugging assistance. |
| Real-Time Interaction | AI-powered chatbot with interactive feedback. | AI-powered chatbot with interactive feedback. | Instant, context-aware responses. | Offers immediate feedback through chat-based input. | Dynamic adjustments, live support. |
| Adaptability to Student Level | Tracks student progress and customises revision content accordingly. | Tracks student progress and customises revision content accordingly. | Adjusts to problem context, not skill level. | Limited adaptability; follows structured responses. | Beginner to advanced, skill-based progression. |
| Automated Code Evaluation | AI-driven assessment of code quality, syntax, and logic. | AI-driven assessment of code quality, syntax, and logic. | Uses Artemis’s automated feedback. | Generates code samples but does not evaluate user code. | Syntax analysis, logical error detection. |
| Simulation & Hands-on Learning | Practice-based revision tool with interactive coding challenges. | Practice-based revision tool with interactive coding challenges. | Real-time guidance, no simulations. | Supports learning via structured problem-solving. | Interactive coding exercises, virtual labs. |
| Integration with Educational Systems | Web-based chatbot with LMS compatibility and social media deployment. | Web-based chatbot with LMS compatibility and social media deployment. | Fully embedded in Artemis. | Standalone tool; not integrated with LMS or e-learning platforms. | LMS compatibility, API integration. |
| Assessment & Feedback | AI-powered self-evaluation, student progress tracking, and score prediction. | AI-powered self-evaluation, student progress tracking, and score prediction. | Provides hints, not direct grading. | Provides structured responses but lacks personalised feedback. | Performance tracking, personalised reports. |
| Ref | [33] | [21] | [34] | [35] | [36] |

| Group | Pre-Test | Intervention (Using PyChatAI) | Post-Test | Sample Size |
|---|---|---|---|---|
| Control Group One | No | No | Yes | 50 |
| Control Group Two | Yes | No | Yes | 50 |
| Experimental Group One | Yes | Yes | Yes | 50 |
| Experimental Group Two | No | Yes | Yes | 50 |

| Feature | PyChatAI | ChatGPT | Python-Bot/Pyo |
|---|---|---|---|
| Proactive Error Detection | Yes | No | No |
| Adaptive Scaffolding | Yes | No | No |
| Arabic/English Support | Yes (bilingual UI/feedback) | Limited | No |
| Curriculum-Aligned Prompts | Yes | No | No |
| Context-Aware Learning Feedback | Yes | No | No |
| Built-in Debugging Assistance | Yes | Basic (if prompted) | No |

| Skill | Group | N | Mean | Std. Deviation | t-Value | df | p-Value |
|---|---|---|---|---|---|---|---|
| Theoretical Understanding | Control | 150 | 2.17 | 1.14 | 0.593 | 298 | 0.554 |
| | Experimental | 150 | 2.26 | 1.56 | | | |
| Code Writing Skills | Control | 150 | 1.98 | 1.13 | 1.252 | 298 | 0.212 |
| | Experimental | 150 | 1.82 | 1.08 | | | |
| Software Design | Control | 150 | 1.77 | 1.13 | 1.534 | 298 | 0.126 |
| | Experimental | 150 | 1.56 | 1.21 | | | |
| Problem Solving | Control | 150 | 1.51 | 1.02 | 0.001 | 298 | 0.999 |
| | Experimental | 150 | 1.51 | 1.28 | | | |
| Software Testing | Control | 150 | 1.83 | 1.11 | 1.539 | 298 | 0.062 |
| | Experimental | 150 | 2.05 | 1.42 | | | |
| Total Skills | Control | 150 | 9.25 | 2.79 | 0.12 | 298 | 0.904 |
| | Experimental | 150 | 9.20 | 3.85 | | | |

| Skill | Group | N | Mean | Std. Deviation | t-Value | df | p-Value |
|---|---|---|---|---|---|---|---|
| Theoretical Understanding | Control | 150 | 2.51 | 1.01 | 12.402 | 298 | <0.001 |
| | Experimental | 150 | 3.99 | 1.05 | | | |
| Code Writing Skills | Control | 150 | 2.31 | 1.01 | 14.719 | 298 | <0.001 |
| | Experimental | 150 | 4.02 | 1.01 | | | |
| Software Design | Control | 150 | 2.19 | 0.89 | 15.211 | 298 | <0.001 |
| | Experimental | 150 | 4.02 | 1.17 | | | |
| Problem Solving | Control | 150 | 2.09 | 0.99 | 15.096 | 298 | <0.001 |
| | Experimental | 150 | 4.03 | 1.21 | | | |
| Software Testing | Control | 150 | 2.22 | 0.90 | 15.120 | 298 | <0.001 |
| | Experimental | 150 | 4.00 | 1.12 | | | |
| Total Skills | Control | 150 | 11.33 | 2.53 | 21.068 | 298 | <0.001 |
| | Experimental | 150 | 20.05 | 4.40 | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alanazi, M.; Soh, B.; Samra, H.; Li, A. PyChatAI: Enhancing Python Programming Skills—An Empirical Study of a Smart Learning System. Computers 2025, 14, 158. https://doi.org/10.3390/computers14050158

Alanazi M, Soh B, Samra H, Li A. PyChatAI: Enhancing Python Programming Skills—An Empirical Study of a Smart Learning System. Computers. 2025; 14(5):158. https://doi.org/10.3390/computers14050158

Chicago/Turabian Style: Alanazi, Manal, Ben Soh, Halima Samra, and Alice Li. 2025. "PyChatAI: Enhancing Python Programming Skills—An Empirical Study of a Smart Learning System" Computers 14, no. 5: 158. https://doi.org/10.3390/computers14050158

APA Style: Alanazi, M., Soh, B., Samra, H., & Li, A. (2025). PyChatAI: Enhancing Python Programming Skills—An Empirical Study of a Smart Learning System. Computers, 14(5), 158. https://doi.org/10.3390/computers14050158