Abstract

Polymer flooding is an important enhanced oil recovery (EOR) method that performs well and is applicable on a field scale, but it should first be evaluated through lab-scale experiments or simulation tools. Artificial intelligence techniques are powerful simulation tools that can be used to evaluate the performance of a polymer flooding operation. In this study, the main parameters of polymer flooding were collected from the literature and selected as model inputs: polymer concentration, salt concentration, rock type, initial oil saturation, porosity, permeability, pore volume flooding, temperature, API gravity, molecular weight of the polymer, and salinity. Multilayer perceptron (MLP), radial basis function (RBF), and fuzzy neural networks, namely the adaptive neuro-fuzzy inference system (ANFIS), were then adopted to estimate the output EOR performance. The MLP neural network showed the highest prediction ability, with statistical parameters of R2 = 0.9990 and RMSE = 0.0002. The proposed model can therefore help engineers to select the proper EOR method. In addition, API gravity, salinity, permeability, porosity, and salt concentration were found to have the greatest impact on the polymer flooding performance.

1. Introduction

After primary production, approximately two-thirds of the initial oil in place is expected to remain in the reservoirs. Enhanced oil recovery (EOR) methods, which have become a main subject in petroleum engineering because of the demand for energy, can extract enough oil to fulfill a significant portion of the global oil demand [1]. As an EOR method, chemical flooding has been a popular strategy for improving oil recovery in mature oil fields; it is now carried out using a variety of chemical agents and has been shown to be successful [2]. Two types of efficiency, microscopic and macroscopic sweep efficiencies, are considered in an EOR process. To improve the former, chemical agents like surfactants are used; to improve the latter, polymers are utilized to improve the mobility ratio by increasing the shear viscosity of water. Polymer flooding is an effective way to boost the water flooding effect, and field experiments and applications in a series of oil fields have given positive results in terms of increasing oil production [3,4]; in these applications, water-soluble polymers were used to improve the rheological properties of water [5]. Therefore, every factor that strengthens or weakens the rheological properties of the polymer solution is an influential factor [6]. Besides the polymer type and its concentration, there are many influencing factors related to the water, oil, and rock type of a reservoir that should be considered [7]. The variety of screening criteria for polymer flooding therefore makes it difficult to evaluate its performance before field-scale operations. One way to overcome this issue is to simulate the process through core flood experiments, but these are still expensive and time consuming.
A more economical and simpler alternative is to use soft computing tools such as artificial neural networks (ANNs), fuzzy inference systems (FISs), evolutionary computation (EC), and their hybrids, all of which have been used effectively to construct predictive models [8]. These methods are appealing because they can deal with various uncertainties, and soft computing approaches are increasingly employed as a substitute for traditional statistical methods [9]. To the best of our knowledge, the modeling and prediction of polymer flooding experiments have not been widely investigated, particularly using ANNs such as the multilayer perceptron (MLP) and radial basis function (RBF) networks, and fuzzy neural networks such as the adaptive neuro-fuzzy inference system (ANFIS). The current study investigated the performance of polymer flooding using these modeling tools. The first step is to identify the important factors; as mentioned above, the polymer type and its concentration are known to be influential. The most widely used polymer in petroleum engineering for EOR operations is hydrolyzed polyacrylamide (HPAM), so the data used in this article are HPAM data [10,11]. In addition to the type, concentration, and molecular weight of the polymer, both the type and concentration of salt have a great effect on the rheological properties [12], because the addition of divalent ions causes a large decrease in the rheological properties of the polymer solution [13]. Hence, in addition to the salt concentration, three categories of salt type are considered. The first category is fresh water; the second is low salinity, which is assigned to monovalent salts; and the third is high salinity, which is assigned to salts that contain both monovalent and divalent ions [6].
The mobility ratio, which for comparable relative permeabilities is roughly the ratio of the viscosity of the flooded fluid (oil) to that of the flooding fluid (polymer solution) [14], is an important parameter that should be considered when evaluating the performance of EOR processes. As mentioned previously, the viscosity of a polymer solution depends on several parameters that are already model inputs. It was therefore not used as a direct input, because including dependent parameters as inputs adds considerable complexity to the model for little gain. Instead, some of the abovementioned independent parameters, of which the polymer solution viscosity is a function, were selected as inputs so that the effect of the polymer solution viscosity is captured indirectly. Similarly, because the oil viscosity depends on the American Petroleum Institute (API) oil gravity [15,16,17], only the API gravity was considered as an input parameter to avoid complexity.

Among the reservoir properties, rock type [18], porosity [19], permeability [20], temperature [21], API gravity [22], and initial oil saturation [23] were considered as input parameters for the ANN, together with one operational parameter, the volume of flooded fluid expressed in pore volumes (PV). Finally, EOR was predicted using the abovementioned networks [24].

Briefly, the aim of this paper is to introduce a proper model with high accuracy to predict the performance of polymer flooding as an EOR method before doing any lab- and field-scale activities.

2. Methodology

2.1. Data Collection

Six prior investigations on both carbonate and sandstone reservoir core samples provided the raw data needed for modeling [25,26,27,28,29,30]. The gathered data set contained 847 records, which were separated into three groups: training (70%), validation (15%), and testing (15%). Each record contained eleven experimental input parameters: (1) polymer concentration, (2) salt concentration, (3) rock type, (4) initial oil saturation, (5) porosity, (6) permeability, (7) pore volume flooding, (8) temperature, (9) API gravity, (10) molecular weight of the polymer, and (11) salinity. The only output of the models was the oil recovery factor obtained by polymer flooding relative to the final recovery after pure water flooding, expressed as a percentage (%) and referred to as “EOR after polymer flooding”. Table 1 displays the ranges of the input parameters.
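As an illustration of the 70/15/15 partition described above, the following minimal sketch shuffles record indices and cuts them into three subsets. The function name `split_indices`, the fixed random seed, and the rounding rule are assumptions made for this example; the study's own partitioning code is not given in the text.

```python
import random


def split_indices(n_records, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle record indices and cut them into train/validation/test subsets.

    The rounding rule and the seed are illustrative assumptions.
    """
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)
    n_train = round(n_records * train_frac)
    n_val = round(n_records * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # the remainder goes to testing
    return train, val, test


train_idx, val_idx, test_idx = split_indices(847)
print(len(train_idx), len(val_idx), len(test_idx))
```

With 847 records and this rounding, the training subset has 593 records, which agrees with the amount of training data used later in the overfitting evaluation.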

Table 1.

Features of the present study’s input variables.

2.2. ANN

ANNs, often known simply as neural networks, are modern computational systems and methods for machine learning, knowledge representation, and, ultimately, the use of the acquired knowledge to optimize the output responses of complex systems. The primary principle behind these networks is based on how the biological brain processes data and information in order to learn and produce knowledge. A key element of this concept is the creation of new structures for information processing systems [31].

Such a system consists of a large number of highly interconnected processing elements, i.e., neurons, that work together to solve problems and pass information through synapses (electromagnetic connections). If one cell in the network is damaged, other cells can compensate for it and contribute to its regeneration. These networks are therefore able to learn. For example, after tactile nerve cells have been burned by a hot object, the cells learn not to approach the hot object again, and in this way the system learns to correct its error. These systems learn adaptively, meaning that when new inputs are presented, the weights of the synapses change in such a manner that the system delivers the proper response [32].

An ANN generally has three types of layers: input, processing (hidden), and output. Each layer comprises a set of neurons that, unless the user restricts the connections, are connected to all neurons in the other layers, whereas neurons within the same layer are not connected to each other. A neuron is the smallest information processing unit on which a neural network operates. A neural network is thus a collection of neurons that are arranged in distinct layers and form a specific architecture through the connections between those layers. Because each neuron is a nonlinear mathematical function, a neural network built from neurons can be a highly complex nonlinear system. Each neuron works independently, and the network’s overall behavior is the result of the actions of its many neurons. In other words, the neurons correct each other in a cooperative process [33,34,35].

2.3. MLP Artificial Neural Network

The MLP network consists of several types of layers: the input layer, one or more hidden layers, and the output layer. Each layer contains a number of processing neurons, and every neuron is fully connected to the neurons of the succeeding layer via weighted interconnections [8]. The input layer has as many neurons as there are input parameters, and the model’s output corresponds to a neuron in the output layer. The relationship between the model’s inputs and output is established in the hidden layer(s). The numbers of hidden layers and hidden neurons crucially affect the efficiency of the MLP network [36]. The value of a node in a hidden or output layer is determined from the weighted values of the nodes in the preceding layer [37]. The bias is then added to this weighted sum, and the result is passed through the transfer (activation) function to generate the node’s output. Various activation functions, such as binary step, identity, Gaussian, and linear functions, can be adopted for the hidden and output layers. The following equation shows the output of the model:

$$\mathbf{y} = f\left(\mathbf{W}\mathbf{x} + \mathbf{b}\right) \qquad (1)$$

where $\mathbf{y}$ is the output, $\mathbf{W}$ is the link weight, $\mathbf{x}$ is the input, $\mathbf{b}$ is the bias vector, and $f$ is the activation function. The MLP training process is executed using a backpropagation algorithm such as scaled conjugate gradient, gradient descent, Levenberg–Marquardt, or resilient backpropagation [38].

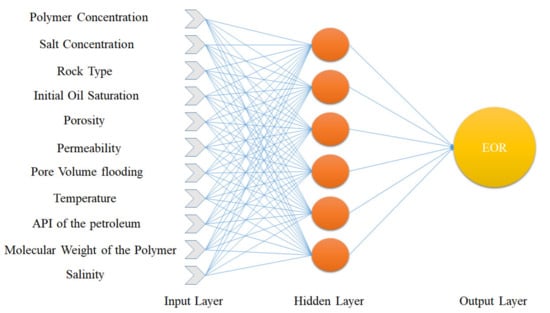

Among the activation functions commonly used in MLP artificial neural networks, including the tangent sigmoid (tansig), log-sigmoid, and linear (purelin) transfer functions, tansig is used in this paper for the link between the input and hidden layers and purelin for the link between the hidden and output layers [8]. The structure of the MLP network used in this paper to predict the target data is shown in Figure 1.

Figure 1.

MLP network structure for EOR prediction.
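The forward pass of such a network can be sketched in a few lines. The example below assumes one hidden layer with a tansig activation and a linear (purelin) output neuron, as stated above; the function names, the random weights, and the placeholder input are hypothetical and serve only to show the data flow, not the trained model of this study.

```python
import math
import random


def tansig(z):
    # Tangent sigmoid, 2 / (1 + exp(-2z)) - 1, which is mathematically tanh(z).
    return math.tanh(z)


def mlp_forward(x, w_hidden, b_hidden, w_out, b_out):
    """One hidden layer (tansig) followed by a single linear (purelin) output."""
    hidden = [
        tansig(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(w_hidden, b_hidden)
    ]
    return sum(w * h for w, h in zip(w_out, hidden)) + b_out


# Illustrative, untrained weights: 11 inputs and 6 hidden neurons.
rng = random.Random(0)
n_in, n_hidden = 11, 6
w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b_hidden = [rng.uniform(-1, 1) for _ in range(n_hidden)]
w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
b_out = rng.uniform(-1, 1)

x = [0.5] * n_in  # placeholder for one normalized input record
print(mlp_forward(x, w_hidden, b_hidden, w_out, b_out))
```

In practice the weights and biases would be fitted by a backpropagation algorithm rather than drawn at random.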

2.4. Radial Basis Function (RBF) Artificial Neural Network

This kind of neural network can process arbitrarily scattered data, generalizes easily to multidimensional space, and provides spectral accuracy, which makes it a particularly suitable alternative [38]. In addition, the RBF neural network has been reported to have advantages over the MLP model because it offers excellent accuracy in nonlinear data modeling and can be trained in a single direct procedure instead of the iterative procedure used for the MLP [39]. While the structure of the RBF network is comparable to that of the MLP [40], the RBF network possesses only one hidden layer, which consists of multiple nodes called RBF units. The RBF neural network architecture is a two-layer feed-forward neural network in which the input is transmitted through the neurons in the hidden layer to the output layer. Each RBF unit is described by two important parameters: the center position of the function and its deviation. Finding the centers of the units and determining the optimal values of the weights connecting the RBF units to the output unit are the two main steps in the training process of the RBF neural network [41]. Different methods, such as random center selection [42], clustering [43], and density estimation [44], can be adopted to find the centers in the RBF network. The output of the system can be expressed as Equation (2):

$$y(\mathbf{x}) = \mathbf{w}^{T}\boldsymbol{\varphi}(\mathbf{x}) \qquad (2)$$

where $\mathbf{w}^{T}$ is the transposed output layer vector and $\boldsymbol{\varphi}$ is a kernel function. Different optimization algorithms can be applied to tune the kernel parameter. Here, trial and error was adopted to find the optimal value of this parameter [8]. By changing this parameter, RBF neural networks with different structures were developed, and the performance of each RBF neural network was assessed according to the MSE value of the test data subset [38].
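To make the role of the centers, the deviation, and the output weights concrete, the sketch below evaluates an RBF network with a Gaussian kernel. The Gaussian form, the function names, and the toy centers and weights are assumptions for illustration; the text does not state which kernel or which software implementation was used.

```python
import math


def gaussian_rbf(x, center, spread):
    """Gaussian kernel: equals 1 at the center and decays with distance."""
    dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist_sq / (2.0 * spread ** 2))


def rbf_forward(x, centers, spread, weights, bias=0.0):
    """Weighted sum of the kernel responses of all RBF units."""
    phi = [gaussian_rbf(x, c, spread) for c in centers]
    return sum(w * p for w, p in zip(weights, phi)) + bias


# Toy network with two RBF units in a two-dimensional input space.
centers = [[0.0, 0.0], [1.0, 1.0]]
weights = [2.0, -1.0]
print(rbf_forward([0.2, 0.1], centers, spread=1.1, weights=weights))
```

A larger spread makes each unit respond to a wider region of the input space, which is why this parameter has to be tuned together with the number of units.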

2.5. ANFIS

This fuzzy logic (FL)-based tool was initially introduced in 1998 [45]. Instead of working purely quantitatively, the ANFIS model can solve nonlinear problems and model physical systems in a qualitative way by converting the input data into fuzzy sets, also called linguistic terms. The neuro-fuzzy system has five layers, which are described here [46,47].

First, the input data are converted into fuzzy inputs by means of membership functions (MFs) [48]. The computed membership degrees of the input factors are then multiplied, resulting in the firing strength, as shown below:

$$w_i = \prod_{j=1}^{m} \mu_{ij}(x_j)$$

where $w_i$ is the calculated firing strength, $\mu_{ij}$ is the degree of membership of the $i$th MF for the $j$th input $x_j$, and $m$ is the number of inputs. For each rule, the firing strength is obtained by multiplication, and the rule with the highest firing strength matches the input best [32]. The next layer operates based on the following equation:

$$\bar{w}_i = \frac{w_i}{\sum_{k} w_k}$$

where $\bar{w}_i$ is the normalized firing strength. The output of each rule is then obtained using the following equation:

$$O_i = \bar{w}_i f_i$$

In the above formula, $f_i$ can be a constant or a polynomial function of the inputs. The coefficients of $f_i$ are the adjustable parameters of the TSK-FIS, and their values should be optimized by the specified algorithm to obtain a more accurate prediction [8]. The final layer sums the outputs of the prior layer to generate the overall ANFIS output:

$$y = \sum_{i} \bar{w}_i f_i$$

In this work, three types of ANFIS are studied, which differ in how the membership functions are generated. The first type is based on grid partitioning, in which the membership functions are uniformly distributed over the input space; the second uses subtractive clustering; and the third is based on fuzzy c-means (FCM) clustering. More theoretical details can be found in a previous article [8].
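The layer-by-layer computation described in this section (fuzzification, firing strength by multiplication, normalization, rule output, and summation) can be sketched as follows. Gaussian membership functions and first-order linear rule outputs are assumed here, and the rule dictionary layout and all names are hypothetical; this is an illustration of the data flow, not the network trained in this study.

```python
import math


def gaussian_mf(x, center, sigma):
    """Gaussian membership function with two parameters (center and width)."""
    return math.exp(-0.5 * ((x - center) / sigma) ** 2)


def anfis_forward(x, rules):
    """Evaluate a TSK-type ANFIS for one input vector x.

    Each rule holds one MF (center, sigma) per input and linear
    consequent coefficients [a_1, ..., a_m, intercept].
    """
    strengths, outputs = [], []
    for rule in rules:
        w = 1.0
        for xj, c, s in zip(x, rule["centers"], rule["sigmas"]):
            w *= gaussian_mf(xj, c, s)  # firing strength by multiplication
        *slopes, intercept = rule["coeffs"]
        f = sum(a * xj for a, xj in zip(slopes, x)) + intercept
        strengths.append(w)
        outputs.append(f)
    total = sum(strengths)
    # Normalize the firing strengths and sum the weighted rule outputs.
    return sum((w / total) * f for w, f in zip(strengths, outputs))


rules = [
    {"centers": [0.0], "sigmas": [1.0], "coeffs": [0.0, 1.0]},
    {"centers": [2.0], "sigmas": [1.0], "coeffs": [0.0, 3.0]},
]
print(anfis_forward([1.0], rules))
```

The three ANFIS types compared in this work would differ only in how the rule centers and widths are initialized before training.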

To optimize the network parameters, algorithms such as the grasshopper optimization algorithm (GOA) [49], genetic algorithm (GA) [50], and particle swarm optimization (PSO) [51] can be applied to artificial neural networks. In this study, we used a trial and error method in which each parameter value was examined over 500 replications, with the data changing randomly in each replication, to make sure that the optimal value was found.

3. Model Evaluation

Several statistical criteria were adopted to evaluate the accuracy of the applied models: the coefficient of determination (R2), mean squared error (MSE), root mean squared error (RMSE), mean error (μ), error standard deviation (σ), and average absolute relative deviation (AARD). In all of these criteria, the error is the difference between the original target data and the predicted output of the model, μ is the mean of this error over the whole amount of data, and σ is the standard deviation of the error about this mean.
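For readers who want to reproduce such an evaluation, the sketch below computes the six criteria from lists of target and predicted values. The definitions are the commonly used ones and are assumptions here: in particular, the error is taken as target minus prediction, σ uses the sample form with N − 1 in the denominator, and AARD is reported in percent. The function and key names are hypothetical.

```python
import math


def evaluate(targets, predictions):
    """Return R2, MSE, RMSE, mean error, error standard deviation, and AARD (%)."""
    n = len(targets)
    errors = [t - p for t, p in zip(targets, predictions)]
    ss_res = sum(e * e for e in errors)
    mse = ss_res / n
    mean_error = sum(errors) / n
    # Sample standard deviation of the error (N - 1 is an assumption).
    sigma = math.sqrt(sum((e - mean_error) ** 2 for e in errors) / (n - 1))
    target_mean = sum(targets) / n
    ss_tot = sum((t - target_mean) ** 2 for t in targets)
    aard = 100.0 / n * sum(abs(e / t) for e, t in zip(errors, targets))
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "mean_error": mean_error,
        "sigma": sigma,
        "AARD": aard,
    }


# Made-up numbers, used only to show the call.
print(evaluate([10.0, 20.0, 30.0, 40.0], [11.0, 19.0, 31.0, 38.0]))
```

Note that AARD is undefined when a target value is zero, so records with zero recovery would need separate handling.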

4. Results and Discussion

The 847 data points collected from different articles, each comprising the parameters (1) polymer concentration, (2) salt concentration, (3) rock type, (4) initial oil saturation, (5) porosity, (6) permeability, (7) pore volume flooding, (8) temperature, (9) API gravity, (10) molecular weight of the polymer, (11) salinity, and (12) EOR, were modeled using MLP, RBF, and ANFIS neural networks. In the following sections, the networks are created and their key parameters and optimization are examined.

4.1. Optimum MLP Structure

The results of the MLP neural network sensitivity analysis are shown in Table 2. This three-layer network, comprising input, hidden, and output layers, was evaluated with different training algorithms, which were compared in terms of speed (a faster algorithm is less costly to the system) and efficiency (as indicated by the statistical parameters). The Levenberg–Marquardt backpropagation algorithm proved to be the superior algorithm (Table 3) and was used in the rest of the comparisons in this study. We assigned 70% of the collected data to training and 15% each to validation and testing; as shown in Table 4, this data distribution gave the most powerful MLP networks. The best number of hidden neurons was determined from Table 2. As the number of neurons in the MLP network increases, the quality and efficiency of the network increase, but for the data of this study, using more than six neurons does not improve the efficiency enough to justify the added cost, so six neurons were regarded as the best value. The structure of the superior MLP network can be found in Figure 1.

Table 2.

Sensitivity analysis of MLP network with Levenberg–Marquardt backpropagation training algorithm.

Table 3.

Evaluation of the efficiency of different MLP neural network training methods.

Table 4.

Sensitivity analysis for the distribution of data types.

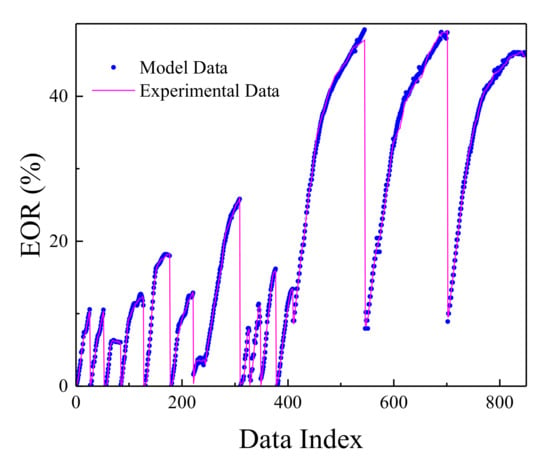

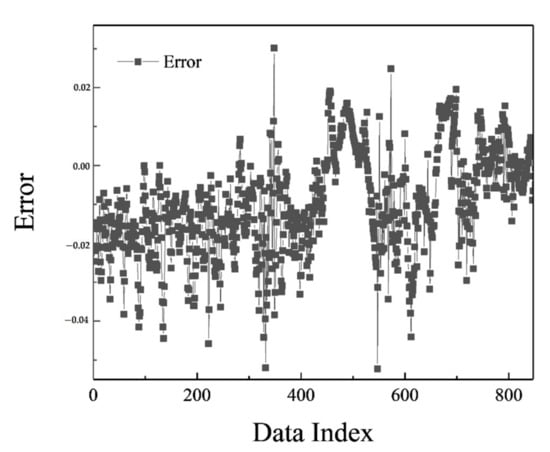

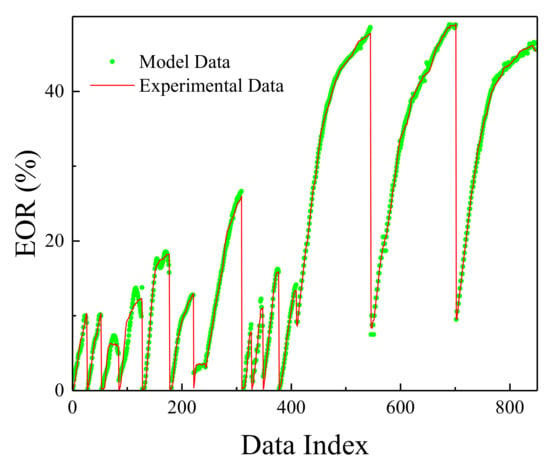

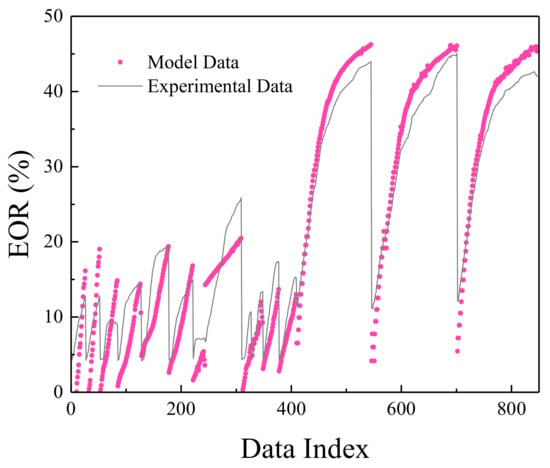

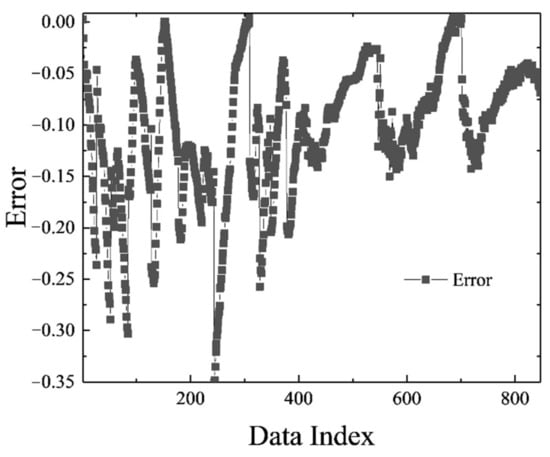

The MLP network was trained with the optimal settings presented above, and the result can be seen in Figure 2. Figure 3 shows the network error after data normalization; the error is very low, which makes the network suitable for industrial applications in this field.

Figure 2.

Comparison between actual EOR and MLP values.

Figure 3.

MLP error chart.

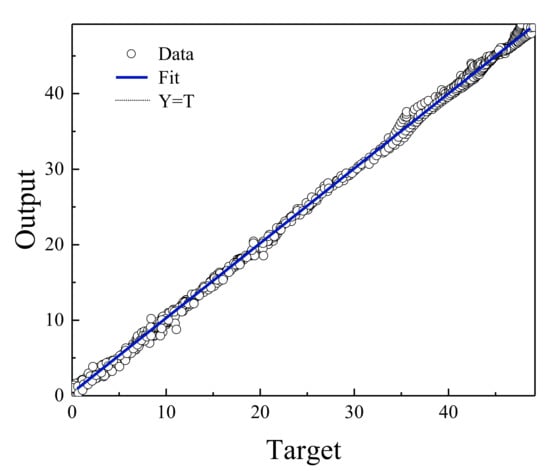

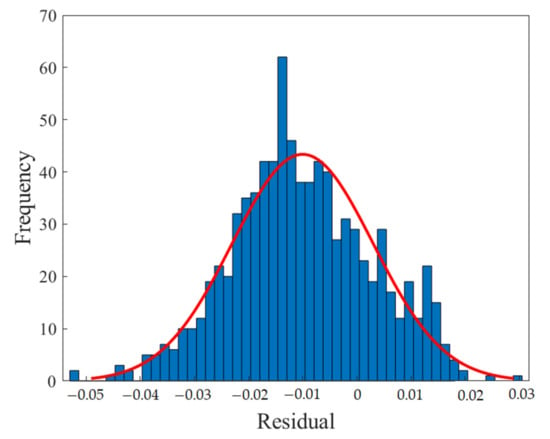

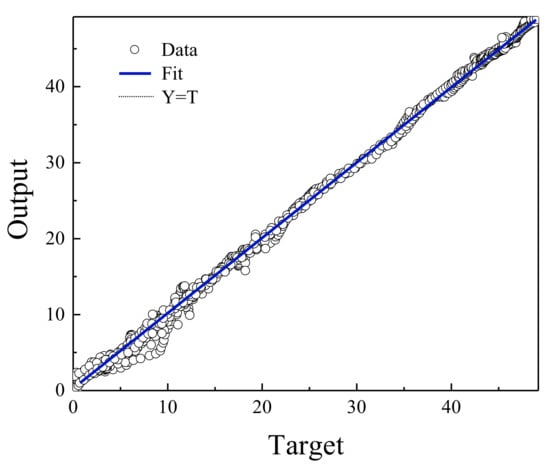

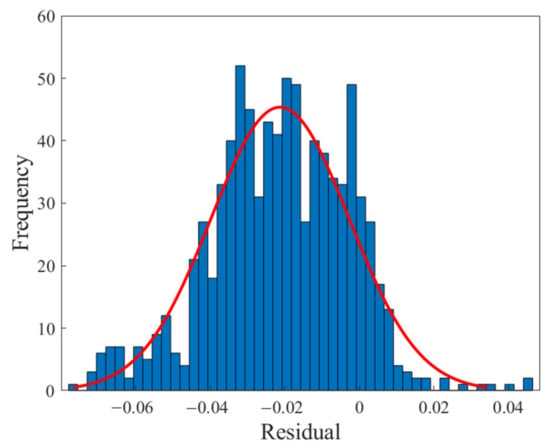

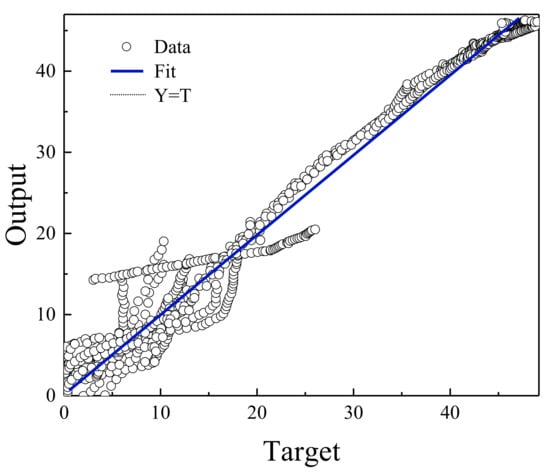

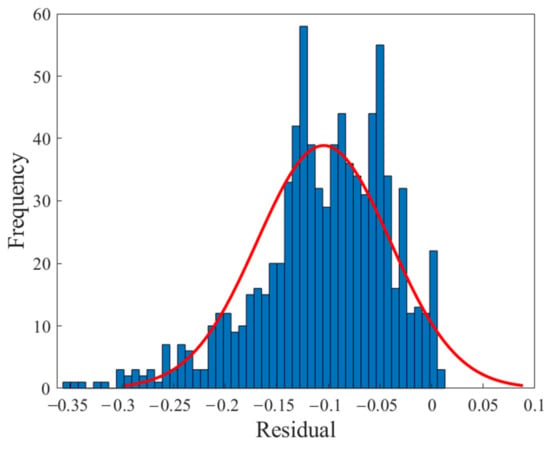

Figure 4 shows the regression plot of all the model outputs against the target data obtained from the articles. The plot shows how closely the model outputs and the target data overlap. Figure 5 shows the error histogram obtained from the normalized data, which also reflects the low network error. It can be seen that there are very few outliers, even at this high level of precision.

Figure 4.

Relationship between MLP network output and EOR target data.

Figure 5.

Error histogram of the MLP neural network.

4.2. Optimum RBF Structure

To determine the optimal parameters of the RBF neural network, we first determined the optimal maximum number of neurons in the hidden layer (Table 5). After running the program about 700 times for each number of neurons from 1 to 100, 44 neurons were selected as the best number. The spread coefficient was then examined carefully over the range from 1 to 100, and the best network results were obtained with a spread coefficient of 1.1, as can be seen in Table 5.

Table 5.

Sensitivity analysis of RBF network.

It should be noted that, for this particular data set, the accuracy of the network decreases as the spread coefficient increases. As mentioned earlier, increasing the number of neurons can be expected to improve the accuracy of the neural network, but it also increases the computational cost, so adding neurons is worthwhile only until the gain in accuracy no longer justifies the added cost.

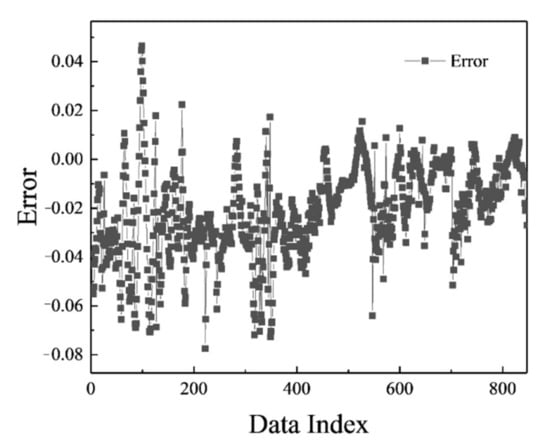

Figure 6, Figure 7, Figure 8 and Figure 9 relate to the superior RBF neural network for the polymer data and illustrate its high accuracy. Figure 6 and Figure 7, which show the normalized error of the data, indicate that this network has a very low estimation error and a good ability to predict the data. Figure 8 and Figure 9 confirm this capability and also show that the network has very few outliers and that the residuals are normally scattered, which demonstrates the strength of this network.

Figure 6.

Comparison between actual EOR and RBF values.

Figure 7.

RBF error chart.

Figure 8.

Relationship between RBF network output and EOR target data.

Figure 9.

Error histogram of the RBF neural network.

4.3. Optimum ANFIS Structure

Different ANFIS networks of three common types, namely grid-partitioning-based ANFIS, subtractive-clustering-based ANFIS, and fuzzy c-means (FCM)-clustering-based ANFIS, were built and run [8], and Table 6 shows their sensitivity analysis. Detailed results are reported for the fuzzy neural network based on subtractive clustering.

Table 6.

The performance of different types of ANFIS.

To determine the optimal parameters of the fuzzy neural network presented in this section, a trial and error method was used: each parameter value was tested 100 times, the network results were recorded, and the best values were selected. Based on the sensitivity analysis shown in Table 7, the desired result for the polymer data is obtained when the step size decrease rate is raised to 23; beyond this value, the error increases. The same is true for the step size increase rate, up to a value of 20. An initial step size either above or below four does not provide the desired results. The radius parameter is a vector that determines the range of influence of the cluster centers in each of the data dimensions. After extensive trial and error, a value of 0.333 was determined for this parameter, which provides the most desirable network for these polymer data.

Table 7.

Sensitivity analysis of ANFIS network based on subtractive clustering.

Figure 10, Figure 11, Figure 12 and Figure 13 show the accuracy of this network. According to Figure 10 and Figure 11, this network has larger errors than the MLP and RBF networks presented in the previous sections. Figure 12 and Figure 13 show the linear regression and the histogram of the error of the normalized data, respectively; they indicate that the fuzzy neural network produces very few outliers and has relatively good accuracy, although it is less accurate than the MLP and RBF neural networks.

Figure 10.

Comparison between actual EOR and ANFIS values.

Figure 11.

ANFIS error chart.

Figure 12.

Relationship between ANFIS network output and EOR target data.

Figure 13.

Error histogram of the ANFIS neural network.

4.4. Performances of Optimized MLP, RBF, and ANFIS Models

Based on the comparisons made in the previous sections, the MLP neural network has the best performance in learning this type of data. Figure 2, Figure 3, Figure 4 and Figure 5 show the performance of the network trained on the polymer data, which was discussed in detail in the previous sections. The MLP neural network trained with the Levenberg–Marquardt backpropagation algorithm, whose sensitivity analysis is presented in Table 2, has a very strong prediction ability for data inside and outside the range used to build the network. The general structure of the network with six neurons in the hidden layer is shown in Figure 1. The complete information of the best-trained network (which is of the MLP type) is shown in Table 8.

Table 8.

Properties of the optimized MLP model.

5. Overfitting Evaluation

Overfitting is a phenomenon in which a network achieves very high accuracy on the training data but does not reproduce this accuracy on the test data. It can be caused by a small dataset [52] or an overly complex model [53]. The figures in the previous sections suggest that the trained neural networks are not overfitted; nevertheless, a method similar to that of Tabaraki and Khodabakhshi presented in 2020 [53] can be used to check whether the models presented here are overfitted.

First, the total number of adjustable parameters (TNAP) was calculated for each target network (here, the best MLP, RBF, and ANFIS networks). The TNAP depends on the numbers of hidden neurons, input neurons, membership functions, parameters per membership function (two for Gaussian functions), output neurons, and rules. For the best MLP and RBF neural networks, the TNAP values are 84 and 616, respectively. For ANFIS, the number of input neurons is 11, the number of membership functions is 11, the number of parameters per membership function is 2, the number of output neurons is 1, and the number of rules is 3; placing these values in Equation (16) gives a TNAP of 245 [53].

To determine the threshold value of the total number of adjustable parameters, another parameter, NPAP, must be determined from the following equation:

$$\mathrm{NPAP} = \frac{N_{\mathrm{train}}}{2}$$

where $N_{\mathrm{train}}$ is the amount of training data. For the data of this study, NPAP is 296.5 (593 divided by 2; see Table 8 for more information). According to the literature, if TNAP is lower than NPAP, there will be no overfitting [53,54].

From the experiments performed, it can be clearly stated that the MLP neural network and the superior ANFIS neural network do not encounter any kind of overfitting in this study, but the introduced superior RBF network may have overfitting.
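The comparison behind this conclusion can be written out explicitly. The sketch below uses the values reported in this section (TNAP of 84, 616, and 245, and 593 training records) and assumes that a network is regarded as free of overfitting when its TNAP is below half the amount of training data, which is the reading consistent with the conclusion stated above. The function names are hypothetical.

```python
def npap(n_train):
    """Threshold on the number of adjustable parameters: half the training data."""
    return n_train / 2


def may_overfit(tnap, n_train):
    """Flag a network whose adjustable parameters reach or exceed the threshold."""
    return tnap >= npap(n_train)


tnap_values = {"MLP": 84, "RBF": 616, "ANFIS": 245}  # values reported above
n_train = 593

for name, tnap in tnap_values.items():
    status = "may overfit" if may_overfit(tnap, n_train) else "no overfitting expected"
    print(f"{name}: TNAP = {tnap}, threshold = {npap(n_train)}, {status}")
```

Only the RBF network, with 616 adjustable parameters against a threshold of 296.5, is flagged by this criterion.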

6. Relevancy Factor Evaluation

It was concluded that the introduced networks have good accuracy in predicting EOR data, with the MLP network being the best. In this section, the impact of each input on the output (EOR) is measured using the relevancy factor:

$$r_k = \frac{\sum_{i=1}^{N}\left(I_{k,i} - \bar{I}_k\right)\left(E_i - \bar{E}\right)}{\sqrt{\sum_{i=1}^{N}\left(I_{k,i} - \bar{I}_k\right)^2 \sum_{i=1}^{N}\left(E_i - \bar{E}\right)^2}}$$

where $I_{k,i}$ and $\bar{I}_k$ designate the $i$th value of the $k$th input variable and the average value of the $k$th input variable, respectively; $E_i$ indicates the $i$th predicted EOR value, $\bar{E}$ denotes the mean of the predicted EOR values, and $N$ is the amount of data in the gathered dataset. The relevancy factor $r_k$ lies in the range between −1 and +1. The closer the value of $r_k$ is to +1, the more positively the input affects the predicted EOR, and the closer the value of $r_k$ is to −1, the more negatively it affects it.
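A minimal sketch of this calculation is given below. It assumes that the relevancy factor is the Pearson correlation coefficient between one input variable and the predicted EOR, which matches the description of a quantity bounded by −1 and +1 built from deviations about the means; the function name and the sample numbers are hypothetical.

```python
import math


def relevancy_factor(input_values, predicted_eor):
    """Pearson-type relevancy factor between one input and the predicted output."""
    n = len(input_values)
    mean_in = sum(input_values) / n
    mean_out = sum(predicted_eor) / n
    cov = sum((a - mean_in) * (b - mean_out)
              for a, b in zip(input_values, predicted_eor))
    var_in = sum((a - mean_in) ** 2 for a in input_values)
    var_out = sum((b - mean_out) ** 2 for b in predicted_eor)
    return cov / math.sqrt(var_in * var_out)


# Made-up values for one input (e.g., API gravity) and the predicted EOR.
api_gravity = [20.0, 25.0, 30.0, 35.0]
eor = [8.0, 11.0, 12.0, 16.0]
print(relevancy_factor(api_gravity, eor))
```

Repeating the call for each of the eleven inputs and ranking the absolute values gives an ordering of the kind reported in Table 9. The factor is undefined for an input that takes a single constant value.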

Relevancy factor values for each input are presented in Table 9. Accordingly, API gravity, salinity, permeability, porosity, and salt concentration have the greatest impact on EOR. It should be noted that these findings hold only for the data collected from the cited articles, whose specifications are given in Table 1.

Table 9.

Relevancy factor to predict EOR.

7. Conclusions

In this paper, MLP, RBF, and subtractive-clustering-based ANFIS neural networks were used to predict the EOR performance of HPAM polymer flooding from existing polymer, rock, and fluid properties, namely polymer concentration, salt concentration, rock type, initial oil saturation, porosity, permeability, pore volume flooding, temperature, API gravity, molecular weight of the polymer, and salinity. All the proposed models had a very high accuracy in predicting the data (R2 = 0.9990 and RMSE = 0.0002 for MLP, R2 = 0.9973 and RMSE = 0.0008 for RBF, and R2 = 0.9729 and RMSE = 0.0150 for ANFIS); however, the MLP was the best network. The networks were then tested for overfitting: the MLP and ANFIS networks showed no overfitting, whereas the RBF network may be overfitted. The MLP neural network can therefore be regarded as valid for predicting data inside and outside the range used to build the network. Next, through relevancy factor evaluation, the parameters with the greatest impact on the EOR performance of polymer flooding were shown to be API gravity, salinity, permeability, porosity, and salt concentration. The results emphasize that, by using the proposed model, the performance of HPAM polymer flooding in a specific reservoir can be evaluated well before carrying out any lab-scale experiments or field-scale operations.

Author Contributions

Conceptualization, H.S., E.E. and H.J.C.; methodology, H.S. and E.E.; software, H.S., E.E. and H.J.C.; validation, H.S., E.E. and H.J.C.; formal analysis, H.S., E.E. and H.J.C.; investigation, H.S., E.E. and H.J.C.; resources, H.S. and E.E.; data curation, H.S. and E.E.; writing—original draft preparation, H.S. and E.E.; writing—review and editing, E.E. and H.J.C.; visualization, H.S., E.E. and H.J.C.; supervision, E.E. and H.J.C.; project administration, E.E. and H.J.C.; funding acquisition, H.J.C. All authors have read and agreed to the published version of the manuscript.

Funding

National Research Foundation of Korea (2021R1A4A2001403).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shayan Nasr, M.; Shayan Nasr, H.; Karimian, M.; Esmaeilnezhad, E. Application of Artificial Intelligence to Predict Enhanced Oil Recovery Using Silica Nanofluids. Nat. Resour. Res. 2021, 30, 529–2542. [Google Scholar] [CrossRef]

- Qiannan, Y.; Yikun, L.; Liang, S.; Shuai, T.; Zhi, S.; Yang, Y. Experimental study on surface-active polymer flooding for enhanced oil recovery: A case study of Daqing placanticline oilfield, NE China. Pet. Explor. Dev. 2019, 46, 1206–1217. [Google Scholar]

- Meybodi, H.E.; Kharrat, R.; Wang, X. Study of microscopic and macroscopic displacement behaviors of polymer solution in water-wet and oil-wet media. Transp. Porous Media 2011, 89, 97–120. [Google Scholar] [CrossRef]

- Meybodi, H.E.; Kharrat, R.; Araghi, M.N. Experimental studying of pore morphology and wettability effects on microscopic and macroscopic displacement efficiency of polymer flooding. J. Petrol. Sci. Eng. 2011, 78, 347–363. [Google Scholar] [CrossRef]

- Maurya, N.K.; Kushwaha, P.; Mandal, A. Studies on interfacial and rheological properties of water soluble polymer grafted nanoparticle for application in enhanced oil recovery. J. Taiwan Inst. Chem. Eng. 2017, 70, 319–330. [Google Scholar] [CrossRef]

- Sarsenbekuly, B.; Kang, W.; Fan, H.; Yang, H.; Dai, C.; Zhao, B.; Aidarova, S.B. Study of salt tolerance and temperature resistance of a hydrophobically modified polyacrylamide based novel functional polymer for EOR. Colloids Surf. A Physicochem. Eng. Asp. 2017, 514, 91–97. [Google Scholar] [CrossRef]

- Ershadi, M.; Alaei, M.; Rashidi, A.; Ramazani, A.; Khosravani, S. Carbonate and sandstone reservoirs wettability improvement without using surfactants for Chemical Enhanced Oil Recovery (C-EOR). Fuel 2015, 153, 408–415. [Google Scholar] [CrossRef]

- Saberi, H.; Esmaeilnezhad, E.; Choi, H.J. Application of artificial intelligence to magnetite-based magnetorheological fluids. J. Ind. Eng. Chem. 2021, 100, 399–409. [Google Scholar] [CrossRef]

- Amid, S.; Mesri Gundoshmian, T. Prediction of output energies for broiler production using linear regression, ANN (MLP, RBF), and ANFIS models. Environ. Prog. Sustain. Energy 2017, 36, 577–585. [Google Scholar] [CrossRef]

- Gao, C. Viscosity of partially hydrolyzed polyacrylamide under shearing and heat. J. Pet. Explor. Prod. Technol. 2013, 3, 203–206. [Google Scholar] [CrossRef] [Green Version]

- Maghsoudian, A.; Tamsilian, Y.; Kord, S.; Soulgani, B.S.; Esfandiarian, A.; Shajirat, M. Styrene Intermolecular Associating Incorporated-Polyacrylamide Flooding of Crude Oil in Carbonated Coated Micromodel System at High Temperature, High Salinity Condition: Rheology, Wettability Alteration, Recovery Mechanisms. J. Mol. Liq. 2021, 337, 116206.

- Obisesan, O.; Ahmed, R.; Amani, M. The Effect of Salt on Stability of Aqueous Foams. Energies 2021, 14, 279.

- Ahmad, F.B.; Williams, P.A. Effect of salts on the gelatinization and rheological properties of sago starch. J. Agric. Food Chem. 1999, 47, 3359–3366.

- Shayan Nasr, M.; Esmaeilnezhad, E.; Choi, H.J. Effect of silicon-based nanoparticles on enhanced oil recovery: Review. J. Taiwan Inst. Chem. Eng. 2021, 122, 241–259.

- Sánchez-Minero, F.; Sánchez-Reyna, G.; Ancheyta, J.; Marroquin, G. Comparison of correlations based on API gravity for predicting viscosity of crude oils. Fuel 2014, 138, 193–199.

- Liu, Y.; Shi, S.; Wang, Y.; Xie, J.; Xie, G. A new model for predicting the viscosity of heavy oil. Pet. Sci. Technol. 2016, 34, 832–837.

- Hemmati-Sarapardeh, A.; Aminshahidy, B.; Pajouhandeh, A.; Yousefi, S.H.; Hosseini-Kaldozakh, S.A. A soft computing approach for the determination of crude oil viscosity: Light and intermediate crude oil systems. J. Taiwan Inst. Chem. Eng. 2016, 59, 1–10.

- Hadia, N.J.; Ng, Y.H.; Stubbs, L.P.; Torsæter, O. High Salinity and High Temperature Stable Colloidal Silica Nanoparticles with Wettability Alteration Ability for EOR Applications. Nanomaterials 2021, 11, 707.

- Hanamertani, A.S.; Ahmed, S. Probing the role of associative polymer on scCO2-Foam strength and rheology enhancement in bulk and porous media for improving oil displacement efficiency. Energy 2021, 228, 120531.

- Guetni, I.; Marlière, C.; Rousseau, D. Chemical EOR in Low Permeability Sandstone Reservoirs: Impact of Clay Content on the Transport of Polymer and Surfactant. In Proceedings of the SPE Western Regional Meeting, Virtual Conference, 20–22 April 2021.

- Santhosh, B.; Ionescu, E.; Andreolli, F.; Biesuz, M.; Reitz, A.; Albert, B.; Sorarù, G.D. Effect of pyrolysis temperature on the microstructure and thermal conductivity of polymer-derived monolithic and porous SiC ceramics. J. Eur. Ceram. Soc. 2021, 41, 1151–1162.

- Esfandyari, H.; Moghani, A.; Esmaeilzadeh, F.; Davarpanah, A. A Laboratory Approach to Measure Carbonate Rocks Adsorption Density by Surfactant and Polymer. Math. Probl. Eng. 2021, 2021, 5539245.

- Almahfood, M.; Bai, B. Characterization and oil recovery enhancement by a polymeric nanogel combined with surfactant for sandstone reservoirs. Pet. Sci. 2021, 18, 123–135.

- Zhang, Y.; Huang, S.S.; Luo, P. Coupling immiscible CO2 technology and polymer injection to maximize EOR performance for heavy oils. J. Can. Pet. Technol. 2010, 49, 25–33.

- Shaker Shiran, B.; Skauge, A. Enhanced oil recovery (EOR) by combined low salinity water/polymer flooding. Energy Fuels 2013, 27, 1223–1235.

- Li, M.; Romero-Zerón, L.; Marica, F.; Balcom, B.J. Polymer flooding enhanced oil recovery evaluated with magnetic resonance imaging and relaxation time measurements. Energy Fuels 2017, 31, 4904–4914.

- Sharafi, M.S.; Jamialahmadi, M.; Hoseinpour, S.-A. Modeling of viscoelastic polymer flooding in Core-scale for prediction of oil recovery using numerical approach. J. Mol. Liq. 2018, 250, 295–306.

- Rezaei, A.; Abdi-Khangah, M.; Mohebbi, A.; Tatar, A.; Mohammadi, A.H. Using surface modified clay nanoparticles to improve rheological behavior of Hydrolized Polyacrylamid (HPAM) solution for enhanced oil recovery with polymer flooding. J. Mol. Liq. 2016, 222, 1148–1156.

- Kakati, A.; Kumar, G.; Sangwai, J.S. Low Salinity Polymer Flooding: Effect on Polymer Rheology, Injectivity, Retention, and Oil Recovery Efficiency. Energy Fuels 2020, 34, 5715–5732.

- Cheraghian, G. Effect of nano titanium dioxide on heavy oil recovery during polymer flooding. Pet. Sci. Technol. 2016, 34, 633–641.

- Peinado, J.; Jiao-Wang, L.; Olmedo, Á.; Santiuste, C. Use of Artificial Neural Networks to Optimize Stacking Sequence in UHMWPE Protections. Polymers 2021, 13, 1012.

- Abnisa, F.; Anuar Sharuddin, S.D.; Bin Zanil, M.F.; Wan Daud, W.M.A.; Indra Mahlia, T.M. The yield prediction of synthetic fuel production from pyrolysis of plastic waste by Levenberg–Marquardt approach in feedforward neural networks model. Polymers 2019, 11, 1853.

- Al-Yaari, M.; Dubdub, I. Application of Artificial Neural Networks to Predict the Catalytic Pyrolysis of HDPE Using Non-Isothermal TGA Data. Polymers 2020, 12, 1813.

- Doblies, A.; Boll, B.; Fiedler, B. Prediction of thermal exposure and mechanical behavior of epoxy resin using artificial neural networks and Fourier transform infrared spectroscopy. Polymers 2019, 11, 363.

- Meißner, P.; Watschke, H.; Winter, J.; Vietor, T. Artificial neural networks-based material parameter identification for numerical simulations of additively manufactured parts by material extrusion. Polymers 2020, 12, 2949.

- Ke, K.-C.; Huang, M.-S. Quality Prediction for Injection Molding by Using a Multilayer Perceptron Neural Network. Polymers 2020, 12, 1812.

- Maleki, A.; Safdari Shadloo, M.; Rahmat, A. Application of artificial neural networks for producing an estimation of high-density polyethylene. Polymers 2020, 12, 2319.

- Sabah, M.; Talebkeikhah, M.; Agin, F.; Talebkeikhah, F.; Hasheminasab, E. Application of decision tree, artificial neural networks, and adaptive neuro-fuzzy inference system on predicting lost circulation: A case study from Marun oil field. J. Petrol. Sci. Eng. 2019, 177, 236–249.

- Kopal, I.; Harničárová, M.; Valíček, J.; Krmela, J.; Lukáč, O. Radial basis function neural network-based modeling of the dynamic thermo-mechanical response and damping behavior of thermoplastic elastomer systems. Polymers 2019, 11, 1074.

- Ghorbani, M.A.; Zadeh, H.A.; Isazadeh, M.; Terzi, O. A comparative study of artificial neural network (MLP, RBF) and support vector machine models for river flow prediction. Environ. Earth Sci. 2016, 75, 476.

- Yang, C.; Ma, W.; Zhong, J.; Zhang, Z. Comparative Study of Machine Learning Approaches for Predicting Creep Behavior of Polyurethane Elastomer. Polymers 2021, 13, 1768.

- Izonin, I.; Tkachenko, R.; Dronyuk, I.; Tkachenko, P.; Gregus, M.; Rashkevych, M. Predictive modeling based on small data in clinical medicine: RBF-based additive input-doubling method. Math. Biosci. Eng. 2021, 18, 2599–2613.

- Sun, Y.; Cao, M.; Sun, Y.; Gao, H.; Lou, F.; Liu, S.; Xia, Q. Uncertain data stream algorithm based on clustering RBF neural network. Microprocess. Microsyst. 2021, 81, 103731.

- Oyang, Y.-J.; Hwang, S.-C.; Ou, Y.-Y.; Chen, C.-Y.; Chen, Z.-W. Data classification with radial basis function networks based on a novel kernel density estimation algorithm. IEEE Trans. Neural Netw. Learn. Syst. 2005, 16, 225–236.

- Zadeh, L.A. Fuzzy logic. Computer 1988, 21, 83–93.

- Esmaeilnezhad, E.; Ranjbar, M.; Nezam abadi-pour, H.; Shoaei Fard Khamseh, F. Prediction of the best EOR method by artificial intelligence. Pet. Sci. Technol. 2013, 31, 1647–1654.

- Buragohain, M.; Mahanta, C. A novel approach for ANFIS modelling based on full factorial design. Appl. Soft Comput. 2008, 8, 609–625.

- Afriyie Mensah, R.; Xiao, J.; Das, O.; Jiang, L.; Xu, Q.; Okoe Alhassan, M. Application of Adaptive Neuro-Fuzzy Inference System in Flammability Parameter Prediction. Polymers 2020, 12, 122.

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper Optimisation Algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

- Kim, C.; Batra, R.; Chen, L.; Tran, H.; Ramprasad, R. Polymer design using genetic algorithm and machine learning. Comput. Mater. Sci. 2021, 186, 110067.

- Zhu, W.; Rad, H.N.; Hasanipanah, M. A chaos recurrent ANFIS optimized by PSO to predict ground vibration generated in rock blasting. Appl. Soft Comput. 2021, 108, 107434.

- Baek, Y.; Kim, H.Y. ModAugNet: A new forecasting framework for stock market index value with an overfitting prevention LSTM module and a prediction LSTM module. Expert Syst. Appl. 2018, 113, 457–480.

- Tabaraki, R.; Khodabakhshi, M. Performance comparison of wavelet neural network and adaptive neuro-fuzzy inference system with small data sets. J. Mol. Graph. Model. 2020, 100, 107698.

- Kauffman, G.W.; Jurs, P.C. Prediction of surface tension, viscosity, and thermal conductivity for common organic solvents using quantitative structure property relationships. J. Chem. Inf. Comput. Sci. 2001, 41, 408–418.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).