1. Introduction

Rapid recent progress in soft robotics has enabled more applications of robots in human interaction and communication. Communication practices vary with the purpose of the robot and may be verbal or non-verbal. Recently, physical touch communication between humans and robots has been deemed indispensable, and it is much more common than verbal delivery in almost every social robot [1]. Examples include vending machine interfaces, service robots in restaurants or shops, and even smartphones. One practical application of social robots in health and therapy is nursing robots in hospitals for physical and intellectual rehabilitation [2] or in children’s healthcare experiences [3]. A multisensory environment (MSE) module as part of a robot system can be defined as a space equipped with sensory materials that provide users with visual, auditory, and tactile stimulation, usually with the aim of offering stimulating or relaxing experiences to individuals with cognitive and behavioral impairments, including people with Profound Intellectual and Multiple Disabilities (PIMD) [4,5].

People with PIMD are characterized by severe cognitive and/or sensory disabilities, which lead to very intensive support needs. They are developmentally equivalent to a two-year-old and have other physical problems, such as cerebral vision impairment. Individuals with PIMD often have difficulties in communication, so caregivers must always be alert to their few communicative signs in order to interpret the individual’s messages. An MSE integrated with items that stimulate multiple senses is often used to elicit positive responses in interactions involving people with PIMD, and sometimes for therapeutic reasons [4,5]. These devices are typically equipped with aromatherapy, music, adjustable lighting, a projector, a rocking chair, bean bags, and weighted blankets [4,5]. However, many MSE products have restricted engagement capabilities and may elicit only a limited set of responses. Therefore, there is demand for more versatile MSE devices that interact safely through means such as touch [6], sound [7], and light [8,9] while generating visual, auditory, or tactile feedback.

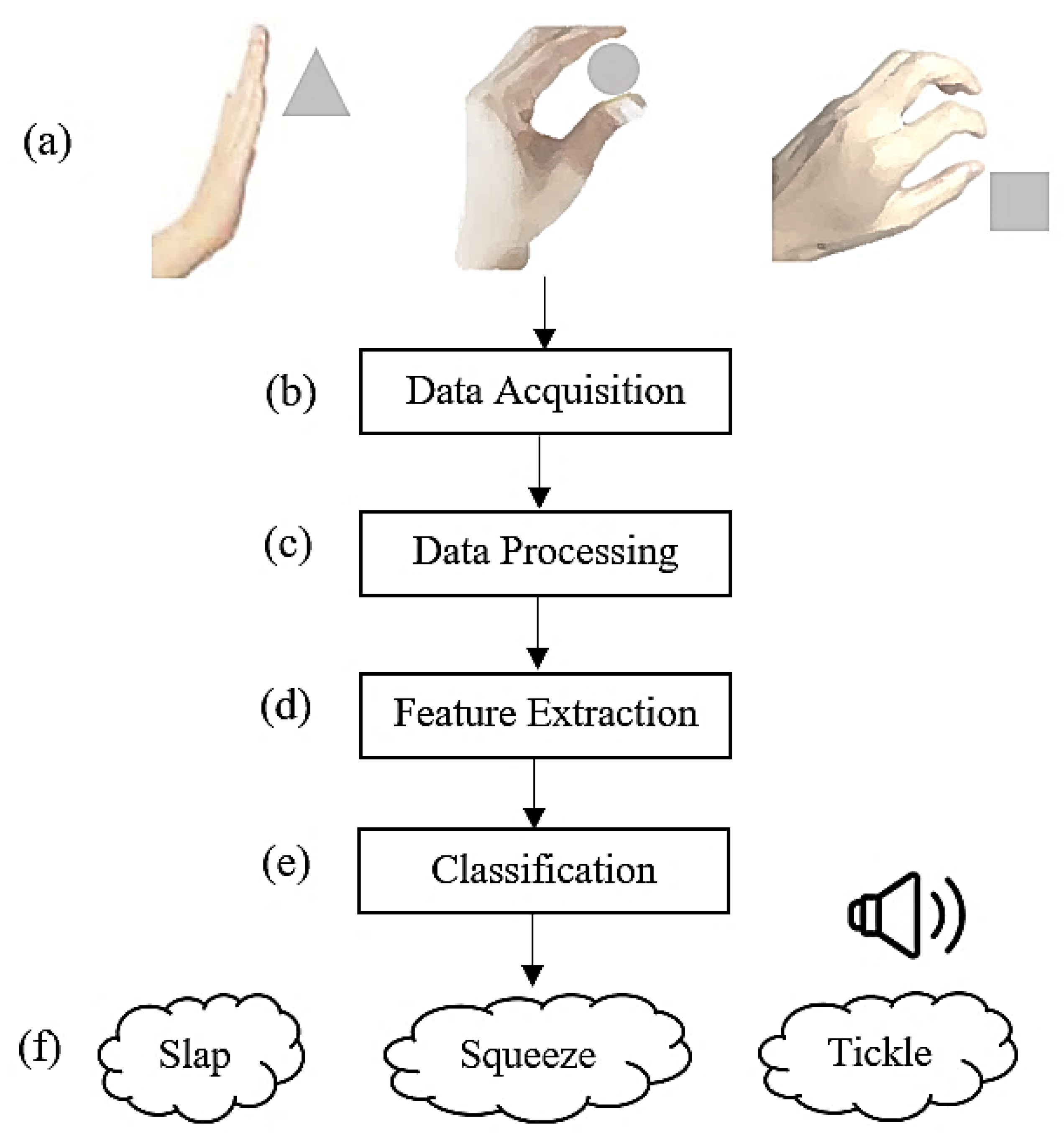

This study presents a multisensory soft robotics module with potential use for educational and therapeutic purposes. The designs need to be sturdy, safe, modular, and adjustable, as each client has different preferences and personalities. This approach supports more creative and personalized interactive communication modules by giving the robotic body a voice in response to touch. Hence, a safe modular product made of soft material, specifically silicone, is introduced to translate excitement levels by detecting touch gestures and classifying them onto a scale of three different sounds. This study aims to recognize three different types of touch gestures, categorized by the higher-level human intent expressed through affective touch, so that they reflect angry (slap), restful (tickle), and playful (squeeze) emotions.

1.1. Touch Gesture Sensing in Robots

Humans can use gestures to express attitudes and desires. To bring the computer closer to human life, this project focuses on applying touch gestures to human–robot interactions. This could help build a concept of how humans and robots can understand each other. The role that the sense of touch plays in emotional communication in humans and animals has been widely studied, with findings relating it to attachment, bonding, stress, and even memory [10,11]. Touch, as a common communicative gesture, is an important aspect of social interaction among humans. Several works use touch as a valid modality for ascertaining the user’s intention and report evidence that touch is a powerful means of communicating emotions. Hence, touch is a natural way of interaction that can contribute to improving human–robot interaction (HRI) and promoting intelligent behavior in social robots.

In robotics, various types of sensors are used to classify touch gestures. For example, a set of microphones has been used to classify swipe, tap, knock, and stomp gestures with high accuracy, in the range of 91–99% [12]. However, background noise, and the risk that it interferes with the touch signal, has been deemed the main drawback. In addition, the application of acoustic touch detection and classification is limited to small objects and solid materials, such as plastic or metal. Other tactile sensing technologies, such as resistive, capacitive, and optical transducers, could be considered in the MSE module [13]. However, optical touch gesture detection and classification on soft materials has not yet met widespread acceptance because changing lighting conditions pose classification reliability issues [14]. In addition, some of these approaches can only sense relatively simple motion and are difficult to embed inside deforming surfaces or elongating soft robots. This work demonstrates a general sensing strategy using low-cost piezoelectric accelerometers and its effectiveness for touch gesture classification across soft modules of various sizes. Polyvinylidene fluoride (PVDF) sensors were selected for their flexibility, small size, and high sensitivity.

The piezoelectric PVDF sensor can be used over a wide range of frequencies and is not bandlimited, so the amount of signal transferred is significant [15]. In addition, the lightweight and flexible properties of this sensor help lower the mechanical impedance [15]. The PVDF film sensor is also thin and flexible; hence, it conforms to any type of surface and can easily be pasted onto different materials. Compared to strain gauges, whose installation process is complicated, PVDF sensors are easier to install and can suit any size and geometry.

1.2. Machine Learning Classification

The real-time classification and recognition of physical interactions, or proprioception, is a challenging problem for soft robots due to the many degrees of freedom (DOFs) and lack of available off-the-shelf sensors. In this work, low-cost piezoelectric sensors are used based on a data-driven machine learning (ML) strategy to classify proprioception input signals accurately in real time. Machine learning algorithms are also applied to determine the relationship between sensor data and shape-oriented parameters. To do so, the signals of different gestures on different soft modules with various shapes are acquired to create a dataset to train the model.

Different classifier algorithms have been used in ML for touch and gesture classification in human–robot interaction, including linear and quadratic support vector machines (SVM) [16], temporal decision trees (TDT) [17], and k-nearest neighbors (kNN) [18]. However, the most suitable classification method has been shown to vary with the type of sensor and the application. Therefore, this study evaluated different SVM classifier algorithms, using the machine learning package in MATLAB, to classify different tactile gestures.
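To make the comparison of SVM variants concrete, the following is a minimal sketch in Python with scikit-learn rather than the MATLAB package used in this study. It assumes that the "Quadratic" and "Cubic" SVMs correspond to polynomial kernels of degree 2 and 3. The two-dimensional features are synthetic placeholders, not the PVDF sensor data, and the helper names `make_synthetic_features` and `compare_svm_kernels` are hypothetical.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

GESTURES = ["tickle", "squeeze", "slap"]


def make_synthetic_features(n_per_class=60, seed=0):
    """Return placeholder 2-D features: one Gaussian cluster per gesture."""
    rng = np.random.default_rng(seed)
    centers = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])  # assumed values
    X = np.vstack([rng.normal(c, 0.5, size=(n_per_class, 2)) for c in centers])
    y = np.repeat(np.arange(len(GESTURES)), n_per_class)
    return X, y


def compare_svm_kernels(X, y, seed=0):
    """Fit linear, quadratic, and cubic SVMs and return held-out accuracy."""
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=seed
    )
    kernels = {
        "linear": SVC(kernel="linear"),
        "quadratic": SVC(kernel="poly", degree=2, coef0=1.0),
        "cubic": SVC(kernel="poly", degree=3, coef0=1.0),
    }
    scores = {}
    for name, svc in kernels.items():
        # Standardize features so no single feature dominates the kernel.
        model = make_pipeline(StandardScaler(), svc)
        model.fit(X_tr, y_tr)
        scores[name] = model.score(X_te, y_te)
    return scores


X, y = make_synthetic_features()
scores = compare_svm_kernels(X, y)
for name, acc in scores.items():
    print(f"{name}: held-out accuracy = {acc:.3f}")
```

On real sensor features the ranking of the kernels may differ from what this toy data suggests, which is why several variants are trained and compared on held-out data.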

The rest of the paper is structured as follows: Section 2 describes the proposed system for touch detection and classification and details the hardware used in this work, as well as the fabrication of the soft robot module. Section 3 describes the feature collection process with the validation and testing of our dataset and discusses the results obtained. Finally, the conclusions of this work are presented in Section 4.

3. Results and Discussion

A high-pass Chebyshev Type 2 filter was employed to process the signals, which simplifies feature extraction and increases accuracy. The stopband frequency was set to 0.4 Hz and the stopband attenuation to 60 dB. The signals are in the time domain, and the results for the first 30 s are shown in Figure 6. These results were obtained by applying the gestures to the silicone module continuously. The red and blue traces represent the outputs of sensor 1 and sensor 2 on the silicone module. Furthermore, the graphs show that each gesture characteristic could be distinctly received by the sensors, producing a voltage linearly related to the magnitude of the touch force applied. This was deduced from the small but dense variation of the tickling graph, the large and widespread variation of the squeezing graph, and the sharp and widespread variation of the slapping graph.
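The filtering step can be sketched as follows in Python with SciPy, as an illustration rather than the MATLAB implementation used in this study. The 0.4 Hz stopband edge and 60 dB attenuation come from the text. The sampling rate, the filter order, and the use of zero-phase (forward-backward) filtering are assumptions, since they are not stated here. The test signal is synthetic.

```python
import numpy as np
from scipy import signal

FS_HZ = 100.0         # assumed sampling rate (not given in the text)
FILTER_ORDER = 4      # assumed filter order (not given in the text)
STOP_HZ = 0.4         # stopband edge frequency from the text
STOP_ATTEN_DB = 60.0  # stopband attenuation from the text


def design_highpass(fs_hz=FS_HZ):
    """Design a high-pass Chebyshev Type 2 filter as second-order sections."""
    return signal.cheby2(
        FILTER_ORDER, STOP_ATTEN_DB, STOP_HZ,
        btype="highpass", output="sos", fs=fs_hz,
    )


def remove_drift(raw, fs_hz=FS_HZ):
    """Suppress the DC offset and slow drift of a sensor trace.

    Zero-phase filtering is an offline choice; a real-time system would
    use a causal filter such as signal.sosfilt instead.
    """
    return signal.sosfiltfilt(design_highpass(fs_hz), raw)


# Synthetic 30 s trace: a constant offset plus a 5 Hz oscillation.
t = np.arange(0.0, 30.0, 1.0 / FS_HZ)
raw = 2.0 + np.sin(2.0 * np.pi * 5.0 * t)
filtered = remove_drift(raw)
print(f"mean before: {raw.mean():.3f}, mean after: {filtered.mean():.3f}")
```

Removing the offset in this way centers each trace around zero, so that amplitude-based features reflect the gesture rather than the sensor baseline.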

The results are presented in five cases. The first is a classification based solely on the round shape. Comparisons between round and square, round and triangle, and triangle and square are the second, third, and fourth cases, respectively. The final case combines all three shapes into one classification session.
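The number of classes in each case follows directly from the shapes involved, since every shape contributes three gestures. The sketch below enumerates them; the case numbering follows the detailed descriptions later in this section, and the label format `shape/gesture` is a hypothetical naming choice.

```python
from itertools import product

GESTURES = ("tickle", "squeeze", "slap")

# Shapes included in each of the five classification cases.
CASES = {
    "case 1": ("round",),
    "case 2": ("round", "square"),
    "case 3": ("round", "triangle"),
    "case 4": ("triangle", "square"),
    "case 5": ("round", "triangle", "square"),
}


def class_labels(shapes):
    """Return one label per (shape, gesture) combination."""
    return [f"{shape}/{gesture}" for shape, gesture in product(shapes, GESTURES)]


class_counts = {name: len(class_labels(shapes)) for name, shapes in CASES.items()}
for name, count in class_counts.items():
    print(name, count)
```

This gives 3 classes for the single-shape case, 6 for each pair of shapes, and 9 when all three shapes are combined.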

In the first case, the classification consists of three gestures on the round shape only. Therefore, the classifying variables are tickling, squeezing, and slapping. The model was trained with all SVM algorithms, and three of them (Linear, Quadratic, and Cubic SVM) returned the highest accuracy of 99.6%, as shown in Figure 7a. The complexity of this case is the lowest because the model only needs to learn three types of gestures, and the amount of data is not large. Therefore, a linear SVM is sufficient to train the model in this case. The confusion matrix (Figure 7b) shows that all the testing samples on the round module were correctly classified, with no misclassified samples. The accuracy of the model on the testing data in this case is therefore 100%.
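For reference, the accuracy read from a confusion matrix is the sum of its diagonal divided by the total number of samples. The following minimal sketch shows the calculation; the ten labels are made-up placeholders, not the testing data of this study.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Build a matrix whose rows are true classes and columns predictions."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for truth, guess in zip(true_labels, predicted_labels):
        matrix[index[truth]][index[guess]] += 1
    return matrix


def accuracy(matrix):
    """Return the fraction of samples on the diagonal of the matrix."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


classes = ("tickle", "squeeze", "slap")
# Placeholder labels for illustration only.
true_labels = ["tickle"] * 4 + ["squeeze"] * 3 + ["slap"] * 3
predicted = ["tickle", "tickle", "tickle", "slap",
             "squeeze", "squeeze", "squeeze",
             "slap", "slap", "tickle"]

matrix = confusion_matrix(true_labels, predicted, classes)
print(matrix, accuracy(matrix))
```

A matrix with all off-diagonal entries equal to zero, as reported for the round module, yields an accuracy of exactly 1.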

In the second case, the model classifies three types of touch on two shapes: round and square. This raises the number of classifying variables to 6. All SVM algorithms were again used to train the model. Fine Gaussian SVM returned the highest accuracy, up to 100%. However, the Fine Gaussian SVM model did not perform well on the testing data. Therefore, the Cubic SVM model was used, as it produced the fewest misclassifications on the testing data. The confusion matrix shows an accuracy of 92.92%, as shown in Figure 8a. The third case considered the classification of touch gestures on the round and triangle shapes, where the Cubic SVM model was again used, achieving the highest classification accuracy of 97.5%, as shown in the confusion matrix in Figure 8b. The fourth case is similar to the two earlier ones, but classifies the three touch gestures on the square and triangle shapes, as shown in Figure 8c. However, the Cubic SVM model for this case had the lowest accuracy, 84.58%.

After testing with groups of two shapes, the final case combines all three shapes with the acceleration response of three touch gestures on each shape. The total number of classifying variables is 9. With this larger dataset, Quadratic SVM showed the highest accuracy of 89.4%. Since the dataset for this case is the largest, the number of cross-validation folds was set to 5. Cross-validation was applied to protect against overfitting by partitioning the dataset into folds and estimating the accuracy on each fold. As shown in the confusion matrix, the model could still classify with an acceptable accuracy of 85%, considering the larger dataset.
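The partitioning behind 5-fold cross-validation can be sketched as follows. This is a generic illustration, not the MATLAB routine used in this study. The sample count of 90 is an arbitrary placeholder, the function names are hypothetical, and averaging the per-fold accuracies is the common convention rather than a detail stated here.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Split sample indices into k (training, validation) pairs.

    Each sample appears in exactly one validation fold.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        validation = folds[i]
        training = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((training, validation))
    return splits


def cross_validated_accuracy(fold_accuracies):
    """Average the accuracies measured on the individual folds."""
    return sum(fold_accuracies) / len(fold_accuracies)


splits = k_fold_indices(n_samples=90, k=5)
for training, validation in splits:
    print(len(training), len(validation))
```

Because the model is always scored on a fold it was not trained on, a large gap between training and cross-validated accuracy points to overfitting.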

As per the confusion matrix results (Figure 8d), the touch gestures of all the testing data could be successfully classified. However, in cases where the model classified the three gestures on different shapes, the maximum accuracy dropped. In most cases, the misclassified samples are tickles and slaps. This could be explained by the fact that tickles and slaps were performed on the surface of the module. In contrast, the squeezing gesture is applied from two sides of the module. Moreover, round, triangle, and square are three different shapes, so the touchpoints of squeezing can be completely different. Thus, errors in classifying squeezes are less likely to occur.

The success rates of the three touches on all shapes are summarized in Table 1. The tickling signals on the round and square shapes are mostly similar: they have an equal number of peaks, occur over a similar duration, and have a similar pattern ratio. This similarity is also the cause of the errors in the two cases of round vs triangle and triangle vs square. The round vs triangle case has the fewest misclassified samples among the three cases that compare gestures on pairs of shapes. The case with the most misclassified samples is triangle vs square, where the errors occur mainly in the classification of slapping samples of the two shapes. In brief, across all cases, the shape of the module affected only the squeezing gesture. Meanwhile, tickling and slapping are less dependent on the shape of the module because they are performed only on the surface, which is similar among all tested modules.

The tickling action was almost the same on all shapes. This was because the action was applied with the fingertips in light contact with the surface, which was the same for all shapes. The same held for the slapping gesture, which is quick and forceful, causing a burst in voltage followed by a swift drop. However, a minor difference can be seen right before the stabilized value. Squeezing gestures showed a slight difference between the triangle and the other two shapes. This apparently stems from the shape of the module. As stated above, the squeezing gesture was performed by placing pressure on opposite sides of the module. Therefore, the width of the shapes contributed to the small variation: the width of the triangle was the smallest of the three shapes, while the round and square shapes were both 100 mm in dimension.
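The signal characteristics discussed above, such as the number of peaks and the size of the voltage burst, can be expressed as simple time-domain features. The sketch below is illustrative only: the feature definitions, the threshold, and the sample values are assumptions and are not the feature set used in this study.

```python
def count_peaks(samples, threshold):
    """Count local maxima that rise above the given threshold."""
    return sum(
        1
        for i in range(1, len(samples) - 1)
        if samples[i] > threshold and samples[i - 1] < samples[i] >= samples[i + 1]
    )


def peak_to_peak(samples):
    """Return the difference between the largest and smallest sample."""
    return max(samples) - min(samples)


# Made-up voltage traces: many small peaks versus one large burst.
tickle_like = [0.0, 0.2, 0.0, 0.2, 0.0, 0.2, 0.0]
slap_like = [0.0, 0.1, 3.0, 0.5, 0.0, 0.0, 0.0]

for name, trace in (("tickle-like", tickle_like), ("slap-like", slap_like)):
    print(name, count_peaks(trace, threshold=0.1), peak_to_peak(trace))
```

Features of this kind separate a dense, low-amplitude trace from a single sharp burst, but they would not by themselves capture the shape-dependent differences seen in squeezing.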

4. Conclusions

A soft module that responds to physical stimulation by detecting and classifying human touch gestures with machine learning was developed to support stimulating or relaxing experiences for individuals with cognitive and behavioral impairments, including people with Profound Intellectual and Multiple Disabilities (PIMD), and for safe social robots. The sound reaction resulting from the type of touch could be used therapeutically to address the user’s emotional problems. The study showed how a computer model was trained to recognize three common human touch gestures (tickling, squeezing, and slapping) on soft modules of different shapes (round, triangle, and square).

First, soft modules made of Ecoflex 00-50 silicone were fabricated using 3D printing in different shapes. The touch gesture signals were then acquired on all three shapes by means of two PVDF accelerometers. Sufficient training and testing data were used for classification via the SVM algorithm in the machine learning package in MATLAB. The model was trained with the data of three gestures from one shape, from different pairs of shapes, and from all three shapes together. The validation results were acceptable: the highest accuracy was 100% when differentiating the types of touch, and the lowest accuracy was 84.58% when classifying both shape and touch gesture. In detail, only the squeezing gesture is affected by the module’s shape. Tickling and slapping, on the other hand, are less affected by the shape of the module since they are conducted solely on the surface, which is consistent across all the evaluated modules. The thickness of the module critically affected the efficiency of the classifying process; the thinner the module, the noisier the data became.

The suggested module may be used by a wider population to inspire curiosity, reduce stress, and improve well-being via the ability to identify and categorize touch gestures, either as a stand-alone system or in conjunction with conventional touch sensing methods. In future versions, the module should operate wirelessly so that the user can carry it and place it anywhere. The design of the complete module should also be more decorative and user-friendly, i.e., the color and shape of the module could be improved and offered in different options, with all the electronic components hidden and 3D-printed. An inflatable silicone robot is a further direction. In addition, 4D printing could make more adjustable and customizable modules possible.