Abstract

Precision agriculture is a crucial way to achieve greater yields by making use of the natural resources in a diverse environment. The yield of a crop may vary from year to year depending on variations in climate, soil parameters and the fertilizers used. Automation in the agricultural industry moderates the usage of resources and can increase the quality of food in the post-pandemic world. Agricultural robots have been developed for crop seeding, monitoring, weed control, pest management and harvesting. Physical counting of fruitlets, flowers or fruits at various phases of growth is a labour-intensive and expensive procedure for crop yield estimation. Remote sensing technologies offer accuracy and reliability in crop yield prediction and estimation. Automated image analysis with computer vision and deep learning models provides precise field and yield maps. In this review, it has been observed that the application of deep learning techniques has provided better accuracy for smart farming. The crops considered in the study are fruits such as grapes, apples, citrus and tomatoes, and field and vegetable crops such as sugarcane, corn, soybean, cucumber, maize and wheat. The research works surveyed in this paper are available as products for applications such as robot harvesting, weed detection and pest infestation. The methods which made use of conventional deep learning techniques have provided an average accuracy of 92.51%. This paper elucidates the diverse automation approaches for crop yield detection techniques with virtual analysis and classifier approaches. The technical limitations of deep learning techniques and directions for future investigation are also surveyed. This work highlights the machine vision and deep learning models which need to be explored for improving automated precision farming, particularly during this pandemic.

1. Introduction

Smart farming helps farmers plan their work with the data obtained from agricultural drones, satellites and sensors. The detailed topography, climate forecasts, temperature and acidity of the soil can be accessed by sensors positioned on the agricultural farms. Precision agriculture provides farmers with compiled statistics to:

- create an outline of the agricultural land

- detect environmental risks

- manage the usage of fertilizers and pesticides

- forecast crop yields

- organize for harvest

- improve the marketing and distribution of the farm products.

According to the 2011 census, nearly 54.6% of India’s entire workforce is engaged in agriculture and associated sectors, which in 2017–2018 accounted for 17.1% of the nation’s Gross Value Added. To safeguard against the risks inherent to agriculture, the Ministry of Agriculture and Farmers Welfare announced an insurance scheme for crops in 1985. Problems have emerged in the technology the scheme uses to collect data and to reduce delays in responding to farmers’ insurance claims. Crop yield estimation is mandatory for this purpose, and yields are recorded through Crop Cutting Experiments (CCE) conducted in regions of the states by the Government of India. The Directorate of Economics and Statistics presently guides Crop Cutting Experiments for 13 chief crops under the General Crop Estimation Scheme. To improve the quality of the statistics collected in Crop Cutting Experiments, Global Positioning System (GPS) data such as the elevation, area, latitude and longitude of fields are being recorded by remote sensing [1,2]. The vegetation indices acquired through satellite images track the phenological profiles of the crops throughout the year [3,4].

Conventional crop yield estimation requires crop acreages along with sample assessments that depend on crop cutting experiments. Crop yield data is the most essential data for area-yield insurance schemes such as Pradhan Mantri Fasal Bima Yojana (PMFBY) in India. The PMFBY scheme was launched to support Indian farmers financially during times of crop failure caused by natural disasters or pest attacks [5]. To implement these national scale agricultural policies, crop cutting experiments are carried out by government officers in various regions in different districts of the state. Because the costs involved are high, the desired crop data from large specific regions is limited to small scale crop cutting experiments and surveys of small zones. The present-day industry methods for yield estimation use automated computer vision technology to detect and estimate the count of various harvests [6]. The progress in computing capabilities has provided appropriate techniques for small area yield estimation. The proficiency of crop yield estimation can be improved by using remote sensing data for a considerably larger area [7]. Satellite images are quantitatively processed to obtain high accuracy in agricultural applications such as crop yield estimation [8].

Crop yield prediction for citrus trees has been made possible by counting the number of flowers and comparing this number with the count of fruits prior to the harvesting stage [6]. The bloom intensity in an orchard influences crop management in the early season. The estimation of flower count with a deep learning model is effective for crop yield prediction, thinning and pruning, which impact the fruit yield [9]. The prediction of vine yield helps the farmer to prepare for harvest, transport the crop and plan for distribution in the market. Plant diseases during the flowering and fruit development stages may affect crop yield forecasts. Deep learning classifier models have been developed to identify crop diseases in agricultural farms under both controlled and real cultivation environments [10,11].

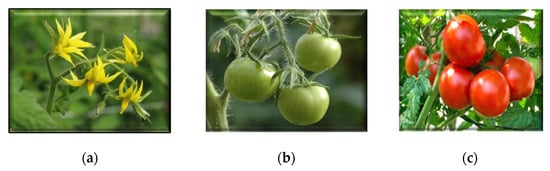

At Iwate University, Japan, a robotic harvester with a machine vision system was able to recognize Fuji apples on the tree and estimate the fruit yield with an accuracy of 88%. The bimodal distribution of the enhanced image with its histogram uses optimal thresholding segmentation to extract the fruit portion from the background [12]. The maturity level for tomato berries can be detected with a supervised backpropagation neural network classifier, with the green, orange and red color extraction technique as explained in [13]. Agricultural robots execute their farm duties either as self-propelled autonomous vehicles or manually controlled smart machines. The autonomous vehicles may be an unmanned aerial vehicle (UAV) or an unmanned ground vehicle (UGV) guided by GPS and a global navigation satellite system (GNSS). The autonomous agricultural tasks that can be accomplished by the larger robots range from seeding to harvesting and post-harvesting tasks as well in some cases.

Automation of farm duties in agriculture must face challenges due to lighting conditions and crop variations [14,15]. In Norway, an autonomous strawberry harvester was developed considering light variations. The machine vision system changed its color threshold in response to alterations in the light intensity [16]. Robot harvesting machines achieve lower accuracy in spotting and picking crops due to occlusions caused by leaves and twigs [17,18]. Modern machine vision techniques and machine learning models with assorted sensors and cameras can overcome these inadequacies. The basic system of a robot harvester must perform functions such as: detect the fruit or detect the disease, pick the fruit/berry without damaging it, guide the harvester to navigate the field, maneuver irrespective of the lighting and weather conditions, be cost-effective and have a simple mechanical design [19].

As this is a review paper, we have extensively surveyed the merits and demerits of the deep learning techniques used in smart agriculture. A keyword-based search was performed for transactions, journal and conference papers indexed in scientific databases such as IEEE Xplore, Scopus, Wiley Online Library and ScienceDirect. We used “machine vision” and “deep learning techniques in agriculture” as keywords and filtered the papers for various agricultural applications. This review intends to help researchers further explore machine vision techniques and the various classifiers of deep learning models used in smart farming.

The outline of our review is as follows: Section 2 discusses the various image acquisition approaches at ground level and from an aerial view. The modes of automation for diverse agricultural applications are surveyed in Section 3. Section 4 deals with the image preprocessing techniques involved in enhancing the raw images. Section 5 lists the image segmentation approaches for distinguishing fruit/flower pixels from the background pixels. Section 6 emphasizes the selection and extraction of features for the deep classifier models. Section 7 discusses further literature on classifiers used in deep learning models for various agricultural applications, followed by the available datasets. Section 8 expands the survey with the performance metrics used to compare the existing and proposed algorithms. Section 9 interprets the pros and cons of the existing approaches. Section 10 concludes the survey with a discussion of potential future work.

2. Materials and Methods

Many studies on machine vision and deep learning models for fruit and flower detection, counting and harvesting have been reported. Accurate yield estimation for diverse vegetable and fruit crops is essential for better harvesting, marketing and logistics planning. Bloom intensity estimation effectively provides crop yield predictions, and fruit detection with machine vision techniques facilitates yield estimation. Accurate prediction of yield helps farmers to improve the quality of the crop at an early stage.

This review deals with diverse research issues in agricultural automation such as:

- image acquisition using handheld cameras under different lighting conditions;

- approaches employing image segmentation techniques;

- identification of features with various descriptors;

- improving the classification rate with deep learning models;

- achieving high accuracy and reducing the error rates;

- the essential challenges to be tackled in the future.

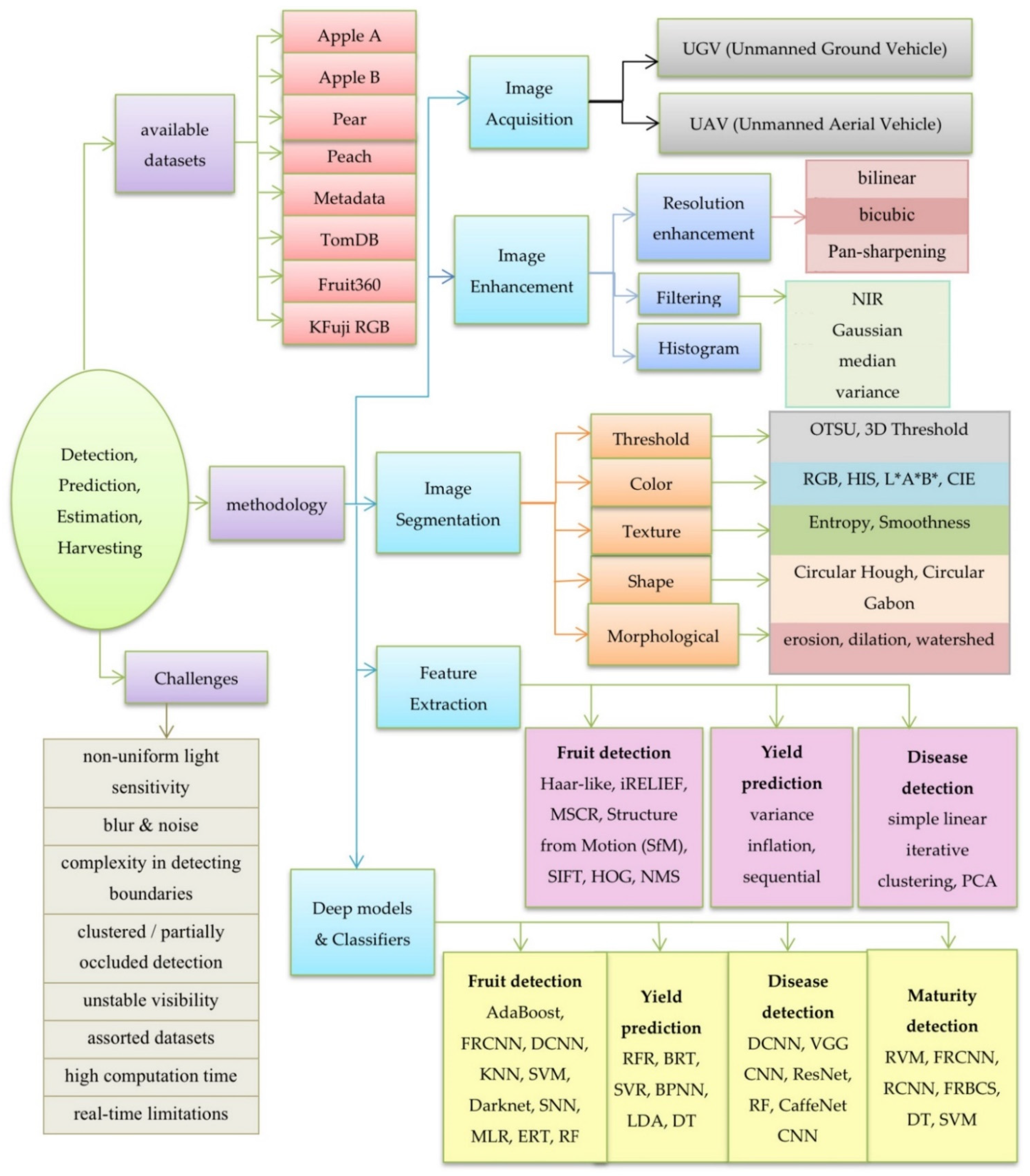

The outcome of this research is expected to reduce labor and time consumption and to support cost-effective machinery for farmers in precision farming. The stages of the literature review are shown in Figure 1.

Figure 1.

Summary of the literature search work.

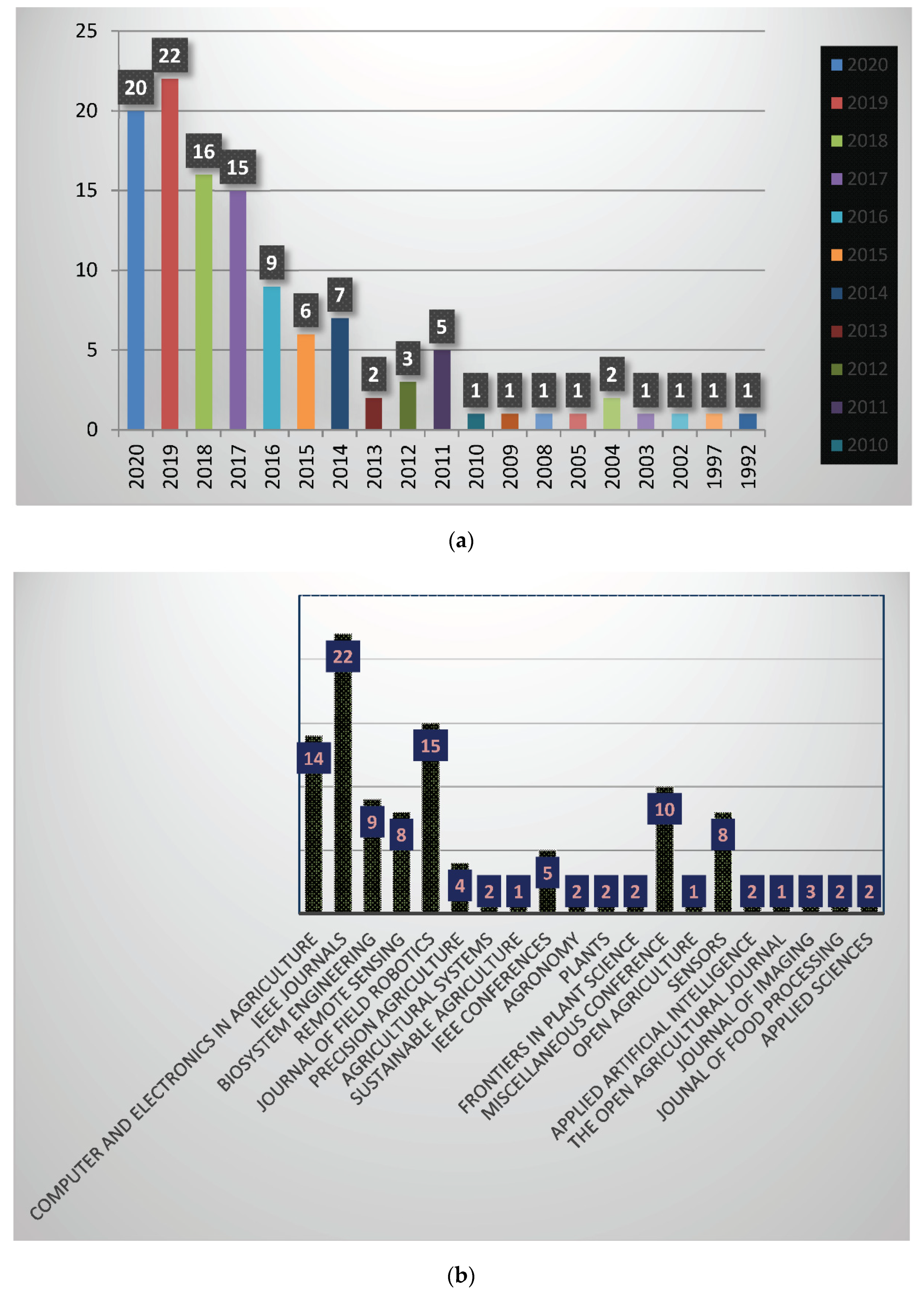

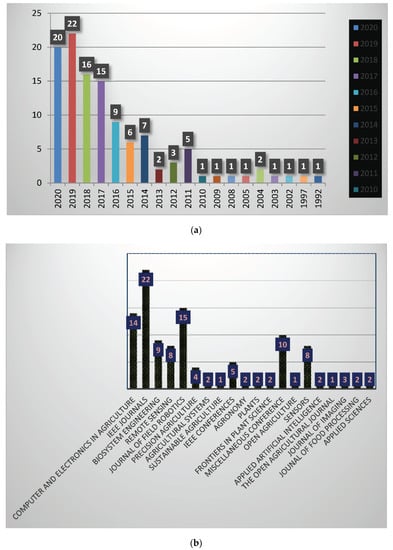

Several publicly available datasets were used in this study. The Apple A dataset provides a collection of apple flower images recorded with a hand-held camera and the Apple B dataset provides a collection of apple flower images taken by a utility vehicle. Figure 2a presents the distribution of papers taken for the literature review. From the bar chart, it is evident that 50% of the papers taken for study were published in the years 2018, 2019 and 2020. Figure 2b presents the distribution of agriculture-related articles in each journal taken for the literature study.

Figure 2.

(a) Year-wise distribution of papers taken for the literature review; (b) distribution of papers in each journal.

2.1. Crop Image Acquisition

Machine vision systems attempt to provide automatic analysis and image-based inspection data for guidance and control of the machine by integrating the accessible methods in innovative ways for solving real-time problems [20]. The so-called agrobots execute their farm duties through developed machine learning technology and robot vision systems [21]. The mapping, navigation and detection that an autonomous agrobot needs to control and plan its execution can be achieved with these machine vision algorithms [22]. The machine vision system requires an assortment of image processing technologies such as filtering, thresholding, segmentation, color-texture-shape analysis, pattern recognition, edge detection, blob detection and diverse neural network processing models. Machine learning models combined with machine vision techniques enhance the performance of the automated system to perform farm duties more precisely.

2.1.1. Crop Image Acquired by Cameras at Ground Level

RGB images are captured with cameras on a small or larger scale depending on the area of the field. The images of the plants are captured utilizing common digital cameras with a high image resolution [23]. The recordings were taken under various environmental conditions. The lighting conditions include natural sunlight, with or without shadows, and artificial illumination or infrared lighting at night. Fusion of RGB with near infrared (NIR) multimodal pictures acquired throughout the day as well as at night was used for fruit detection [24]. To enhance the fruit detection, pixel-based fusion techniques like the Laplacian pyramid transform (LPT) and fuzzy logic were tested. Fuzzy logic on the grey image performed better than LPT based on image fusion indices. The segmentation success rate was 0.89 for fuzzy logic and 0.72 for LPT. Fusion of a visible image and a thermal infrared image enhanced fruit detection [25]. RGB images were analyzed to estimate the chlorophyll content in potato plants [26] and the maturity of tomato plants. Image acquisition cameras provide high-resolution real-time pictures that are further processed depending on the requirements of the machine. Artificial active lighting, with a ring flash fastened around the lenses, enhances the system [23]. Image acquisition at the bloom stage (flowering) was used to predict the crop yield with image processing algorithms [27]. The images acquired as training data need to be chosen carefully. The performance of the selected neural network architecture should not depend unduly on the size of the training dataset. Machine vision techniques encounter specific problems owing to the configuration of the agricultural fields for image acquisition, such as:

- Natural illumination to detect the fruit/berries on the plant [28,29].

- Multiple recognition instances of the same fruit, acquired from subsequent images, which may lead to miscounting [30].

- Occlusion of fruits due to foliage, twigs, branches or additional fruit [14,31].

- Location of the camera with respect to distance and angle [32].

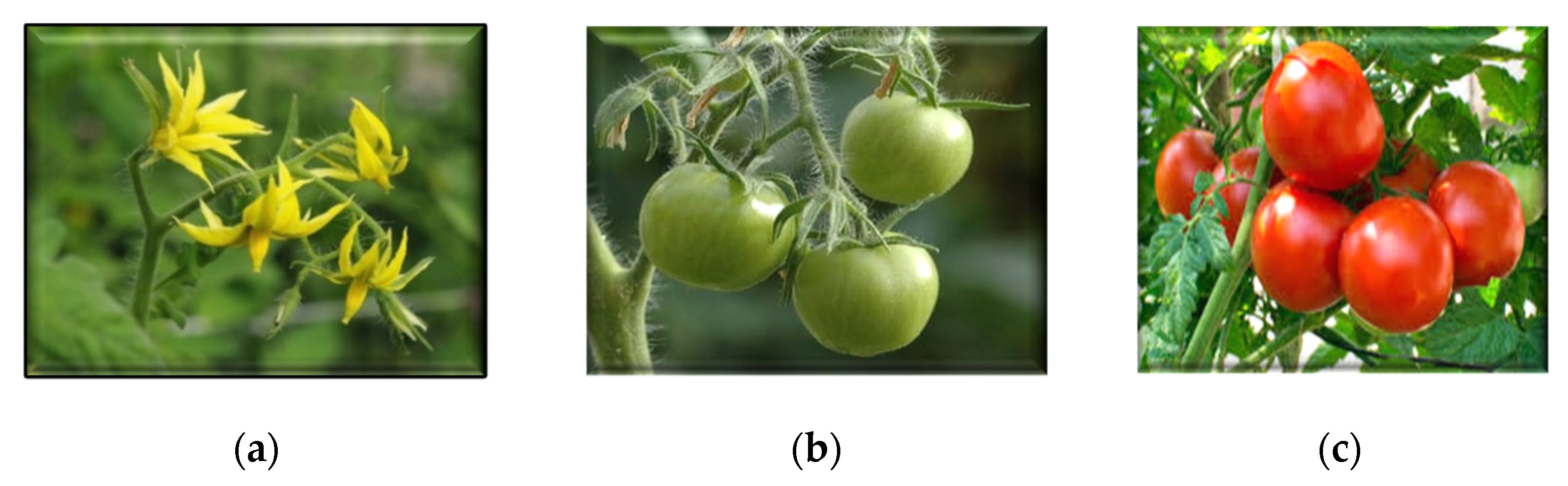

Sample dataset images of tomato crop phenology are shown in Figure 3.

Figure 3.

Phenology of tomato crop: (a) bloom stage; (b) immature tomatoes (c) mature tomatoes.

2.1.2. Crop Image Acquisition by Remote Sensing

The images obtained through Landsat 8 OLI have low resolution and a pan-sharpening technique is applied to calculate the vegetation indices [33,34,35]. The multispectral and hyperspectral images acquired through remote sensing were used for monitoring seasonally variable crop and soil status features such as crop diseases, crop biomass, the nitrogen content in leaves, weed and insect penetration, chlorophyll levels of leaves, moisture content, surface roughness, soil texture and soil temperature. Satellite images have low spatial resolution compared to images acquired via drones or in situ. The acquired remote sensing data therefore require correction for cloud scattering, together with radiometric, atmospheric and geometric correction, using assorted techniques for effective calibration [8]. Obstructions in remote sensing data may occur due to cloud coverage during the satellite overflight. Corn yield estimation was achieved using deep learning techniques with various data acquired from MODIS products, such as leaf area index (LAI), gross primary production (GPP), fraction of photosynthetically active radiation (FPAR), evapotranspiration (ET), soil moisture (SM) and enhanced vegetation index (EVI) [36,37,38,39,40]. Yield prediction is executed more effectively with RGB data than with normalized difference vegetation index (NDVI) images [41].
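As an illustration of the vegetation indices named above, the following minimal sketch computes NDVI and EVI per pixel from surface reflectance values. The function names and the sample reflectances are hypothetical; the EVI coefficients are the standard values commonly used with MODIS data and are stated here as an assumption, not as the settings of the cited works.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel.

    nir, red: surface reflectances in the range 0..1.
    """
    denom = nir + red
    return 0.0 if denom == 0 else (nir - red) / denom


def evi(nir, red, blue, gain=2.5, c1=6.0, c2=7.5, soil=1.0):
    """Enhanced vegetation index for one pixel.

    Defaults are the widely used MODIS coefficients (assumed here).
    """
    denom = nir + c1 * red - c2 * blue + soil
    return 0.0 if denom == 0 else gain * (nir - red) / denom


def ndvi_map(nir_band, red_band):
    """Apply NDVI pixel by pixel to two equally sized 2D lists."""
    return [[ndvi(n, r) for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir_band, red_band)]


# Hypothetical reflectances: dense canopy reflects strongly in NIR.
print(round(ndvi(0.5, 0.1), 3))
print(round(evi(0.5, 0.1, 0.05), 3))
```

Dense green vegetation gives index values well above zero, while bare soil gives values near zero, which is why time series of these indices can follow crop phenology.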

3. Autonomous Movers for Smart Farming

3.1. Unmanned Ground Vehicles (UGVs)

The autonomous ground vehicles for tree pruning, and blossom stage tasks such as fruit thinning, mowing, spraying pests, sensing, fruit harvesting and post harvesting were trained, reconfigured and reassigned for numerous operations. An autonomous prime mover could complete 300 km of driving in orchards with no supervision to reduce labor costs [42]. The autonomous navigation technique in row detection for tiny plants adapts a pattern with a Hough transform to assess the row spacing and lateral offset with real-time field data [28,43]. The localization, mapping, path planning and the agricultural field information with miscellaneous sensors guide autonomous ground vehicles to perform various farm duties. Obstacle-averting decisions in agricultural terrain are complicated. The fusion of sensors with multiple algorithms and multiple robots detects the defined obstacles, controls and navigates the agricultural environment [44,45,46]. The automation system increases the farm proficiency with the self-guided vehicles and autonomous execution of farm duties like spraying, pruning, mowing, thinning and harvesting. A phenotyping robot can be fast and more precise; a next-best view (NBV) algorithm collects the information of unknown obstacles to plan the plant phenotyping automatically [47].

The autonomous harvesting process entails the detection of targets by the machine vision model and the planning of the sequential task of grasping the real targets with manipulators or grippers. A sugarcane harvester combined with a machine vision algorithm detects damaged billets, consequently increasing the quality of the production [48]. An autonomous rice harvester with a combined robot performed harvesting, unloading and restarting with adequate accuracy [49]. Autonomous harvesting grippers with machine vision locate targets such as peduncles for various crops and remove leaves and stems as obstacles to improve the harvesting system [50]. A manipulator (Jaco arm) can trim a bush into three shapes, with its navigation formulated as a generalized travelling salesman problem (GTSP) [51]. Reducing the cycle time for harvesting plays a vital role for the robot industry. The review showed that a kiwi harvester could achieve the shortest cycle time of 1 s due to a low-cost and effective manipulator [52,53]. The damage caused during the grasping of the fruit is one of the main concerns in dealing with manipulators [54]. An arc-shaped finger was designed and demonstrated to reduce injury to the cortex of apples.

Autonomous robots are widely used in weed detection and management [55]. The agricultural robots are quite expensive and not widely used due to safety reasons, mechanical and industrial limitations. The pest sprayer robot lessens the exposure of human workers to pesticides, reducing medical hazards [56,57,58]. A high-resolution camera for a machine vision system along with accurate sensors and increased number of manipulators executing in parallel with human collaboration can progress the agricultural automation industry [59]. Dual arm manipulators in a single harvester with one gripper dedicated to moving aside any obstacles, could successfully pick strawberries [16]. An autonomous robot can navigate in straight or curved rows without having hundreds of programmed waypoints, by utilizing a 2D laser scanner [46]. A 3D simulation with real-time geographic coordinates and a first order approximation model was designed as a skid-steering autonomous robot [60]. The dynamic and kinematic constraints of a path-planning robot were successfully applied to a yield prediction and harvest scheduling path planner autonomous machine [61]. The motion control of the manipulator has been realized with a TRAC-IK kinematic solver. Some other kinematic solvers used are ROS MoveIt, and lazy PRM planner [62].

3.2. Unmanned Aerial Vehicles (UAVs)

Drones or unmanned aerial vehicles mounted with RGB-NIR cameras provide data with high spatial resolution. UAVs can cover the tree crowns and plants and provide multi-spectral images by flying at low altitudes [63]. Agricultural drones provide a bird’s eye view with multispectral images and survey the field periodically to provide information about the crops. The aerial images are acquired through drones equipped with a high definition RGB camera with 4k resolution and an attached GPS [64]. Depending on the agricultural application, UAV platforms with diverse embedded technologies have been commercialized. UAVs can fly at lower as well as higher altitudes, depending on the requirements of the monitoring function at hand [65].

4. Enhancement of Captured Crop Images for Bloom/Yield Detection

The enhancement process eliminates noise or blur in an image. Various techniques such as bilinear, nearest neighbor and bicubic interpolation, histogram equalization, iterative-curvature-based interpolation, and linear/non-linear filtering were employed in applications such as resizing, image reduction, image registration and zooming, and to correct spatio-geometric distortions.

4.1. Resolution Enhancement

Color images captured in real time are resized with techniques like bicubic or bilinear interpolation for computational simplicity. The bicubic interpolation technique resamples an image by considering 16 (4 × 4) neighbouring pixels on a 2D grid to furnish a smoothly scaled image. In remote sensing applications, a process based on a digital terrain model improves the accuracy and efficacy of the satellite data [66]. To unify the dimensions of the samples, resizing of the images is necessary. The effectiveness of categorization can be ensured by increasing the resolution of satellite images through a pan-sharpening technique. The accuracy of the crop yield estimation relies upon the total number as well as the size of the samples. The size of the input image for classification remains a crucial parameter. The average size of the featured templates defines the minimum size of the image window [67]. The resolution of the images was chosen based on the regions of interest (ROIs) which were extracted from the captured real-time frame. The importance of the positioning of the camera angles, such as the azimuth and zenith angles, was explained in [32]. The detection rate increases with the number of images of the same crop captured from different viewpoints.
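The resizing step can be sketched for the simpler of the two interpolation methods mentioned above. The following pure-Python example resizes a single-channel image with bilinear interpolation, which blends the 2 × 2 nearest input pixels (bicubic interpolation would use a 4 × 4 neighbourhood instead). The function name and the corner-aligned coordinate mapping are assumptions made for illustration.

```python
def resize_bilinear(img, out_h, out_w):
    """Resize a 2D list of grey values with bilinear interpolation.

    Uses a corner-aligned mapping: the first and last output pixels
    coincide with the first and last input pixels (an assumption).
    """
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        # Fractional source row for this output row.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        fy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            fx = x - x0
            # Blend horizontally on the two rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


small = [[0, 10],
         [20, 30]]
for line in resize_bilinear(small, 3, 3):
    print(line)
```

In practice each color channel would be resized separately, and an image library would normally be used; the sketch only makes the weighting of neighbouring pixels explicit.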

4.2. Filtering

The images require filtering techniques to diminish noisy pixels. NIR filters help to trace the visible light precisely. A Gaussian filter is applied to the tonal images to lessen the noise [68]. The separation of fruit from the background pixels along with noise elimination uses a Gaussian density function, followed by erosion and dilation of the neighborhood pixels [23]. For a fixed window size, Gaussian filtering blurs the edges during noise removal. The median filter preserves edges effectively and eliminates impulsive noise in digital images. The templates are transformed with K-means clustering based on Euclidean metrics to rescale images considering points of interest. To enhance an RGB image and to remove the edge pixel region, a variance filter was used to replace the individual pixels with the neighbourhood variance value of the R, G and B regions, respectively [14]. A median filter can reduce the noise caused by sun ray illumination.
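The edge-preserving behaviour of the median filter described above can be seen in a small example. This is a minimal sketch of a 3 × 3 median filter on a grey image stored as a 2D list; the function name and the border handling (replicating the nearest edge pixel) are assumptions chosen for illustration.

```python
from statistics import median


def median_filter_3x3(img):
    """Replace each pixel by the median of its 3x3 neighbourhood.

    Border pixels are handled by clamping coordinates to the image,
    i.e. the nearest edge pixel is replicated (an assumption).
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            out[y][x] = median(window)
    return out


# Dark region (10) next to a bright region (200), with one impulse (255).
noisy = [[10, 10, 10, 200, 200, 200] for _ in range(5)]
noisy[2][1] = 255
clean = median_filter_3x3(noisy)
print(clean[2])
```

The isolated bright pixel is removed while the step between the dark and bright regions stays in the same column, which a Gaussian filter of the same size would smear.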

4.3. Histogram Equalization

In computer vision, the image histogram can be represented graphically, with pixel counts plotted against tonal values, to analyze the peaks and valleys and consequently to find the threshold value. The histograms applied for color spaces assist in background removal to improve the efficiency as well as accuracy [69]. The optimal threshold value can be resolved automatically with the unimodal attributes generated by the grey-level histogram of the luminance designed for natural images. The histogram of the pixels in the image forms peaks and valleys for each object in accordance with its color. As a consequence, a threshold value can be obtained for each entity in the image. Normalized histograms were used for the correlation with persimmon fruit [70].
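Histogram equalization itself can be sketched as follows. The example builds the grey-level histogram, accumulates it into a cumulative distribution, and remaps each grey level so that the output spans the full tonal range. The function name and the 8-bit default are assumptions; this is the textbook formulation rather than the procedure of any specific cited work.

```python
def equalize_histogram(img, levels=256):
    """Equalize a grey image given as a 2D list of ints in 0..levels-1."""
    # Count how many pixels fall on each grey level.
    hist = [0] * levels
    for row in img:
        for v in row:
            hist[v] += 1

    # Cumulative distribution of the grey levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    n = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:
        # Constant image: nothing to stretch.
        return [row[:] for row in img]

    # Look-up table mapping old grey levels to equalized ones.
    lut = [max(0, round((c - cdf_min) * (levels - 1) / (n - cdf_min)))
           for c in cdf]
    return [[lut[v] for v in row] for row in img]


low_contrast = [[10, 20, 30, 40]]
print(equalize_histogram(low_contrast))
```

A low-contrast input whose values occupy a narrow band is spread over the whole range, which makes the peaks and valleys used for thresholding easier to separate.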

Our investigation indicates that the most common color space model used in various yield recognition applications was RGB. Image resizing processes are applied in most of the works to unify the dimensions of the samples and to speed up the training process in deep learning models. High-resolution cameras are commonly used for capturing the details of the crops. In most of the works, color-based or threshold-based segmentation was performed to extract the region of interest. Table 1 lists the miscellaneous modes of capture and their enhancement methods.

Table 1.

Diverse modes for Real-time capture.

5. Crop Image Segmentation

The image-based segmentation is the process of classification of parts of images into fruit, leaf, stem, flower or any background as non-plant pixels. In this method, the acquired raw images are modified to lessen the effects of blur, noise and distortion to improve the image quality. Recent advances in computer vision enable us to analyze each pixel of an image. For identifying the pixel region as an individual fruit, leaf, flower or twig, image segmentation approaches are required [76,77]. Some of the image-segmentation approaches for machine vision system have been reviewed in this article.

5.1. Threshold-Based Segmentation

The partitioning of an image into its foreground and background by a threshold value is defined by exploring the peaks and valleys of the histogram. The optimal threshold value segments the object from the background. The threshold magnitudes were determined by trial and error for assorted color spaces to obtain the required color layer. The acquired color image is threshold segmented on the H layer by the Otsu method to differentiate reddish grape regions from the greenish background. Histogram-based thresholding of the H component eliminated twigs, leaves, trunks and sky from real-time images. Local 3D threshold values computed for smaller regions in the normalized difference index (NDI) space of the image under high, medium and low illumination conditions could reduce the false detection of real fruits [78]. The tracking of a fruit with respect to a new detection is estimated by the boundary threshold value and the intersection over union (IoU) threshold value. The threshold value was computed for the red regions to eliminate the background with morphological functions for the estimation of tomatoes. The EVI data contaminated with clouds were smoothed by a hard threshold [68]. False positives were eliminated on the basis of cluster reflectance, geometry and positioning. The clusters were segmented in a 3D point cloud with a reflectance threshold value. Blob and pixel-based segmentation with the X-means clustering technique classified and detected individual tomato berries within a fruit cluster [79].
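The Otsu method mentioned above selects the threshold that maximizes the variance between the two resulting classes. The following minimal sketch applies it to a flat list of 8-bit values, which could be the H layer of an image scaled to 0..255; the function name and that scaling are assumptions for illustration.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t maximizing between-class variance.

    pixels: flat list of ints in 0..levels-1.
    Values <= t form one class, values > t the other.
    """
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight0, sum0 = 0, 0
    for t in range(levels):
        weight0 += hist[t]
        sum0 += t * hist[t]
        if weight0 == 0:
            continue
        weight1 = total - weight0
        if weight1 == 0:
            break
        mean0 = sum0 / weight0
        mean1 = (sum_all - sum0) / weight1
        # Between-class variance (up to a constant factor).
        var_between = weight0 * weight1 * (mean0 - mean1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Two hypothetical groups of values: background near 25, fruit near 205.
values = [20] * 50 + [30] * 50 + [200] * 50 + [210] * 50
t = otsu_threshold(values)
mask = [v > t for v in values]
print(t, sum(mask))
```

Because the criterion is computed from the histogram alone, the threshold adapts to each image, which is the advantage over thresholds fixed by trial and error.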

5.2. Color-Based Segmentation

Machine vision for harvesting incorporates miscellaneous color spaces such as RGB, HSI and CIE L*a*b*, chosen in accordance with the illumination of the environment. The L*a*b* color space approximates human vision based on the chromatic eccentricity level of the image. The detection of fruit during the ripening period uses RGB and HSI color models along with calibration spheres to determine the size of apple fruits [15,80]. Fruit detection by thresholding of grey images was not satisfactory, as the histogram values of the color images and grey images were not unimodal. The color of the fruit was accounted for with the calculated pixels of an individual citrus fruit and tomato berry. Color-based segmentation may be effective only under natural daylight conditions. Multiband ratio-based segmentation has a self-adaptive range for diverse illumination effects, accomplished with an Otsu threshold value.
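The conversion from RGB to the HSI model used in several of the surveyed works can be written down compactly. The sketch below uses the common textbook formulas for hue, saturation and intensity; the function name and the convention of returning hue in degrees are assumptions.

```python
import math


def rgb_to_hsi(r, g, b):
    """Convert r, g, b in 0..1 to (hue in degrees, saturation, intensity)."""
    intensity = (r + g + b) / 3
    if intensity == 0:
        return 0.0, 0.0, 0.0  # black: hue and saturation undefined

    saturation = 1 - min(r, g, b) / intensity

    numerator = 0.5 * ((r - g) + (r - b))
    denominator = math.sqrt(max(0.0, (r - g) ** 2 + (r - b) * (g - b)))
    if denominator == 0:
        return 0.0, saturation, intensity  # grey: hue undefined, use 0

    # Clamp to guard against rounding slightly outside [-1, 1].
    ratio = max(-1.0, min(1.0, numerator / denominator))
    theta = math.degrees(math.acos(ratio))
    hue = theta if b <= g else 360 - theta
    return hue, saturation, intensity


# Hypothetical pixels: a ripe red fruit and a green leaf.
print(rgb_to_hsi(0.8, 0.1, 0.1))
print(rgb_to_hsi(0.2, 0.6, 0.2))
```

Separating hue from intensity is what makes this model attractive for ripeness detection, since a change in brightness alters the intensity channel far more than the hue.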

5.3. Segmentation Based on Texture Analysis

Texture-based segmentation extracts the regions of interest from images based on spatially distributed boundaries with similar pixels. The Wigner-Ville distribution defines an auto-correlation function of the time-frequency domain to construct the textures of the color segmented image [81]. Entropy (E) and smoothness (S) were estimated to remove the false positives. Regions with lower entropy values than those of leaves and twigs were categorized as fruits. Fruit identification with texture-based analysis extracts the regions with similar adjacent pixels and partitions the required fruit pixels from the background pixels. A rotation-invariant circular Gabor texture segmentation with color and shape features, the ‘Eigenfruit’ algorithm, was proposed to detect green citrus fruits [74].
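The entropy measure used above to reject false positives can be illustrated with a short example. The sketch computes the Shannon entropy of the grey-level distribution inside a patch; the function name and the sample patches are hypothetical, and the cited works may compute entropy over a different neighbourhood or feature.

```python
import math
from collections import Counter


def grey_entropy(patch):
    """Shannon entropy (in bits) of the grey levels in a 2D patch."""
    counts = Counter(v for row in patch for v in row)
    n = sum(counts.values())
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# A smooth fruit-like patch and a busy foliage-like patch (made up).
smooth_patch = [[120, 120, 121],
                [120, 121, 120],
                [120, 120, 120]]
busy_patch = [[30, 200, 90],
              [150, 10, 240],
              [60, 180, 110]]
print(round(grey_entropy(smooth_patch), 3))
print(round(grey_entropy(busy_patch), 3))
```

A smooth fruit surface concentrates its pixels on a few grey levels and therefore yields a lower entropy than a cluttered region of leaves and twigs.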

5.4. Segmentation Based on Shapes

To preserve shapes and to reconstruct the captured scene, a 3D shape reconstruction algorithm with image registration practices is employed for pruning vines [82]. Considering only the color features may lead to many false positives due to the similarity of the green colors of fruits and leaves. A circular Hough transform can identify circular citrus fruits by merging multiple detections along with the histograms of the H, R and B components [69]. Calibration measurements with destructive hand samples taken at the time of imaging provide accurate prediction of vine yields [83].

5.5. Morphological Operations

The morphological operations involve the conversion of pixel regions to individual fruits to be counted. The eccentricity was calculated for individual color segmented apple regions; a threshold value then identifies relatively round regions, which are taken as individual apples without occlusion. In the case of two or more occluded fruits, the length of the ellipse was calculated and its major axis was split into two segments [30]. To separate individual fruits from clusters or to link disjoint fragments of the same fruit, the watershed algorithm was used along with a circular Hough transform. The watershed algorithm performed better than the circular Hough transform. The watershed transformation is an efficient segmentation algorithm which treats the image as a topographic surface. The morphological functions were applied with a Euclidean transform and watershed gradient lines to detect small blobbed cucumbers, thereby eliminating small leaves and flowers [67].
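The eccentricity test for round regions can be sketched from the second-order moments of a binary region. In this minimal example the region is a 2D mask, the eccentricity is that of the ellipse with the same second moments, and the roundness threshold of 0.6 is a hypothetical value, not one reported in the cited work.

```python
import math


def eccentricity(mask):
    """Eccentricity of a binary region (0 for a disc, near 1 for a line)."""
    points = [(x, y) for y, row in enumerate(mask)
              for x, v in enumerate(row) if v]
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n

    # Central second-order moments of the region.
    mxx = sum((x - cx) ** 2 for x, _ in points) / n
    myy = sum((y - cy) ** 2 for _, y in points) / n
    mxy = sum((x - cx) * (y - cy) for x, y in points) / n

    # Eigenvalues of the 2x2 covariance matrix.
    spread = math.sqrt(((mxx - myy) / 2) ** 2 + mxy ** 2)
    major = (mxx + myy) / 2 + spread
    minor = (mxx + myy) / 2 - spread
    if major == 0:
        return 0.0  # single pixel
    return math.sqrt(max(0.0, 1 - minor / major))


def is_single_fruit(mask, max_eccentricity=0.6):
    """Hypothetical rule: round regions are treated as one fruit."""
    return eccentricity(mask) < max_eccentricity


round_region = [[1, 1, 1],
                [1, 1, 1],
                [1, 1, 1]]
elongated_region = [[1, 1, 1, 1, 1, 1]]
print(is_single_fruit(round_region), is_single_fruit(elongated_region))
```

An elongated region that fails the test is a candidate for two or more touching fruits and would be passed on to a splitting step such as the watershed transform.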

6. Feature Extraction for Classification

Feature extraction with RGB color values converted to HSI color values determines the maturity of tomato samples with image processing techniques. Deep learning methods do not require hand-crafted features during training. The basic convolutional neural network (CNN) architecture, with its input layer, intervening convolution and max-pooling (sub-sampling) layers and output layers, automatically extracts features and classifies diverse object classes in images. Haar-like features based on edge, radian and line were tested on grey images and combined with color analysis in an AdaBoost classifier to reduce the false negative ratio. Texture features and maximally stable color region (MSCR) descriptor sets govern fruit detection in the subdivided support window, with a frequency-distributed histogram classified by a support vector machine (SVM). Attribute profiles with multilevel morphological characteristics such as area (size of the region in pixels), standard deviation (texture measure of the region) and moment of inertia (shape of the region) offered a substantial improvement over state-of-the-art descriptors in the classification of weeds and crops using machine vision [84]. Fruit estimation has been realized with a fruit-as-feature structure from motion (SfM) approach, which converts 2D traces to 3D markers. Combined with a CNN classifier, this feature eliminates double counting of fruits and was faster than scale-invariant feature transform (SIFT) features [85]. Feature sets such as closeness, solidity, extent, compactness and texture were selected by sequential forward selection and the RELIEF algorithm and used with an SVM for yield estimation and prediction [86]. A combination of histogram of oriented gradients (HOG) features in color images, a false color removal (FCR) technique and non-maximum suppression (NMS), trained using an SVM classifier, detected mature tomatoes with a processing time of 0.95 s [87].
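The RGB to HSI conversion mentioned at the start of this section can be sketched as follows. The conversion uses the standard geometric HSI formulas; the `looks_ripe_red` rule and its hue and saturation limits are hypothetical values chosen only for illustration and are not taken from the cited studies.

```python
import math

def rgb_to_hsi(r, g, b):
    """Convert 8-bit RGB to HSI: hue in degrees [0, 360), saturation and intensity in [0, 1]."""
    r, g, b = r / 255.0, g / 255.0, b / 255.0
    intensity = (r + g + b) / 3.0
    if intensity == 0:
        return 0.0, 0.0, 0.0  # black: hue and saturation are undefined, reported as 0
    saturation = 1.0 - min(r, g, b) / intensity
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:
        hue = 0.0  # grey pixel: hue is undefined, reported as 0
    else:
        # Clamp to the valid acos domain to guard against rounding error.
        theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
        hue = theta if b <= g else 360.0 - theta
    return hue, saturation, intensity

def looks_ripe_red(r, g, b, hue_limit=20.0, min_saturation=0.4):
    """Hypothetical maturity rule: strongly saturated pixels with a hue near red."""
    hue, saturation, _ = rgb_to_hsi(r, g, b)
    return (hue < hue_limit or hue > 360.0 - hue_limit) and saturation > min_saturation
```

Working in HSI separates the color information (hue, saturation) from brightness (intensity), which is why hue-based maturity rules tend to be less sensitive to illumination changes than rules on raw RGB values.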

Other parameters, chosen by feature selection algorithms such as the variance inflation factor, sequential forward selection, random forest variable importance and correlation-based descriptor selection, were the number of wells, area, tanks, canal lengths, soil capacity and landscape features. The descriptors selected for crop yield prediction were rainfall, maximum, average and minimum temperature, solar radiation, planting area, irrigation water depth and season duration [88]. Automatic disease detection with computer vision technology allows the crop to be treated at the earliest stage, which consequently improves the quality and increases the crop yield. Crop disease detection can utilize simple linear iterative clustering features to segment superpixels in the CIELAB color model [89]. The autonomous maturity detection of tomato berries was developed by fusing multiple (color and texture) features using an iterative RELIEF algorithm [90]. Principal component analysis (PCA), applied at the pixel level, could automatically detect diseases in pepper leaves from the color features [91].

7. Deep Learning Models

Deep learning models have been used in diverse applications of crop yield measurement such as crop monitoring, prediction, estimation and fruit detection for harvesting, with numerous datasets for the machine to learn from. The architecture can be implemented in different ways, such as a deep Boltzmann machine, deep belief network, convolutional neural network or stacked auto-encoders. The CNN architecture learns hierarchical features in depth with residual blocks, and soft-NMS decays the scores of detected objects whose bounding boxes overlap. With hidden layers, filters and hyper-parameters, the networks act as universal function approximators. Previous works report prediction accuracy with respect to the number of convolutional layers; in those reports, increasing the number of convolutional layers improved the accuracy of the network.
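The score decay performed by soft-NMS can be illustrated with a short sketch. It assumes the Gaussian variant of soft-NMS, boxes given as `(x1, y1, x2, y2)` tuples, and illustrative values for `sigma` and `score_threshold`; none of these settings come from the reviewed works.

```python
import math

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian soft-NMS: overlapping boxes have their scores decayed, not removed outright."""
    remaining = list(zip(boxes, scores))
    kept = []
    while remaining:
        # Pick the highest-scoring remaining detection.
        best_idx = max(range(len(remaining)), key=lambda k: remaining[k][1])
        best_box, best_score = remaining.pop(best_idx)
        kept.append((best_box, best_score))
        decayed = []
        for box, score in remaining:
            overlap = iou(best_box, box)
            new_score = score * math.exp(-(overlap ** 2) / sigma)
            if new_score >= score_threshold:
                decayed.append((box, new_score))
        remaining = decayed
    return kept

# Two heavily overlapping detections and one isolated detection.
example_boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
example_scores = [0.9, 0.8, 0.7]
detections = soft_nms(example_boxes, example_scores)
```

In this example the second box is kept but ranked last, because its score is reduced in proportion to its overlap with the top-scoring box; hard NMS would have discarded it, which is the failure mode soft-NMS is meant to avoid for clustered fruits.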

7.1. Deep Architectures in Smart Farming

A deep convolutional neural network (DCNN) is a multi-layered neural network that is trained on complex patterns, given appropriately labelled image features. The InceptionV3 model serves as a conventional image feature extractor to classify fruit and background pixels in an image. The classifier localizes the fruits to count the quantity of fruit present [31,92] and classifies the species of tomato [93]. A K-nearest neighbour (KNN) classifier was employed to classify the fruit pixels in trained datasets, with a threshold pixel value set to define a fruit pixel. The SVM performs pattern classification as well as linear regression, based on the selected features. A Darknet classifier with a trained “you only look once” (YOLO) model detected iceberg lettuce [94] and grapes [95] with edges for harvesting using a Vegebot. YOLO models offer a high object detection rate in real time when compared with faster region-based CNN (FRCNN) [96].
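As an illustration of KNN pixel classification, the sketch below labels an RGB pixel as fruit or background by majority vote among its nearest labelled training pixels. The training colors, the labels and `k = 3` are invented for the example and do not come from any of the cited datasets.

```python
from collections import Counter

def knn_predict(train_features, train_labels, query, k=3):
    """Label a query vector by majority vote among its k nearest training vectors."""
    # Squared Euclidean distance is sufficient for ranking neighbours.
    distances = sorted(
        (sum((a - b) ** 2 for a, b in zip(features, query)), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

# Hypothetical labelled RGB pixels: reddish fruit versus greenish foliage.
train_pixels = [
    (210, 30, 35), (190, 45, 50), (220, 60, 40),
    (40, 150, 45), (60, 170, 70), (35, 120, 30),
]
train_labels = ["fruit", "fruit", "fruit", "background", "background", "background"]

fruit_guess = knn_predict(train_pixels, train_labels, (200, 40, 40))
leaf_guess = knn_predict(train_pixels, train_labels, (50, 140, 50))
```

KNN has no training phase beyond storing the labelled samples, so the cost is paid at prediction time, when every query must be compared with the stored data.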

The AdaBoost model builds a strong classifier by linearly combining weak classifiers that use minimal thresholding tasks and Haar-like features, and detected tomato berries with an accuracy of 96% [72]. A multi-modal faster region-based CNN model provides an efficient fruit detection technique by fusing RGB and near-infrared images, improving performance up to an F1 score of 0.83 [97]. The dataset images were fed to the R-CNN model to generate the feature map for classification.
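The linear combination of weak classifiers in AdaBoost can be sketched on a toy problem. For brevity the weak learner here is a threshold stump on a single scalar feature, which stands in for the Haar-like features used in [72]; the data, the function names and the number of rounds are assumptions made for illustration.

```python
import math

def stump_output(x, threshold, polarity):
    """Weak classifier: returns polarity when x >= threshold, otherwise -polarity."""
    return polarity if x >= threshold else -polarity

def train_stump(xs, ys, weights):
    """Return the (threshold, polarity, weighted error) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(
                w for w, x, y in zip(weights, xs, ys)
                if stump_output(x, threshold, polarity) != y
            )
            if best is None or error < best[2]:
                best = (threshold, polarity, error)
    return best

def adaboost(xs, ys, rounds=3):
    """Train an ensemble of (alpha, threshold, polarity) stumps; labels must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        threshold, polarity, error = train_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1.0 - 1e-10)  # avoid division by zero
        alpha = 0.5 * math.log((1.0 - error) / error)
        ensemble.append((alpha, threshold, polarity))
        # Increase the weight of misclassified samples, decrease the others.
        weights = [
            w * math.exp(-alpha * y * stump_output(x, threshold, polarity))
            for w, x, y in zip(weights, xs, ys)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Strong classifier: sign of the alpha-weighted sum of stump outputs."""
    score = sum(alpha * stump_output(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data that no single threshold can separate.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
model = adaboost(xs, ys, rounds=3)
```

No single stump classifies this toy set correctly, but the weighted vote of three stumps does, which is the sense in which weak classifiers are combined into a strong one.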

Spatiotemporal remote sensing image data of normalized difference vegetation indices (NDVI) were used to train a spiking neural network (SNN) for crop yield prediction and estimation of winter wheat [98]. A better prediction algorithm for corn, soybean [99] and paddy crops was proposed with a feed-forward backpropagation artificial neural network (ANN) and later with a fusion of multiple linear regression (MLR). The linear discriminant analysis (LDA) approach removes the imbalance in the performance values attained through an ANN classifier [100]. Large fused datasets were used to compare machine learning models such as SVM, DL, extremely randomized trees (ERT) and random forest (RF) for the estimation of corn yield [36]; the deep learning (DL) model achieved high accuracy in terms of correlation coefficients. The detection of flowers in an image, accomplished by a deep learning model combining CNN-based semantic segmentation with an SVM classifier, helps crop yield management. Image segmentation techniques and canopy features were used to train a backpropagation neural network (BPNN) model for apple yield prediction [101]. The SVM and KNN classifiers were efficient, with accuracies of 98.49% and 98.50%, respectively. Deep convolutional neural networks were developed to identify plant diseases and to predict macronutrient deficiencies during the flowering and fruit development stages [102]. The visual geometry group (VGG) CNN architecture identified plant diseases from leaf images and communicated the results to farmers through smartphones [103,104]. An adaptive deep CNN for diagnosing endemic fungal infection in winter wheat [89] was trained and validated with ImageNet datasets. A deep CNN model with GoogLeNet classified nine diseases in tomato leaves [105]. Defects in the external regions and the occlusion of flowers and berries of tomatoes were identified with deep autoencoders and a residual neural network (ResNet) 50 classifier [106,107]. A leaf-based disease identification model was developed with a random forest classifier trained with HOG features and could detect diseases on papaya leaves [108].
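The normalized difference vegetation index used as network input above is computed per pixel from the near-infrared and red reflectance bands as (NIR − Red) / (NIR + Red). The following sketch assumes reflectance values stored in nested Python lists; the function names and sample values are illustrative only.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel."""
    denominator = nir + red
    if denominator == 0:
        return 0.0  # no signal in either band; reported as 0 by convention here
    return (nir - red) / denominator

def ndvi_map(nir_band, red_band):
    """Apply ndvi element-wise to two equally sized 2D bands."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]

# Hypothetical reflectances: dense vegetation next to bare soil.
sample = ndvi_map([[0.5, 0.2]], [[0.1, 0.2]])
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so values approach 1 over dense canopy and fall towards 0 over bare soil.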

Ripeness estimation is required in the agricultural industry to determine the quality and level of maturity of the fruit. The ripening of tomatoes was detected by fusing extracted features and classifying them with a weighted relevance vector machine (RVM) in a bilayer classification approach for harvesting agrobots. The maturity levels of tomatoes were detected with color features classified by a BPNN model. A fuzzy rule-based classification (FRBCS) approach was proposed based on color features, with decision trees (DT) and the Mamdani fuzzy technique, to estimate six stages of maturity in tomato berries [109]. A mature tomato can be identified with an SVM classifier trained on HOG features along with false elimination and overlap removal steps.

7.2. Network Training Datasets and Tools

Deep learning architectures vary widely with the applications and the models implemented to train them. Deep learning techniques analyze huge datasets in a very short computation period to predict the sowing time as well as the optimum harvesting time of the plants. The available datasets can be used to predict and detect diseases in crops. For creating a deep learning model, various architectures exist that may be pre-trained on diverse datasets. Huge datasets with vast numbers of input images are required to train deep learning models to resolve complicated challenges. The models reported include CaffeNet, modified Inception-ResNet, YOLO version 3 trained with the Darknet classifier, MobileNet, R-CNN trained using ResNet 152, ResNet 50, AlexNet, GoogLeNet, OverFeat, AlexNetOWTBn and VGG, commonly with ReLU activations; datasets and code are available from sources such as GitHub and Kaggle. The neural network libraries and tools used comprise Keras, DeepLab + RGR, TensorFlow, Caffe, R libraries, Torch7, LuaJIT, PyTorch, pylearn2, Theano and the Deep Learning MATLAB Toolbox. The available datasets for crop images are listed in Table 2.

Table 2. Image databases of crops: fruits and vegetables.

8. Performance Metrics

The image enhancement techniques, image processing algorithms, detection and prediction algorithms with classifier approaches were compared and evaluated with diverse performance metrics. The comparative studies presented by diverse deep learning models were validated with assorted performance metrics which are as follows:

- Root mean square error (RMSE)

- Normalized mean absolute error (MAE)

- Root relative square error (RRSE)

- Correlation coefficient (R)

- Mean forecast error

- Average cycle time

- Harvest and detachment success

- Mean absolute percentage error (MAPE)

- Receiver operating characteristics

- Precision, recall, F-measure
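Several of the metrics listed above have simple closed forms. The sketch below gives minimal reference implementations using their standard textbook definitions; the function names and the sample yield values are illustrative assumptions, and the normalization used for MAE in individual studies may differ from the plain mean absolute error shown here.

```python
import math

def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mae(y_true, y_pred):
    """Mean absolute error (not normalized)."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)

def mape(y_true, y_pred):
    """Mean absolute percentage error; every true value must be non-zero."""
    return 100.0 * sum(abs((p - t) / t) for t, p in zip(y_true, y_pred)) / len(y_true)

def rrse(y_true, y_pred):
    """Root relative square error, relative to always predicting the mean of y_true."""
    mean_true = sum(y_true) / len(y_true)
    squared_error = sum((p - t) ** 2 for t, p in zip(y_true, y_pred))
    baseline = sum((t - mean_true) ** 2 for t in y_true)
    return math.sqrt(squared_error / baseline)

def pearson_r(y_true, y_pred):
    """Pearson correlation coefficient R."""
    mean_t = sum(y_true) / len(y_true)
    mean_p = sum(y_pred) / len(y_pred)
    covariance = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    spread_t = sum((t - mean_t) ** 2 for t in y_true)
    spread_p = sum((p - mean_p) ** 2 for p in y_pred)
    return covariance / math.sqrt(spread_t * spread_p)

def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical observed and predicted yields (arbitrary units).
observed = [10, 20, 30, 40]
predicted = [12, 18, 33, 37]
```

RMSE, MAE, MAPE and RRSE apply to regression tasks such as yield prediction, whereas precision, recall and the F-measure apply to detection and classification tasks such as fruit counting.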

Table 3 lists the various classifiers used for different agricultural applications with their respective pros and cons and some topics for future work.

Table 3. Summary of performance measures of different classifiers for smart agriculture.

9. Discussion

Precision farming requires constant innovation to increase the quantity and quality of food production. The machine vision and machine learning techniques used in agricultural automation have both positive features and shortcomings, which are discussed in this section.

Advantages and Disadvantages

The acquired images may be prone to degradation caused by misfocus of the camera, poor lighting conditions or sensor noise. Image enhancement techniques have a visual impact on the desired information in an image captured in real time, but they do not yield improved results unless the color modifications are made under multiple light sources. The median filter reduces noise without introducing the blurring typical of linear smoothing. Nonlinear filtering can be employed to improve the quality of blurred images once the light source has been refined. Adding noise to the image can improve it in certain applications.
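The noise suppression behaviour of the median filter can be illustrated with a small sketch. It assumes a greyscale image stored as a list of lists and a 3 × 3 window that is clipped at the image border; both choices are simplifications made for the example.

```python
def median_filter_3x3(image):
    """Replace each pixel with the median of its 3x3 neighbourhood (clipped at the borders)."""
    height, width = len(image), len(image[0])
    output = [row[:] for row in image]
    for r in range(height):
        for c in range(width):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(height, r + 2))
                for cc in range(max(0, c - 1), min(width, c + 2))
            ]
            window.sort()
            # For even-sized border windows this picks the upper of the two middle values.
            output[r][c] = window[len(window) // 2]
    return output

# A flat 5x5 patch with a single salt-noise pixel in the centre.
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255
cleaned = median_filter_3x3(noisy)
```

Because the median ignores extreme values in the window, the isolated bright pixel is removed without averaging it into its neighbours, which is what a mean filter would do.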

Image segmentation techniques are easy to implement and modify, and classify pixels with little computation. Threshold segmentation requires appropriate lighting conditions. An optimal threshold value has to be selected, but the same value may not be suitable for every application. Any background complexity increases the error rate and computation time. Color-based segmentation is constrained by non-uniform light sensitivity. Otsu thresholding selects the threshold value automatically from the image histogram by maximizing the between-class variance. Watershed segmentation provides continuous boundaries, but at the cost of complexity in calculating the gradients. Texture- and shape-based segmentation are time-consuming and provide blurred boundaries. To optimize computer vision technology, further exploration in unstable agricultural environments is needed.
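Automatic threshold selection with Otsu's method can be sketched as an exhaustive search over grey levels for the split that maximizes the between-class variance. The flat list of 8-bit pixel values and the synthetic two-cluster data below are assumptions made for the example.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level t that best separates pixels <= t from pixels > t."""
    histogram = [0] * levels
    for value in pixels:
        histogram[value] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(histogram))

    best_threshold, best_variance = 0, -1.0
    weight_bg, sum_bg = 0, 0
    for t in range(levels):
        weight_bg += histogram[t]
        sum_bg += t * histogram[t]
        if weight_bg == 0:
            continue  # no background pixels yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # every pixel is already in the background class
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Between-class variance, up to a constant factor of 1 / total**2.
        variance = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if variance > best_variance:
            best_variance, best_threshold = variance, t
    return best_threshold

# Synthetic bimodal image: dark foliage around 20 to 30, bright fruit around 200 to 210.
synthetic = [20] * 50 + [30] * 50 + [200] * 40 + [210] * 60
threshold = otsu_threshold(synthetic)
```

The method needs no manual tuning, but it assumes a roughly bimodal histogram, which is one reason uneven illumination or complex backgrounds degrade threshold-based segmentation.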

The feature selection process reduces the quantity of input data when developing a predictive classifier model, and prioritizes the existing features in a dataset. Haar wavelet features combined with an AdaBoost classifier achieved high accuracy. PCA can outperform other features with high accuracy through pixel-level identification, using the original image as input rather than extracted input features. The SIFT detection algorithm requires scaling of local features in the images. The HOG method can extract global features by computing the edge gradient. HOG + FCR + NMS achieved a computation time of 0.95 s for maturity detection. Hybrid approaches to feature extraction can improve classification and computation time.

The DL model with SVM and BPNN classifiers outperformed other classifiers. SVM classifiers provide low error with effective prediction, but require abundant datasets and are more complex and delicate when handling varied data types. The TensorFlow library endeavors to uncover an optimal policy and does not wait until termination to update the utility function. KNN classifiers are robust in classifying data, with zero cost in the learning process, but require large datasets and high computation for mixed data. DL can extract the required features based on color, texture, shape and SIFT feature extraction processes. The combination of ANN and MLR classifiers provided the highest accuracy in crop prediction. DL classifiers were used in a wide range of agricultural applications, with an average F1 score of 0.8. Errors occurred due to occlusion by leaves or clusters of fruits. Fruit detection for robot harvesting and yield estimation performed best with a combination of CNN and linear regression models. The need for large training datasets increases the computation time of the DL approach. SVM classifiers provide high accuracy with improved computation time. The fusion of classifiers with assorted features may improve the computer vision technique and DL model.

10. Conclusions

The progress and challenges of various image processing and deep learning classification techniques for agricultural farm duties were analyzed in this paper. The inferences based on our extensive review are presented below:

- The review highlighted the merits and demerits of different machine vision and deep learning techniques along with their various performance metrics.

- The pertinence of diverse techniques for yield prediction with the bloom intensity estimation helps farmers improve their crop yields at the early stage.

- The fruit detection and counting models with image analysis and feature extraction for classifiers technologically advance the crop yield estimation and robot harvesting.

- The combination of various hand-crafted features using hybrid DL models improves the computational efficiency and reduces the computation time.

- The deep learning models outperform the other conventional image processing techniques with an average accuracy of 92.51% in diverse agricultural applications.

As future work, hybrid techniques of machine vision and deep learning models can be applied to develop automated systems for precision agriculture. The methods highlighted in this paper can be tested for real-time crop yield estimation applications. Innovative methods aimed at improving the performance of the overall system can also be developed.

Abbreviations

The abbreviations used in this manuscript are listed below:

| Abbreviation | Definition |
|---|---|
| CCE | Crop Cutting Experiments |
| GPS | Global Positioning System |
| PMFBY | Pradhan Mantri Fasal Bima Yojana |
| UAV | Unmanned Aerial Vehicle |
| UGV | Unmanned Ground Vehicle |
| GNSS | Global Navigation Satellite System |
| NIR | Near Infra-Red |
| DL | Deep Learning |
| ML | Machine Learning |
| MSCR | Maximally Stable Color Region |
| SfM | Structure from Motion |
| SIFT | Scale-Invariant Feature Transform |
| HOG | Histograms of Oriented Gradients |
| NMS | Non-Maximum Suppression |
| PCA | Principal Component Analysis |
| CNN | Convolutional Neural Network |
| FRCNN | Faster Region Convolutional Neural Network |
| DCNN | Deep Convolutional Neural Network |
| KNN | K-Nearest Neighbour |
| SVM | Support Vector Machine |
| SNN | Spiking Neural Network |
| MLR | Multiple Linear Regression |
| ERT | Extremely Randomized Trees |
| RF | Random Forest |
| RFR | Random Forest Regression |
| BRT | Boosted Regression Tree |
| SVR | Support Vector Regression |
| BPNN | Backpropagation Neural Network |
| LDA | Linear Discriminant Analysis |
| DT | Decision Trees |
| VGG | Visual Geometry Group |
| RVM | Relevance Vector Machine |
| RCNN | Region-based Convolutional Neural Network |
| FRBCS | Fuzzy Rule-Based Classification System |
| LPT | Laplacian Pyramid Transform |
| LAI | Leaf Area Index |
| GPP | Gross Primary Production |
| FPAR | Fraction of Photosynthetically Active Radiation |
| ET | Evapotranspiration |
| SM | Soil Moisture |
| EVI | Enhanced Vegetation Index |
| NDVI | Normalized Difference Vegetation Indices |
| NBV | Next-Best View |
| GTSP | Generalized Travelling Salesman Problem |
| ROIs | Regions of Interest |
| NDI | Network Device Interface |
| IoU | Intersection over Union |
| FCR | False Color Removal |
| YOLO | You Only Look Once |
| ANN | Artificial Neural Network |
| ResNet | Residual Neural Network |
| SURF | Speeded-Up Robust Features |

Author Contributions

Conceptualization, P.D.; Data Collection, B.D., S.P.; Formal Analysis, B.D.; Document preparation, B.D., P.D., S.P., D.E.P., and D.J.H.; Supervision, P.D., D.E.P., D.J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Adamchuk, V.I.; Hummel, J.W.; Morgan, M.K.; Upadhyaya, S. On-the-go soil sensors for precision agriculture. Comput. Electron. Agric. 2004, 44, 71–91.

- Perez-Ruiz, M.; Slaughter, D.C.; Gliever, C.; Upadhyaya, S.K. Tractor-based Real-time Kinematic-Global Positioning System (RTK-GPS) guidance system for geospatial mapping of row crop transplant. Biosyst. Eng. 2012, 111, 64–71.

- Pastor-Guzman, J.; Dash, J.; Atkinson, P.M. Remote sensing of mangrove forest phenology and its environmental drivers. Remote Sens. Environ. 2018, 205, 71–84.

- Zhang, X.; Friedl, M.A.; Schaaf, C.B.; Strahler, A.H.; Hodges, J.C.F.; Gao, F.; Reed, B.C.; Huete, A. Monitoring vegetation phenology using MODIS. Remote Sens. Environ. 2003, 84, 471–475.

- Tiwari, R.; Chand, K.; Anjum, B. Crop insurance in India: A review of Pradhan Mantri Fasal Bima Yojana (PMFBY). FIIB Bus. Rev. 2020, 9, 249–255.

- Dorj, U.-O.; Lee, M.; Yun, S.-S. An yield estimation in citrus orchards via fruit detection and counting using image processing. Comput. Electron. Agric. 2017, 140, 103–112.

- Singh, R.; Goyal, R.C.; Saha, S.K.; Chhikara, R.S. Use of satellite spectral data in crop yield estimation surveys. Int. J. Remote Sens. 1992, 13, 2583–2592.

- Ferencz, C.; Bognár, P.; Lichtenberger, J.; Hamar, D.; Tarcsai, G.; Timár, G.; Molnár, G.; Pásztor, S.; Steinbach, P.; Székely, B.; et al. Crop yield estimation by satellite remote sensing. Int. J. Remote Sens. 2004, 25, 4113–4149.

- Dias, P.A.; Tabb, A.; Medeiros, H. Multispecies fruit flower detection using a refined semantic segmentation network. IEEE Robot. Autom. Lett. 2018, 3, 3003–3010.

- Hong, H.; Lin, J.; Huang, F. Tomato disease detection and classification by deep learning. In Proceedings of the 2020 International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), Fuzhou, China, 12–14 June 2020; p. 0001.

- Liu, J.; Wang, X. Tomato diseases and pests detection based on improved YOLO V3 convolutional neural network. Front. Plant Sci. 2020, 11, 1–12.

- Bulanon, D.; Kataoka, T.; Ota, Y.; Hiroma, T. AE—automation and emerging technologies: A segmentation algorithm for the automatic recognition of Fuji apples at harvest. Biosyst. Eng. 2002, 83, 405–412.

- Wan, P.; Toudeshki, A.; Tan, H.; Ehsani, R. A methodology for fresh tomato maturity detection using computer vision. Comput. Electron. Agric. 2018, 146, 43–50.

- Payne, A.B.; Walsh, K.B.; Subedi, P.P.; Jarvis, D. Estimation of mango crop yield using image analysis–Segmentation method. Comput. Electron. Agric. 2013, 91, 57–64.

- Xiang, R.; Ying, Y.; Jiang, H. Research on image segmentation methods of tomato in natural conditions. In Proceedings of the 2011 4th International Congress on Image and Signal Processing, Shanghai, China, 15–17 October 2011; pp. 1268–1272.

- Xiong, Y.; Ge, Y.; Grimstad, L.; From, P.J. An autonomous strawberry-harvesting robot: Design, development, integration, and field evaluation. J. Field Robot. 2020, 37, 202–224.

- Horng, G.-J.; Liu, M.-X.; Chen, C.-C. The smart image recognition mechanism for crop harvesting system in intelligent agriculture. IEEE Sensors J. 2020, 20, 2766–2781.

- Hua, Y.; Zhang, N.; Yuan, X.; Quan, L.; Yang, J.; Nagasaka, K.; Zhou, X.-G. Recent advances in intelligent automated fruit harvesting robots. Open Agric. J. 2019, 13, 101–106.

- Silwal, A.; Davidson, J.R.; Karkee, M.; Mo, C.; Zhang, Q.; Lewis, K. Design, integration, and field evaluation of a robotic apple harvester. J. Field Robot. 2017, 34, 1140–1159.

- Pajares, G.; García-Santillán, I.; Campos, Y.; Montalvo, M.; Guerrero, J.M.; Emmi, L.A.; Romeo, J.; Guijarro, M.; Gonzalez-De-Santos, P. Machine-vision systems selection for agricultural vehicles: A guide. J. Imaging 2016, 2, 34.

- Rehman, T.U.; Mahmud, M.S.; Chang, Y.K.; Jin, J.; Shin, J. Current and future applications of statistical machine learning algorithms for agricultural machine vision systems. Comput. Electron. Agric. 2019, 156, 585–605.

- Bini, D.; Pamela, D.; Prince, S. Machine vision and machine learning for intelligent agrobots: A review. In Proceedings of the 2020 5th International Conference on Devices, Circuits and Systems (ICDCS), Coimbatore, India, 5–6 March 2020; pp. 12–16.

- Font, D.; Tresanchez, M.; Martínez, D.; Moreno, J.; Clotet, E.; Palacín, J. Vineyard yield estimation based on the analysis of high resolution images obtained with artificial illumination at night. Sensors 2015, 15, 8284–8301.

- Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. Deepfruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.

- Bulanon, D.; Burks, T.; Alchanatis, V. Image fusion of visible and thermal images for fruit detection. Biosyst. Eng. 2009, 103, 12–22.

- Yadav, S.P.; Ibaraki, Y.; Gupta, S.D. Estimation of the chlorophyll content of micropropagated potato plants using RGB based image analysis. Plant Cell, Tissue Organ Cult. 2009, 100, 183–188.

- Aggelopoulou, A.D.; Bochtis, D.; Fountas, S.; Swain, K.C.; Gemtos, T.A.; Nanos, G.D. Yield prediction in apple orchards based on image processing. Precis. Agric. 2011, 12, 448–456.

- Lin, G.; Tang, Y.; Zou, X.; Cheng, J.; Xiong, J. Fruit detection in natural environment using partial shape matching and probabilistic Hough transform. Precis. Agric. 2020, 21, 160–177.

- Rahnemoonfar, M.; Sheppard, C. Deep count: Fruit counting based on deep simulated learning. Sensors 2017, 17, 905.

- Wang, Q.; Nuske, S.; Bergerman, M.; Singh, S. Automated crop yield estimation for apple orchards. In Experimental Robotics; Springer: Heidelberg, Germany, 2013.

- Fourie, J.; Hsiao, J.; Werner, A. Crop yield estimation using deep learning. In Proceedings of the 7th Asian-Australasian Conference Precis. Agric., Hamilton, New Zealand, 16 October 2017; pp. 1–10.

- Hemming, J.; Ruizendaal, J.; Hofstee, J.W.; Van Henten, E.J. Fruit detectability analysis for different camera positions in sweet-pepper. Sensors 2014, 14, 6032–6044.

- Luciani, R.; Laneve, G.; Jahjah, M. Agricultural monitoring, an automatic procedure for crop mapping and yield estimation: The great Rift valley of Kenya case. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2196–2208.

- Moran, M.S.; Inoue, Y.; Barnes, E.M. Opportunities and limitations for image-based remote sensing in precision crop management. Remote Sens. Environ. 1997, 61, 319–346.

- Aghighi, H.; Azadbakht, M.; Ashourloo, D.; Shahrabi, H.S.; Radiom, S. Machine learning regression techniques for the silage maize yield prediction using time-series images of landsat 8 oli. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4563–4577.

- Kim, N.; Lee, Y.-W. Machine learning approaches to corn yield estimation using satellite images and climate data: A case of Iowa state. J. Korean Soc. Surv. Geodesy Photogramm. Cartogr. 2016, 34, 383–390.

- Kuwata, K.; Shibasaki, R. Estimating crop yields with deep learning and remotely sensed data. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium, Milan, Italy, 26–31 July 2015; pp. 858–861.

- Liu, J.; Shang, J.; Qian, B.; Huffman, T.; Zhang, Y.; Dong, T.; Jing, Q.; Martin, T. Crop yield estimation using time-series modis data and the effects of cropland masks in Ontario, Canada. Remote Sens. 2019, 11, 2419.

- Fernandez-Ordonez, Y.M.; Soria-Ruiz, J. Maize crop yield estimation with remote sensing and empirical models. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; Volume 8, pp. 3035–3038.

- Jiang, Z.; Chen, Z.; Chen, J.; Liu, J.; Ren, J.; Li, Z.; Sun, L.; Li, H. Application of crop model data assimilation with a particle filter for estimating regional winter wheat yields. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4422–4431.

- Nevavuori, P.; Narra, N.; Lipping, T. Crop yield prediction with deep convolutional neural networks. Comput. Electron. Agric. 2019, 163, 104859.

- Hamner, B.; Bergerman, M.; Singh, S. Autonomous orchard vehicles for specialty crops production. In Proceedings of the 2011 American Society of Agricultural and Biological Engineers, Louisville, KT, USA, 7–10 August 2011; p. 1.

- Winterhalter, W.; Fleckenstein, F.V.; Dornhege, C.; Burgard, W. Crop row detection on tiny plants with the pattern hough transform. IEEE Robot. Autom. Lett. 2018, 3, 3394–3401.

- Gao, X.; Li, J.; Fan, L.; Zhou, Q.; Yin, K.; Wang, J.; Song, C.; Huang, L.; Wang, Z. Review of wheeled mobile robots’ navigation problems and application prospects in agriculture. IEEE Access 2018, 6, 49248–49268.

- Zhong, J.; Cheng, H.; He, L.; Ouyang, F. Decentralized full coverage of unknown areas by multiple robots with limited visibility sensing. IEEE Robot. Autom. Lett. 2019, 4, 338–345.

- Le, T.D.; Ponnambalam, V.R.; Gjevestad, J.G.O.; From, P.J. A low-cost and efficient autonomous row-following robot for food production in polytunnels. J. Field Robot. 2020, 37, 309–321.

- Wu, C.; Zeng, R.; Pan, J.; Wang, C.C.L.; Liu, Y.-J. Plant phenotyping by deep-learning-based planner for multi-robots. IEEE Robot. Autom. Lett. 2019, 4, 3113–3120.

- Alencastre-Miranda, M.; Davidson, J.R.; Johnson, R.M.; Waguespack, H.; Krebs, H.I. Robotics for sugarcane cultivation: Analysis of billet quality using computer vision. IEEE Robot. Autom. Lett. 2018, 3, 3828–3835.

- Kurita, H.; Iida, M.; Cho, W.; Suguri, M. Rice autonomous harvesting: Operation framework. J. Field Robot. 2017, 34, 1084–1099.

- Zhang, T.; Huang, Z.; You, W.; Lin, J.; Tang, X.; Huang, H. An autonomous fruit and vegetable harvester with a low-cost gripper using a 3D sensor. Sensors 2019, 20, 93.

- Kaljaca, D.; Vroegindeweij, B.; Van Henten, E. Coverage trajectory planning for a bush trimming robot arm. J. Field Robot. 2019, 37, 283–308.

- Bac, C.W.; Van Henten, E.J.; Hemming, J.; Edan, Y. Harvesting robots for high-value crops: State-of-the-art review and challenges ahead. J. Field Robot. 2014, 31, 888–911.

- Williams, H.; Ting, C.; Nejati, M.; Jones, M.H.; Penhall, N.; Lim, J.; Seabright, M.; Bell, J.; Ahn, H.S.; Scarfe, A.; et al. Improvements to and large-scale evaluation of a robotic kiwifruit harvester. J. Field Robot. 2020, 37, 187–201.

- Ji, W.; Qian, Z.; Xu, B.; Tang, W.; Li, J.; Zhao, D. Grasping damage analysis of apple by end-effector in harvesting robot. J. Food Process. Eng. 2017, 40, e12589.

- McCool, C.S.; Beattie, J.; Firn, J.; Lehnert, C.; Kulk, J.; Bawden, O.; Russell, R.; Perez, T. Efficacy of mechanical weeding tools: A study into alternative weed management strategies enabled by robotics. IEEE Robot. Autom. Lett. 2018, 3, 1184–1190.

- Adamides, G.; Katsanos, C.; Constantinou, I.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. Design and development of a semi-autonomous agricultural vineyard sprayer: Human-robot interaction aspects. J. Field Robot. 2017, 34, 1407–1426.

- Ko, M.H.; Ryuh, B.-S.; Kim, K.C.; Suprem, A.; Mahalik, N.P. Autonomous greenhouse mobile robot driving strategies from system integration perspective: Review and application. IEEE/ASME Trans. Mechatron. 2014, 20, 1705–1716.

- Berenstein, R.; Edan, Y. Human-robot collaborative site-specific sprayer. J. Field Robot. 2017, 34, 1519–1530.

- Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19.

- Fernandez, B.; Herrera, P.J.; Cerrada, J.A. A simplified optimal path following controller for an agricultural skid-steering robot. IEEE Access 2019, 7, 95932–95940.

- Cheein, F.A.; Torres-Torriti, M.; Hopfenblatt, N.B.; Prado, Á.J.; Calabi, D. Agricultural service unit motion planning under harvesting scheduling and terrain constraints. J. Field Robot. 2017, 34, 1531–1542.

- Arad, B.; Balendonck, J.; Barth, R.; Ben-Shahar, O.; Edan, Y.; Hellström, T.; Hemming, J.; Kurtser, P.; Ringdahl, O.; Tielen, T.; et al. Development of a sweet pepper harvesting robot. J. Field Robot. 2020, 37, 1027–1039.

- Senthilnath, J.; Dokania, A.; Kandukuri, M.; Ramesh, K.N.; Anand, G.; Omkar, S.N. Detection of tomatoes using spectral-spatial methods in remotely sensed RGB images captured by UAV. Biosyst. Eng. 2016, 146, 16–32.

- Murugan, D.; Garg, A.; Singh, D. Development of an adaptive approach for precision agriculture monitoring with drone and satellite data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 5322–5328.

- Kim, J.; Kim, S.; Ju, C.; Son, H.I. Unmanned aerial vehicles in agriculture: A review of perspective of platform, control, and applications. IEEE Access 2019, 7, 105100–105115.

- Ashapure, A.; Oh, S.; Marconi, T.G.; Chang, A.; Jung, J.; Landivar, J.; Enciso, J. Unmanned aerial system based tomato yield estimation using machine learning. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping IV. International Society for Optics and Photonics, Baltimore, MA, USA, 11–15 August 2019; p. 110080O.

- Fernández, R.; Montes, H.; Surdilovic, J.; Surdilovic, D.; Gonzalez-De-Santos, P.; Armada, M.; Surdilovic, D. Automatic detection of field-grown cucumbers for robotic harvesting. IEEE Access 2018, 6, 35512–35527.

- Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

- Li, H.; Lee, W.S.; Wang, K. Immature green citrus fruit detection and counting based on fast normalized cross correlation (FNCC) using natural outdoor colour images. Precis. Agric. 2016, 17, 678–697.

- Garcia, L.; Parra, L.; Basterrechea, D.A.; Jimenez, J.M.; Rocher, J.; Parra, M.; García-navas, J.L.; Sendra, S.; Lloret, J.; Lorenz, P.; et al. Quantifying the production of fruit-bearing trees using image processing techniques. In Proceedings of the INNOV 2019 Eighth Int. Conf. Commun. Comput. Netw. Technol, Valencia, Spain, 24–28 November 2019; pp. 14–19.

- Bargoti, S.; Underwood, J.P. Image segmentation for fruit detection and yield estimation in apple orchards. J. Field Robot. 2017, 34, 1039–1060.

- Zhao, Y.; Gong, L.; Zhou, B.; Huang, Y.; Liu, C. Detecting tomatoes in greenhouse scenes by combining AdaBoost classifier and colour analysis. Biosyst. Eng. 2016, 148, 127–137.

- Hu, C.; Liu, X.; Pan, Z.; Li, P. Automatic detection of single ripe tomato on plant combining faster R-CNN and intuitionistic fuzzy set. IEEE Access 2019, 7, 154683–154696.

- Kurtulmus, F.; Lee, W.S.; Vardar, A. Green citrus detection using ‘eigenfruit’, color and circular Gabor texture features under natural outdoor conditions. Comput. Electron. Agric. 2011, 78, 140–149.

- Gong, A.; Yu, J.; He, Y.; Qiu, Z. Citrus yield estimation based on images processed by an Android mobile phone. Biosyst. Eng. 2013, 115, 162–170.

- Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2020, 7, 566–574.

- Gim, J.; Gonz, J.D.; Jim, M.; Toledo-moreo, A.B.; Soto-valles, F.; Torres-s, R. Segmentation of multiple tree leaves pictures with natural backgrounds using deep learning for image-based agriculture applications. Appl. Sci. 2019, 10, 202.

- Vitzrabin, E.; Edan, Y. Changing task objectives for improved sweet pepper detection for robotic harvesting. IEEE Robot. Autom. Lett. 2016, 1, 578–584. [Google Scholar] [CrossRef]

- Yamamoto, K.; Guo, W.; Yoshioka, Y.; Ninomiya, S. On plant detection of intact tomato fruits using image analysis and machine learning methods. Sensors 2014, 14, 12191–12206. [Google Scholar] [CrossRef]

- Zhou, R.; Damerow, L.; Sun, Y.; Blanke, M.M. Using colour features of cv. ‘Gala’ apple fruits in an orchard in image processing to predict yield. Precis. Agric. 2012, 13, 568–580. [Google Scholar] [CrossRef]

- Rakun, J.; Stajnko, D.; Zazula, D. Detecting fruits in natural scenes by using spatial-frequency based texture analysis and multiview geometry. Comput. Electron. Agric. 2011, 76, 80–88. [Google Scholar] [CrossRef]

- Botterill, T.; Paulin, S.; Green, R.; Williams, S.; Lin, J.; Saxton, V.; Mills, S.; Chen, X.Q.; Corbett-Davies, S. A robot system for pruning grape vines. J. Field Robot. 2017, 34, 1100–1122. [Google Scholar] [CrossRef]

- Nuske, S.; Gupta, K.; Narasimhan, S.; Singh, S. Modeling and calibrating visual yield estimates in vineyards. Springer Tracts Adv. Robot. 2013, 92, 343–356. [Google Scholar] [CrossRef]

- Bosilj, P.; Duckett, T.; Cielniak, G. Analysis of morphology-based features for classification of crop and weeds in precision agriculture. IEEE Robot. Autom. Lett. 2018, 3, 2950–2956. [Google Scholar] [CrossRef]

- Liu, X.; Chen, S.W.; Liu, C.; Shivakumar, S.S.; Das, J.; Taylor, C.J.; Underwood, J.P.; Kumar, V. Monocular camera based fruit counting and mapping with semantic data association. IEEE Robot. Autom. Lett. 2019, 4, 2296–2303. [Google Scholar] [CrossRef]

- Liu, S.; Whitty, M. Automatic grape bunch detection in vineyards with an SVM classifier. J. Appl. Log. 2015, 13, 643–653. [Google Scholar] [CrossRef]

- Liu, G.; Mao, S.; Kim, J.H. A mature-tomato detection algorithm using machine learning and color analysis. Sensors 2019, 19, 2023. [Google Scholar] [CrossRef]

- Gonzalez-Sanchez, A.; Frausto-Solis, J.; Ojeda-Bustamante, W. Predictive ability of machine learning methods for massive crop yield prediction. Span. J. Agric. Res. 2014, 12, 313. [Google Scholar] [CrossRef]

- Picon, A.; Alvarez-Gila, A.; Seitz, M.; Ortiz-Barredo, A.; Echazarra, J.; Johannes, A. Deep convolutional neural networks for mobile capture device-based crop disease classification in the wild. Comput. Electron. Agric. 2019, 161, 280–290. [Google Scholar] [CrossRef]

- Wu, J.; Zhang, B.; Zhou, J.; Xiong, Y.; Gu, B.; Yang, X. Automatic recognition of ripening tomatoes by combining multi-feature fusion with a BI-layer classification strategy for harvesting robots. Sensors 2019, 19, 612. [Google Scholar] [CrossRef]

- Schor, N.; Bechar, A.; Ignat, T.; Dombrovsky, A.; Elad, Y.; Berman, S. Robotic disease detection in greenhouses: Combined detection of powdery mildew and tomato spotted wilt virus. IEEE Robot. Autom. Lett. 2016, 1, 354–360. [Google Scholar] [CrossRef]

- Lee, J.; Nazki, H.; Baek, J.; Hong, Y.; Lee, M. Artificial intelligence approach for tomato detection and mass estimation in precision agriculture. Sustainability 2020, 12, 9138. [Google Scholar] [CrossRef]

- Alajrami, M.A.; Abunaser, S.S. Type of tomato classification using deep learning. Int. J. Acad. Pedagog. Res. 2020, 3, 21–25. [Google Scholar]

- Birrell, S.; Hughes, J.; Cai, J.Y.; Iida, F. A field-tested robotic harvesting system for iceberg lettuce. J. Field Robot. 2019, 37, 225–245. [Google Scholar] [CrossRef] [PubMed]

- Santos, T.T.; De Souza, L.L.; Dos Santos, A.A.; Avila, S. Grape detection, segmentation, and tracking using deep neural networks and three-dimensional association. Comput. Electron. Agric. 2020, 170, 105247. [Google Scholar] [CrossRef]

- Koirala, A.; Walsh, K.B.; Wang, Z.; Anderson, N. Deep learning for mango (Mangifera Indica) panicle stage classification. Agronomy 2020, 10, 143. [Google Scholar] [CrossRef]

- Bender, A.; Whelan, B.; Sukkarieh, S. A high-resolution, multimodal data set for agricultural robotics: A Ladybird ’s-eye view of Brassica. J. Field Robot. 2020, 37, 73–96. [Google Scholar] [CrossRef]

- Bose, P.; Kasabov, N.K.; Bruzzone, L.; Hartono, R.N. Spiking neural networks for crop yield estimation based on spatiotemporal analysis of image time series. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6563–6573. [Google Scholar] [CrossRef]

- Kaul, M.; Hill, R.L.; Walthall, C. Artificial neural networks for corn and soybean yield prediction. Agric. Syst. 2005, 85, 1–18. [Google Scholar] [CrossRef]

- Pourdarbani, R.; Sabzi, S.; Hernández-Hernández, M.; Hernández-Hernández, J.L.; García-Mateos, G.; Kalantari, D.; Molina-Martínez, J.M. Comparison of different classifiers and the majority voting rule for the detection of plum fruits in garden conditions. Remote Sens. 2019, 11, 2546. [Google Scholar] [CrossRef]

- Cheng, H.; Damerow, L.; Sun, Y.; Blanke, M. Early yield prediction using image analysis of apple fruit and tree canopy features with neural networks. J. Imaging 2017, 3, 6. [Google Scholar] [CrossRef]

- Tran, T.-T.; Choi, J.-W.; Le, T.-T.H.; Kim, J.-W. A comparative study of deep CNN in forecasting and classifying the macronutrient deficiencies on development of tomato plant. Appl. Sci. 2019, 9, 1601. [Google Scholar] [CrossRef]

- Ferentinos, K.P. Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 2018, 145, 311–318. [Google Scholar] [CrossRef]

- Yang, K.; Zhong, W.; Li, F. Leaf segmentation and classification with a complicated background using deep learning. Agronomy 2020, 10, 1721. [Google Scholar] [CrossRef]

- Brahimi, M.; Boukhalfa, K.; Moussaoui, A. Deep learning for tomato diseases: Classification and symptoms visualization. Appl. Artif. Intell. 2017, 31, 299–315. [Google Scholar] [CrossRef]

- Da Costa, A.Z.; Figueroa, H.E.H.; Fracarolli, J.A. Computer vision based detection of external defects on tomatoes using deep learning. Biosyst. Eng. 2020, 190, 131–144. [Google Scholar] [CrossRef]

- Sun, J.; He, X.; Ge, X.; Wu, X.; Shen, J.; Song, Y. Detection of key organs in tomato based on deep migration learning in a complex background. Agriculture 2018, 8, 196. [Google Scholar] [CrossRef]

- Ramesh, S.; Hebbar, R.; Niveditha, M.; Pooja, R.; Shashank, N.; Vinod, P.V. Plant disease detection using machine learning. In Proceedings of the 2018 International Conference on Design Innovations for 3Cs Compute Communicate Control (ICDI3C), Bengaluru, India, 24–26 April 2018; pp. 41–45. [Google Scholar] [CrossRef]

- Goel, N.; Sehgal, P. Fuzzy classification of pre-harvest tomatoes for ripeness estimation–An approach based on automatic rule learning using decision tree. Appl. Soft Comput. 2015, 36, 45–56. [Google Scholar] [CrossRef]

- Mureşan, H.; Oltean, M. Fruit recognition from images using deep learning. Acta Univ. Sapientiae Inform. 2018, 10, 26–42. [Google Scholar] [CrossRef]

- Gené-Mola, J.; Gregorio, E.; Guevara, J.; Auat, F.; Sanz-Cortiella, R.; Escolà, A.; Llorens, J.; Morros, J.-R.; Ruiz-Hidalgo, J.; Vilaplana, V.; et al. Fruit detection in an apple orchard using a mobile terrestrial laser scanner. Biosyst. Eng. 2019, 187, 171–184. [Google Scholar] [CrossRef]

- Zhang, L.; Jia, J.; Gui, G.; Hao, X.; Gao, W.; Wang, M. Deep learning based improved classification system for designing tomato harvesting robot. IEEE Access 2018, 6, 67940–67950. [Google Scholar] [CrossRef]

- Gopal, P.S.M.; Bhargavi, R. A novel approach for efficient crop yield prediction. Comput. Electron. Agric. 2019, 165, 104968. [Google Scholar] [CrossRef]

- Halstead, M.A.; McCool, C.S.; Denman, S.; Perez, T.; Fookes, C. Fruit quantity and ripeness estimation using a robotic vision system. IEEE Robot. Autom. Lett. 2018, 3, 2995–3002. [Google Scholar] [CrossRef]

- Song, Y.; Glasbey, C.A.; Horgan, G.W.; Polder, G.; Dieleman, J.A.; van der Heijden, G.W.A.M. Automatic fruit recognition and counting from multiple images. Biosyst. Eng. 2014, 118, 203–215. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).