Development and Application of an Intelligent Plant Protection Monitoring System

Abstract

1. Introduction

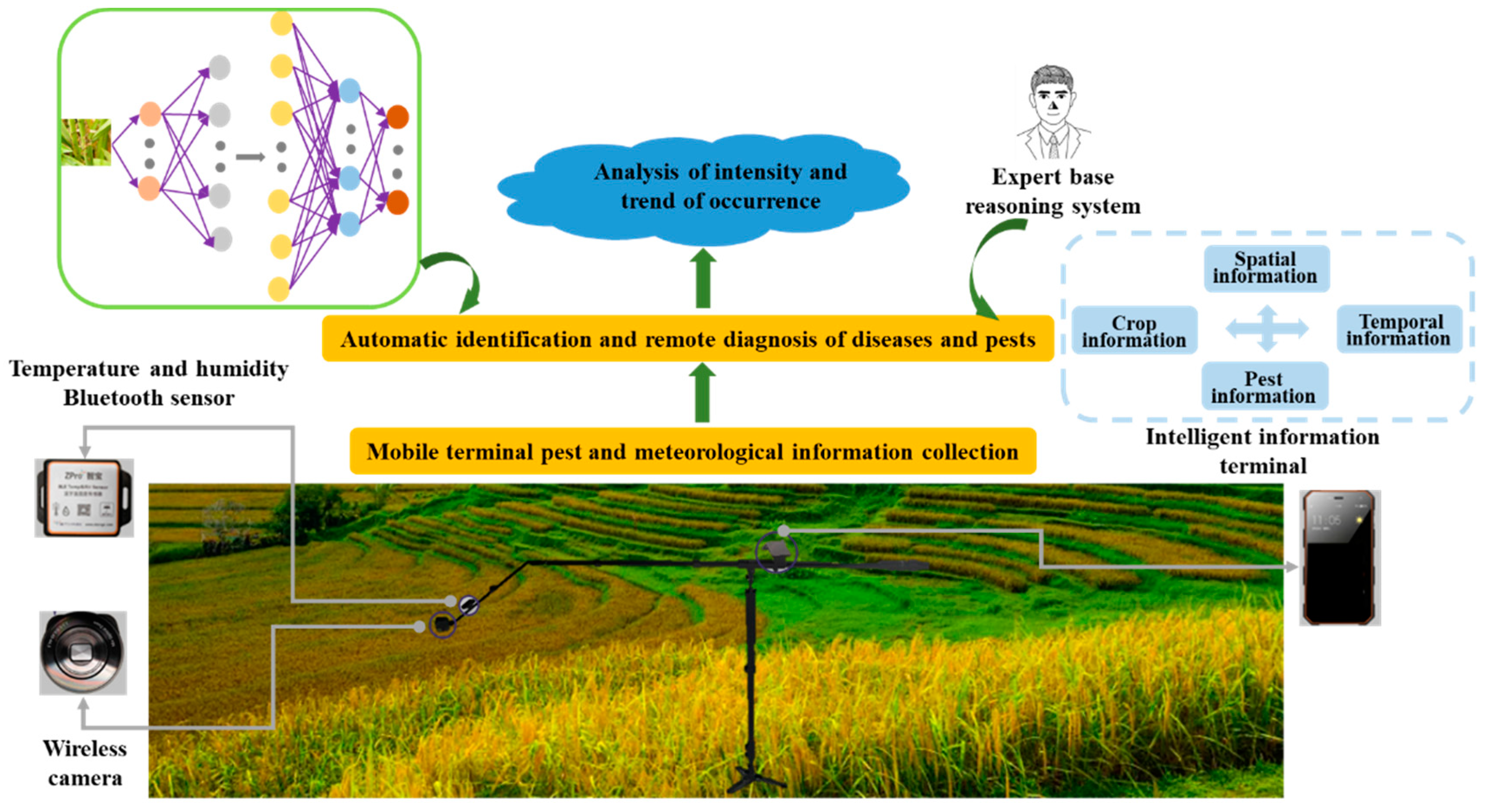

- (1) A complete monitoring system is constructed, comprising both the hardware and the software.

- (2) The system collects meteorological data and plant images at the same time, and identifies and counts pests and diseases based on Yolo v3. The expert system can then provide a timely solution.

2. Diseases and Pests Identification

2.1. Image Acquisition and Coding of Diseases and Pests in Macro Mode

2.2. Identification Based on Neural Network

2.3. Data Reporting and Location Information Acquisition

3. Hardware System Development and Application

3.1. Perception Module Construction

3.2. Positioning and Communication Module

4. Software System Development

4.1. Development of Mobile Terminal Software System

4.2. Development of Computer Terminal Software System

5. Experiment and Discussion

5.1. Image Collection of Diseases and Pests

5.2. Analysis of Acquisition Results

5.2.1. Rice Planthopper

5.2.2. Rice-Leaf Roller

5.2.3. Rice Sheath Blight

5.2.4. Wheat Spider

5.2.5. Wheat Aphid

5.2.6. Wheat Leaf Rust

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kaivosoja, J.; Hautsalo, J.; Heikkinen, J.; Hiltunen, L.; Ruuttunen, P.; Näsi, R.; Niemeläinen, O.; Lemsalu, M.; Honkavaara, E.; Salonen, J. Reference Measurements in Developing UAV Systems for Detecting Pests, Weeds, and Diseases. Remote Sens. 2021, 13, 1238.

2. Duke, S.O. Interaction of chemical pesticides and their formulation ingredients with microbes associated with plants and plant pests. J. Agric. Food Chem. 2018, 66, 7553–7561.

3. Kim, K.H.; Kabir, E.; Jahan, S.A. Exposure to pesticides and the associated human health effects. Sci. Total Environ. 2017, 575, 525–535.

4. Manavalan, R. Automatic identification of diseases in grains crops through computational approaches: A review. Comput. Electron. Agric. 2020, 178, 105802.

5. Li, L.; Zhang, S.; Wang, B. Plant disease detection and classification by deep learning—A review. IEEE Access 2021, 9, 56683–56698.

6. Wang, S.; Han, Y.; Chen, J.; Zhang, K.; Zhang, Z.; Liu, X. Weed Density Extraction based on Few-shot Learning through UAV Remote Sensing RGB and Multi-spectral Images in Ecological Irrigation Area. Front. Plant Sci. 2022, 12, 735230.

7. Waheed, A.; Goyal, M.; Gupta, D.; Khanna, A.; Hassanien, A.E.; Pandey, H.M. An optimized dense convolutional neural network model for disease recognition and classification in corn leaf. Comput. Electron. Agric. 2020, 175, 105456.

8. Nagasubramanian, G.; Sakthivel, R.K.; Patan, R.; Sankayya, M.; Daneshmand, M.; Gandomi, A.H. Ensemble Classification and IoT Based Pattern Recognition for Crop Disease Monitoring System. IEEE Internet Things J. 2021, 8, 12847–12854.

9. Albanese, A.; Nardello, M.; Brunelli, D. Automated Pest Detection with DNN on the Edge for Precision Agriculture. IEEE J. Emerg. Sel. Top. Circuits Syst. 2021, 11, 458–467.

10. Mishra, M.; Choudhury, P.; Pati, B. Modified ride-NN optimizer for the IoT based plant disease detection. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 691–703.

11. Sudirman, S.; Liu, L.; Wang, R.; Xie, C.; Yang, P.; Wang, F.; Liu, W. Deep learning based automatic multi-class wild pest monitoring approach using hybrid global and local activated features. IEEE Trans. Ind. Inform. 2020, 17, 7589–7598.

12. Neupane, K.; Baysal-Gurel, F. Automatic identification and monitoring of plant diseases using unmanned aerial vehicles: A review. Remote Sens. 2021, 13, 3841.

13. Wang, S.; Chen, J.; He, X. An adaptive composite disturbance rejection for attitude control of the agricultural quadrotor UAV. ISA Trans. 2022.

14. Sharma, A.; Jain, A.; Gupta, P.; Chowdary, V. Machine learning applications for precision agriculture: A comprehensive review. IEEE Access 2020, 9, 4843–4873.

15. Wang, S.; Wang, L.; He, X.; Cao, Y. A Monocular Vision Obstacle Avoidance Method Applied to Indoor Tracking Robot. Drones 2021, 5, 105.

16. Trilles, S.; Torres-Sospedra, J.; Belmonte, Ó.; Zarazaga-Soria, F.J.; González-Pérez, A.; Huerta, J. Development of an open sensorized platform in a smart agriculture context: A vineyard support system for monitoring mildew disease. Sustain. Comput. Inform. Syst. 2020, 28, 100309.

17. Methun, N.R.; Yasmin, R.; Begum, N. Carrot Disease Recognition using Deep Learning Approach for Sustainable Agriculture. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 732–741.

18. Udutalapally, V.; Mohanty, S.P.; Pallagani, V.; Khandelwal, V. Scrop: A novel device for sustainable automatic disease prediction, crop selection, and irrigation in internet-of-agro-things for smart agriculture. IEEE Sens. J. 2020, 21, 17525–17538.

19. Khattab, A.; Habib, S.E.; Ismail, H.; Zayan, S.; Fahmy, Y.; Khairy, M.M. An IoT-based cognitive monitoring system for early plant disease forecast. Comput. Electron. Agric. 2019, 166, 105028.

20. Mekala, M.S.; Viswanathan, P. CLAY-MIST: IoT-cloud enabled CMM index for smart agriculture monitoring system. Measurement 2019, 134, 236–244.

21. He, L.; Qi, S.-L.; Duan, J.-Z.; Guo, T.-C.; Feng, W.; He, D.-X. Monitoring of Wheat Powdery Mildew Disease Severity Using Multiangle Hyperspectral Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2021, 59, 979–990.

22. Dong, Y.; Xu, F.; Liu, L.; Du, X.; Ren, B.; Guo, A.; Geng, Y.; Ruan, C.; Ye, H.; Huang, W.; et al. Automatic System for Crop Pest and Disease Dynamic Monitoring and Early Forecasting. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4410–4418.

23. Kim, W.S.; Lee, D.H.; Kim, Y.J. Machine vision-based automatic disease symptom detection of onion downy mildew. Comput. Electron. Agric. 2020, 168, 105099.

24. Gao, D.; Sun, Q.; Hu, B.; Zhang, S. A framework for agricultural pest and disease monitoring based on internet-of-things and unmanned aerial vehicles. Sensors 2020, 20, 1487.

25. Zhang, B.; Ye, H.; Lu, W.; Huang, W.; Wu, B.; Hao, Z.; Sun, H. A Spatiotemporal Change Detection Method for Monitoring Pine Wilt Disease in a Complex Landscape Using High-Resolution Remote Sensing Imagery. Remote Sens. 2021, 13, 2083.

26. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767v1.

27. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

28. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28. Available online: https://arxiv.org/abs/1506.01497 (accessed on 27 April 2021).

29. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland; pp. 21–37.

| Crop | Pests and Diseases | Specific Diseases and Pests | Coding |
|---|---|---|---|
| 11: Wheat | 1: Pest | 11: Wheat spider | 11111 |
| 11: Wheat | 1: Pest | 21: Aphid, wheat long tube aphid or wheat binary aphid (green) | 11121 |
| 11: Wheat | 1: Pest | 22: Aphid, rhopalosiphus (black) | 11122 |
| 11: Wheat | 1: Pest | 23: Aphids (red) | 11123 |
| 11: Wheat | 1: Pest | 31: Slime worm | 11131 |
| 11: Wheat | 2: Disease | 11: Normal wheat ears | 11211 |
| 11: Wheat | 2: Disease | 12: Scab | 11212 |
| 11: Wheat | 2: Disease | 21: Powdery mildew | 11221 |
| 11: Wheat | 2: Disease | 31: Wheat leaf rust | 11231 |
| 11: Wheat | 2: Disease | 41: Wheat stripe rust | 11241 |
| 12: Rice | 1: Pest | 11: Rice planthopper | 12111 |
| 12: Rice | 1: Pest | 21: Normal rice leaves | 12121 |
| 12: Rice | 1: Pest | 22: Rice-leaf roller | 12122 |
| 12: Rice | 1: Pest | 31: Striped rice borer | 12131 |
| 12: Rice | 2: Disease | 11: Sheath blight | 12211 |
| 12: Rice | 2: Disease | 21: Flax spot | 12221 |
| 12: Rice | 2: Disease | 31: Rice-false smut | 12231 |
| 12: Rice | 2: Disease | 41: Rice blast (leaf) | 12241 |
| 12: Rice | 2: Disease | 42: Rice blast (panicle) | 12242 |
| 12: Rice | 2: Disease | 51: Bacterial streak | 12251 |
| 13: Rape | 1: Pest | 11: Aphid | 13111 |
| 13: Rape | 2: Disease | 11: Sclerotinia (rhizome) | 13211 |
| 13: Rape | 2: Disease | 12: Sclerotinia (leaf) | 13212 |
| 13: Rape | 2: Disease | 21: Downy mildew | 13221 |
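
Every code in the table above appears to be the two-digit crop number, the one-digit pest/disease number, and the two-digit number of the specific pest or disease written side by side (for example, 11 + 1 + 11 gives 11111). The following Python sketch encodes and decodes codes under that reading. The decomposition is inferred from the table rows rather than stated by the authors, and the names `PestCode`, `encode`, and `decode` are hypothetical.

```python
from typing import NamedTuple


class PestCode(NamedTuple):
    crop: int      # two-digit crop number, e.g. 11 = wheat, 12 = rice, 13 = rape
    category: int  # 1 = pest, 2 = disease
    item: int      # two-digit number of the specific pest or disease


def encode(crop: int, category: int, item: int) -> str:
    """Concatenate the three fields into a five-digit code (assumed scheme)."""
    if not 0 <= crop <= 99:
        raise ValueError("crop must fit in two digits")
    if category not in (1, 2):
        raise ValueError("category must be 1 (pest) or 2 (disease)")
    if not 0 <= item <= 99:
        raise ValueError("item must fit in two digits")
    return f"{crop:02d}{category:d}{item:02d}"


def decode(code: str) -> PestCode:
    """Split a five-digit code back into its three fields."""
    if len(code) != 5 or not (code.isascii() and code.isdigit()):
        raise ValueError("code must consist of exactly five digits")
    return PestCode(int(code[:2]), int(code[2]), int(code[3:]))


if __name__ == "__main__":
    print(encode(12, 2, 42))  # rice, disease, rice blast (panicle)
    print(decode("11231"))    # wheat, disease, wheat leaf rust
```

A fixed-width code of this kind can be split by position alone, so no separator characters are needed when images are labeled or reported.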

| Pattern / Form | Wireless Lens | Probe Rod Kit | Intelligent Information Terminal | Temperature and Humidity Sensor | Support Frame | Macro Lens |
|---|---|---|---|---|---|---|
| Macro mode | √ | √ | √ | √ | | |
| Handheld probe mode | √ | √ | √ | √ | | |
| Support frame mode | √ | √ | √ | √ | √ | |

| Type | Name | Viewfinder Range | Angle and Distance |
|---|---|---|---|
| Pest | Rice planthopper | The side is facing the aggregation area of rice planthopper above the rice root. The lens vertically penetrates into the cluster and overlooks the aggregation area of rice planthopper above the root. | Angle: 0~30°. Distance: 5~10 cm, 10~15 cm, mainly in a clear state with a distance of 5~10 cm. |
| Pest | Rice-leaf roller | The viewfinder shall mainly focus on the side images of rice leaves, which can be distinguished by the naked eye. | Angle: 30~60°. Distance: 10~20 cm, 20~30 cm, mainly in a clear state with a distance of 20~30 cm. |
| Pest | Wheat spider | The lens is close to the ground in the winter and perpendicular to the ground in the spring. | Angle: 0~90°. Distance: 5~10 cm, 10~15 cm, mainly in a clear state with a distance of 5~10 cm. |
| Pest | Wheat aphid | The whole wheat plant shall be photographed before jointing, and the middle and upper parts of the wheat plant shall be photographed after booting. | Angle: 0~30°. Distance: 5~10 cm, 10~15 cm, mainly in a clear state with a distance of 5~10 cm. |
| Pest | Others | Other pests, such as striped borer, leaf cicada, rice borer sandfly, and armyworm, can be photographed according to the actual situation of the investigation. | According to the survey characteristics, refer to the above form. |
| Disease | Sheath blight | The middle and lower part of rice is photographed (one side is used). | Angle: 0~30°. Distance: 10~20 cm, 20~30 cm, mainly in a clear state with a distance of 10~20 cm. |
| Disease | Leaf rust | The viewfinder shall mainly focus on the side image of wheat ear (which can be distinguished by the naked eye). | Angle: 30~60°. Distance: 10~20 cm, 20~30 cm, mainly in a clear state with a distance of 20~30 cm. |
| Disease | Others | Other diseases, such as bacterial stripe disease, rice false smut, and ear neck blast, can be photographed according to the actual situation of the investigation and with reference to the above types. | According to the survey characteristics, refer to the above form. |
| Seedling growth | | According to the significant characteristic areas of different growth periods, the viewfinder should mainly focus on the side image of crop seedling. | Angle: 30~60°. Distance: 10~20 cm, 20~30 cm, mainly in a clear state with a distance of 20~30 cm. |
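
The angle and distance guidance in the table above could be checked automatically at capture time. The sketch below is only an illustration under stated assumptions: the two listed distance bands are merged into one allowed range, the band described as "mainly in a clear state" is treated as the preferred range, and the names `ShootingGuide`, `GUIDES`, and `check_capture` are hypothetical and not part of the described system.

```python
from dataclasses import dataclass
from typing import Tuple

Range = Tuple[float, float]  # inclusive (low, high)


@dataclass(frozen=True)
class ShootingGuide:
    angle_deg: Range     # allowed lens angle
    distance_cm: Range   # union of the two distance bands in the table
    preferred_cm: Range  # band the table marks as giving a clear image


# Values transcribed from the table; "Others" rows have no numeric ranges.
GUIDES = {
    "Rice planthopper": ShootingGuide((0, 30), (5, 15), (5, 10)),
    "Rice-leaf roller": ShootingGuide((30, 60), (10, 30), (20, 30)),
    "Wheat spider": ShootingGuide((0, 90), (5, 15), (5, 10)),
    "Wheat aphid": ShootingGuide((0, 30), (5, 15), (5, 10)),
    "Sheath blight": ShootingGuide((0, 30), (10, 30), (10, 20)),
    "Leaf rust": ShootingGuide((30, 60), (10, 30), (20, 30)),
}


def _within(value: float, bounds: Range) -> bool:
    return bounds[0] <= value <= bounds[1]


def check_capture(name: str, angle_deg: float, distance_cm: float) -> str:
    """Return 'preferred', 'acceptable', or 'retake' for one capture."""
    guide = GUIDES[name]
    if not (_within(angle_deg, guide.angle_deg)
            and _within(distance_cm, guide.distance_cm)):
        return "retake"
    if _within(distance_cm, guide.preferred_cm):
        return "preferred"
    return "acceptable"


if __name__ == "__main__":
    print(check_capture("Rice planthopper", 20, 7))
    print(check_capture("Rice-leaf roller", 20, 25))
```

Keeping the ranges in a lookup table rather than in branching code means a new target only needs one more entry in `GUIDES`.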

| Method | Rice Planthopper | Rice-Leaf Roller | Rice Sheath Blight | Wheat Spider | Wheat Aphid | Wheat Leaf Rust |
|---|---|---|---|---|---|---|
| Ours (Yolo V3) | 83.32% | 85.34% | 82.03% | 86.32% | 85.53% | 87.01% |
| Yolo V2 [27] | 78.13% | 80.78% | 78.89% | 81.32% | 80.56% | 83.65% |
| Faster RCNN [28] | 75.52% | 77.45% | 76.76% | 75.89% | 73.20% | 79.32% |
| SSD [29] | 72.32% | 75.32% | 77.27% | 74.12% | 71.32% | 74.78% |
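
To summarize the comparison above in a single number per method, the reported percentages can be averaged across the six targets. The Python sketch below does this and also computes the per-target gain of the Yolo V3 row over a chosen baseline. The unweighted mean is an assumption made here for illustration: the table gives neither the per-class sample counts nor the exact metric definition, so the averages are only a rough summary. The names `RESULTS`, `MEANS`, `BEST`, and `gain_over` are hypothetical.

```python
# Percentages copied from the comparison table, in column order.
CLASSES = [
    "Rice Planthopper", "Rice-Leaf Roller", "Rice Sheath Blight",
    "Wheat Spider", "Wheat Aphid", "Wheat Leaf Rust",
]

RESULTS = {
    "Ours (Yolo V3)": [83.32, 85.34, 82.03, 86.32, 85.53, 87.01],
    "Yolo V2": [78.13, 80.78, 78.89, 81.32, 80.56, 83.65],
    "Faster RCNN": [75.52, 77.45, 76.76, 75.89, 73.20, 79.32],
    "SSD": [72.32, 75.32, 77.27, 74.12, 71.32, 74.78],
}


def mean(values):
    """Unweighted arithmetic mean of a list of percentages."""
    return sum(values) / len(values)


# Mean percentage per method and the method with the highest mean.
MEANS = {method: mean(scores) for method, scores in RESULTS.items()}
BEST = max(MEANS, key=MEANS.get)


def gain_over(baseline):
    """Per-target difference (percentage points) of Yolo V3 over a baseline."""
    ours = RESULTS["Ours (Yolo V3)"]
    return {
        name: round(o - b, 2)
        for name, o, b in zip(CLASSES, ours, RESULTS[baseline])
    }


if __name__ == "__main__":
    for method, value in MEANS.items():
        print(f"{method}: {value:.2f}%")
    print("Highest mean:", BEST)
    print(gain_over("Yolo V2"))
```

In the table, the Yolo V3 row is higher than each of the other three rows for every target, so the ranking by mean agrees with the per-target ranking against Yolo V3.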

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, S.; Qi, P.; Zhang, W.; He, X. Development and Application of an Intelligent Plant Protection Monitoring System. Agronomy 2022, 12, 1046. https://doi.org/10.3390/agronomy12051046
