Canopy Laser Interception Compensation Mechanism—UAV LiDAR Precise Monitoring Method for Cotton Height

Abstract

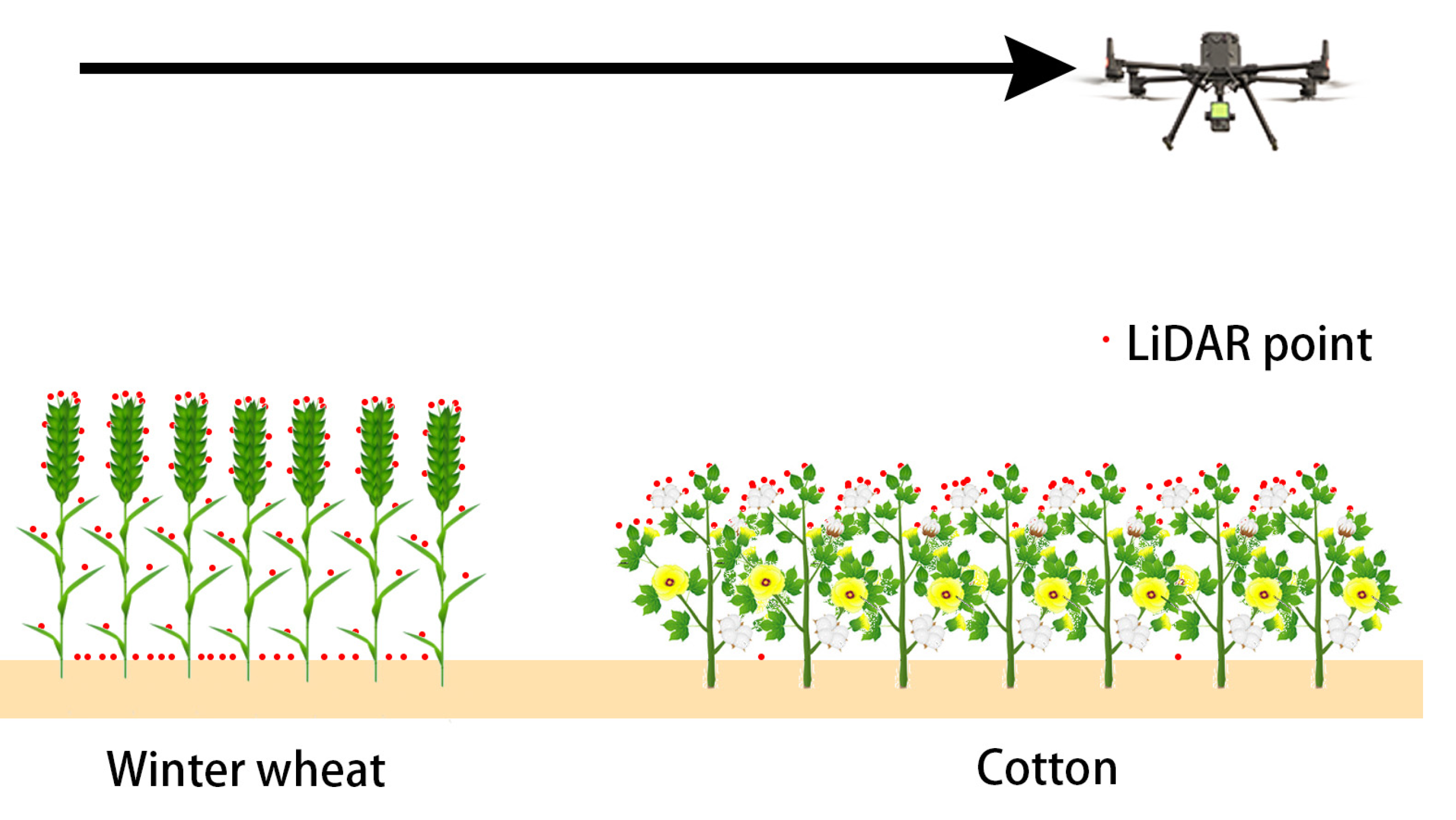

1. Introduction

- (1)

- Analyzing a suitable ground point filtering algorithm for the cotton point cloud model in fields;

- (2)

- Research on a compensation mechanism for plant height in canopy laser interception to solve the problem of laser penetration;

- (3)

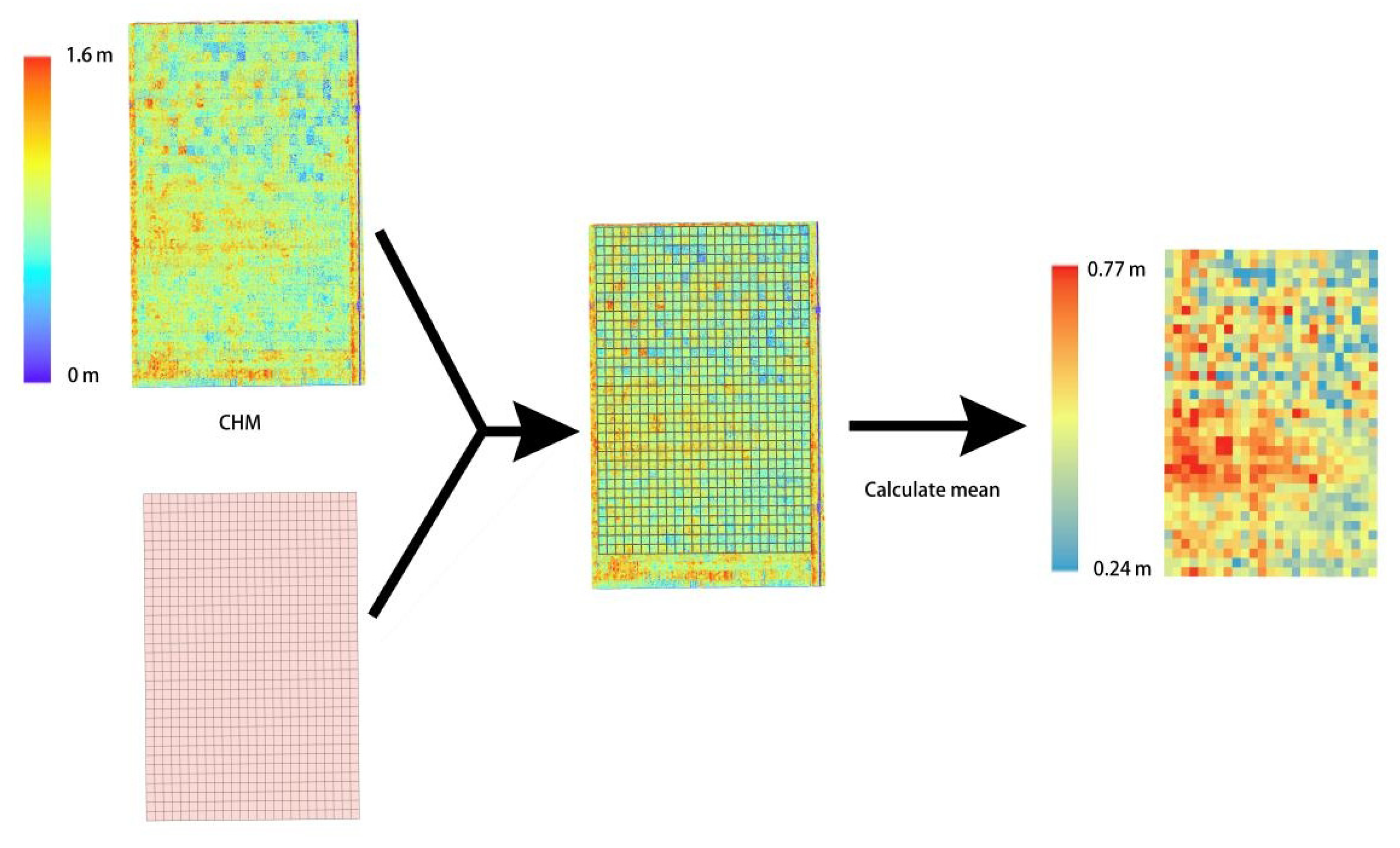

- Improving the accuracy of canopy height models (CHM).

2. Materials and Methods

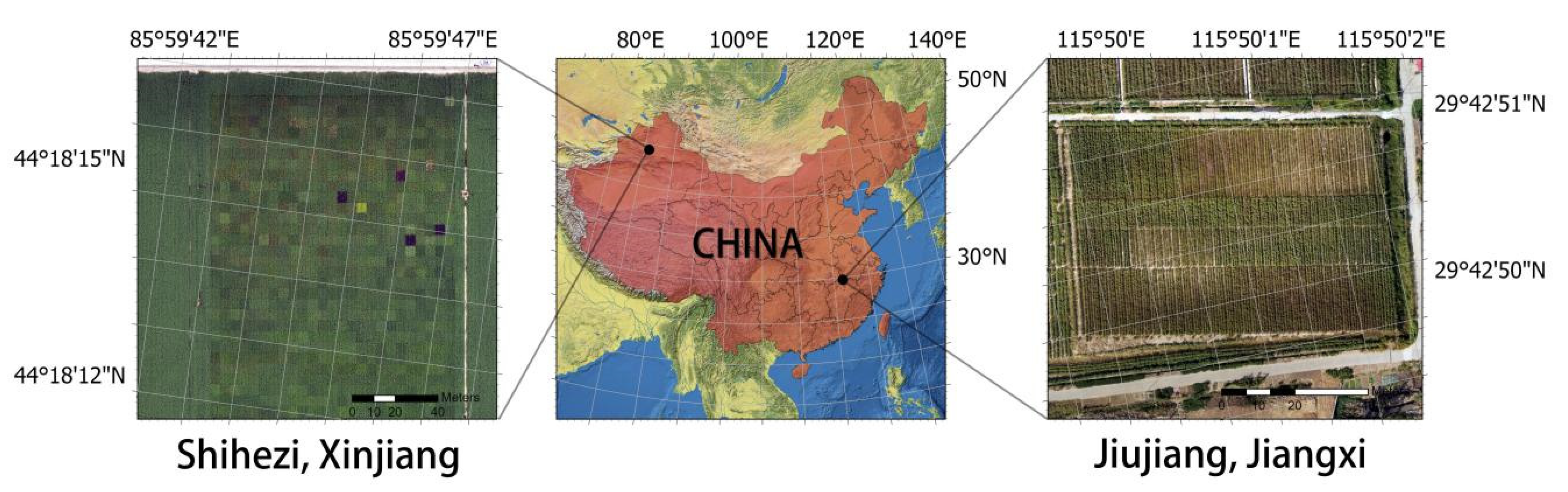

2.1. Experimental Area and Field Sampling

2.2. Point Cloud Data Collection and Preprocessing

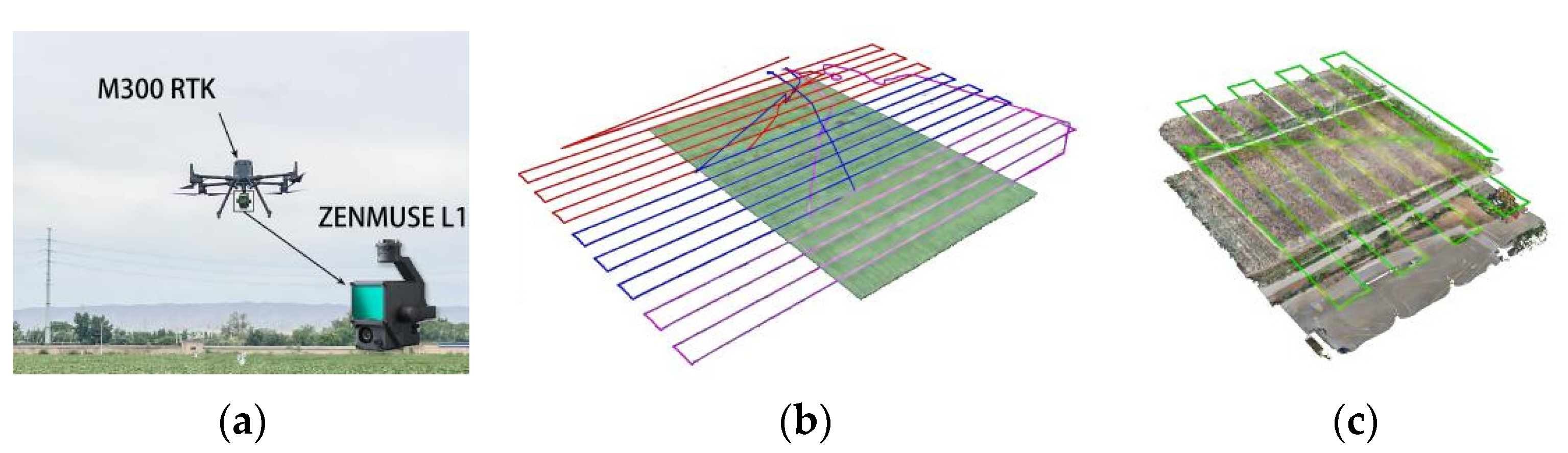

2.2.1. Data Acquisition and Splicing of UAV-Borne LiDAR

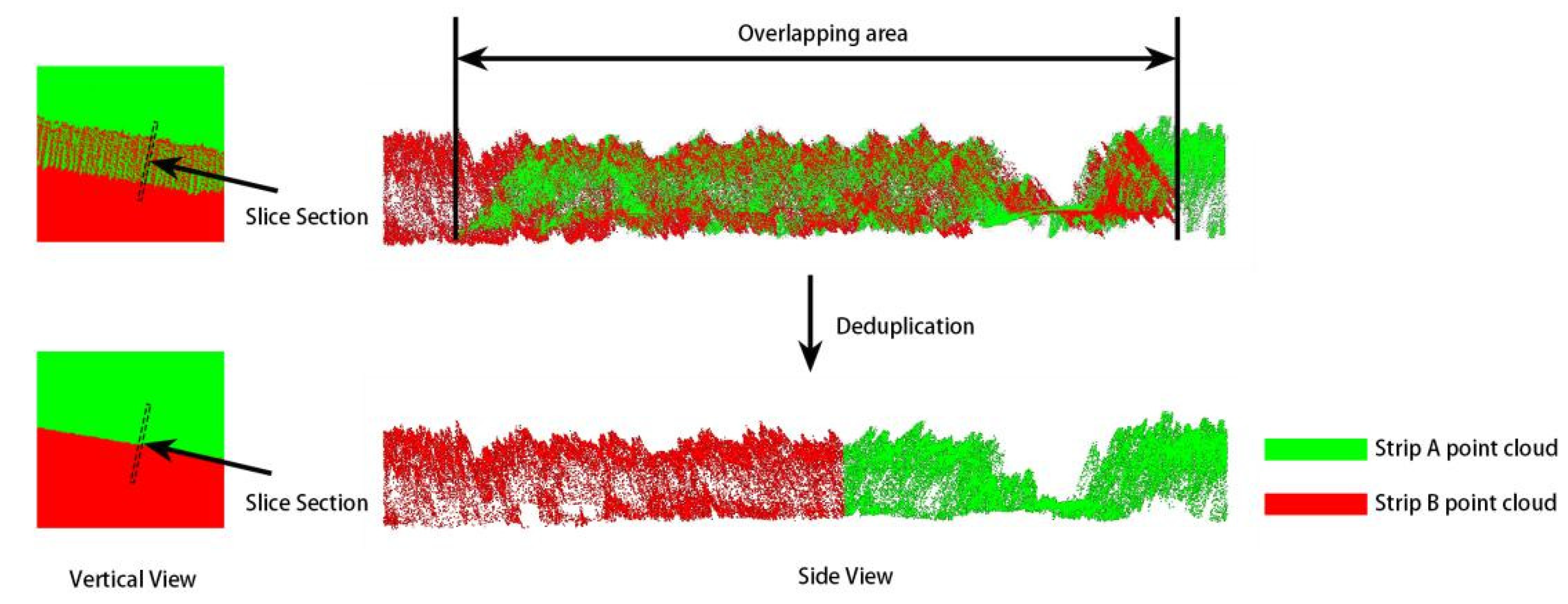

2.2.2. Point Cloud Data Preprocessing

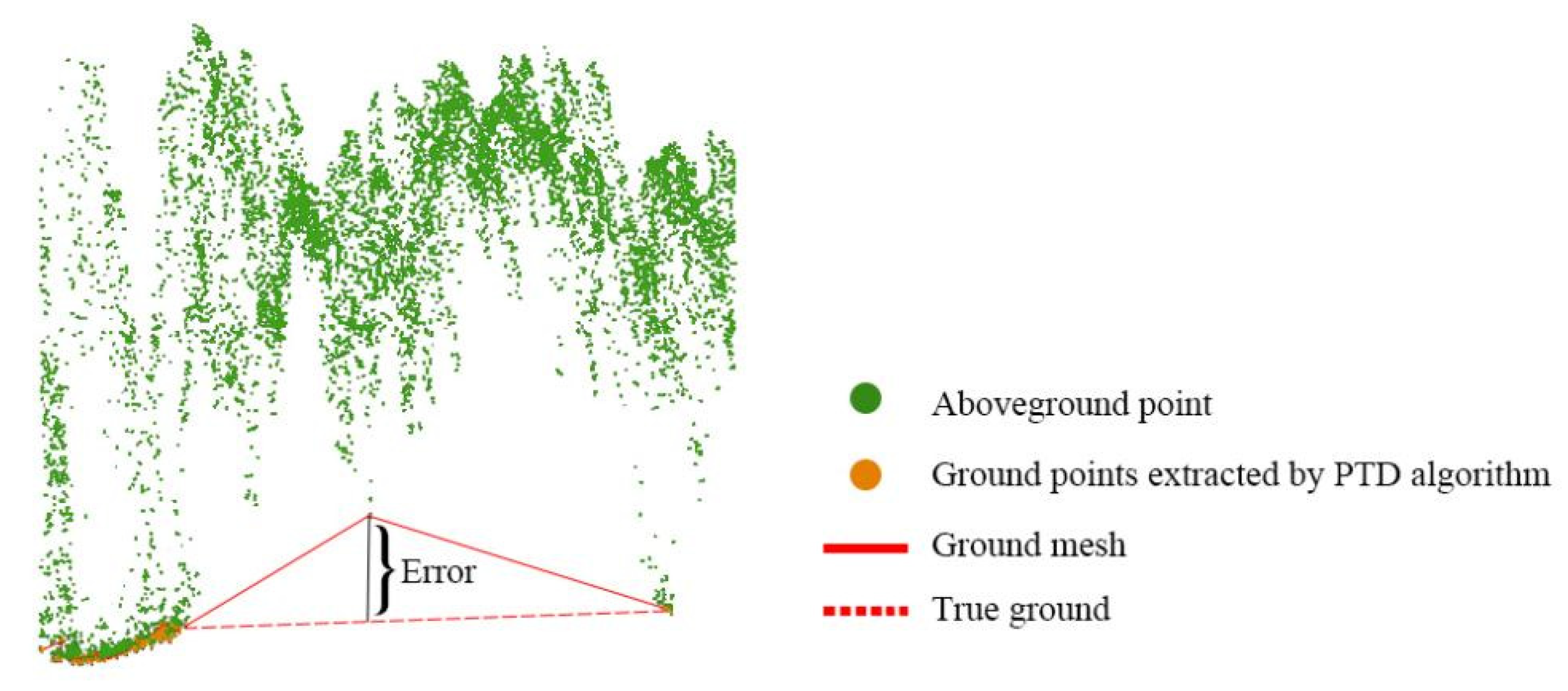

2.3. Ground Point Extraction

2.3.1. Progressive TIN Densification (PTD) Ground Filtering

- (1) Low point removal. Multipath effects in the LiDAR system or errors in the laser ranging system can produce points whose elevation is much lower than that of the surrounding points. If these low points are not removed, seed selection based on the lowest-elevation assumption will include them among the ground seed points and introduce errors into the subsequent filtering;

- (2) Ground seed point selection. Define a bounding box that encloses the point cloud and divide it into a grid of n rows and m columns. Following the principle of lowest local elevation, the lowest point in each grid cell is taken as a ground seed point, so the seeds are evenly distributed over the original point cloud; the remaining points are the points to be classified. n and m are obtained from Formula (1), where x and y are the length and width of the bounding box, and b_max is set to the ridge width in this experiment;

- (3) Initial TIN construction. Build the initial triangulated irregular network (TIN) from the ground seed points selected in (2) (Figure 6);

- (4) Iterative densification. Ground points are selected from the points to be classified and added to the TIN iteratively. For each point to be classified, find the TIN triangle that contains its projection onto the xoy plane and calculate the slope of that triangle. If the slope exceeds the maximum slope α1, the point is judged using its mirror point (Figure 7a) and receives the same classification as the mirror point. To obtain the mirror point, find the vertex Ph(xm, ym, zm) with the highest elevation in the triangle containing the point P to be judged; the coordinates of the mirror point of P are then calculated by Formula (2);
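Steps (1), (2) and (4) above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The low-point rule (`radius`, `max_drop`) is a hypothetical stand-in for whatever outlier test was actually used; seed cells are taken to be b_max × b_max squares indexed from the bounding-box origin, because Formula (1) is not reproduced in this section; and the mirror point is assumed to be P reflected horizontally through the highest vertex Ph with its elevation unchanged, a common reading of Axelsson's mirror rule rather than a transcription of Formula (2). TIN construction (step (3)) and the distance and angle tests of the full iteration are omitted; in practice a Delaunay triangulation library would supply the triangles. All function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z)
Triangle = Tuple[Point, Point, Point]


def remove_low_points(points: List[Point], radius: float, max_drop: float) -> List[Point]:
    """Step (1), hypothetical rule: drop a point lying more than max_drop below
    the mean elevation of its xy-neighbours within radius (brute force, O(n^2))."""
    kept = []
    for i, (x, y, z) in enumerate(points):
        nbr_z = [q[2] for j, q in enumerate(points)
                 if j != i and (q[0] - x) ** 2 + (q[1] - y) ** 2 <= radius ** 2]
        if nbr_z and sum(nbr_z) / len(nbr_z) - z > max_drop:
            continue  # far below its surroundings: treated as a multipath/ranging outlier
        kept.append((x, y, z))
    return kept


def select_seeds(points: List[Point], b_max: float) -> Tuple[List[Point], List[Point]]:
    """Step (2): the lowest point in each b_max x b_max grid cell becomes a ground
    seed; all other points are candidates still to be classified."""
    x0 = min(p[0] for p in points)
    y0 = min(p[1] for p in points)
    lowest: Dict[Tuple[int, int], int] = {}  # (row, col) -> index of lowest point so far
    for i, (x, y, z) in enumerate(points):
        cell = (int((y - y0) // b_max), int((x - x0) // b_max))
        if cell not in lowest or z < points[lowest[cell]][2]:
            lowest[cell] = i
    seed_idx = set(lowest.values())
    seeds = [points[i] for i in sorted(seed_idx)]
    candidates = [p for i, p in enumerate(points) if i not in seed_idx]
    return seeds, candidates


def contains_xy(tri: Triangle, p: Point) -> bool:
    """True if the xoy projection of p falls inside (or on an edge of) the triangle."""
    def side(a: Point, b: Point) -> float:
        # z-component of (b - a) x (p - a): its sign tells which side of edge ab p is on
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])
    d = (side(tri[0], tri[1]), side(tri[1], tri[2]), side(tri[2], tri[0]))
    return not (min(d) < 0 and max(d) > 0)


def slope_deg(tri: Triangle) -> float:
    """Slope of the triangle's plane, in degrees from horizontal."""
    a, b, c = tri
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx  # plane normal
    return math.degrees(math.atan2(math.hypot(nx, ny), abs(nz)))


def point_to_judge(p: Point, tri: Triangle, alpha1_deg: float) -> Point:
    """Step (4): return the point whose classification P should share.

    If the containing triangle is no steeper than alpha1, P is judged directly.
    Otherwise P is replaced by its mirror point, ASSUMED here to be P reflected
    horizontally through the highest vertex Ph(xm, ym, zm) with z unchanged."""
    if slope_deg(tri) <= alpha1_deg:
        return p
    xm, ym, _ = max(tri, key=lambda v: v[2])
    return (2 * xm - p[0], 2 * ym - p[1], p[2])
```

Brute-force neighbour search keeps the sketch dependency-free; for real field point clouds a spatial index (k-d tree or grid) would replace it.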

2.3.2. Cloth Simulation Filter (CSF) Ground Filtering

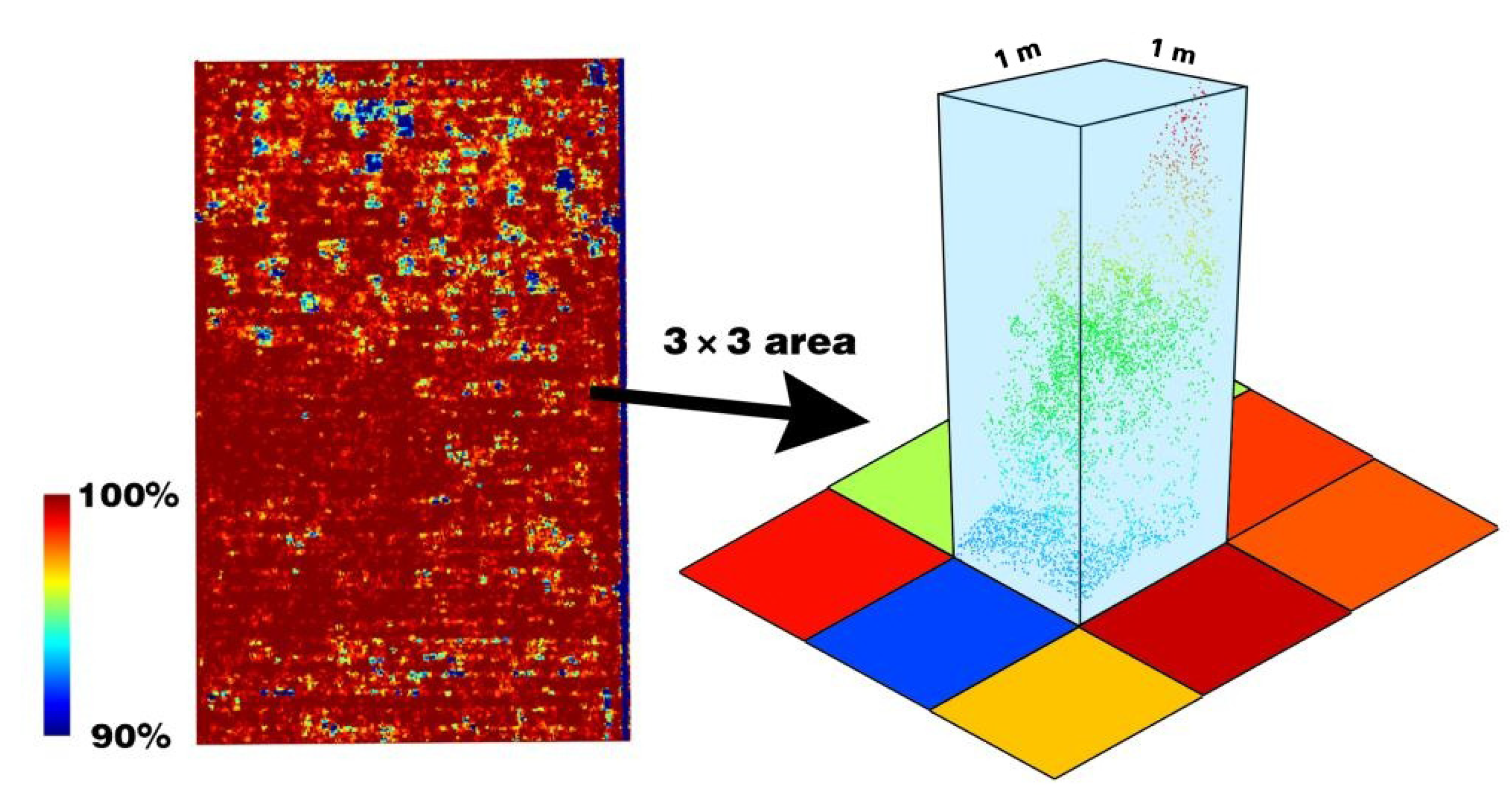

2.4. Compensation of Canopy Laser Interception Rate

2.5. Evaluation Index

3. Results

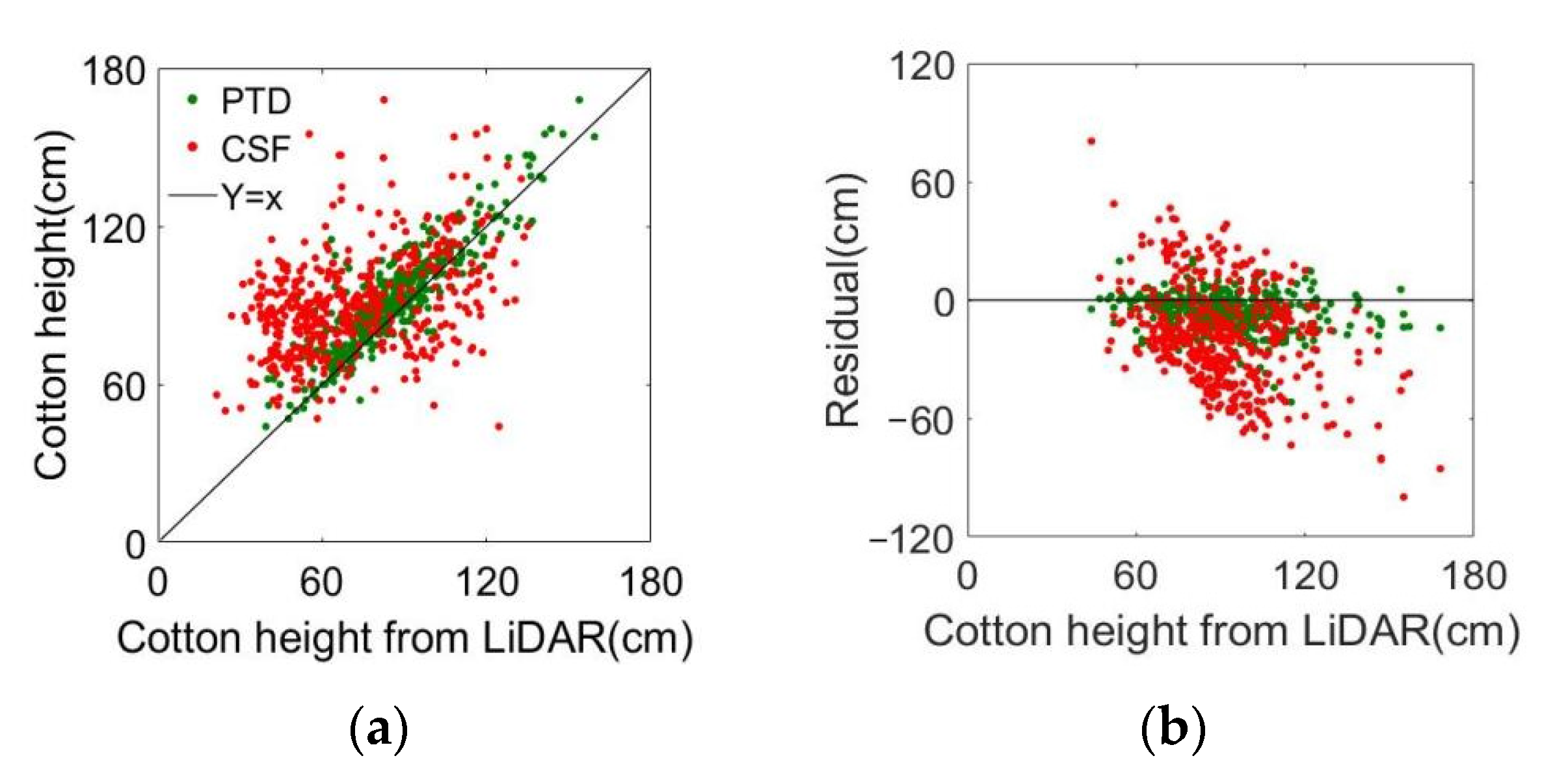

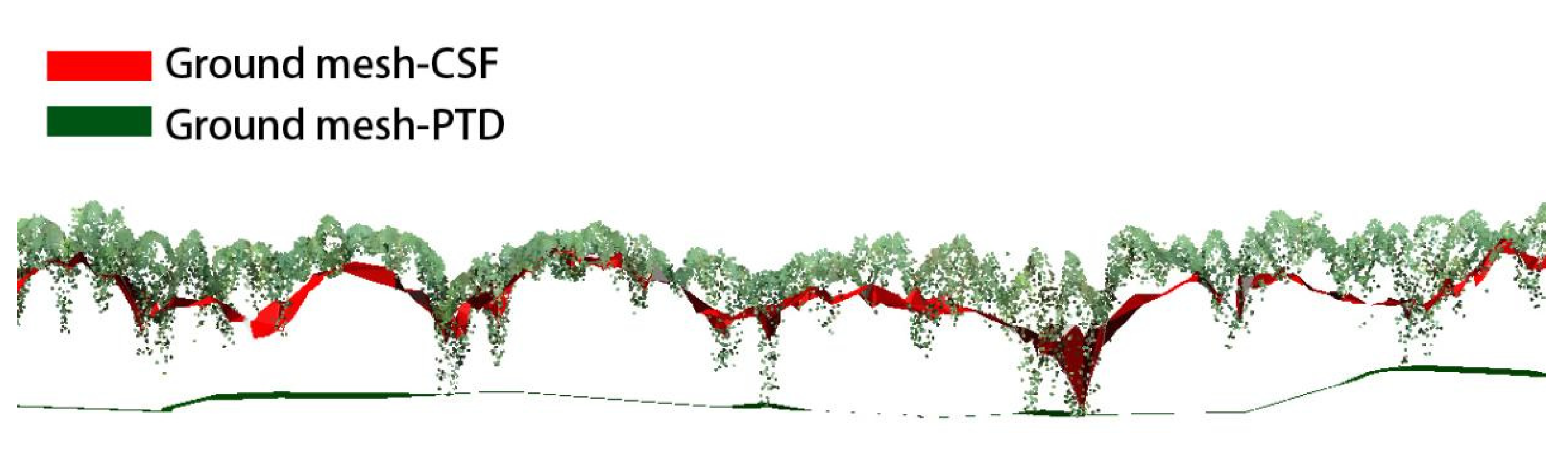

3.1. Influence of Ground Filtering Algorithm

3.2. Effect of Canopy Density

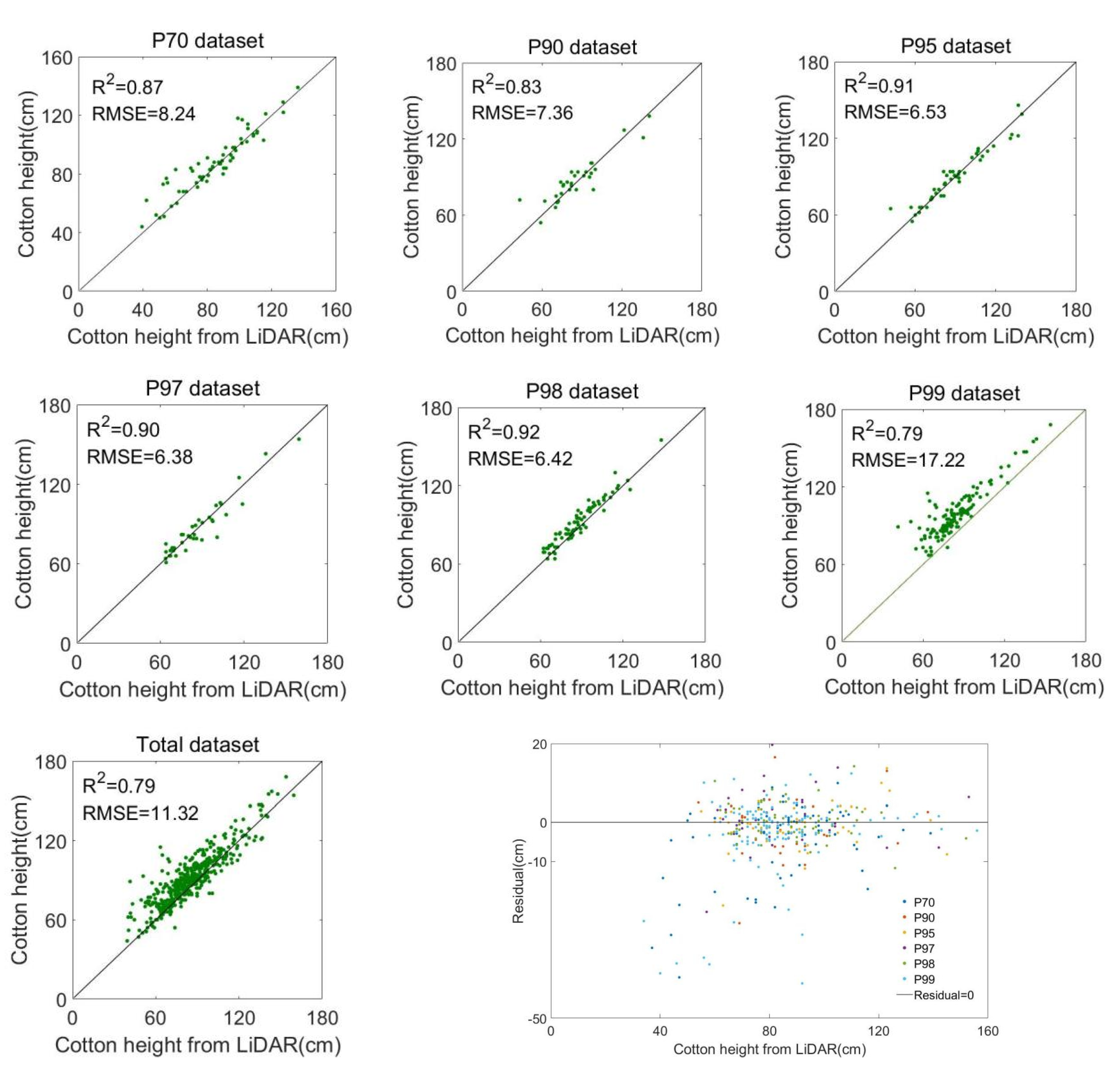

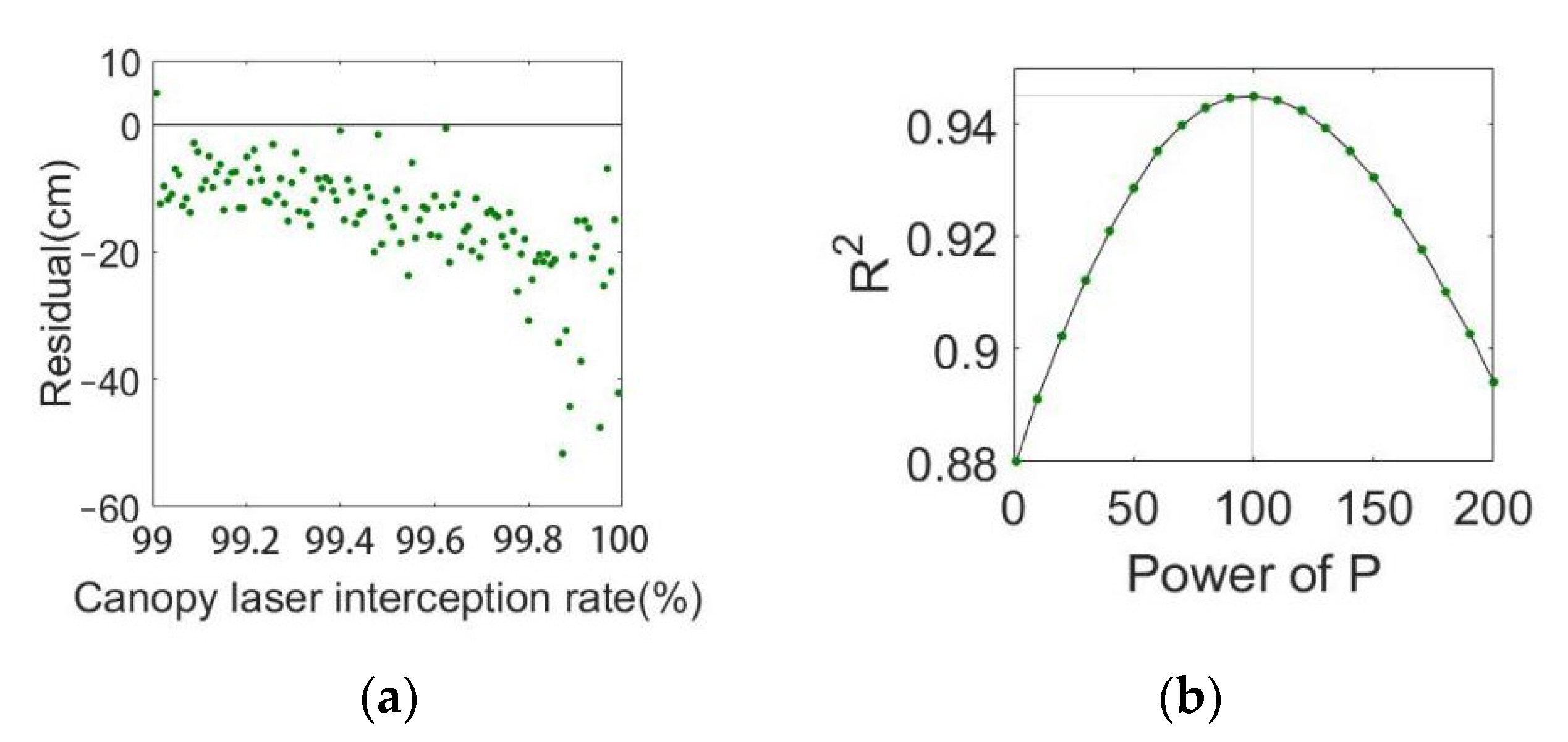

3.3. Determination of Compensation Function f(α)

3.4. Verification and Visualization

4. Discussion

4.1. Estimation of Canopy Height Based on Airborne LiDAR

4.2. Application Prospect

5. Conclusions

- (1)

- The effect of the PTD ground filtering algorithm (R2 = 0.79) is significantly better than that of CSF (R2 = 0.29). The preliminary inference is that the “tip” formed by the laser penetrating the canopy can easily pass through the gaps between the fabric particles, resulting in the result obtained by the CSF filtering being the lower surface of the canopy rather than the ground;

- (2)

- When the canopy interception rate is greater than 99%, the estimation accuracy of LiDAR decreases sharply (R2 = 0.79, RMSE = 17.22 cm), and there is an overall trend of smaller; this is due to the lack of ground points in the sampling area, which leads to the high generated ground mesh, thus affecting the estimation accuracy;

- (3)

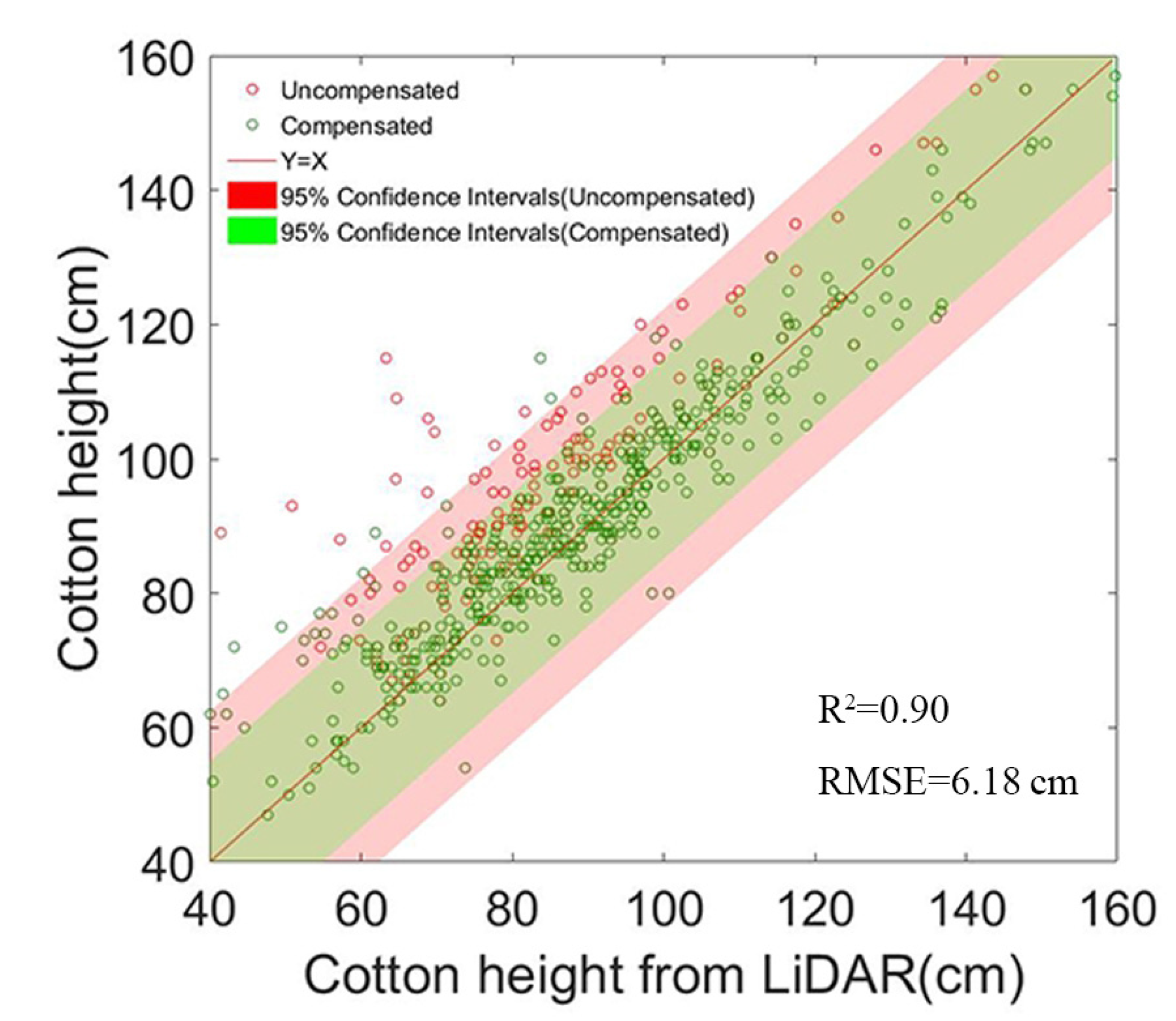

- It is not appropriate to use a globally unified compensation coefficient, which will lead to negative optimization in the area with a low canopy interception rate and increase the estimation error. Therefore, this study designs the compensation function according to the classification of canopy laser interception rate. Compared with the estimation accuracy before compensation, the overall R2 and RMSE reach 0.90 and 6.18 cm, and the results before compensation are greatly improved (R2 increase by 13.92%, RMSE decrease by 45.41%).
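The percentages quoted above are relative changes with respect to the uncompensated values. A minimal sketch of the evaluation metrics follows, assuming R² is the coefficient of determination (the definition in Section 2.5 is not reproduced here, and some studies instead report the squared correlation of a fitted line) and that growth and reduction rates are taken relative to the pre-compensation value. Function names are hypothetical.

```python
import math
from typing import Sequence


def r2(measured: Sequence[float], estimated: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition of R²)."""
    mean_m = sum(measured) / len(measured)
    ss_res = sum((m - e) ** 2 for m, e in zip(measured, estimated))
    ss_tot = sum((m - mean_m) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


def rmse(measured: Sequence[float], estimated: Sequence[float]) -> float:
    """Root-mean-square error, in the units of the inputs (cm here)."""
    return math.sqrt(sum((m - e) ** 2 for m, e in zip(measured, estimated)) / len(measured))


def r2_growth_rate(before: float, after: float) -> float:
    """Relative R² increase after compensation, in percent."""
    return (after - before) / before * 100.0


def rmse_reduction_rate(before: float, after: float) -> float:
    """Relative RMSE decrease after compensation, in percent (negative = RMSE grew)."""
    return (before - after) / before * 100.0


# Overall R²: 0.79 (PTD, conclusion (1)) -> 0.90 after compensation.
print(f"{r2_growth_rate(0.79, 0.90):.2f}")        # 13.92
# P99 RMSE: 17.22 cm (conclusion (2)) -> 6.46 cm (P99 row of the results table).
print(f"{rmse_reduction_rate(17.22, 6.46):.2f}")  # 62.49
```

Taking the PTD result as the uncompensated baseline reproduces the reported 13.92% R² increase, and the P99 figures reproduce the 62.49% RMSE reduction listed for that class, which supports reading the rates as relative changes.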

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| ZENMUSE L1 | |
|---|---|
| LiDAR | |
| Ranging accuracy | 3 cm at 100 m |
| Maximum number of echoes supported | 3 |
| FOV | Repetitive scanning: 70.4° × 4.5°; non-repetitive scanning: 70.4° × 77.2° |
| Data acquisition rate | Single echo: 240,000 points/s; multi-echo: 480,000 points/s |
| IMU (Inertial Measurement Unit) | |
| Refresh rate | 200 Hz |
| Heading accuracy; pitch accuracy | 0.15°; 0.025° |
| RGB Camera | |
| CMOS sensor resolution | 5472 × 3648 |
| Focal length | 8.8 mm (24 mm full-frame equivalent) |
| Aperture | f/2.8–f/11 |

| Dataset | Sample size | Mean H (cm) | Std H (cm) | Mean P (%) | Std P (%) |
|---|---|---|---|---|---|
| Total | 440 | 84.28 | 20.61 | 88 | 18.20 |
| P70 (70–90%) | 62 | 85.63 | 22.82 | 81 | 5.65 |
| P90 (90–95%) | 32 | 86.94 | 17.82 | 92 | 1.37 |
| P95 (95–97%) | 43 | 90.49 | 21.43 | 95 | 0.59 |
| P97 (97–98%) | 37 | 84.76 | 21.27 | 97 | 0.29 |
| P98 (98–99%) | 71 | 87.38 | 17.21 | 98 | 0.29 |
| P99 (99–100%) | 124 | 84.25 | 19.18 | 99 | 0.30 |

| Dataset | Estimation equation | R² | RMSE (cm) | R² growth rate | RMSE reduction rate |
|---|---|---|---|---|---|
| Total | H_LiDAR + 6.97 × P | 0.77 | 9.43 | −2.53% | 15.81% |
| P70 (70–90%) | H_LiDAR + 2.24 × P | 0.89 | 7.49 | 0% | −0.13% |
| P90 (90–95%) | H_LiDAR + 1.18 × P | 0.86 | 6.76 | 3.61% | 8.15% |
| P95 (95–97%) | H_LiDAR + 0.58 × P | 0.92 | 5.97 | 1.10% | 8.58% |
| P97 (97–98%) | H_LiDAR − 1.43 × P | 0.91 | 6.32 | 1.11% | 0.94% |
| P98 (98–99%) | H_LiDAR + 0.08 × P | 0.94 | 4.12 | 2.17% | 35.82% |
| P99 (99–100%) | H_LiDAR + 14.86 × P | 0.88 | 6.46 | 11.40% | 62.49% |
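The class-wise equations in the table above amount to a piecewise lookup on the canopy laser interception rate P. The sketch below applies them under two assumptions the table does not state: P is entered as a fraction in [0, 1] (entered as a percentage, the P99 term alone would add more than 14 m to plants averaging about 85 cm), and each class is lower-inclusive and upper-exclusive, with the last class also covering P = 1. Plots with P below 70% fall outside every listed class, so the function returns `None` rather than guessing. The names `CLASS_COEFFS`, `compensate`, and `compensate_global` are hypothetical.

```python
from typing import List, Optional, Tuple

# (lower bound, upper bound, k) for each interception-rate class, copied from the
# table above. ASSUMPTION: P is a fraction in [0, 1]; classes are lower-inclusive /
# upper-exclusive, and the last class also includes P = 1.
CLASS_COEFFS: List[Tuple[float, float, float]] = [
    (0.70, 0.90, 2.24),   # P70
    (0.90, 0.95, 1.18),   # P90
    (0.95, 0.97, 0.58),   # P95
    (0.97, 0.98, -1.43),  # P97
    (0.98, 0.99, 0.08),   # P98
    (0.99, 1.00, 14.86),  # P99
]
GLOBAL_K = 6.97  # single coefficient from the "Total" row, for comparison


def compensate(h_lidar_cm: float, p: float) -> Optional[float]:
    """Class-wise compensation H = H_LiDAR + k * P; None if P is below every class."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("P must be a fraction in [0, 1]")
    for lower, upper, k in CLASS_COEFFS:
        if lower <= p < upper or (upper == 1.0 and p == 1.0):
            return h_lidar_cm + k * p
    return None  # P < 0.70: the table gives no class-specific equation


def compensate_global(h_lidar_cm: float, p: float) -> float:
    """Globally unified compensation, H = H_LiDAR + 6.97 * P."""
    return h_lidar_cm + GLOBAL_K * p
```

For a plot at P = 0.85, the class-wise rule adds about 1.9 cm while the global rule adds about 5.9 cm, which illustrates how a single coefficient can over-correct low-interception areas.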


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, W.; Yang, W.; Wu, J.; Chen, P.; Lan, Y.; Zhang, L. Canopy Laser Interception Compensation Mechanism—UAV LiDAR Precise Monitoring Method for Cotton Height. Agronomy 2023, 13, 2584. https://doi.org/10.3390/agronomy13102584
