Real-Time Detection System of Broken Corn Kernels Based on BCK-YOLOv7

Abstract

1. Introduction

2. Materials and Methods

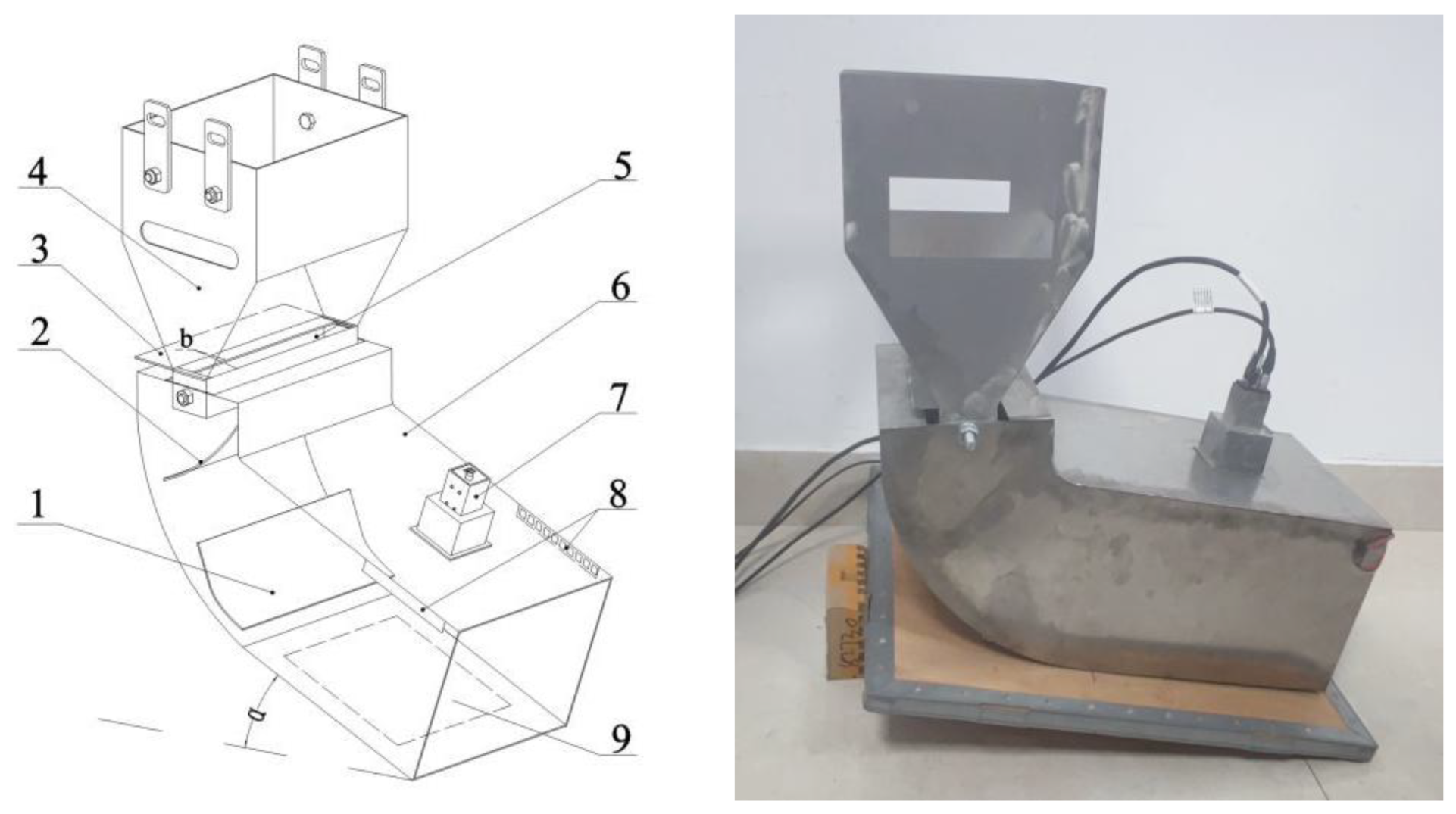

2.1. Corn Kernels Image Acquisition Device

2.2. Datasets Preparation

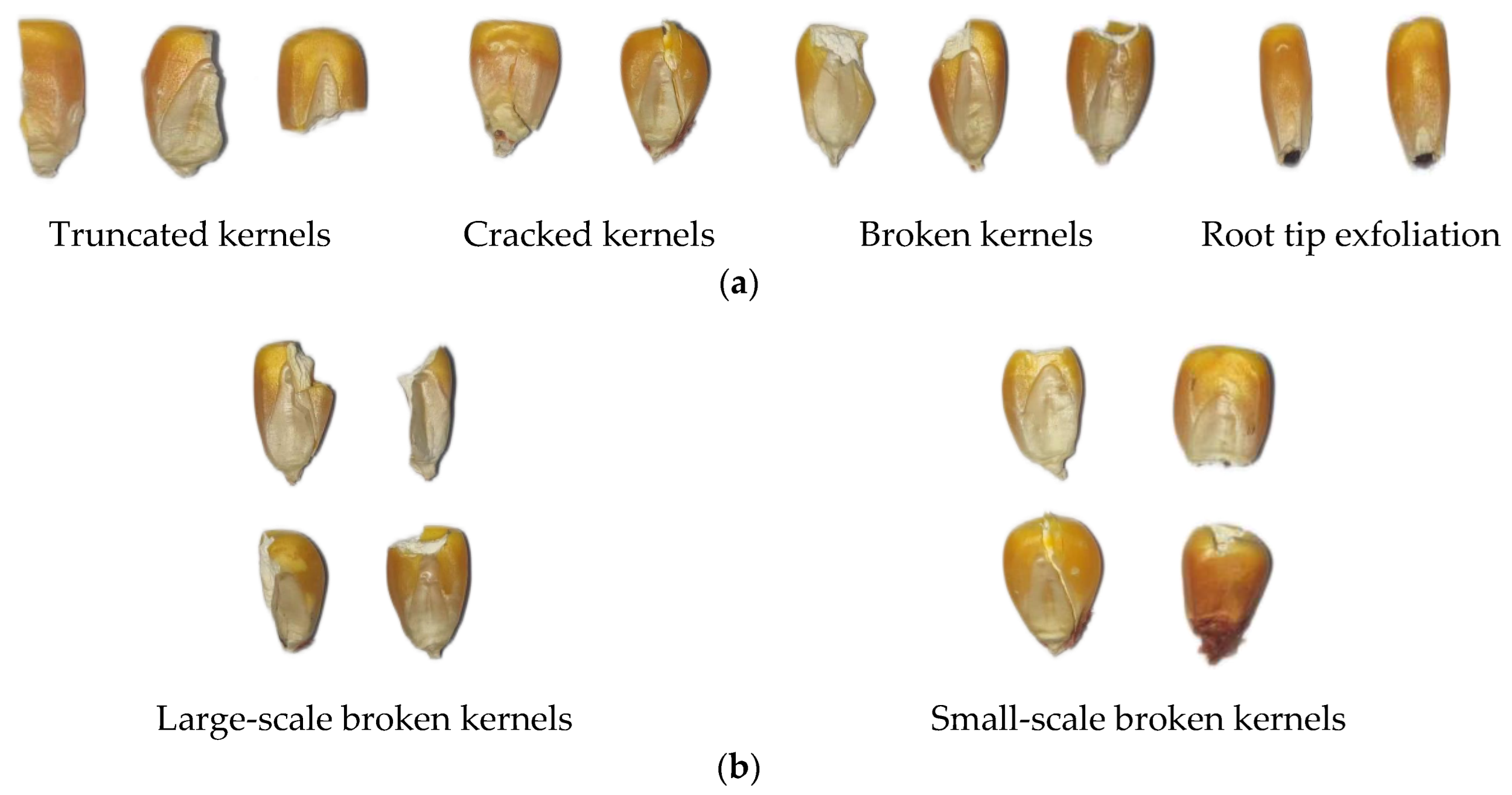

2.2.1. Broken Corn Kernel Definition

2.2.2. Corn Kernel Datasets

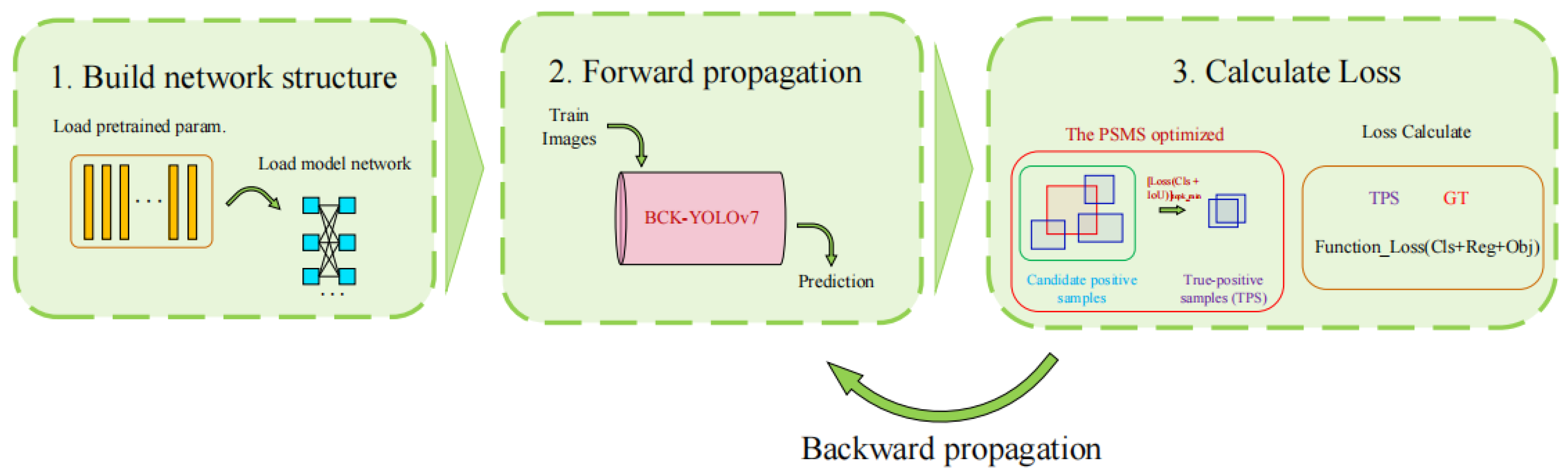

2.3. Broken Corn Kernel Detection Model

2.3.1. Baseline Model Determination

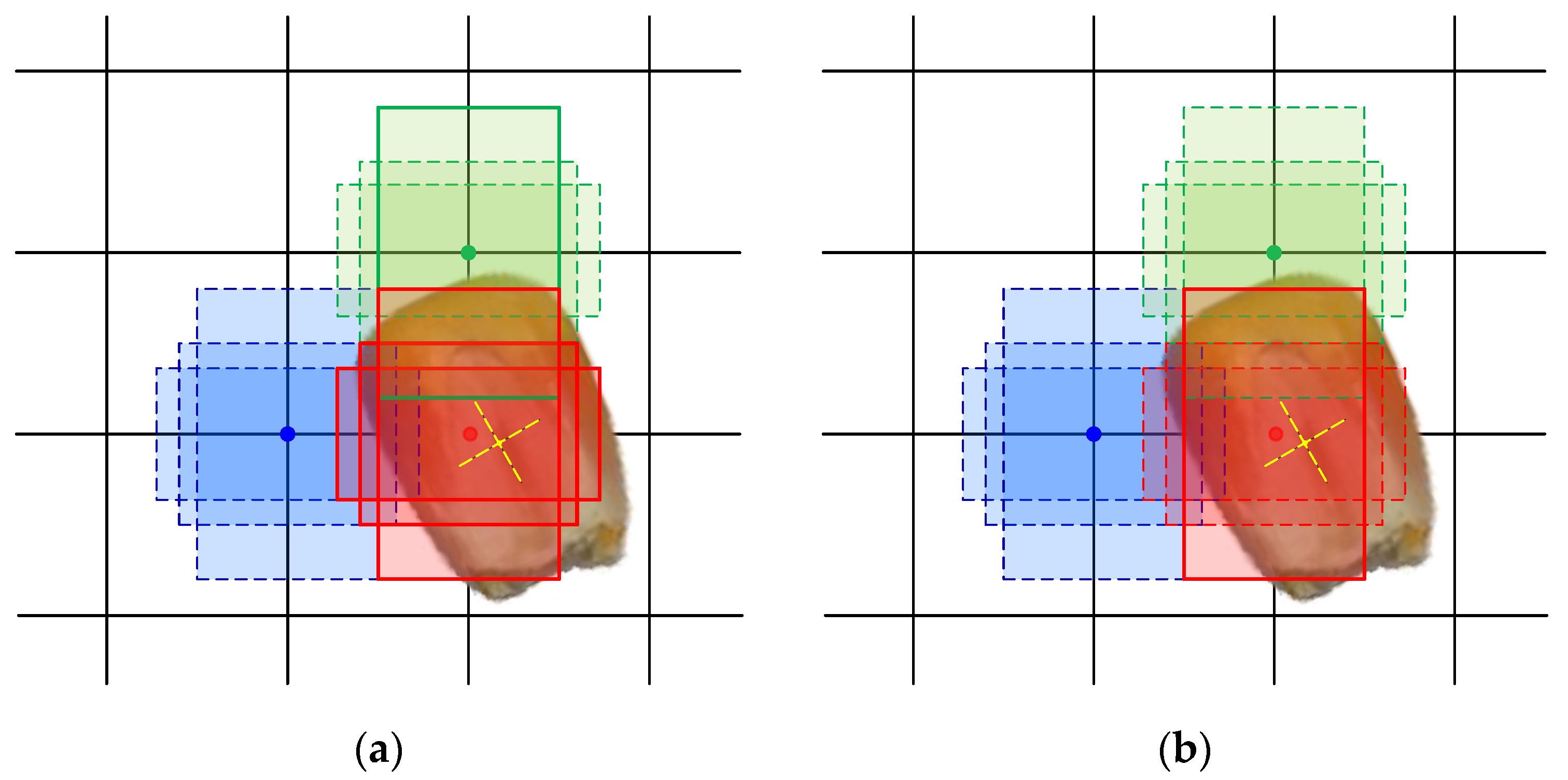

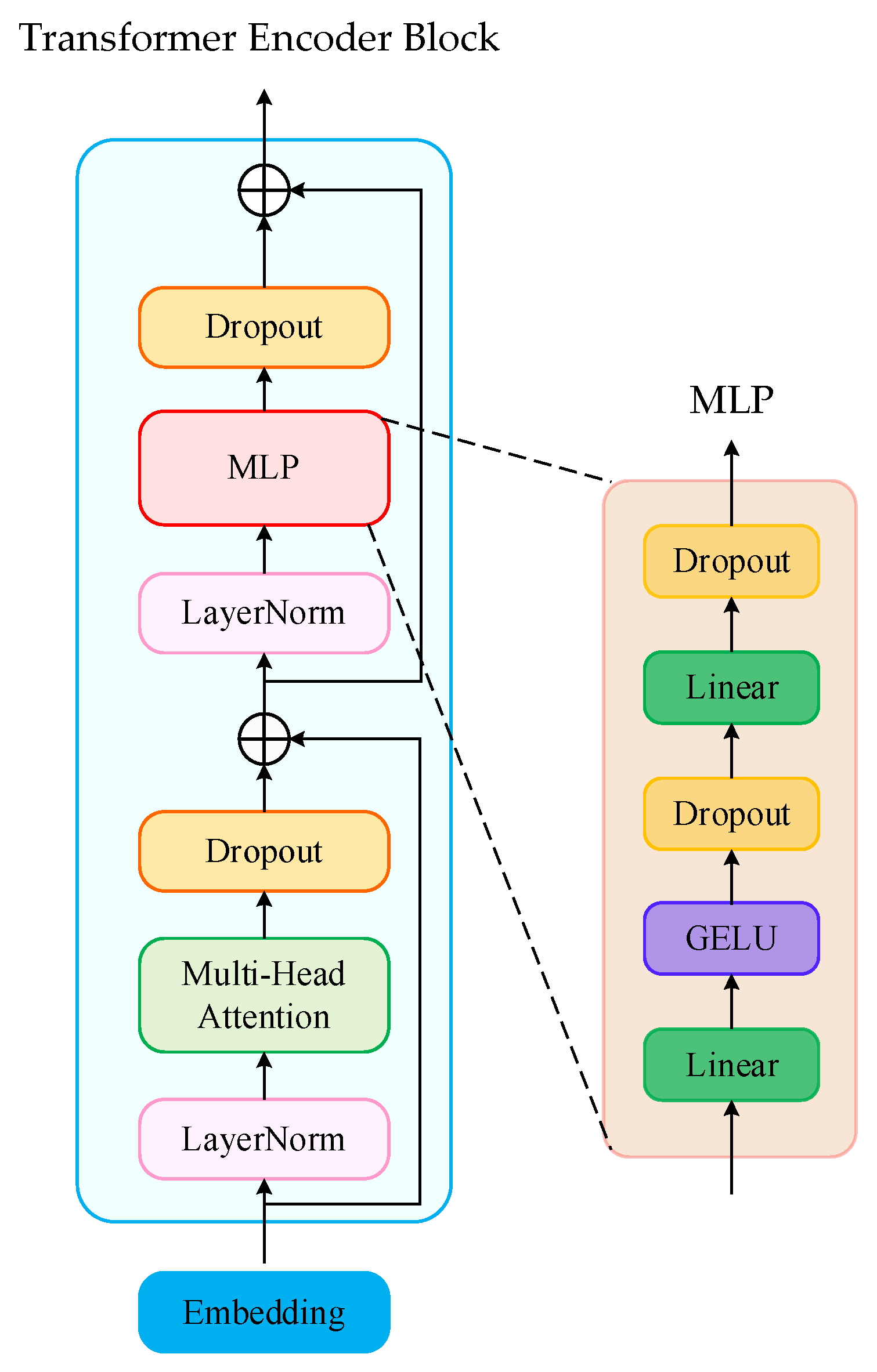

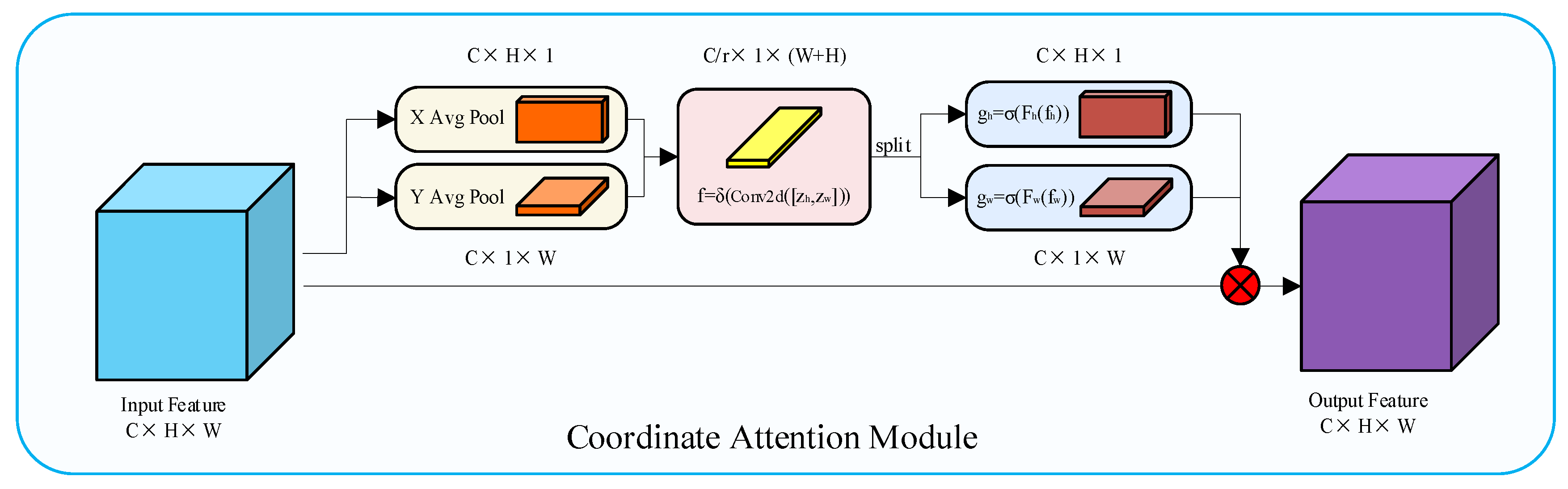

2.3.2. BCK-YOLOv7

2.4. Evaluation Metrics

2.5. Edge Deployment of BCK-YOLOv7 Model

3. Results and Discussions

3.1. Model Training Parameters and Environment Configuration

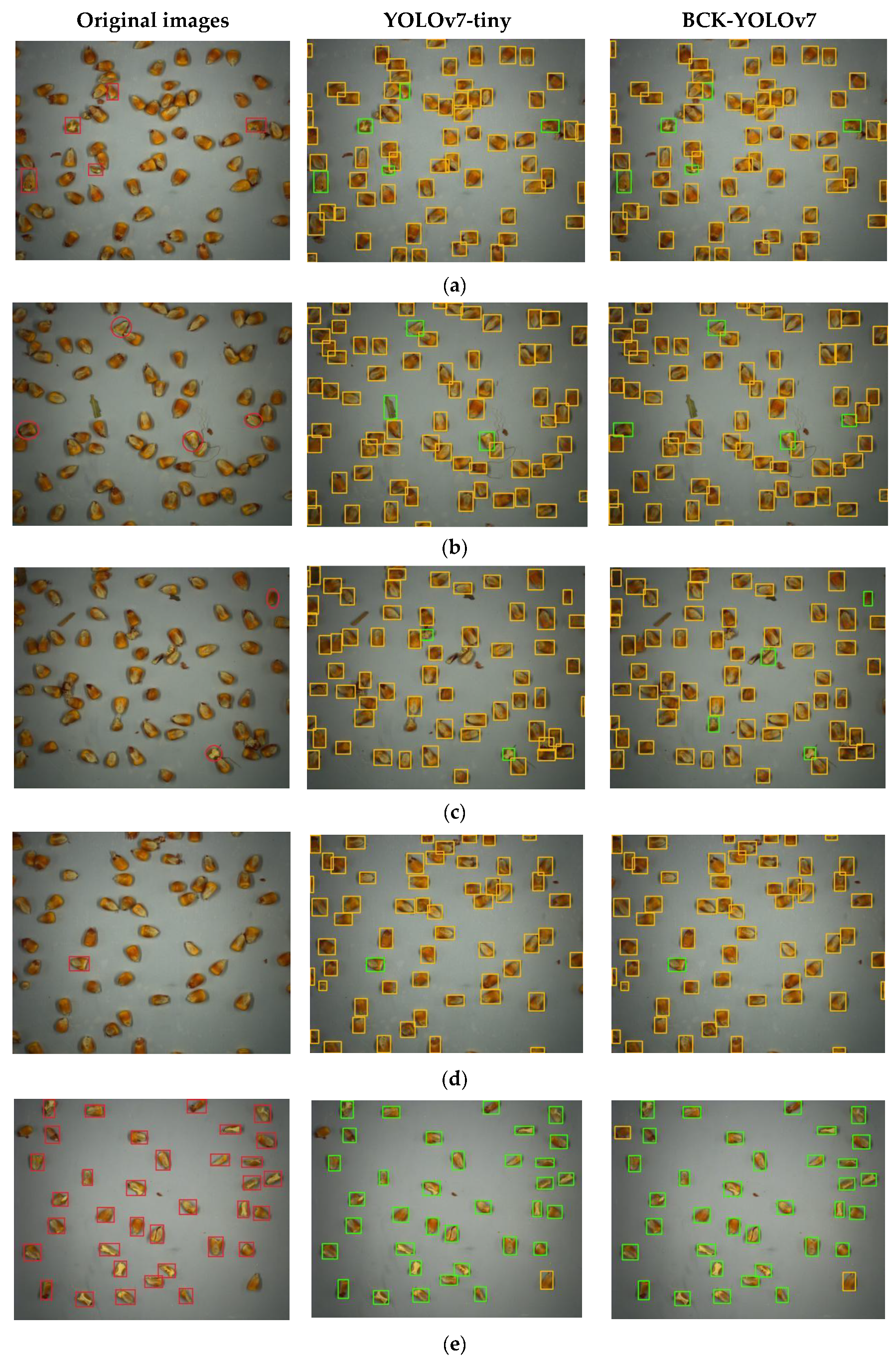

3.2. Broken Corn Kernel Detection Results

3.3. Ablation Experiments

3.4. Model Deployment

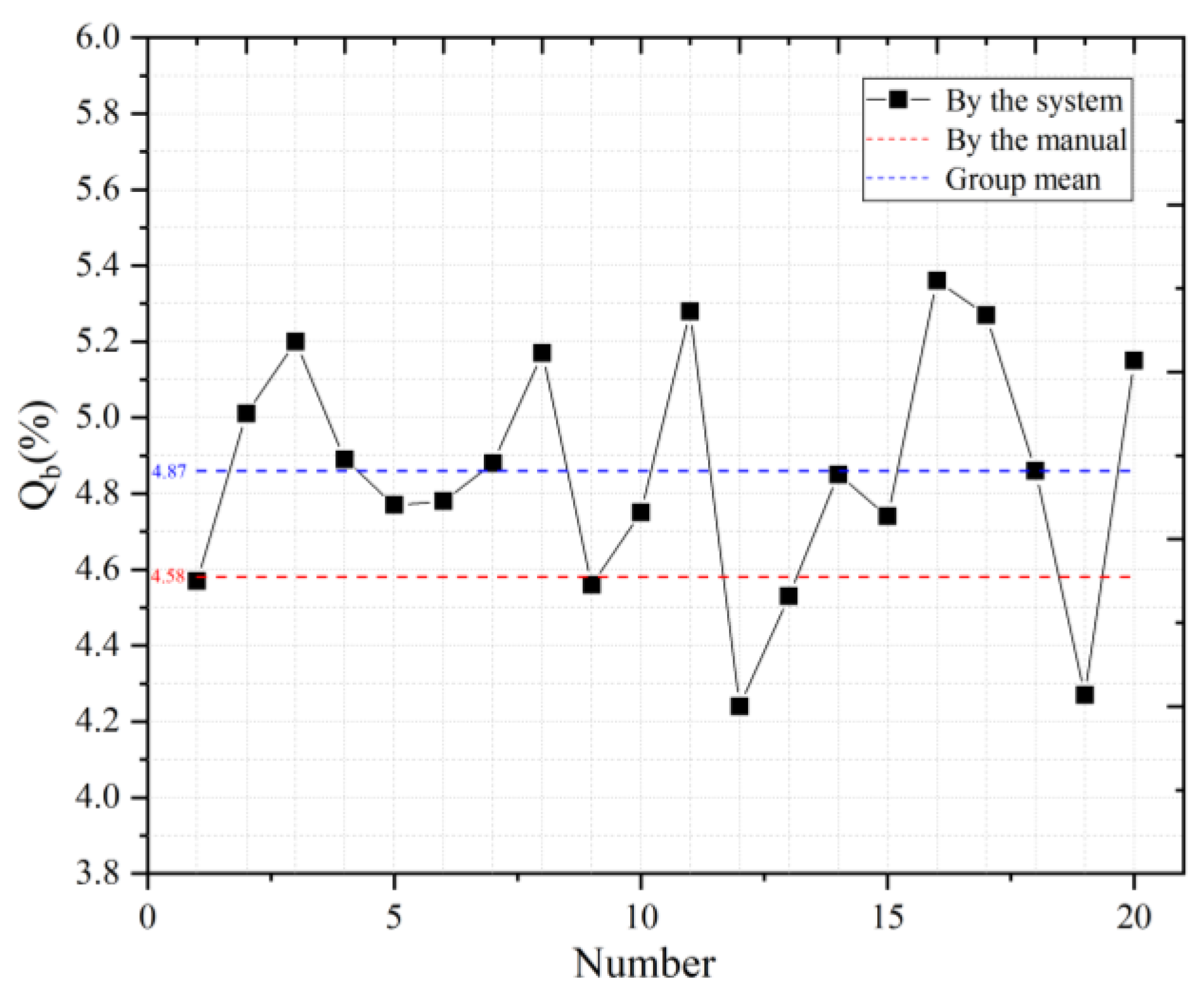

3.5. System Experiment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Images | Whole Corn Kernel | Broken Corn Kernel |
|---|---|---|---|
| Train | 263 | 14,797 | 1503 |
| Validation | 88 | 4892 | 512 |
| Test | 87 | 4821 | 505 |
| Total | 438 | 24,510 | 2520 |

| Model | Feature Descriptions | mAP |
|---|---|---|
| YOLOv3 | Multi-scale predictions help with small targets, but feature extraction ability is limited. | 89.8% |
| YOLOv4 | FPN + PANet structure extracts more features, at the cost of more computation. | 92.7% |
| YOLOv5 | Adds further training tricks to YOLOv4; the overall design is broadly similar. | 93.5% |
| YOLOv7 | ELAN module extracts more features, and the REP module speeds up prediction at deployment. | 96.3% |

| Evaluation Metrics | Formula | Short Description |
|---|---|---|
| Precision (P) | $P = \frac{TP}{TP + FP}$ | TP and FP are the numbers of correctly and incorrectly predicted positive samples, respectively. |
| Recall (R) | $R = \frac{TP}{TP + FN}$ | FN is the number of positive samples that the model incorrectly predicts as negative. |
| Average Precision (AP) | $AP = \int_0^1 P(R)\,dR$ | AP is the area under the precision-recall curve for a single category. |
| Mean Average Precision (mAP) | $mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$ | $AP_i$ is the average precision of the model for category $i$, and $N$ is the number of categories. mAP is the mean AP across all classes in the dataset and is the most widely used metric in object detection. |
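
The four metrics in the table above can be sketched in a few lines of Python. This is a minimal illustration, not the evaluation code used in the paper: the function names and the sample counts are hypothetical, and AP is approximated here with the trapezoidal rule over a handful of precision-recall points, whereas YOLO toolchains typically use an interpolated curve.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    """P = TP / (TP + FP); returns 0.0 when no positive predictions exist."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """R = TP / (TP + FN); returns 0.0 when no positive samples exist."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Approximate the area under the P-R curve with the trapezoidal rule.

    Assumes `recalls` is sorted in ascending order and both sequences
    have the same length.
    """
    area = 0.0
    for i in range(1, len(recalls)):
        width = recalls[i] - recalls[i - 1]
        height = (precisions[i] + precisions[i - 1]) / 2
        area += width * height
    return area


def mean_average_precision(aps: Sequence[float]) -> float:
    """mAP = (1 / N) * sum of AP_i over the N categories."""
    return sum(aps) / len(aps)


# Hypothetical counts for one class, for illustration only.
p = precision(tp=90, fp=10)
r = recall(tp=90, fn=30)

# Hypothetical P-R points for two classes (e.g. whole and broken kernels).
ap_whole = average_precision([0.0, 0.5, 1.0], [1.0, 1.0, 0.5])
ap_broken = average_precision([0.0, 0.5, 1.0], [1.0, 0.5, 0.5])
m_ap = mean_average_precision([ap_whole, ap_broken])
```

With these made-up inputs, precision is 0.9, recall is 0.75, and the two per-class AP values average to an mAP of 0.75.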

| Models | Precision (%) | Recall (%) | mAP (%) | Param. (M) |
|---|---|---|---|---|
| YOLOv7-tiny | 93.2 | 93.2 | 96.3 | 6.02 |
| YOLOv7-tiny + Tuning | 96.2 (↑3.0) | 97.1 (↑3.9) | 98.1 (↑1.8) | 6.02 (=) |
| YOLOv7-tiny (Previous + TBE) * | 97.0 (↑0.8) | 95.6 (↓1.5) | 98.7 (↑0.6) | 12.79 (↑6.77) |
| YOLOv7-tiny (Previous + CA) * | 96.9 (↓0.1) | 97.5 (↑1.9) | 99.1 (↑0.4) | 12.80 (↑0.01) |
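
The arrows in the ablation table are stepwise: each row is compared with the row directly above it, not with the YOLOv7-tiny baseline. The short sketch below reproduces those differences from the tabulated values. The variable and function names are illustrative only.

```python
# (label, precision %, recall %, mAP %, parameters in millions), copied from the table.
ablation_rows = [
    ("YOLOv7-tiny", 93.2, 93.2, 96.3, 6.02),
    ("+ Tuning", 96.2, 97.1, 98.1, 6.02),
    ("+ TBE", 97.0, 95.6, 98.7, 12.79),
    ("+ CA", 96.9, 97.5, 99.1, 12.80),
]


def stepwise_deltas(rows):
    """Return each row's change relative to the previous row, rounded to 2 decimals."""
    deltas = []
    for prev, cur in zip(rows, rows[1:]):
        diff = tuple(round(c - p, 2) for p, c in zip(prev[1:], cur[1:]))
        deltas.append((cur[0],) + diff)
    return deltas


deltas = stepwise_deltas(ablation_rows)
for label, d_p, d_r, d_map, d_param in deltas:
    print(f"{label}: P {d_p:+.1f}, R {d_r:+.1f}, mAP {d_map:+.1f}, params {d_param:+.2f} M")
```

Summing the mAP column of the result gives the overall gain from the baseline to the final model, 2.8 percentage points (96.3% to 99.1%).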

| Metric | Raw | FP32 Mode | FP16 Mode | INT8 |
|---|---|---|---|---|
| Prediction accuracy (%) | 99.1 | 98.4 | 97.8 | 95.2 |
| Prediction speed (FPS) | 18 | 23 | 33 | 36 |
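
The deployment table describes a speed-versus-accuracy trade-off. The sketch below quantifies it, assuming the four value columns correspond to the raw model and the FP32, FP16, and INT8 modes in that order. The names used are illustrative and not taken from the paper.

```python
# mode -> (prediction accuracy %, prediction speed in FPS), copied from the table.
deployment_modes = {
    "Raw": (99.1, 18),
    "FP32": (98.4, 23),
    "FP16": (97.8, 33),
    "INT8": (95.2, 36),
}


def tradeoff(modes, baseline="Raw"):
    """Return {mode: (speed-up factor, accuracy drop in points)} versus the baseline."""
    base_acc, base_fps = modes[baseline]
    return {
        name: (round(fps / base_fps, 2), round(base_acc - acc, 1))
        for name, (acc, fps) in modes.items()
        if name != baseline
    }


summary = tradeoff(deployment_modes)
for name, (speedup, drop) in summary.items():
    print(f"{name}: {speedup}x faster, {drop} points less accurate")
```

On these figures, FP16 mode runs about 1.83 times faster than the raw model for a 1.3-point accuracy loss, while INT8 reaches 2.0 times the speed at a cost of 3.9 points.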

| Group | Number | Qb by System (%) | Group Mean (%) | Qb by Man (%) | Absolute Deviation (%) | Detect. Time (ms per Image) |
|---|---|---|---|---|---|---|
| 1 | 0 | 4.17 | 4.57 | 4.58 | 0.01 | 143 |
| | 1 | 5.61 | | | | 69 |
| | 2 | 5.88 | | | | 39 |
| | 3 | 3.67 | | | | 38 |
| | 4 | 3.45 | | | | 33 |
| 2 | 5 | 6.47 | 5.31 | 4.58 | 0.73 | 33 |
| | 6 | 5.19 | | | | 33 |
| | 7 | 4.76 | | | | 33 |
| | 8 | 5.27 | | | | 33 |
| | 9 | 4.86 | | | | |
| ··· | ··· | ··· | | | | |
| 20 | 95 | 6.23 | 5.15 | 4.58 | 0.57 | 33 |
| | 96 | 5.21 | | | | 33 |
| | 97 | 5.10 | | | | 33 |
| | 98 | 4.21 | | | | 33 |
| | 99 | 4.99 | | | | 33 |
| Mean | — | 4.87 | | 4.58 | 0.35 | — |
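
The group mean and absolute deviation columns can be recomputed from the per-image values. The sketch below assumes that Qb is the broken kernel rate, that each group mean is the arithmetic mean of its five per-image system readings, and that the absolute deviation is the difference between that rounded mean and the manual value. The function name is hypothetical.

```python
def breakage_summary(system_values, manual_value):
    """Return (group mean, absolute deviation from the manual value), both in %."""
    group_mean = round(sum(system_values) / len(system_values), 2)
    deviation = round(abs(group_mean - manual_value), 2)
    return group_mean, deviation


QB_BY_MAN = 4.58  # manual measurement from the table, in %

# Per-image system readings for groups 2 and 20, copied from the table.
group_2 = [6.47, 5.19, 4.76, 5.27, 4.86]
group_20 = [6.23, 5.21, 5.10, 4.21, 4.99]

mean_2, dev_2 = breakage_summary(group_2, QB_BY_MAN)
mean_20, dev_20 = breakage_summary(group_20, QB_BY_MAN)
print(f"Group 2: mean {mean_2}%, deviation {dev_2}%")
print(f"Group 20: mean {mean_20}%, deviation {dev_20}%")
```

Both groups reproduce the tabulated values (5.31% and 0.73% for group 2, 5.15% and 0.57% for group 20). The same calculation on the five listed values for group 1 gives 4.56% rather than the tabulated 4.57%, which may simply reflect rounding of the per-image readings.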

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Q.; Yang, H.; He, Q.; Yue, D.; Zhang, C.; Geng, D. Real-Time Detection System of Broken Corn Kernels Based on BCK-YOLOv7. Agronomy 2023, 13, 1750. https://doi.org/10.3390/agronomy13071750
