Monitoring of Heracleum sosnowskyi Manden Using UAV Multisensors: Case Study in Moscow Region, Russia

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

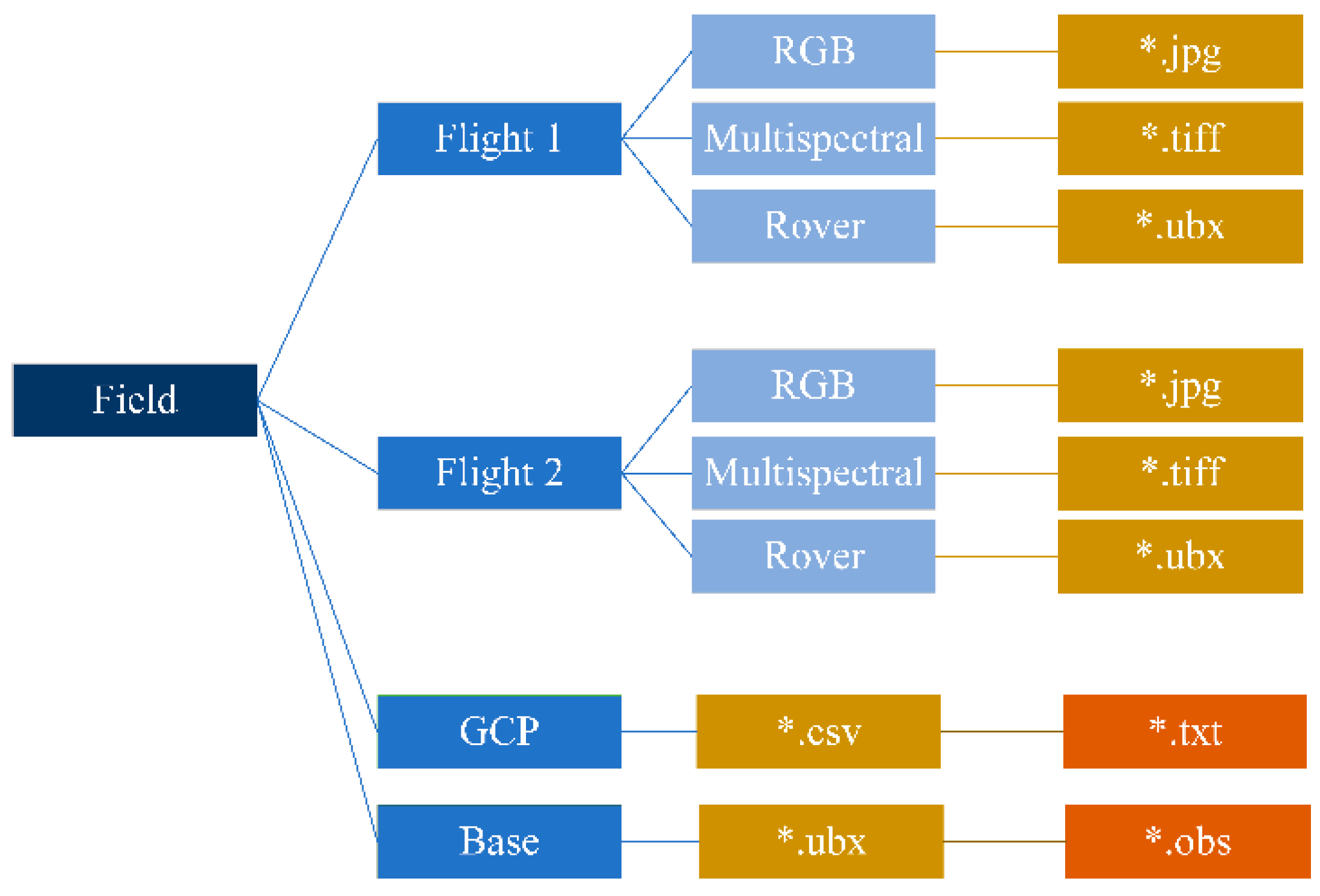

2.2. UAV Image Acquisition

2.3. Data Preparation and Processing

2.4. Assessment of Project Accuracy

2.5. UAVs’ Spectral and Derived Vegetation Indices

2.6. Collecting Ground Control Points

2.7. Statistical Analysis

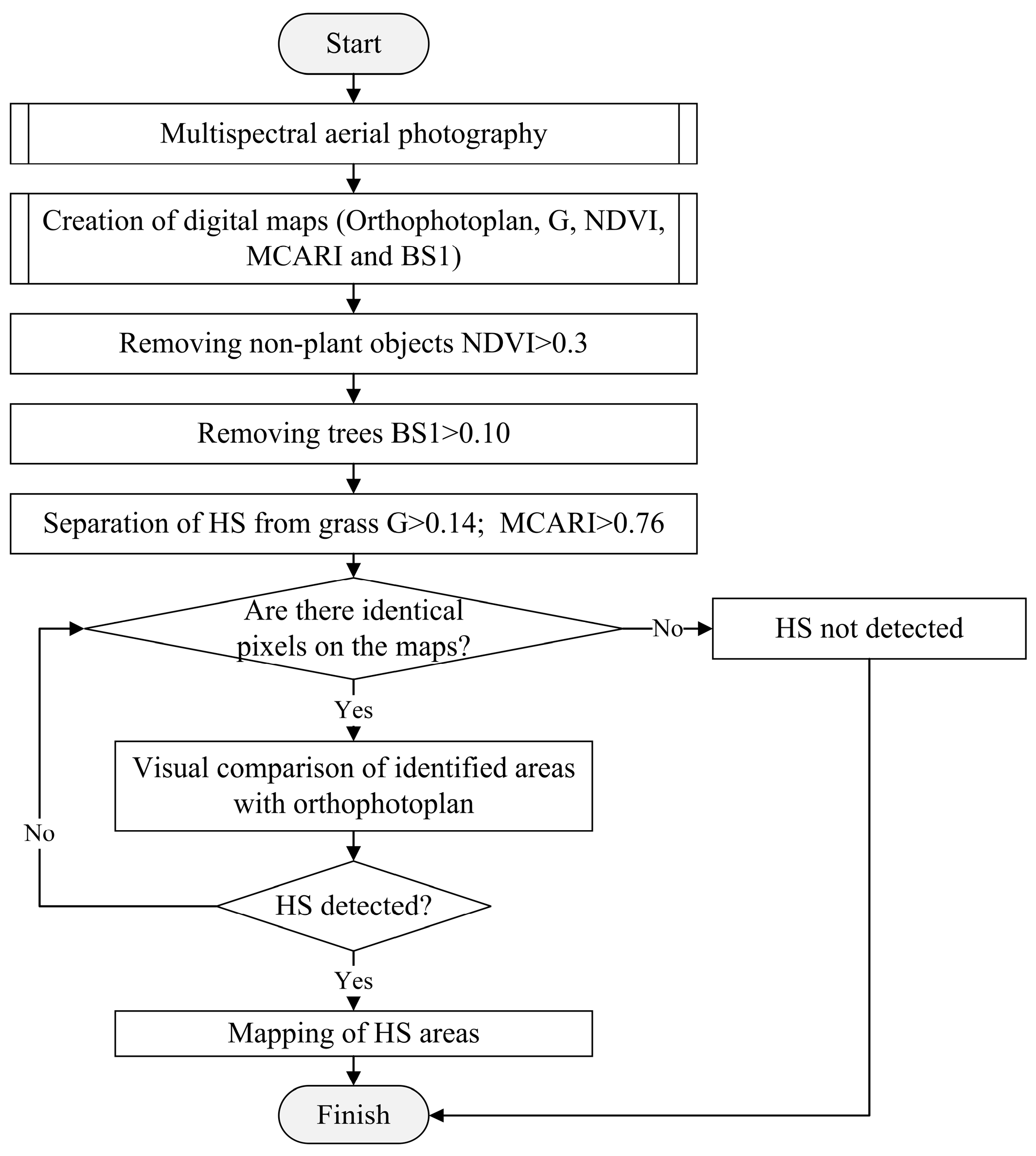

2.8. Developed Algorithm for Identification of Sosnowsky’s Hogweed

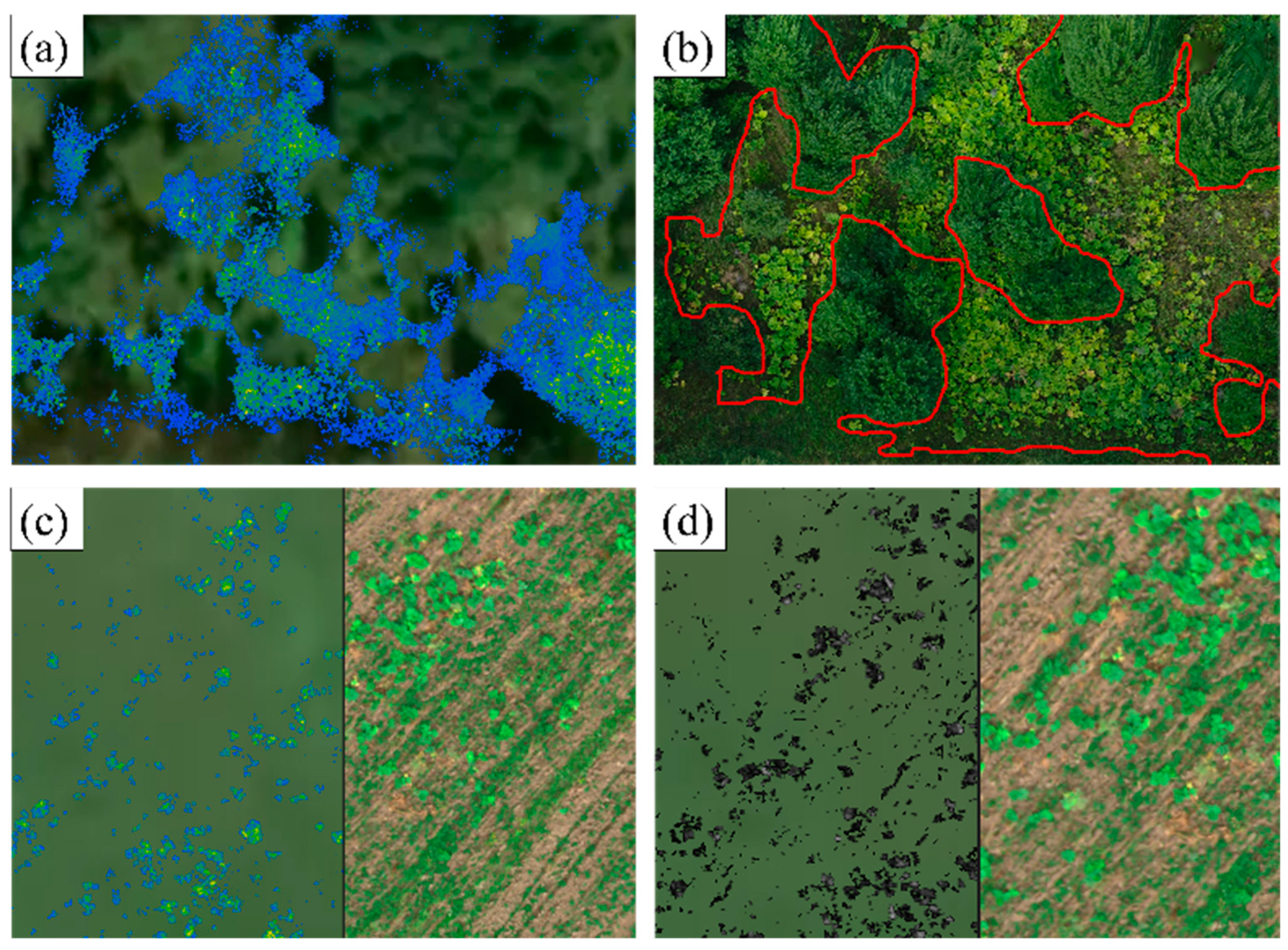

3. Results

3.1. Spectral Analysis of Sosnowsky’s Hogweed

3.2. Statistical Analysis of the Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Abramova, L.M.; Golovanov, Y.M.; Rogozhnikova, D.R. Sosnowsky’s Hogweed (Heracleum sosnowskyi Manden., Apiaceae) in Bashkortostan. Russ. J. Biol. Invasions 2021, 12, 127–135.

- Bogdanov, V.; Osipov, A.; Garmanov, V.; Efimova, G.; Grik, A.; Zavarin, B.; Terleev, V.; Nikonorov, A. Problems and monitoring the spread of the ecologically dangerous plant Heracleum sosnowskyi in urbanized areas and methods to combat it. E3S Web Conf. 2021, 258, 08028.

- Lobachevskiy, Y.P.; Beylis, V.M.; Tsench, Y.S. Digitization aspects of the system of technologies and machines. Elektrotekhnologii I Elektrooborud. V APK 2019, N3, 40–45.

- Mazitov, N.K.; Shogenov, Y.K.; Tsench, Y.S. Agricultural machinery: Solutions and prospects. Vestn. Viesh. 2018, N3, 94–100.

- Tsench, Y.; Maslov, G.; Trubilin, E. To the history of agricultural machinery development. Vestn. Bsau. 2018, 3, 117–123.

- Lobachevskiy, Y.P.; Tsench, Y.S.; Beylis, V.M. Creation and development of systems for machines and technologies for the complex mechanization of technological processes in crop production. Hist. Sci. Eng. 2019, 12, 46–55.

- Grygus, I.; Lyko, S.; Stasiuk, M.; Zubkovych, I.; Zukow, W. Risks posed by Heracleum sosnowskyi Manden in the Rivne region. Ecol. Quest. 2018, 29, 35–42.

- Grzedzicka, E. Invasion of the giant hogweed and the Sosnowsky’s Hogweed as a multidisciplinary problem with unknown future—A review. Earth 2022, 3, 287–312.

- Chadin, I.; Dalke, I.; Zakhozhiy, I.; Malyshev, R.; Madi, E.; Kuzivanova, O.; Kirillov, D.; Elsakov, V. Distribution of the invasive plant species Heracleum sosnowskyi Manden. in the Komi Republic (Russia). PhytoKeys 2017, 77, 71–80.

- Kondrat’ev, M.N.; Budarin, S.N.; Larikova, Y.S. Physiological and ecological mechanisms of invasive penetration of Sosnowskyi hogweed (Heracleum sosnowskyi Manden.) in unexploitable agroecosystems. Izv. Timiryazev Agric. Acad. 2015, 2, 36–49.

- Sitzia, T.; Campagnaro, T.; Kowarik, I.; Trentanovi, G. Using forest management to control invasive alien species: Helping implement the new European regulation on invasive alien species. Biol. Invasions 2016, 18, 1–7.

- Lozano, V.; Marzialetti, F.; Carranza, M.L.; Chapman, D.; Branquart, E.; Dološ, K.; Große-Stoltenberg, A.; Fiori, M.; Capece, P.; Brundu, G. Modelling Acacia saligna invasion in a large Mediterranean island using PAB factors: A tool for implementing the European legislation on invasive species. Ecol. Indic. 2020, 116, 106516.

- Chmielewski, J.; Pobereżny, J.; Florek-Łuszczki, M.; Żeber-Dzikowska, I.; Szpringer, M. Sosnowsky’s hogweed—Current environmental problem. Environ. Prot. Nat. Resour. 2017, 28, 40–44.

- Ryzhikov, D.M. Heracleum sosnowskyi growth area control by multispectral satellite data. Inf. Control. Syst. 2017, 6, 43–51.

- Duncan, P.; Podest, E.; Esler, K.J.; Geerts, S.; Lyons, C. Mapping invasive Herbaceous plant species with Sentinel-2 satellite imagery: Echium plantagineum in a Mediterranean shrubland as a case study. Geomatics 2023, 3, 328–344.

- Newete, S.W.; Mayonde, S.; Kekana, T.; Adam, E. A rapid and accurate method of mapping invasive Tamarix genotypes using Sentinel-2 images. PeerJ 2023, 11, e15027.

- Duarte, L.; Castro, J.P.; Sousa, J.J.; Pádua, L. GIS application to detect invasive species in aquatic ecosystems. In Proceedings of the IGARSS 2022—2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 6013–6018.

- Grigoriev, A.N.; Ryzhikov, D.M. General methodology and results of spectroradiometric research of reflective properties of the Heracleum sosnowskyi in the range 320–1100 nm for Earth remote sensing. Mod. Probl. Remote Sens. Space 2018, 15, 43–51.

- Lachuga, Y.F.; Izmaylov, A.Y.; Lobachevsky, Y.P.; Shogenov, Y.K. The results of scientific research of agro-engineering scientific organizations on the development of digital systems in agriculture. Mach. Equip. Rural. Area 2022, 4, 2–6.

- Alvarez-Taboada, F.; Paredes, C.; Julián-Pelaz, J. Mapping of the invasive Species Hakea sericea using unmanned aerial vehicle (UAV) and WorldView-2 imagery and an object-oriented approach. Remote Sens. 2017, 9, 913.

- Wang, X.; Wang, L.; Tian, J.; Shi, C. Object-based spectral-phenological features for mapping invasive Spartina alterniflora. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102349.

- Nowak, M.M.; Dziób, K.; Bogawski, P. Unmanned aerial vehicles (UAVs) in environmental biology: A review. Eur. J. Ecol. 2018, 4, 56–74.

- Michez, A.; Piégay, H.; Jonathan, L.; Claessens, H.; Lejeune, P. Mapping of riparian invasive species with supervised classification of unmanned aerials system (UAS) imagery. Int. J. Appl. Earth Obs. Geoinf. 2016, 44, 88–94.

- Müllerová, J.; Brůna, J.; Bartaloš, T.; Dvořák, P.; Vítková, M.; Pyšek, P. Timing is important: Unmanned aircraft vs. satellite imagery in plant invasion monitoring. Front. Plant Sci. 2017, 8, 887.

- Menshchikov, A.; Shadrin, D.; Prutyanov, V.; Lopatkin, D.; Sosnin, S.; Tsykunov, E.; Iakovlev, E.; Somov, A. Real-time detection of hogweed: UAV platform empowered by deep learning. IEEE Trans. Comput. 2021, 70, 1175–1188.

- Koshelev, I.; Savinov, M.; Menshchikov, A.; Somov, A. Drone-aided detection of weeds: Transfer learning for embedded image processing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 102–111.

- Müllerová, J.; Bartaloš, T.; Brůna, J.; Dvořák, P.; Vítková, M. Unmanned aircraft in nature conservation: An example from plant invasions. Int. J. Remote Sens. 2017, 38, 2177–2198.

- Imanni, H.S.E.; Harti, A.E.; Bachaoui, E.M.; Mouncif, H.; Eddassouqui, F.; Hasnai, M.A.; Zinelabidine, M.I. Multispectral UAV data for detection of weeds in a citrus farm using machine learning and Google Earth Engine: Case study of Morocco. Remote Sens. Appl. Soc. Environ. 2023, 30, 100941.

- Rosle, R.; Sulaiman, N.; Che′Ya, N.N.; Radzi, M.F.M.; Omar, M.H.; Berahim, Z.; Ilahi, W.F.F.; Shah, J.A.; Ismail, M.R. Weed detection in rice fields using UAV and multispectral aerial imagery. Chem. Proc. 2022, 10, 44.

- Kawamura, K.; Asai, H.; Yasuda, T.; Soisouvanh, P.; Phongchanmixay, S. Discriminating crops/weeds in an upland rice field from UAV images with the SLIC-RF algorithm. Plant Prod. Sci. 2020, 24, 198–215.

- Osorio, K.; Puerto, A.; Pedraza, C.; Jamaica, D.; Rodríguez, L. A deep learning approach for weed detection in lettuce crops using multispectral images. AgriEngineering 2020, 2, 471–488.

- Dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Weed detection in soybean crops using ConvNets. Comput. Electron. Agric. 2017, 143, 314–324.

- Su, J.; Yi, D.; Coombes, M.; Liu, C.; Zhai, X.; McDonald-Maier, K.; Chen, W.H. Spectral analysis and mapping of blackgrass weed by leveraging machine learning and UAV multispectral imagery. Comput. Electron. Agric. 2022, 192, 106621.

- Oldeland, J.; Große-Stoltenberg, A.; Naftal, L.; Strohbach, B.J. The potential of UAV derived image features for discriminating savannah tree species. In The Roles of Remote Sensing in Nature Conservation; Díaz-Delgado, R., Lucas, R., Hurford, C., Eds.; Springer: Cham, Switzerland, 2017; pp. 183–202.

- Lopatin, J.; Dolos, K.; Kattenborn, T.; Fassnacht, F.E. How canopy shadow affects invasive plant species classification in high spatial resolution remote sensing. Remote Sens. Ecol. Conserv. 2019, 5, 302–317.

- De Sá, N.C.; Castro, P.; Carvalho, S.; Marchante, E.; López-Núñez, F.A.; Marchante, H. Mapping the flowering of an invasive plant using Unmanned Aerial Vehicles: Is there potential for biocontrol monitoring? Front. Plant Sci. 2018, 9, 1–13.

- Kattenborn, T.; Lopatin, J.; Förster, M.; Braun, A.C.; Fassnacht, F.E. UAV data as alternative to field sampling to map woody invasive species based on combined Sentinel-1 and Sentinel-2 data. Remote Sens. Environ. 2019, 227, 61–73.

- Savin, I.Y.; Andronov, D.P.; Shishkonakova, E.A.; Vernyuk, Y.I. Detecting Sosnowskyi’s Hogweed (Heracleum sosnowskyi Manden.) using UAV survey data. Russ. Agric. Sci. 2021, 47, S90–S96.

- Barbedo, J.G.A. A review on the use of unmanned aerial vehicles and imaging sensors for monitoring and assessing plant stresses. Drones 2019, 3, 40.

- Hafeez, A.; Husain, M.A.; Singh, S.; Chauhan, A.; Khan, M.T.; Kumar, N.; Chauhan, A.; Soni, S. Implementation of drone technology for farm monitoring & pesticide spraying: A review. Inf. Process. Agric. 2022, 10, 192–203.

- Bzdęga, K.; Zarychta, A.; Urbisz, A.; Szporak-Wasilewska, S.; Ludynia, M.; Fojcik, B.; Tokarska-Guzik, B. Geostatistical models with the use of hyperspectral data and seasonal variation—A new approach for evaluating the risk posed by invasive plants. Ecol. Indic. 2021, 121, 107204.

- Vaz, A.S.; Alcaraz-Segura, D.; Campos, J.C.; Vicente, J.R.; Honrado, J.P. Managing plant invasions through the lens of remote sensing: A review of progress and the way forward. Sci. Total Environ. 2018, 642, 1328–1339.

- Müllerová, J.; Pergl, J.; Pyšek, P. Remote sensing as a tool for monitoring plant invasions: Testing the effects of data resolution and image classification approach on the detection of a model plant species Heracleum mantegazzianum (giant hogweed). Int. J. Appl. Earth Obs. Geoinf. 2013, 25, 55–65.

- Niphadkar, M.; Nagendra, H. Remote sensing of invasive plants: Incorporating functional traits into the picture. Int. J. Remote Sens. 2016, 37, 3074–3085.

- Royimani, L.; Mutanga, O.; Odindi, J.; Dube, T.; Matongera, T.N. Advancements in satellite remote sensing for mapping and monitoring of alien invasive plant species (AIPs). Phys. Chem. Earth Parts A/B/C 2019, 112, 237–245.

- Kurbanov, R.K.; Zakharova, N.I. Justifying the parameters for an unmanned aircraft flight mission of multispectral aerial photography. Agric. Mach. Technol. 2022, 16, 33–39.

- Kurbanov, R.K.; Zakharova, N.I.; Gorshkov, D.M. Improving the accuracy of aerial photography using ground control points. Agric. Mach. Technol. 2021, 15, 42–47.

- Ryzhikov, D.M. Control of Sosnowsky’s Hogweed Growth Zones Based on Spectral Characteristics of Reflected Waves Optical Range. Ph.D. Thesis, Saint-Petersburg State University of Aerospace Instrumentation, St. Petersburg, Russia, 2019; 221p.

- Solymosi, K.; Kövér, G.; Romvári, R. The development of vegetation indices: A short overview. ACTA Agrar. Kaposváriensis 2019, 23, 75–90.

- Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1353691.

- Rouse, J.W.; Haas, R.H., Jr.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. NASA Spec. Publ. 1974, 351, 309–317.

- Cammarano, D.; Fitzgerald, G.; Basso, B.; O’Leary, G.; Chen, D.; Grace, P.; Costanza, F. Use of the canopy chlorophyl content index (CCCI) for remote estimation of wheat nitrogen content in rainfed environments. Agron. J. 2011, 103, 1597–1603.

- Mulla, D.J. Twenty-five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. Spec. Issue Sens. Technol. Sustain. Agric. 2014, 114, 358–371.

- Wang, F.M.; Huang, J.F.; Tang, Y.L.; Wang, X.Z. New vegetation index and its application in estimating leaf area index of rice. Rice Sci. 2007, 14, 195–203.

- Strong, C.J.; Burnside, N.G.; Llewellyn, D. The potential of small-unmanned aircraft systems for the rapid detection of threatened unimproved grassland communities using an Enhanced normalized difference vegetation index. PLoS ONE 2017, 12, e0186193.

- Kang, Y.; Nam, J.; Kim, Y.; Lee, S.; Seong, D.; Jang, S.; Ryu, C. Assessment of regression models for predicting rice yield and protein content using unmanned aerial vehicle-based multispectral imagery. Remote Sens. 2021, 13, 1508.

- Clemente, A.A.; Maciel, G.M.; Siquieroli, A.C.; de Araujo Gallis, R.B.; Pereira, L.M.; Duarte, J.G. High-throughput phenotyping to detect anthocyanins, chlorophylls, and carotenoids in red lettuce germplasm. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102533.

- Rumora, L.; Majić, I.; Miler, M.; Medak, D. Spatial video remote sensing for urban vegetation mapping using vegetation indices. Urban Ecosyst. 2021, 24, 21–33.

- Morales-Gallegos, L.M.; Martínez-Trinidad, T.; Hernández-de la Rosa, P.; Gómez-Guerrero, A.; Alvarado-Rosales, D.; Saavedra-Romero, L.L. Tree health condition in urban green areas assessed through crown indicators and vegetation indices. Forests 2023, 14, 1673.

- Weinstein, B.G.; Marconi, S.; Aubry-Kientz, M.; Vincent, G.; Senyondo, H.; White, E.P. DeepForest: A Python package for RGB deep learning tree crown delineation. Methods Ecol. Evol. 2020, 11, 1743–1751.

- Weinstein, B.G.; Marconi, S.; Bohlman, S.; Zare, A.; White, E. Individual tree-crown detection in RGB imagery using semi-supervised deep learning neural networks. Remote Sens. 2019, 11, 1309.

- Ghassemian, H. A review of remote sensing image fusion methods. Inf. Fusion 2016, 32, 75–89.

- Marzialetti, F.; Frate, L.; De Simone, W.; Frattaroli, A.R.; Acosta, A.T.R.; Carranza, M.L. Unmanned aerial vehicle (UAV)-based mapping of Acacia saligna invasion in the Mediterranean coast. Remote Sens. 2021, 13, 3361.

- Hill, D.J.; Tarasoff, C.; Whitworth, G.E.; Baron, J.; Bradshaw, J.L.; Church, J.S. Utility of unmanned aerial vehicles for mapping invasive plant species: A case study on yellow flag iris (Iris pseudacorus L.). Int. J. Remote Sens. 2017, 38, 2083–2105.

- Türk, T.; Tunalioglu, N.; Erdogan, B.; Ocalan, T.; Gurturk, M. Accuracy assessment of UAV-post-processing kinematic (PPK) and UAV-traditional (with ground control points) georeferencing methods. Environ. Monit. Assess. 2022, 194, 476.

- Vinci, A.; Brigante, R.; Traini, C.; Farinelli, D. Geometrical characterization of hazelnut trees in an intensive orchard by an unmanned aerial vehicle (UAV) for precision agriculture applications. Remote Sens. 2023, 15, 541.

- Demir, S.; Dursun, I. Determining burned areas using different threshold values of NDVI with Sentinel-2 satellite images on gee platform: A case study of Muğla province. Int. J. Sustain. Eng. Technol. 2023, 2, 117–130.

- Xing, F.; An, R.; Guo, X.; Shen, X. Mapping invasive noxious weed species in the alpine grassland ecosystems using very high spatial resolution UAV hyperspectral imagery and a novel deep learning model. GIScience Remote Sens. 2023, 61, 2327146.

- Wijesingha, J.; Astor, T.; Schulze-Brüninghoff, D.; Wachendorf, M. Mapping invasive Lupinus polyphyllus Lindl. in semi-natural grasslands using object-based image analysis of UAV-borne images. J. Photogramm. Remote Sens. Geoinf. Sci. 2020, 88, 391–406.

- Daughtry, C.; Walthall, C.L.; Kim, M.S.; Brown de Colstoun, E.; McMurtrey, J.E., III. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239.

- Xu, S.; Xu, X.; Blacker, C.; Gaulton, R.; Zhu, Q.; Yang, M.; Yang, G.; Zhang, J.; Yang, Y.; Yang, M.; et al. Estimation of leaf nitrogen content in rice using vegetation indices and feature variable optimization with information fusion of multiple-sensor images from UAV. Remote Sens. 2023, 15, 854.

- Shanmugapriya, P.; Latha, K.R.; Pazhanivelan, S.; Kumaraperumal, R.; Karthikeyan, G.; Sudarmanian, N.S. Cotton yield prediction using drone-derived LAI and chlorophyll content. J. Agrometeorol. 2022, 24, 348–352.

- Parida, P.K.; Somasundaram, E.; Krishnan, R.; Radhamani, S.; Sivakumar, U.; Parameswari, E.; Raja, R.; Shri Rangasami, S.R.; Sangeetha, S.P.; Gangai Selvi, R. Unmanned aerial vehicle-measured multispectral vegetation indices for predicting LAI, SPAD chlorophyll, and yield of maize. Agriculture 2024, 14, 1110.

| | Sites Larger Than 10 ha | Sites Larger Than 5 ha | Sites Larger Than 1 ha | Sites Less Than 1 ha | In Total |
|---|---|---|---|---|---|
| Number of sites, pcs. | 7 | 8 | 30 | 101 | 146 |
| Total area, ha | 153.86 | 49.98 | 65.97 | 35.19 | 304.63 |

| Date | 12 May–12 June 2020 | 4 July 2021 | 26 June 2022 | Total |
|---|---|---|---|---|
| Area, ha | 207.17 | 45.35 | 52.48 | 305 |
| Number of sites, pcs. | 144 | 1 | 1 | 146 |
| Number of flights, pcs. | 150 | 5 | 5 | 160 |
| RGB data, GB | 31.80 | 12.90 | 15.10 | 59.8 |
| Multispectral data, GB | 236.1 | 103 | 115.6 | 454.7 |
| Location | 55°24′54.4″ N 37°14′2″ E | 56°5′18.7″ N 37°54′48.9″ E | | |

| Vegetation Index/Spectral Channel | Range/Formula | Source |
|---|---|---|
| Blue (B) | p475 ± 32 nm | MicaSense Knowledge Base https://support.micasense.com/hc/en-us/articles/360010025413-Altum-Integration-Guide (accessed on 2 April 2020) |
| Green (G) | p560 ± 27 nm | |
| Red (R) | p668 ± 16 nm | |
| Red Edge (RE) | p717 ± 12 nm | |
| Near-Infrared (NIR) | p842 ± 57 nm | |
| NDVI | | Rouse et al. (1974) [51] |
| NDRE | | Cammarano et al. (2011) [52] |
| MCARI | | Mulla (2014) [53] |
| GNDVI | | Mulla (2014) [53] |
| GBNDVI | | Wang et al. (2007) [54] |
| EVI | | Mulla (2014) [53] |
| ENDVI | | Strong et al. (2017) [55] |
| CIRedEdge (CIRE) | | Kang et al. (2021) [56] |
| CIGreen (CIG) | | Clemente et al. (2021) [57] |
| CI | | Index DataBase https://www.indexdatabase.de/db/i-single.php?id=11 (accessed on 4 April 2020) |
| BWDRVI | | Rumora et al. (2021) [58] |
| BNDVI | | Morales-Gallegos et al. (2023) [59] |
| BS1 | | Custom Index |
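As a minimal sketch, the normalized-difference and chlorophyll indices listed in the table above can be computed from per-band reflectance as follows. The formulas are the commonly published definitions and may differ in detail from the ones the authors applied; `normalized_difference`, `ratio_minus_one`, `band_indices`, and the sample reflectance values are hypothetical names and numbers used only for illustration. MCARI, GBNDVI, EVI, ENDVI, CI, BWDRVI, and BS1 are left out because their formulas do not survive in the table.

```python
import math
from typing import Dict


def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or NaN when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else math.nan


def ratio_minus_one(numerator: float, denominator: float) -> float:
    """Return numerator / denominator - 1, or NaN when the denominator is zero."""
    return numerator / denominator - 1.0 if denominator != 0 else math.nan


def band_indices(blue: float, green: float, red: float,
                 red_edge: float, nir: float) -> Dict[str, float]:
    """Compute a subset of the indices for one pixel of band reflectance.

    Assumption: textbook definitions, not necessarily the authors' exact ones.
    """
    return {
        "NDVI": normalized_difference(nir, red),        # (NIR - R) / (NIR + R)
        "NDRE": normalized_difference(nir, red_edge),   # (NIR - RE) / (NIR + RE)
        "GNDVI": normalized_difference(nir, green),     # (NIR - G) / (NIR + G)
        "BNDVI": normalized_difference(nir, blue),      # (NIR - B) / (NIR + B)
        "CIRE": ratio_minus_one(nir, red_edge),         # NIR / RE - 1
        "CIG": ratio_minus_one(nir, green),             # NIR / G - 1
    }


# Hypothetical reflectance values for a single vegetated pixel.
pixel = band_indices(blue=0.04, green=0.08, red=0.05, red_edge=0.20, nir=0.45)
for name, value in pixel.items():
    print(f"{name}: {value:.3f}")
```

The same functions can be applied element-wise to whole rasters by swapping the scalar arithmetic for an array library; the scalar form is kept here so the sketch depends only on the standard library.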

| | NDVI | B | G | R | RE | NIR |
|---|---|---|---|---|---|---|
| t HS vs. GR | 1.24 | 2.75 ** | 9.76 *** | 4.59 *** | 9.98 *** | 6.53 *** |
| t HS vs. TR | 3.98 *** | 6.90 *** | 8.69 *** | 7.49 *** | 10.91 *** | 7.43 *** |
| t GR vs. TR | 2.11 * | 1.82 | 3.20 ** | 1.44 | 0.84 | 0.63 |

| | NDRE | MCARI | GNDVI | GBNDVI | EVI | ENDVI |
|---|---|---|---|---|---|---|
| t HS vs. GR | 5.72 *** | 7.93 *** | 5.75 *** | 4.37 *** | 6.07 *** | 0.28 |
| t HS vs. TR | 7.06 *** | 7.95 *** | 6.10 *** | 5.71 *** | 6.05 *** | 2.66 ** |
| t GR vs. TR | 1.92 | 0.39 | 0.87 | 1.28 | 0.29 | 2.41 * |

| | CIRE | CIG | CI | BWDRVI | BNDVI | BS1 |
|---|---|---|---|---|---|---|
| t HS vs. GR | 5.74 *** | 6.19 *** | 1.90 | 0.32 | 0.10 | 11.60 *** |
| t HS vs. TR | 6.47 *** | 5.84 *** | 2.33 * | 0.74 | 3.08 ** | 2.88 ** |
| t GR vs. TR | 2.58 * | 2.32 * | 1.57 | 0.35 | 2.42 * | 7.47 *** |
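A pairwise t statistic of the kind tabulated above can be sketched as follows. The surviving text does not say which t-test variant the authors used, so this example assumes Welch's unequal-variance form; `welch_t` and the sample NDVI values are hypothetical and are not taken from the study.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(sample_a: Sequence[float],
            sample_b: Sequence[float]) -> Tuple[float, float]:
    """Return (t statistic, Welch-Satterthwaite degrees of freedom).

    Assumption: Welch's two-sample test; the source does not name the variant.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each sample needs at least two observations")
    # Squared standard error contributed by each group.
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    if se2_a + se2_b == 0:
        raise ValueError("both samples have zero variance")
    t_stat = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2_a + se2_b)
    dof = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1)
    )
    return t_stat, dof


# Hypothetical index values sampled from two vegetation classes.
hogweed_ndvi = [0.82, 0.85, 0.88, 0.84, 0.86]
grass_ndvi = [0.70, 0.74, 0.68, 0.72, 0.71]
t_stat, dof = welch_t(hogweed_ndvi, grass_ndvi)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

The function returns a signed statistic; the table reports only positive values, so taking `abs(t_stat)` would match that presentation. Converting the statistic to a significance level requires the t distribution, which is outside the standard library and is left out of this sketch.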

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kurbanov, R.K.; Dalevich, A.N.; Dorokhov, A.S.; Zakharova, N.I.; Rebouh, N.Y.; Kucher, D.E.; Litvinov, M.A.; Ali, A.M. Monitoring of Heracleum sosnowskyi Manden Using UAV Multisensors: Case Study in Moscow Region, Russia. Agronomy 2024, 14, 2451. https://doi.org/10.3390/agronomy14102451
