The Effects of Display Type, Weather Type, and Pilot Experience on Pilot Interpretation of Weather Products

Abstract

1. Introduction

1.1. GA Weather Product/Displays

1.2. Weather Phenomena

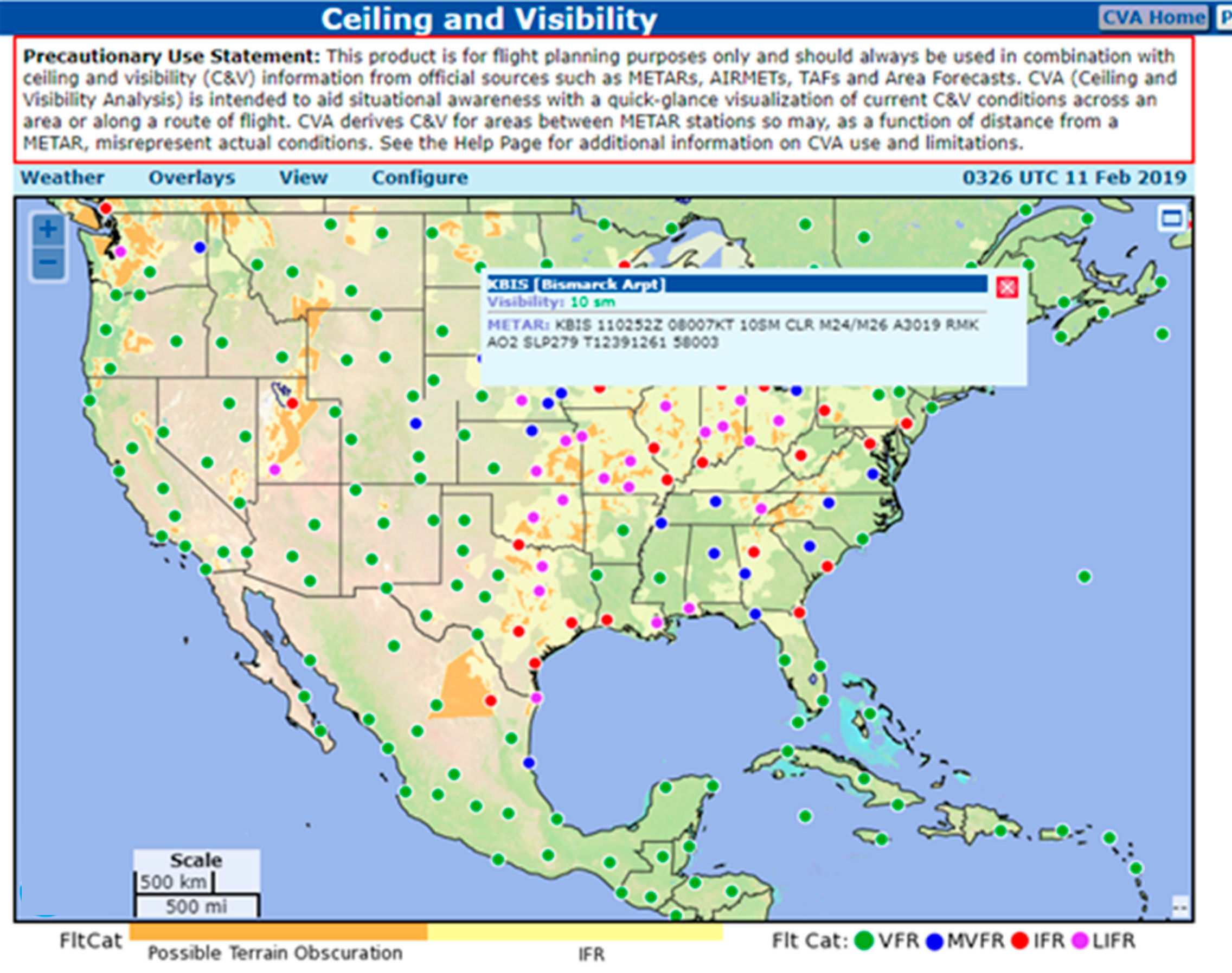

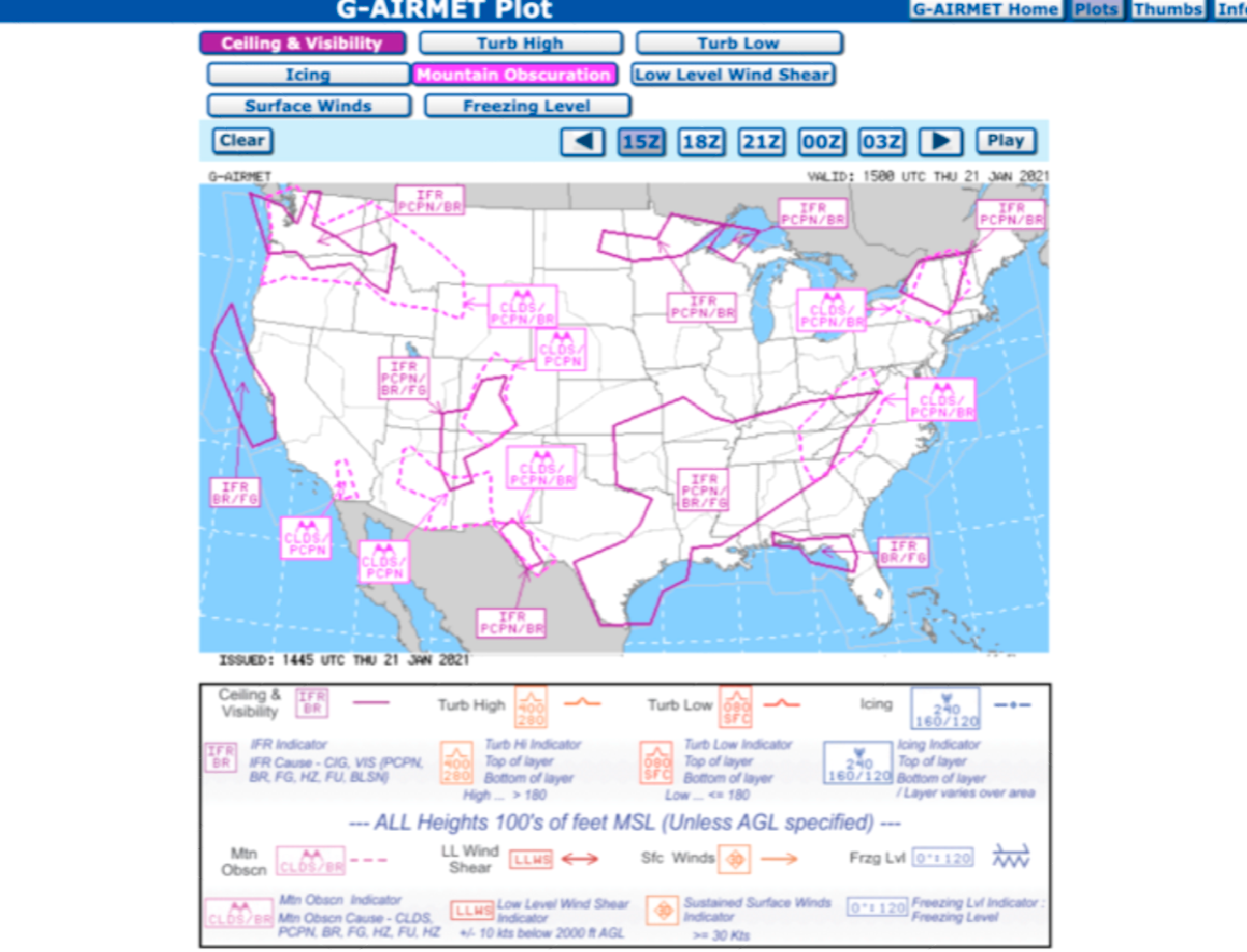

1.2.1. Ceiling and Visibility

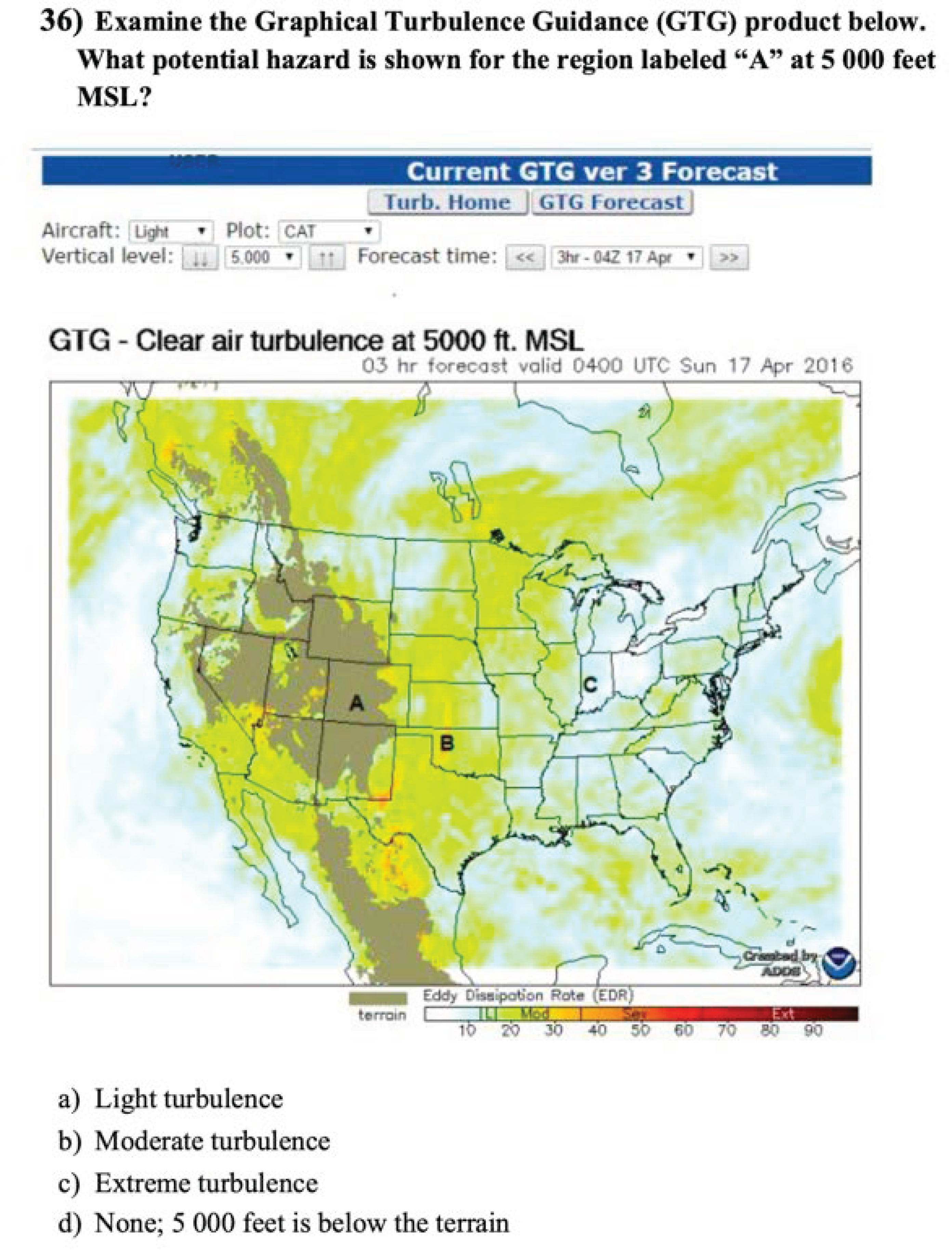

1.2.2. Turbulence

1.2.3. Icing

1.3. Automation

1.4. Experience

1.5. Purpose

2. Materials and Method

2.1. Data

2.2. Participants

2.3. Measures

Product Interpretation Score

2.4. Procedure

2.5. Data Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Product | Weather Phenomena | Model-Based Imagery or HITL Polygons | Number of Questions |
|---|---|---|---|
| Ceiling and Visibility Analysis (CVA) | Flight category of weather present at an airport (ceiling and visibility) | Imagery | 2 |
| Graphical Turbulence Guidance (GTG) | Mid- and upper-level turbulence | Imagery | 2 |
| Current Icing Product (CIP) and Forecast Icing Product (FIP) | Icing and supercooled large droplets (SLD) | Imagery | 4 |
| Graphical Airman Meteorological Advisories Sierra (G-AIRMET Sierra) | Ceiling and visibility, mountain obscuration | Polygons | 1 |
| Graphical Airman Meteorological Advisories Tango (G-AIRMET Tango) | Turbulence high, turbulence low, low-level wind shear (LLWS), surface winds (SW) | Polygons | 2 |
| Graphical Airman Meteorological Advisories Ice (G-AIRMET Ice) | Icing and freezing level | Polygons | 2 |

| Flight Certificate/Rating | HITL Polygon: Turbulence M (SD) | HITL Polygon: Visibility M (SD) | HITL Polygon: Icing M (SD) | Model-Based Imagery: Turbulence M (SD) | Model-Based Imagery: Visibility M (SD) | Model-Based Imagery: Icing M (SD) |
|---|---|---|---|---|---|---|
| Student | 45.53 (33.13) | 56.10 (50.24) | 42.68 (38.01) | 68.29 (31.14) | 54.88 (41.54) | 28.05 (21.06) |
| Private | 51.64 (30.2) | 63.38 (48.52) | 47.18 (29.14) | 75.35 (30.32) | 57.04 (39.00) | 29.93 (20.97) |
| Private w/Instrument | 60.00 (28.57) | 80.00 (40.41) | 49.00 (29.43) | 70.00 (33.50) | 69.00 (34.83) | 34.50 (23.63) |
| Commercial w/Instrument | 56.10 (30.22) | 73.17 (44.86) | 51.22 (23.68) | 75.61 (29.84) | 64.63 (32.10) | 40.85 (23.56) |
| Total | 53.37 (30.64) | 67.98 (46.77) | 47.54 (30.16) | 72.66 (31.13) | 61.08 (37.40) | 32.88 (22.51) |

| Flight Certificate/Rating | Turbulence M (SD) | Visibility M (SD) | Icing M (SD) | Total M (SD) |
|---|---|---|---|---|
| Student | 54.63 (24.50) | 55.28 (36.22) | 32.93 (16.02) | 47.65 (13.6) |
| Private | 61.13 (23.39) | 59.15 (33.90) | 35.68 (16.00) | 56.62 (15.67) |
| Private w/Instrument | 64.00 (24.58) | 72.67 (28.32) | 39.33 (16.41) | 61.77 (12.93) |
| Commercial w/Instrument | 63.90 (25.38) | 67.48 (28.37) | 44.31 (16.08) | 65.62 (14.50) |
| Total | 61.18 (24.37) | 63.40 (32.42) | 37.77 (16.49) | 57.88 (15.54) |

| Display Type | Student M (SD) | Private M (SD) | Private w/Instrument M (SD) | Commercial w/Instrument M (SD) | Total M (SD) |
|---|---|---|---|---|---|
| Traditional/polygon | 46.34 (21.51) | 51.23 (21.67) | 58.25 (19.33) | 61.59 (23.12) | 54.06 (21.94) |
| Automated/model-based imagery | 44.82 (16.53) | 48.06 (19.55) | 52.00 (21.33) | 55.49 (19.57) | 49.88 (19.66) |
| Total | 47.65 (13.61) | 55.70 (14.88) | 61.79 (12.93) | 65.62 (14.50) | 57.88 (15.54) |

| Weather Type | Polygon M (SD) | Imagery M (SD) | Total M (SD) |
|---|---|---|---|
| Turbulence | 53.36 (30.64) | 72.66 (31.13) | 61.18 (24.37) |
| Visibility | 67.98 (46.77) | 61.08 (37.40) | 63.40 (32.42) |
| Icing | 47.54 (30.16) | 32.88 (22.51) | 37.77 (16.49) |
| Total | 54.06 (21.94) | 49.88 (19.66) | 57.88 (15.54) |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

King, J.M.; Blickensderfer, B.; Guinn, T.; Kleber, J.L. The Effects of Display Type, Weather Type, and Pilot Experience on Pilot Interpretation of Weather Products. Atmosphere 2021, 12, 143. https://doi.org/10.3390/atmos12020143