2.1. Single Image Defogging

In the presence of fog or haze, the original irradiance of the scene is attenuated exponentially with the distance of the objects, and this attenuation is combined with the scattering of atmospheric light. A simple mathematical model can be formulated as follows [17,18]:

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \tag{1}$$

where $I(x)$ and $J(x)$ are the hazy and clear images, respectively, $A$ is the global atmospheric light, and $t(x)$ is the transmission map of the scene, defined as $t(x) = e^{-\beta d(x)}$, where $d(x)$ is the depth map of the scene and $\beta$ is the scattering coefficient of the atmosphere.

In theory, knowing $A$, $\beta$, and the depth of the scene $d(x)$, the inversion of Equation (1) can perfectly reconstruct the original clear image. However, the problem is ill-posed, and estimating these three parameters from a single image is difficult and prone to errors. For these reasons, many defogging techniques based on the physical model directly estimate the transmission map $t(x)$, assuming $A$ to be constant and typically equal to 1.
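To make the model concrete, the following Python sketch (NumPy assumed) synthesizes a hazy image from a clear image and a depth map with Equation (1), and then inverts the equation when $t(x)$ and $A$ are known. The function names are hypothetical, and the lower bound `t_min` on the transmission is an assumption added here to keep the division stable where $t(x)$ approaches zero; it is not part of Equation (1).

```python
import numpy as np


def apply_haze(J, depth, beta, A=1.0):
    """Synthesize I(x) = J(x) t(x) + A (1 - t(x)) with t(x) = exp(-beta * d(x))."""
    t = np.exp(-beta * depth)          # transmission map t(x)
    if J.ndim == 3:
        t = t[..., None]               # broadcast t over the color channels
    return J * t + A * (1.0 - t)


def invert_haze(I, t, A=1.0, t_min=0.1):
    """Invert the model: J(x) = (I(x) - A) / t(x) + A.

    t_min (an assumption of this sketch) clamps the transmission so that
    noise is not amplified without bound in very dense fog.
    """
    t = np.maximum(t, t_min)
    if I.ndim == 3 and t.ndim == 2:
        t = t[..., None]
    return (I - A) / t + A
```

With the true $t(x)$ and $A$, the inversion recovers $J(x)$ exactly wherever $t(x)$ is at least `t_min`; in practice both quantities must be estimated, which is where the errors mentioned above arise.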

One of the first single image dehazing methods was proposed by Narasimhan et al. [19]; it relied on supplied information about the scene structure. An effective method was developed by Fattal [20], who used an approach based on Independent Component Analysis (ICA) to estimate the albedo and the transmission map of a scene. He et al. [21] observed an interesting property of outdoor scenes with clear visibility: most objects have at least one color channel that is significantly darker than the others (the pixel value for that channel is near zero). Using this property, known as the dark channel prior, they estimated the transmission map $t(x)$. Tarel et al. [22] proposed a technique whose complexity is linear in the number of image pixels. Their approach is based on a heuristic estimation of the atmospheric veil, which is then used to recover the haze-free image. Other classical dehazing approaches directly estimate the parameters of the aforementioned physical model from examples or exploit some statistical properties of images [23,24].
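As an illustration of the dark channel prior, the sketch below computes the dark channel (the minimum over the color channels followed by a minimum over a local patch) and derives the coarse transmission estimate $t(x) = 1 - \omega \cdot \mathrm{dark}(I/A)(x)$. It assumes that the atmospheric light $A$ is already known, uses NumPy only, and omits the transmission refinement step of the original method; the defaults `omega = 0.95` and `patch = 15` follow values commonly reported for this prior and should be treated as assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img, patch=15):
    """Minimum over color channels, then over a patch x patch window (patch must be odd)."""
    min_rgb = img.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")      # replicate borders
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(-2, -1))               # same H x W as the input


def estimate_transmission(I, A, omega=0.95, patch=15):
    """Coarse dark-channel-prior estimate: t(x) = 1 - omega * dark(I / A)(x)."""
    normalized = I / np.asarray(A, dtype=float).reshape(1, 1, -1)
    return 1.0 - omega * dark_channel(normalized, patch)
```

Where a patch contains a pixel with a near-zero channel, as the prior predicts for clear scenes, the dark channel is close to zero and the estimated transmission approaches one; uniform fog instead raises the dark channel and lowers $t(x)$.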

The most recent approaches are based on deep learning techniques, which are used to estimate the atmospheric light and the transmission map. An approach based on convolutional neural networks (CNNs) was proposed by Ren et al. [3]: a coarse holistic transmission map is first produced by a CNN and then refined by a separate fine-scale network. Cai et al. introduced a CNN called DehazeNet [2] with the goal of learning a direct mapping between foggy images and the corresponding transmission maps. None of the approaches described above considers the aesthetic quality of the resulting image in the parameter estimation phase. End-to-end models, which directly estimate the clear image, began to emerge with the work by Li et al. [4].

Generative adversarial networks (GANs) [25] have proven effective for many image generation tasks, such as super-resolution, data augmentation, and style transfer. The application of GANs to dehazing is quite recent; most notably, adversarial training for the estimation of the transmission map was proposed by Pang et al. [5]. GANs were first used as end-to-end models that directly produce a haze-free image, without estimating the transmission map, by Li et al. [6]. In that model, the discriminator receives a pair of images (the hazy image and the corresponding clear image) and is trained to estimate the probability that the haze-free image is the real image given the foggy picture.

All the CNN- and GAN-based approaches reported above are trained on paired datasets of foggy and clear images. Unfortunately, these datasets are often synthetic, and the quality of the results may decrease when the model is tested on real photographs. CycleGAN [26], a GAN approach based on a cycle consistency loss, does not require any pairing between the two collections of data. Defogging with unpaired data was explored by Engin et al. [7]: taking inspiration from neural style transfer [27], they used a perceptual loss computed on features extracted from a VGG network [28] as a regularizer. Moreover, Liu et al. [8] proposed a CycleGAN-like model used in conjunction with the physical model to apply fog to real images during the inverse mapping.
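The cycle consistency idea behind CycleGAN can be summarized in a few lines: with a generator $G$ mapping foggy images to clear ones and a generator $F$ mapping clear images back to foggy ones, an image translated to the other domain and back should match the original. The sketch below computes the L1 form of this loss with NumPy; `G` and `F` are hypothetical stand-ins for the two networks, and the adversarial terms and loss weighting used in full CycleGAN training are omitted.

```python
import numpy as np


def cycle_consistency_loss(G, F, x_fog, y_clear):
    """L1 cycle loss: mean|F(G(x)) - x| + mean|G(F(y)) - y|.

    G: foggy -> clear, F: clear -> foggy (hypothetical callables on arrays).
    x_fog and y_clear may come from unpaired collections.
    """
    forward = np.mean(np.abs(F(G(x_fog)) - x_fog))        # fog -> clear -> fog
    backward = np.mean(np.abs(G(F(y_clear)) - y_clear))   # clear -> fog -> clear
    return forward + backward
```

Because neither term requires a foggy image and a clear image of the same scene, the loss can be computed on unpaired collections.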

Curriculum learning was recently explored in the context of foggy scene understanding and semantic segmentation [29,30], surpassing the previous state of the art in these tasks. The curriculum learning approach presented in [29] shows some similarities to our defogging approach, which supports the validity of our proposal in a different scenario. The approach proposed in [30] improves on [29] by using more than two curriculum steps. However, both approaches differ from our proposal in several respects. In [29,30], the ground-truth data are generated with self-supervision (by the model of the previous step), while we do not require ground truth when working with real foggy images. Moreover, our proposal uses generative models (GANs), while [29,30] use supervised models (CNNs). Apart from these differences, we cannot compare our approach with those presented in [29,30] because they operate in a different setting: our approach aims to remove the fog from images to reconstruct the same scene as it would appear in clear weather, whereas those works focus on semantic segmentation and scene understanding.

2.2. Defogging Metrics

Assessing the quality of defogging is a particularly difficult task, especially without information about the geometry of the image, such as a 3D model of the scene. At the time of writing, human judgment is often preferred over automatic evaluation for assessing the quality of defogging. However, human evaluations are subjective, onerous, tedious, and impractical for large amounts of data.

The most widely used automatic evaluation techniques are based on methods originally developed to assess image degradation or noise, such as the structural similarity index (SSIM) [31] and the peak signal-to-noise ratio (PSNR), both well known in image processing (e.g., for estimating the quality of compression or deblurring techniques). The main problem with these metrics is that they need a reference clear image which, as stated before, is nearly impossible to obtain in a real-world scenario. In addition, SSIM and PSNR often do not correlate well with human judgment or with other referenceless metrics [9].
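For reference, PSNR compares a restored image with a clear ground-truth image through the mean squared error, $\mathrm{PSNR} = 10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$. The minimal NumPy sketch below assumes intensities scaled to $[0, 1]$ (hence `max_value = 1.0`); the `reference` argument is exactly what is unavailable for real foggy photographs.

```python
import numpy as np


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB between a reference and an estimate."""
    diff = np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")          # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```

A constant error of 0.1 on every pixel gives an MSE of 0.01 and therefore a PSNR of 20 dB, regardless of whether the remaining fog or an artifact caused the error.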

Recently, some referenceless metrics specifically designed for defogging have been proposed. One of these “blind” metrics was introduced by Hautière et al. [15]; it consists of three different indicators: $e$, $\bar{r}$, and $\sigma$. The value of $e$ measures the increase in the number of visible edges in the defogged image relative to the original foggy picture. An edge is considered visible if its local contrast is above 5%, in accordance with the CIE international lighting vocabulary [32]. The value of $\sigma$ represents the percentage of pixels that become saturated (black or white) after defogging. Finally, $\bar{r}$ denotes the geometric mean of the ratios of the gradients at visible edges; in short, it indicates how much the contrast is improved in the defogged image.
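The three descriptors can be sketched as follows, using their usual definitions: $e = (n_r - n_o)/n_o$, where $n_o$ and $n_r$ are the numbers of visible edges in the foggy and restored images; $\sigma$ is the fraction of pixels that are saturated after restoration but were not before; and $\bar{r}$ is the geometric mean of the gradient ratios $\nabla R_i / \nabla I_i$ over the visible edges of the restored image. This is a simplified sketch, not a reference implementation: it assumes grayscale images in $[0, 1]$, takes the visible-edge masks as precomputed inputs (the 5% contrast detection itself is not reproduced), and approximates gradients with `np.gradient`.

```python
import numpy as np


def gradient_magnitude(img):
    """Finite-difference gradient magnitude of a 2-D grayscale image."""
    gy, gx = np.gradient(img.astype(float))
    return np.hypot(gx, gy)


def blind_contrast_indicators(foggy, restored, edges_foggy, edges_restored, eps=1e-6):
    """Return (e, sigma, r_bar) for grayscale images in [0, 1].

    edges_foggy / edges_restored: boolean masks of visible edges, assumed
    to be computed beforehand (e.g., with a 5% local-contrast threshold).
    """
    n_o = np.count_nonzero(edges_foggy)
    n_r = np.count_nonzero(edges_restored)
    if n_o == 0:
        raise ValueError("the foggy image has no visible edges")
    e = (n_r - n_o) / n_o                      # rate of newly visible edges

    # sigma: pixels saturated (black or white) after restoration but not before
    sat_restored = (restored <= 0.0) | (restored >= 1.0)
    sat_foggy = (foggy <= 0.0) | (foggy >= 1.0)
    sigma = np.count_nonzero(sat_restored & ~sat_foggy) / restored.size

    # r_bar: geometric mean of gradient ratios at visible edges of the restored image
    if n_r == 0:
        return e, sigma, 1.0
    ratios = gradient_magnitude(restored)[edges_restored] / np.maximum(
        gradient_magnitude(foggy)[edges_restored], eps)
    r_bar = float(np.exp(np.mean(np.log(np.maximum(ratios, eps)))))
    return e, sigma, r_bar
```

In this sketch, doubling the contrast of a step edge leaves $e$ and $\sigma$ at zero and yields $\bar{r} \approx 2$.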

Another referenceless metric, which assesses the density of fog within an image, was introduced by Choi et al. [33]; it relies on natural scene statistics and fog-aware statistical features. The metric, named the Fog Aware Density Evaluator (FADE), estimates the amount of fog in an image and appears to correlate well with human evaluation.

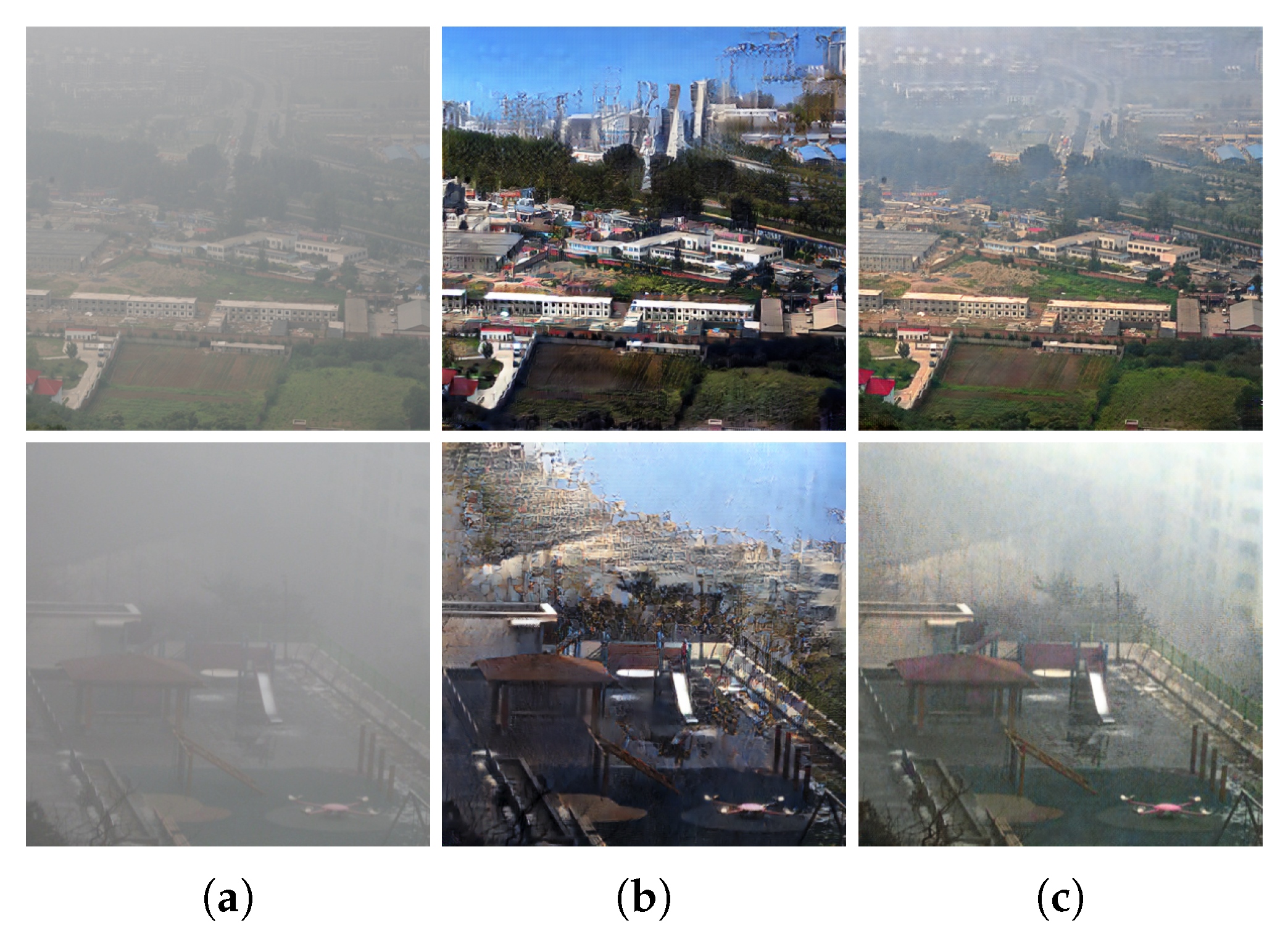

However, none of the aforementioned metrics takes into account the presence of artifacts in the defogged image, which is a critical issue, especially when unpaired image-to-image translation models are used. Furthermore, the metrics proposed in [15] can easily be deceived by artifacts (especially the descriptors $e$ and $\bar{r}$), since the addition of nonexistent objects can raise the number of visible edges in the defogged image. For example, an edge in the defogged image located in a region where many edges are also present in the original foggy image is probably the result of a correct visibility enhancement and should be taken into account in the metric computation. On the other hand, a new edge in the defogged image lying in a region where no edge is visible in the associated foggy image is probably an artifact introduced by the model and should be ignored, or possibly penalized, in the metric calculation.
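The reasoning above can be illustrated with a small, purely hypothetical sketch (it is not the metric proposed in this work): each edge pixel of the defogged image is labeled as supported when the density of edge pixels in a local window of the foggy image reaches a threshold, and as unsupported (a likely artifact) otherwise. The function name, the window size, and the density threshold are illustrative assumptions, and the edge maps are assumed to be boolean masks computed beforehand.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def split_edges_by_support(edges_foggy, edges_defogged, window=7, min_density=0.05):
    """Split defogged edge pixels into supported and unsupported ones.

    Support is the fraction of foggy-image edge pixels inside a
    window x window neighborhood (zero padding at the image border).
    """
    if window % 2 == 0:
        raise ValueError("window must be odd so the output keeps the image shape")
    pad = window // 2
    padded = np.pad(edges_foggy.astype(float), pad, mode="constant")
    density = sliding_window_view(padded, (window, window)).mean(axis=(-2, -1))
    supported = edges_defogged & (density >= min_density)
    unsupported = edges_defogged & ~supported
    return supported, unsupported
```

In a descriptor such as $e$, only the supported pixels would then be counted, while the unsupported ones could be discarded or turned into a penalty term.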