1. Introduction

Many types of accidents, malicious actions, or terrorist attacks can lead to the release of noxious gases into the atmosphere. Densely built-up and highly populated areas, such as industrial sites or urban districts, are the critical locations for such events. The health effects are most severe close to the source of emission. On a local scale, the buildings significantly influence the airflow and consequently the dispersion. Thus, atmospheric dispersion models need to account for the influence of the three-dimensional (3D) geometry of the buildings on the flow.

Up to now, first responders and emergency teams have essentially relied on modified Gaussian modeling. Most fast-response modeling tools indeed use simplified flow formulations in the urban canopy and analytical concentration solutions with assumptions about the initial size of the plume and increased turbulence due to the urban environment: for example, see ADMS-Urban [1], PRIME [2], SCIPUFF [3], and the dispersion model tested by Hanna and Baja [4]. However, Gaussian approaches do not apply to the complex flow patterns in the vicinity of the release in built-up areas, where the most serious consequences of the release occur. Further away from the source, they cannot model specific effects due to buildings, such as the entrapment and subsequent release of noxious substances in street canyons, cavity zones behind buildings, etc. Field experiments, such as the Mock Urban Setting Test (MUST) field experiment [5], clearly demonstrated the importance of high-resolution modeling in complex built-up areas: critical effects, such as a change in the plume centerline direction compared to the inflow condition, were observed and studied [6,7]. Models developed for scales too large to handle individual buildings have difficulties taking into account such effects.

At the same time, more precise modeling tools are becoming increasingly available for emergency situations at the mesoscale [8] and local scale [9,10]. Computational fluid dynamics (CFD) models provide reference solutions by solving the Navier–Stokes equations. In particular, the Reynolds-averaged Navier–Stokes (RANS) approach can be used on small setups by relying on heavy parallel computing and optimization [11] or precomputation [12,13].

The computational cost of CFD calculations, such as RANS and especially large eddy simulation (LES), makes them difficult to use in real-time emergency situations. To overcome this, a common strategy is to precompute a database of cases [12,13]. Nonetheless, the capability of the database to represent the variability of actual flows is an important issue, especially for complex 3D flows: it requires discretizing not only many inflow parameters, such as wind speed and wind direction, but also turbulence parameters, and it requires consideration of multiple inflow locations, usually at least along a vertical distribution. This leads to very large databases that still lack representativeness. Representativeness is particularly critical in built-up environments, where an actual flow can be drastically different from a database case with only slightly different inflow conditions.

Other approaches strike a balance between model speed and accuracy. Röckle [14] suggested such an approach by using mass consistency in combination with local wind observations to solve the mean flow. Kaplan et al. [15] extended the approach by coupling the flow modeling with Lagrangian particle dispersion modeling (LPDM, see [16]). This approach has since been developed into complete modeling solutions (see [17,18]).

Since first responders and emergency teams still essentially rely on modified Gaussian modeling, the EMERGENCIES project was designed to prove the operational feasibility of 3D high-resolution modeling as a support tool for first responders or emergency teams. As such, the modeling approach was defined to apply to a realistic chain of events and applied to a geographic area compatible with the area of responsibility of an emergency team. The approach selected was to precompute a 3D and high-resolution flow over this huge domain by using mesoscale forecasts from one day to the next and then to compute the dispersion on an on-demand basis.

While source term estimation is a critical issue, it is not covered in this paper, which is dedicated to the modeling of flow and dispersion. Source term estimation requires dedicated modeling to deal both with uncertainties regarding the situation and with the particular physics that may be involved, such as fire or explosions.

The paper is organized in five parts:

Section 2 contains a brief description of the modeling tools, both the numerical models and the computing infrastructure.

Section 3 summarizes the EMERGENCIES project modeling set-up.

Section 4 presents and comments on the results of the simulations, including the operational aspects.

Section 5 draws conclusions on the potential use of this approach for emergency preparedness and response in the case of an atmospheric release.

2. Modeling Tools

After describing the numerical models, we introduce the computing cluster used for the simulations.

2.1. Presentation of the PMSS Modeling System

Operational modeling systems must provide reliable results in a limited amount of time. This is all the more of a challenge when one needs to consider large simulation domains, e.g., covering a whole urban area, at a high spatial resolution. The challenge can be tackled by combining appropriate models with efficient parallelization. This was the basic motivation for the development of the Parallel Micro SWIFT and SPRAY (PMSS) modeling system.

2.1.1. The Modeling System

The PMSS modeling system [17,19,20,21] is a parallelized flow and dispersion modeling system. It consists of the Parallel-SWIFT (PSWIFT) flow model and the Parallel-SPRAY (PSPRAY) model, an LPDM, both used in small-scale urban mode.

The PSWIFT model [22,23,24] is a parallelized mass-consistent 3D diagnostic model able to handle complex terrain using terrain-following coordinates. It uses analytical relationships for the flow velocity around buildings and produces diagnostic wind velocity, pressure, turbulence, temperature, and humidity fields. A calculation is performed in three sequential steps:

First guess computation starting from heterogeneous meteorological input data, mixing surface and profile measurements with mesoscale model outputs;

Modification of the first guess using analytical zones defined around isolated buildings or within groups of buildings [14,15];

Mass consistency with impermeability on ground and buildings.
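The third step can be illustrated with a minimal sketch. It assumes a flat 2D staggered grid, equal weights on both velocity components, and a plain Gauss-Seidel Poisson solver; none of these choices is taken from PSWIFT, which works in 3D with terrain-following coordinates and buildings. The function names are hypothetical. The first guess is corrected by the gradient of a potential so that every cell becomes divergence-free while the outer boundary stays impermeable.

```python
def divergence(u, v, dx):
    """Cell divergence on a staggered grid (u on x-faces, v on y-faces)."""
    ny, nx = len(v) - 1, len(u[0]) - 1
    return [[(u[j][i + 1] - u[j][i] + v[j + 1][i] - v[j][i]) / dx
             for i in range(nx)] for j in range(ny)]


def mass_consistent_adjust(u0, v0, dx=1.0, n_iter=2000):
    """Return (u, v) close to the first guess (u0, v0) with zero divergence."""
    ny, nx = len(v0) - 1, len(u0[0]) - 1
    u = [row[:] for row in u0]
    v = [row[:] for row in v0]
    # Impermeability: no flow through the outer faces of the domain.
    for j in range(ny):
        u[j][0] = u[j][nx] = 0.0
    for i in range(nx):
        v[0][i] = v[ny][i] = 0.0
    div0 = divergence(u, v, dx)
    # Gauss-Seidel sweeps for laplacian(phi) = div0 with zero normal gradient.
    phi = [[0.0] * nx for _ in range(ny)]
    for _ in range(n_iter):
        for j in range(ny):
            for i in range(nx):
                nb = []
                if i > 0:
                    nb.append(phi[j][i - 1])
                if i < nx - 1:
                    nb.append(phi[j][i + 1])
                if j > 0:
                    nb.append(phi[j - 1][i])
                if j < ny - 1:
                    nb.append(phi[j + 1][i])
                phi[j][i] = (sum(nb) - dx * dx * div0[j][i]) / len(nb)
    # Subtract grad(phi) on interior faces only; boundary faces stay at zero.
    for j in range(ny):
        for i in range(1, nx):
            u[j][i] -= (phi[j][i] - phi[j][i - 1]) / dx
    for j in range(1, ny):
        for i in range(nx):
            v[j][i] -= (phi[j][i] - phi[j - 1][i]) / dx
    return u, v
```

Calling `mass_consistent_adjust` on a small first guess and then `divergence` on the result should give values at round-off level in every cell. A production solver would use a faster method than Gauss-Seidel.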

The PSWIFT model also incorporates a RANS solver [24,25]. It can be used as an alternative to the third step above. The RANS solver provides a more accurate pressure field: this is essential to derive the pressure on the facades of buildings and to model infiltration into them.

The PSPRAY model [23,26,27] is a parallelized LPDM [16] that takes into account obstacles. The PSPRAY model simulates the dispersion of an airborne contaminant by following the trajectories of numerous numerical particles. The velocity of each virtual particle is the sum of a transport component and a turbulence component. The transport component is derived from the local average wind vector, while the turbulence component is derived from the stochastic scheme developed by Thomson [28] that solves a 3D form of the Langevin equation. This equation comprises a deterministic term, which depends on the Eulerian probability density function of the turbulent velocity and is determined from the Fokker–Planck equation, and a stochastic diffusion term, which is obtained from a Lagrangian structure function. The PSPRAY model treats elevated and ground-level emissions, instantaneous and continuous emissions, or time-varying sources. Additionally, it is able to deal with plumes with initial arbitrarily oriented momentum, negative or positive buoyancy, and cloud spread at the ground due to gravity.
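To make the particle velocity decomposition concrete, the following sketch integrates a 1D Langevin equation for homogeneous, stationary Gaussian turbulence, a special case in which the deterministic term reduces to a linear relaxation. It is only an illustration under these assumptions: the 3D scheme, inhomogeneous turbulence, and obstacle handling of PSPRAY are not reproduced, and the function and parameter names (`sigma`, `t_lagr`) are hypothetical.

```python
import math
import random


def simulate_particles(n_particles, n_steps, dt, mean_wind, sigma, t_lagr, seed=0):
    """Advance particles with velocity = mean wind + turbulent fluctuation.

    The fluctuation follows du' = -(u'/T_L) dt + sqrt(2 sigma^2 / T_L) dW,
    discretized with an explicit Euler-Maruyama step (requires dt << t_lagr).
    """
    rng = random.Random(seed)
    decay = 1.0 - dt / t_lagr                     # deterministic relaxation
    kick = sigma * math.sqrt(2.0 * dt / t_lagr)   # stochastic diffusion term
    xs = [0.0] * n_particles
    us = [rng.gauss(0.0, sigma) for _ in range(n_particles)]
    for _ in range(n_steps):
        for p in range(n_particles):
            us[p] = decay * us[p] + kick * rng.gauss(0.0, 1.0)
            xs[p] += (mean_wind + us[p]) * dt     # transport + turbulence
    return xs, us
```

With a small ratio `dt / t_lagr`, the variance of the fluctuations stays close to `sigma ** 2`, and the mean particle position advances at the mean wind speed.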

2.1.2. Scalability

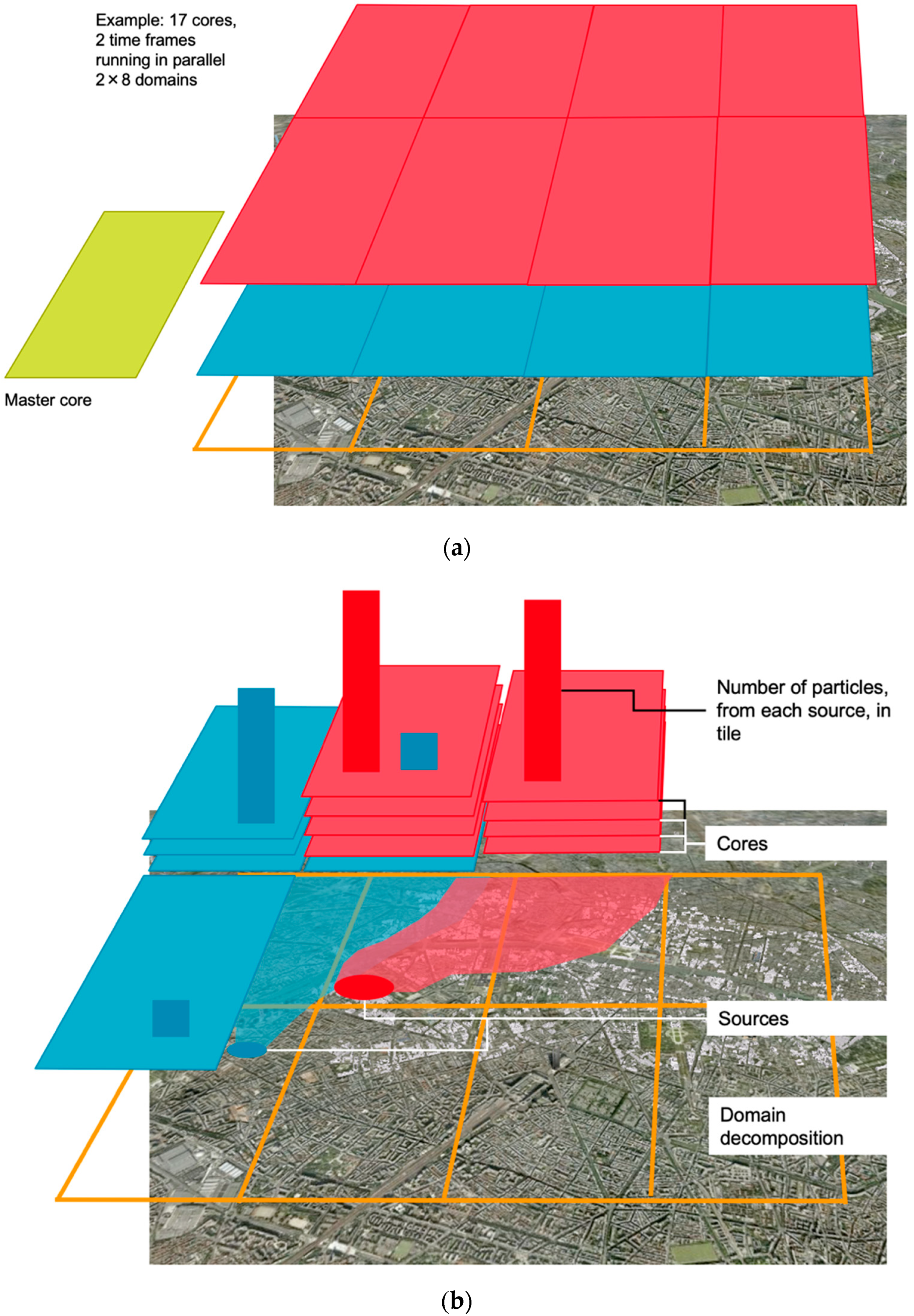

To allow for very large calculations and near-real-time calculations, the PMSS modeling system integrates parallelism using both weak and strong scalability [17].

The weak scalability relies on domain decomposition (DD). The domain is divided into tiles that are compatible in size with the memory available to a single core. The strong scalability is implemented differently within the PSWIFT and PSPRAY models. Within the PSWIFT model, strong scalability relies on the diagnostic property of the code: time frames are computed in parallel. Within the PSPRAY model, strong scalability is achieved by distributing the virtual particles among the computing cores. An example of a combination of strong and weak scaling is illustrated for the PSWIFT model and for the PSPRAY model in Figure 1.
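As a rough illustration of the particle-based strong scaling, the helper below splits a number of particles as evenly as possible among cores. The function name is hypothetical, and the actual load balancing in PSPRAY may differ.

```python
def split_evenly(n_items, n_workers):
    """Return per-worker counts that differ by at most one and sum to n_items."""
    base, extra = divmod(n_items, n_workers)
    return [base + 1 if rank < extra else base for rank in range(n_workers)]


print(split_evenly(10, 3))  # -> [4, 3, 3]
```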

The PMSS modeling system also has the ability to handle multiple nested domains. The PSPRAY model can hence use a nested domain computed by the PSWIFT flow model or computed by another flow model such as, in our case, Code_Saturne (see Section 2.2). The results of this flow model on the nested domain must be stored in the same binary format as a PSWIFT calculation, and they must contain at least wind velocity and turbulence fields.

The PMSS modeling system has been validated both in scalar mode [22,26,27] and regarding parallel algorithms [17,20]. The parallel testing was performed in computational environments ranging from a multicore laptop to several hundred cores in a high-performance computing center.

2.2. Presentation of the Code_Saturne Model

In an urban area, one cannot exclude transfers of pollutants from outside to the inner part of buildings or, vice versa, pollution originating in a building and being transferred outside. Neither the diagnostic nor the momentum version of PSWIFT is appropriate to model the flow inside a building. Thus, computational fluid dynamics (CFD) is needed; more specifically, we used the Code_Saturne model [29], an open-source general-purpose and environmental application-oriented CFD model.

The Code_Saturne model (http://code-saturne.org, accessed on 17 May 2021) is an open-source CFD model developed at EDF R&D. Based on a finite volume method, it simulates incompressible or compressible laminar and turbulent flows in complex 2D and 3D geometries. Code_Saturne solves the RANS equations for continuity, momentum, energy, and turbulence. The turbulence modeling uses either an eddy-viscosity model, such as k-epsilon, or a second-moment closure. The time-marching scheme is based on a prediction of velocity followed by a pressure correction step. Additional details are provided in [29].

The Code_Saturne model also has an atmospheric option [7]. The model has been used extensively, not only for atmospheric flow but also to model the flow within buildings, including the complicated setup of an intensive care hospital room [30].

2.3. The Computing Infrastructure

Modeling the flow and dispersion at high resolution in very large domains of several tens of kilometers of extent requires parallel domain decomposition using several hundreds or even thousands of cores of a supercomputer.

The simulations were carried out on a supercomputer consisting of 5040 B510 bull-X nodes, each with two eight-core Intel Sandy Bridge EP (E5-2680) processors at 2.7 GHz and with 64 GB of memory. The network is an InfiniBand QDR Full Fat Tree network. The file system offers 5 PB of disk storage.

3. The EMERGENCIES Project

The EMERGENCIES project aims to demonstrate the operational feasibility of accelerated time tracking of toxic atmospheric releases, be they accidental or intentional, in a large city and its buildings through 3D numerical simulation.

After describing the domain setup, we present the flow modeling and then the release scenarios.

3.1. Domain Setup

The modeling domain covers Greater Paris: it includes the City of Paris, the Hauts-de-Seine, the Seine-Saint-Denis, and the Val-de-Marne (see Figure 2). It extends to the airports of Orly, to the south, and of Roissy Charles de Gaulle, to the north. This geographic area is under the authority of the Paris Fire Brigade.

The simulation domain has a uniform horizontal resolution of 3 m and dimensions of 38.4 km × 40.8 km, leading to 12,668 × 13,335 points in a horizontal plane. The vertical grid has 39 points from the ground up to 1000 m, the resolution near the ground being 1.5 m. The grid, therefore, has more than 6 billion points.
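The size of the grid follows directly from the figures quoted above. The short calculation below reproduces the total number of points; the memory estimate is only indicative and assumes 4-byte floating-point values, which is not stated in the text.

```python
NX, NY, NZ = 12_668, 13_335, 39   # grid points quoted for the domain

horizontal_points = NX * NY
total_points = horizontal_points * NZ
print(f"{total_points:,} grid points")  # -> 6,588,183,420 grid points

# Indicative memory for one scalar 3D field, assuming 4 bytes per value.
bytes_per_value = 4
field_gb = total_points * bytes_per_value / 1e9
print(f"about {field_gb:.1f} GB per scalar field")
```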

The static data used for the modeling consists of the following:

The topography at an original resolution of 25 m taken from the BD Topo of the French National Geographical Institute (IGN);

The land use data with the same gridded resolution but obtained from the CORINE Land Cover data [31];

The buildings data, taken also from the BD Topo of IGN, consisting of 1.15 million polygons with height attributes.

These data have a disk footprint ranging from 1 GB for the topography or land use to 3 GB for the building data.

Figure 3 presents a view of the topography and the building data.

This very large domain is treated using domain decomposition: the domain is divided into horizontal tiles of 401 × 401 horizontal grid points (see Figure 4). In total, 1088 tiles are used to cover the domain, 32 along the west–east axis, and 34 along the south–north axis.
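The tile counts can be recovered with a small sketch. It assumes non-overlapping tiles for simplicity, whereas the actual PMSS tiling may share boundary points between neighbors; the function names are hypothetical.

```python
def tile_counts(nx, ny, tile_size):
    """Number of tiles along each axis needed to cover nx by ny points."""
    return -(-nx // tile_size), -(-ny // tile_size)  # ceiling division


def tile_of(i, j, tile_size):
    """Tile indices (west-east, south-north) owning grid point (i, j)."""
    return i // tile_size, j // tile_size


tiles_x, tiles_y = tile_counts(12_668, 13_335, 401)
print(tiles_x, tiles_y, tiles_x * tiles_y)  # -> 32 34 1088
```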

Three buildings of particular interest have been chosen: a museum (M), a train station (TS), and an administration building (A). Their locations are presented in Figure 5. They have been selected to demonstrate the capability of the system to provide relevant information regarding releases either propagating from inner parts of buildings to the outside or occurring in the urban environment and then penetrating buildings. Three nested domains around M, TS, and A have been defined using a grid with a very high horizontal and vertical resolution both inside the buildings and in their vicinity. The characteristics of these nested domains are summarized in Table 1. The grid size is about 1 m horizontally and vertically.

3.2. Flow Simulations

The flow accounting for the buildings in the huge EMERGENCIES domain is downscaled from the forecasts of the mesoscale Weather Research and Forecasting (WRF) model [32]. These simulations run every day and require 100 computing cores for 2 h 10 min for 72 h simulated.

The WRF forecasts are extracted every hour on vertical profiles inside the huge urban simulation domain and provided as inputs to the PMSS modeling system. Thus, 24 timeframes of the microscale 3D flow around buildings are computed by PSWIFT. Finally, the inflow conditions for flow and turbulence are extracted on the boundaries of the very high resolution nested domain to perform the Code_Saturne simulations.

These calculations can be performed routinely every day provided the computing resource is available. The flow and turbulence fields are then available every day in advance both for the full domain and the chosen nests. Accidental scenarios can then be simulated on an on-demand basis.

3.3. Dispersion Simulations

In the framework of the project, fictitious multiple attacks consisting of the releases of substances with potential health consequences were considered. These substances could be radionuclides, chemical products, or pathogenic biological agents. Substances were assumed to be released inside or near the public buildings introduced in the previous section. All sources are fictitious point releases of short durations occurring on the same day (taken as an example), in real meteorological conditions.

The day for the release was chosen arbitrarily to be 23 October 2014. The releases were carried out in the morning, starting at 10 a.m. for the first one. The simulated period for the dispersion is a 5-h duration period between 10 a.m. and 3 p.m.

The flow conditions for 23 October 2014 show an average wind speed of 3 m/s at 20 m above ground with winds coming from the southwest.

Each release has a 10 min duration, and the material emitted is considered to be a gas, without density effect, or fine particulate matter, i.e., with aerodynamic diameters below 2.5 µm. One kilogram of the material is emitted during each release. The first release occurs at 10 a.m. local time in the museum, the second one occurs at 11 a.m. inside the administration building, and the last one starts at 12 p.m. (noon) in front of the train station.

All these releases are purely fictional, but the intention is to try to apply the modeling system to a scenario that could be faced by emergency teams and first responders.

The nested modeling is handled in a two-way mode by the LPDM PSPRAY model within the PMSS modeling system: numerical particles move from the nested domains around the buildings where the attacks take place to the large domain encompassing the Paris area or, vice versa, from this large domain back to a nested domain.

4. Results and Discussion

In this section, the results obtained in the framework of the EMERGENCIES project are presented and discussed, taking into account the characteristics of the simulations and the associated computational resources (duration, CPU required, and storage). After describing the results for the flow simulations, we then present the results obtained for the dispersion before briefly discussing the constraints in rapidly and efficiently visualizing very large results.

4.1. Flow Simulations

After presenting the flow results for the large domain and the nested domains, we discuss the operating applicability of the system developed in the frame of the EMERGENCIES project.

The calculation for the flow on the whole domain using the PMSS modeling system requires a minimum of 1089 computing cores, i.e., one core per tile plus a master core. Time frames can be simulated in parallel. Several parallel configurations have been tested and are presented in Table 2. An example of the level of precision of the flow obtained throughout the whole domain is presented in Figure 6.

The duration ranges from around 2 h 40 min using the minimum number of cores down to around 1 h 20 min when computing eight timeframes concurrently. Regarding the storage, a single time frame requires 200 GB for the whole domain, leading to around 4.8 TB for 24 timeframes.
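The core counts used for the large domain can be summarized with a one-line relation. It is inferred from the figures quoted in the text (one core per tile and per concurrent time frame, plus a single master core) rather than taken from the PMSS documentation, and the function name is hypothetical.

```python
def pmss_flow_cores(n_tiles, concurrent_frames):
    """Cores needed: one per tile and concurrent time frame, plus one master."""
    return n_tiles * concurrent_frames + 1


print(pmss_flow_cores(1088, 1))  # -> 1089
print(pmss_flow_cores(1088, 8))  # -> 8705
```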

For the nested domains, the Code_Saturne CFD model has been set up using around 200 cores per nested domain and per time frame. It requires 14,400 cores to handle the 24 timeframes for each of the three domains. The simulation duration is then 100 min for the domain M, 69 min for the domain TS, and 64 min for the domain A. The storage per time frame is 281, 232, and 80 MB, respectively. The storage for the detailed computations in and around the buildings is low compared to the large urban domain covering Greater Paris, in contrast to the computational costs. Indeed, the CFD model is much more computationally intensive than the diagnostic approach used in the PMSS modeling system. An example of the flow both in the close vicinity of and inside the administration building is presented in Figure 7.
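The resource figures for the nested domains can be checked with a few lines of arithmetic. The variable names are hypothetical; the input values are those quoted above.

```python
CORES_PER_DOMAIN_AND_FRAME = 200
N_FRAMES = 24
STORAGE_MB_PER_FRAME = {"M": 281, "TS": 232, "A": 80}

cores_per_domain = CORES_PER_DOMAIN_AND_FRAME * N_FRAMES
cores_all_domains = cores_per_domain * len(STORAGE_MB_PER_FRAME)
total_storage_mb = sum(STORAGE_MB_PER_FRAME.values()) * N_FRAMES

print(cores_per_domain)    # -> 4800
print(cores_all_domains)   # -> 14400
print(total_storage_mb)    # -> 14232
```

The 4800 cores per nested domain correspond to the "around 5000 cores" quoted for handling the 24 timeframes of one nest.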

The flow modeling is intended for use on a daily basis: each day, a high-resolution flow modeling over the whole Paris area is simulated to be made available should any dispersion modeling of accidental or malevolent airborne releases be necessary.

After the WRF forecast modeling, around 3 h down to no more than 1 h 20 min is required to downscale the flow on the large Parisian domain at 3 m resolution, depending on the number of cores dedicated, respectively 1089 and 8705.

Then, around 5000 cores are required to handle the 24 timeframes of each nest in less than 1 h 40 min. If the three nested domains at very high resolution are treated, around 10,000 cores need to be available for 2 h: 5000 cores available each hour to handle the domain TS and then the domain A and 5000 cores to handle the domain M for 1 h 40 min.

Hence, the whole microscale flow modeling could be performed in 3 h 20 min using 10,000 cores.

4.2. Dispersion Simulations

After presenting the dispersion results for the large domain and the nested domains, we discuss the operating applicability of the system developed in the framework of the EMERGENCIES project.

The dispersion modeling benefits from two-way nested computations: numerical particles can move from the inner-most 1 m resolution domains to the large domain over Paris and its vicinity, and vice versa. At each 5 s emission step, 40,000 numerical particles are emitted. Since there are three 10 min-long releases, 14.4 million particles are emitted. A parametric study regarding the number of cores is presented in Table 3. Concentrations are calculated every 10 min using 10 min averages.
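The particle bookkeeping can be sketched as follows. The first function reproduces the particle count from the emission parameters; the second is a simple box-counting estimator of concentration, given here only as an illustration since the estimator actually used by PSPRAY is not described in the text. All function names are hypothetical. A 10 min average would be obtained by averaging such snapshots over the period.

```python
from collections import Counter


def particles_emitted(n_releases, duration_s, emission_step_s, particles_per_step):
    """Total number of numerical particles emitted over all releases."""
    steps = duration_s // emission_step_s
    return n_releases * steps * particles_per_step


def box_concentration(positions, particle_mass_kg, cell_size_m):
    """Map each occupied cubic cell (ix, iy, iz) to a concentration in kg/m^3."""
    counts = Counter(
        (int(x // cell_size_m), int(y // cell_size_m), int(z // cell_size_m))
        for x, y, z in positions
    )
    cell_volume = cell_size_m ** 3
    return {cell: n * particle_mass_kg / cell_volume for cell, n in counts.items()}


total = particles_emitted(3, 600, 5, 40_000)
print(total)  # -> 14400000

# Each release emits 1 kg, carried by one third of the particles.
mass_per_particle = 1.0 / (total // 3)
```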

Five hundred cores were retained for operational use. The simulation results produce 90 GB for 5 simulated hours. Eighty tiles of the large domain were reached by the plumes during the calculation, plus the three nested domains.

Figure 8 illustrates the evolution of the plume inside, in the vicinity of, and further away from the museum.

In an operational context, the dispersion simulation would be activated on demand. Using 500 cores, the duration of the calculation, one and a half hours, has to be compared to the physical duration of five hours simulated here. The simulation is thus 3.3 times faster than the reality. Moreover, the results can be analyzed on the fly, without requiring the whole simulation to be completed.

4.3. Visualization of the Simulation Results

Due to the large amount of data generated, the efficient visualization of data for operational use has proved to be a challenge in itself. After introducing a first attempt using a traditional scientific visualization application, we present a short overview of a more operative approach [33].

3D modeling results can be explored using 3D scientific visualization applications. Due to the large quantity of data, the viewer chosen has to handle heavy parallelization. We chose the open-source software ParaView and developed a dedicated plugin. Nonetheless, interactive visualization using several hundred cores required several seconds or tens of seconds for refreshing: this is too slow for a user to navigate the results. Non-interactive image rendering has also been used (see Figure 1), but it would be ineffective for the user in an emergency situation.

Hence, we finally relied on a tiled web map approach. The results of the simulation are processed on the fly as they are produced [33]. Multiple vertical levels can be treated to provide views not solely on the ground but also in the volume at multiple heights above the ground. Processing takes a few minutes for each time frame since it consists only of a change of file format. Then, results can be consulted in real time through a tile map service using any web browser. Figure 6, Figure 8, and Figure 9 use such an approach.
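For readers unfamiliar with tiled web maps, the sketch below shows how a geographic position maps to a tile index at a given zoom level. It assumes the widely used Web Mercator "XYZ" convention with the y origin at the top; the tiling scheme actually used in the project is not specified in the text, and the function name is hypothetical. In the TMS convention the row index is flipped, i.e., `y_tms = 2 ** zoom - 1 - y`.

```python
import math


def lonlat_to_tile(lon_deg, lat_deg, zoom):
    """Return the (x, y) tile containing a point, XYZ / Web Mercator scheme."""
    n = 2 ** zoom                       # tiles per axis at this zoom level
    lat = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat)) / math.pi) / 2.0 * n)
    # Clamp to the valid range for points on the map edges.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


print(lonlat_to_tile(0.0, 0.0, 1))  # -> (1, 1)
```

A web client requests only the tiles covering the current view, which is what allows very large results to be browsed interactively.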

5. Conclusions

The EMERGENCIES project proved it is feasible to provide emergency teams and first responders with high-resolution simulation results to support field decisions. The project showed that the areas of responsibility of several tens of kilometers of length can be managed operationally. The domain, at a 3 m resolution, includes very high resolution modeling for specific buildings of interest where both the outside and the inside of the building have been modeled at 1 m resolution.

The modeling is carried out in two steps: First, the modeling of the flow is computed in advance starting from mesoscale forecasts provided each day for the following day. Then, these flows are used for on-demand modeling of dispersion that can occur everywhere in the domain and at multiple locations.

The modeling of the flow for the 24 timeframes can be achieved in 3 h 20 min using 10,000 cores both for the large domain and the three nested domains. If 5000 cores are available, the large domain requires 1 h 30 min and each nested domain requires an additional duration ranging between 1 h and 1 h 40 min. Five hundred cores are then required to be ready for on-demand modeling of the dispersion: the dispersion is modeled 3.3 times faster than it occurs in real life.

This amount of computer power is large but not inconsistent with the means usually dedicated to crisis management.

The dispersion requires a lower amount of computing cores than the modeling of the flow. This allows the modeler to perform several dispersion calculations concurrently, particularly to create alternate scenarios for the source terms. This is of particular interest considering the uncertainties related to the source term estimation. Successive scenarios may also be considered as information is updated and the comprehension of the situation by the emergency teams improves.

A large amount of 3D data was produced: 200 GB per time frame for the flow and 90 GB for the dispersion. While data produced near the ground are of the utmost importance, the vertical distribution of concentration from the ground to a moderate elevation is also particularly relevant. Firstly, calculations of inflow and outflow exchanges for specific buildings of interest require such information. Secondly, the vertical distribution of concentration can support decisions to design evacuation strategies for specific buildings, such as moving people toward the building rooftop rather than evacuating them at the ground level into the neighboring streets.

One key aspect also put forward during the project is the difficulty of efficiently visualizing this huge amount of data produced. After focusing initially on a parallel scientific visualization application, we relied on on-the-fly data processing to create tiled web maps for the flow and the dispersion. These data can then be accessed in real time by the emergency team. This approach to visualizing data will be the focus of a dedicated paper.

Author Contributions

Conceptualization, O.O. and P.A.; Data curation, C.D., S.P. and M.N.; Investigation, O.O., C.D., S.P. and M.N.; Methodology, O.O., P.A. and S.P.; Resources, C.D., S.P. and M.N.; Supervision, O.O. and P.A.; Visualization, S.P.; Writing—original draft, O.O.; Writing—review & editing, O.O. and P.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- McHugh, C.A.; Carruthers, D.J.; Edmunds, H.A. ADMS-Urban: An air quality management system for traffic, domestic and industrial pollution. Int. J. Environ Pollut. 1997, 8, 666–674.

- Schulman, L.L.; Strimaitis, D.G.; Scire, J.S. Development and evaluation of the PRIME plume rise and building down-wash model. J. Air Waste Manag. Assoc. 2000, 5, 378–390.

- Sykes, R.I.; Parker, S.F.; Henn, D.S.; Cerasoli, C.P.; Santos, L.P. PC-SCIPUFF Version 1.3—Technical Documentation; Technical Report 725; Titan Corporation, ARAP Group: Princeton, NJ, USA, 2000.

- Hanna, S.; Baja, E. A simple urban dispersion model tested with tracer data from Oklahoma City and Manhattan. Atmos. Environ. 2009, 43, 778–786.

- Biltoft, C.A. Customer Report for Mock Urban Setting Test; DPG Document Number 8-CO-160-000-052; Defense Threat Reduction Agency: Alexandria, VA, USA, 2001.

- Warner, S.; Platt, N.; Heagy, J.F.; Jordan, J.E.; Bieberbach, G. Comparisons of Transport and Dispersion Model Predictions of the Mock Urban Setting Test Field Experiment. J. Appl. Meteorol. Climatol. 2006, 45, 1414–1428.

- Milliez, M.; Carissimo, B. Numerical simulations of pollutant dispersion in an idealized urban area, for different meteorological conditions. Bound. Layer Meteorol. 2006, 122, 321–342.

- Rolph, G.; Stein, A.; Stunder, B. Real-time Environmental Applications and Display System: READY. Environ. Modell. Softw. 2017, 95, 210–228.

- Leitl, B.; Trini Castelli, S.; Baumann-Stanzer, K.; Reisin, T.G.; Barmpas, P.; Balczo, M.; Andronopoulos, S.; Armand, P.; Jurcakova, K.; Milliez, M. Evaluation of air pollution models for their use in emergency response tools in built environments: The ‘Michelstadt’ Case study in COST ES1006 action. In Air Pollution Modelling and Its Application XXIII; Springer: Cham, Switzerland, 2014; pp. 395–399.

- Armand, P.; Bartzis, J.; Baumann-Stanzer, K.; Bemporad, E.; Evertz, S.; Gariazzo, C.; Gerbec, M.; Herring, S.; Karppinen, A.; Lacome, J.M.; et al. Best Practice Guidelines for the Use of the Atmospheric Dispersion Models in Emergency Response Tools at Local-Scale in Case of Hazmat Releases into the Air; Technical Report COST Action ES 1006; University of Hamburg: Hamburg, Germany, 2015.

- Gowardhan, A.A.; Pardyjak, E.R.; Senocak, I.; Brown, M.J. A CFD-based wind solver for urban response transport and dispersion model. Environ. Fluid Mech. 2011, 11, 439–464.

- Yee, E.; Lien, F.S.; Ji, H. A Building-Resolved Wind Field Library for Vancouver: Facilitating CBRN Emergency Response for the 2010 Winter Olympic Games; Defense Research and Development: Suffield, AB, Canada, 2010.

- Patnaik, G.; Moses, A.; Boris, J.P. Fast, Accurate Defense for Homeland Security: Bringing High-Performance Computing to First Responders. J. Aerosp. Comput. Inf. Commun. 2010, 7, 210–222.

- Röckle, R. Bestimmung der Strömungsverhaltnisse im Bereich Komplexer Bebauungs-Strukturen. Ph.D. Thesis, Technical University Darmstadt, Darmstadt, Germany, 1990.

- Kaplan, H.; Dinar, N. A Lagrangian dispersion model for calculating concentration distribution within a built-up domain. Atmos. Environ. 1996, 30, 4197–4207.

- Rodean, H.C. Stochastic Lagrangian Models of Turbulent Diffusion; American Meteorological Society: Boston, MA, USA, 1996; Volume 45.

- Oldrini, O.; Armand, P.; Duchenne, C.; Olry, C.; Tinarelli, G. Description and preliminary validation of the PMSS fast response parallel atmospheric flow and dispersion solver in complex built-up areas. J. Environ. Fluid Mech. 2017, 17, 1–18.

- Singh, B.; Pardyjak, E.R.; Norgren, A.; Willemsen, P. Accelerating urban fast response Lagrangian dispersion simulations using inexpensive graphics processor parallelism. Environ. Modell. Softw. 2011, 26, 739–750.

- Oldrini, O.; Olry, C.; Moussafir, J.; Armand, P.; Duchenne, C. Development of PMSS, the Parallel Version of Micro SWIFT SPRAY. In Proceedings of the 14th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes, Kos Island, Greece, 2–6 October 2011; pp. 443–447.

- Oldrini, O.; Armand, P.; Duchenne, C.; Perdriel, S. Parallelization Performances of PMSS Flow and Dispersion Modeling System over a Huge Urban Area. Atmosphere 2019, 10, 404.

- Oldrini, O.; Armand, P. Validation and Sensitivity Study of the PMSS Modelling System for Puff Releases in the Joint Urban 2003 Field Experiment. Bound. Layer Meteorol. 2019, 171, 513–535.

- Moussafir, J.; Oldrini, O.; Tinarelli, G.; Sontowski, J.; Dougherty, C. A new operational approach to deal with dispersion around obstacles: The MSS (Micro-Swift-Spray) software suite. In Proceedings of the 9th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes, Garmisch-Partenkirchen, Germany, 1–4 June 2004; Volume 2, pp. 114–118.

- Tinarelli, G.; Brusasca, G.; Oldrini, O.; Anfossi, D.; Trini Castelli, S.; Moussafir, J. Micro-Swift-Spray (MSS) a new modelling system for the simulation of dispersion at microscale. General description and validation. In Air Pollution Modelling and Its Applications XVII; Borrego, C., Norman, A.N., Eds.; Springer: Cham, Switzerland, 2007; pp. 449–458.

- Oldrini, O.; Nibart, M.; Armand, P.; Olry, C.; Moussafir, J.; Albergel, A. Introduction of Momentum Equations in Micro-SWIFT. In Proceedings of the 15th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes, Madrid, Spain, 6–9 May 2013.

- Oldrini, O.; Nibart, M.; Duchenne, C.; Armand, P.; Moussafir, J. Development of the parallel version of a CFD—RANS flow model adapted to the fast response in built-up environments. In Proceedings of the 17th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes, Budapest, Hungary, 9–12 May 2016.

- Anfossi, D.; Desiato, F.; Tinarelli, G.; Brusasca, G.; Ferrero, E.; Sacchetti, D. TRANSALP 1989 experimental campaign—II. Simulation of a tracer experiment with Lagrangian particle models. Atmos. Environ. 1998, 32, 1157–1166.

- Tinarelli, G.; Mortarini, L.; Trini Castelli, S.; Carlino, G.; Moussafir, J.; Olry, C.; Armand, P.; Anfossi, D. Review and validation of Micro-Spray, a Lagrangian particle model of turbulent dispersion. In Lagrangian Modelling of the Atmosphere, Geophysical Monograph; American Geophysical Union (AGU): Washington, DC, USA, 2013; Volume 200, pp. 311–327.

- Thomson, D.J. Criteria for the selection of stochastic models of particle trajectories in turbulent flows. J. Fluid Mech. 1987, 180, 529–556.

- Archambeau, F.; Méchitoua, N.; Sakiz, M. Code Saturne: A finite volume code for the computation of turbulent incompressible flows - Industrial applications. Int. J. Finite Vol. 2004, 1, hal-01115371.

- Beauchêne, C.; Laudinet, N.; Choukri, F.; Rousset, J.L.; Benhamadouche, S.; Larbre, J.; Chaouat, M.; Benbunan, M.; Mimoun, M.; Lajonchère, J.; et al. Accumulation and transport of microbial-size particles in a pressure protected model burn unit: CFD simulations and experimental evidence. BMC Infect. Dis. 2011, 11, 58.

- Bossard, M.; Feranec, J.; Otahel, J. CORINE Land Cover Technical Guide—Addendum 2000; Technical Report No. 40; European Environment Agency: Copenhagen, Denmark, 2000.

- Skamarock, W.C.; Klemp, J.B.; Dudhia, J.; Gill, D.O.; Barker, D.; Duda, M.G.; Huang, X.-Y.; Wang, W.; Powers, J.G. A Description of the Advanced Research WRF Version 3; No. NCAR/TN-475+STR; University Corporation for Atmospheric Research: Boulder, CO, USA, 2008; p. 113.

- Oldrini, O.; Perdriel, S.; Armand, P.; Duchenne, C. Web visualization of atmospheric dispersion modelling applied to very large calculations. In Proceedings of the 18th International Conference on Harmonisation within Atmospheric Dispersion Modelling for Regulatory Purposes HARMO, Bologna, Italy, 9–12 October 2017; Volume 18, pp. 9–12.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).