A Comparative Study of Multi-Model Ensemble Forecasting Accuracy between Equal- and Variant-Weight Techniques

Abstract

1. Introduction

2. Data and Methods

2.1. Methodology

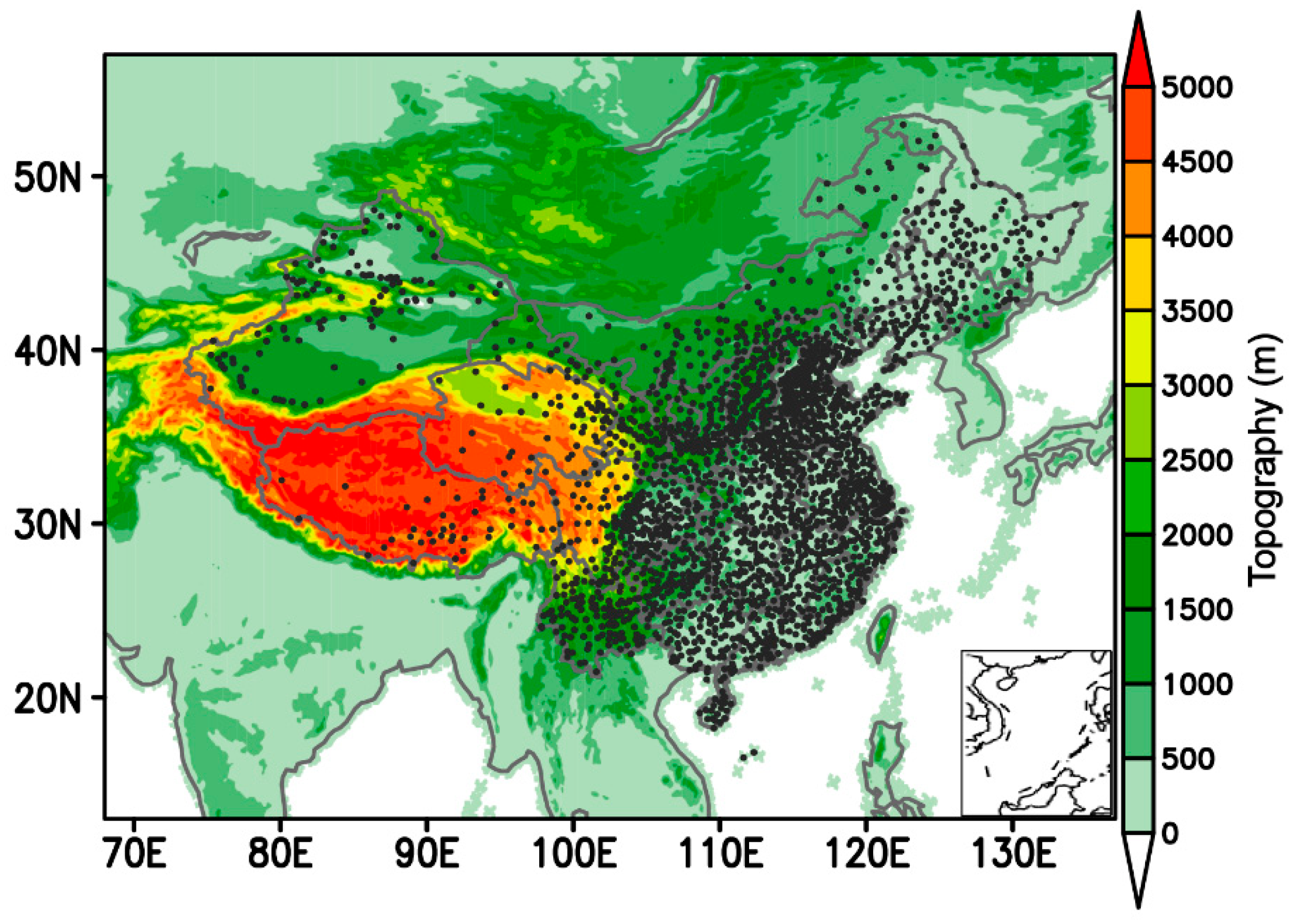

2.2. Data and Verification

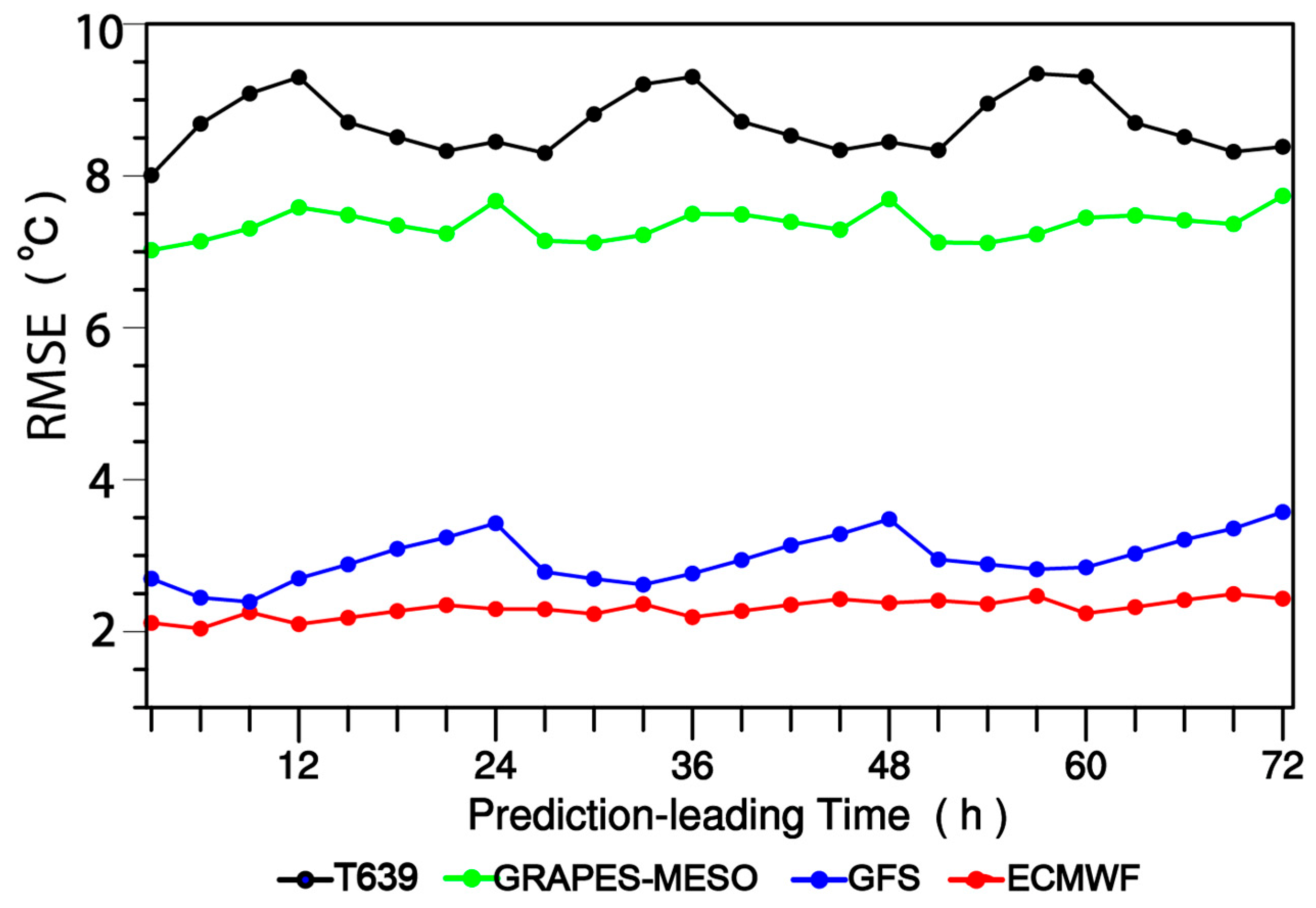

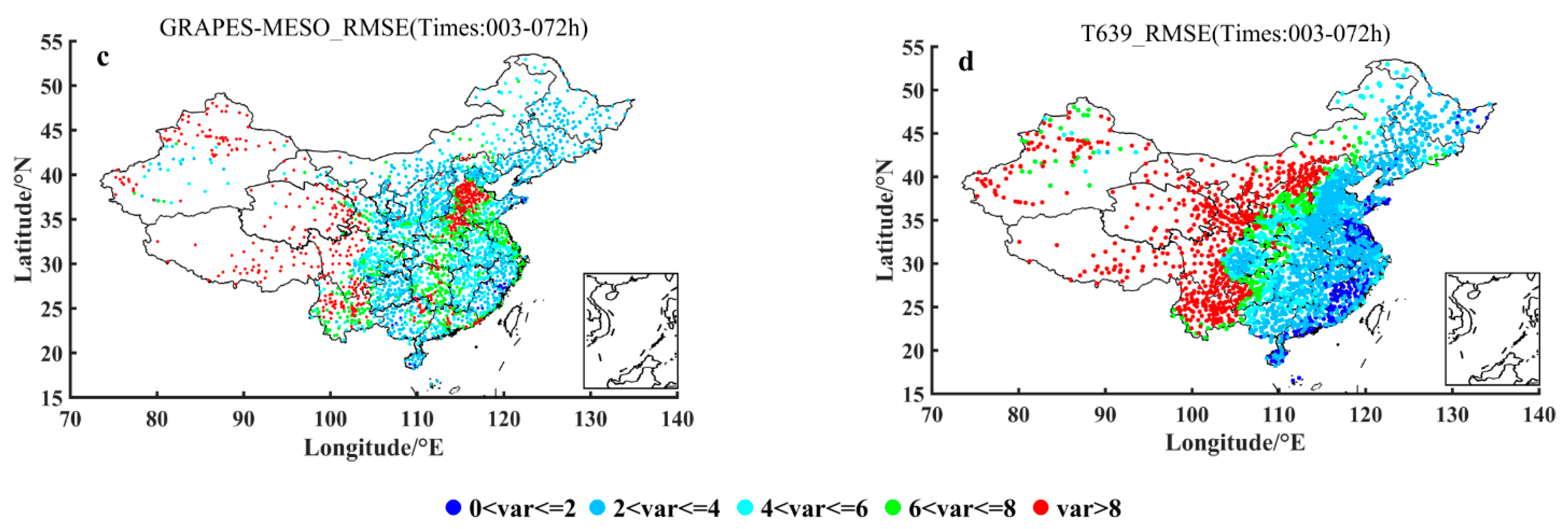

2.3. Performance Analysis of Ensemble Members

3. A Brief Introduction to the Principle of the VW Method and Its Superiority
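A standard result illustrates the principle. This is a sketch under assumptions that may not match this paper's derivation. Suppose $N$ member forecasts $f_i$ have unbiased, mutually independent errors $e_i$ with variances $\sigma_i^2$, and the ensemble mean is $\sum_i w_i f_i$ with $\sum_i w_i = 1$. Take the variant weights to be inverse error variances. This is an assumed choice, not necessarily the VW formulation used here. Under these assumptions:

```latex
\begin{aligned}
\operatorname{Var}\Big(\sum_{i=1}^{N} w_i e_i\Big) &= \sum_{i=1}^{N} w_i^2 \sigma_i^2,
  \qquad \sum_{i=1}^{N} w_i = 1, \\
\text{EW } \big(w_i = 1/N\big):\quad
  \sigma_{\mathrm{EW}}^2 &= \frac{1}{N^2}\sum_{i=1}^{N} \sigma_i^2, \\
\text{inverse-variance } \Big(w_i = \frac{\sigma_i^{-2}}{\sum_{j=1}^{N} \sigma_j^{-2}}\Big):\quad
  \sigma_{\mathrm{VW}}^2 &= \Big(\sum_{i=1}^{N} \sigma_i^{-2}\Big)^{-1} \le \sigma_{\mathrm{EW}}^2 .
\end{aligned}
```

The inequality follows from the Cauchy–Schwarz inequality, $N^2 \le \big(\sum_i \sigma_i^2\big)\big(\sum_i \sigma_i^{-2}\big)$. Equality holds only when all $\sigma_i$ are equal. Under these assumptions, the inverse-variance weights give the smallest combined error variance of any weights that sum to one. Correlated or biased member errors weaken this guarantee.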

4. Example Verification

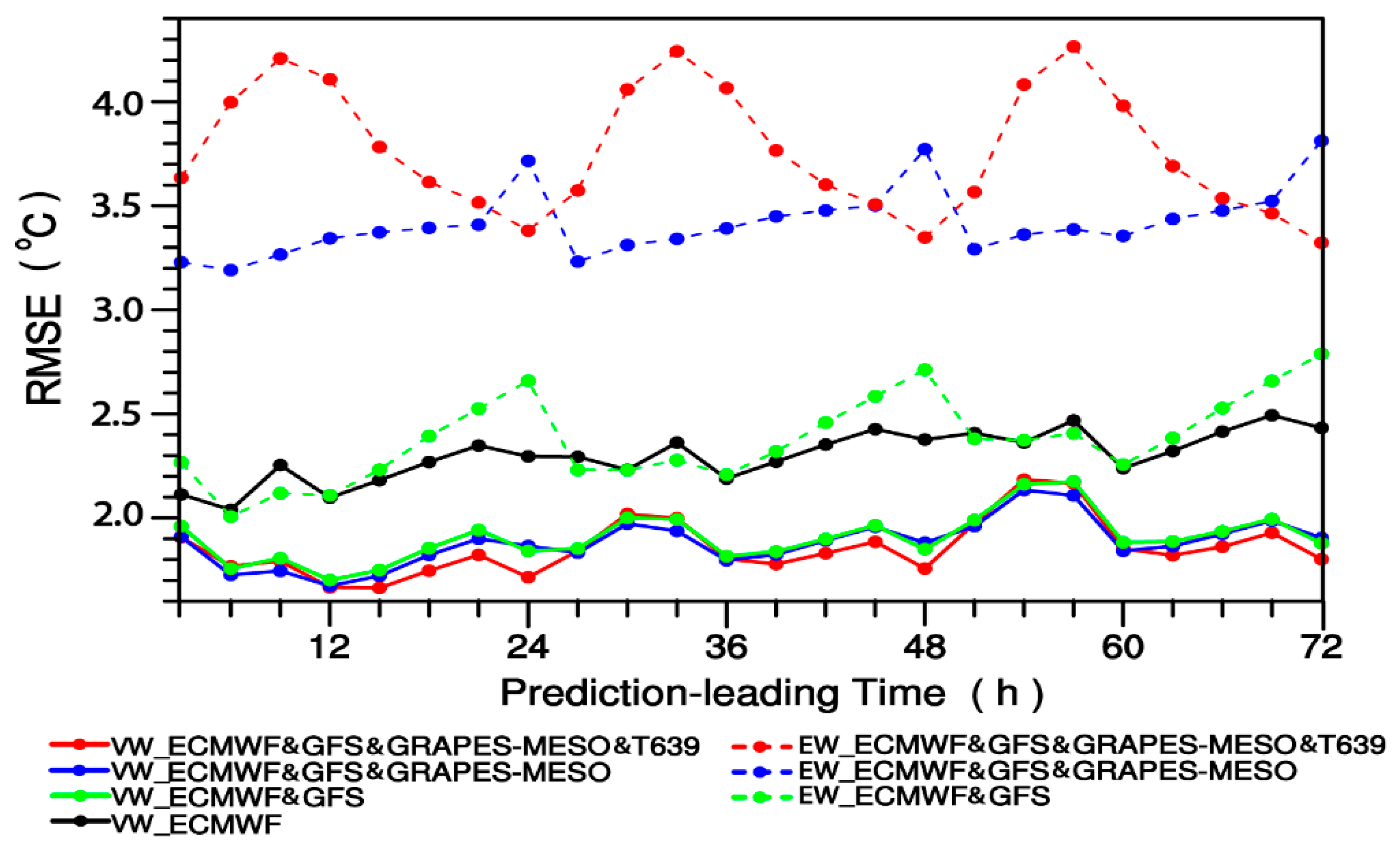

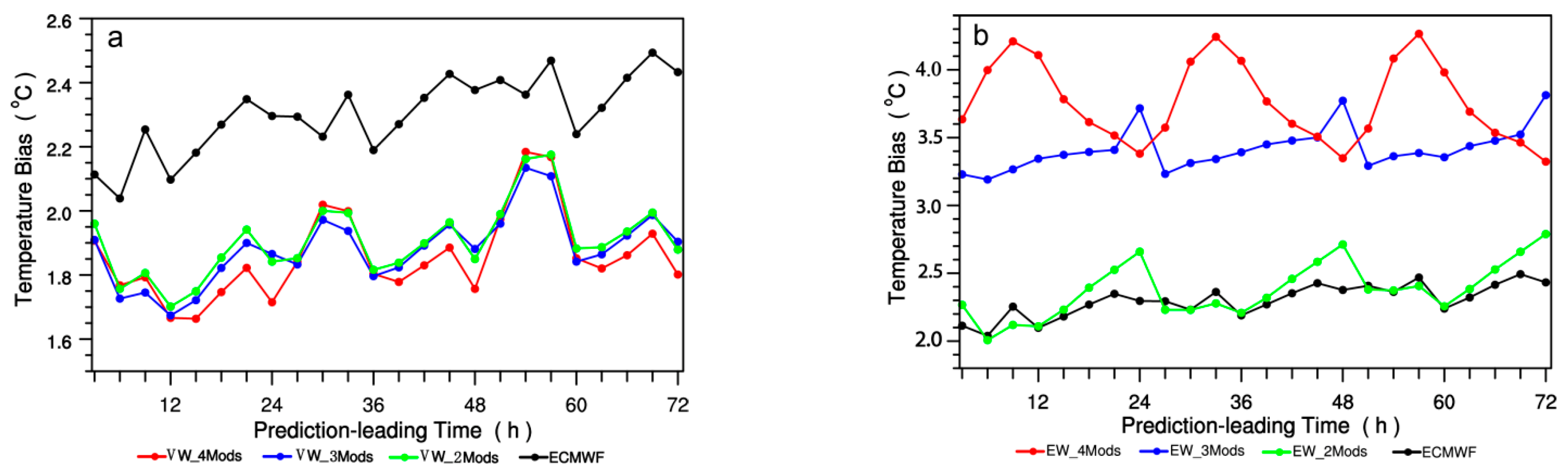

4.1. Comparative Analysis of Ensemble Forecasts between VW and EW Methods

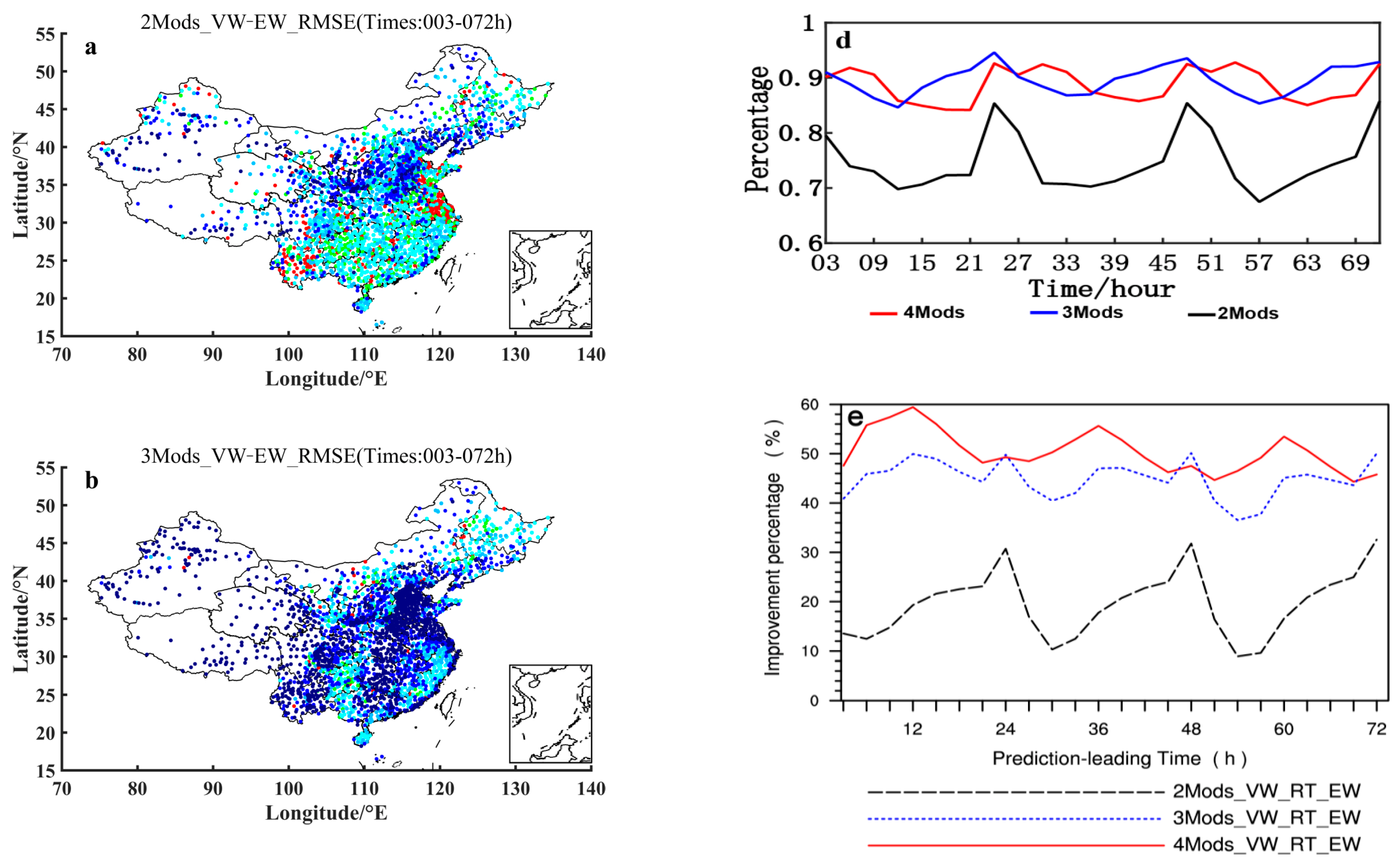

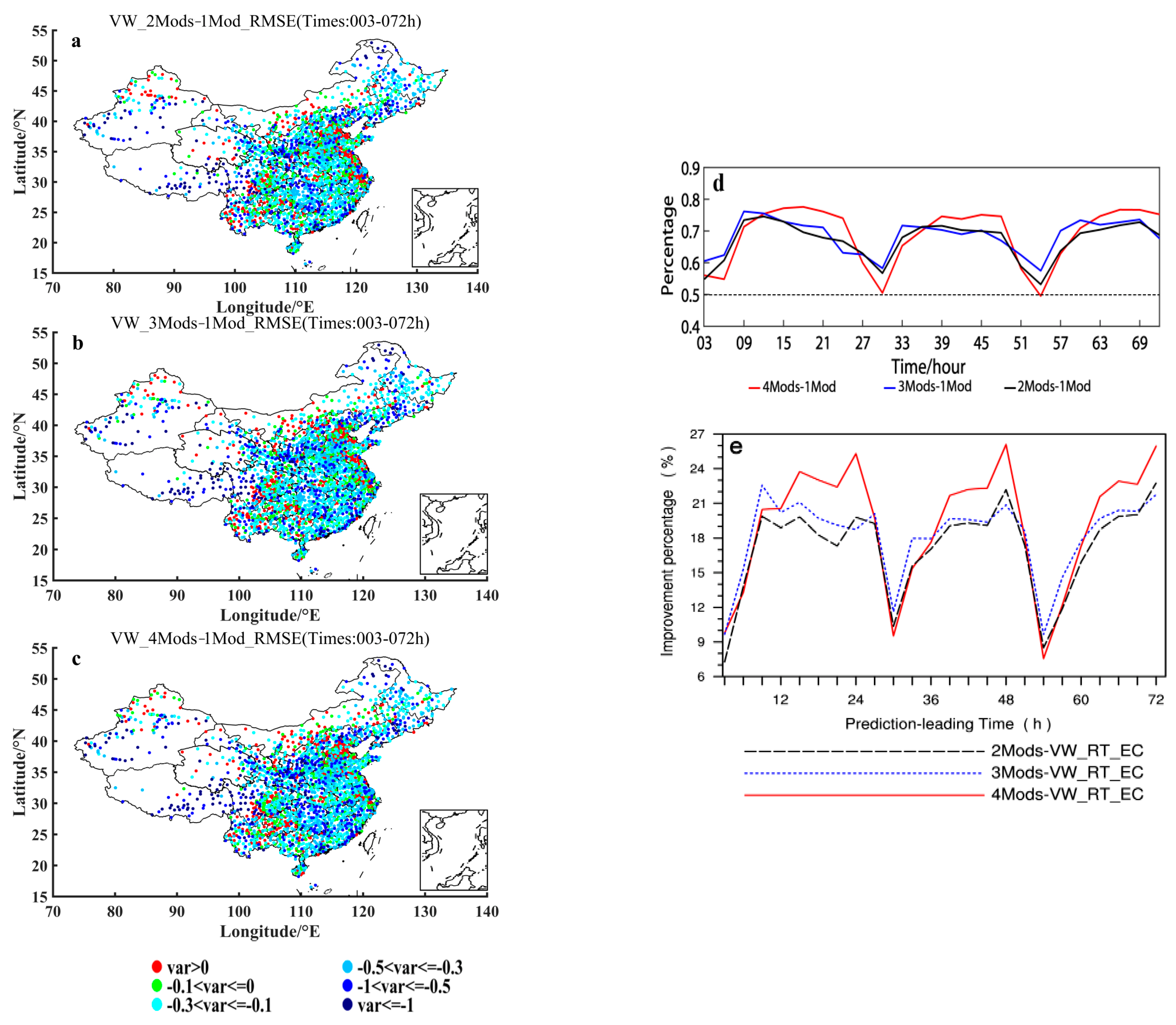

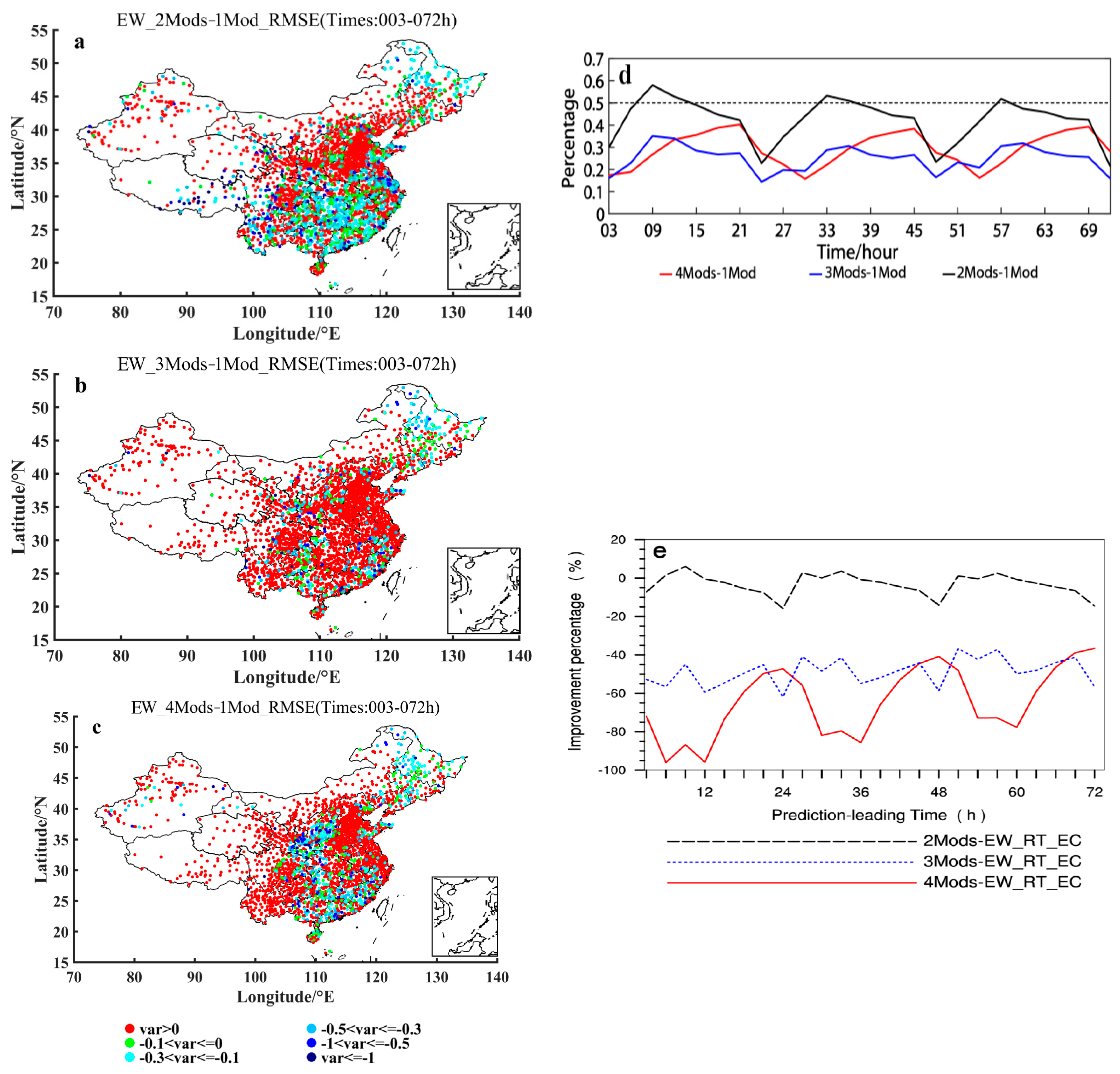

4.2. The Superiority of VW over EW Methods as Shown by the Combination Prediction Verification

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Calculation of a Best Estimate of the Mean Forecasts by the VW Ensemble Method

Appendix A.2. Comparative Analysis of Accuracy between VW and EW Ensemble Forecasts
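The accuracy comparison in this appendix can be sketched in Python under explicit assumptions. The variant weights are taken proportional to the inverse of each member's error variance over a training period. This is one common variant-weight scheme and may differ from the formulation used in this paper. The functions `inverse_variance_weights`, `rmse` and `compare_ew_vw`, the four error standard deviations, and all data are hypothetical and synthetic.

```python
import numpy as np


def inverse_variance_weights(train_errors):
    """Weights proportional to 1 / (training-period error variance) of each member.

    train_errors: array of shape (n_members, n_train), forecast minus observation.
    """
    var = np.var(train_errors, axis=1)
    inv = 1.0 / var
    return inv / inv.sum()  # normalise so the weights sum to one


def rmse(forecast, observed):
    """Root-mean-square error between two equally shaped arrays."""
    return float(np.sqrt(np.mean((forecast - observed) ** 2)))


def compare_ew_vw(forecasts_train, obs_train, forecasts_test, obs_test):
    """Fit VW weights on the training period; score EW and VW means on the test period."""
    n_members = forecasts_train.shape[0]
    ew = np.full(n_members, 1.0 / n_members)
    vw = inverse_variance_weights(forecasts_train - obs_train)
    # tensordot contracts the member axis: sum_i w_i * f_i
    ew_mean = np.tensordot(ew, forecasts_test, axes=1)
    vw_mean = np.tensordot(vw, forecasts_test, axes=1)
    return rmse(ew_mean, obs_test), rmse(vw_mean, obs_test), vw


# Synthetic example: four hypothetical members with unbiased, independent errors
rng = np.random.default_rng(0)
error_std = np.array([0.5, 1.0, 2.0, 4.0])  # hypothetical values
n_train, n_test = 5000, 5000
obs_train = rng.normal(15.0, 5.0, n_train)
obs_test = rng.normal(15.0, 5.0, n_test)
f_train = obs_train + rng.normal(0.0, 1.0, (4, n_train)) * error_std[:, None]
f_test = obs_test + rng.normal(0.0, 1.0, (4, n_test)) * error_std[:, None]

ew_rmse, vw_rmse, weights = compare_ew_vw(f_train, obs_train, f_test, obs_test)
print(f"EW RMSE = {ew_rmse:.3f}, VW RMSE = {vw_rmse:.3f}, weights = {np.round(weights, 3)}")
```

The weights are estimated on one period and scored on another, which keeps the comparison out of sample. Scoring on the training period itself would give VW an optimistic advantage.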

Appendix A.3. Influence of Increasing the Number of Ensemble Model Members on VW and EW Forecast Accuracy
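The effect of adding members can be sketched with the closed-form error variances of the two kinds of mean. This sketch assumes unbiased, independent member errors and inverse-variance variant weights, which is not necessarily this paper's VW formulation. The helper names and the four error variances are hypothetical. The members are added in a fixed order, as in the nested 1- to 4-model combinations of the model-combination table.

```python
import numpy as np


def ew_error_variance(error_var):
    """Error variance of the equal-weight mean: (1/N^2) * sum(sigma_i^2)."""
    v = np.asarray(error_var, dtype=float)
    return float(v.sum() / v.size**2)


def vw_error_variance(error_var):
    """Error variance of the inverse-variance-weighted mean: 1 / sum(1/sigma_i^2)."""
    v = np.asarray(error_var, dtype=float)
    return float(1.0 / np.sum(1.0 / v))


# Hypothetical error variances of four members, added one at a time
member_error_var = [1.0, 1.5, 2.0, 4.0]

results = []
for k in range(1, len(member_error_var) + 1):
    subset = member_error_var[:k]  # first k members only
    results.append((k, ew_error_variance(subset), vw_error_variance(subset)))

for k, ew, vw in results:
    print(f"{k} member(s): EW error variance = {ew:.4f}, VW error variance = {vw:.4f}")
```

With these invented variances, adding the fourth and much less accurate member raises the EW error variance from 0.5 to 0.53125. The VW error variance keeps falling, from about 0.4615 to 0.4138. This reflects the toy numbers and the independence assumption; it is not a result reported in this paper.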

| 1 model | 2 models | 3 models | 4 models |
|---|---|---|---|
| ECMWF | ECMWF, GFS | ECMWF, GFS, GRAPES-MESO | ECMWF, GFS, GRAPES-MESO, T639 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, X.; Sun, X.; Sun, J.; Yin, J.; Sun, J.; Liu, C. A Comparative Study of Multi-Model Ensemble Forecasting Accuracy between Equal- and Variant-Weight Techniques. Atmosphere 2022, 13, 526. https://doi.org/10.3390/atmos13040526

Chicago/Turabian style: Wei, Xiaomin, Xiaogong Sun, Jilin Sun, Jinfang Yin, Jing Sun, and Chongjian Liu. 2022. "A Comparative Study of Multi-Model Ensemble Forecasting Accuracy between Equal- and Variant-Weight Techniques." Atmosphere 13, no. 4: 526. https://doi.org/10.3390/atmos13040526