Using the Multiple-Sensor-Based Frost Observation System (MFOS) for Image Object Analysis and Model Prediction Evaluation in an Orchard

Abstract

1. Introduction

2. Materials and Methods

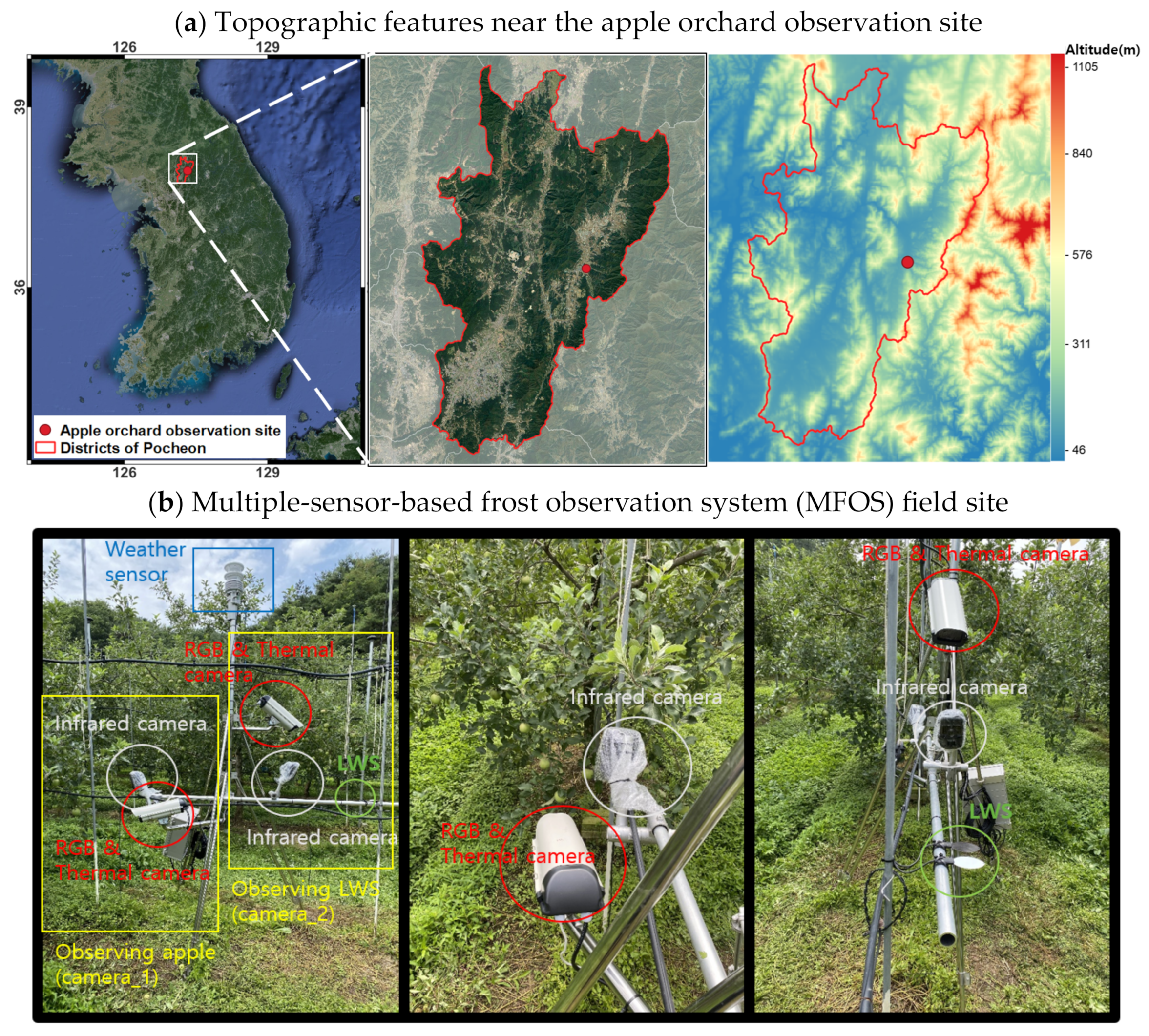

2.1. Study Area (Apple Farm)

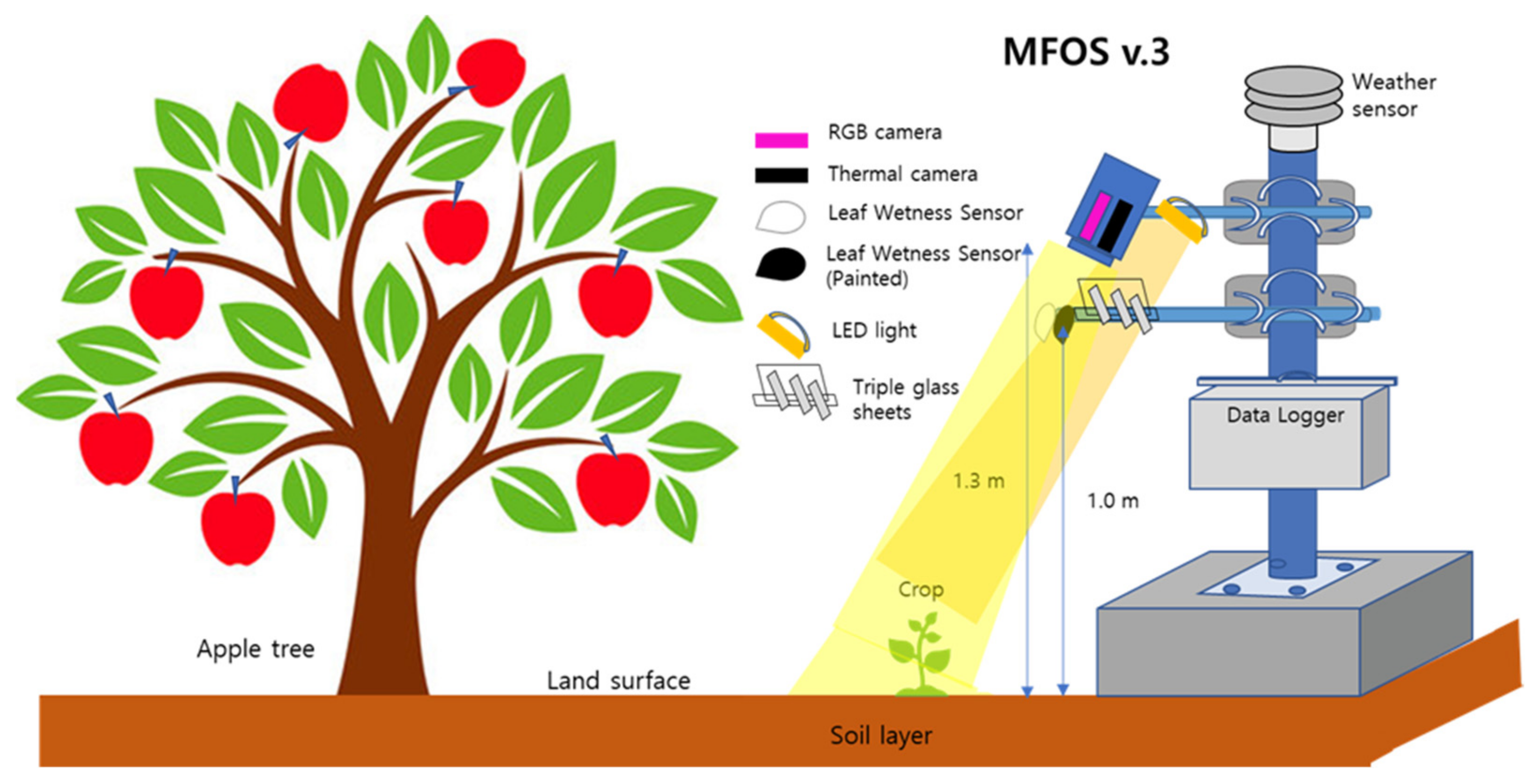

2.2. Observation System

2.3. Extraction of MFOS Surface Temperature

2.3.1. Object Surface Temperature Calculation and Accuracy

2.3.2. Apple Surface Temperature

2.4. Numerical Forecasting Models

3. Results and Discussion

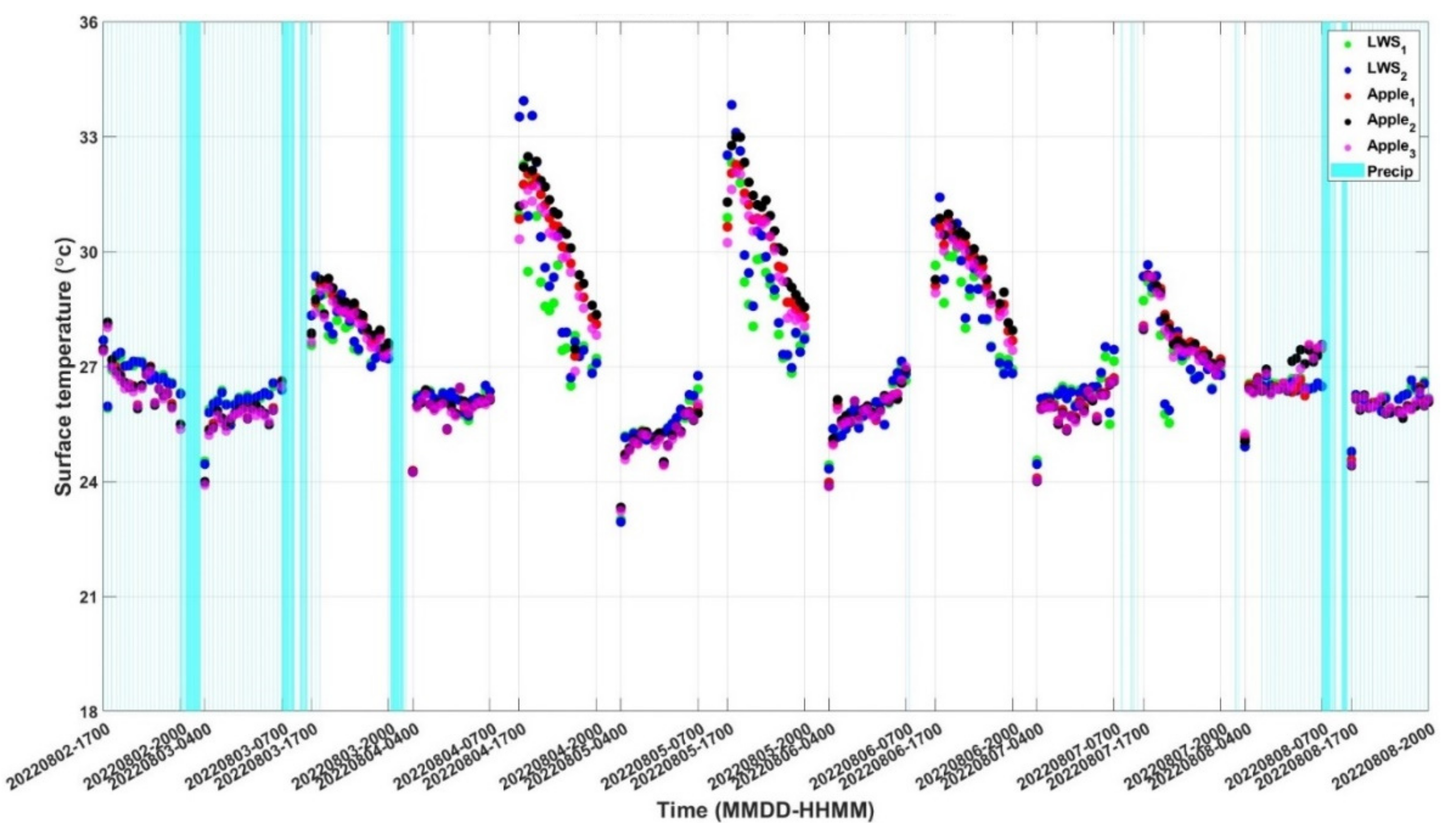

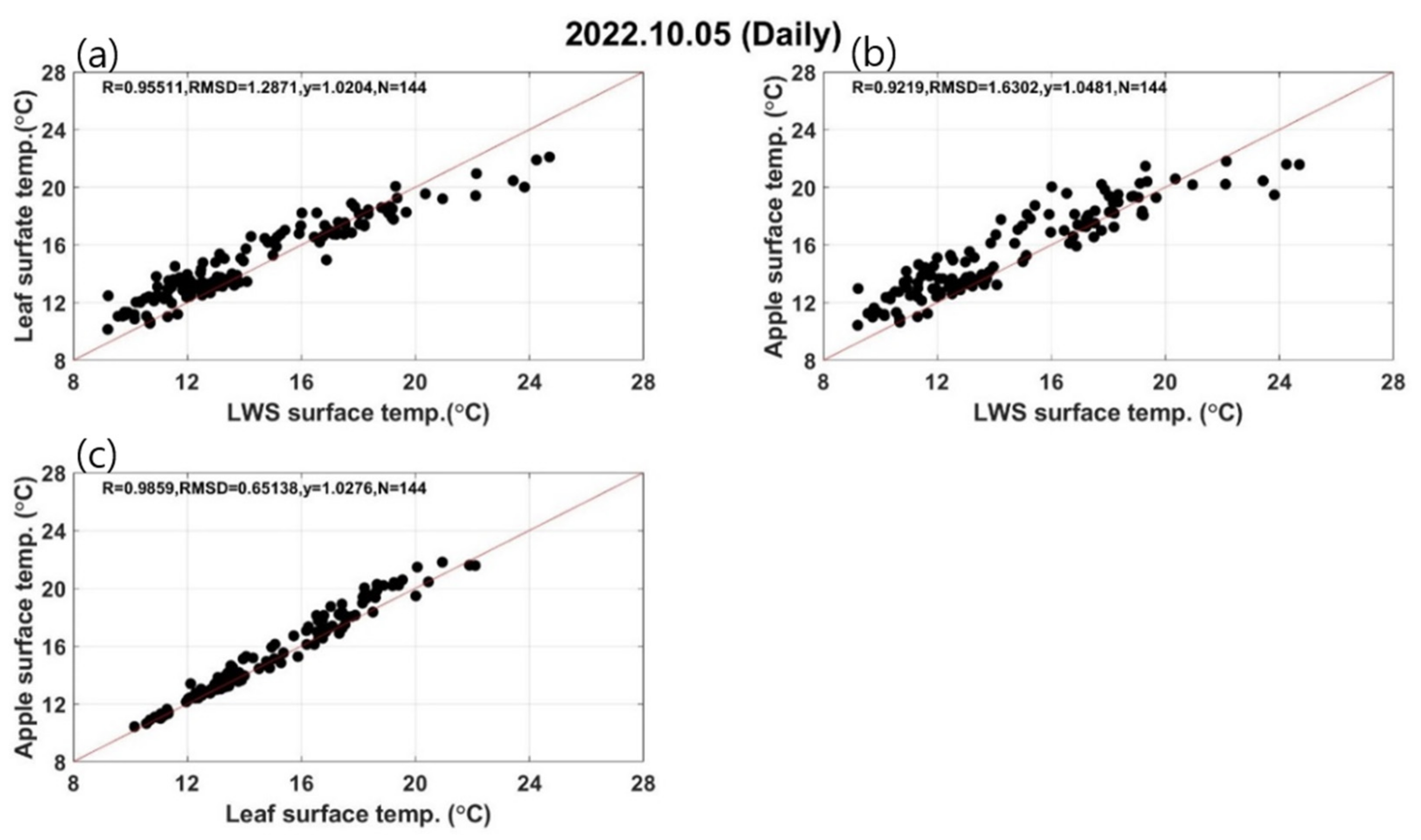

3.1. Preliminary Test: Evaluation of MFOS Surface Temperature

3.1.1. Apple Surface Temperature

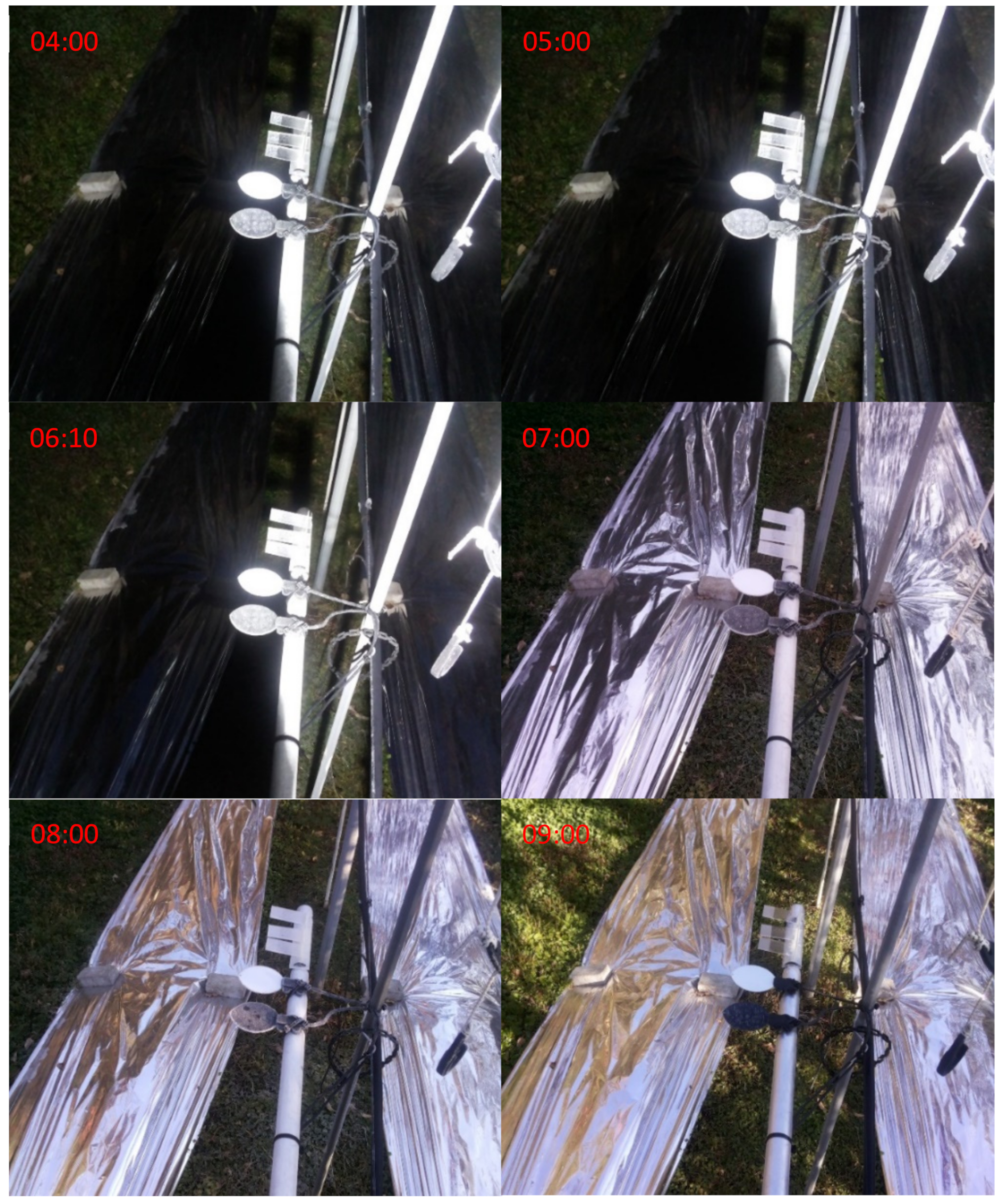

3.1.2. LWS Surface Temperature

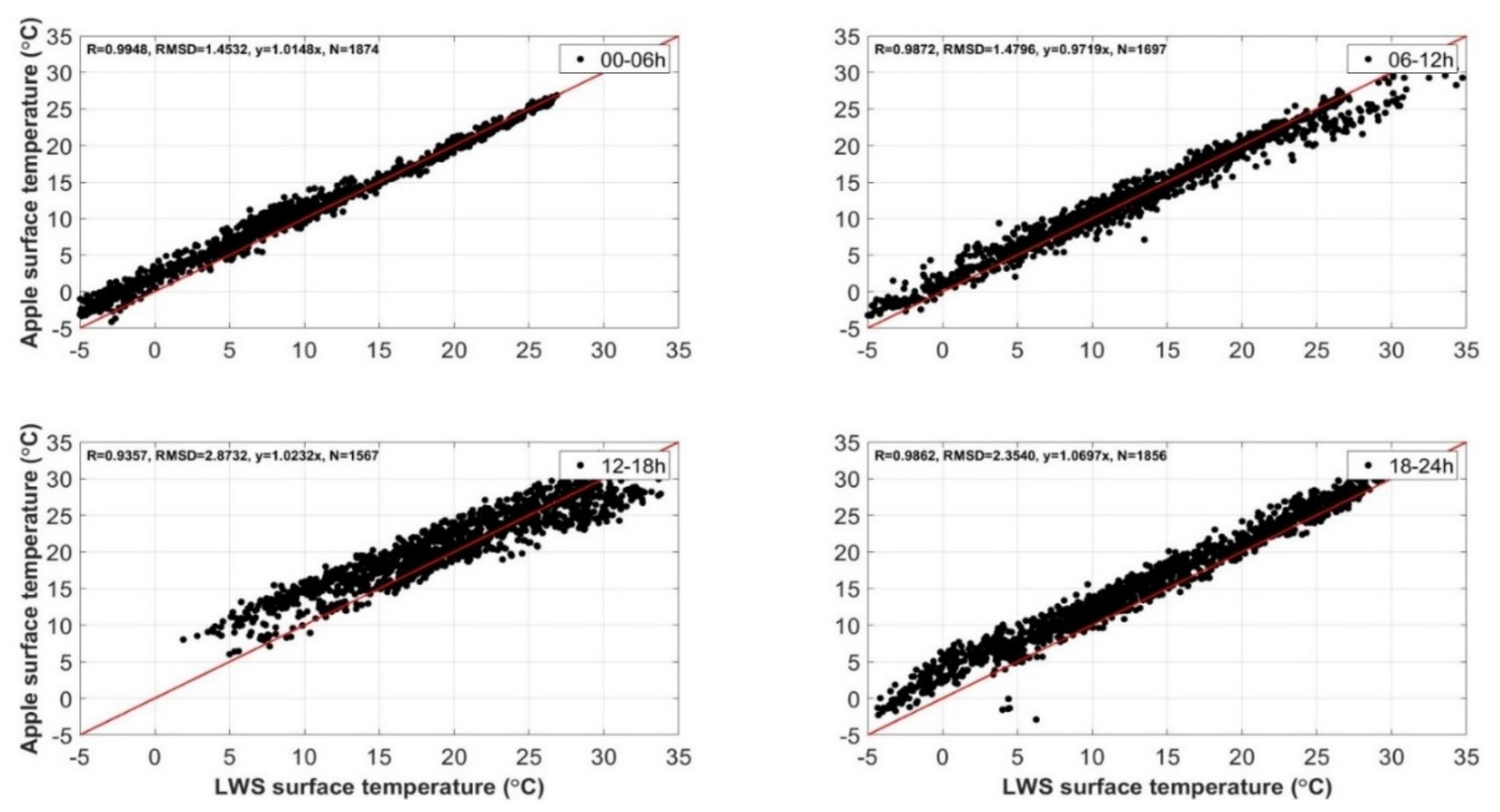

3.1.3. Comparison of Apple and LWS Surface Temperatures

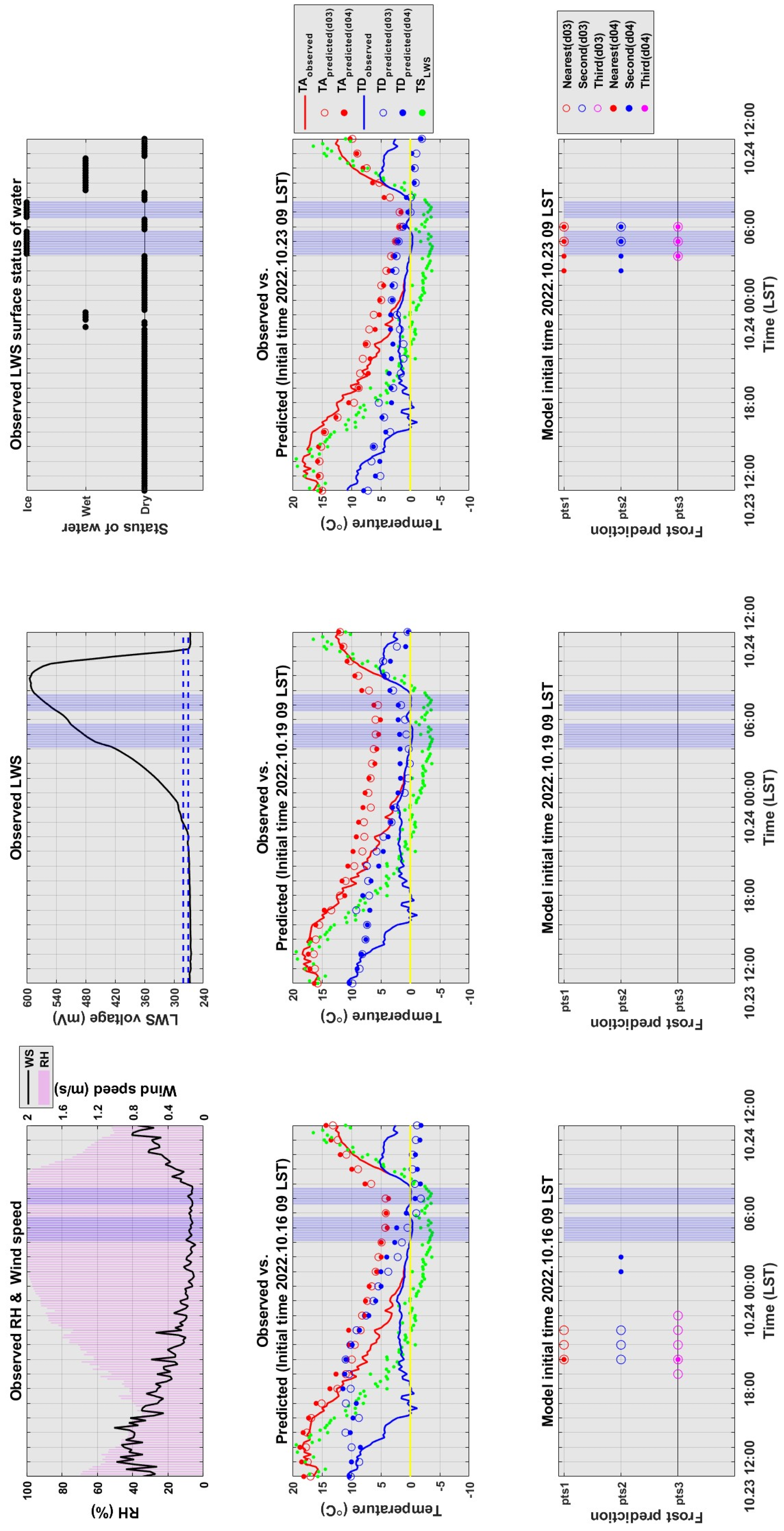

3.2. Actual Test: Evaluation of Frost Prediction Using MFOS

3.2.1. Case 1 (18 October 2022)

3.2.2. Case 2 (24 October 2022)

4. Summary and Concluding Remarks

- (1) Installing and operating this system in an apple orchard confirmed that the accuracy and efficiency of automatic frost observation improved for both weak and strong frost events, which makes the system more useful as an input source for frost prediction models.

- (2) Resolution matching of the RGB and thermal infrared images was performed by resizing the images, aligning them through horizontal shifting, and averaging the temperature over an area centered on each apple.

- (3) Evaluating the frost forecasts from the LAMP/WRF numerical model showed that frost forecasts could be evaluated hourly and that, by increasing the model output frequency, the model could be validated in a shorter time.

- (4) When objects were partially obscured by obstacles such as leaves, accuracy decreased significantly, leading to object detection failures. Unlike fruit, tree leaves sway in the wind, so their positions change considerably between image captures, resulting in relatively low accuracy in leaf surface temperature estimation. Further research on this problem will help in developing techniques for measuring the temperatures of various parts of crops.
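The resolution-matching step in item (2) can be sketched as follows. This is a minimal illustration under stated assumptions, not the MFOS implementation: the frame sizes, the nearest-neighbour resize, the 16-pixel horizontal offset, and the disc-shaped averaging window are all hypothetical, as are the helper names `resize_nearest`, `shift_horizontal`, and `apple_centered_mean`. The apple centre and radius are assumed to come from a separate object detector run on the RGB image.

```python
import numpy as np


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D array to shape (out_h, out_w)."""
    in_h, in_w = img.shape
    # Map every output row/column back to a source row/column.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return img[rows[:, None], cols[None, :]]


def shift_horizontal(img: np.ndarray, dx: int) -> np.ndarray:
    """Shift an image dx pixels to the right (negative dx shifts left).

    Vacated columns are filled with NaN so they are ignored when averaging.
    """
    out = np.full(img.shape, np.nan)
    if dx > 0:
        out[:, dx:] = img[:, :-dx]
    elif dx < 0:
        out[:, :dx] = img[:, -dx:]
    else:
        out[:] = img
    return out


def apple_centered_mean(thermal: np.ndarray, cy: int, cx: int, radius: int) -> float:
    """Mean temperature inside a disc of `radius` pixels centred on (cy, cx)."""
    yy, xx = np.ogrid[:thermal.shape[0], :thermal.shape[1]]
    mask = (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2
    return float(np.nanmean(thermal[mask]))


# Hypothetical inputs: a 60 x 80 thermal frame (deg C) to be matched to a
# 480 x 640 RGB frame.
thermal = np.full((60, 80), 2.0)
thermal[20:40, 30:50] = -1.5  # a cold object, e.g. an apple

thermal_up = resize_nearest(thermal, 480, 640)  # 8x upsampling
aligned = shift_horizontal(thermal_up, dx=16)   # hypothetical camera offset
# Hypothetical apple centre and radius, as if returned by the RGB detector.
t_apple = apple_centered_mean(aligned, cy=240, cx=336, radius=40)
```

Filling the vacated columns with NaN and averaging with `np.nanmean` keeps pixels that have no thermal data from biasing the apple-centered average.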
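Item (3) notes that hourly model output allows hourly forecast evaluation. One common way to score hourly frost/no-frost pairs is a 2 x 2 contingency table; the sketch below uses that standard categorical verification approach, which is not necessarily the scoring used in this study. The 0 °C threshold for counting a model value as a frost forecast, the helper names `Contingency` and `verify_hourly`, and the hourly values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Contingency:
    hits: int = 0
    misses: int = 0
    false_alarms: int = 0
    correct_negatives: int = 0

    def pod(self) -> float:
        """Probability of detection: hits / (hits + misses)."""
        denom = self.hits + self.misses
        return self.hits / denom if denom else float("nan")

    def far(self) -> float:
        """False alarm ratio: false alarms / (hits + false alarms)."""
        denom = self.hits + self.false_alarms
        return self.false_alarms / denom if denom else float("nan")

    def accuracy(self) -> float:
        """Fraction of hours in which forecast and observation agree."""
        total = self.hits + self.misses + self.false_alarms + self.correct_negatives
        return (self.hits + self.correct_negatives) / total if total else float("nan")


def verify_hourly(predicted_temp, observed_frost, threshold_c=0.0):
    """Score hourly frost forecasts against hourly frost observations.

    predicted_temp: model surface temperature for each hour (deg C).
    observed_frost: True where the images show frost in that hour.
    threshold_c: hypothetical temperature at or below which the model
                 is counted as forecasting frost.
    """
    table = Contingency()
    for t_model, obs in zip(predicted_temp, observed_frost):
        fcst = t_model <= threshold_c
        if fcst and obs:
            table.hits += 1
        elif obs:
            table.misses += 1
        elif fcst:
            table.false_alarms += 1
        else:
            table.correct_negatives += 1
    return table


# Hypothetical night, hourly from 21 h to 06 h (10 hours).
model_t = [3.1, 2.0, 1.2, 0.4, -0.3, -0.8, -1.1, -0.9, -0.2, 0.5]
obs_frost = [False, False, False, False, True, True, True, True, False, False]
scores = verify_hourly(model_t, obs_frost)
```

With hourly rather than daily output, each night contributes several scored forecast/observation pairs instead of one, which is one way a higher output frequency can shorten the time needed to validate the model.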

Future research plans are as follows:

- (1) To observe fruit tree surface temperatures in summer to examine whether the system is applicable at high temperatures, such as during heat waves;

- (2) To upgrade the classification algorithm so that it estimates fruit tree surface temperatures using the LWS and observes fruit damage from low and high temperatures;

- (3) To continue producing image (and video) observations for use in frost prediction models and to archive them in a database;

- (4) To improve the representation of surface vegetation in the numerical weather model so that orchards can be represented more realistically (e.g., [22]).

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Noh, I.S.; Doh, H.-W.; Kim, S.-O.; Kim, S.-H.; Shin, S.E.; Lee, S.-J. Machine learning-based hourly frost-prediction system optimized for orchards using automatic weather station and digital camera image data. Atmosphere 2021, 12, 846.

- [2] Rodrigo, J. Spring frosts in deciduous fruit trees—Morphological damage and flower hardiness. Sci. Hortic. 2000, 85, 155–173.

- [3] Korea Meteorological Administration (KMA). Abnormal Climate Report; KMA: Seoul, Republic of Korea, 2019.

- [4] Korea Meteorological Administration (KMA). Ground Meteorological Observation Guidelines; KMA: Seoul, Republic of Korea, 2022.

- [5] Groh, J.; Slawitsch, V.; Herndl, M.; Graf, A.; Vereecken, H.; Pütz, T. Determining dew and hoar frost formation for a low mountain range and alpine grassland site by weighable lysimeter. J. Hydrol. 2018, 563, 372–381.

- [6] Goswami, J.; Sharma, V.; Chaudhury, B.U.; Raju, P.L.N. Rapid identification of abiotic stress (frost) in in-filed maize crop using UAV remote sensing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 467–471.

- [7] Tait, A.; Zheng, X. Mapping frost occurrence using satellite data. J. Appl. Meteorol. 2003, 42, 193–203.

- [8] Wang, S.; Chen, J.; Rao, Y.; Liu, L.; Wang, W.; Dong, Q. Response of winter wheat to spring frost from a remote sensing perspective: Damage estimation and influential factors. ISPRS J. Photogramm. Remote Sens. 2020, 168, 221–235.

- [9] Valjarević, A.; Filipović, D.; Valjarević, D.; Milanović, M.; Milošević, S.; Živić, N.; Lukić, T. GIS and remote sensing techniques for the estimation of dew volume in the Republic of Serbia. Meteorol. Appl. 2020, 27, e1930.

- [10] Noh, I.S.; Lee, S.-J.; Lee, S.Y.; Kim, S.-J.; Yang, S.-D. A High-Resolution (20 m) Simulation of Nighttime Low Temperature Inducing Agricultural Crop Damage with the WRF–LES Modeling System. Atmosphere 2021, 12, 1562.

- [11] Kim, S.H.; Lee, S.-J.; Son, S.W.; Cho, S.S.; Jo, E.S.; Kim, K.R. Unmanned Multi-Sensor based Observation System for Frost Detection—Design, Installation and Test Operation. Korean J. Agric. For. Meteorol. 2022, 24, 95–114.

- [12] Kim, S.H.; Lee, S.-J.; Kim, K.R. Improvement of Multiple-sensor based Frost Observation System (MFOS v2). Korean J. Agric. For. Meteorol. 2023, 25, 226–235.

- [13] Campbell Scientific. LWS: Dielectric Leaf Wetness Sensor Instruction Manual; Revision 11/18; CSI: Ogden, UT, USA, 2018; p. 19. Available online: http://s.campbellsci.com/documents/us/manuals/lws.pdf (accessed on 2 May 2024).

- [14] Savage, M.J. Estimation of frost occurrence and duration of frost for a short-grass surface. South Afr. J. Plant Soil 2012, 29, 173–187.

- [15] Zhu, L.; Cao, Z.; Zhuo, W.; Yan, R.; Ma, S. A new dew and frost detection sensor based on computer vision. J. Atmos. Ocean. Technol. 2014, 31, 2692–2712.

- [16] Chu, P.; Li, Z.; Lammers, K.; Lu, R.; Liu, X. Deep learning-based apple detection using a suppression mask R-CNN. Pattern Recognit. Lett. 2021, 147, 206–211.

- [17] Lee, S.-J.; Kim, J.; Kang, M.S.; Malla-Thakuri, B. Numerical simulation of local atmospheric circulations in the valley of Gwangneung KoFlux sites. Korean J. Agric. For. Meteorol. 2014, 16, 246–260.

- [18] Song, J.; Lee, S.-J.; Kang, M.S.; Moon, M.K.; Lee, J.-H.; Kim, J. High-resolution numerical simulations with WRF/Noah-MP in Cheongmicheon farmland in Korea during the 2014 special observation period. Korean J. Agric. For. Meteorol. 2015, 17, 384–398.

- [19] Lee, S.-J.; Song, J.; Kim, Y.J. The NCAM Land-Atmosphere Modeling Package (LAMP). Version 1: Implementation and Evaluation. Korean J. Agric. For. Meteorol. 2016, 18, 307–319.

- [20] Gultepe, I.; Zhou, B.; Milbrandt, J.; Bott, A.; Li, Y.; Heymsfield, A.J.; Ferrier, B.; Ware, R.; Pavolonis, M.; Kuhn, T.; et al. A review on ice fog measurements and modeling. Atmos. Res. 2015, 151, 2–19.

- [21] Kim, K.R.; Jo, E.S.; Ki, M.S.; Kang, J.H.; Hwang, Y.J.; Lee, Y.H. Implementation of an Automated Agricultural Frost Observation System (AAFOS). Korean J. Agric. For. Meteorol. 2024, 26, 63–74.

- [22] Lee, S.J.; Berbery, E.H.; Alcaraz-Segura, D. Effect of implementing ecosystem functional type data in a mesoscale climate model. Adv. Atmos. Sci. 2013, 30, 1373–1386.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, S.H.; Lee, S.-M.; Lee, S.-J. Using the Multiple-Sensor-Based Frost Observation System (MFOS) for Image Object Analysis and Model Prediction Evaluation in an Orchard. Atmosphere 2024, 15, 906. https://doi.org/10.3390/atmos15080906
