Weather Research and Forecasting Model (WRF) Sensitivity to Choice of Parameterization Options over Ethiopia

Abstract

1. Introduction

2. Materials and Methods

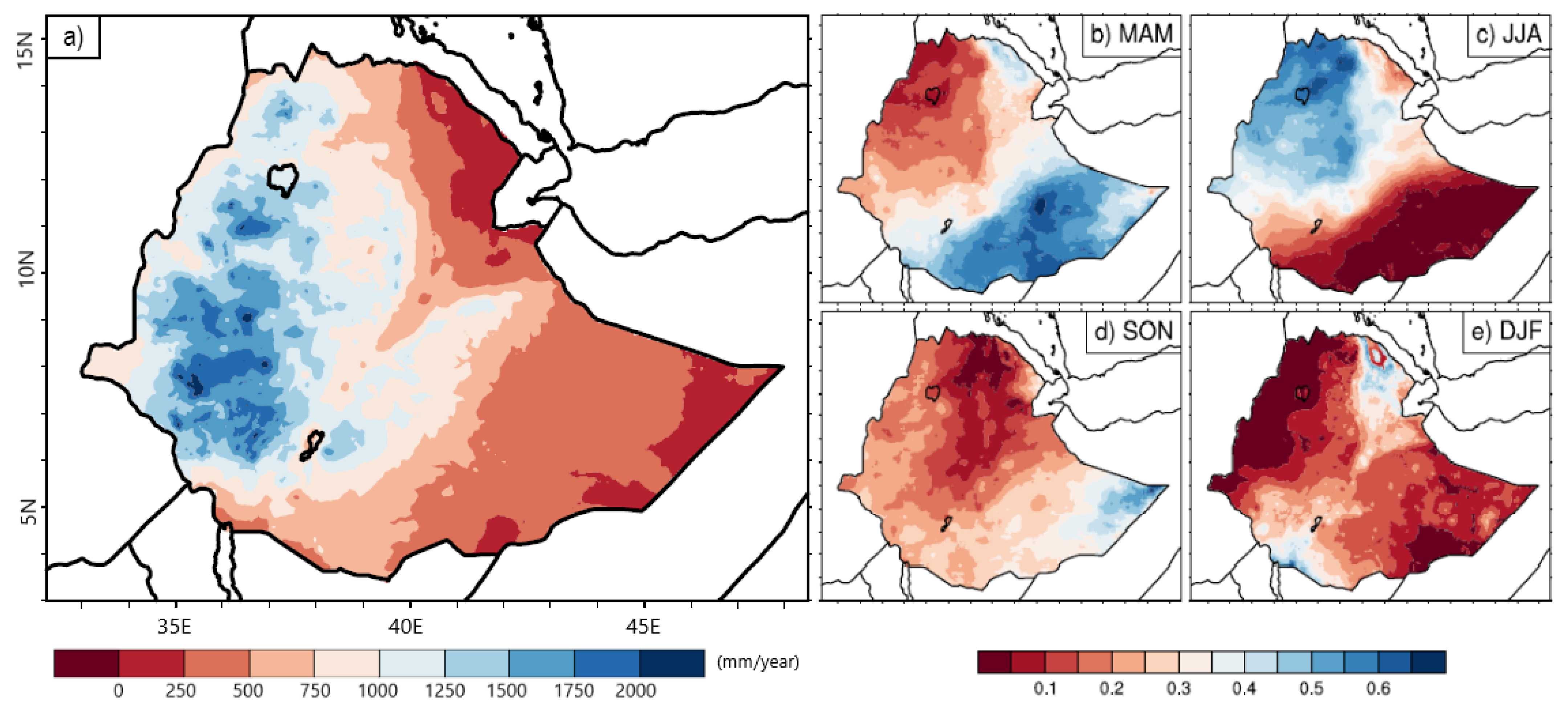

2.1. Verification Data

2.2. Initial and Boundary Conditions

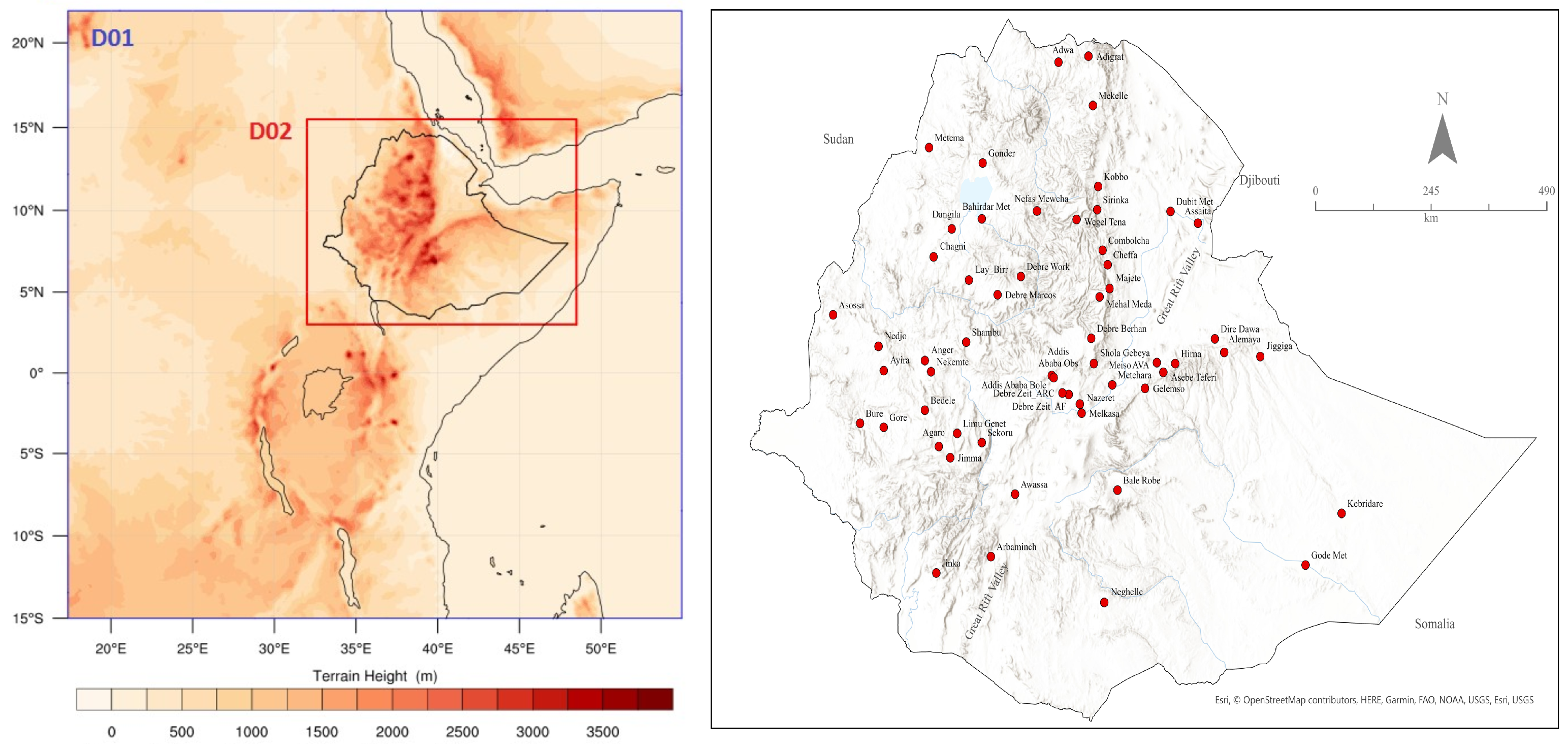

2.3. Model Set Up: Domain and Integration Time

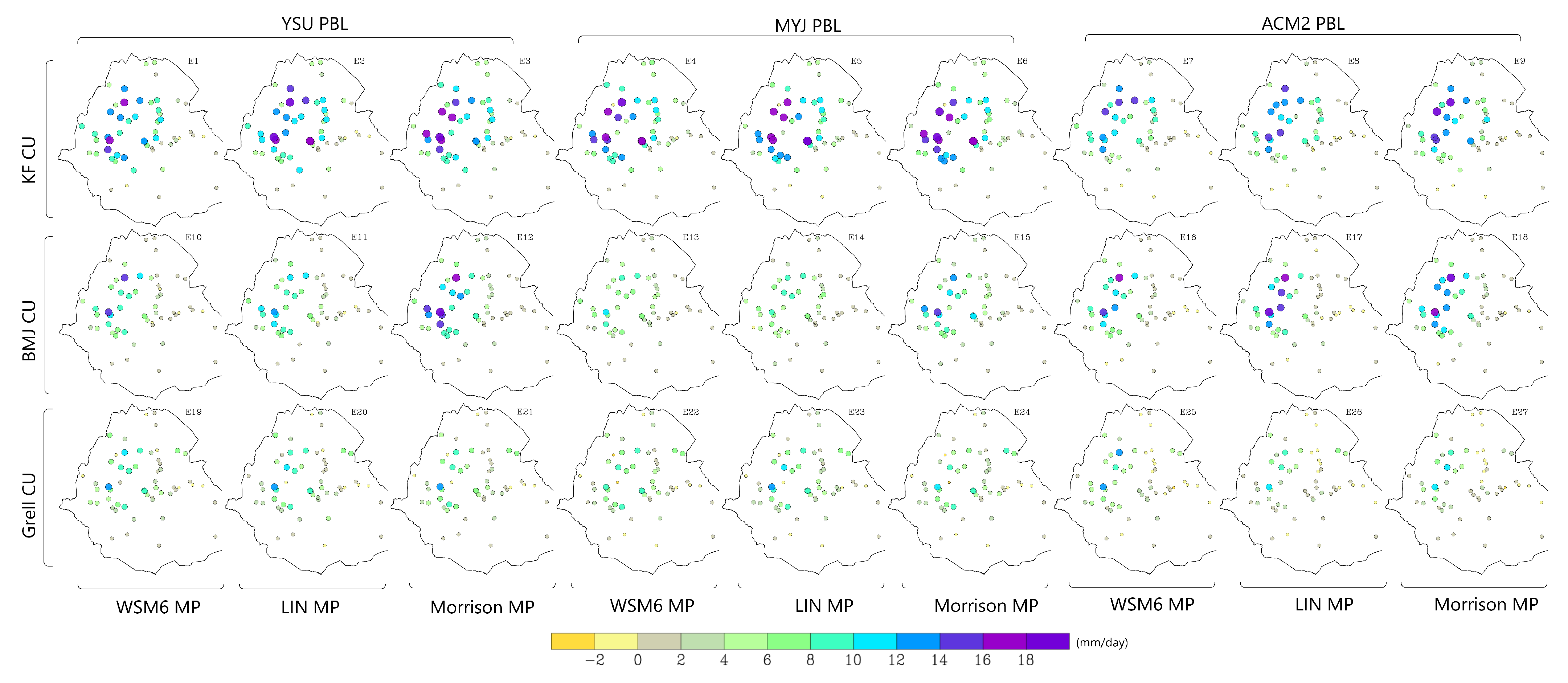

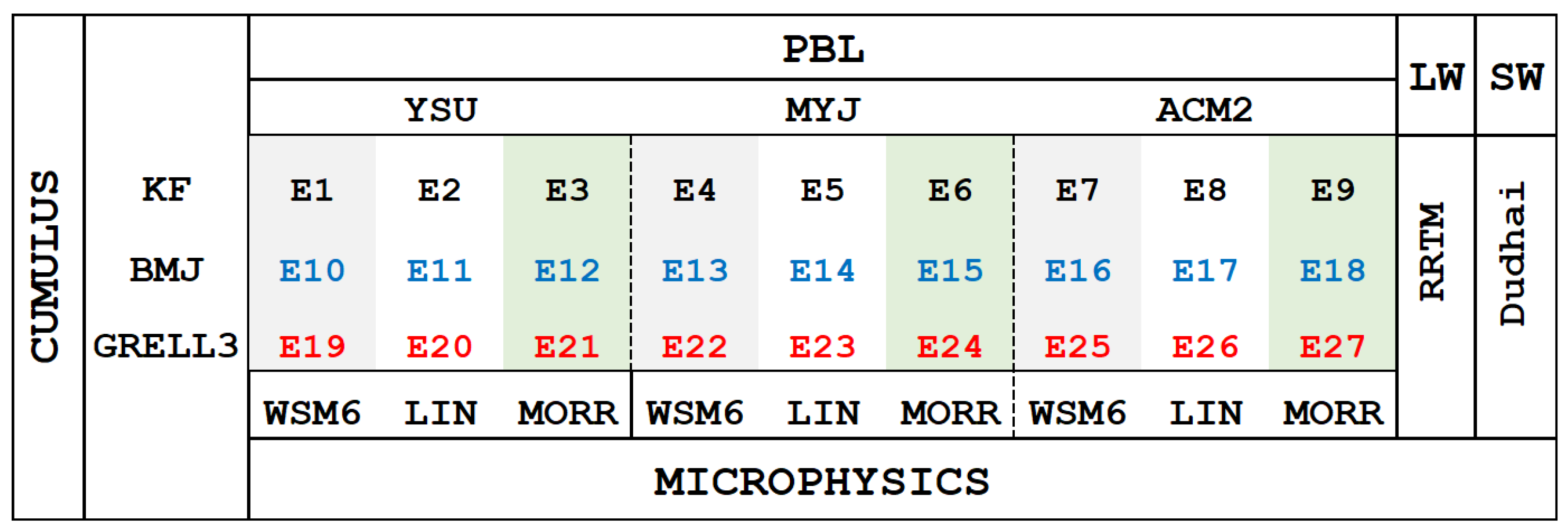

2.4. Experimental Setup

2.5. Evaluation Statistics

3. Results and Discussion

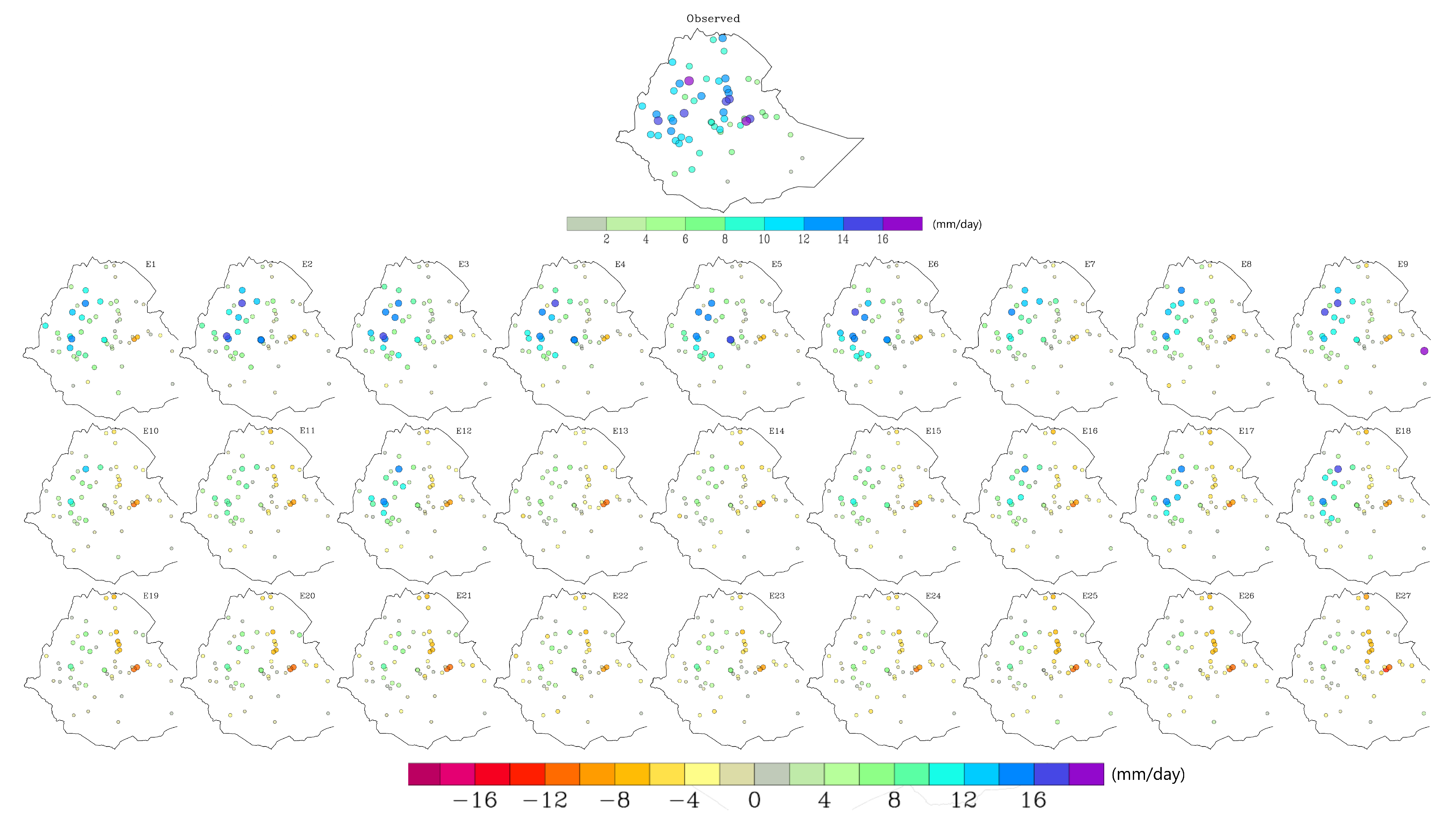

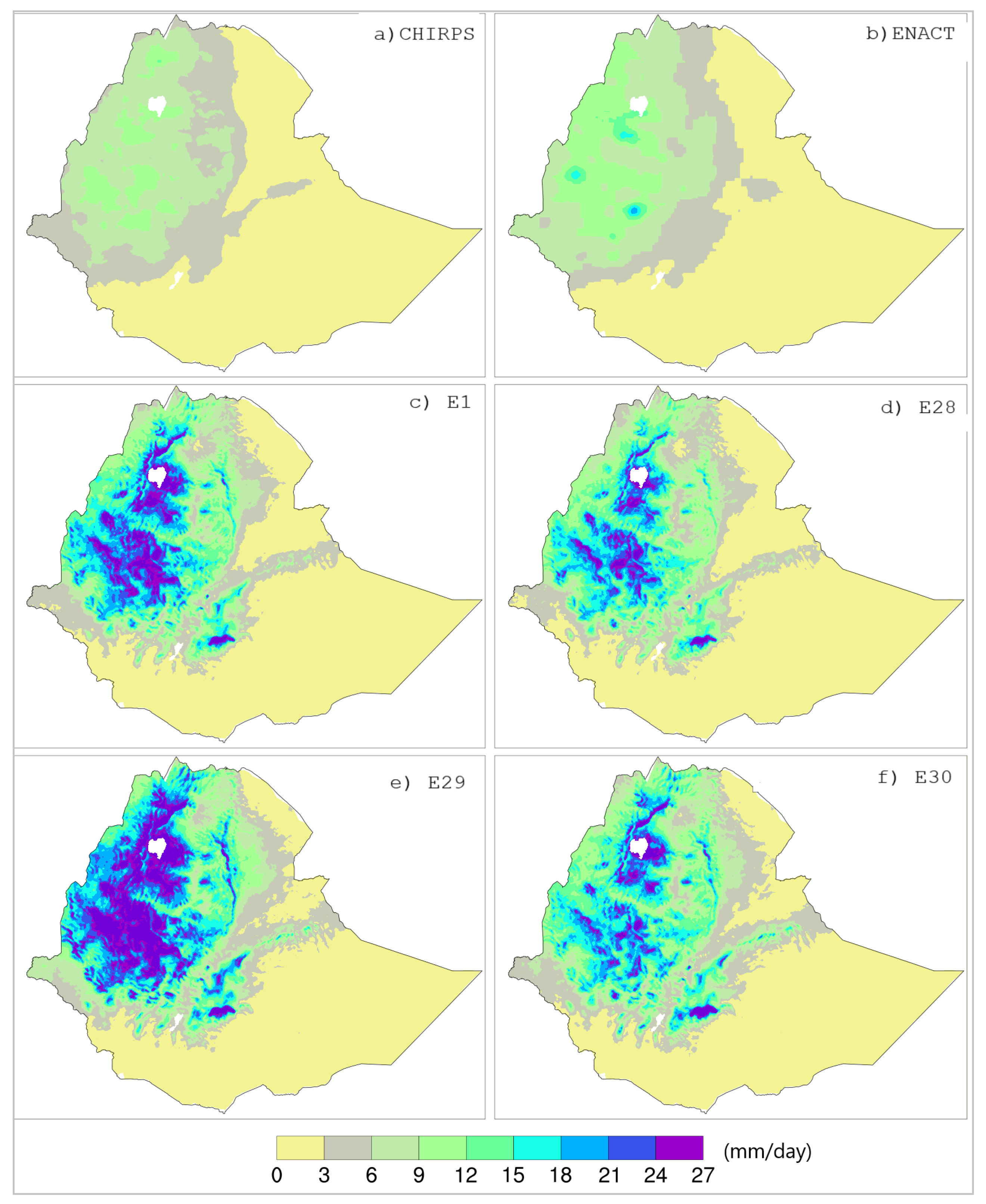

3.1. Mean Seasonal Precipitation

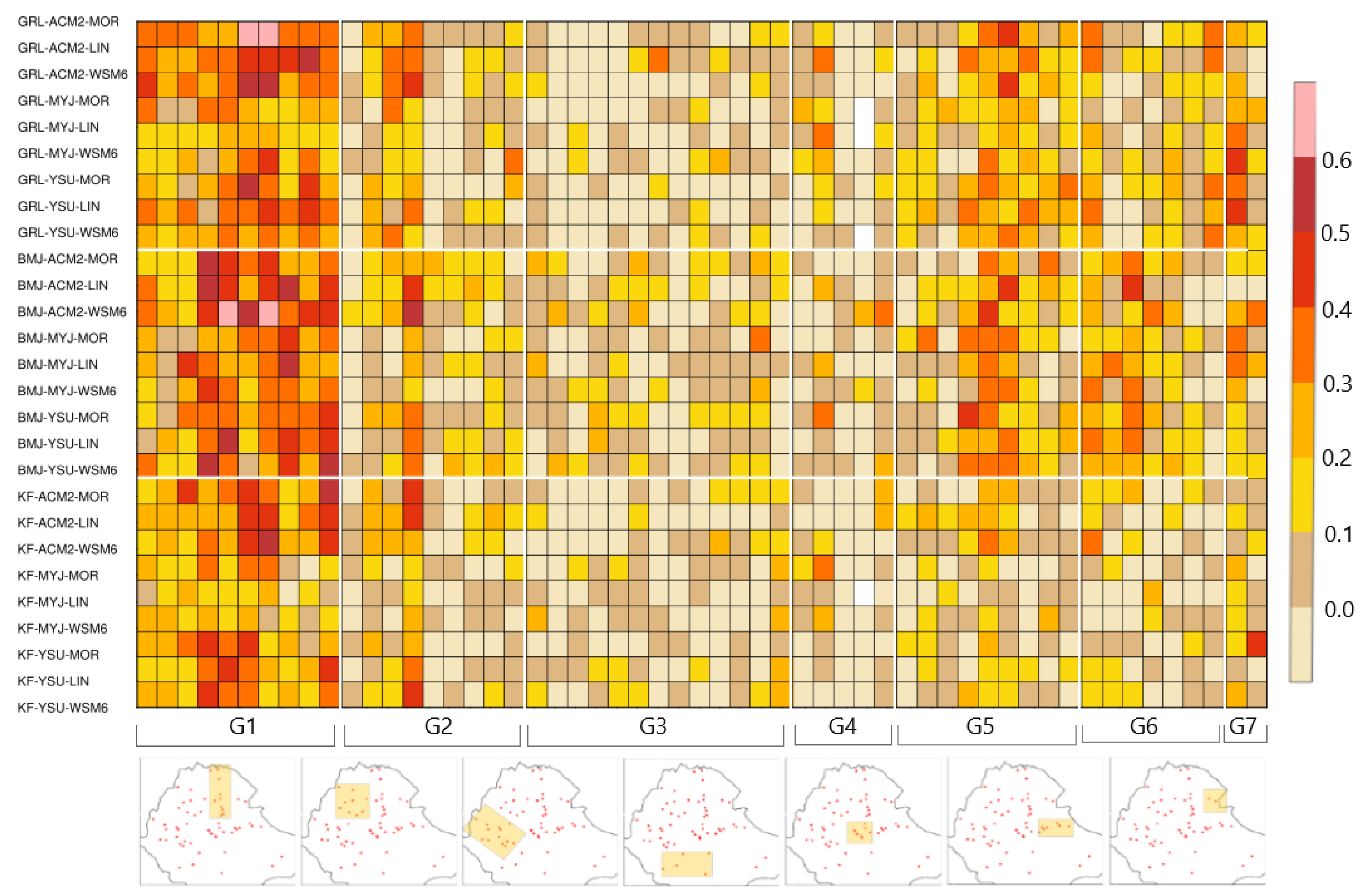

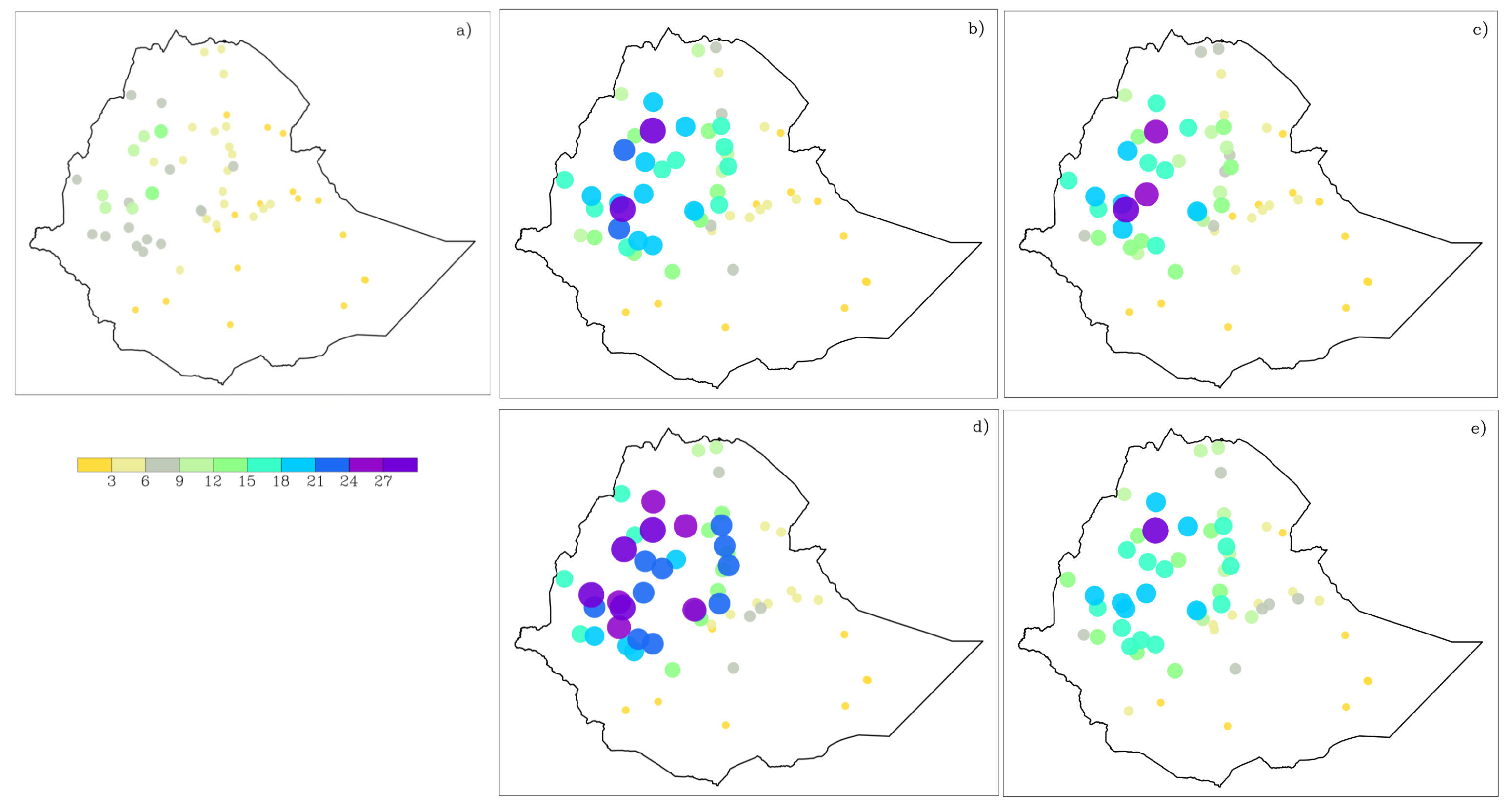

3.2. Rainy Day Frequency and Intensity

3.3. Radiation Schemes

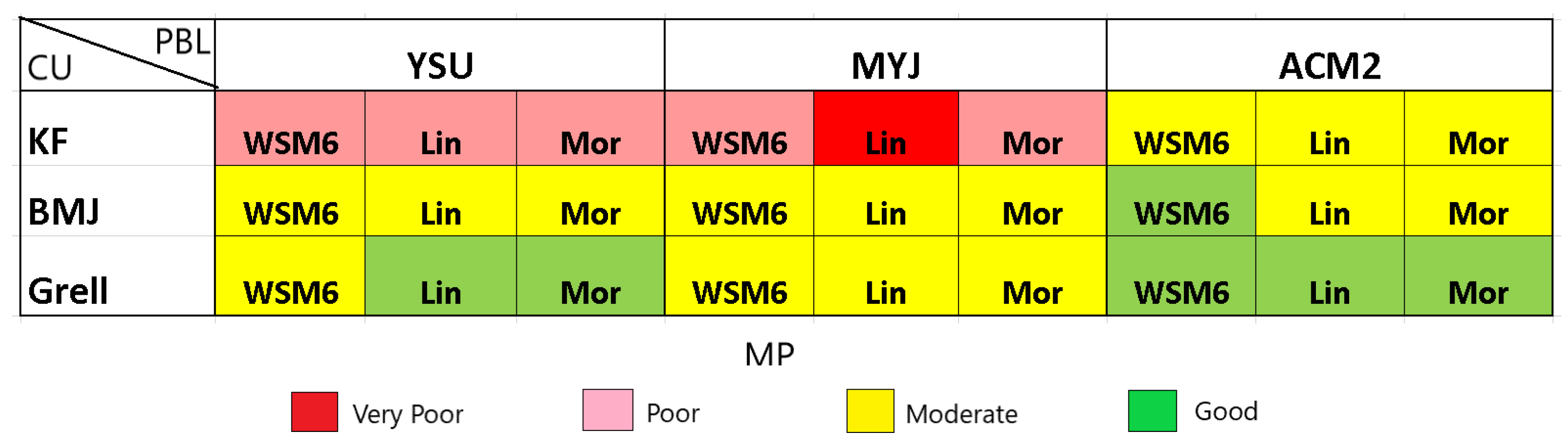

3.4. Ranking

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Columns 0–10 through 60–70 give the number of stations in each bias category (days).

| ID | 0–10 | 10–20 | 20–30 | 30–40 | 40–50 | 50–60 | 60–70 | Max. Bias (days) | Avg. Bias (days) |
|---|---|---|---|---|---|---|---|---|---|
| E1 | 5 | 12 | 13 | 10 | 7 | 0 | 0 | 46 | 22.9 |
| E2 | 6 | 13 | 15 | 7 | 9 | 0 | 0 | 47 | 23.7 |
| E3 | 4 | 17 | 10 | 10 | 8 | 0 | 0 | 46 | 22.7 |
| E4 | 4 | 10 | 10 | 8 | 10 | 3 | 0 | 53 | 26.7 |
| E5 | 4 | 9 | 12 | 7 | 13 | 1 | 0 | 52 | 27.3 |
| E6 | 5 | 12 | 12 | 6 | 11 | 3 | 0 | 54 | 26.9 |
| E7 | 12 | 19 | 11 | 6 | 1 | 0 | 0 | 44 | 16 |
| E8 | 13 | 14 | 13 | 5 | 1 | 0 | 0 | 42 | 15.5 |
| E9 | 7 | 15 | 14 | 8 | 2 | 0 | 0 | 44 | 18.9 |
| E10 | 3 | 14 | 12 | 14 | 4 | 1 | 0 | 53 | 25.6 |
| E11 | 4 | 13 | 14 | 17 | 3 | 1 | 0 | 52 | 26.1 |
| E12 | 5 | 9 | 18 | 14 | 4 | 2 | 0 | 56 | 27.1 |
| E13 | 4 | 12 | 19 | 10 | 6 | 2 | 0 | 52 | 27.1 |
| E14 | 4 | 9 | 15 | 16 | 5 | 2 | 0 | 53 | 27.8 |
| E15 | 3 | 12 | 13 | 11 | 8 | 2 | 0 | 56 | 29.2 |
| E16 | 6 | 18 | 17 | 6 | 2 | 0 | 0 | 47 | 19.3 |
| E17 | 10 | 15 | 18 | 5 | 2 | 0 | 0 | 43 | 18.8 |
| E18 | 6 | 14 | 16 | 12 | 4 | 0 | 0 | 47 | 23.7 |
| E19 | 6 | 7 | 10 | 14 | 8 | 5 | 1 | 62 | 29.2 |
| E20 | 4 | 9 | 10 | 14 | 9 | 4 | 0 | 59 | 29.2 |
| E21 | 4 | 10 | 9 | 12 | 10 | 3 | 1 | 61 | 29.4 |
| E22 | 3 | 10 | 10 | 8 | 12 | 4 | 2 | 68 | 30.9 |
| E23 | 3 | 7 | 11 | 8 | 11 | 5 | 2 | 65 | 31.3 |
| E24 | 4 | 9 | 11 | 10 | 11 | 4 | 2 | 69 | 30.9 |
| E25 | 7 | 9 | 16 | 13 | 5 | 2 | 0 | 56 | 25.4 |
| E26 | 3 | 14 | 12 | 14 | 6 | 2 | 0 | 56 | 25.3 |
| E27 | 4 | 13 | 14 | 14 | 4 | 3 | 0 | 60 | 26.6 |
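The category counts in the table above can be produced by binning one bias value per station into 10-day classes and then taking the maximum and mean over stations. The following is a minimal sketch under two assumptions the table does not state: the biases are absolute values in days, and each class is left-closed ([0, 10), [10, 20), and so on), with exactly 70 days falling into the last class. The function name and the station values in the usage line are hypothetical.

```python
from statistics import mean

# Class edges in days, matching the columns 0–10, 10–20, ..., 60–70.
EDGES = list(range(0, 80, 10))  # [0, 10, 20, 30, 40, 50, 60, 70]


def summarize_station_bias(biases):
    """Count stations per 10-day bias class and return (counts, max, mean).

    Assumes one absolute bias (days) per station. Classes are left-closed;
    a bias of exactly 70 days is placed in the last class (60–70).
    """
    counts = [0] * (len(EDGES) - 1)
    for b in biases:
        if not EDGES[0] <= b <= EDGES[-1]:
            raise ValueError(f"bias {b} outside {EDGES[0]}-{EDGES[-1]} days")
        idx = min(int(b // 10), len(counts) - 1)  # clamp 70 into the last class
        counts[idx] += 1
    return counts, max(biases), round(mean(biases), 1)


# Hypothetical per-station biases (days) for a single experiment.
counts, max_bias, avg_bias = summarize_station_bias([3, 12, 18, 25, 41, 46])
print(counts, max_bias, avg_bias)  # [1, 2, 1, 0, 2, 0, 0] 46 24.2
```

Left-closed classes are one reasonable convention; if the original analysis used right-closed classes, stations with biases of exactly 10, 20, ... days would move one column to the left.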

| Exp. ID | Mean Bias, Avg. (mm) | Mean Bias, Min. (mm) | Mean Bias, Max. (mm) | MAB (mm) | No. of Stations, MAB < 5 | No. of Stations, MAB > 10 | MAB/Option |
|---|---|---|---|---|---|---|---|
| E1 | 4.1 | −8.3 | 15.1 | 5.2 | 24 | 10 | KF-only = 5.0 |
| E2 | 4.1 | −8 | 17.1 | 5.4 | 24 | 10 | BMJ-only = 4.0 |
| E3 | 4.3 | −7 | 16.5 | 5.4 | 24 | 9 | Grell-only = 3.4 |
| E4 | 4 | −8 | 17.3 | 5.2 | 22 | 11 | YSU-only = 4.3 |
| E5 | 4 | −7.6 | 16.8 | 5.1 | 23 | 9 | MYJ-only = 3.9 |
| E6 | 4.2 | −7.3 | 17.5 | 5.3 | 22 | 13 | ACM2-only = 4.2 |
| E7 | 3.2 | −7.6 | 14.7 | 4.4 | 21 | 6 | WSM6-only = 4.1 |
| E8 | 2.9 | −8.3 | 15.5 | 4.7 | 20 | 6 | LIN-only = 4.1 |
| E9 | 3.1 | −8.9 | 17.9 | 4.6 | 18 | 10 | MOR-only = 4.3 |
| E10 | 0.6 | −10.4 | 12 | 3.8 | 16 | 3 | |
| E11 | 0.8 | −9.9 | 9.4 | 4.2 | 18 | 0 | |
| E12 | 2 | −9.9 | 15 | 4.5 | 21 | 6 | |
| E13 | 0.2 | −11 | 7.1 | 3.1 | 10 | 2 | |
| E14 | 0 | −10.7 | 6.9 | 3 | 10 | 1 | |
| E15 | 1.2 | −10.1 | 10.9 | 3.5 | 14 | 2 | |
| E16 | 1.1 | −10.2 | 14.8 | 4.4 | 17 | 4 | |
| E17 | 1.1 | −11.6 | 15.7 | 4.8 | 18 | 6 | |
| E18 | 1.9 | −9.5 | 17.9 | 4.8 | 22 | 6 | |
| E19 | −0.2 | −10.2 | 8.9 | 3.4 | 18 | 2 | |
| E20 | −0.3 | −10.3 | 9.4 | 3.6 | 18 | 2 | |
| E21 | −0.6 | −11.3 | 8.2 | 3.6 | 19 | 2 | |
| E22 | −0.6 | −9.8 | 7.6 | 3.3 | 14 | 0 | |
| E23 | −0.4 | −9.3 | 8.8 | 3.2 | 13 | 0 | |
| E24 | −0.9 | −10.1 | 6.9 | 3.3 | 12 | 1 | |
| E25 | −1 | −11.7 | 9.6 | 3.7 | 13 | 2 | |
| E26 | −1.8 | −11.7 | 8.1 | 3.3 | 14 | 2 | |
| E27 | −1.8 | −12.1 | 7.9 | 3.3 | 15 | 2 | |
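The MAB/Option column is consistent with averaging the MAB of the nine experiments that share a given scheme (for example, KF-only is the mean over E1–E9). The sketch below reproduces that column from the MAB values above. The mapping of experiment IDs to scheme combinations is taken from the Description column of the ranking table (cumulus scheme varying slowest, microphysics fastest); the variable and function names are illustrative only.

```python
from itertools import product
from statistics import mean

CU = ["KF", "BMJ", "Grell"]    # cumulus schemes
PBL = ["YSU", "MYJ", "ACM2"]   # planetary boundary layer schemes
MP = ["WSM6", "LIN", "MOR"]    # microphysics schemes

# E1..E27 in the order given by the ranking table descriptions,
# e.g. E1 = KF-YSU-WSM6, E2 = KF-YSU-LIN, ..., E27 = Grell-ACM2-MOR.
DESIGN = {f"E{i}": combo for i, combo in enumerate(product(CU, PBL, MP), start=1)}

# MAB (mm) for E1..E27, copied from the MAB column of the table above.
MAB = [5.2, 5.4, 5.4, 5.2, 5.1, 5.3, 4.4, 4.7, 4.6,
       3.8, 4.2, 4.5, 3.1, 3.0, 3.5, 4.4, 4.8, 4.8,
       3.4, 3.6, 3.6, 3.3, 3.2, 3.3, 3.7, 3.3, 3.3]
MAB_BY_EXP = {f"E{i}": value for i, value in enumerate(MAB, start=1)}


def mab_per_option():
    """Average MAB over all experiments that use each scheme (9 per scheme)."""
    groups = {}
    for exp_id, schemes in DESIGN.items():
        for scheme in schemes:
            groups.setdefault(scheme, []).append(MAB_BY_EXP[exp_id])
    return {scheme: round(mean(values), 1) for scheme, values in groups.items()}


print(mab_per_option())
```

Because the design is a full 3 × 3 × 3 factorial, each scheme's average is taken over the same number of runs, so the per-option means can be compared directly.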


| WRF Run | CU | PBL | MP | LW | SW |
|---|---|---|---|---|---|
| E28 | KF | YSU | WSM6 | RRTMG | Dudhia |
| E29 | KF | YSU | WSM6 | RRTM | RRTMG |
| E30 | KF | YSU | WSM6 | RRTMG | RRTMG |
| E31 | KF | MYJ | LIN | RRTMG | RRTMG |
| E32 | KF | MYJ | MOR | RRTMG | RRTMG |
| E33 | KF | MYJ | WSM6 | RRTMG | RRTMG |
| E34 | KF | MYJ | LIN | RRTMG | RRTMG |
| E35 | KF | MYJ | MOR | RRTMG | RRTMG |
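The radiation runs above differ only in their physics switches, which WRF reads from the `&physics` group of `namelist.input`. The sketch below renders those switches for E28–E30, assuming the standard WRF ARW option codes: `cu_physics` 1 (Kain–Fritsch); `bl_pbl_physics` 1 (YSU) and 2 (MYJ); `mp_physics` 2 (Lin), 6 (WSM6) and 10 (Morrison); `ra_lw_physics` 1 (RRTM) and 4 (RRTMG); `ra_sw_physics` 1 (Dudhia) and 4 (RRTMG). The WRF version is not stated here, so the codes should be checked against that version's documentation. The helper names are hypothetical, and the surface-layer option some PBL schemes require (for example `sf_sfclay_physics = 2` with MYJ) is left out because the table does not list it.

```python
# Assumed WRF ARW namelist codes for the schemes named in the table above.
# Dict order matters: it matches the column order CU, PBL, MP, LW, SW.
CODES = {
    "cu_physics": {"KF": 1},
    "bl_pbl_physics": {"YSU": 1, "MYJ": 2},
    "mp_physics": {"LIN": 2, "WSM6": 6, "MOR": 10},
    "ra_lw_physics": {"RRTM": 1, "RRTMG": 4},
    "ra_sw_physics": {"Dudhia": 1, "RRTMG": 4},
}

# Scheme choices (CU, PBL, MP, LW, SW) for the radiation runs, from the table.
RUNS = {
    "E28": ("KF", "YSU", "WSM6", "RRTMG", "Dudhia"),
    "E29": ("KF", "YSU", "WSM6", "RRTM", "RRTMG"),
    "E30": ("KF", "YSU", "WSM6", "RRTMG", "RRTMG"),
}


def physics_block(run_id, max_dom=1):
    """Return a &physics namelist fragment, repeating each value per domain."""
    lines = ["&physics"]
    for (key, table), scheme in zip(CODES.items(), RUNS[run_id]):
        values = ", ".join([str(table[scheme])] * max_dom)
        lines.append(f" {key:<15}= {values},")
    lines.append("/")
    return "\n".join(lines)


print(physics_block("E28"))
```

Generating the fragments from a single lookup table keeps the experiment matrix and the namelists in sync; a typo in a hand-edited namelist is otherwise easy to miss when only one switch differs between runs.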

| Physics Option | Parameterization Scheme | Mean Bias | Mean Bias (ACM2-Only) | Bias Range |
|---|---|---|---|---|
| CU | KF | 24.8 | 18.2 | 16.6–30.8 |
| CU | BMJ | 26.3 | 21.1 | 18.8–31.0 |
| CU | Grell | 31.1 | 25.8 | 25.4–35.6 |
| PBL | YSU | 28.9 | - | 25.7–32.7 |
| PBL | MYJ | 31.6 | - | 28.4–35.6 |
| PBL | ACM2 | 21.7 | - | 16.6–26.0 |
| MP | WSM6 | 27.0 | 21.0 | 17.3–35.0 |
| MP | LIN | 27.0 | 20.3 | 16.6–35.6 |
| MP | MOR | 28.3 | 23.8 | 20.8–34.7 |

| Rank | ID | Description | MAE_stn | MAE_grd | ERD | R | PCC | Agg. Score |
|---|---|---|---|---|---|---|---|---|
| 1 | E26 | Grell-ACM2-LIN | 0.00 | 0.00 | 0.63 | 0.15 | 0.28 | 1.06 |
| 2 | E27 | Grell-ACM2-MOR | 0.00 | 0.00 | 0.73 | 0.16 | 0.30 | 1.19 |
| 3 | E25 | Grell-ACM2-WSM6 | 0.14 | 0.11 | 0.65 | 0.31 | 0.44 | 1.65 |
| 4 | E16 | BMJ-ACM2-WSM6 | 0.33 | 0.29 | 0.28 | 0.00 | 0.84 | 1.74 |
| 5 | E21 | Grell-YSU-MOR | 0.20 | 0.31 | 0.89 | 0.36 | 0.05 | 1.81 |
| 6 | E20 | Grell-YSU-LIN | 0.24 | 0.39 | 0.87 | 0.33 | 0.10 | 1.93 |
| 7 | E22 | Grell-MYJ-WSM6 | 0.23 | 0.33 | 1.00 | 0.46 | 0.03 | 2.04 |
| 8 | E14 | BMJ-MYJ-LIN | 0.27 | 0.26 | 0.79 | 0.39 | 0.33 | 2.04 |
| 9 | E24 | Grell-MYJ-MOR | 0.19 | 0.22 | 0.98 | 0.59 | 0.07 | 2.06 |
| 10 | E13 | BMJ-MYJ-WSM6 | 0.31 | 0.22 | 0.75 | 0.50 | 0.29 | 2.06 |
| 11 | E19 | Grell-YSU-WSM6 | 0.27 | 0.39 | 0.89 | 0.44 | 0.10 | 2.09 |
| 12 | E17 | BMJ-ACM2-LIN | 0.36 | 0.30 | 0.26 | 0.21 | 1.00 | 2.13 |
| 13 | E10 | BMJ-YSU-WSM6 | 0.31 | 0.37 | 0.67 | 0.11 | 0.67 | 2.13 |
| 14 | E15 | BMJ-MYJ-MOR | 0.43 | 0.45 | 0.88 | 0.41 | 0.04 | 2.20 |
| 15 | E23 | Grell-MYJ-LIN | 0.27 | 0.34 | 1.00 | 0.61 | 0.00 | 2.21 |
| 16 | E7 | KF-ACM2-WSM6 | 0.59 | 0.45 | 0.01 | 0.49 | 0.75 | 2.29 |
| 17 | E8 | KF-ACM2-LIN | 0.57 | 0.42 | 0.00 | 0.47 | 0.91 | 2.38 |
| 18 | E18 | BMJ-ACM2-MOR | 0.48 | 0.40 | 0.54 | 0.26 | 0.75 | 2.43 |
| 19 | E12 | BMJ-YSU-MOR | 0.52 | 0.56 | 0.77 | 0.23 | 0.40 | 2.47 |
| 20 | E11 | BMJ-YSU-LIN | 0.38 | 0.38 | 0.70 | 0.32 | 0.71 | 2.49 |
| 21 | E9 | KF-ACM2-MOR | 0.63 | 0.47 | 0.20 | 0.56 | 0.82 | 2.69 |
| 22 | E1 | KF-YSU-WSM6 | 0.83 | 0.79 | 0.47 | 0.56 | 0.68 | 3.33 |
| 23 | E3 | KF-YSU-MOR | 0.92 | 0.85 | 0.45 | 0.71 | 0.43 | 3.36 |
| 24 | E2 | KF-YSU-LIN | 0.90 | 0.88 | 0.52 | 0.64 | 0.66 | 3.61 |
| 25 | E4 | KF-MYJ-WSM6 | 0.98 | 0.93 | 0.71 | 0.81 | 0.35 | 3.78 |
| 26 | E6 | KF-MYJ-MOR | 1.00 | 0.97 | 0.72 | 0.78 | 0.37 | 3.83 |
| 27 | E5 | KF-MYJ-LIN | 1.00 | 1.00 | 0.77 | 1.00 | 0.38 | 4.15 |
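In the ranking table, every metric column spans exactly 0.00 to 1.00, and the aggregate score equals the sum of the five normalized columns to within rounding (for E26, 0.00 + 0.00 + 0.63 + 0.15 + 0.28 = 1.06). Experiments are then ranked by ascending score. This pattern suggests min-max scaling in which 0 marks the best experiment and 1 the worst. The sketch below implements that reading. Three things are assumptions: the raw metric values, which are hypothetical; the choice to treat R and PCC as higher-is-better correlations; and the choice to treat the MAE columns and ERD as lower-is-better errors.

```python
def minmax_normalize(values, higher_is_better=False):
    """Rescale values to [0, 1] so that 0 is the best and 1 the worst."""
    lo, hi = min(values), max(values)
    if hi == lo:  # all experiments tie on this metric
        return [0.0 for _ in values]
    if higher_is_better:  # e.g. correlations: the largest value scores 0
        return [(hi - v) / (hi - lo) for v in values]
    return [(v - lo) / (hi - lo) for v in values]  # errors: smallest scores 0


def rank_experiments(metrics, higher_is_better):
    """Sum normalized metrics per experiment and sort best (lowest) first.

    metrics: {metric_name: {exp_id: raw_value}}
    higher_is_better: {metric_name: bool}
    """
    exp_ids = sorted(next(iter(metrics.values())))
    totals = {e: 0.0 for e in exp_ids}
    for name, by_exp in metrics.items():
        scores = minmax_normalize([by_exp[e] for e in exp_ids], higher_is_better[name])
        for e, s in zip(exp_ids, scores):
            totals[e] += s
    return sorted(totals.items(), key=lambda item: item[1])


# Hypothetical raw metrics for three hypothetical experiments X1-X3.
ranking = rank_experiments(
    {"MAE": {"X1": 3.0, "X2": 4.0, "X3": 5.0},
     "R": {"X1": 0.7, "X2": 0.8, "X3": 0.4}},
    {"MAE": False, "R": True},
)
print([(e, round(s, 2)) for e, s in ranking])  # [('X1', 0.25), ('X2', 0.5), ('X3', 2.0)]

# The reported E26 aggregate is the sum of its normalized columns.
print(round(sum([0.00, 0.00, 0.63, 0.15, 0.28]), 2))  # 1.06
```

Equal weighting of the five metrics is the simplest choice. It also means a metric that separates the experiments only weakly still contributes a full 0 to 1 range once min-max scaled, which is worth keeping in mind when reading close ranks such as E22 and E14.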

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shiferaw, A.; Tadesse, T.; Rowe, C.; Oglesby, R. Weather Research and Forecasting Model (WRF) Sensitivity to Choice of Parameterization Options over Ethiopia. Atmosphere 2024, 15, 974. https://doi.org/10.3390/atmos15080974