An Application of Fish Detection Based on Eye Search with Artificial Vision and Artificial Neural Networks

Abstract

1. Introduction

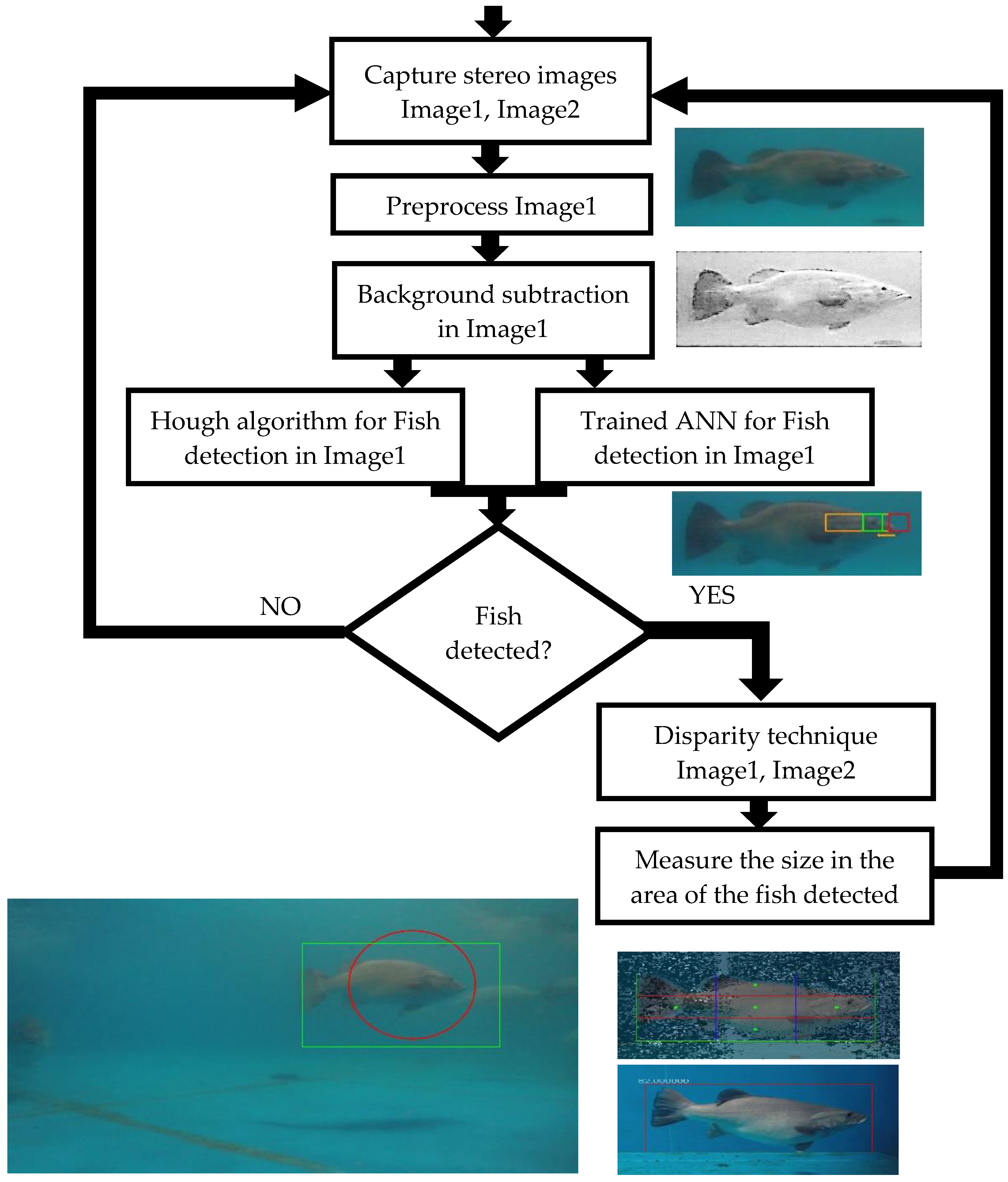

2. Materials and Methods

2.1. Image Filtering

2.2. Background Subtraction

2.3. Hough Eye Detection

2.4. Fish Detection Using a Feed-Forward Artificial Neural Network

3. Size Estimation

4. Results

- Intel Core i7-3770 CPU (4 cores, 8 threads)

- NVIDIA GT 640 graphics card

- 16 GB of 1600 MHz DDR3 RAM

- WDC WD10EZRX Hard Disk

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B


| Metric | European Perch | Brown Trout | Atlantic Wreckfish |
|---|---|---|---|
| Avg. Measured Size (cm) | 8.8 | 6.5 | 92.6 |
| Std. Dev. Measured Size (cm) | 0.8 | 0.8 | 8.4 |
| Avg. Absolute Error (cm) | 0.6 | 0.9 | 6.5 |
| Std. Dev. Absolute Error (cm) | 0.6 | 0.7 | 5.2 |
| Avg. Relative Error | 0.07 | 0.12 | 0.07 |
| Std. Dev. Relative Error | 0.09 | 0.09 | 0.06 |
| True Positives | 620 | 600 | 182 |
| Detected False Positives | 44 | 31 | 47 |
| Precision | 0.93 | 0.95 | 0.74 |
| False Positive Ratio | 0.07 | 0.05 | 0.26 |
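The last two rows of the table can plausibly be derived from the two count rows. The sketch below assumes the standard definitions, precision = TP / (TP + FP) and false positive ratio = FP / (TP + FP). The source does not state its formulas, so these definitions and the function names are illustrative assumptions, not the authors' code.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of detections that are correct: TP / (TP + FP) (assumed definition)."""
    total = tp + fp
    if total == 0:
        raise ValueError("precision is undefined when there are no detections")
    return tp / total


def false_positive_ratio(tp: int, fp: int) -> float:
    """Fraction of detections that are wrong: FP / (TP + FP) (assumed definition)."""
    total = tp + fp
    if total == 0:
        raise ValueError("ratio is undefined when there are no detections")
    return fp / total


# (true positives, detected false positives) copied from the table
counts = {
    "European Perch": (620, 44),
    "Brown Trout": (600, 31),
}

for species, (tp, fp) in counts.items():
    print(
        f"{species}: precision={precision(tp, fp):.2f}, "
        f"false positive ratio={false_positive_ratio(tp, fp):.2f}"
    )
```

Under these assumed definitions the European perch and brown trout columns are reproduced (0.93 and 0.07, 0.95 and 0.05). The Atlantic wreckfish counts (182 and 47) give roughly 0.79 and 0.21 instead of the tabulated 0.74 and 0.26, so that column may rest on a different counting basis.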

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rico-Díaz, Á.J.; Rabuñal, J.R.; Gestal, M.; Mures, O.A.; Puertas, J. An Application of Fish Detection Based on Eye Search with Artificial Vision and Artificial Neural Networks. Water 2020, 12, 3013. https://doi.org/10.3390/w12113013
