Critical Temperature Prediction of Superconductors Based on Atomic Vectors and Deep Learning

Abstract

1. Introduction

- (1) Extensive computational tests on three standard benchmark datasets demonstrate the strong performance of our proposed HNN model.

- (2) Beyond existing representations such as Magpie and one-hot encoding, the atomic vector method provides a better way to characterize superconductors, and it can also be used to characterize other materials.

2. Materials and Methods

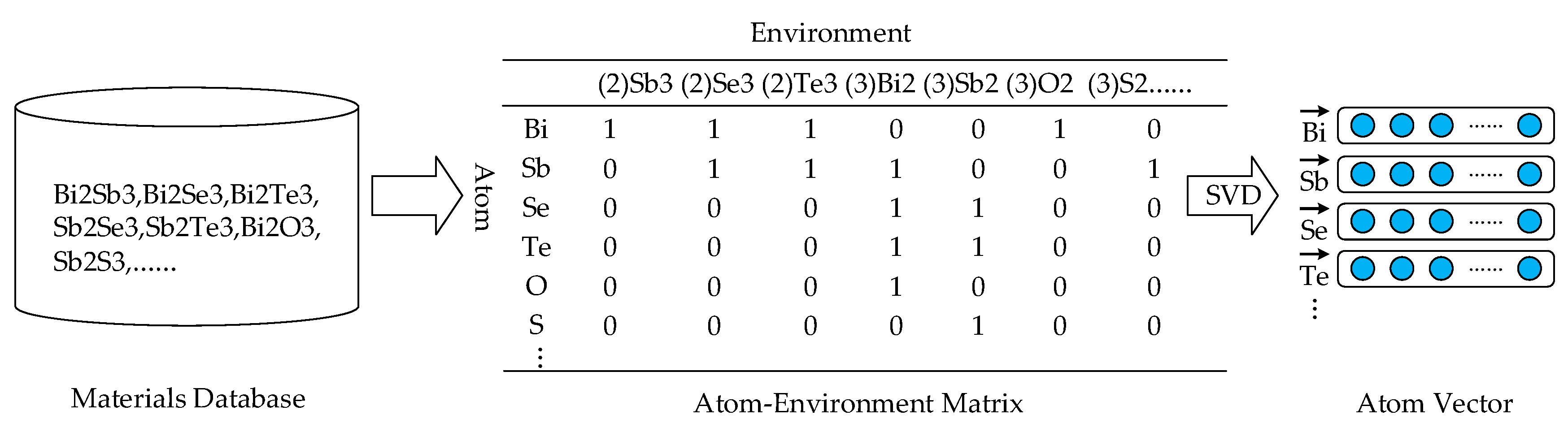

2.1. Atomic Vector Generation Methods

2.2. Dataset Selection and Material Characterization

2.3. Atomic Hierarchical Feature Extraction Model

2.3.1. Inter-Atomic Short-Dependence Feature Extraction Method Based on CNN

2.3.2. Inter-Atomic Long-Dependence Feature Extraction Method Based on LSTM

2.3.3. Architecture of HNN Model

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Müller, K.A.; Bednorz, J.G. The Discovery of a Class of High-Temperature Superconductors. Science 1987, 237, 1133–1139.

- Suhl, H.; Matthias, B.; Walker, L. Bardeen-Cooper-Schrieffer theory of superconductivity in the case of overlapping bands. Phys. Rev. Lett. 1959, 3, 552.

- Cooper, J.; Chu, C.; Zhou, L.; Dunn, B.; Grüner, G. Determination of the magnetic field penetration depth in superconducting yttrium barium copper oxide: Deviations from the Bardeen-Cooper-Schrieffer laws. Phys. Rev. B 1988, 37, 638.

- Amoretti, A.; Areán, D.; Goutéraux, B.; Musso, D. DC resistivity at holographic charge density wave quantum critical points. arXiv 2017, arXiv:1712.07994.

- Szeftel, J.; Sandeau, N.; Khater, A. Comparative Study of the Meissner and Skin Effects in Superconductors. Prog. Electromagn. Res. M 2018, 69, 69–76.

- Goldman, A.M.; Kreisman, P. Meissner effect and vortex penetration in Josephson junctions. Phys. Rev. 1967, 164, 544.

- Orignac, E.; Giamarchi, T. Meissner effect in a bosonic ladder. Phys. Rev. B 2001, 64, 144515.

- Jing, W. Gravitational Higgs Mechanism in Inspiraling Scalarized NS-WD Binary. Int. J. Astron. Astrophys. 2017, 7, 202–212.

- Kamihara, Y.; Watanabe, T.; Hirano, M.; Hosono, H. Iron-based layered superconductor La[O1−xFx]FeAs (x = 0.05–0.12) with Tc = 26 K. J. Am. Chem. Soc. 2008, 130, 3296–3297.

- Stewart, G. Superconductivity in iron compounds. Rev. Mod. Phys. 2011, 83, 1589.

- Bonn, D. Are high-temperature superconductors exotic? Nat. Phys. 2006, 2, 159–168.

- Kalidindi, S.R.; Graef, M.D. Materials Data Science: Current Status and Future Outlook. Ann. Rev. Mater. Sci. 2015, 45, 171–193.

- Curtarolo, S.; Hart, G.L.W.; Nardelli, M.B.; Mingo, N.; Sanvito, S.; Levy, O. The high-throughput highway to computational materials design. Nat. Mater. 2013, 12, 191–201.

- Setyawan, W.; Gaume, R.M.; Lam, S.; Feigelson, R.S.; Curtarolo, S. High-Throughput Combinatorial Database of Electronic Band Structures for Inorganic Scintillator Materials. ACS Comb. Sci. 2011, 13, 382–390.

- Perdew, J.P.; Burke, K.; Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 1996, 77, 3865–3868.

- Yang, J.; Li, S.; Gao, Z.; Wang, Z.; Liu, W. Real-Time Recognition Method for 0.8 cm Darning Needles and KR22 Bearings Based on Convolution Neural Networks and Data Increase. Appl. Sci. 2018, 8, 1857.

- Yang, J.; Li, S.; Wang, Z.; Yang, G. Real-time tiny part defect detection system in manufacturing using deep learning. IEEE Access 2019, 7, 89278–89291.

- Dukenbayev, K.; Korolkov, I.V.; Tishkevich, D.I.; Kozlovskiy, A.L.; Trukhanov, S.V.; Gorin, Y.G.; Shumskaya, E.E.; Kaniukov, E.Y.; Vinnik, D.A.; Zdorovets, M.V. Fe3O4 Nanoparticles for Complex Targeted Delivery and Boron Neutron Capture Therapy. Nanomaterials 2019, 9, 494.

- Tishkevich, D.I.; Grabchikov, S.S.; Lastovskii, S.B.; Trukhanov, S.V.; Zubar, T.I.; Vasin, D.S.; Trukhanov, A.V.; Kozlovskiy, A.L.; Zdorovets, M.M. Effect of the Synthesis Conditions and Microstructure for Highly Effective Electron Shields Production Based on Bi Coatings. ACS Appl. Energy Mater. 2018, 1, 1695–1702.

- Yang, G.; Chen, Z.; Li, Y.; Su, Z. Rapid Relocation Method for Mobile Robot Based on Improved ORB-SLAM2 Algorithm. Remote Sens. 2019, 11, 149.

- Li, X.; Dan, Y.; Dong, R.; Cao, Z.; Niu, C.; Song, Y.; Li, S.; Hu, J. Computational Screening of New Perovskite Materials Using Transfer Learning and Deep Learning. Appl. Sci. 2019, 9, 5510.

- Zhuo, Y.; Mansouri Tehrani, A.; Brgoch, J. Predicting the Band Gaps of Inorganic Solids by Machine Learning. J. Phys. Chem. Lett. 2018, 9, 1668–1673.

- Calfa, B.A.; Kitchin, J.R. Property prediction of crystalline solids from composition and crystal structure. AIChE J. 2016, 62, 2605–2613.

- Xie, T.; Grossman, J.C. Crystal Graph Convolutional Neural Networks for an Accurate and Interpretable Prediction of Material Properties. Phys. Rev. Lett. 2018, 120, 145301.

- Mansouri Tehrani, A.; Oliynyk, A.O.; Parry, M.; Rizvi, Z.; Couper, S.; Lin, F.; Miyagi, L.; Sparks, T.D.; Brgoch, J. Machine learning directed search for ultraincompressible, superhard materials. J. Am. Chem. Soc. 2018, 140, 9844–9853.

- Agrawal, A.; Choudhary, A. Perspective: Materials informatics and big data: Realization of the “fourth paradigm” of science in materials science. APL Mater. 2016, 4, 053208.

- Hey, A.J.; Tansley, S.; Tolle, K.M. The Fourth Paradigm: Data-Intensive Scientific Discovery; Microsoft Research: Redmond, WA, USA, 2009; Volume 1.

- Rajan, K. Materials informatics: The materials “gene” and big data. Ann. Rev. Mater. Res. 2015, 45, 153–169.

- Hill, J.; Mulholland, G.; Persson, K.; Seshadri, R.; Wolverton, C.; Meredig, B. Materials science with large-scale data and informatics: Unlocking new opportunities. MRS Bull. 2016, 41, 399–409.

- Ward, L.; Wolverton, C. Atomistic calculations and materials informatics: A review. Curr. Opin. Solid State Mater. Sci. 2017, 21, 167–176.

- Ramprasad, R.; Batra, R.; Pilania, G.; Mannodi-Kanakkithodi, A.; Kim, C. Machine learning in materials informatics: Recent applications and prospects. NPJ Comput. Mater. 2017, 3, 54.

- Pozun, Z.D.; Hansen, K.; Sheppard, D.; Rupp, M.; Müller, K.-R.; Henkelman, G. Optimizing transition states via kernel-based machine learning. J. Chem. Phys. 2012, 136, 174101.

- Montavon, G.; Rupp, M.; Gobre, V.; Vazquez-Mayagoitia, A.; Hansen, K.; Tkatchenko, A.; Müller, K.-R.; Von Lilienfeld, O.A. Machine learning of molecular electronic properties in chemical compound space. New J. Phys. 2013, 15, 095003.

- Agrawal, A.; Deshpande, P.D.; Cecen, A.; Basavarsu, G.P.; Choudhary, A.N.; Kalidindi, S.R. Exploration of data science techniques to predict fatigue strength of steel from composition and processing parameters. Integr. Mater. Manuf. Innov. 2014, 3, 90–108.

- Stanev, V.; Oses, C.; Kusne, A.G.; Rodriguez, E.; Paglione, J.; Curtarolo, S.; Takeuchi, I. Machine learning modeling of superconducting critical temperature. NPJ Comput. Mater. 2018, 4, 29.

- Ward, L.; Agrawal, A.; Choudhary, A.; Wolverton, C. A general-purpose machine learning framework for predicting properties of inorganic materials. NPJ Comput. Mater. 2016, 2, 16028.

- Hamidieh, K. A data-driven statistical model for predicting the critical temperature of a superconductor. Comput. Mater. Sci. 2018, 154, 346–354.

- Zhou, Q.; Tang, P.; Liu, S.; Pan, J.; Yan, Q.; Zhang, S.-C. Learning atoms for materials discovery. Proc. Natl. Acad. Sci. USA 2018, 115, E6411–E6417.

- Lu, J.; Wang, C.; Zhang, Y.; Wang, C.; Zhang, Y. Predicting Molecular Energy Using Force-Field Optimized Geometries and Atomic Vector Representations Learned from an Improved Deep Tensor Neural Network. J. Chem. Theory Comput. 2019, 15, 4113–4121.

- Jain, A.; Ong, S.P.; Hautier, G.; Wei, C.; Persson, K.A. Commentary: The Materials Project: A materials genome approach to accelerating materials innovation. APL Mater. 2013, 1, 011002.

- LeCun, Y.; Bengio, Y. Convolutional networks for images, speech, and time series. Handb. Brain Theory Neural Netw. 1995, 3361, 255–258.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Stateline, NV, USA, 3–8 December 2012; pp. 1097–1105.

- Su, Z.; Li, Y.; Yang, G. Dietary Composition Perception Algorithm Using Social Robot Audition for Mandarin Chinese. IEEE Access 2020, 8, 8768–8782.

- Zhu, X.; Liu, H.; Lei, Z.; Shi, H.; Yang, F.; Yi, D.; Qi, G.; Li, S.Z. Large-scale bisample learning on id versus spot face recognition. Int. J. Comput. Vis. 2019, 127, 684–700.

- Yu, Z.; Liu, F.; Liao, R.; Wang, Y.; Feng, H.; Zhu, X. Improvement of face recognition algorithm based on neural network. In Proceedings of the ICMTMA, Changsha, China, 10–11 February 2018; pp. 229–234.

- Yu, T.; Jin, H.; Nahrstedt, K. Mobile Devices based Eavesdropping of Handwriting. IEEE Trans. Mob. Comput. 2019, 1.

- Tsai, R.T.-H.; Chen, C.-H.; Wu, C.-K.; Hsiao, Y.-C.; Lee, H.-y. Using Deep-Q Network to Select Candidates from N-best Speech Recognition Hypotheses for Enhancing Dialogue State Tracking. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hove, UK, 12–17 May 2019; pp. 7375–7379.

- Kim, Y.; Gao, Y.; Ney, H. Effective Cross-lingual Transfer of Neural Machine Translation Models without Shared Vocabularies. arXiv 2019, arXiv:1905.05475.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Li, Y.; Yuan, Y. Convergence analysis of two-layer neural networks with relu activation. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 597–607.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2016, arXiv:1603.04467.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Suykens, J.A.; Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 1999, 9, 293–300.

- Quinlan, J.R. Simplifying decision trees. Int. J. Man Mach. Stud. 1987, 27, 221–234.

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- An, S.; Liu, W.; Venkatesh, S. Face recognition using kernel ridge regression. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.

- Wagner, H.M. Linear programming techniques for regression analysis. J. Am. Stat. Assoc. 1959, 54, 206–212.

- Jha, D.; Ward, L.; Paul, A.; Liao, W.-k.; Choudhary, A.; Wolverton, C.; Agrawal, A. Elemnet: Deep learning the chemistry of materials from only elemental composition. Sci. Rep. 2018, 8, 17593.

| Layer | Input Shape | Kernel Number | Kernel Size | Stride | Output Shape |
|---|---|---|---|---|---|
| Conv1 | [batch, 8, 21, 1] | 32 | (3, 21, 1) | (1, 1) | [batch, 6, 1, 32] |
| Conv2 | [batch, 6, 1, 32] | 64 | (3, 1, 32) | (1, 1) | [batch, 4, 1, 64] |
| Conv3 | [batch, 4, 1, 64] | 128 | (3, 1, 64) | (1, 1) | [batch, 2, 1, 128] |
| LSTM1 | [batch, 2, 1, 128] | 256 | - | - | [batch, 2, 1, 256] |
| LSTM2 | [batch, 2, 1, 256] | 256 | - | - | [batch, 1, 1, 256] |
| Reshape | [batch, 1, 1, 256] | - | - | - | [batch, 256] |
| Fc | [batch, 256] | - | - | - | [batch, 1] |
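The output shapes listed for the three convolutional layers are consistent with unpadded ("valid") convolutions at stride 1. The table does not state the padding mode, so that is an assumption here. The sketch below recomputes the shapes from the kernel sizes under that assumption; `conv_valid_output` and `trace_shapes` are illustrative names, not part of the original work.

```python
def conv_valid_output(in_hw, kernel_hw, stride_hw=(1, 1)):
    """Spatial output size of a convolution without padding ('valid')."""
    return tuple((i - k) // s + 1 for i, k, s in zip(in_hw, kernel_hw, stride_hw))


# (layer name, kernel height/width, number of kernels), taken from the table.
CONV_LAYERS = [
    ("Conv1", (3, 21), 32),
    ("Conv2", (3, 1), 64),
    ("Conv3", (3, 1), 128),
]


def trace_shapes(input_shape=(8, 21, 1)):
    """Return the (height, width, channels) shape after each conv layer."""
    h, w, _ = input_shape
    shapes = []
    for name, kernel_hw, n_kernels in CONV_LAYERS:
        h, w = conv_valid_output((h, w), kernel_hw)
        # The channel count after a conv layer equals its number of kernels.
        shapes.append((name, (h, w, n_kernels)))
    return shapes


for name, shape in trace_shapes():
    print(name, ("batch",) + shape)
```

Each 3-row kernel shortens the first axis by two (8 to 6 to 4 to 2), and the 21-wide kernel in Conv1 collapses the second axis to 1, which matches the Output Shape column.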

| Model | Batch Size | Learning Rate | Max Depth | Tree Number | Sampling Rate | Kernel | Criterion | Alpha | Gamma |
|---|---|---|---|---|---|---|---|---|---|
| HNN | 32 | 0.001 | - | - | - | - | - | - | - |
| SVM | - | - | - | - | - | RBF | - | 1 | 0.5 |
| RF | - | - | 15 | 500 | - | - | MSE | - | - |
| GBDT | - | 0.04 | 20 | 500 | 0.4 | - | MSE | - | - |
| KRR | - | - | - | - | - | Linear | - | 1 | 5 |
| DT | - | - | 15 | 1 | - | - | MSE | - | - |

| Model | CNN | LSTM | FNN | [35] | [37] | HNN |
|---|---|---|---|---|---|---|
| RMSE | 267.076 | 11.695 | 266.181 | - | - | 83.565 |
| MAE | 11.695 | 6.041 | 11.699 | - | 5.441 | 5.023 |
| R2 | 0.669 | 0.863 | 0.683 | 0.876 | 0.920 | 0.899 |
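The three rows of the comparison correspond to standard regression metrics. The section does not spell out their formulas, so the sketch below uses the textbook definitions in plain Python; the function names and the sample critical temperatures are hypothetical and are not taken from the paper's datasets.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: the square root of the MSE."""
    return math.sqrt(mse(y_true, y_pred))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical critical temperatures in kelvin, for illustration only.
tc_true = [10.0, 20.0, 30.0, 40.0]
tc_pred = [12.0, 18.0, 33.0, 39.0]

print("MAE :", mae(tc_true, tc_pred))
print("MSE :", mse(tc_true, tc_pred))
print("RMSE:", rmse(tc_true, tc_pred))
print("R2  :", r2(tc_true, tc_pred))
```

With these definitions, RMSE and MAE carry the units of the target (kelvin here), MSE carries squared units, and R2 is dimensionless.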

| Model | RMSE | MAE | R2 |
|---|---|---|---|
| SVM | 238.338 | 8.550 | 0.718 |
| RF | 98.205 | 5.096 | 0.880 |
| GBDT | 109.763 | 6.411 | 0.867 |
| KRR | 268.801 | 11.231 | 0.674 |
| DT | 140.701 | 6.339 | 0.829 |
| HNN | 83.565 | 5.023 | 0.899 |

| Model | RMSE | MAE | R2 |
|---|---|---|---|
| SVM | 404.074 | 11.265 | 0.510 |
| RF | 133.842 | 6.7112 | 0.884 |
| GBDT | 132.199 | 7.519 | 0.8667 |
| KRR | 432.056 | 15.417 | 0.490 |
| DT | 145.093 | 7.300 | 0.861 |
| HNN | 83.565 | 5.023 | 0.899 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, S.; Dan, Y.; Li, X.; Hu, T.; Dong, R.; Cao, Z.; Hu, J. Critical Temperature Prediction of Superconductors Based on Atomic Vectors and Deep Learning. Symmetry 2020, 12, 262. https://doi.org/10.3390/sym12020262
