1. Introduction

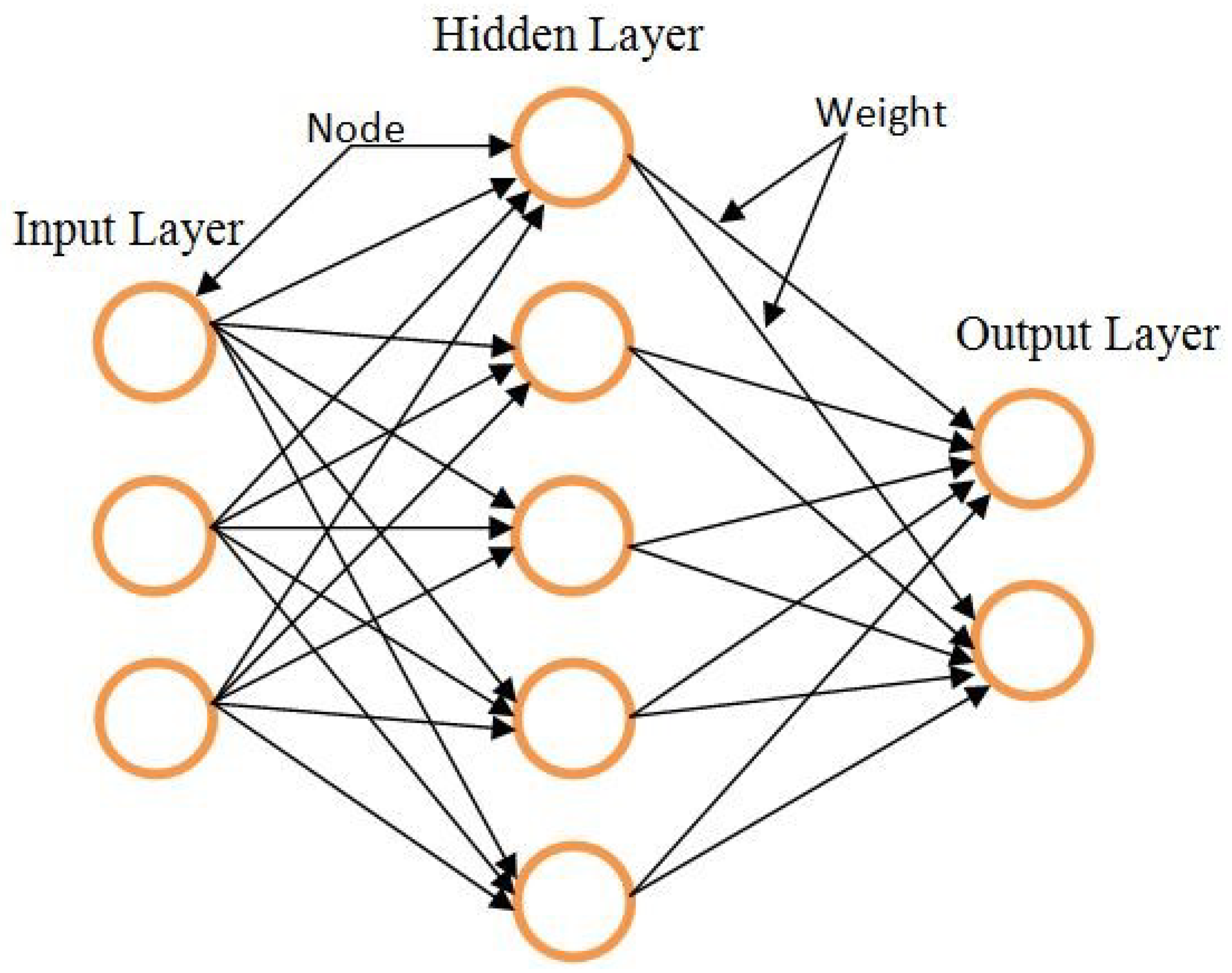

Artificial neural networks (ANNs) are powerful but relatively crude classification tools, modeled on the neural structure of the human brain, that have had a profound impact on many recent advances in scientific and engineering research. They can be distinguished from conventional mathematics-based time-series models by their ability to learn complex nonlinear relationships between input-output patterns, learn sequential training paradigms, and adapt to the training dataset. The most widely used types of ANNs for classification problems are the feed-forward-based architectures comprising multi-layer perceptrons (MLP), radial-basis function (RBF) networks, and self-organizing maps (SOMs). SOMs were previously used only for feature mapping and data clustering. Nowadays, researchers from other fields are interested in using ANNs to solve other problems, such as bioinformatics data classification and medical diagnosis and prognosis. ANNs offer several promising benefits, including nonlinearity, insensitivity to noise, high parallelism, learning, adaptability, and generalization. The basic building blocks of ANNs are the mathematically modeled artificial neurons proposed by McCulloch and Pitts in 1943 [1]. By simple definition, an ANN consists of processing elements called neurons, which are interconnected and work together to respond to a specific problem. Neural networks (NNs) can be used in situations where trends and patterns are too difficult for humans or other computer techniques to identify and interpret, as they have an exceptional ability to extract meaning from imprecise or complicated data. They are constructed for many real-world applications, such as pattern recognition and data mining, through training and learning processes. Due to their parallel-processing capability, ANNs are well suited to real-time classification problems.
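As a rough illustration of the artificial neuron mentioned above, the following minimal sketch implements a McCulloch-Pitts-style threshold unit. The function names, weights, and thresholds are illustrative assumptions, not taken from the cited work.

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of binary inputs reaches the threshold."""
    activation = sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation >= threshold else 0


# Illustrative assumption: unit weights, with only the threshold changed
# to obtain two different logic functions from the same neuron model.
def logical_and(a, b):
    return mcculloch_pitts([a, b], weights=[1, 1], threshold=2)


def logical_or(a, b):
    return mcculloch_pitts([a, b], weights=[1, 1], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Networks such as MLPs replace the hard threshold with a differentiable activation so that the weights can be learned from data rather than set by hand.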

Feature selection (FS) has become the focus of many recent studies, particularly in bioinformatics, where it has numerous applications. Machine learning (ML) is a powerful tool for selecting the most relevant features from datasets, but not all ML algorithms perform equally well in FS. Nonetheless, several FS methods have been proposed in bioinformatics. In a classification problem, FS remains the most important task because it can enhance classification accuracy. In the context of classification, FS techniques fall into three types (filter, wrapper, and embedded), which are distinguished by how they combine the search for features with the construction of the classification model [2].

The filter and wrapper approaches are the two primary approaches that researchers use to tackle this problem. The important difference between them is their use of univariate and multivariate analyses, respectively. The filter approach performs FS as a preprocessing stage before a classification algorithm is applied [3]. Studies of feature reduction in microarray data most commonly use this approach [4]. A commonly used statistical test in microarray studies is the t-test, which is used to isolate significantly differentially expressed genes.
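The filter idea can be sketched as a univariate ranking of features by a two-sample t-statistic. The sketch below uses Welch's form of the statistic and a tiny hypothetical dataset; the function names and data are assumptions for illustration only, and a real study would also compute p-values and correct for multiple testing.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t-statistic for two independent samples (assumes nonzero variance)."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))


def rank_features_by_t(X, y):
    """Rank feature indices by decreasing |t| between class 0 and class 1."""
    n_features = len(X[0])
    scores = []
    for j in range(n_features):
        class0 = [row[j] for row, label in zip(X, y) if label == 0]
        class1 = [row[j] for row, label in zip(X, y) if label == 1]
        scores.append(abs(welch_t(class0, class1)))
    ranking = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return ranking, scores


# Hypothetical toy data: feature 0 separates the classes, feature 1 is noise.
X = [[1.0, 5.1], [1.2, 4.9], [0.9, 5.0], [3.1, 5.2], [2.9, 4.8], [3.0, 5.0]]
y = [0, 0, 0, 1, 1, 1]

ranking, scores = rank_features_by_t(X, y)
print("feature ranking:", ranking)
```

Because each feature is scored independently of the classifier, the ranking is computed once, before any classification model is trained.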

The wrapper approach is any method that incorporates a classifier to select relevant features in a group [3]. This approach, while generally more accurate than the filter approach, comes at a computational cost [4]. The wrapper approach relies on many evaluations of the data, while the filter approach uses a single evaluation.
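To make the "many evaluations" point concrete, here is a minimal sketch of one possible wrapper: greedy forward selection around a nearest-centroid classifier scored by leave-one-out accuracy. The classifier, the search strategy, the function names, and the toy data are all assumptions chosen for brevity, not the method of any cited study.

```python
def loo_accuracy(X, y, features):
    """Leave-one-out accuracy of a nearest-centroid classifier on the given features."""
    correct = 0
    for i in range(len(X)):
        # Build one centroid per class from every sample except the held-out one.
        sums, counts = {}, {}
        for k, (row, label) in enumerate(zip(X, y)):
            if k == i:
                continue
            acc = sums.setdefault(label, [0.0] * len(features))
            for pos, j in enumerate(features):
                acc[pos] += row[j]
            counts[label] = counts.get(label, 0) + 1

        def dist(label):
            return sum((X[i][j] - sums[label][pos] / counts[label]) ** 2
                       for pos, j in enumerate(features))

        predicted = min(sums, key=dist)
        correct += predicted == y[i]
    return correct / len(X)


def forward_selection(X, y, max_features):
    """Greedily add the feature that most improves the wrapped classifier."""
    selected, best_score = [], 0.0
    remaining = list(range(len(X[0])))
    while remaining and len(selected) < max_features:
        # Every candidate subset triggers a full re-evaluation of the classifier.
        scored = [(loo_accuracy(X, y, selected + [j]), j) for j in remaining]
        score, j = max(scored, key=lambda t: t[0])
        if score <= best_score:
            break  # no candidate improves accuracy, so stop searching
        selected.append(j)
        remaining.remove(j)
        best_score = score
    return selected, best_score


# Hypothetical toy data: only feature 1 carries class information.
X = [[5.0, 1.0, 0.3], [4.8, 1.2, 0.9], [5.2, 0.9, 0.5],
     [5.1, 3.1, 0.4], [4.9, 2.9, 0.8], [5.0, 3.0, 0.6]]
y = [0, 0, 0, 1, 1, 1]

selected, best_score = forward_selection(X, y, max_features=2)
print("selected features:", selected, "LOO accuracy:", best_score)
```

Each round retrains and re-scores the classifier once per remaining feature, which is where the computational cost relative to a filter comes from.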

In the embedded approaches, the search for an optimal subset of features is built into the classifier and can be seen as a search in the combined space of feature subsets and hypotheses. Like wrapper approaches, embedded approaches are specific to a given learning algorithm. Embedded approaches have several advantages: they interact with the classification model and are less computationally intensive than wrapper approaches [5].

Classification is a machine-learning-based data mining method used to categorize the elements of a dataset into predefined classes or groups [6,7]. In the field of medicine, classification has been extended to numerous theoretical and practical applications, including breast cancer, colon cancer, heart disease, liver disease, ovarian cancer, and prostate cancer. Many medical researchers have intensified efforts to improve classification in order to obtain better outcomes when diagnosing or detecting associated diseases. Classification challenges are multifaceted because of the difficulties in collecting data and testing the classification methods. Data cleaning, the elimination of irrelevant attributes, and classification with detailed sensitivity and specificity are among these challenges.

This paper is structured as follows: Section 2 introduces related work, while Section 3 details ANNs, best-first search, FS, the Taguchi method, cancer classification, and the dataset. Section 4 discusses the ANN classifier performance evaluation criteria. Section 5 and “Breast Cancer with FS” present the experimental results and a discussion. Section 6 concludes the study and provides directions for future research.

2. Related Work

FS methods have become essential in various classification problems. These problems are characterized by datasets with many features, of which only a few carry relevant information, while most are either irrelevant or of low relevance. Usually, the highly informative features are used to construct a classification model, and the non-informative ones are disregarded. Decreasing the number of features ultimately used for classification can enhance the performance of the algorithm [3,8,9,10,11].

Various pattern recognition methods were not initially designed to handle a large number of irrelevant features; hence, they have been combined with FS techniques [12,13,14]. FS has a number of objectives; the most important include (a) avoiding overfitting and improving model performance, i.e., prediction performance for supervised classification and better cluster detection for clustering, (b) providing efficient and economical models, and (c) gaining deeper insight into the processes that generated the data. However, the benefits of FS methods come at a price, as the search for an optimal subset of relevant features adds a layer of complexity to the modeling task. The optimal model parameters must be sought for the optimal feature subset rather than simply optimizing the model parameters for the full feature set, since there is no guarantee that the parameters that are optimal for the full feature set are equally optimal for the optimal feature subset [15]. The outcome of this search is the optimal subset of relevant features. FS methods differ in how they incorporate the search of this additional space of feature subsets into model selection.

Banerjee et al. [16] utilized an evolutionary rough FS algorithm to classify microarray gene expression datasets containing three different cancer samples. Nieto et al. [17] utilized a multi-objective genetic algorithm (MOGA) for gene selection in microarray datasets. This algorithm carries out gene selection from a sensitivity and specificity viewpoint. The classification task is performed with support vector machines (SVMs), and the resulting subsets are evaluated with 10-fold cross-validation. Using the Wisconsin Breast Cancer Database (WBCD), Hasan et al. [18] proposed an FS algorithm based on principal component analysis (PCA), with ANNs as classifiers, to enhance the classification accuracy of benign or malignant tumors. Three rules of thumb from PCA applications, namely (i) the cumulative variance rule, (ii) the Kaiser–Guttman (KG) rule, and (iii) the scree test rule, were used for FS.
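Two of the PCA rules of thumb named above lend themselves to a short sketch: the Kaiser–Guttman rule (keep components whose correlation-matrix eigenvalue exceeds 1) and the cumulative variance rule (keep the fewest leading components that explain a chosen share of the variance). The eigenvalues and the 80% threshold below are hypothetical assumptions, not values from the cited study; the scree test is a visual inspection of the eigenvalue plot and is not coded here.

```python
def kaiser_guttman(eigenvalues):
    """Number of components with eigenvalue greater than 1 (correlation-matrix PCA)."""
    return sum(1 for ev in eigenvalues if ev > 1.0)


def cumulative_variance(eigenvalues, threshold=0.80):
    """Smallest number of leading components explaining at least `threshold` of the variance."""
    total = sum(eigenvalues)
    running = 0.0
    for k, ev in enumerate(sorted(eigenvalues, reverse=True), start=1):
        running += ev
        if running / total >= threshold:
            return k
    return len(eigenvalues)


# Hypothetical eigenvalues of a 5-feature correlation matrix (they sum to 5).
eigenvalues = [2.5, 1.25, 0.75, 0.25, 0.25]

print("Kaiser-Guttman keeps:", kaiser_guttman(eigenvalues))            # 2
print("Cumulative variance keeps:", cumulative_variance(eigenvalues))  # 3
```

The two rules can disagree, as they do here, which is one reason several rules are often compared side by side.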

Rahideh et al. [19] presented a cancer classification technique utilizing clustering-based gene selection and an ANN. The researchers utilized three different clustering algorithms for selecting the most valuable genes: fuzzy C-means clustering, K-means clustering, and SOMs. An MLP NN trained with the Levenberg–Marquardt learning algorithm was used for cancer classification. Dora et al. [20] utilized a Gauss–Newton representation-based algorithm (GNRBA) and focused on optimal weight. They evaluated it with the Wisconsin Diagnostic Breast Cancer (WDBC) dataset and achieved a classification performance of 98.86% using a 70-30 data partition. Jeyasingh et al. [21] utilized a modified bat algorithm (MBA) to select the optimal features from the WDBC dataset and achieved a classification accuracy of 96.85%. Mafarja et al. [22] achieved a 97.10% classification accuracy using the WOA-CM method with this dataset. The reviewed literature provided information that helped in understanding different FS methods.

Resolving classification problems has become a challenge for researchers. Different types of classifiers have already been proposed in the literature for classification problems. These include decision tree (DT) algorithms, k-nearest neighbor (k-NN) algorithms, naive Bayes (NB) classifiers, NNs, rule-based algorithms, SVMs, and other statistical methods, such as linear discriminant analysis and logistic regression. For ML techniques used in medical diagnosis, such as cancer classification, accuracy is the most important and desired factor. Zheng et al. [23] utilized a hybrid of the K-means algorithm and an SVM (K-SVM) to diagnose breast cancer. Their experiment reached 97.38% accuracy when tested on the WDBC data. Moreover, Orkcu et al. [24] evaluated the efficiency of three different algorithms using this dataset: (i) a real coded genetic algorithm (RCGA), (ii) a back propagation NN (BPNN), and (iii) a binary coded genetic algorithm (BCGA). They achieved accuracies of 96.5%, 93%, and 94.0%, respectively. Salama et al. [25] utilized five different classifiers: (i) NB, (ii) sequential minimal optimization (SMO), (iii) DT (J48), (iv) MLP, and (v) instance-based k-NN (IBK). The experiment used a confusion matrix based on the 10-fold cross-validation technique with three distinct breast cancer databases to obtain the classification accuracy. The highest classification accuracy, 97.7%, was achieved with SMO. Chunekar et al. [26] used a Jordan-Elman NN technique for the classification of three different breast cancer databases. Their technique achieved a classification accuracy of 98.25% using four neurons in the hidden layer.
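Several of the studies above report accuracy under 10-fold cross-validation. A minimal sketch of how such folds can be formed is given below; the function name, the fixed seed, and the sample count of 569 (the commonly cited size of the WDBC dataset) are assumptions for illustration, and no classifier is trained here.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs so that each sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the split reproducible
    folds = [indices[i::k] for i in range(k)]  # k nearly equal-sized folds
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


# Assumed sample count for illustration (WDBC is commonly cited as 569 samples).
splits = list(k_fold_indices(569, k=10))
for fold_number, (train_idx, test_idx) in enumerate(splits, start=1):
    print(f"fold {fold_number}: train={len(train_idx)} test={len(test_idx)}")
```

The reported accuracy is then typically the average of the k per-fold test accuracies, or the accuracy of the confusion matrix pooled over all folds.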

On the other hand, Lavanya et al. [27] used a CART decision tree classifier and achieved a 94.72% classification accuracy with the WDBC dataset. The same researchers [28] used a hybrid technique and achieved a classification accuracy of 95.96%. Another group of researchers, Malmir et al. [29], utilized an MLP network with the imperialist competitive algorithm (ICA) and particle swarm optimization (PSO), achieving classification accuracies of 97.75% and 97.63%, respectively. In addition, out of nine classifiers tested by Koyuncu et al. [30], the rotation-forest artificial neural network (RF-ANN) achieved the highest classification accuracy at 98.05%. Aalaei et al. [31] used a genetic algorithm (GA) to find the optimal feature subset and evaluated it with three different classifiers, namely the PS-classifier, an ANN with 5 neurons, and a GA-based classifier. After FS, their experiment achieved classification accuracies of 97.2%, 97.3%, and 96.6%, respectively, with the WDBC dataset. Onur Inan et al. [32] used breast cancer datasets for their experiment. They used an Apriori algorithm (AP) with an NN classifier for cancer classification and reported a classification accuracy of 98.29%. Nguyen et al. [33] used a modified version of the analytical hierarchy process (AHP) to select the most informative genes for cancer classification. These genes served as inputs to an interval type-2 fuzzy logic system (IT2FLS). Aidaroos et al. [34] used the NB classification algorithm for medical data classification on datasets including breast cancer and lung cancer datasets. Soria et al. [35] proposed three different methods to classify data related to breast cancer. Their study utilized C4.5 tree, MLP, and NB classifiers. Mert et al. [36] utilized SVMs to classify breast cancer. To decrease the data dimensionality, the authors applied independent component analysis to the WDBC dataset and achieved a classification accuracy of 94.40%.

Amrane et al. [37] utilized ML techniques with two different classifiers, NB and k-NN, for breast cancer classification. The NB method achieved an accuracy of 96.19% and the k-NN achieved 97.51%. Eleyan et al. [38] tried to improve classification performance. They presented a technique to detect breast cancer based on time. This study used a 10-fold cross-validation technique and various classifiers. The performance of the classifiers was evaluated using the WBCD. Karabatak [39] used the weighted NB (W-NB) classifier and carried out an experiment with five-fold cross-validation. The experiment was conducted using the WDBC dataset and achieved a classification accuracy of 98.54%, along with a sensitivity of 99.11% and a specificity of 98.25%. Sheikhpour et al. [40] utilized a hybrid model to classify breast cancer. The authors built the hybrid model by using PSO and kernel density estimation (KDE) classifiers. The PSO-KDE model achieved an accuracy of 98.45%, a sensitivity of 97.73%, and a specificity of 98.66%.
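The accuracy, sensitivity, and specificity figures quoted in this section all derive from the four cells of a binary confusion matrix. The sketch below uses the standard definitions; the counts are hypothetical and do not reproduce any result from the cited studies.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Return (accuracy, sensitivity, specificity) from confusion-matrix counts.

    tp: malignant cases correctly flagged     fn: malignant cases missed
    tn: benign cases correctly cleared        fp: benign cases wrongly flagged
    """
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    sensitivity = tp / (tp + fn)  # true positive rate
    specificity = tn / (tn + fp)  # true negative rate
    return accuracy, sensitivity, specificity


# Hypothetical counts for illustration only.
accuracy, sensitivity, specificity = diagnostic_metrics(tp=90, fn=10, tn=180, fp=20)
print(f"accuracy={accuracy:.2%} sensitivity={sensitivity:.2%} specificity={specificity:.2%}")
```

Reporting sensitivity and specificity alongside accuracy matters in diagnosis because a missed malignant case and a false alarm carry very different costs.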

Hasri et al. [41] proposed an SVM and recursive feature elimination (RFE) technique for the detection of cancer. Wang et al. [42] used an SVM-based ensemble learning algorithm to build a hybrid model, the weighted area under the receiver operating characteristic (ROC) curve (AUC) ensemble (WAUCE). The authors achieved a classification accuracy of 97.68% on the WDBC dataset using the WAUCE model with 10-fold cross-validation. Obaid et al. [43] utilized three different algorithms for breast cancer classification, namely DT, k-NN, and SVM. The highest classification accuracy of the experiment was 98.1%, obtained using the SVM with the WDBC dataset. Emami et al. [44] proposed an AP-AMBFA method for the diagnosis of breast cancer. The affinity propagation (AP) method was used to reduce noisy data, and the adaptive modified binary firefly algorithm (AMBFA) was used for FS and classification. The authors reached a highest classification accuracy of 98.60% using the proposed technique. Muhammer et al. [45] utilized harmony search and back propagation based on an ANN for the classification of breast cancer data. The accuracies achieved were 94.1% and 97.57% for back propagation and harmony search, respectively.

Shah et al. [46] discussed three different data mining algorithms, namely NB, DT, and k-NN algorithms, for the classification of cancer. The researchers used the WEKA tool and a breast cancer dataset for their experiments. The NB algorithm achieved the highest accuracy, 95.99%. Moloud et al. [47] proposed a nested ensemble technique to evaluate the WDBC dataset. The authors utilized classifiers and metaclassifiers to develop a two-layer nested ensemble classifier and achieved a highest classification accuracy of 98.07%. Wang et al. [48] addressed medical decision-making problems using a context-based probability NN (CPNN). The experiments utilized three different datasets, including the WDBC dataset, and achieved a classification accuracy of 97.40%. Karabatak et al. [49] proposed association rules (ARs) and an NN for performing cancer classification. ARs were applied to reduce the dimensionality of the breast cancer database, and an NN was used for intelligent classification. The authors utilized two different AR methods, AR1 and AR2. They reported a classification accuracy of 95.2% from the NN, 97.4% from AR1+NN, and 95.6% from AR2+NN.

Senapati et al. [50] presented experiments to classify breast cancer tumors. The authors proposed a local linear wavelet NN for the detection of normal and abnormal cases. In this method, a linear model replaced the connection weights between the input layer and the output layer. The recursive least square (RLS) strategy was utilized to update the parameters during training in order to improve performance. The presented technique is robust and efficient; it achieved a classification accuracy of 97.2%. Nguyen et al. [51] utilized a medical model for breast cancer classification based on the fuzzy standard additive model and a GA. In this system, the rule initialization was handled through adaptive vector quantization clustering. A GA was used to optimize the parameters, and parameter tuning was done through a gradient descent algorithm. To reduce the dimensionality of the datasets, a wavelet transform was applied. This model achieved a classification accuracy of 97.40%. Nguyen et al. [52] proposed a classification model that combines the interval type-2 fuzzy logic system (IT2FLS) and wavelet transformation (WT). These methods were combined to handle high dimensionality and uncertainty. The IT2FLS consists of unsupervised learning based on fuzzy c-means clustering and parameter tuning driven by GAs. Such a system has a high computational cost, which the WT helps to reduce. The model achieved a 97.88% classification accuracy for breast cancer diagnosis.