Abstract

Symmetric nonnegative matrix factorization (SNMF) approximates a symmetric nonnegative matrix by the product of a nonnegative low-rank matrix and its transpose. SNMF has been successfully used in many real-world applications such as clustering. In this paper, we propose an accelerated variant of the multiplicative update (MU) algorithm of He et al. designed to solve the SNMF problem. The accelerated algorithm is derived by using the extrapolation scheme of Nesterov and a restart strategy. The extrapolation scheme plays a leading role in accelerating the MU algorithm of He et al. and the restart strategy ensures that the objective function of SNMF is monotonically decreasing. We apply the accelerated algorithm to clustering problems and symmetric nonnegative tensor factorization (SNTF). The experiment results on both synthetic and real-world data show that it is more than four times faster than the MU algorithm of He et al. and performs favorably compared to recent state-of-the-art algorithms.

1. Introduction

Given a symmetric nonnegative matrix , symmetric nonnegative matrix factorization (SNMF) aims to find a nonnegative matrix (generally ) such that . Based on the widely used Frobenius norm metric, the nonnegative factor is obtained by solving the following objective function:

SNMF is a special but important class of symmetric nonnegative tensor factorization (SNTF). It can serve as a basic building block of SNTF algorithms for a higher order tensor [1]. Besides, SNMF has been widely applied to the clustering problems where the cluster structure is captured by pairwise affinity relationships among points [2,3,4,5,6,7,8,9]. Recent works have demonstrated that SNMF can provide superior clustering quality compared to many classic clustering algorithms, such as spectral clustering, see [9,10,11,12] and the references therein.

So far, many efficient algorithms have been proposed for SNMF. In [6], He et al. proposed three multiplicative update algorithms, including a basic algorithm and two fast algorithms: -SNMF and -SNMF algorithms. It was numerically shown that the three algorithms outperform other previous multiplicative update algorithms [9,13,14]. In [10], Kuang et al. proposed a Newton-like SNMF algorithm, which uses partial second-order information to guide the scaling of the gradient direction. Compared to the projected Newton algorithm, the Newton-like algorithm can greatly reduce the computational complexity. It is guaranteed to converge to a stationary solution. In [7], Vandaele et al. suggested to solve the SNMF problem by the block coordinate descent (BCD) algorithm, in which only one entry of is optimized each time and the corresponding subproblem is to find the best root of a cubic equation. The BCD algorithm has a low computational burden and storage requirement, which makes it suitable for solving large-scale problems. In [8], Shi et al. proposed two inexact BCD methods based on block successive upper-bounding minimization (BSUM), named scalar BSUM (sBSUM) and vector-wise BSUM (vBSUM). Both of them are guaranteed to converge to stationary solutions.

In this paper, we propose an accelerated variant of the basic multiplicative update (MU) algorithm of He et al. [6]. For convenience, we refer to the basic MU algorithm as MU-SNMF, and the accelerated variant as accelerated MU-SNMF (AMU-SNMF). We draw inspiration from Nesterov’s accelerated gradient (NAG) method and derive AMU-SNMF by applying the extrapolation scheme in NAG to MU-SNMF. To overcome the non-monotonic objective value caused by the extrapolation scheme, we restart the algorithm and take the current iteration as the new starting point when an increase in the objective value is observed. We apply AMU-SNMF to some real-world clustering problems and use it for SNTF following the framework of the averaging approach [1]. The experiment results show that AMU-SNMF can rapidly decrease the objective value and has good clustering performance in the applications of clustering. Furthermore, in SNTF, AMU-SNMF can achieve a low objective value of SNTF by the framework of the averaging approach.

The rest of this paper is organized as follows. In Section 2, we first review the MU-SNMF algorithm and the NAG method, and then present the AMU-SNMF algorithm. The averaging approach with AMU-SNMF for SNTF is discussed in the last part of Section 2. In Section 3, we conduct experiments on synthetic and real-world data. Finally, we conclude this paper in Section 4.

2. Accelerated SNMF Algorithm

2.1. Multiplicative Update Algorithm for SNMF

In this subsection, we briefly review the MU-SNMF algorithm proposed by He et al. [6]. It is derived from an auxiliary function (see Definition 1) of (1). At the th iteration, the auxiliary function is represented as

where denotes the status of after t-th iteration, and denotes the trace operator. By setting the gradient to zero, the multiplicative update rule of MU-SNMF is derived as follows:

Given a positive initialization, MU-SNMF will always preserve the nonnegativity constraints on . It has been proved that MU-SNMF decreases the objective value at each iteration. Moreover, MU-SNMF converges to a stationary point of (1) if is complete positive. For the detail proof, please refer to Proposition 1 and 2 in [6]. We summarize MU-SNMF in Algorithm 1.

Definition 1

(Auxiliary function). A function is an auxiliary function of if it satisfies two properties: (1) and (2) for all vectors .

| Algorithm 1: = MU-SNMF(, r). |

| Step 1: Initialization Initialize . Set . Step 2: Update stage repeat until the stopping criterion is satisfied Step 3: Output . ⊗ and denote elementwise product and division, respectively. |

2.2. Nesterov’s Accelerated Gradient

Accelerated gradient method [15] is an accelerated variant of gradient descent. It was proposed by Nesterov in 1983, and is commonly referred to as Nesterov’s accelerated gradient (NAG). Given a smooth convex function to be minimized, NAG takes an initial point and runs the following iterative scheme:

where the superscript of the variables denotes the iteration number, , and denotes the learning rate. At each iteration, NAG first updates the variable along the previous update direction. The intuition behind it is that the descent direction tends to persist across successive iterations. The result is denoted by a new variable . After that, NAG takes a gradient descent step from the point . The gradient descent step plays an important role in making a timely correction to if is indeed a poor update [16,17]. For Lipshitz convex functions, NAG can achieve a global convergence rate of , which is the optimal convergence rate as described in [18].

2.3. Accelerated MU-SNMF Algorithm

Inspired from the extrapolation scheme in NAG, we modify MU-SNMF as follows:

where ⊗ denotes elementwise product, and denotes elementwise division. Note that there might be some negative entries in since the entries of are not guaranteed to be nondecreasing during the iterative process. To keep elementwise positive, the entries of are truncated to a small positive number once they are less than , resulting in the following iterative scheme:

For ease of description, we temporarily refer to the algorithm with update rule (6) as accelerated MU-SNMF (AMU-SNMF). The performance of AMU-SNMF is very dependent on the choice of . If it is chosen too small, the extrapolation scheme would do little to accelerate MU-SNMF. If it is too large, AMU-SNMF would probably diverge. We find that AMU-SNMF can work well by setting .

Unlike MU-SNMF, AMU-SNMF is not guaranteed to be monotone in the objective value. To overcome this problem, we start AMU-SNMF again and take the current iteration as the new starting point when an increase in the objective value is observed. We summarize the whole procedure in Algorithm 2. In the following, when we refer to AMU-SNMF we mean the Algorithm 2.

| Algorithm 2: = AMU-SNMF(, r). |

| Step 1: Initialization Initialize . Set , , , . Step 2: Update stage repeat if then else end if if then end if until the stopping criterion is satisfied Step 3: Output . ⊗ and denote elementwise product and division, respectively. |

2.4. Symmetric Nonnegative Tensor Factorization (SNTF)

In this subsection, we apply AMU-SNMF to SNTF of third order symmetric nonnegative tensors. The SNTF problem is described as follows. Given a three order symmetric nonnegative tensor and a positive integer r, SNTF seeks to find a nonnegative matrix such that

where the symbol ∘ represents the vector outer product. Based on the widely used Euclidean distance, the matrix is obtained by solving the following objective function:

where the tensor norm is the square root of the sum of the squares of all its elements, e.g., for a three order tensor ,

For (8), Cichocki et al. [1] proposed a simple approach, referred to as averaging approach. The idea behind it is to convert the SNTF problem to a standard SNMF problem:

where , is the i-th frontal slice of the given tensor , , is a diagonal matrix with the diagonal elements . The matrix can be obtained by any SNMF algorithm.

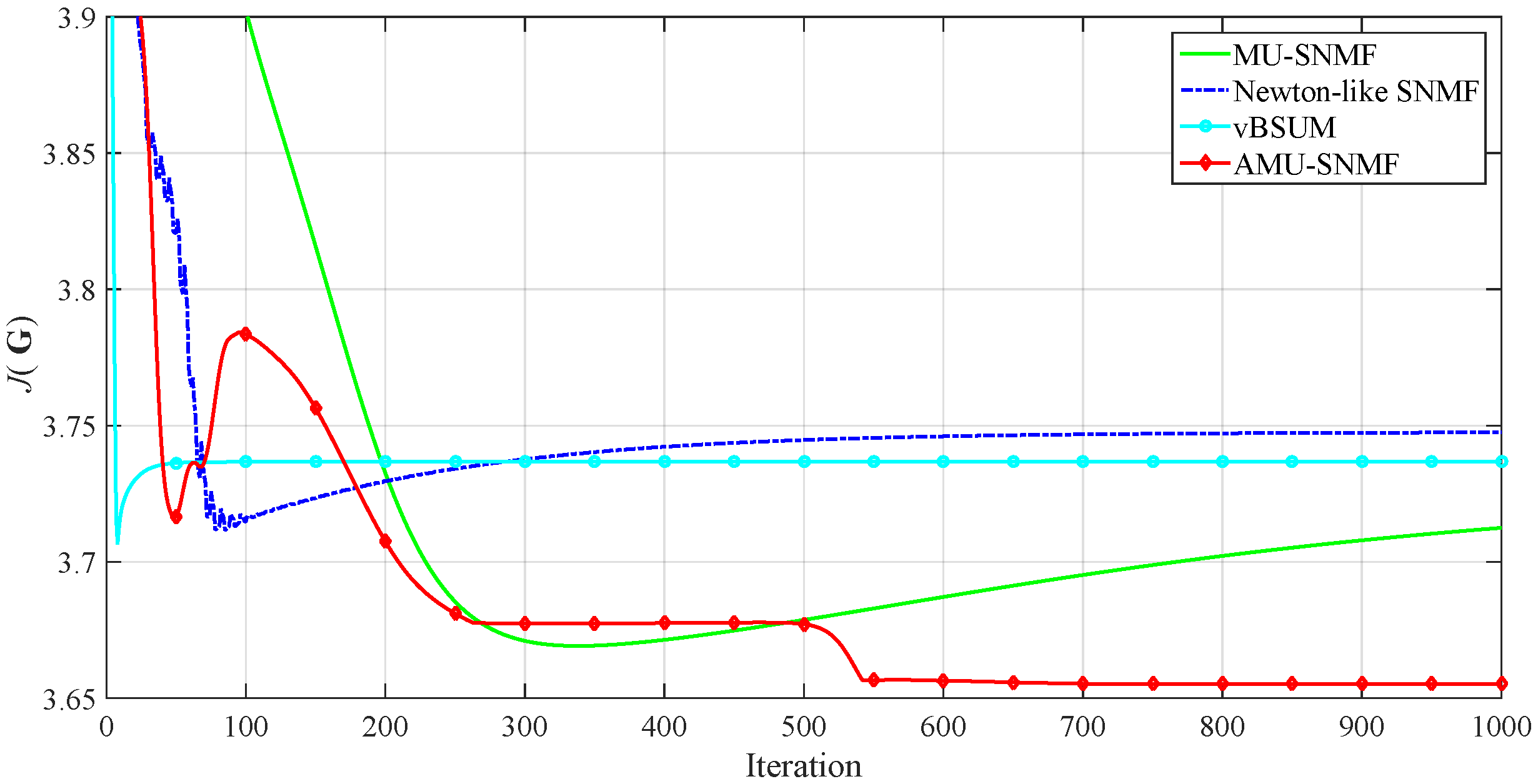

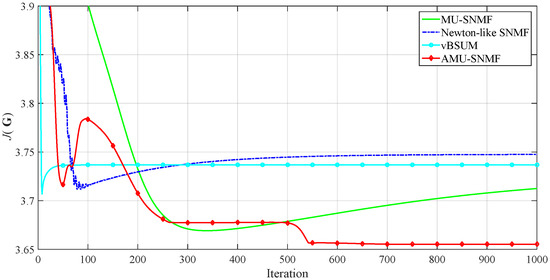

Following the framework of the averaging approach, we convert the SNTF problem (8) to the SNMF problem (10) and solve it by AMU-SNMF. Once is obtained, we compute from in the following way. Denote the j-th column of by . The vector is a normalization of such that the sum of is equal to 1. Then, is derived by , where satisfies . It is worth noting that the objective value is not guaranteed to be monotonically decreasing by the averaging approach. This can be visually understood in Figure 1, which shows the trajectories of generated by the averaging approach, where four different SNMF algorithms including MU-SNMF [6], Newton-like SNMF [10], vBSUM [8] and AMU-SNMF were separately used for (10), on a three order symmetric nonnegative tensor . We can see from Figure 1 that the matrix obtained at the last iteration may not have the lowest objective value compared to the previous iterations, and, therefore, may not be the best choice to be the output of the averaging approach. An alternative way, which we adopt in the experiments, is to store the estimation of that has the best and output it when the averaging approach terminates.

Figure 1.

The trajectories of generated by the averaging approach on a three order symmetric nonnegative tensor , where 4 different SNMF algorithms were used separately in the averaging approach.

3. Experiments and Results

In this section, we first test AMU-SNMF (Algorithm 2) on synthetic data, and then apply it to some real-world clustering problems. Finally, we test AMU-SNMF in SNTF using the framework of the averaging approach [1]. In the clustering experiments, the clustering quality is measured by clustering accuracy (CA) and normalized mutual information (NMI) [19]. CA is used for measuring the percentage of correctly clustered data points. Mutual information (MI) measures the mutual dependence between the predicted and ground truth clustering labels. Furthermore, NMI is a normalization of the MI score to scale the results between 0 (no mutual information) and 1 (perfect correlation). To demonstrate the effectiveness of AMU-SNMF, we compare it with seven SNMF algorithms including MU-SNMF [6], -SNMF [6], -SNMF [6], Newton-like SNMF [10] (the code of Newton-like SNMF is available from http://math.ucla.edu/dakuang/), BCD [7], sBSUM [8] and vBSUM [8]. For -SNMF and -SNMF algorithms, we use the default setting for the parameters and recommended by the authors of [6], i.e., , . In all the experiments, the SNMF algorithms are randomly initialized from points in the form of (i.e., ), where is a randomly generated nonnegative matrix and . We implement all the experiments on a computer with a 2.8-GHz Intel Core i5-4200 CPU and 8-GB memory by 64-bit MATLAB R2015a on Windows 7.

3.1. Synthetic Data

The synthetic data were generated in the following way: , where was generated randomly and half of its entries were zeros. was a matrix of noise whose intensity was determined by signal-to-noise ratio (SNR). We tested AMU-SNMF in both noise-free and noisy scenarios. In the noisy case, the noise level was dB. For each scenario, 20 different were generated to test AMU-SNMF. To decrease the effect of initializations, the SNMF algorithms were run 10 times with different initializations for each . The SNMF algorithms were stopped when their elapsed time exceeded 10 s. Following the strategy from [20], we report

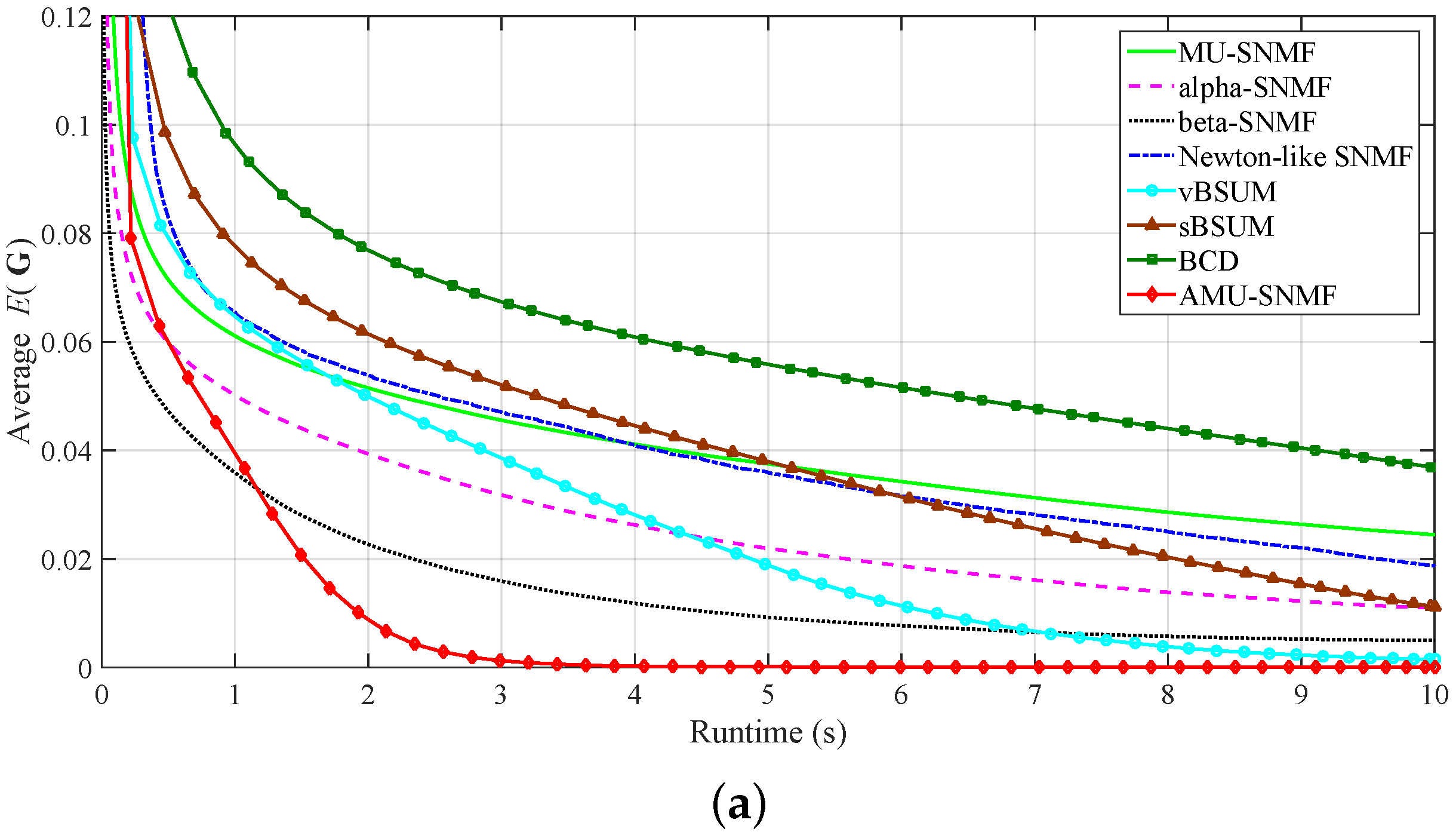

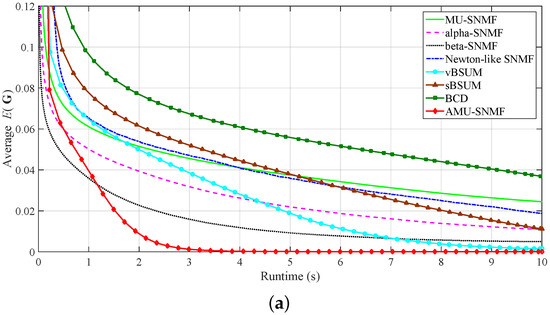

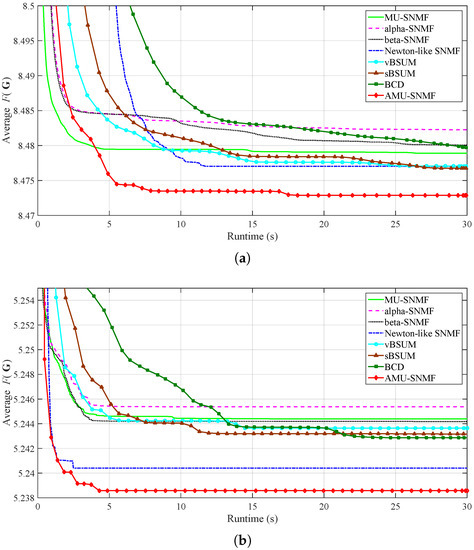

of all the SNMF algorithms, where denotes the lowest relative error obtained by any algorithm with any initialization. For the noise-free synthetic data, we use . The use of allows us to take meaningfully the average results over several synthetic data. We compute the average over 200 trials (20 different and 10 different initializations for each ), and plot them versus runtime in Figure 2. We can see that in both noise-free and noisy cases (1) it took AMU-SNMF less than 2 s to achieve lower average values than that MU-SNMF achieved at the end of the algorithm (10 s), i.e., AMU-SNMF was more than five times faster than MU-SNMF; (2) it took AMU-SNMF less than 4 s to achieve lower average values than that the other 6 algorithms (including -SNMF, -SNMF, Newton-like SNMF, BCD, sBSUM and vBSUM) achieved at the end of the algorithms (10 s), i.e., AMU-SNMF was more than two times faster than the six algorithms; (3) AMU-SNMF had lower average values at the end of the algorithm compared with all the other algorithms. The above observations demonstrate that AMU-SNMF is not only fast but also of high accuracy.

Figure 2.

The plots of the average versus runtime on the synthetic data. (a) Noise-free. (b) dB.

3.2. Document Clustering

In the experiment, we evaluate AMU-SNMF in the applications of document clustering. The goal of document clustering is to organize the documents into different semantic categories automatically [21]. Two widely used document collections, Topic Detection and Tracking 2 (TDT2) (http://projects.ldc.upenn.edu/TDT2/) and Reuters-21578 (http://www.daviddlewis.com/resources/testcollections/reuters21578/), were used here. The TDT2 corpus consists of data collected during the first half of 1998 and taken from six sources: ABC, CNN, VOA, NYT, PRI, and APW. It contains 11,201 documents from 96 semantic categories. The Reuters-21578 corpus was collected from the Reuters newswire in 1987. It contains 21,578 documents from 135 semantic categories. Both collections are highly unbalanced with the sizes of categories ranging from one to thousands of documents. To improve the reliability of our evaluations, we removed those documents appearing in two or more categories and selected 10 categories for each collection. We selected the 10th to 19th largest categories for the TDT2 corpus, and the 12th to 21th largest categories for the Reuters-21578 corpus. The sizes of the selected categories are just a little unbalanced (see Table 1).

Table 1.

The semantic categories from the TDT2 and the Reuters-21578 collections.

We first normalized each document vector to unit length. Then we constructed the affinity matrix as follows. Denote the normalized document vectors by , . The affinity matrix was computed by

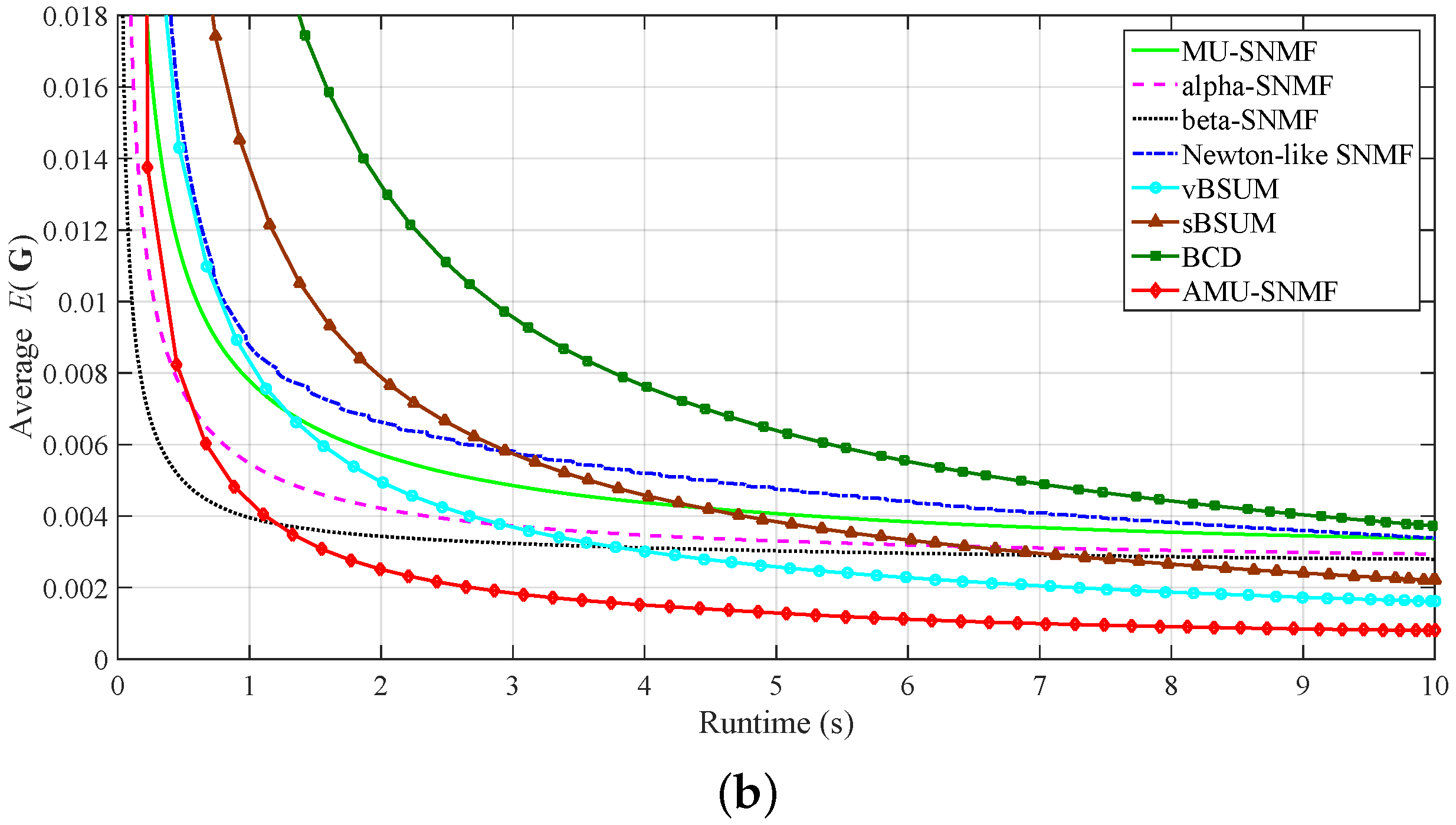

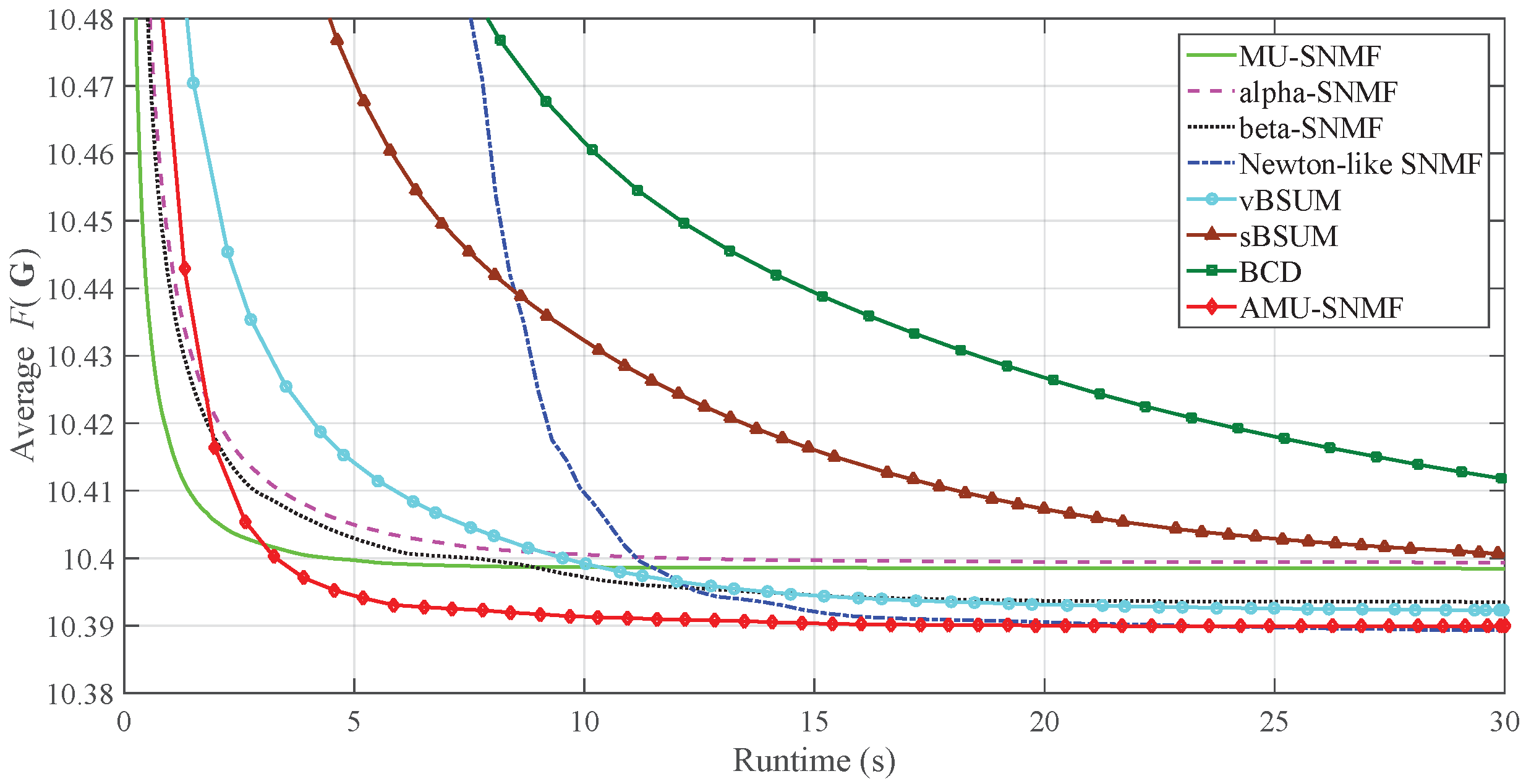

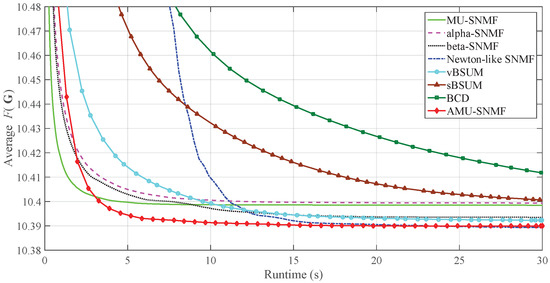

where , and the local scale parameter of each data point was set to be the distance between and its k-th neighbor. We used and as suggested in [10,22]. After that, we performed SNMF for document clustering, where every document was assigned to a cluster that had the largest entry in the corresponding row of . To decrease the effect of initializations, the SNMF algorithms were run 20 times with different initializations for each document collection. They were stopped when their elapsed time exceeded 30 s. The plots of the average versus runtime are displayed in Figure 3. We can see from Figure 3a that it took AMU-SNMF less than 5 s to achieve lower average value than that the other algorithms achieved at the end of the algorithms (30 s). Furthermore, we can see from Figure 3b that (1) all the algorithms converged, and their convergence time was about 15 s (MU-SNMF), 7 s (-SNMF), 5 s (-SNMF), 2.5 s (Newton-like SNMF), 25 s (BCD), 15 s (sBSUM), 15 s (vBSUM) and 5 s (AMU-SNMF), respectively; (2) it took AMU-SNMF less than 2 s to achieve lower average value than that the other algorithms achieved when they converged. The above observations demonstrate that AMU-SNMF was more than six times faster than MU-SNMF, and more than two times faster than the other algorithms except Newton-like SNMF. The clustering results on TDT2 and Reuters-21578, averaged over 20 trials with different initializations, are shown in Table 2. We can see that AMU-SNMF had higher, which demonstrates that AMU-SNMF achieved a better clustering performance compared with the other algorithms.

Figure 3.

The plots of the average versus runtime on (a) TDT2 and (b) Reuters-21578.

Table 2.

The mean of CA and the mean of NMI of the SNMF algorithms on the TDT2 and the Reuters-21578 collections.

3.3. Object Clustering

In the experiment, we evaluate AMU-SNMF on Columbia Object Image Library (COIL-20) dataset (http://www.cs.columbia.edu/CAVE/software/softlib/coil-20.php). COIL-20 is a database of gray-scale images of 20 objects. The objects were placed on a motorized turntable against a black background. The turntable was rotated through 360 degrees to vary object pose with respect to a fixed camera. Images of the objects were taken at pose intervals of 5 degrees. This corresponds to 72 images per object. Each image is pixels in size.

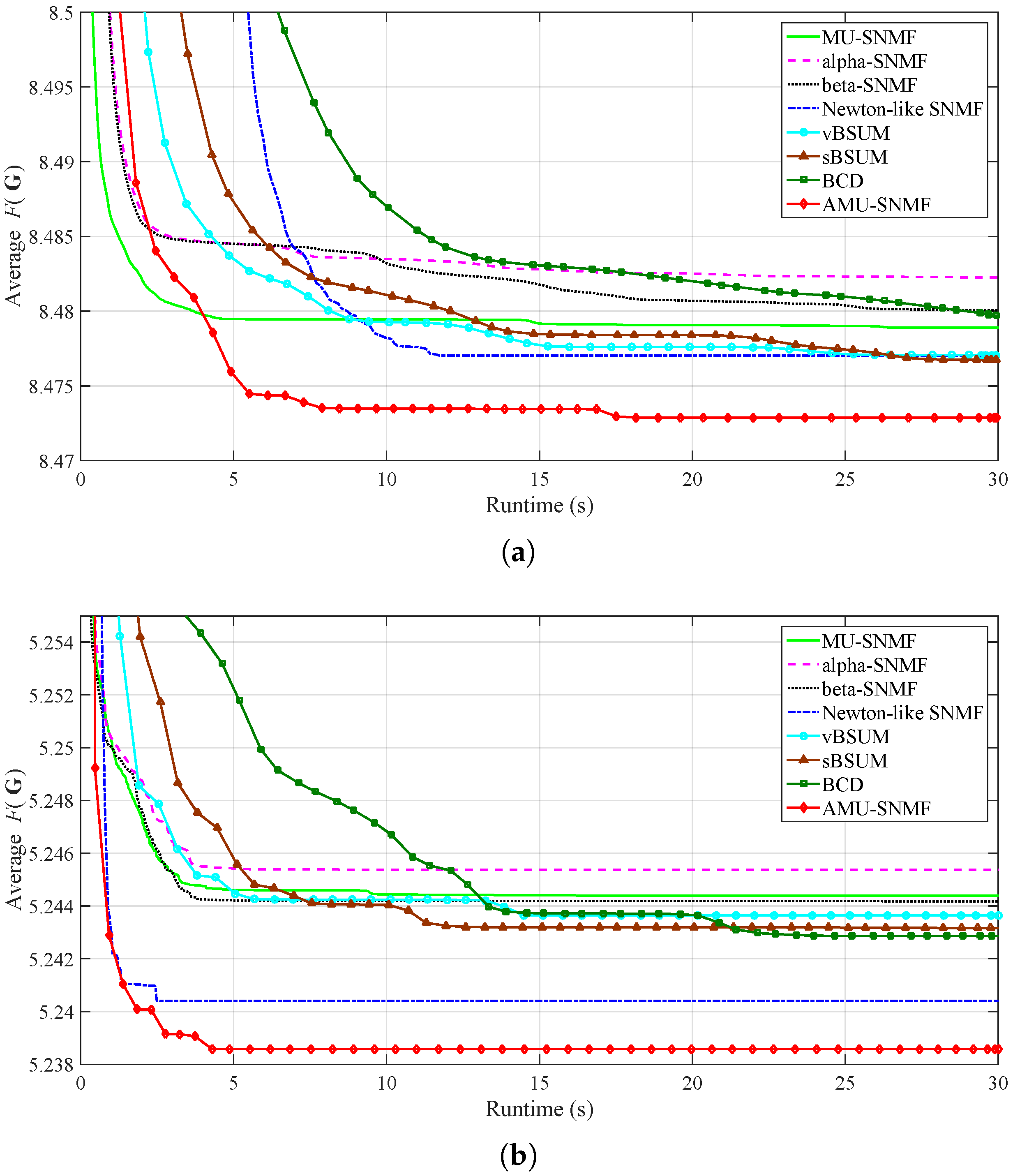

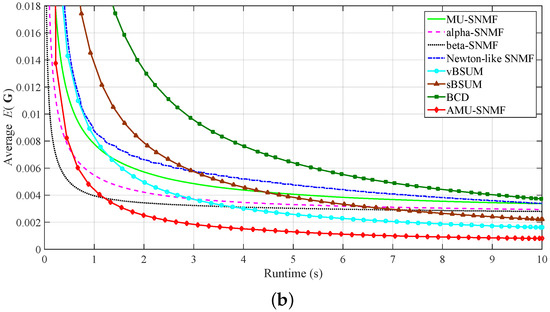

We first constructed the affinity matrix by (12). Then we performed SNMF for object clustering, where every image was assigned to a cluster that had the largest entry in the corresponding row of . To decrease the effect of initializations, the SNMF algorithms were run 20 times with different initializations. They were stopped when their elapsed time exceeded 30 s. The plot of the average versus runtime is displayed in Figure 4. We can see that (1) it took AMU-SNMF about 3.5 s to achieve the average value that MU-SNMF achieved when MU-SNMF converged (about 15 s), i.e., AMU-SNMF was more than four times faster than MU-SNMF; (2) AMU-SNMF was more than three times faster than -SNMF, -SNMF, BCD, sBSUM and vBSUM. The clustering results, averaged over 20 trials with different initializations, are shown in Table 3. We can see that AMU-SNMF had higher, which demonstrates that AMU-SNMF achieved a better clustering performance compared with the other algorithms.

Figure 4.

The plot of the average versus runtime on the COIL-20 dataset.

Table 3.

The mean of CA and the mean of NMI of the SNMF algorithms on the COIL-20 dataset.

3.4. SNTF

In the experiment, we test AMU-SNMF in SNTF using the framework of the averaging approach [1], which has been discussed in Section 2.4. The experiment was performed on synthetic data which were generated in the following way: , where , were generated randomly, and was a symmetric tensor of noise whose intensity was determined by signal-to-noise ratio (SNR). Both noise-free and noisy (SNR = 10 dB) synthetic data were used here with each including 10 different . The SNTF was performed by the averaging approach where eight different SNMF algorithms were separately used for (10). The averaging approach was stopped when its elapsed time exceeded 0.5 s. To decrease the effect of initializations, 10 trials were conducted by choosing different initializations for each . We measure the quality of SNTF by

The greater the value of , the better approximation of the tensor is obtained by . We compute the average of the averaging approach over 100 trials (10 different and 10 different initializations for each ). The results are listed in Table 4. We can see that the averaging approach with AMU-SNMF yielded better approximate tensor than that with other SNMF algorithms in both noise-free and noisy cases.

Table 4.

The mean of of the averaging approach using different SNMF algorithms.

4. Conclusions

In this paper, we propose an accelerated variant of the MU-SNMF algorithm designed to solve the SNMF problem. The accelerated algorithm is referred to as AMU-SNMF. It is derived by exploiting the extrapolation scheme in NAG and a restart strategy. Our contributions consist of two parts. First, we derive an accelerated variant of the MU-SNMF algorithm. It is numerically shown that AMU-SNMF is more than four times faster than MU-SNMF. Second, we empirically demonstrate that AMU-SNMF outperforms other 6 state-of-the-art algorithms, including -SNMF [6], -SNMF [6], Newton-like SNMF [10], BCD [7], sBSUM [8] and vBSUM [8]. The experiment results show that AMU-SNMF is more than two times faster than the above algorithms except the Newton-like SNMF algorithm. Moreover, the experiment results show that AMU-SNMF can achieve a better clustering performance in the real-world clustering problems compared with the above algorithms. Furthermore, AMU-SNMF is more suitable for the averaging approach in SNTF. As one of directions of future work, we plan to investigate further the convergence properties of the AMU-SNMF algorithm. Another direction of future work is that we could apply the methodologies adopted in this paper to other SNMF algorithm.

Author Contributions

Funding acquisition, Z.H. and J.L.; Methodology, P.W. and Z.H.; Supervision, Z.H.; Validation, P.W., J.L., B.T., Y.B., J.T., T.L. and Z.L.; Writing—original draft, P.W.; Writing—review and editing, P.W., Z.H., J.L., B.T., Y.B., J.T., T.L. and Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

The work was supported in part by National Natural Science Foundation of China under Grants 61773127 and 61727810, Ten Thousand Talent Program approved in 2018, Guangdong Province Foundation Grant 2019B1515120036, Natural Science Foundation of Guangdong Province under Grant 2018A030313306, Guangzhou Science and Technology Foundation under Grant 201802010037, and Key Areas of Research and Development Plan Project of Guangdong under Grant 2019B010147001, Natural Science Foundation of Guangdong Province under Grant 2018A030313306, PCL Future Regional Network Facilities for Large-Scale Experiments and Applications Project under Grant PCL2018KP001, GDHVPS 2014.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cichocki, A.; Jankovic, M.; Zdunek, R.; Amari, S.I. Sparse super symmetric tensor factorization. In Proceedings of the 2007 International Conference on Neural Information Processing (ICONIP), Kitakyushu, Japan, 13–16 November 2007; pp. 781–790. [Google Scholar]

- Lu, S.; Hong, M.; Wang, Z. A nonconvex splitting method for symmetric nonnegative matrix factorization: Convergence analysis and optimality. IEEE Trans. Signal Process. 2017, 65, 3120–3135. [Google Scholar] [CrossRef]

- Gao, T.; Olofsson, S.; Lu, S. Minimum-volume-regularized weighted symmetric nonnegative matrix factorization for clustering. In Proceedings of the 2016 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Washington, DC, USA, 7–9 December 2016; pp. 247–251. [Google Scholar]

- Marin, M.; Vlase, S.; Paun, M. Considerations on double porosity structure for micropolar bodies. Aip Adv. 2015, 5, 037113. [Google Scholar] [CrossRef]

- Ma, Y.; Hu, X.; He, T.; Jiang, X. Hessian regularization based symmetric nonnegative matrix factorization for clustering gene expression and microbiome data. Methods 2016, 111, 80–84. [Google Scholar] [CrossRef] [PubMed]

- He, Z.; Xie, S.; Zdunek, R.; Zhou, G.; Cichocki, A. Symmetric Nonnegative Matrix Factorization: Algorithms and Applications to Probabilistic Clustering. IEEE Trans. Neural Netw. 2011, 22, 2117–2131. [Google Scholar]

- Vandaele, A.; Gillis, N.; Lei, Q.; Zhong, K.; Dhillon, I. Efficient and Non-Convex Coordinate Descent for Symmetric Nonnegative Matrix Factorization. IEEE Trans. Signal Process. 2016, 64, 5571–5584. [Google Scholar] [CrossRef]

- Shi, Q.; Sun, H.; Lu, S.; Hong, M.; Razaviyayn, M. Inexact Block Coordinate Descent Methods for Symmetric Nonnegative Matrix Factorization. IEEE Trans. Signal Process. 2017, 65, 5995–6008. [Google Scholar] [CrossRef]

- Zass, R.; Shashua, A. A unifying approach to hard and probabilistic clustering. In Proceedings of the 2005 International Conference on Computer Vision (ICCV), Beijing, China, 17–20 October 2005; pp. 294–301. [Google Scholar]

- Kuang, D.; Ding, C.; Park, H. Symmetric Nonnegative Matrix Factorization for Graph Clustering. In Proceedings of the 2012 SIAM International Conference on Data Mining (SDM), Anaheim, CA, USA, 26–28 April 2012; pp. 106–117. [Google Scholar]

- Kuang, D.; Yun, S.; Park, H. SymNMF: Nonnegative Low-Rank Approximation of a Similarity Matrix for Graph Clustering. J. Glob. Optim. 2015, 62, 545–574. [Google Scholar] [CrossRef]

- Lu, S.; Wang, Z. Accelerated algorithms for eigen-value decomposition with application to spectral clustering. In Proceedings of the 2015 Asilomar Conference on Signals, Systems and Computers (ACSSC), Pacific Grove, CA, USA, 8–11 November 2015; pp. 355–359. [Google Scholar]

- Bo, L.; Zhang, Z.; Wu, X.; Yu, P.S. Relational clustering by symmetric convex coding. In Proceedings of the 2007 International Conference on Machine Learning (ICML), Corvallis, OR, USA, 20–24 June 2007; pp. 569–576. [Google Scholar]

- Long, B.; Zhang, Z.; Yu, P. Co-clustering by block value decomposition. In Proceedings of the 2005 SIGKDD Conference on Knowledge Discovery and Data Mining (SIGKDD), Chicago, IL, USA, 21–24 August 2005; pp. 635–640. [Google Scholar]

- Nesterov, Y. A method of solving a convex programming problem with convergence rate O(1/k2). Sov. Math. Dokl. 1983, 27, 372–376. [Google Scholar]

- Sutskever, I.; Martens, J.; Dahl, G.; Hinton, G. On the importance of initialization and momentum in deep learning. In Proceedings of the 2013 International Conference on Machine Learning (ICML), Atlanta Marriott Marquis, Atlanta, GA, USA, 16–21 June 2013; pp. 1139–1147. [Google Scholar]

- Botev, A.; Lever, G.; Barber, D. Nesterov’s accelerated gradient and momentum as approximations to regularised update descent. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 1899–1903. [Google Scholar]

- Yudin, D.; Nemirovskii, A.S. Informational complexity and efficient methods for the solution of convex extremal problems. Matekon 1976, 13, 3–25. [Google Scholar]

- Wu, W.; Jia, Y.; Kwong, S.; Hou, J. Pairwise Constraint Propagation-Induced Symmetric Nonnegative Matrix Factorization. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 6348–6361. [Google Scholar] [CrossRef]

- Ang, A.M.S.; Gillis, N. Accelerating nonnegative matrix factorization algorithms using extrapolation. Neural Comput. 2019, 31, 417–439. [Google Scholar] [CrossRef] [PubMed]

- Cai, D.; He, X.; Han, J. Document clustering using locality preserving indexing. IEEE Trans. Knowl. Data Eng. 2005, 17, 1624–1637. [Google Scholar] [CrossRef]

- Zelnik-Manor, L.; Perona, P. Self-Tuning Spectral Clustering. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 13–18 December 2004; pp. 1601–1608. [Google Scholar]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).