Abstract

This paper studies a heteroscedastic partially linear model with ρ−-mixing random errors that are stochastically dominated and have zero mean. Under suitable conditions, the strong consistency and p-th mean consistency of the least squares (LS) and weighted least squares (WLS) estimators of the unknown parameter are established, as are the strong consistency and p-th mean consistency of the estimators of the non-parametric component. These results include the corresponding results for independent, negatively associated (NA), and ρ*-mixing random errors as special cases. Finally, two simulations are presented to support the theoretical results.

Keywords:

ρ−-mixing random variables; heteroscedastic; partially linear model; LS estimators; WLS estimators; strong consistency; p-th mean consistency

MSC:

62G05; 62G20; 62F12

1. Introduction

Consider the following heteroscedastic partially linear model:

where , , , , and are known and nonrandom design points, represents an unknown parameter, and represent unknown functions defined on a compact set , stands for the -th observable variable at the points , and stands for the random errors.

In order to analyze the effect of temperature on electricity usage, Engle et al. [1] proposed the partially linear model

Since then, many statisticians have studied partially linear regression models. Model (2) was further investigated by Heckman [2], Speckman [3], Gao [4], Härdle et al. [5], Hu et al. [6], Zeng and Liu [7], and others, and applications of the model have also been reported. Inspired by model (2), Gao et al. [8] proposed a more general model:

Gao et al. [8] established the asymptotic normality of the LS and WLS estimators of based on the family of non-parametric estimators of and in model (3). Baek and Liang [9] investigated the asymptotic properties of the estimators in model (3) for negatively associated errors. Zhou and Hu [10] derived the moment consistency of the estimators of and in model (3) under negatively associated samples. Hu [11] proposed a new partially linear model

and derived the strong and moment consistency of the estimators with independent and -mixing errors. Li and Yang [12,13] studied the moment and strong consistency of the estimators of and in model (4) based on negatively associated samples. Wang et al. [14] and Wu and Wang [15] discussed the moment and strong consistency of the LS and WLS estimators of and with -mixing errors. In the present paper, we investigate model (1), which is built on model (4). Model (1) has applications in hydrology, biology, and other fields (see [16]).

Now, let us recall some dependence structures. Let $\mathbb{N}$ be the set of natural numbers, and let $S,T\subset\mathbb{N}$ be two non-empty disjoint sets. We define $\operatorname{dist}(S,T)=\min\{|j-k|:\ j\in S,\ k\in T\}$.

Definition 1

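([17]). A finite collection of random variables $X_1,X_2,\ldots,X_n$ is called negatively associated (NA) if for each pair of disjoint subsets $A_1$, $A_2$ of $\{1,2,\ldots,n\}$,

$$\operatorname{Cov}\big(f_1(X_i,\ i\in A_1),\ f_2(X_j,\ j\in A_2)\big)\le0,$$

where $f_1$ and $f_2$ are any coordinate-wise non-decreasing functions such that the covariance exists.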
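As a quick illustration of Definition 1 (a standard textbook example, not taken from the paper): for any random variable $Z$, the pair $(X_1,X_2)=(Z,-Z)$ is NA. The only non-trivial choice of subsets is $A_1=\{1\}$, $A_2=\{2\}$. Since $z\mapsto f_1(z)$ is non-decreasing and $z\mapsto f_2(-z)$ is non-increasing, Chebyshev's association inequality for a single random variable gives

$$\operatorname{Cov}\big(f_1(X_1),f_2(X_2)\big)=\operatorname{Cov}\big(f_1(Z),\,f_2(-Z)\big)\le0$$

whenever the covariance exists.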

Definition 2

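([18]). A random sequence $\{X_n,n\ge1\}$ is said to be ρ*-mixing if

$$\rho^*(s)=\sup\{\rho(S,T):\ S,T\subset\mathbb{N},\ \operatorname{dist}(S,T)\ge s\}\to0\quad\text{as } s\to\infty,$$

where

$$\rho(S,T)=\sup\left\{\frac{|E(U-EU)(V-EV)|}{\sqrt{\operatorname{Var}U\cdot\operatorname{Var}V}}:\ U\in L_2(\mathcal{F}_S),\ V\in L_2(\mathcal{F}_T)\right\},$$

and $\mathcal{F}_S$ and $\mathcal{F}_T$ are the σ-fields generated by $\{X_i,i\in S\}$ and $\{X_i,i\in T\}$, respectively, $L_2(\mathcal{F}_S)$ is the space of all square-integrable $\mathcal{F}_S$-measurable random variables, and $L_2(\mathcal{F}_T)$ is defined in the same way.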

Definition 3

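([19,20]). A sequence $\{X_n,n\ge1\}$ is said to be ρ−-mixing if

$$\rho^-(s)=\sup\{\rho^-(S,T):\ S,T\subset\mathbb{N},\ \operatorname{dist}(S,T)\ge s\}\to0\quad\text{as } s\to\infty,$$

where

$$\rho^-(S,T)=0\vee\sup\left\{\frac{\operatorname{Cov}\big(\phi(X_i,\ i\in S),\ \psi(X_j,\ j\in T)\big)}{\sqrt{\operatorname{Var}\phi(X_i,\ i\in S)\cdot\operatorname{Var}\psi(X_j,\ j\in T)}}:\ \phi,\psi\in\mathcal{C}\right\},$$

and $\mathcal{C}$ is the set of non-decreasing functions.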

It is easy to see that $\rho^-(s)\le\rho^*(s)$, and that a ρ−-mixing sequence is NA if and only if $\rho^-(1)=0$; in particular, independent sequences are ρ−-mixing. Therefore, ρ−-mixing sequences include ρ*-mixing sequences and NA sequences as special cases. However, a ρ−-mixing sequence need not be NA or ρ*-mixing. Zhang and Wang [19] constructed the following example of a ρ−-mixing sequence that is neither NA nor ρ*-mixing.

Example 1

([19]). Let , , and be three mutually independent sequences, each consisting of independent and identically distributed (i.i.d.) standard normal random variables. Denote

and . Then, is ρ−-mixing with . However, is neither NA nor ρ*-mixing.

On the one hand, NA sequences have been widely applied in reliability theory and multivariate statistical analysis (see [21,22]). On the other hand, some Markov chains and moving average processes are -mixing sequences (see [23]). The concept of -mixing sequences is important in many areas, such as finance, economics, and other sciences (see [24]). Therefore, the study of ρ−-mixing sequences is of considerable significance.

Since Zhang and Wang [19] introduced the concept of ρ−-mixing sequences, many results on such sequences have been established. See, for example, Zhang and Wang [19], Wang and Lu [25], and Yuan and Wu [26] for moment inequalities and limiting behavior; Zhang [20] and Zhang [27] for central limit theorems; Chen et al. [28] for complete convergence and complete moment convergence for weighted sums of ρ−-mixing sequences; Zhang [29] for the complete moment convergence of moving average processes generated by ρ−-mixing sequences; Wu and Jiang [30] for almost sure convergence of ρ−-mixing sequences; and Xu and Wu [31] for an almost sure central limit theorem for self-normalized partial sums.

However, to the best of our knowledge, model (1) has not been studied under ρ−-mixing random errors. In the present paper, we study the estimation problem for model (1) under the assumption that the errors form a ρ−-mixing sequence that is stochastically dominated and has zero mean. Under suitable conditions, the strong consistency and p-th mean consistency of the LS and WLS estimators for and are established. The results obtained in this paper include those for independent errors and for some dependent errors as special cases.

Next, we will recall the definition of stochastic domination.

Definition 4

([32]). A random sequence $\{X_n,n\ge1\}$ is stochastically dominated by a random variable $X$ if

$$P(|X_n|>x)\le C\,P(|X|>x)$$

for some positive constant $C$, every $x\ge0$, and each $n\ge1$.
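A simple instance of Definition 4 (our own illustration, not from the paper): if $X_n\sim N(0,\sigma_n^2)$ with $0<\sigma_n\le\bar\sigma<\infty$ for all $n$, and $Z\sim N(0,1)$, then for every $x\ge0$,

$$P(|X_n|>x)=P\Big(|Z|>\frac{x}{\sigma_n}\Big)\le P\Big(|Z|>\frac{x}{\bar\sigma}\Big)=P(|\bar\sigma Z|>x),$$

so $\{X_n\}$ is stochastically dominated by $X=\bar\sigma Z$ with constant $1$. In particular, errors that are not identically distributed can still satisfy the domination assumption used throughout the paper.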

The remainder of this paper is organized as follows. In Section 2, we introduce the LS and WLS estimators of , based on the family of non-parametric estimators of , together with some conditions. The main results are given in Section 3, and several lemmas are given in Section 4. The proofs of the main results are provided in Section 5. Two simulations are carried out in Section 6, and conclusions are drawn in Section 7. Throughout the paper, let denote positive constants whose values may vary from place to place. “i.i.d.” stands for independent and identically distributed, and stands for the Euclidean norm.

2. Estimation and Conditions

Assume that satisfies model (1), and let be a weight function that is measurable on the compact set . For simplicity, model (1) can be written as

We denote , , , , , , and .

For model (5), it follows from that for , . Hence, for any given , the non-parametric estimator of can be defined as

Hence, the LS estimators of can be defined by

By (7), we have

When the random errors are heteroscedastic, we replace the LS estimator with a WLS estimator. The WLS estimator of is defined by

By (9), we derive that

Based on and , we define the corresponding estimators of , respectively:

and

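The estimator formulas of this section are missing from this version. For orientation only, the following Python sketch implements the classical form of such estimators for a single-replicate model $y_i=x_i\beta+g(t_i)+\sigma_ie_i$: plugging $g_n(t,\beta)=\sum_j w_{nj}(t)(y_j-x_j\beta)$ into the least squares criterion reduces it to a regression of $\tilde y_i=y_i-\sum_j w_{nj}(t_i)y_j$ on $\tilde x_i=x_i-\sum_j w_{nj}(t_i)x_j$. The function names, the matrix layout `W[i, j]` $=w_{nj}(t_i)$, and the use of known weights $1/\sigma_i^2$ in the WLS step are our assumptions, not the paper's definitions; the replicated observations of model (1) are not modeled.

```python
import numpy as np


def ls_wls_estimators(x, y, W, sigma2=None):
    """Sketch of Gao-type LS/WLS estimators of beta in y_i = x_i*beta + g(t_i) + sigma_i*e_i.

    W[i, j] is assumed to hold the weight w_{nj}(t_i) (hypothetical layout).
    sigma2, if given, holds sigma_i^2, treated as known (an assumption).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Partial residuals: subtract the smoothed part explained by t.
    x_tilde = x - W @ x
    y_tilde = y - W @ y
    # LS estimator: minimizes sum_i (y_tilde_i - x_tilde_i * beta)^2.
    beta_ls = np.sum(x_tilde * y_tilde) / np.sum(x_tilde ** 2)
    beta_wls = None
    if sigma2 is not None:
        a = 1.0 / np.asarray(sigma2, dtype=float)  # weights a_i = 1 / sigma_i^2
        # WLS estimator: minimizes sum_i a_i (y_tilde_i - x_tilde_i * beta)^2.
        beta_wls = np.sum(a * x_tilde * y_tilde) / np.sum(a * x_tilde ** 2)
    return beta_ls, beta_wls


def g_hat(w_row, x, y, beta):
    """Plug-in estimate g_n(t) = sum_j w_nj(t) * (y_j - x_j * beta)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.dot(w_row, y - beta * x))


if __name__ == "__main__":
    n = 10
    x = np.arange(n, dtype=float)
    y = 2.0 * x + 5.0  # noiseless data: beta = 2, g(t) = 5
    W = np.full((n, n), 1.0 / n)  # crude global-averaging weights
    b_ls, b_wls = ls_wls_estimators(x, y, W, sigma2=np.ones(n))
    print(b_ls, b_wls, g_hat(W[0], x, y, b_ls))
```

With noiseless data $y_i=2x_i+5$ and global-averaging weights, both estimators recover $\beta=2$ and the plug-in estimate of the constant $g$ is 5; this is a sanity check of the algebra, not a simulation.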
To establish the main results, we impose the following conditions.

- (i) ; (ii) ; (iii) and are continuous functions on the compact set .

- (i) ; (ii) for some .

- (i) ; (ii) for any .

- .

Remark 1.

Conditions (i) and (ii) are regularity conditions that are often imposed in studies of LS and WLS estimators in heteroscedastic partially linear models; see, for example, [5,8,9]. Condition (iii) is mild and holds for most commonly used functions, such as polynomial and trigonometric functions (see [9]). Conditions – are often used to study strong consistency (see [9,33]) and mean consistency (see [10,16]). Condition (ii) is weaker than the corresponding conditions in [16] and [33]. Thus, the above conditions are mild. Moreover, by (i) and (ii), one can get that

and

3. Main Results

In what follows, let be a ρ−-mixing sequence with zero mean that is stochastically dominated by a random variable .

Theorem 1.

Assume that – hold. If for some , then

as and

as .

Theorem 2.

Under the conditions of Theorem 1, if, in addition, holds, then

as and

as.

Theorem 3.

Assume that – hold. If for some , then

and

Theorem 4.

Under the conditions of Theorem 3, if, in addition, holds, then

and

Remark 2.

Since ρ−-mixing sequences include NA (in particular, independent) and ρ*-mixing sequences, Theorems 1–4 also apply to NA and ρ*-mixing sequences.

4. Some Lemmas

From the definition of ρ−-mixing sequences, we obtain the following lemma.

Lemma 1.

If $\{X_n,n\ge1\}$ is a ρ−-mixing sequence with mixing coefficients $\rho^-(s)$, then $\{f_n(X_n),n\ge1\}$ is still a ρ−-mixing sequence with mixing coefficients not greater than $\rho^-(s)$. Here, $f_1,f_2,\ldots$ are all non-decreasing functions (or all non-increasing functions).

Lemma 2

(Rosenthal-type inequality, [25,29]). If is a ρ−-mixing sequence with zero mean and for some , then there exists a constant depending only on and such that

for any , where .
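The displayed inequality of Lemma 2 did not survive extraction. Assuming it has the usual Rosenthal form below (our assumption; the paper's version may be stated for maxima of partial sums), a worked special case shows what its two terms measure:

$$E\Big|\sum_{i=1}^{n}X_i\Big|^p\le C\Big\{\sum_{i=1}^{n}E|X_i|^p+\Big(\sum_{i=1}^{n}EX_i^2\Big)^{p/2}\Big\}.$$

Take $X_1,\ldots,X_n$ i.i.d. $N(0,1)$ (independent, hence NA and ρ−-mixing with $\rho^-(s)=0$) and $p=4$. Then $S_n=\sum_{i=1}^nX_i\sim N(0,n)$, so

$$ES_n^4=3n^2,\qquad\sum_{i=1}^{n}EX_i^4=3n,\qquad\Big(\sum_{i=1}^{n}EX_i^2\Big)^{2}=n^2,$$

and the inequality holds with $C=3$ because $3n^2\le3(3n+n^2)$. The second term dominates, reflecting the $n^{p/2}$ (rather than $n^p$) growth of $E|S_n|^p$ that drives the consistency proofs.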

Lemma 3

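([32]). If $\{X_n,n\ge1\}$ is a random sequence that is stochastically dominated by a random variable $X$, then for every $\alpha>0$ and $b>0$, we have

$$E|X_n|^{\alpha}I(|X_n|\le b)\le C_1\left[E|X|^{\alpha}I(|X|\le b)+b^{\alpha}P(|X|>b)\right],\qquad E|X_n|^{\alpha}I(|X_n|>b)\le C_2\,E|X|^{\alpha}I(|X|>b).$$

Therefore,

$$E|X_n|^{\alpha}\le C\,E|X|^{\alpha},$$

where $C_1$, $C_2$, and $C$ are positive constants.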

Lemma 4.

Let be a ρ−-mixing sequence with zero mean. Suppose that is an array of functions defined on a compact set such that and for some . If for some , then

as.

Proof.

Denote

and

Without loss of generality, one can suppose that . Hence, by Lemma 1, , , and are also ρ−-mixing sequences with zero mean. Note that . Hence, for any , we have

The proof of (24) is similar to that of Lemma 3.3 in Zhou and Hu [34]. By Lemma 3, we have . Hence, for every , by Markov's inequality, Lemma 2, and , we get that

and

Hence, it follows from (24) through (26) and that

By the Borel–Cantelli lemma, we obtain for any that

as . Therefore, (23) follows. □
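The Borel–Cantelli step above has the following generic shape. We write it with a placeholder statistic $T_n$ (our notation, standing for the quantity whose almost sure convergence is asserted in (23)), since the paper's displayed bounds are not reproduced here. If, for every $\varepsilon>0$,

$$\sum_{n=1}^{\infty}P(|T_n|>\varepsilon)<\infty,$$

then the Borel–Cantelli lemma gives $P(|T_n|>\varepsilon\ \text{i.o.})=0$. Taking $\varepsilon=1/k$ and a countable union over $k\ge1$,

$$P\Big(\bigcup_{k\ge1}\{|T_n|>1/k\ \text{i.o.}\}\Big)=0,\qquad\text{that is,}\qquad T_n\to0\ \text{a.s.}$$

In the proof above, the role of (24)–(26) is to establish this summability.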

Lemma 5.

Let be a ρ−-mixing sequence with zero mean. Suppose that is an array of functions defined on a compact set such that and . If for some , then

Proof.

Using the notation in the proof of Lemma 4 and the $C_r$ inequality (let be a random sequence; then for ), one gets that

For every , by Lemma 2, Lemma 3, and , we derive that

and

Therefore, (27) follows from (28) through (30). □
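The elementary moment inequality quoted in the proof of Lemma 5 (its displayed formula is lost) appears to be the $C_r$-type bound for finite sums, which is also used in the proofs of Theorems 3 and 4. For completeness, here is the one-line convexity argument, with $k$, $r$, and $a_i$ as generic placeholders. For $r\ge1$ the map $u\mapsto|u|^r$ is convex, so Jensen's inequality applied to the uniform average of $a_1,\ldots,a_k$ gives

$$\Big|\frac1k\sum_{i=1}^{k}a_i\Big|^r\le\frac1k\sum_{i=1}^{k}|a_i|^r\quad\Longrightarrow\quad\Big|\sum_{i=1}^{k}a_i\Big|^r\le k^{r-1}\sum_{i=1}^{k}|a_i|^r.$$

Replacing $a_i$ by random variables $X_i$ and taking expectations yields $E\big|\sum_{i=1}^{k}X_i\big|^r\le k^{r-1}\sum_{i=1}^{k}E|X_i|^r$.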

5. Proofs of the Main Results

By (5), (8), and (10), we derive that

and

where , and , , .

Proof of Theorem 1.

We only need to prove (16) since the proof of (15) is analogous. By (32), we can get that

Observe that . Hence, it follows from (i) and (ii) and (14) that

and

Thus, by Lemma 4, we have

as . Note that

Hence, it follows from (ii), , and (14) that

and

where is the same as that in (ii).

Thus, by Lemma 4, one can get that

as . By (14), we derive that

By (iii), (i), and , we obtain that

Thus, by (40) and (41), we have

as . Therefore, (16) follows from (33), (36), (39), and (42). □

Proof of Theorem 2.

We only need to prove (18) since the proof of (17) is analogous. In light of (12), we have

Hence,

By (16) and , we have

as . From (41), it follows that

as . By (ii) and , we can get that

and

Hence, from Lemma 4, it follows that

as . Therefore, (18) follows from (44) through (47). □

Proof of Theorem 3.

We only need to prove (20) since the proof of (19) is analogous. By (33), we have

Hence, it follows from the $C_r$ inequality that

The rest of the proof is similar to that of (16), so we omit the details here. □

Proof of Theorem 4.

We only need to prove (22) since the proof of (21) is analogous. By (43), we derive that

Hence, by the $C_r$ inequality, we derive that

Since , together with (20) and , we can get that

From (41), it follows that

By (ii) and , we can get that

and

Hence, by Lemma 5, one can get that

Therefore, (22) follows from (49) through (52). □

6. Numerical Simulations

In this section, we illustrate the theoretical results with two simulations.

6.1. Simulation 1

We simulate the partially linear model

where , , , , , and the random errors have the same distribution as that of in Example 1 of Section 1. Then, is a ρ−-mixing sequence that is neither NA nor ρ*-mixing.

Specifically, we take the weight function to be the following nearest neighbor weight function (see [11,35]). Without loss of generality, let and . For each , we rewrite

as follows:

Take and define the nearest neighbor weight function as

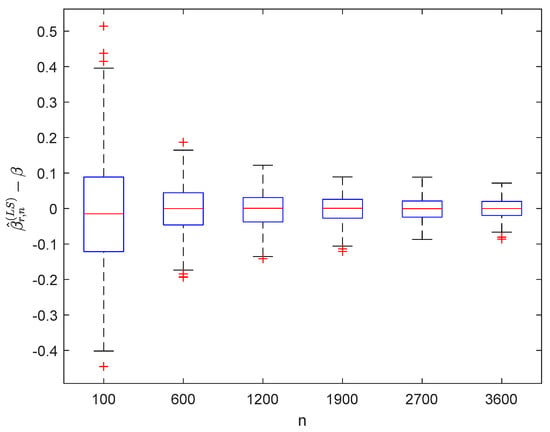

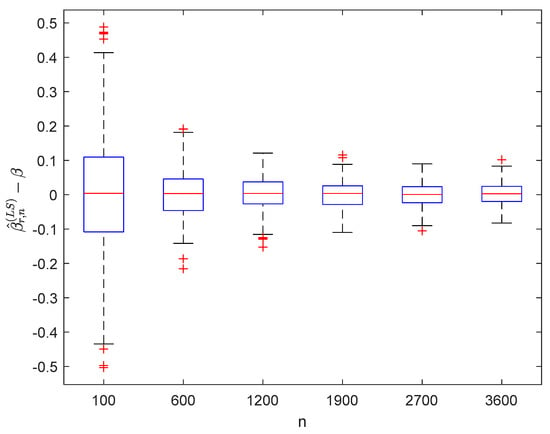

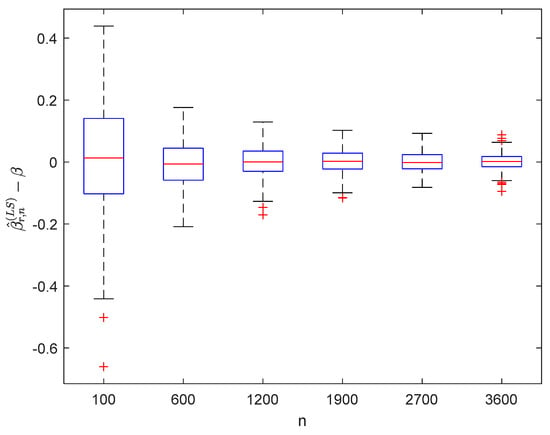

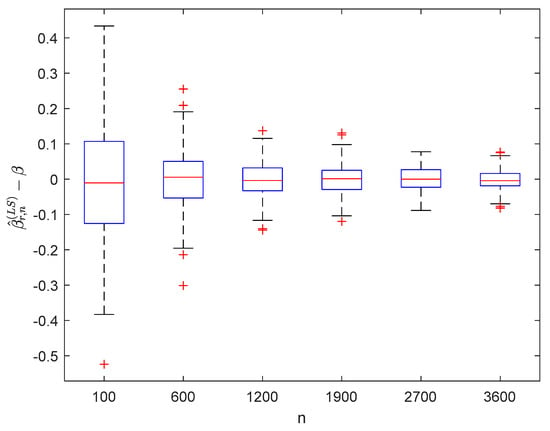

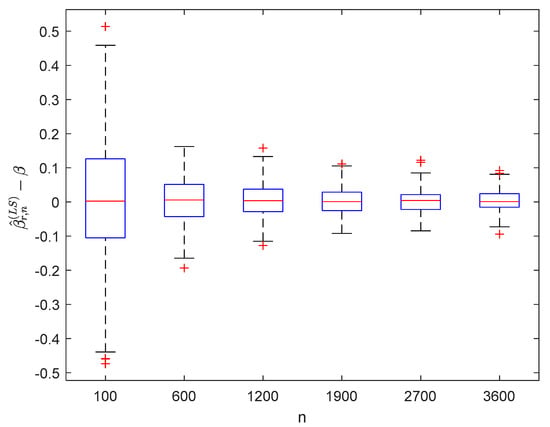

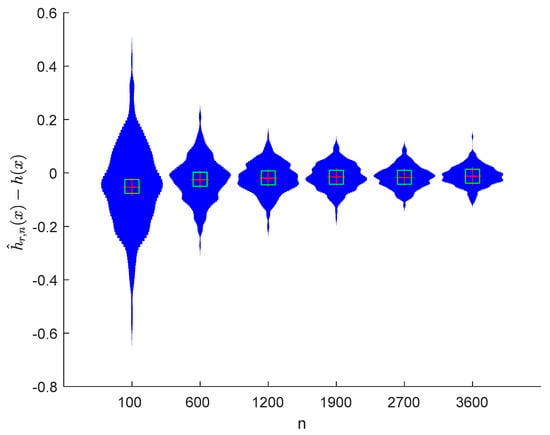

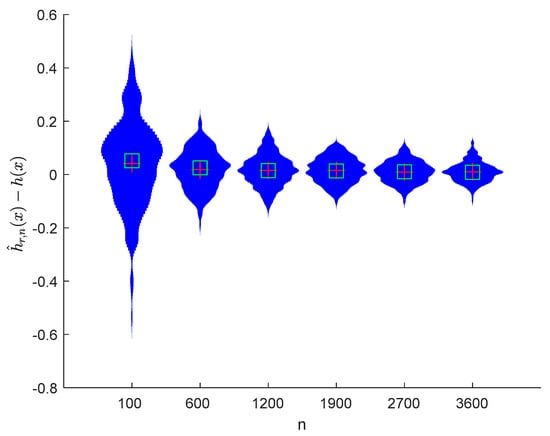

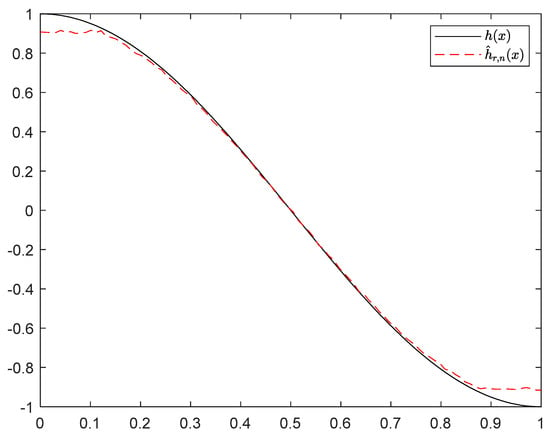

where the sample sizes are taken as , , , , , and , and the points are taken as , , , and , respectively. We compute and times. Boxplots of are shown in Figures 1–4, violin plots of in Figures 5–8, and curves of and in Figure 9; the mean squared errors (MSEs) of and are reported in Tables 1 and 2, respectively.
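The nearest neighbor weights appear only as images in this version. The Python sketch below implements the commonly used definition from the fixed-design literature, which is our reading of the form cited from [11,35]: reorder $|t_1-t|,\ldots,|t_n-t|$ increasingly, breaking ties in favour of the smaller index, and put mass $1/k_n$ on the first $k_n$ design points. The helper names `nn_weights` and `nn_weight_matrix`, and leaving $k_n$ as a free argument, are our assumptions; the paper's choice of $k_n$ is not reproduced.

```python
import numpy as np


def nn_weights(t_design, t, k):
    """Nearest neighbor weights w_{ni}(t), i = 1..n (hypothetical helper).

    Puts mass 1/k on the k design points closest to t; ties in |t_i - t|
    are broken in favour of the smaller index i via a stable sort.
    """
    t_design = np.asarray(t_design, dtype=float)
    n = t_design.size
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    dist = np.abs(t_design - t)
    order = np.argsort(dist, kind="stable")  # indices R_1(t), ..., R_n(t)
    w = np.zeros(n)
    w[order[:k]] = 1.0 / k
    return w


def nn_weight_matrix(t_design, k):
    """Matrix whose i-th row holds w_{nj}(t_i), j = 1..n."""
    t_design = np.asarray(t_design, dtype=float)
    return np.vstack([nn_weights(t_design, ti, k) for ti in t_design])


if __name__ == "__main__":
    print(nn_weights([0.0, 0.25, 0.5, 0.75, 1.0], 0.5, 3))
```

For instance, with design points $0,0.25,0.5,0.75,1$, $t=0.5$, and $k=3$, the weights are $1/3$ on $0.25$, $0.5$, $0.75$ and zero elsewhere. Each row sums to one and the largest weight is $1/k_n$, which is what conditions on the weights typically control.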

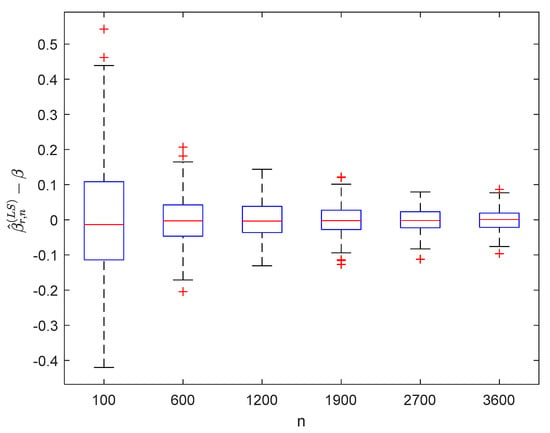

Figure 1.

Boxplots of with and .

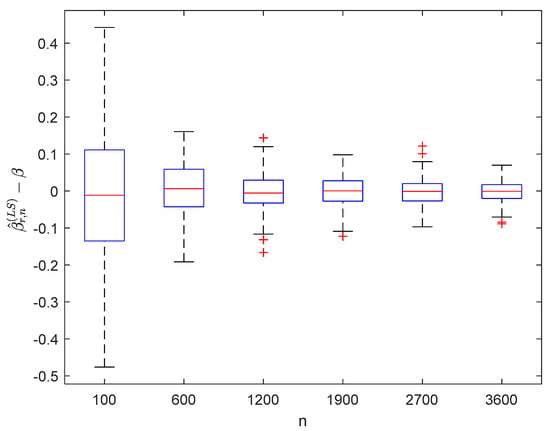

Figure 2.

Boxplots of with and .

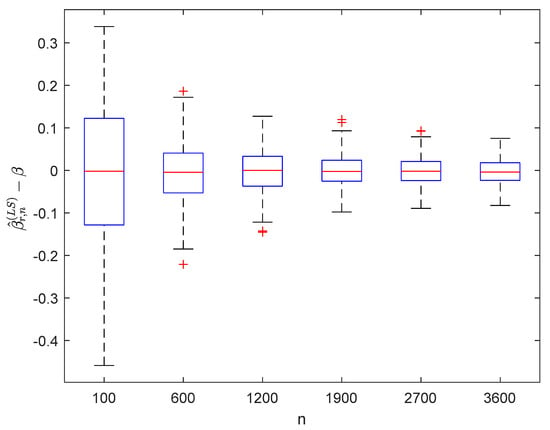

Figure 3.

Boxplots of with and .

Figure 4.

Boxplots of with and .

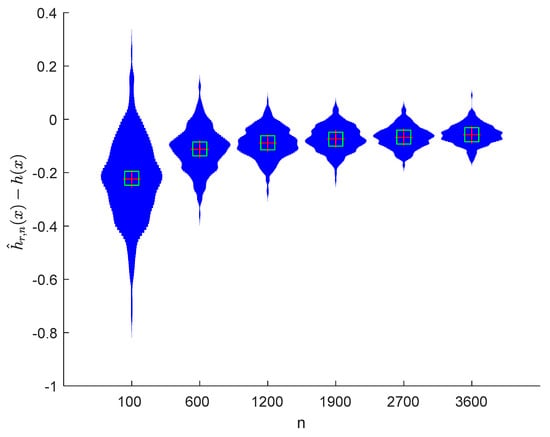

Figure 5.

Violin plots of with and .

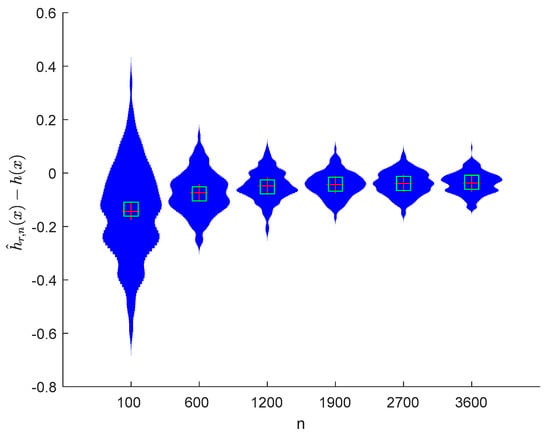

Figure 6.

Violin plots of with and .

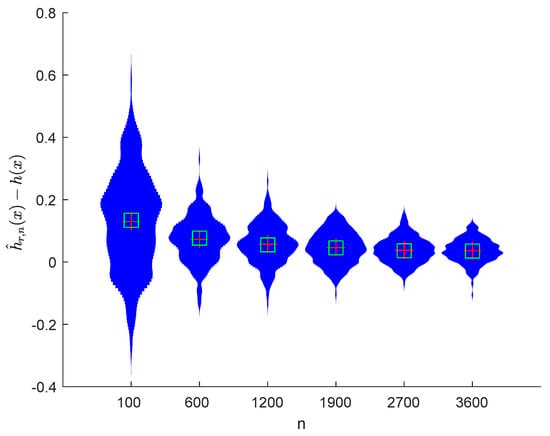

Figure 7.

Violin plots of with and .

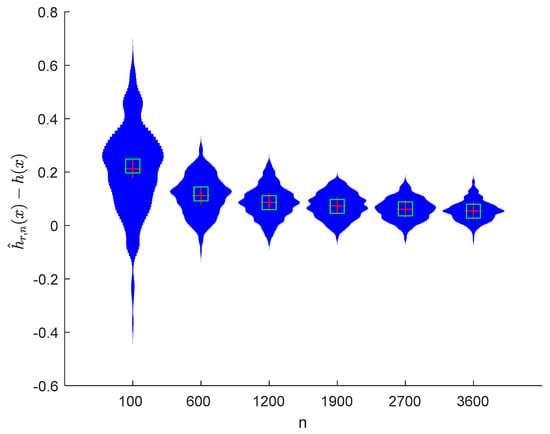

Figure 8.

Violin plots of with and .

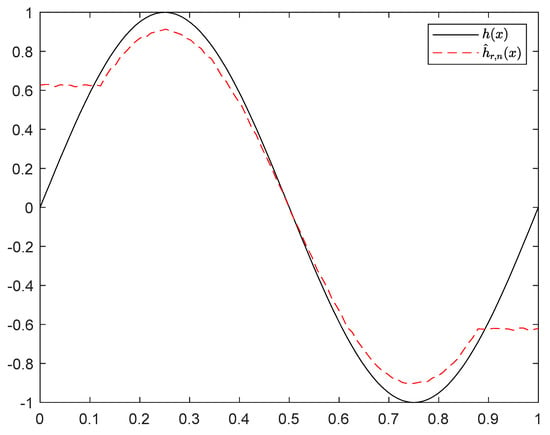

Figure 9.

Curves of and with and .

Table 1.

The MSEs of with and .

Table 2.

The MSEs of with and .

6.2. Simulation 2

We simulate the partially linear model

where , , , , , and the random errors have the same distribution as in Example 1 of Section 1. Then, is a ρ−-mixing sequence that is neither NA nor ρ*-mixing.

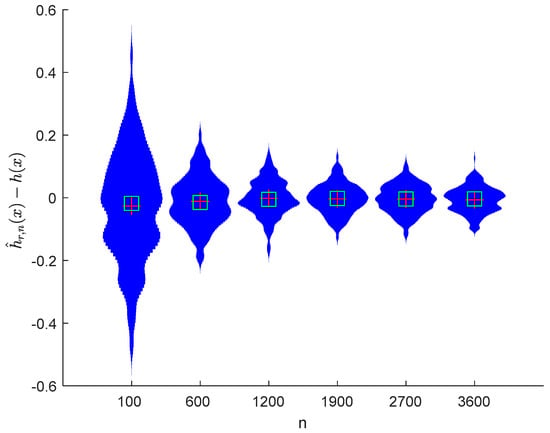

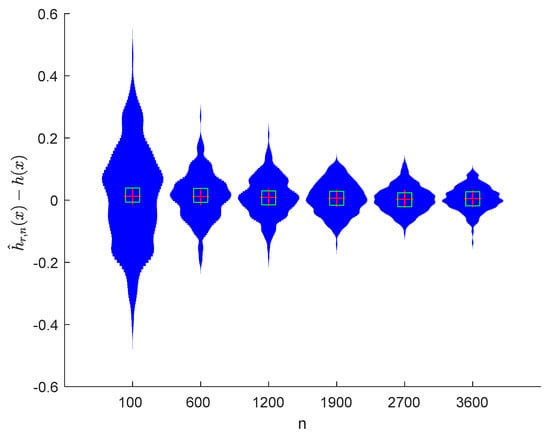

Using the same estimation method as for model (53), we compute and times for model (54) under different values of . Boxplots of are shown in Figures 10–13, violin plots of in Figures 14–17, and curves of and in Figure 18; the MSEs of and are reported in Tables 3 and 4, respectively.

Figure 10.

Boxplots of with and .

Figure 11.

Boxplots of with and .

Figure 12.

Boxplots of with and .

Figure 13.

Boxplots of with and .

Figure 14.

Violin plots of with and .

Figure 15.

Violin plots of with and .

Figure 16.

Violin plots of with and .

Figure 17.

Violin plots of with and .

Figure 18.

Curves of and with and .

Table 3.

The MSEs of with and .

Table 4.

The MSEs of with and .

It can be seen from Figures 1–8 and Figures 10–17 that, regardless of the value of , and fluctuate around zero and their ranges decrease as increases. From Tables 1–4, one can see that, regardless of the value of , the MSEs decrease gradually as increases. Hence, the estimators get closer to the true values as increases. Figures 9 and 18 further show that the estimators of the function perform well. The simulation results support our theoretical results.
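To make the design of such an experiment concrete, the Python sketch below runs a small Monte Carlo study of the LS and WLS estimators of $\beta$ and reports their empirical MSEs. It is not a reproduction of Simulations 1 and 2: the errors are i.i.d. standard normal (a trivially ρ−-mixing stand-in for the construction of Example 1), and $\beta=1.5$, $g(t)=\sin(2\pi t)$, the alternating design $x_i$, the scales $\sigma_i=0.5+0.5t_i$ (treated as known), $k_n=\lfloor n^{0.6}\rfloor$, and 200 replications are all hypothetical choices.

```python
import numpy as np


def nn_weight_matrix(t, k):
    """Row i: weight 1/k on the k design points nearest to t_i (ties -> smaller index)."""
    n = t.size
    W = np.zeros((n, n))
    for i in range(n):
        order = np.argsort(np.abs(t - t[i]), kind="stable")
        W[i, order[:k]] = 1.0 / k
    return W


def simulate_mse(n, reps=200, beta=1.5, seed=0):
    """Empirical MSEs of the LS and WLS estimators of beta (hypothetical design)."""
    rng = np.random.default_rng(seed)
    idx = np.arange(1, n + 1)
    t = idx / n                             # design points t_i in (0, 1]
    x = 1.0 + 0.5 * (-1.0) ** idx           # nonrandom x_i alternating 0.5, 1.5
    sigma = 0.5 + 0.5 * t                   # heteroscedastic scales sigma_i
    g = np.sin(2.0 * np.pi * t)             # true non-parametric component
    W = nn_weight_matrix(t, max(1, int(n ** 0.6)))
    x_tilde = x - W @ x
    a = 1.0 / sigma ** 2                    # WLS weights, sigma_i treated as known
    err_ls = np.empty(reps)
    err_wls = np.empty(reps)
    for r in range(reps):
        e = rng.standard_normal(n)          # i.i.d. stand-in for rho^- errors
        y = beta * x + g + sigma * e
        y_tilde = y - W @ y
        err_ls[r] = np.sum(x_tilde * y_tilde) / np.sum(x_tilde ** 2) - beta
        err_wls[r] = np.sum(a * x_tilde * y_tilde) / np.sum(a * x_tilde ** 2) - beta
    return np.mean(err_ls ** 2), np.mean(err_wls ** 2)


if __name__ == "__main__":
    for n in (50, 100, 200, 400, 800):
        mse_ls, mse_wls = simulate_mse(n)
        print(f"n={n:4d}  MSE(LS)={mse_ls:.5f}  MSE(WLS)={mse_wls:.5f}")
```

With these settings the empirical MSEs should shrink roughly like $1/n$, which mirrors the qualitative pattern described for Tables 1–4; the numbers themselves are not comparable with the paper's.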

7. Conclusions

In this paper, we investigated the asymptotic properties of the estimators of the unknown parameter and the non-parametric component in the heteroscedastic partially linear model (1). Many authors have derived asymptotic properties of estimators in partially linear models with independent random errors (see [4,5,6,8,33]). However, in many applications, the random errors are not independent. Here, we assumed that the random errors are ρ−-mixing, which includes independent, NA, and ρ*-mixing random variables as special cases. Under suitable conditions, we established the strong consistency and p-th mean consistency of the LS and WLS estimators of the unknown parameter, as well as the strong consistency and p-th mean consistency of the estimators of the non-parametric component. These results include the corresponding ones for independent random errors, NA random errors (see [16]), and ρ*-mixing random errors as special cases. Furthermore, for model (1), we carried out, for the first time, simulations to study the finite-sample performance of the estimators of the unknown parameter and the non-parametric component. -mixing sequences are widely used dependent sequences. Therefore, investigating the limit properties of the estimators in regression models under -mixing errors is an interesting subject for future study.

Author Contributions

Methodology, Software, Writing—original draft, and Writing—review and editing, Y.Z.; Funding acquisition, Supervision, and Project administration, X.L.; Validation, Y.Z. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (61374183) and the Project of Guangxi Education Department (2017KY0720).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Engle, R.; Granger, C.; Rice, J.; Weiss, A. Nonparametric estimates of the relation between weather and electricity sales. J. Am. Stat. Assoc. 1986, 81, 310–320.

- Heckman, N. Spline smoothing in partly linear models. J. R. Stat. Soc. Ser. B 1986, 48, 244–248.

- Speckman, P. Kernel smoothing in partial linear models. J. R. Stat. Soc. Ser. B 1988, 50, 413–436.

- Gao, J.T. Consistency of estimation in a semiparametric regression model (I). J. Syst. Sci. Math. Sci. 1992, 12, 269–272.

- Härdle, W.; Liang, H.; Gao, J.T. Partially Linear Models; Physica-Verlag: Heidelberg, Germany, 2000.

- Hu, H.C.; Zhang, Y.; Pan, X. Asymptotic normality of DHD estimators in a partially linear model. Stat. Papers 2016, 57, 567–587.

- Zeng, Z.; Liu, X.D. Asymptotic normality of difference-based estimator in partially linear model with dependent errors. J. Inequal. Appl. 2018, 2018, 267.

- Gao, J.T.; Chen, X.R.; Zhao, L.C. Asymptotic normality of a class of estimators in partial linear models. Acta Math. Sin. 1994, 37, 256–268.

- Baek, J.; Liang, H.Y. Asymptotics of estimators in semi-parametric model under NA samples. J. Stat. Plan. Inference 2006, 136, 3362–3382.

- Zhou, X.C.; Hu, S.H. Moment consistency of estimators in semiparametric regression model under NA samples. Pure Appl. Math. 2010, 6, 262–269.

- Hu, S.H. Consistency estimate for a new semiparametric regression model. Acta Math. Sci. 1997, 40, 527–536.

- Li, J.; Yang, S.C. Moment consistency of estimators for semiparametric regression. Acta Math. Appl. Sin. 2004, 17, 257–262.

- Li, J.; Yang, S.C. Strong consistency of estimators for semiparametric regression. J. Math. Study 2004, 37, 431–437.

- Wang, X.J.; Deng, X.; Xia, F.X.; Hu, S.H. The consistency for the estimators of semiparametric regression model based on weakly dependent errors. Stat. Papers 2017, 58, 303–318.

- Wu, Y.; Wang, X.J. A note on the consistency for the estimators of semiparametric regression model. Stat. Papers 2018, 59, 1117–1130.

- Zhou, X.C.; Liu, X.S.; Hu, S.H. Moment consistency of estimators in partially linear models under NA samples. Metrika 2010, 72, 415–432.

- Joag-Dev, K.; Proschan, F. Negative association of random variables with applications. Ann. Stat. 1983, 11, 286–295.

- Bradley, R.C. On the spectral density and asymptotic normality of weakly dependent random fields. J. Theor. Probab. 1992, 5, 355–373.

- Zhang, L.X.; Wang, X.Y. Convergence rates in the strong laws of asymptotically negatively associated random fields. Appl. Math. J. Chin. Univ. 1999, 14, 406–416.

- Zhang, L.X. A functional central limit theorem for asymptotically negatively dependent random fields. Acta Math. Hung. 2000, 86, 237–259.

- Liang, H.Y. Complete convergence for weighted sums of negatively associated random variables. Stat. Probab. Lett. 2000, 48, 317–325.

- Wu, Q.Y. Strong consistency of M estimator in linear model for negatively associated samples. J. Syst. Sci. Complex. 2006, 19, 592–600.

- Thanh, L.V.; Yin, G.G.; Wang, L.Y. State observers with random sampling times and convergence analysis of double-indexed and randomly weighted sums of mixing processes. SIAM J. Control Optim. 2011, 49, 106–124.

- Tang, X.F.; Xi, M.M.; Wu, Y.; Wang, X.J. Asymptotic normality of a wavelet estimator for asymptotically negatively associated errors. Stat. Probab. Lett. 2018, 140, 191–201.

- Wang, J.F.; Lu, F.B. Inequalities of maximum partial sums and weak convergence for a class of weak dependent random variables. Acta Math. Sin. 2006, 22, 693–700.

- Yuan, D.M.; Wu, X.S. Limiting behavior of the maximum of the partial sum for asymptotically negatively associated random variables under residual Cesàro alpha-integrability assumption. J. Stat. Plan. Inference 2010, 140, 2395–2402.

- Zhang, L.X. Central limit theorems for asymptotically negatively associated random fields. Acta Math. Sin. 2000, 16, 691–710.

- Chen, Z.; Lu, C.; Shen, Y.; Wang, R.; Wang, X.J. On complete and complete moment convergence for weighted sums of ANA random variables and applications. J. Stat. Comput. Simul. 2019, 89, 2871–2898.

- Zhang, Y. Complete moment convergence for moving average process generated by ρ−-mixing random variables. J. Inequal. Appl. 2015, 2015, 245.

- Wu, Q.Y.; Jiang, Y.Y. Some limiting behavior for asymptotically negative associated random variables. Probab. Eng. Inf. Sci. 2018, 32, 58–66.

- Xu, F.; Wu, Q.Y. Almost sure central limit theorem for self-normalized partial sums of ρ−-mixing sequences. Stat. Probab. Lett. 2017, 129, 17–27.

- Shen, A.T.; Zhang, Y.; Volodin, A. Applications of the Rosenthal-type inequality for negatively superadditive dependent random variables. Metrika 2015, 78, 295–311.

- Chen, M.H.; Ren, Z.; Hu, S.H. Strong consistency of a class of estimators in partial linear model. Acta Math. Sin. 1998, 41, 429–439.

- Zhou, X.C.; Hu, S.H. Strong consistency of estimators in partially linear models under NA samples. J. Syst. Sci. Math. Sci. 2010, 30, 60–71.

- Hu, S.H. Fixed-design semiparametric regression for linear time series. Acta Math. Sci. 2006, 26, 74–82.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).