An Application of the Kalman Filter Recursive Algorithm to Estimate the Gaussian Errors by Minimizing the Symmetric Loss Function

Abstract

1. Introduction

2. Materials and Methods

2.1. The Kalman Filter Model

- $\varphi_m$: white noise

- $D_m$: carrier signal.

2.2. The General Case of the Kalman Filter

2.3. The Gaussian Case

- $X_m$ and $Z_m$ are deterministic $r \times r$ and $p \times r$ matrices, respectively, and

- $A_m \in \mathbb{R}^r$, $\theta_m \in \mathbb{R}^q$, $\varphi_m$, and $B_m \in \mathbb{R}^p$ are random variables.

3. Applications to Kinetic Models

3.1. A Recursive KF Algorithm Application

- is a known state transition matrix applied to the state $x_{t-1}$ at time $t-1$,

- is the variance matrix, and

- is a process noise vector, which has the joint distribution as a multivariate Gaussian with a variance matrix and for .

- $v_t$ is the observation noise and

- $H_t$ is an observation matrix.
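The quantities listed above (state transition matrix, process noise variance, observation matrix, observation noise) are the ingredients of one predict/update cycle of a linear Kalman filter. The following is a minimal sketch of that cycle in plain Python, not the authors' implementation. The names `F`, `Q`, `h` and `r` are assumptions chosen for illustration, since the original symbols did not survive, and the observation is assumed to be scalar so that no matrix inversion is needed. Under the linear Gaussian assumptions, the gain computed here minimizes the expected squared estimation error, which is a symmetric quadratic loss.

```python
def mat_mul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(row) for row in zip(*a)]


def mat_add(a, b):
    """Add two matrices of the same shape element-wise."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def kf_predict(x, P, F, Q):
    """Prediction step: x_pred = F x, P_pred = F P F^T + Q."""
    n = len(x)
    x_pred = [sum(F[i][j] * x[j] for j in range(n)) for i in range(len(F))]
    P_pred = mat_add(mat_mul(mat_mul(F, P), transpose(F)), Q)
    return x_pred, P_pred


def kf_update_scalar(x_pred, P_pred, z, h, r):
    """Update step for a scalar observation z = h . x + v with Var(v) = r."""
    n = len(x_pred)
    Ph = [sum(P_pred[i][j] * h[j] for j in range(n)) for i in range(n)]  # P h^T
    hP = [sum(h[i] * P_pred[i][j] for i in range(n)) for j in range(n)]  # h P
    s = sum(h[i] * Ph[i] for i in range(n)) + r      # innovation variance
    k = [Ph[i] / s for i in range(n)]                # Kalman gain
    innov = z - sum(h[i] * x_pred[i] for i in range(n))
    x_new = [x_pred[i] + k[i] * innov for i in range(n)]
    # Covariance update P_new = P_pred - k (h P_pred)
    P_new = [[P_pred[i][j] - k[i] * hP[j] for j in range(n)] for i in range(n)]
    return x_new, P_new


# Hypothetical example: state = [position, velocity], unit time step,
# no process noise, position observed with noise variance 2.
F = [[1.0, 1.0], [0.0, 1.0]]
Q = [[0.0, 0.0], [0.0, 0.0]]
x_pred, P_pred = kf_predict([0.0, 1.0], [[1.0, 0.0], [0.0, 1.0]], F, Q)
x_new, P_new = kf_update_scalar(x_pred, P_pred, z=2.0, h=[1.0, 0.0], r=2.0)
print(x_new)  # [1.5, 1.25]
print(P_new)  # [[1.0, 0.5], [0.5, 0.75]]
```

In the example the predicted position is 1 and the measurement is 2. The gain of 0.5 on the position moves the estimate halfway between them, and the position variance drops from 2 to 1.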

3.2. EKF Recursive Algorithm Application

3.3. Simulation Using Synthetic Data

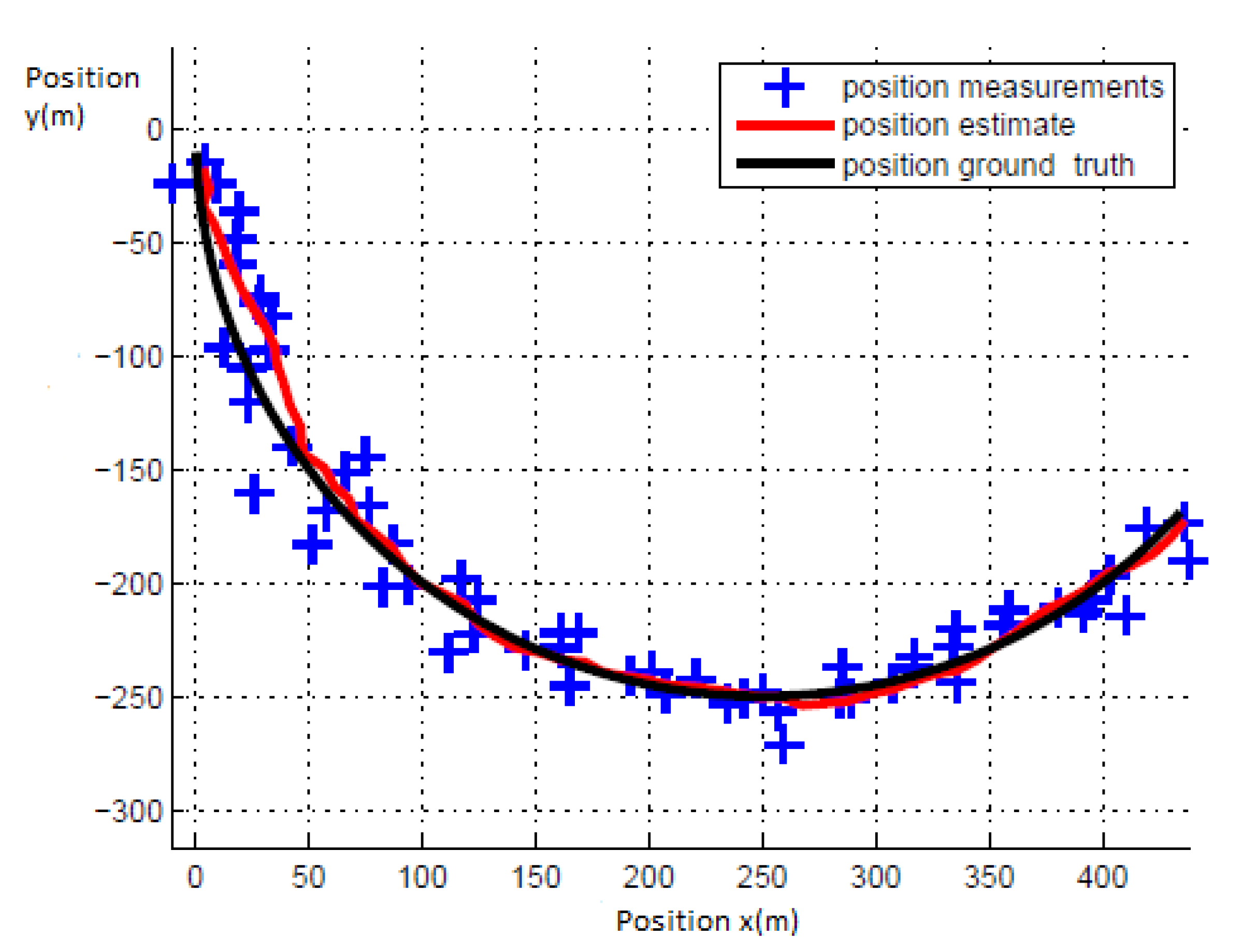

- The red line represents the estimated vehicle trajectory.

- The black line stands for the ground truth of the vehicle trajectory.

- The blue crosses are the GPS measurements of the position of the vehicle.
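The three series in the figure legend (ground truth, GPS measurements, filtered estimate) can be reproduced in spirit with a small synthetic experiment. The sketch below is an illustration under stated assumptions, not the simulation used in the paper: it tracks a single coordinate of a vehicle moving at constant velocity, and the function name `simulate_and_filter`, the noise levels, the process noise `q` and the initial covariance are all hypothetical choices.

```python
import math
import random


def rmse(a, b):
    """Root-mean-square error between two equally long sequences."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)) / len(a))


def simulate_and_filter(n_steps=200, dt=1.0, gps_std=5.0, q=0.01, seed=0):
    """Simulate constant-velocity motion along one axis and filter noisy
    GPS-like position readings with a two-state (position, velocity) filter."""
    rng = random.Random(seed)
    true_pos, true_vel = 0.0, 2.0      # assumed ground-truth motion
    r = gps_std ** 2                   # measurement noise variance

    x = [0.0, 0.0]                     # initial state guess
    P = [[1e3, 0.0], [0.0, 1e3]]       # large initial uncertainty

    truth, meas, est = [], [], []
    for _ in range(n_steps):
        # Ground truth and noisy position measurement.
        true_pos += true_vel * dt
        z = true_pos + rng.gauss(0.0, gps_std)

        # Predict with F = [[1, dt], [0, 1]] and Q = q * I.
        x = [x[0] + dt * x[1], x[1]]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + q

        # Update with observation row h = [1, 0] (position only).
        s = p00 + r                    # innovation variance
        k0, k1 = p00 / s, p10 / s      # Kalman gain
        innov = z - x[0]
        x = [x[0] + k0 * innov, x[1] + k1 * innov]
        P = [[p00 - k0 * p00, p01 - k0 * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]

        truth.append(true_pos)
        meas.append(z)
        est.append(x[0])

    return rmse(meas, truth), rmse(est, truth), truth, meas, est


rmse_meas, rmse_est, truth, meas, est = simulate_and_filter()
print(f"RMSE of raw measurements: {rmse_meas:.2f}")
print(f"RMSE of filtered estimate: {rmse_est:.2f}")
```

Here `truth`, `meas` and `est` play the roles of the black line, the blue crosses and the red line, respectively. With the settings assumed above, the filtered estimate should track the ground truth more closely than the raw measurements do.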

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Busu, C.; Busu, M. An Application of the Kalman Filter Recursive Algorithm to Estimate the Gaussian Errors by Minimizing the Symmetric Loss Function. Symmetry 2021, 13, 240. https://doi.org/10.3390/sym13020240
