1. Introduction

During underground operations, rockburst is a sudden and violent release of elastic energy stored in rock and coal masses. It causes rock fragments to be ejected, potentially resulting in injury, collapse, and deformation of support structures, as well as damage to facilities [1,2,3]. Similar activity occurs in open cuts in jointed rock masses [4,5]. Its economic consequences are significant for both the civil and mining engineering industries. Owing to the complexity and uncertainty of rockburst, its mechanism is not yet well understood. To mitigate the risks caused by rockburst, such as damage to equipment, access closure, delays, and loss of property and life, it is important to accurately predict or estimate the realistic rockburst potential for the safe and efficient construction and serviceability of underground projects.

Conventional mechanics-based methods fail to provide precise rockburst hazard detection because of the highly complex relationships among the geological, geometric, and mechanical parameters of rock masses in underground environments. Furthermore, mechanics-based methods rest on several underlying assumptions which, if violated, may yield biased model predictions. This has led many researchers in recent years to investigate alternative methods for better prediction and detection of rockburst hazard. Various indicators have been proposed to assess burst potential. The strain energy storage index (Wet), proposed by Kidybinski [6], is the ratio of the strain energy stored (Wsp) to the strain energy dissipated (Wst). Wattimena et al. [7] used the elastic strain energy density as a measure of burst potential. The rock brittleness coefficient, based on the ratio of uniaxial compressive strength (UCS) to tensile strength, is another widely used burst liability index [8]. The tangential stress criterion, i.e., the ratio of the tangential stress around an underground excavation (σθ) to the UCS of the rock (σc), can be employed to assess the risk of rockburst [9]. An energy-based burst potential index was developed by Mitri et al. [10] to diagnose burst proneness. Although many techniques have been developed over the last few decades to predict or assess rockburst, none has yet become widely accepted or preferred over the others.
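The ratio-type indicators above are straightforward to compute once the input quantities are known. The sketch below is a minimal illustration, assuming consistent units (stresses in MPa); the function names are hypothetical, and the elastic strain energy density uses the linear-elastic form σc²/(2E) with an elastic modulus E, which is an assumption here rather than the exact expression used by Wattimena et al. [7]. The Mitri et al. [10] index is not reproduced.

```python
# Sketch of the ratio-type rockburst indicators discussed above.
# Function names are hypothetical; inputs are assumed to be in consistent
# units (stresses in MPa).


def strain_energy_storage_index(w_sp: float, w_st: float) -> float:
    """Kidybinski's Wet: strain energy stored (Wsp) / strain energy dissipated (Wst)."""
    if w_st <= 0:
        raise ValueError("dissipated strain energy must be positive")
    return w_sp / w_st


def brittleness_coefficient(sigma_c: float, sigma_t: float) -> float:
    """Rock brittleness coefficient: UCS (sigma_c) / uniaxial tensile strength (sigma_t)."""
    if sigma_t <= 0:
        raise ValueError("tensile strength must be positive")
    return sigma_c / sigma_t


def tangential_stress_ratio(sigma_theta: float, sigma_c: float) -> float:
    """Tangential stress criterion: sigma_theta / sigma_c."""
    if sigma_c <= 0:
        raise ValueError("UCS must be positive")
    return sigma_theta / sigma_c


def elastic_strain_energy_density(sigma_c: float, e_modulus: float) -> float:
    """Assumed linear-elastic form sigma_c**2 / (2 E); MPa inputs give MJ/m^3."""
    if e_modulus <= 0:
        raise ValueError("elastic modulus must be positive")
    return sigma_c ** 2 / (2.0 * e_modulus)


if __name__ == "__main__":
    # Hypothetical input values, for illustration only.
    print(strain_energy_storage_index(w_sp=45.0, w_st=9.0))  # 5.0
    print(brittleness_coefficient(sigma_c=120.0, sigma_t=6.0))  # 20.0
    print(tangential_stress_ratio(sigma_theta=60.0, sigma_c=120.0))  # 0.5
    print(elastic_strain_energy_density(sigma_c=120.0, e_modulus=30000.0))  # 0.24
```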

Over the past few decades, data mining techniques have proven efficient at capturing complex non-linear relationships between predictor and response variables, and, as case history information becomes increasingly available, they may be used to identify sites that are prone to rockburst events. Several approaches have been proposed to predict rockburst, such as Support Vector Machine (SVM) [11], Artificial Neural Networks (ANNs) [12], Distance Discriminant Analysis (DDA) [13], Bayes Discriminant Analysis (BDA) [14], and Fisher Linear Discriminant Analysis (LDA) [15]. Moreover, some systems are based on hybrid (Zhou et al. [16]; Adoko et al. [17]; Liu et al. [18]) or ensemble (Ge and Feng [19]; Dong et al. [20]) learning methods for long-term rockburst prediction, and their prediction accuracies have been compared. Zhao and Chen [21] recently developed a data-driven model based on a convolutional neural network (CNN) and compared it with a traditional neural network; the proposed CNN model showed higher potential for rockburst prediction than the conventional neural network. These algorithms used various rockburst indicators as input features, and the sizes of their training samples varied. While most of the aforementioned techniques have been effective in predicting rockburst hazard, they have shortcomings. For example, in the ANN method the optimal structure (e.g., number of inputs, hidden layers, and transfer functions) must be specified a priori, which is usually accomplished by trial and error. Another major limitation is the black-box nature of the ANN model: the relationship between the system’s input and output parameters is described in terms of a weight matrix and biases that are not available to the user [22].

Table 1 summarizes the main rockburst prediction studies that used machine learning (ML) methods, together with their input parameters and accuracy.

The majority of the established models (Table 1) are black boxes, meaning that they do not show a clear and understandable relationship between input and output. These studies attempted to solve the problem of rockburst prediction, but none was entirely successful. A particular procedure may be appropriate in some instances but not in others. Notably, the reported accuracy ranges from 66.5% to 100%, a large variation in rockburst prediction performance. Rockburst prediction is a complex and nonlinear problem that is hindered by model and parameter uncertainty and limited by inadequate knowledge, poor information characterization, and noisy data. Machine learning has been widely recognized in mining and geotechnical engineering applications for dealing with nonlinear problems and developing predictive data-mining models [25,26,27,28,29,30,31].

In this study, the random tree and J48 algorithms were selected on the basis of these considerations: they are commonly used in civil engineering, they have not yet been thoroughly compared with each other, and they are available as open-source software. The primary aim of this research was to evaluate and compare the suitability of the random tree and J48 algorithms for rockburst hazard prediction in underground projects. First, rockburst hazard classification cases are collected from the published literature. Next, the two algorithms are used to predict the rockburst hazard class. Finally, their performance is evaluated in detail and compared with that of empirical models.

3. Construction of Prediction Model

The procedure for constructing the prediction model is presented in Figure 3. The data are divided into two subsets, i.e., training and testing: 137 cases are used to train the model and the remaining 28 cases are used to test it. A hold-out technique is used to tune the hyperparameters of the model, and the prediction model is then fitted on the training dataset using the optimal hyperparameter configuration. Several performance indexes, namely classification accuracy (ACC), the kappa statistic, producer accuracy (PA), and user accuracy (UA), are used to evaluate model performance. Finally, the optimum model is selected by evaluating the overall performance of these models. If its predictive performance is satisfactory, the model can be adopted for deployment. All calculations are carried out in WEKA.
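A minimal sketch of the 137/28 hold-out split in plain Python, assuming a simple seeded random split: the text does not say how the 28 test cases were selected (randomly, stratified by class, or otherwise), so `holdout_split` and its `seed` parameter are hypothetical and illustrative only.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def holdout_split(cases: Sequence[T], n_test: int, seed: int = 0) -> Tuple[List[T], List[T]]:
    """Split `cases` into (train, test) with exactly `n_test` test cases.

    A plain seeded random split (not stratified by class); hypothetical helper.
    """
    if not 0 < n_test < len(cases):
        raise ValueError("n_test must be between 1 and len(cases) - 1")
    indices = list(range(len(cases)))
    random.Random(seed).shuffle(indices)  # fixed seed -> reproducible split
    test_idx = set(indices[:n_test])
    train = [c for i, c in enumerate(cases) if i not in test_idx]
    test = [c for i, c in enumerate(cases) if i in test_idx]
    return train, test


if __name__ == "__main__":
    cases = list(range(165))  # placeholder IDs standing in for the 165 records
    train, test = holdout_split(cases, n_test=28)
    print(len(train), len(test))  # 137 28
```

A stratified split (sampling the test cases separately within each rockburst class) would keep class ratios closer between the two subsets; whether that was done here is not stated.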

3.1. Hyperparameter Optimization

Tuning is the process of maximizing a model’s performance while avoiding overfitting or excessive variance. In machine learning, this is achieved by choosing suitable “hyperparameters”. Choosing the right set of hyperparameters is critical for model accuracy but can be computationally challenging. Hyperparameters differ from other model parameters in that they are not learned automatically during training; instead, they must be set manually. The critical hyperparameters of the random tree and J48 algorithms, such as the minimum number of instances per leaf and the confidence factor, are tuned to determine their optimum values. For both algorithms, a trial-and-error approach is used to evaluate these parameters within a specified search range to achieve the best classification accuracy, and the search range for the same hyperparameter is kept consistent between the algorithms. The optimal values for each set of hyperparameters are those that give the maximum accuracy. In this analysis, the optimum J48 configuration had a minimum of 1 instance per leaf and a confidence factor of 0.25, giving a tree of size 63 with 32 leaves; the optimum random tree had a minimum total weight of instances per leaf of 1, giving a tree of size 103.
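The trial-and-error search can be sketched as an exhaustive grid search that keeps the most accurate combination. This is an illustration under stated assumptions: `grid_search` is a hypothetical helper, and `toy_accuracy` is a made-up stand-in scoring function, not a J48 or random tree model and not the study's results. In practice, `evaluate` would build the tree with the given hyperparameters and return its classification accuracy.

```python
from itertools import product
from typing import Any, Callable, Dict, Iterable, Mapping, Tuple


def grid_search(
    search_space: Mapping[str, Iterable[Any]],
    evaluate: Callable[..., float],
) -> Tuple[Dict[str, Any], float]:
    """Evaluate every hyperparameter combination; keep the most accurate one."""
    names = list(search_space)
    grids = [list(search_space[name]) for name in names]
    best_params: Dict[str, Any] = {}
    best_score = float("-inf")
    for values in product(*grids):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if score > best_score:  # strict '>' keeps the first combination on ties
            best_params, best_score = params, score
    return best_params, best_score


def toy_accuracy(min_leaf: int, confidence: float) -> float:
    """Stand-in for 'train a tree, return hold-out accuracy'; NOT real results."""
    return 0.9 - 0.01 * abs(min_leaf - 2) - abs(confidence - 0.25)


if __name__ == "__main__":
    space = {"min_leaf": [1, 2, 3, 5], "confidence": [0.1, 0.25, 0.5]}
    best, score = grid_search(space, toy_accuracy)
    print(best, round(score, 3))  # {'min_leaf': 2, 'confidence': 0.25} 0.9
```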

3.2. Model Evaluation Indexes

J48 and random tree algorithms are used, as described in Section 2.2, to construct a predictive model for the classification of rockburst hazard. The confusion matrix is a table that visualizes the prediction results: each row of the matrix represents the cases in the actual class, while each column displays the cases in the predicted class. It is normally constructed with m rows and m columns, where m is the number of classes. The accuracy, kappa, producer accuracy, and user accuracy computed from the confusion matrix are used to test the efficacy of the predictive model. Let $x_{ij}$ ($i, j = 1, 2, \ldots, m$) be the joint frequency of observations assigned to class $i$ by prediction and to class $j$ by observation, let $x_{i+}$ be the total frequency of class $i$ as obtained from prediction, and let $x_{+j}$ be the total frequency of class $j$ based on observed data (see Table 5).

Classification accuracy (ACC) is determined by summing the correctly classified instances on the main diagonal and dividing by the total number of instances. The ACC is given as:

$$\mathrm{ACC} = \frac{1}{n}\sum_{i=1}^{m} x_{ii}$$

Cohen’s kappa index is a robust index that accounts for the likelihood of correct classification by chance; it measures the proportion of correctly categorized units after the probability of chance agreement has been removed [43]. Kappa can be calculated using the following formula:

$$\kappa = \frac{n\sum_{i=1}^{m} x_{ii} - \sum_{i=1}^{m} x_{i+}\,x_{+i}}{n^{2} - \sum_{i=1}^{m} x_{i+}\,x_{+i}}$$

where $n$ is the number of instances, $m$ is the number of class values, $x_{ii}$ is the count in the $i$-th cell of the main diagonal, and $x_{i+}$ and $x_{+i}$ are the cumulative counts of the rows and columns, respectively.
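The ACC and kappa definitions above can be checked with a short function that works directly on the m × m count matrix $x_{ij}$. This is a sketch; the function name `accuracy_and_kappa` and the 2 × 2 example matrix are hypothetical, not data from this study.

```python
from typing import Sequence, Tuple


def accuracy_and_kappa(cm: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Return (ACC, kappa) for an m x m confusion matrix of counts x_ij."""
    m = len(cm)
    n = sum(sum(row) for row in cm)                                # total instances
    diag = sum(cm[i][i] for i in range(m))                         # sum of x_ii
    row_tot = [sum(cm[i]) for i in range(m)]                       # x_{i+}
    col_tot = [sum(cm[i][j] for i in range(m)) for j in range(m)]  # x_{+j}
    chance = sum(row_tot[i] * col_tot[i] for i in range(m))        # sum of x_{i+} x_{+i}
    denom = n * n - chance
    if n == 0 or denom == 0:
        raise ValueError("kappa is undefined for this matrix")
    return diag / n, (n * diag - chance) / denom


if __name__ == "__main__":
    example = [[20, 5], [10, 15]]  # hypothetical 2-class counts
    print(accuracy_and_kappa(example))  # (0.7, 0.4)
```

In the example, 35 of 50 cases lie on the diagonal (ACC = 0.7), but the chance term $\sum x_{i+}x_{+i} = 1250$ pulls kappa down to 0.4, which is why kappa is the stricter of the two measures.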

Landis and Koch [44] suggested a scale to show the degree of agreement (see Table 6). A kappa value of less than 0.4 indicates poor agreement, while a value of 0.4 or above indicates good agreement (Sakiyama et al. [45]; Landis and Koch [44]). The producer’s accuracy of class $i$ ($\mathrm{PA}_i$) can be measured using Congalton and Green’s formula [46]:

$$\mathrm{PA}_i = \frac{x_{ii}}{x_{+i}}$$

and the user’s accuracy of class $i$ ($\mathrm{UA}_i$) can be found as:

$$\mathrm{UA}_i = \frac{x_{ii}}{x_{i+}}$$
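Likewise, a minimal sketch of the per-class PA and UA, following the $x_{ij}$ definition above (rows indexed by predicted class, columns by observed class). `landis_koch_label` maps kappa to the bands commonly quoted from Landis and Koch [44] (slight up to 0.20, fair up to 0.40, moderate up to 0.60, substantial up to 0.80, almost perfect above); Table 6 may state the boundaries slightly differently. All names and the example matrix are hypothetical.

```python
from typing import List, Sequence, Tuple


def producer_user_accuracy(cm: Sequence[Sequence[int]]) -> Tuple[List[float], List[float]]:
    """Per-class (PA, UA) with rows = predicted class, columns = observed class.

    PA_i = x_ii / x_{+i} (column total), UA_i = x_ii / x_{i+} (row total).
    A class with a zero total gets float('nan').
    """
    m = len(cm)
    row_tot = [sum(cm[i]) for i in range(m)]
    col_tot = [sum(cm[i][j] for i in range(m)) for j in range(m)]
    pa = [cm[i][i] / col_tot[i] if col_tot[i] else float("nan") for i in range(m)]
    ua = [cm[i][i] / row_tot[i] if row_tot[i] else float("nan") for i in range(m)]
    return pa, ua


def landis_koch_label(kappa: float) -> str:
    """Agreement band for kappa, using the commonly quoted Landis-Koch cut-offs."""
    if kappa < 0:
        return "poor"
    bands = ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial"))
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


if __name__ == "__main__":
    pa, ua = producer_user_accuracy([[20, 5], [10, 15]])  # hypothetical counts
    print(pa, ua)
    print(landis_koch_label(0.904))  # almost perfect
```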

The suitability of the J48 and random tree models for predicting rockburst is then studied. The models are compared with two traditional empirical rockburst criteria, the Russenes criterion [47] and the rock brittleness coefficient criterion [48], and with the ANN [21] and CNN [21] models, using the performance measures ACC, PA, UA, and kappa.
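For reference, both empirical criteria reduce to threshold tests on a single ratio. The cut-offs below (0.20, 0.30, and 0.55 for σθ/σc; 40, 26.7, and 14.5 for σc/σt) are the values commonly quoted in the rockburst literature and are assumptions here, as are the boundary conventions and the in-order mapping of the four bands onto the four classes used in this study. Function names are hypothetical.

```python
from bisect import bisect_right

# Class labels used in this study, ordered from least to most severe.
CLASSES = ("no rockburst", "moderate rockburst", "strong rockburst", "violent rockburst")

# Commonly quoted cut-offs (assumed here, not taken from this paper).
RUSSENES_CUTS = (0.20, 0.30, 0.55)     # on sigma_theta / sigma_c, increasing severity
BRITTLENESS_CUTS = (40.0, 26.7, 14.5)  # on sigma_c / sigma_t, decreasing severity


def russenes_class(sigma_theta: float, sigma_c: float) -> str:
    """Russenes criterion: a higher sigma_theta / sigma_c means a more severe class."""
    ratio = sigma_theta / sigma_c
    return CLASSES[bisect_right(RUSSENES_CUTS, ratio)]


def brittleness_class(sigma_c: float, sigma_t: float) -> str:
    """Brittleness criterion: a lower sigma_c / sigma_t means a more severe class."""
    b = sigma_c / sigma_t
    for level, cut in enumerate(BRITTLENESS_CUTS):
        if b > cut:
            return CLASSES[level]
    return CLASSES[-1]


if __name__ == "__main__":
    # Hypothetical inputs in MPa.
    print(russenes_class(sigma_theta=60.0, sigma_c=120.0))  # strong rockburst
    print(brittleness_class(sigma_c=120.0, sigma_t=6.0))    # strong rockburst
```

Because each criterion looks at only one ratio, it cannot combine information from σθ, σc, σt, and Wet at once, which is what the tree models do.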

4. Results and Discussion

The performance of both models in predicting rockburst hazard was tested using the evaluation criteria described in Section 3. Based on these statistical criteria, both models achieved very high predictive accuracy (J48: 92.857%; random tree: 100%). The kappa coefficients showed that both models achieve almost perfect agreement, with the random tree model performing best (kappa = 1.0), followed by J48 (0.904). Comparing the results of the models shown in Table 7, the ACC and kappa coefficient of the random tree and CNN models are equivalent and higher than those of the other models.
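As a quick arithmetic check, the reported accuracies can be converted back into counts on the 28-case test set: 92.857% corresponds to 26 of 28 cases (26/28 ≈ 0.92857), i.e., two misclassified cases for J48, while 100% corresponds to all 28 cases for the random tree. The helper name below is hypothetical.

```python
def correct_count(acc_percent: float, n_test: int) -> int:
    """Number of correctly classified test cases implied by an accuracy in percent."""
    return round(acc_percent / 100.0 * n_test)


if __name__ == "__main__":
    n_test = 28  # test-set size stated in Section 3
    for name, acc in (("J48", 92.857), ("random tree", 100.0)):
        k = correct_count(acc, n_test)
        print(f"{name}: {k}/{n_test} correct, {n_test - k} misclassified")
```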

In terms of producer accuracy (PA), the random tree model also had the highest PA (100%) for all rockburst ranks, followed by J48. The PA and UA values show that some classes are classified better than others (see Table 8). As can be seen from Table 8, in the J48 model, “no rockburst” and “moderate rockburst” both have PA values of 100%, compared with “strong rockburst” (88.889%) and “no rockburst” (83.333%). Likewise, in the J48 model, “no rockburst” and “violent rockburst” both have UA values of 100%, compared with “moderate rockburst” (88.889%) and “strong rockburst” (87.5%). The results showed a statistically significant difference between the random tree and J48 models, and the performance of J48 was second only to that of the random tree model in predicting rockburst hazard.

To illustrate and verify the performance of the J48 and random tree models, their prediction capacity is compared with that of the Russenes criterion [47], the rock brittleness coefficient criterion [48], ANN [21], and CNN [21]. The confusion matrices and performance indices for the two traditional empirical criteria (the Russenes criterion [47] and the rock brittleness coefficient criterion [48]) and for the ANN [21] and CNN [21] models are presented in Table 8. The comparison reveals that the J48 and random tree models can be applied efficiently; the random tree model exhibits the best performance, on par with the CNN [21] model, followed by the J48 model. The random tree model predicts the “no rockburst”, “moderate rockburst”, “strong rockburst”, and “violent rockburst” cases with an overall accuracy of 100%.

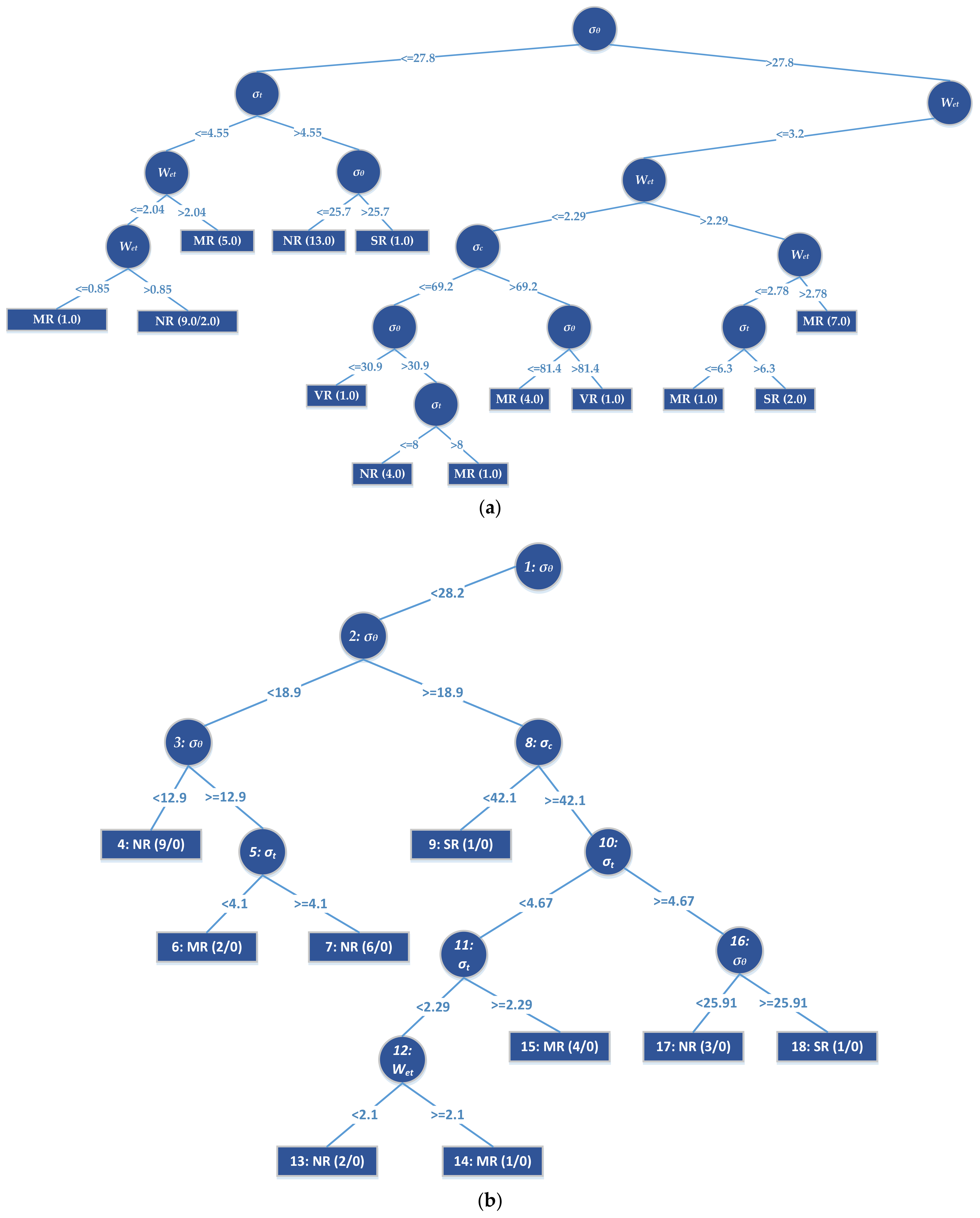

Whereas existing models such as SVM do not provide explicit equations for practitioners, the J48 and random tree models established here (see Figure 4) can be used by civil and mining engineering professionals, with the aid of a spreadsheet, to analyze potential rockburst events without going into the complexities of model development. Furthermore, the J48 and random tree approaches do not require data normalization or scaling, which is an advantage over most other approaches.
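Why no scaling is needed can be seen on the simplest possible tree. A split compares one feature with a threshold, so any strictly increasing rescaling of that feature moves the chosen threshold along with the data and leaves the predicted classes unchanged. The one-split "stump" below is a deliberately simplified illustration (it minimizes training errors, not J48's gain ratio, and is not WEKA's random tree); `fit_stump`, `predict_stump`, and the data are hypothetical.

```python
from typing import List, Sequence, Tuple

Stump = Tuple[float, int, int]  # (threshold, label if x <= threshold, label otherwise)


def fit_stump(x: Sequence[float], y: Sequence[int]) -> Stump:
    """Fit a one-split tree on one feature for 0/1 labels by minimizing training errors.

    Candidate thresholds are midpoints between consecutive distinct sorted values.
    """
    values = sorted(set(x))
    best = None  # (errors, threshold, left_label, right_label)
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        for left in (0, 1):
            right = 1 - left
            errors = sum((left if xi <= t else right) != yi for xi, yi in zip(x, y))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    if best is None:
        raise ValueError("need at least two distinct feature values")
    return best[1], best[2], best[3]


def predict_stump(stump: Stump, x: Sequence[float]) -> List[int]:
    """Apply the single split to each feature value."""
    t, left, right = stump
    return [left if xi <= t else right for xi in x]


if __name__ == "__main__":
    raw = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0]  # hypothetical feature values
    labels = [0, 0, 0, 1, 1, 1]                 # hypothetical binary classes
    lo, hi = min(raw), max(raw)
    scaled = [(v - lo) / (hi - lo) for v in raw]  # min-max normalization to [0, 1]
    p_raw = predict_stump(fit_stump(raw, labels), raw)
    p_scaled = predict_stump(fit_stump(scaled, labels), scaled)
    print(p_raw == p_scaled)  # True: the split moves with the data
```

Distance- or gradient-based learners such as SVM or ANN, by contrast, are sensitive to the relative scales of σθ, σc, σt, and Wet, which is why they usually need a normalization step.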

In general, both models performed well in the testing phase, with the random tree model showing the better overall performance (see Table 7 and Table 8); the random tree model is therefore preferred over the other models. However, predictive performance should be further examined on a larger dataset with almost no sampling bias (i.e., disparity in class ratio between population and sample) in the training and testing phases. Although the dataset used in this study (165 cases) is small, the J48 and random tree models can be updated to yield better results as new data become available. The results suggest that the proposed models show improved predictive performance compared with the majority of existing studies. From a practical viewpoint, this can help reduce the loss of property and human life and the number of injuries.

5. Conclusions and Limitations

In underground mining and civil engineering projects, models for predicting rockburst can be valuable tools. This study compared J48 and random tree models for predicting rockburst. Four variables (σθ, σc, σt, and Wet) were selected as influencing factors, and a dataset of 165 rockburst events compiled from recently published research was used to construct the decision tree models. Based on the results of the study, the following conclusions can be drawn:

The performance measures on the testing dataset for the J48 and random tree algorithms indicate that it is rational and feasible to choose the maximum tangential stress of the surrounding rock σθ (MPa), the uniaxial compressive strength σc (MPa), the uniaxial tensile strength σt (MPa), and the strain energy storage index Wet as indexes for predicting rockburst.

The classification accuracies of the J48 and random tree models in the test phase are 92.857% and 100%, respectively, indicating that the random tree model is an accurate and efficient approach for predicting rockburst classification ranks.

The kappa indices of the developed J48 and random tree models lie in the range 0.904–1.000, which means that the agreement between observed and predicted classes is almost perfect.

The comparison of the models’ performances reveals that the random tree model gives more reliable predictions, and its application is straightforward owing to its clear graphical output, which can be used by civil and mining engineering professionals.

Although the proposed method yields adequate prediction results, certain limitations should be addressed in future work.

(1) The sample size is limited and the dataset is imbalanced. The number and quality of the data have a significant impact on the prediction performance of the random tree and J48 algorithms; in general, the generalization ability and reliability of all data-driven models depend on the size of the dataset. Although the random tree and J48 algorithms perform well here, larger datasets could yield better prediction results. Furthermore, the dataset is imbalanced, with relatively few violent rockburst (17%) and no rockburst (19%) samples. Establishing a larger and more balanced rockburst database is therefore essential.

(2) Other variables may affect the prediction outcomes. Numerous factors influence the risk of rockburst, including rock properties, energy, excavation depth, and support structure. Although the four indicators used in this study can define the conditions required for rockburst hazard assessment to some degree, other indicators, such as the burial depth of the tunnel (H), failure duration time, and the energy-based burst potential index, may also affect rockburst hazard. It is therefore important to investigate the effects of these variables on the prediction outcomes.
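When the database is extended, class imbalance of the kind noted in limitation (1) can be audited with a few lines before retraining. The helper below is hypothetical and the labels in the example are made up, not the study's dataset; it reports each class's share and the ratio of the largest to the smallest class count.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable, Tuple


def class_balance(labels: Iterable[Hashable]) -> Tuple[Dict[Hashable, float], float]:
    """Return per-class proportions and the imbalance ratio (largest / smallest count)."""
    counts = Counter(labels)
    if not counts:
        raise ValueError("no labels given")
    total = sum(counts.values())
    proportions = {c: k / total for c, k in counts.items()}
    ratio = max(counts.values()) / min(counts.values())
    return proportions, ratio


if __name__ == "__main__":
    # Hypothetical labels, NOT the study's dataset.
    labels = ["none"] * 2 + ["moderate"] * 3 + ["strong"] * 4 + ["violent"] * 1
    props, ratio = class_balance(labels)
    print(props)  # {'none': 0.2, 'moderate': 0.3, 'strong': 0.4, 'violent': 0.1}
    print(ratio)  # 4.0
```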