AdvAndMal: Adversarial Training for Android Malware Detection and Family Classification

Abstract

1. Introduction

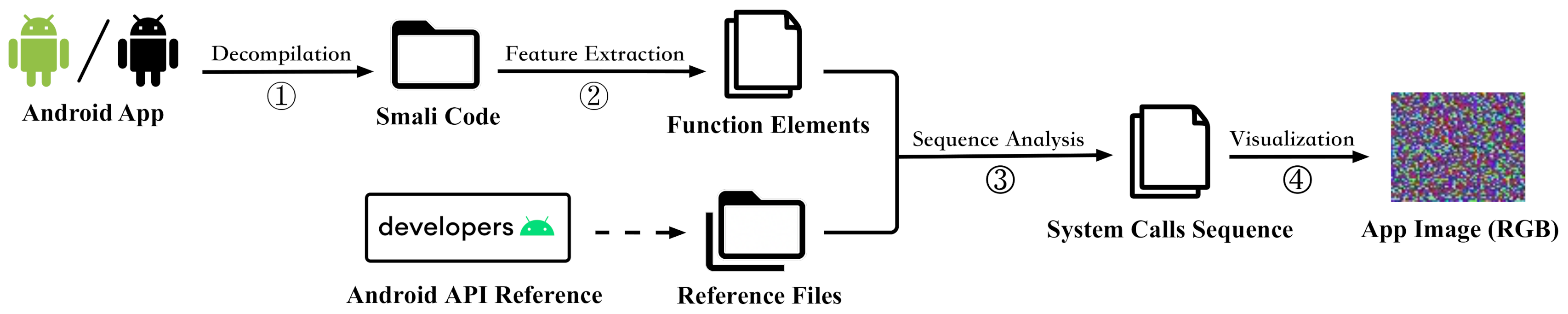

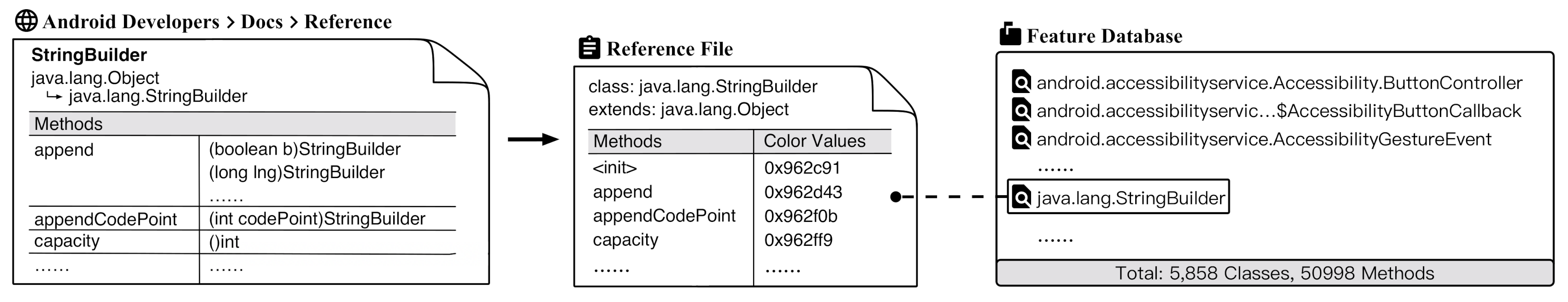

- A feature database for system APIs. The database is the basis for the subsequent feature-sequence analysis and visualization. It covers a total of 50,998 methods in 5858 classes of the Android API, from API level 1 to 30, and assigns a fixed color value to each system method.

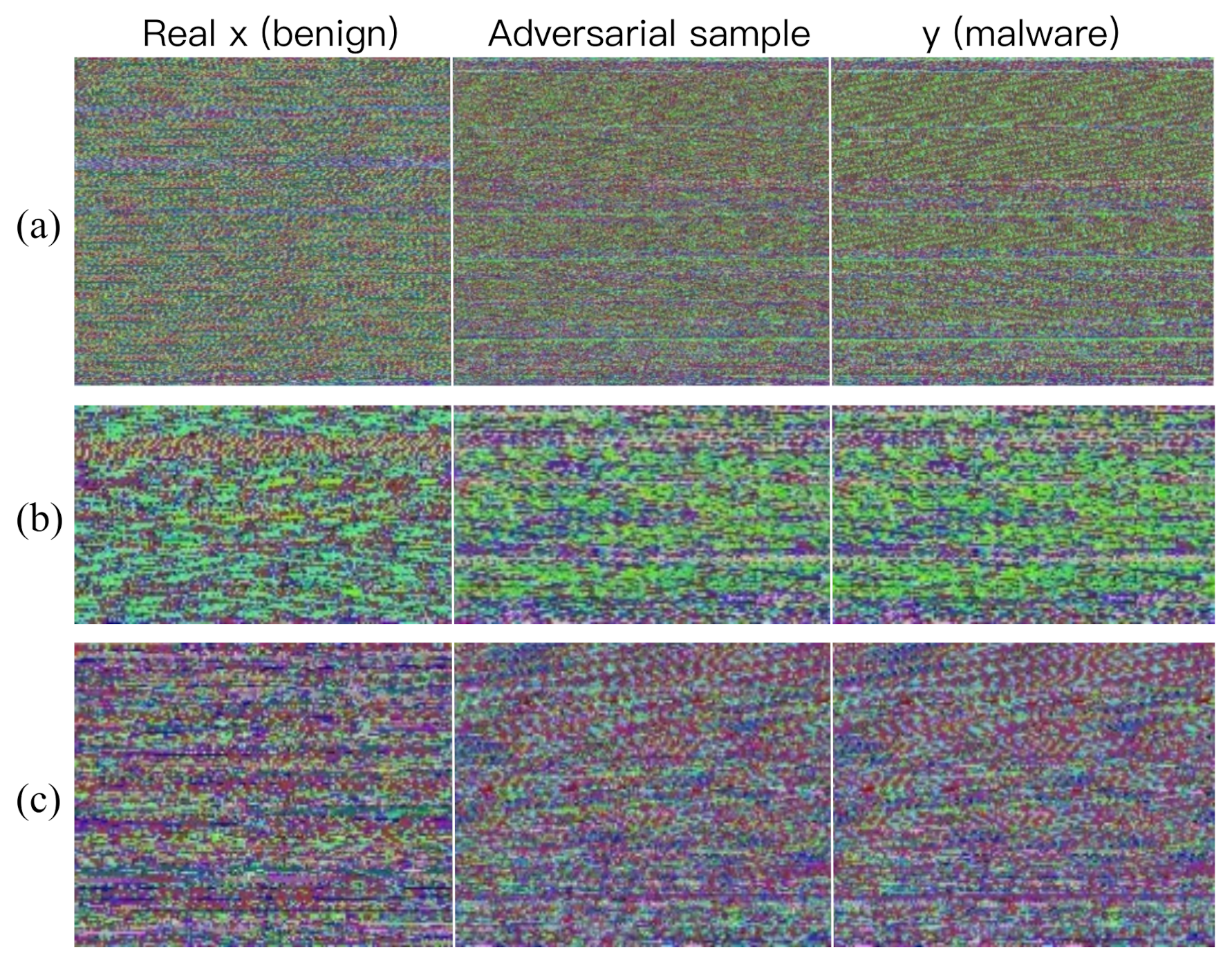

- A visualization method for API call sequences. We use depth-first search (DFS) to replace, one by one, every internal method call in the application with the corresponding sequence of system-level API calls. To generate adversarial samples more efficiently, we visualize this sequence by looking up the fixed color value of each API in the feature database, which converts the system call sequence into an RGB image.

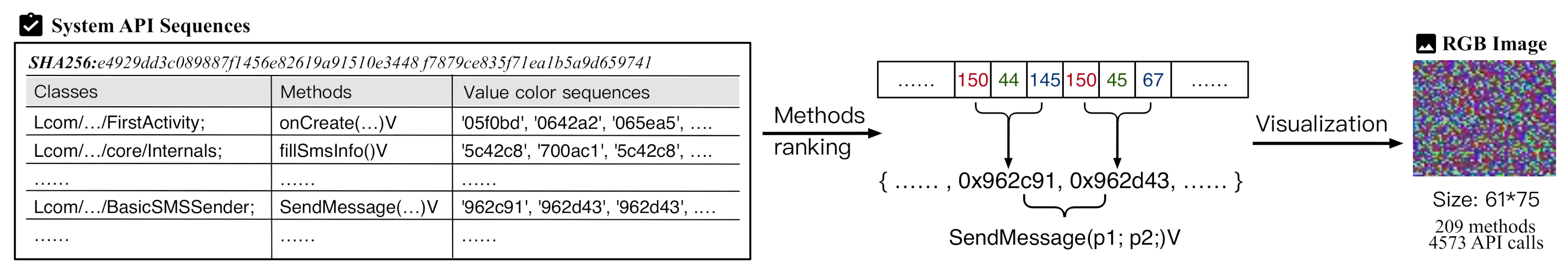

- A general framework named AdvAndMal for adversarial training. AdvAndMal expands and balances the classifier's training set and increases the distribution of malicious samples in the model's feature space. This adjusts the boundary between benign and malicious applications (or between malware families) in the feature space and raises the attack cost of real malware variants. Virtual malware variants are generated by pix2pix through adversarial training. We first train independent model parameters for malware detection and family classification on the real dataset as two control groups, and then train the experimental groups in the same way on the mixed dataset.

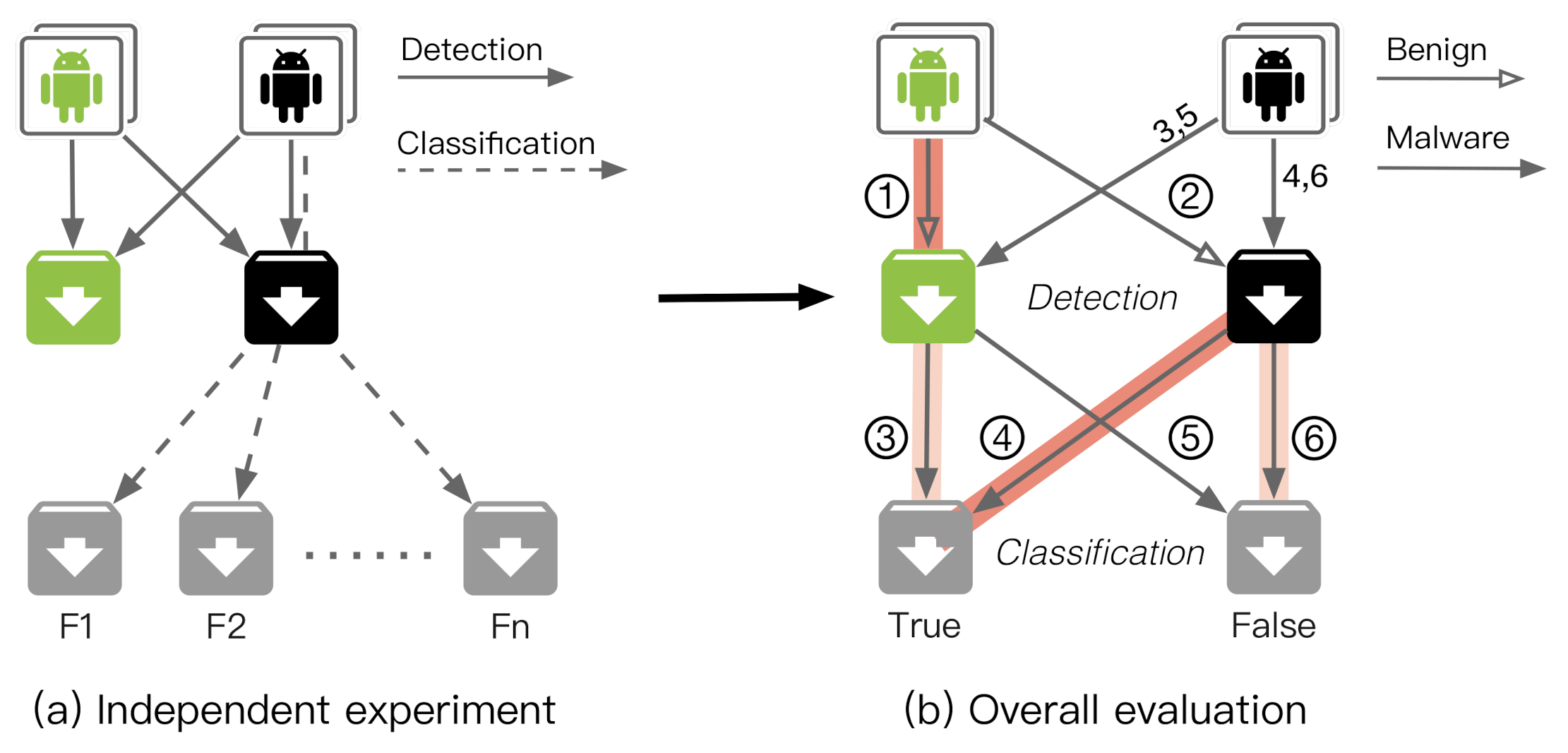

- A robustness evaluation method for the overall framework. We use the results of the independent experiments to construct the evaluation-path architecture of the overall framework, derive the expected robustness of the framework on the test set, and then take the average value as the indicator of the model's robustness.
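The first two contributions (a fixed color per system API method, and a DFS expansion of internal calls whose result is rendered as an RGB image) can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the call graph is a plain dictionary, colors are spaced evenly over the 24-bit RGB range, recursive calls are cut when a method is already on the DFS path, and the image is filled row by row and padded with black. The 32 × 32 default size is taken from the classifier input in the architecture table; `build_color_table`, `expand_to_system_calls` and `sequence_to_image` are hypothetical names, not the authors' code.

```python
"""Sketch: fixed API colors, DFS expansion to system calls, and an RGB image.

All names, the color scheme and the image layout are illustrative assumptions.
"""
from typing import Dict, Iterable, List, Set, Tuple

RGB = Tuple[int, int, int]
BLACK: RGB = (0, 0, 0)  # reserved for padding; never assigned to an API


def build_color_table(api_methods: Iterable[str]) -> Dict[str, RGB]:
    """Give every system API method a fixed, unique 24-bit color.

    Methods are de-duplicated and sorted so the table is reproducible, and
    colors are spaced evenly over the RGB range (assumed scheme).
    """
    methods = sorted(set(api_methods))
    step = (1 << 24) // (len(methods) + 1)
    if step == 0:
        raise ValueError("more API methods than distinct 24-bit colors")
    table: Dict[str, RGB] = {}
    for index, method in enumerate(methods, start=1):
        value = index * step  # lies in [step, 2**24), so never black
        table[method] = ((value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF)
    return table


def expand_to_system_calls(
    entry: str, call_graph: Dict[str, List[str]], system_apis: Set[str]
) -> List[str]:
    """Depth-first expansion: each internal callee is replaced, in call order,
    by the system API calls it makes (transitively). Methods already on the
    current DFS path are skipped so recursion cannot loop forever."""
    sequence: List[str] = []
    on_path: Set[str] = {entry}

    def visit(method: str) -> None:
        for callee in call_graph.get(method, []):
            if callee in system_apis:
                sequence.append(callee)
            elif callee in call_graph and callee not in on_path:
                on_path.add(callee)
                visit(callee)
                on_path.remove(callee)
            # anything else (unresolved or recursive call) is dropped

    visit(entry)
    return sequence


def sequence_to_image(
    sequence: List[str], colors: Dict[str, RGB], side: int = 32
) -> List[List[RGB]]:
    """Lay the API colors out row by row in a side x side image, truncating
    long sequences and padding short ones with black."""
    pixels = [colors.get(api, BLACK) for api in sequence[: side * side]]
    pixels += [BLACK] * (side * side - len(pixels))
    return [pixels[row * side : (row + 1) * side] for row in range(side)]


colors = build_color_table(
    ["android.util.Log.d", "android.telephony.SmsManager.sendTextMessage"]
)
graph = {
    "Main.onCreate": ["Util.send", "android.util.Log.d"],
    "Util.send": ["android.telephony.SmsManager.sendTextMessage", "Util.send"],
}
seq = expand_to_system_calls("Main.onCreate", graph, set(colors))
image = sequence_to_image(seq, colors)
```

With the full database of 50,998 methods the color spacing stays well above one step per method, so neighbouring APIs remain distinguishable. The recursive DFS is kept for readability; real applications with deep call chains would need an explicit stack to stay under Python's default recursion limit.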

2. Related Work

2.1. Android Malware Behaviour Detection

2.2. Adversarial Attacks

2.2.1. Adversarial Algorithms

2.2.2. Adversarial Malware Samples

2.3. Adversarial Training

3. Methodology

3.1. Feature Processing

3.1.1. Decompilation and Feature Extraction

3.1.2. Sequence Analysis and Visualization

3.2. Adversarial Training Framework

- STEP 1: With switches ①②③ off, train the classifier L on the original training set and record its validation results as the baseline;

- STEP 2: With switch ① off and switches ②③ on, train pix2pix;

- STEP 3: With switch ① on and switches ②③ off, generate and verify adversarial samples. Retrain L on the mixed training set, compare the new validation result with the baseline, and pass the loss back to the generator for retraining;

- STEP 4: Repeat Steps 2 and 3 until the result on the validation set is higher than the baseline and stabilizes, then evaluate on the test set.
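The control flow of Steps 2 to 4 can be sketched as a loop with a stopping rule. This is a minimal sketch under stated assumptions: `train_generator` and `augment_and_retrain` are hypothetical hooks standing in for pix2pix training and for sample generation plus retraining of L (including feeding the loss back), and "tends to be stable" is interpreted here as the last `window` validation scores all exceeding the baseline while differing by at most `tolerance`.

```python
from typing import Callable, List


def adversarial_training_loop(
    baseline: float,
    train_generator: Callable[[], None],
    augment_and_retrain: Callable[[], float],
    window: int = 3,
    tolerance: float = 0.005,
    max_rounds: int = 50,
) -> List[float]:
    """Alternate STEP 2 and STEP 3 until validation scores beat the baseline and settle.

    `train_generator` stands for STEP 2 (training pix2pix); `augment_and_retrain`
    stands for STEP 3 (generate samples, retrain L on the mixed set, feed the
    loss back) and returns L's validation score. Both hooks are hypothetical.
    """
    history: List[float] = []
    for _ in range(max_rounds):
        train_generator()                        # STEP 2
        history.append(augment_and_retrain())    # STEP 3
        recent = history[-window:]
        if (
            len(recent) == window
            and min(recent) > baseline
            and max(recent) - min(recent) <= tolerance
        ):
            break                                # STEP 4: above baseline and stable
    return history
```

The `max_rounds` cap is an added safeguard so the loop terminates even if the scores never settle; after it returns, the retrained L would be evaluated once on the test set.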

3.2.1. Adversarial Sample Generation Layer

3.2.2. Malware Classification Layer

4. Experimental Results

4.1. Datasets

4.2. Robustness Evaluation Method

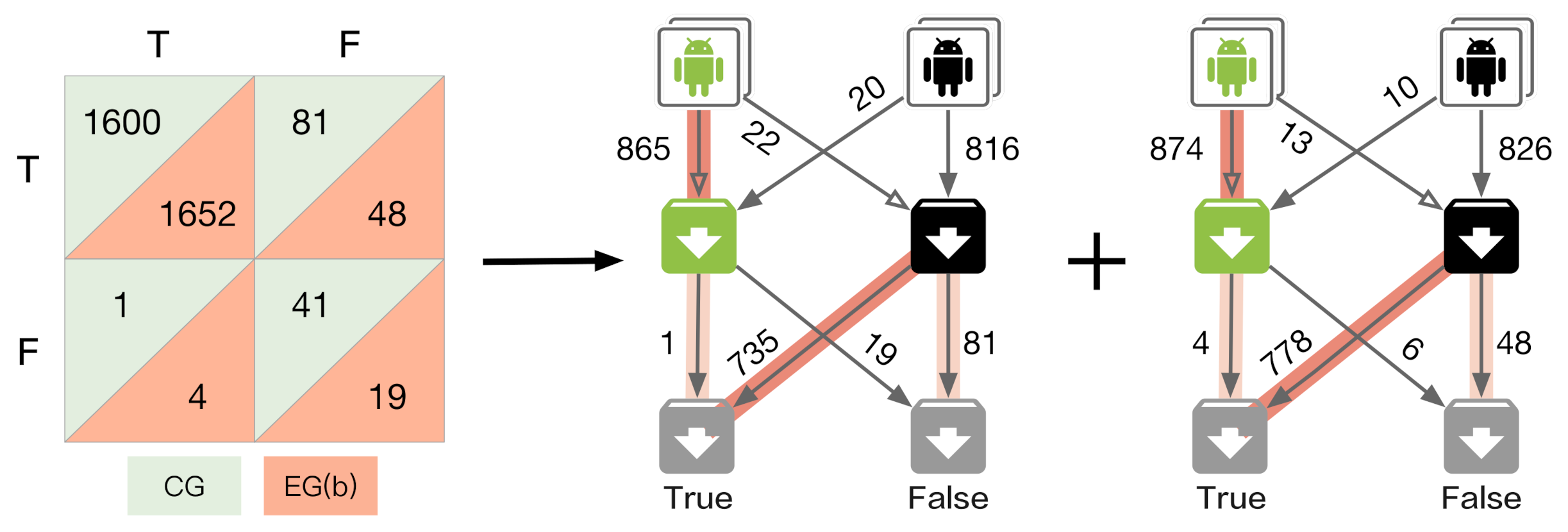

4.2.1. Independent Experiment Evaluation

4.2.2. Overall Framework Evaluation

4.3. Results and Analysis

4.3.1. Android Malware Feature Image

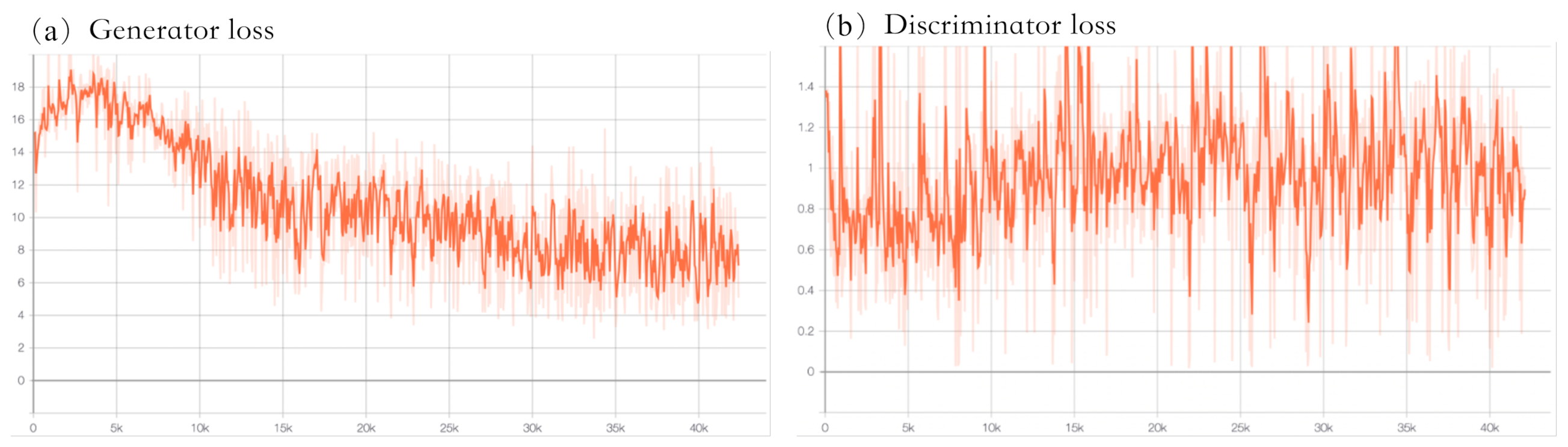

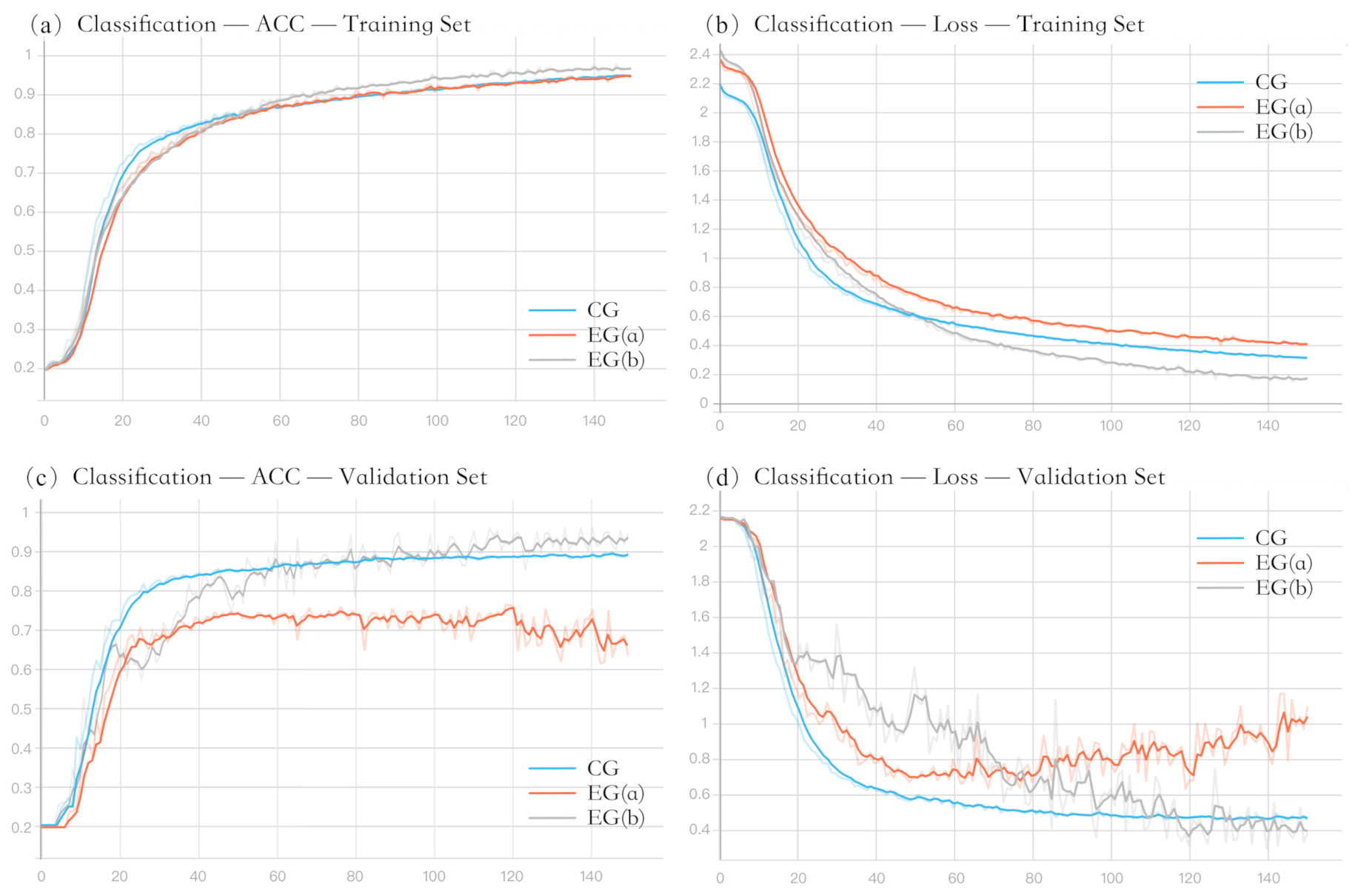

4.3.2. Adversarial Training

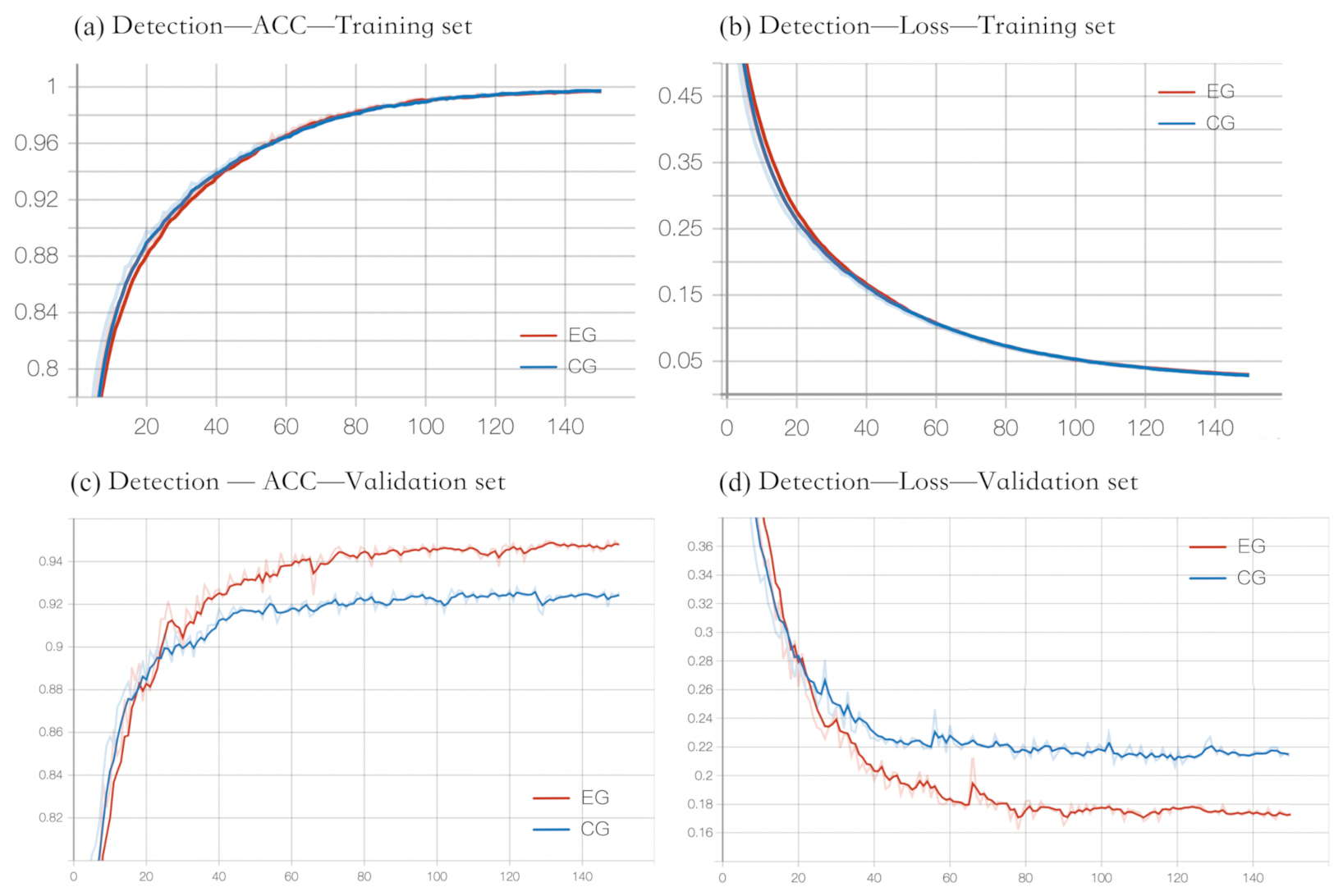

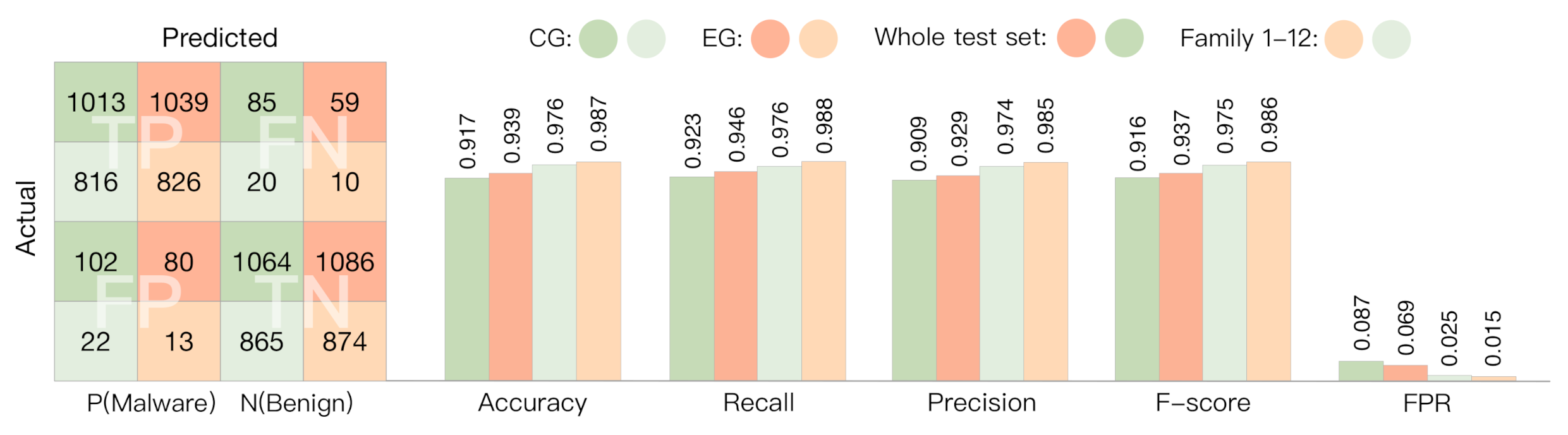

4.3.3. Malware Detection

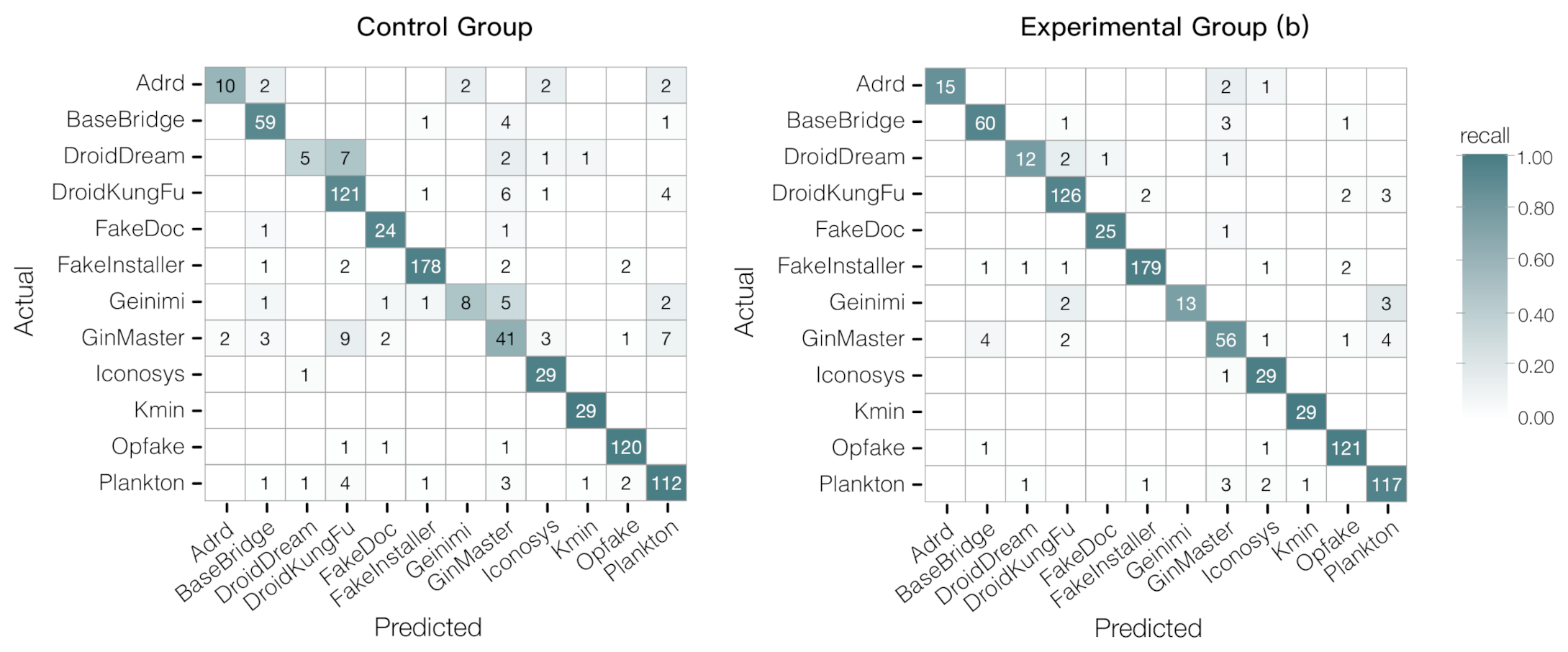

4.3.4. Family Classification

4.3.5. Robustness Evaluation

5. Conclusions

- The quality of the generated adversarial samples is unstable. It cannot be visually confirmed whether the adversarial samples generated by the CGAN retain malicious attributes, and the statistical significance of the differences between results was not measured. The generated datasets used to improve the model may contain useless or problematic samples, which will affect what the model learns. At this stage, adversarial samples that improve classification are screened only through batch-wise model training and validation. In future work, we will incorporate the behavioral characteristics of Android malware to generate adversarial samples more effectively.

- The classifier used in this article has few layers and network parameters, which makes it easy to train but limits its learning capacity. Moreover, CGAN training requires a dataset with a one-to-one correspondence between real samples and conditions; if the paired images differ too much, the generated samples become less effective. Therefore, our model needs further improvement before it can be applied to a wider range of scenarios.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Market Share of Mobile Operating Systems Worldwide 2012–2021. Available online: https://www.statista.com/statistics/272698/global-market-share-held-by-mobile-operating-systems-since-2009/ (accessed on 8 February 2021).

- 2020 Vulnerability and Threat Trends (Mid-Year Update). Available online: https://lp.skyboxsecurity.com/WICD-2020-07-WW-VT-Trends_Asset.html (accessed on 22 July 2020).

- Mobile Malware Evolution 2020. Available online: https://securelist.com/mobile-malware-evolution-2020/101029/ (accessed on 1 March 2021).

- Zhang, Y.; Dong, Y.; Liu, C.; Lei, K.; Sun, H. Situation, Trends and Prospects of Deep Learning Applied to Cyberspace Security. Comput. Res. Dev. 2018, 55, 1117–1142.

- Rosenberg, I.; Shabtai, A.; Rokach, L.; Elovici, Y. Generic Black-Box End-to-End Attack Against State of the Art API Call Based Malware Classifiers. Available online: https://arxiv.org/abs/1707.05970v4 (accessed on 15 February 2018).

- Hu, W.; Tan, Y. Generating Adversarial Malware Examples for Black-Box Attacks Based on GAN. Available online: https://arxiv.org/abs/1702.05983 (accessed on 20 May 2017).

- Kawai, M.; Ota, K.; Dong, M. Improved MalGAN: Avoiding Malware Detector by Leaning Cleanware Features. In Proceedings of the 2019 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Okinawa, Japan, 11–13 February 2019; pp. 40–45.

- Grosse, K.; Papernot, N.; Manoharan, P.; Backes, M.; McDaniel, P. Adversarial Examples for Malware Detection. In Proceedings of the 22nd European Symposium on Research in Computer Security, Oslo, Norway, 11–15 September 2017; pp. 62–79.

- Yuan, J.; Zhou, S.; Lin, L.; Wang, F.; Cui, J. Black-Box Adversarial Attacks Against Deep Learning Based Malware Binaries Detection with GAN. In Proceedings of the 24th European Conference on Artificial Intelligence, Santiago de Compostela, Spain, 29 August–8 September 2020; pp. 2536–2542.

- Yang, W.; Kong, D.; Xie, T.; Gunter, C.A. Malware Detection in Adversarial Settings: Exploiting Feature Evolutions and Confusions in Android Apps. In Proceedings of the 33rd Annual Computer Security Applications Conference, Orlando, FL, USA, 4–8 December 2017; pp. 288–302.

- Cara, F.; Scalas, M.; Giacinto, G.; Maiorca, D. On the Feasibility of Adversarial Sample Creation Using the Android System API. Information 2020, 11, 433.

- Papernot, N.; McDaniel, P.; Wu, X.; Jha, S.; Swami, A. Distillation as a defense to adversarial perturbations against deep neural networks. In Proceedings of the 2016 IEEE Symposium on Security and Privacy, San Jose, CA, USA, 22–26 May 2016; pp. 582–597.

- Wang, Q.; Guo, W.; Zhang, K.; Ororbia, A.G.; Xing, X.; Liu, X.; Giles, C.L. Adversary resistant deep neural networks with an application to malware detection. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1145–1153.

- Lyu, C.; Huang, K.; Liang, H.N. A unified gradient regularization family for adversarial examples. In Proceedings of the 2015 IEEE International Conference on Data Mining (ICDM), Atlantic City, NJ, USA, 14–17 November 2015; pp. 301–309.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing Properties of Neural Networks. Available online: https://arxiv.org/abs/1312.6199 (accessed on 21 December 2013).

- Kwon, H.; Lee, J. Diversity Adversarial Training against Adversarial Attack on Deep Neural Networks. Symmetry 2021, 13, 428.

- Hosseini, H.; Chen, Y.; Kannan, S.; Zhang, B.; Poovendran, R. Blocking Transferability of Adversarial Examples in Black-Box Learning Systems. Available online: https://arxiv.org/abs/1703.04318 (accessed on 13 March 2017).

- Li, D.; Li, Q. Adversarial Deep Ensemble: Evasion Attacks and Defenses for Malware Detection. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3886–3900.

- Onwuzurike, L.; Mariconti, E.; Andriotis, P.; Cristofaro, E.D.; Ross, G.; Stringhini, G. MaMaDroid: Detecting Android Malware by Building Markov Chains of Behavioral Models (Extended Version). ACM Trans. Priv. Secur. 2019, 22, 1–33.

- Sun, Y.S.; Chen, C.C.; Hsiao, S.W.; Chen, M.C. ANTSdroid: Automatic Malware Family Behaviour Generation and Analysis for Android Apps. In Proceedings of the 23rd Australasian Conference on Information Security and Privacy (ACISP), Wollongong, NSW, Australia, 11–13 July 2018; pp. 796–804.

- Mirzaei, O.; Suarez-Tangil, G.; de Fuentes, J.M.; Tapiador, J.; Stringhini, G. AndrEnsemble: Leveraging API Ensembles to Characterize Android Malware Families. In Proceedings of the AsiaCCS’19, Auckland, New Zealand, 9–12 July 2019; pp. 304–314.

- Tao, G.; Zheng, Z.; Guo, Z.; Lyu, M.R. MalPat: Mining Patterns of Malicious and Benign Android Apps via Permission-Related APIs. IEEE Trans. Reliab. 2018, 67, 355–369.

- Zhang, Y.; Yang, Y.; Wang, X. A Novel Android Malware Detection Approach Based on Convolutional Neural Network. In Proceedings of the 2nd International Conference on Cryptography, Security and Privacy, Guiyang, China, 16–18 March 2018; pp. 144–149.

- Hojjatinia, S.; Hamzenejadi, S.; Mohseni, H. Android Botnet Detection using Convolutional Neural Networks. In Proceedings of the 28th Iranian Conference on Electrical Engineering (ICEE2020), Tabriz, Iran, 4–6 August 2020; pp. 674–679.

- Jung, J.; Choi, J.; Cho, S.J.; Han, S.; Park, M.; Hwang, Y. Android malware detection using convolutional neural networks and data section images. In Proceedings of the RACS ’18, Honolulu, HI, USA, 9–12 October 2018; pp. 149–153.

- Jiang, J.; Li, S.; Yu, M.; Li, G.; Liu, C.; Chen, K.; Liu, H.; Huang, W. Android Malware Family Classification Based on Sensitive Opcode Sequence. In Proceedings of the 2019 IEEE Symposium on Computers and Communications, Barcelona, Spain, 29 June–3 July 2019; pp. 1–7.

- Ikram, M.; Beaume, P.; Kaafar, M.A. DaDiDroid: An Obfuscation Resilient Tool for Detecting Android Malware via Weighted Directed Call Graph Modelling. In Proceedings of the 16th International Joint Conference on e-Business and Telecommunications (SECRYPT), Prague, Czech Republic, 26–28 July 2019; pp. 211–219.

- Zhao, B. Mapping System Level Behaviors with Android APIs via System Call Dependence Graphs. Available online: https://arxiv.org/pdf/1906.10238v1.pdf (accessed on 24 June 2019).

- Xu, Z.; Ren, K.; Qin, S.; Craciun, F. CDGDroid: Android Malware Detection Based on Deep Learning Using CFG and DFG. In Proceedings of the 20th International Conference on Formal Engineering Methods, Gold Coast, QLD, Australia, 12–16 November 2018; pp. 177–193.

- Xu, Z.; Ren, K.; Song, F. Android Malware Family Classification and Characterization Using CFG and DFG. In Proceedings of the 2019 International Symposium on Theoretical Aspects of Software Engineering (TASE), Guilin, China, 29–31 July 2019; pp. 49–56.

- Türker, S.; Can, A.B. AndMFC: Android Malware Family Classification Framework. In Proceedings of the 2019 IEEE 30th International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC Workshops), Istanbul, Turkey, 8 September 2019; pp. 1–6.

- Calleja, A.; Martín, A.; Menéndez, H.D.; Tapiador, J.; Clark, D. Picking on the family: Disrupting android malware triage by forcing misclassification. Expert Syst. Appl. 2018, 95, 113–126.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. Available online: https://arxiv.org/pdf/1412.6572 (accessed on 12 December 2014).

- Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The Limitations of Deep Learning in Adversarial Settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy, Saarbrücken, Germany, 21–24 March 2016; pp. 372–387.

- Biggio, B.; Rieck, K.; Ariu, D.; Wressnegger, C.; Corona, I.; Giacinto, G.; Roli, F. Poisoning Behavioral Malware Clustering. In Proceedings of the 2014 ACM Workshop on Artificial Intelligence and Security, Scottsdale, AZ, USA, 7 November 2014; pp. 27–36.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5967–5976.

- Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 105–114.

- Wang, C.; Xu, C.; Wang, C.; Tao, D. Perceptual Adversarial Networks for Image-to-Image Transformation. IEEE Trans. Image Process. 2018, 27, 4066–4079.

- Vondrick, C.; Pirsiavash, H.; Torralba, A. Generating Videos with Scene Dynamics. In Proceedings of the 2016 Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016; pp. 1–11.

- Tulyakov, S.; Liu, M.Y.; Yang, X.; Kautz, J. MoCoGAN: Decomposing Motion and Content for Video Generation. Available online: https://arxiv.org/abs/1707.04993 (accessed on 14 December 2017).

- Xie, X.; Chen, J.; Li, Y.; Shen, L.; Ma, K.; Zheng, Y. MI2GAN: Generative Adversarial Network for Medical Image Domain Adaptation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention (MICCAI 2020), Lima, Peru, 4–8 October 2020; pp. 1–10.

- Chang, Q.; Qu, H.; Zhang, Y.; Sabuncu, M.; Chen, C.; Zhang, T.; Metaxas, D.N. Synthetic Learning: Learn From Distributed Asynchronized Discriminator GAN Without Sharing Medical Image Data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 13856–13866.

- Kwon, H.; Yoon, H.; Park, K.W. POSTER: Detecting Audio Adversarial Example through Audio Modification. In Proceedings of the 26th ACM Conference on Computer and Communications Security (CCS’19), London, UK, 11–15 November 2019; pp. 2521–2523.

- Kwon, H.; Yoon, H.; Park, K.W. Acoustic-Decoy: Detection of Adversarial Examples through Audio Modification on Speech Recognition System. Neurocomputing 2020, 417, 357–370.

- Dey, S.; Kumar, A.; Sawarkar, M.; Singh, P.K.; Nandi, S. EvadePDF: Towards Evading Machine Learning Based PDF Malware Classifiers. In Proceedings of the 2019 International Conference on Security and Privacy (ISEA-ISAP 2019), Jaipur, India, 9–11 January 2019; pp. 140–150.

- Rosenberg, I.; Shabtai, A.; Elovici, Y.; Rokach, L. Defense Methods Against Adversarial Examples for Recurrent Neural Networks. Available online: https://arxiv.org/pdf/1901.09963.pdf (accessed on 20 November 2019).

- Singh, A.; Dutta, D.; Saha, A. MIGAN: Malware Image Synthesis Using GANs. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence, Hilton Hawaiian Village, Honolulu, HI, USA, 27 January–1 February 2019; pp. 10033–10034.

- Chen, L.; Hou, S.; Ye, Y.; Xu, S. DroidEye: Fortifying Security of Learning-Based Classifier Against Adversarial Android Malware Attacks. In Proceedings of the 2018 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), Barcelona, Spain, 28–31 August 2018; pp. 782–789.

- Chen, Y.M.; Yang, C.H.; Chen, G.C. Using Generative Adversarial Networks for Data Augmentation in Android Malware Detection. In Proceedings of the 2021 IEEE Conference on Dependable and Secure Computing (DSC), Aizuwakamatsu, Fukushima, Japan, 30 January–2 February 2021; pp. 1–8.

- Mirza, M.; Osindero, S. Conditional Generative Adversarial Nets. Available online: https://arxiv.org/abs/1411.1784 (accessed on 6 November 2014).

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Allix, K.; Bissyandé, T.F.; Klein, J.; Le Traon, Y. AndroZoo: Collecting Millions of Android Apps for the Research Community. In Proceedings of the 13th International Conference on Mining Software Repositories (MSR), Austin, TX, USA, 14–15 May 2016; pp. 468–471.

- Arp, D.; Spreitzenbarth, M.; Hübner, M.; Gascon, H.; Rieck, K. DREBIN: Effective and Explainable Detection of Android Malware in Your Pocket. In Proceedings of the 2014 Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 23–26 February 2014; pp. 1–12.

| Layer | Feature Map | Size | Kernel Size | Stride | Activation | Params |
|---|---|---|---|---|---|---|
| Input (Image) | 32 × 32 | 1 | - | - | - | - |
| C1/Convolution | 28 × 28 | 8 | 5 × 5 | 1 | tanh | 608 |
| S2/Pooling | 14 × 14 | 8 | 2 × 2 | 2 | - | 0 |
| C3/Convolution | 10 × 10 | 16 | 5 × 5 | 1 | tanh | 3216 |
| S4/Pooling | 5 × 5 | 16 | 2 × 2 | 2 | - | 0 |
| C5/Convolution | 1 × 1 | 120 | 5 × 5 | 1 | tanh | 48,120 |
| F6/Full connection | 84 | - | - | - | relu | 10,164 |
| Output_Detection | 2 | - | - | - | softmax | 170 |
| Output_Classification | 12 | - | - | - | softmax | 765 |

Training settings: EPOCHS = 150, Batch_Size = 32, INIT_LR = 0.001, decay = INIT_LR/EPOCHS.
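The shape and parameter arithmetic behind the architecture table can be checked with a short sketch. It assumes unpadded ("valid") convolution and pooling windows and a 3-channel (RGB) input, which is what the listed 608 parameters for C1 imply; a single-channel input would give 208. The helper names are illustrative only.

```python
def conv_output_size(size: int, kernel: int, stride: int = 1) -> int:
    """Spatial size after an unpadded ('valid') convolution or pooling window."""
    return (size - kernel) // stride + 1


def conv_params(kernel: int, in_maps: int, out_maps: int) -> int:
    """Weights of out_maps filters of shape kernel x kernel x in_maps, plus one bias each."""
    return kernel * kernel * in_maps * out_maps + out_maps


def dense_params(inputs: int, outputs: int) -> int:
    """Fully connected weight matrix plus one bias per output unit."""
    return inputs * outputs + outputs


IN_CHANNELS = 3  # assumption: RGB input image

size = 32
for kernel, stride in [(5, 1), (2, 2), (5, 1), (2, 2), (5, 1)]:  # C1, S2, C3, S4, C5
    size = conv_output_size(size, kernel, stride)
    print(size)  # prints 28, 14, 10, 5, 1 in turn

params = {
    "C1": conv_params(5, IN_CHANNELS, 8),
    "C3": conv_params(5, 8, 16),
    "C5": conv_params(5, 16, 120),
    "F6": dense_params(120, 84),
    "Output_Detection": dense_params(84, 2),
}
print(params)  # {'C1': 608, 'C3': 3216, 'C5': 48120, 'F6': 10164, 'Output_Detection': 170}
```

Pooling layers contribute no trainable parameters here, matching the zeros in the table.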

| Field | Explanation |
|---|---|
| sha256 | SHA-256 hash of the APK |
| sha1 | SHA-1 hash of the APK |
| md5 | MD5 hash of the APK |
| dex_date | The date attached to the dex file inside the zip |
| apk_size | The size of the APK |
| pkg_name | The name of the Android package |
| vercode | The version code |
| vt_detection | The number of VirusTotal antivirus engines that detected the APK as malware |
| vt_scan_date | The date of the VirusTotal scan report |
| dex_size | The size of the classes.dex file |
| markets | Source market(s) (app store) |
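Because `vt_detection` is the field that separates clean from flagged apps, a common use of this metadata is to label APKs by their detection count. The following is a minimal sketch, assuming a CSV with the columns listed above; the threshold of 4 engines and the rule of leaving 1 to 3 detections unlabeled are hypothetical choices, not a selection rule stated in this article.

```python
import csv
from typing import Dict, Iterable


def label_by_vt_detection(lines: Iterable[str], malware_threshold: int = 4) -> Dict[str, str]:
    """Label APKs from metadata rows by their VirusTotal detection count.

    0 detections -> "benign"; at least `malware_threshold` -> "malware";
    anything in between, or a missing count, is left unlabeled.
    The threshold is a hypothetical choice.
    """
    labels: Dict[str, str] = {}
    for row in csv.DictReader(lines):
        raw = (row.get("vt_detection") or "").strip()
        if not raw:
            continue  # no VirusTotal count available
        hits = int(raw)
        if hits == 0:
            labels[row["sha256"]] = "benign"
        elif hits >= malware_threshold:
            labels[row["sha256"]] = "malware"
        # 1 .. threshold-1 detections: ambiguous, skipped
    return labels
```

`csv.DictReader` accepts any iterable of lines, so an open file works directly, e.g. `with open("androzoo_metadata.csv", newline="") as f: labels = label_by_vt_detection(f)`, where the file name is a placeholder.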

| No. | Family | Number | No. | Family | Number |
|---|---|---|---|---|---|
| 1 | FakeInstaller | 925 | 8 | Kmin | 147 |
| 2 | DroidKungFu | 665 | 9 | FakeDoc | 131 |
| 3 | Plankton | 625 | 10 | Adrd | 91 |
| 4 | Opfake | 613 | 11 | Geinimi | 90 |
| 5 | GinMaster | 339 | 12 | DroidDream | 81 |
| 6 | BaseBridge | 327 | - | Others | 1360 |
| 7 | Iconosys | 152 | Total | - | 5546 |
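The family sizes above are strongly imbalanced, which is what the augmentation in AdvAndMal is meant to address. A minimal sketch of how many generated samples each family would need to reach a common size is shown below; the counts come from the table, while the target of 300 samples is a hypothetical value chosen only for illustration (the article targets families that are small and perform poorly, and this sketch covers only the size criterion).

```python
from typing import Dict

FAMILY_COUNTS: Dict[str, int] = {
    "FakeInstaller": 925, "DroidKungFu": 665, "Plankton": 625, "Opfake": 613,
    "GinMaster": 339, "BaseBridge": 327, "Iconosys": 152, "Kmin": 147,
    "FakeDoc": 131, "Adrd": 91, "Geinimi": 90, "DroidDream": 81,
}
OTHERS = 1360  # samples outside the 12 listed families

# sanity check against the table's total
assert sum(FAMILY_COUNTS.values()) + OTHERS == 5546


def augmentation_plan(counts: Dict[str, int], target: int) -> Dict[str, int]:
    """Number of generated samples each family would need to reach `target`."""
    return {family: target - n for family, n in counts.items() if n < target}


plan = augmentation_plan(FAMILY_COUNTS, target=300)
print(plan)
# {'Iconosys': 148, 'Kmin': 153, 'FakeDoc': 169, 'Adrd': 209, 'Geinimi': 210, 'DroidDream': 219}
```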

Detection: n = 2; Classification: n = 12.

| Actual / Predicted | Class 1 | Class 2 | … | Class n |
|---|---|---|---|---|
| Class 1 | … | | | |
| Class 2 | … | | | |
| … | … | … | … | |
| Class n | … | | | |

| Domain | Indicator | Formula |
|---|---|---|
| Detection & Classification | | (5) |
| Detection & Classification | | (6) |
| Detection & Classification | | (7) |
| Detection & Classification | | (8) |
| Classification | Aver-Precision | (9) |
| Classification | Aver-Recall | (10) |
| Classification | Aver-Fscore | (11) |
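Since the formulas themselves are not shown in the indicator table, the following sketch uses the standard definitions of accuracy, per-class precision, recall and F-score computed from the confusion-matrix layout above (rows actual, columns predicted). Treating Aver-Precision, Aver-Recall and Aver-Fscore as unweighted (macro) means over the classes is an assumption; the function names are illustrative.

```python
from typing import Dict, List, Sequence


def confusion_matrix(actual: Sequence[int], predicted: Sequence[int], n_classes: int) -> List[List[int]]:
    """Rows are actual classes, columns are predicted classes."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for a, p in zip(actual, predicted):
        matrix[a][p] += 1
    return matrix


def evaluate(matrix: List[List[int]]) -> Dict[str, object]:
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    precision: List[float] = []
    recall: List[float] = []
    fscore: List[float] = []
    for i in range(n):
        tp = matrix[i][i]
        predicted_i = sum(matrix[r][i] for r in range(n))  # column sum
        actual_i = sum(matrix[i])                          # row sum
        p = tp / predicted_i if predicted_i else 0.0
        r = tp / actual_i if actual_i else 0.0
        precision.append(p)
        recall.append(r)
        fscore.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": sum(matrix[i][i] for i in range(n)) / total,
        "precision": precision,
        "recall": recall,
        "fscore": fscore,
        # unweighted (macro) means over classes, assumed for the Aver-* indicators
        "aver_precision": sum(precision) / n,
        "aver_recall": sum(recall) / n,
        "aver_fscore": sum(fscore) / n,
    }
```

For detection (n = 2) the same code applies, with the malware class read off as the positive class.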

| Category | Explanation | Path |
|---|---|---|
| TT | True Detection, True Classification | ①, ④ |
| FT | False Detection, True Classification | ③ |
| TF | True Detection, False Classification | ⑥ |
| FF | False Detection, False Classification | ②, ⑤ |
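In the category labels, the first letter gives the detection outcome and the second the family-classification outcome. A minimal sketch of tallying test samples into these four categories is given below, assuming each sample has an independent detection result and classification result; how the categories are mapped onto paths ①–⑥ and combined into the framework's robustness value is not reproduced here, and `joint_outcomes` is a hypothetical name.

```python
from collections import Counter
from typing import Sequence


def joint_outcomes(
    det_true: Sequence[int],
    det_pred: Sequence[int],
    fam_true: Sequence[str],
    fam_pred: Sequence[str],
) -> Counter:
    """Count samples as TT / FT / TF / FF: detection correct? then classification correct?"""
    counts: Counter = Counter()
    for dt, dp, ft, fp in zip(det_true, det_pred, fam_true, fam_pred):
        detection = "T" if dt == dp else "F"
        classification = "T" if ft == fp else "F"
        counts[detection + classification] += 1
    return counts


# four malicious samples (label 1) covering every category once
print(joint_outcomes([1, 1, 1, 1], [1, 0, 1, 0],
                     ["Adrd", "Adrd", "Kmin", "Kmin"],
                     ["Adrd", "Adrd", "Opfake", "Opfake"]))
```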

| Family | Precision (CG) | Precision (EG(b)) | Recall (CG) | Recall (EG(b)) | F-Score (CG) | F-Score (EG(b)) |
|---|---|---|---|---|---|---|
| Adrd | 0.833 | 1 | 0.556 | 0.833 | 0.667 | 0.909 |
| BaseBridge | 0.868 | 0.909 | 0.908 | 0.923 | 0.868 | 0.909 |
| DroidDream | 0.714 | 0.857 | 0.313 | 0.75 | 0.435 | 0.8 |
| DroidKungFu | 0.84 | 0.94 | 0.91 | 0.947 | 0.874 | 0.944 |
| FakeDoc | 0.857 | 0.962 | 0.923 | 0.962 | 0.889 | 0.962 |
| FakeInstaller | 0.978 | 0.984 | 0.962 | 0.968 | 0.97 | 0.975 |
| Geinimi | 0.8 | 1 | 0.444 | 0.722 | 0.571 | 0.839 |
| GinMaster | 0.63 | 0.836 | 0.603 | 0.824 | 0.617 | 0.83 |
| Iconosys | 0.806 | 0.829 | 0.967 | 0.967 | 0.879 | 0.892 |
| Kmin | 0.967 | 0.983 | 1 | 1 | 0.935 | 0.967 |
| Opfake | 0.96 | 0.953 | 0.976 | 0.984 | 0.968 | 0.968 |
| Plankton | 0.875 | 0.921 | 0.896 | 0.936 | 0.885 | 0.929 |
| Average | 0.841 | 0.929 | 0.788 | 0.901 | 0.801 | 0.912 |

Accuracy: CG 0.88; EG(b) 0.935.

| Experiment | Chen et al. | This Paper |
|---|---|---|
| Training Model | CNN, GAN | CNN, CGAN |
| Dataset | 10 families, Drebin | 12 families, Drebin |
| Data Augmentation | Adrd, DroidDream, Geinimi | Families with small sizes and poor results |
| Evaluation Indicators | F-score, p-values | Accuracy, Recall, Precision, F-score |
| Results (F-score) | Adrd: 60.1; DroidDream: 73.1; Geinimi: 50.2 | Adrd: 66.7; DroidDream: 43.5; Geinimi: 57.1 |

| Experiment | Accuracy (Detection) | Accuracy (Classification) | Accuracy (Framework) | Robustness Value | R-C |
|---|---|---|---|---|---|
| CG | 0.976 | 0.88 | 0.976 | 1477 | 0.857 |
| EG(b) | 0.987 | 0.935 | 0.989 | 1580.835 | 0.917 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, C.; Zhang, L.; Zhao, K.; Ding, X.; Wang, X. AdvAndMal: Adversarial Training for Android Malware Detection and Family Classification. Symmetry 2021, 13, 1081. https://doi.org/10.3390/sym13061081

Wang C, Zhang L, Zhao K, Ding X, Wang X. AdvAndMal: Adversarial Training for Android Malware Detection and Family Classification. Symmetry. 2021; 13(6):1081. https://doi.org/10.3390/sym13061081

Chicago/Turabian Style: Wang, Chenyue, Linlin Zhang, Kai Zhao, Xuhui Ding, and Xusheng Wang. 2021. "AdvAndMal: Adversarial Training for Android Malware Detection and Family Classification" Symmetry 13, no. 6: 1081. https://doi.org/10.3390/sym13061081

APA Style: Wang, C., Zhang, L., Zhao, K., Ding, X., & Wang, X. (2021). AdvAndMal: Adversarial Training for Android Malware Detection and Family Classification. Symmetry, 13(6), 1081. https://doi.org/10.3390/sym13061081