Bateson and Wright on Number and Quantity: How to Not Separate Thinking from Its Relational Context

Abstract

1. Multilevel Meanings and Logical Types

2. The Schizophrenia of Symmetry Violations Ignoring Logical Types

In the absence of a central authority or standardized protocol, how is robustness of findings (and decision making) achieved? The answer from the scientific community is complex and twofold: they create objects that are both plastic and coherent through a collective course of action.

3. Separating and Balancing Levels of Reference

4. Numeric Counts vs. Quantitative Measures

4.1. Bateson on Convergent Stochastic Sequences

The computational mechanics approach [to stochastic processes] recognises that, where a noisy pattern in a string of characters is observed as a time or spatial sequence, there is the possibility that future members of the sequence can be predicted. The approach shows that one does not need to know the whole past to optimally predict the future of an observed stochastic sequence, but only what causal state the sequence comes from. All members of the set of pasts that generate the same stochastic future belong to the same causal state.
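The idea of a causal state can be made concrete with a small sketch. It uses a finite history length and a finite future block as stand-ins for the full past and future. The hypothetical function below groups observed histories whose empirical distributions over the next few symbols coincide, and each group is one estimated causal state. Merging on a fixed tolerance is a simplification chosen for illustration. Practical reconstruction algorithms such as CSSR use statistical tests instead.

```python
from collections import Counter, defaultdict


def estimate_causal_states(seq, hist_len, future_len=1, tol=1e-9):
    """Group length-`hist_len` histories whose empirical distributions over
    the next `future_len` symbols coincide (within `tol`).

    Finite histories and futures are an illustrative stand-in for the
    semi-infinite pasts and futures used in computational mechanics.
    """
    # Count which future block follows each observed history block.
    counts = defaultdict(Counter)
    for i in range(hist_len, len(seq) - future_len + 1):
        past = seq[i - hist_len:i]
        future = seq[i:i + future_len]
        counts[past][future] += 1

    # Convert counts into conditional distributions P(future | past).
    dists = {}
    for past, c in counts.items():
        total = sum(c.values())
        dists[past] = {f: n / total for f, n in c.items()}

    # Merge pasts with matching predictive distributions: each group of
    # pasts that "generate the same stochastic future" is one causal state.
    states = []  # list of (representative distribution, member pasts)
    for past in sorted(dists):
        d = dists[past]
        for rep, members in states:
            keys = set(rep) | set(d)
            if all(abs(rep.get(k, 0.0) - d.get(k, 0.0)) <= tol for k in keys):
                members.append(past)
                break
        else:
            states.append((d, [past]))
    return [members for _, members in states]
```

For the period-4 sequence `0011` repeated, histories of length 2 with a two-symbol future yield four states. Lengthening the history to 3 still yields four, so beyond that point more past adds no predictive information. With a one-symbol future, the histories `00` and `01` merge, which shows how a horizon that is too short can lump together pasts that a longer horizon would separate.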

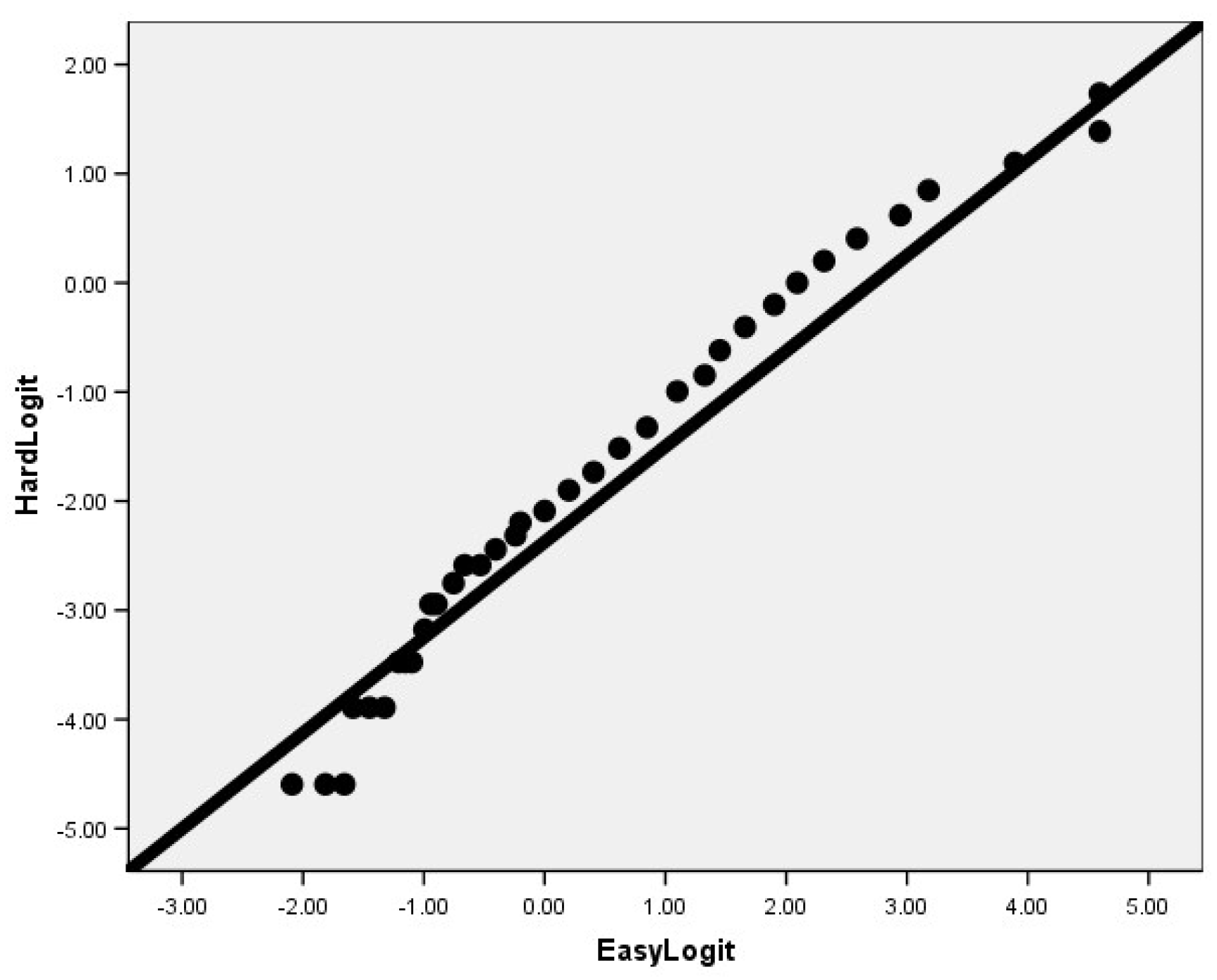

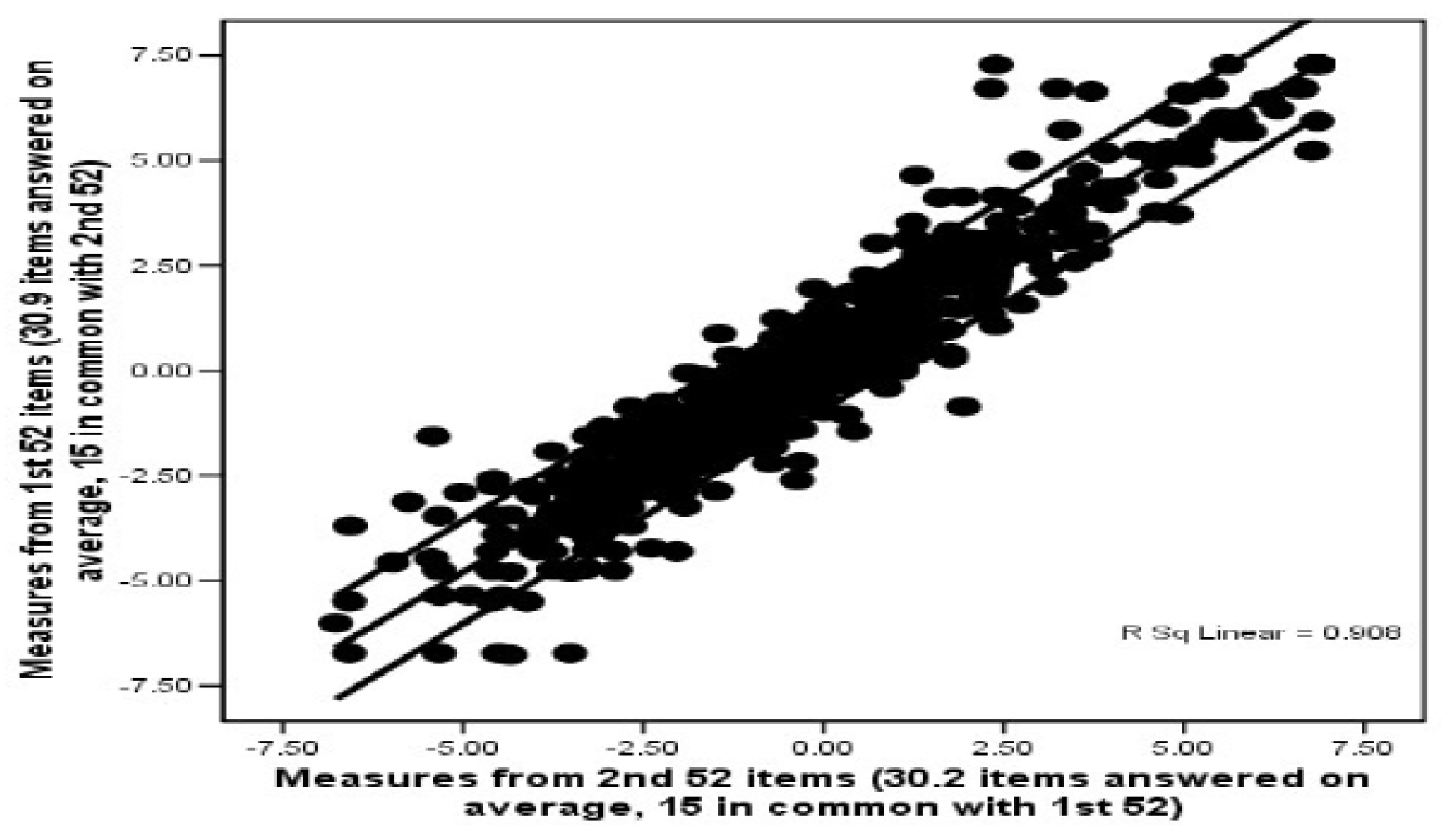

4.2. Wright’s Expansions on Rasch’s Stochastic Models for Measurement

4.3. The Difference That Makes a Difference as a Model Separating Logical Types

5. Discussion

Inverse probability reconceives our raw observations as a probable consequence of a relevant stochastic process with a useful formulation. The apparent determinism of formulae like F = MA depends on the prior construction of relatively precise measures of F and M… The first step from raw observation to inference is to identify the stochastic process by which an inverse probability can be defined. Bernoulli’s binomial distribution is the simplest process. The compound Poisson is the stochastic parent of all such measuring distributions.
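The step from a raw count to an inferred measure can be illustrated with Rasch's dichotomous model. In that model each response is a Bernoulli trial with success probability exp(B − D) / (1 + exp(B − D)) for a person at B and an item at D, in logits. The minimal sketch below assumes the item difficulties are already calibrated. It inverts the model by Newton-Raphson to find the person measure whose expected raw score equals the observed one. The function names are hypothetical, and this is not offered as Wright's own estimation procedure. Because the raw score is a sufficient statistic in this model, the individual responses enter only through their total.

```python
import math


def rasch_prob(b, d):
    """Probability of success for a person at b on an item at d (logits)."""
    return 1.0 / (1.0 + math.exp(d - b))


def estimate_measure(raw_score, difficulties, tol=1e-10, max_iter=100):
    """Maximum-likelihood person measure for a raw score, given known
    item difficulties (a sketch; assumes dichotomous Rasch items)."""
    n = len(difficulties)
    if not 0 < raw_score < n:
        # Zero and perfect scores have no finite maximum-likelihood estimate.
        raise ValueError("extreme scores have no finite ML estimate")
    # Start from the log-odds of success, shifted by the mean item difficulty.
    b = math.log(raw_score / (n - raw_score)) + sum(difficulties) / n
    for _ in range(max_iter):
        probs = [rasch_prob(b, d) for d in difficulties]
        expected = sum(probs)                     # model-expected raw score at b
        info = sum(p * (1.0 - p) for p in probs)  # Fisher information at b
        step = (raw_score - expected) / info      # Newton-Raphson update
        b += step
        if abs(step) < tol:
            break
    return b
```

With ten items of equal difficulty, for example, the gap in logits between raw scores 8 and 9 is twice the gap between 5 and 6. This is one way to see that equal steps in a count are not equal steps in the measure it is used to infer.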

- Denotative data reports for formative feedback on concrete responses mapping individualized growth;

- Metalinguistic Wright maps used in psychometric scaling evaluations and abstract instrument calibrations;

- Metacommunicative construct maps and specification equations illustrating explanatory models and formal predictive theories.
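The third level in the list, the construct specification equation, predicts where items fall on the scale from features of the items themselves. The minimal sketch below assumes item difficulties already calibrated in logits, together with hypothetical item features such as a count of reasoning steps and a count of distractors. It fits the equation by ordinary least squares. This two-stage regression is simpler than the linear logistic test model, which builds the linear decomposition of item difficulties into the Rasch likelihood itself. All names and data here are illustrative.

```python
def solve(a, b):
    """Solve the square system a x = b by Gauss-Jordan elimination
    with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_specification_equation(features, difficulties):
    """Least-squares weights (intercept first) predicting calibrated item
    difficulty from item features, via the normal equations."""
    x = [[1.0] + list(row) for row in features]  # prepend intercept column
    k = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(x, difficulties)) for i in range(k)]
    return solve(xtx, xty)


def predict(weights, row):
    """Theory-based difficulty for an item with the given features."""
    return weights[0] + sum(w * f for w, f in zip(weights[1:], row))
```

Applied to hypothetical difficulties generated exactly as −2.0 + 0.8 × steps + 0.5 × distractors, the fit recovers those weights. With real calibrations, the residuals between predicted and calibrated difficulties indicate how much of the item ordering the construct theory explains.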

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fisher, W.P., Jr. Bateson and Wright on Number and Quantity: How to Not Separate Thinking from Its Relational Context. Symmetry 2021, 13, 1415. https://doi.org/10.3390/sym13081415
