A Fast Circle Detector with Efficient Arc Extraction

Abstract

1. Introduction

- An image edge refinement method that reduces edge redundancy while effectively eliminating edge intersections;

- An improved CTAR [35] corner detection algorithm that ensures complete detection of contour-curve corners by adding positive–negative point detection, an adaptive sampling interval, and adaptive thresholds;

- A complete-circle dataset and an incomplete-circle dataset, constructed to test the proposed circle detection algorithm thoroughly.
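The edge refinement named in the first contribution is not specified in this section. As a rough illustration only, the sketch below shows one common way to thin a set of 8-connected edge pixels and split it at intersections. A pixel is dropped as redundant when its edge neighbours stay 8-connected without it, and any remaining pixel with more than two neighbours is treated as a junction and removed. The functions `refine`, `remove_redundant` and `break_junctions` are hypothetical and do not reproduce the authors' refinement rules.

```python
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (row, col)

# Offsets of the 8-connected neighbourhood.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def neighbours(p: Pixel, edges: Set[Pixel]) -> List[Pixel]:
    """Edge pixels among the 8 neighbours of p."""
    r, c = p
    return [(r + dr, c + dc) for dr, dc in OFFSETS if (r + dr, c + dc) in edges]


def is_adjacent(a: Pixel, b: Pixel) -> bool:
    """True if a and b are distinct 8-connected neighbours."""
    return a != b and max(abs(a[0] - b[0]), abs(a[1] - b[1])) == 1


def connected(pixels: List[Pixel]) -> bool:
    """True if the pixels form one 8-connected group (depth-first search)."""
    if not pixels:
        return True
    seen, stack = {pixels[0]}, [pixels[0]]
    while stack:
        q = stack.pop()
        for other in pixels:
            if other not in seen and is_adjacent(q, other):
                seen.add(other)
                stack.append(other)
    return len(seen) == len(pixels)


def remove_redundant(edges: Set[Pixel]) -> Set[Pixel]:
    """Drop a pixel when its remaining neighbours stay connected without it.

    Endpoints (fewer than two neighbours) are always kept. Pixels are visited
    in sorted order and removed immediately, so the result depends on scan order.
    """
    out = set(edges)
    for p in sorted(edges):
        nb = neighbours(p, out)
        if len(nb) >= 2 and connected(nb):
            out.discard(p)
    return out


def break_junctions(edges: Set[Pixel]) -> Set[Pixel]:
    """Remove pixels with more than two neighbours, splitting crossing curves."""
    return {p for p in edges if len(neighbours(p, edges)) <= 2}


def refine(edges: Set[Pixel]) -> Set[Pixel]:
    """Hypothetical refinement: thin first, then break the remaining junctions."""
    return break_junctions(remove_redundant(edges))
```

For example, the one-pixel step `{(0, 0), (0, 1), (0, 2), (1, 2), (1, 3), (1, 4)}` loses the redundant corner pixel `(0, 2)`. An X-shaped crossing loses its center pixel, so the four arms become separate pieces.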

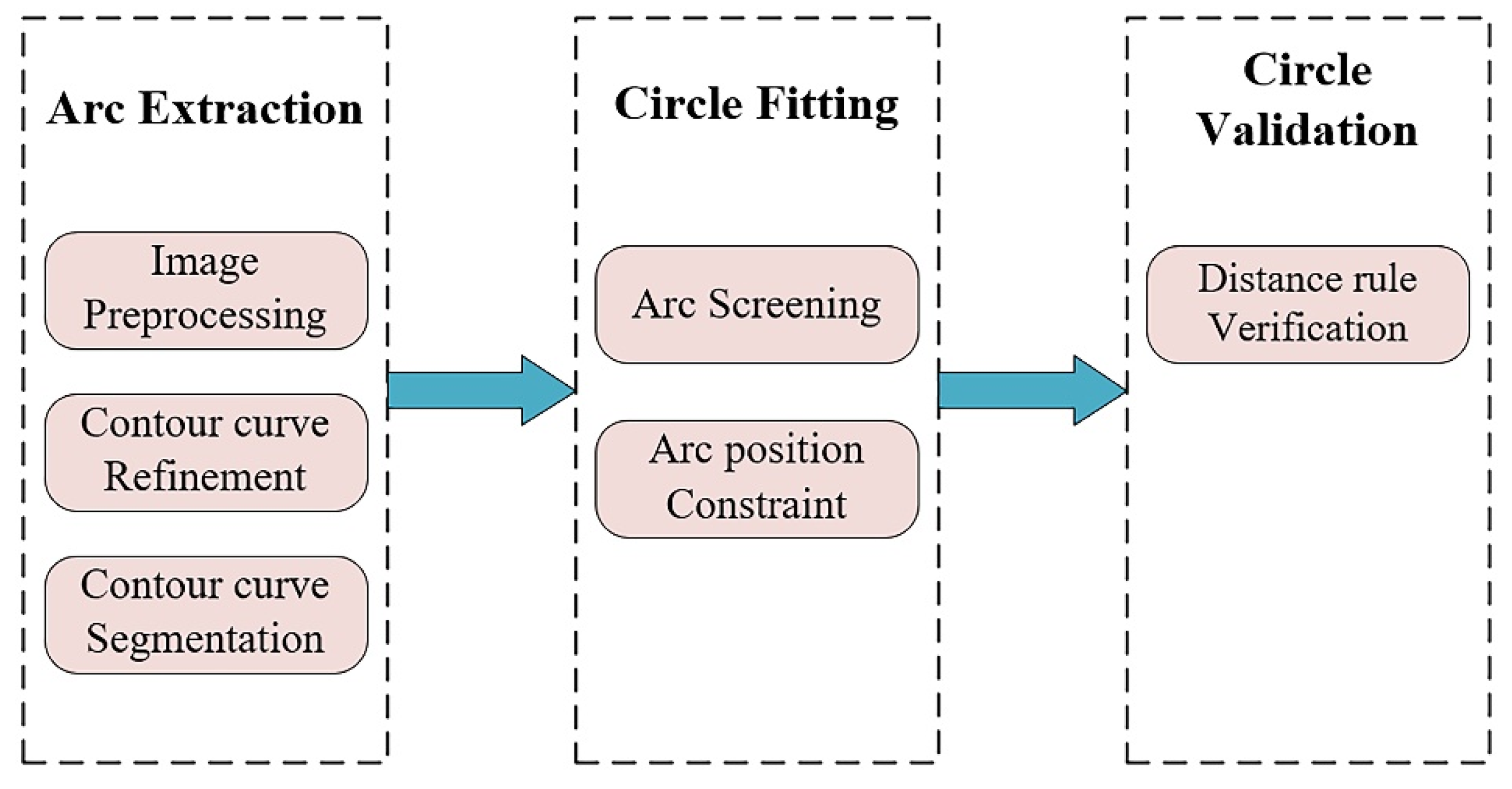

2. Methodology

2.1. Arc Extraction

2.1.1. Image Preprocessing

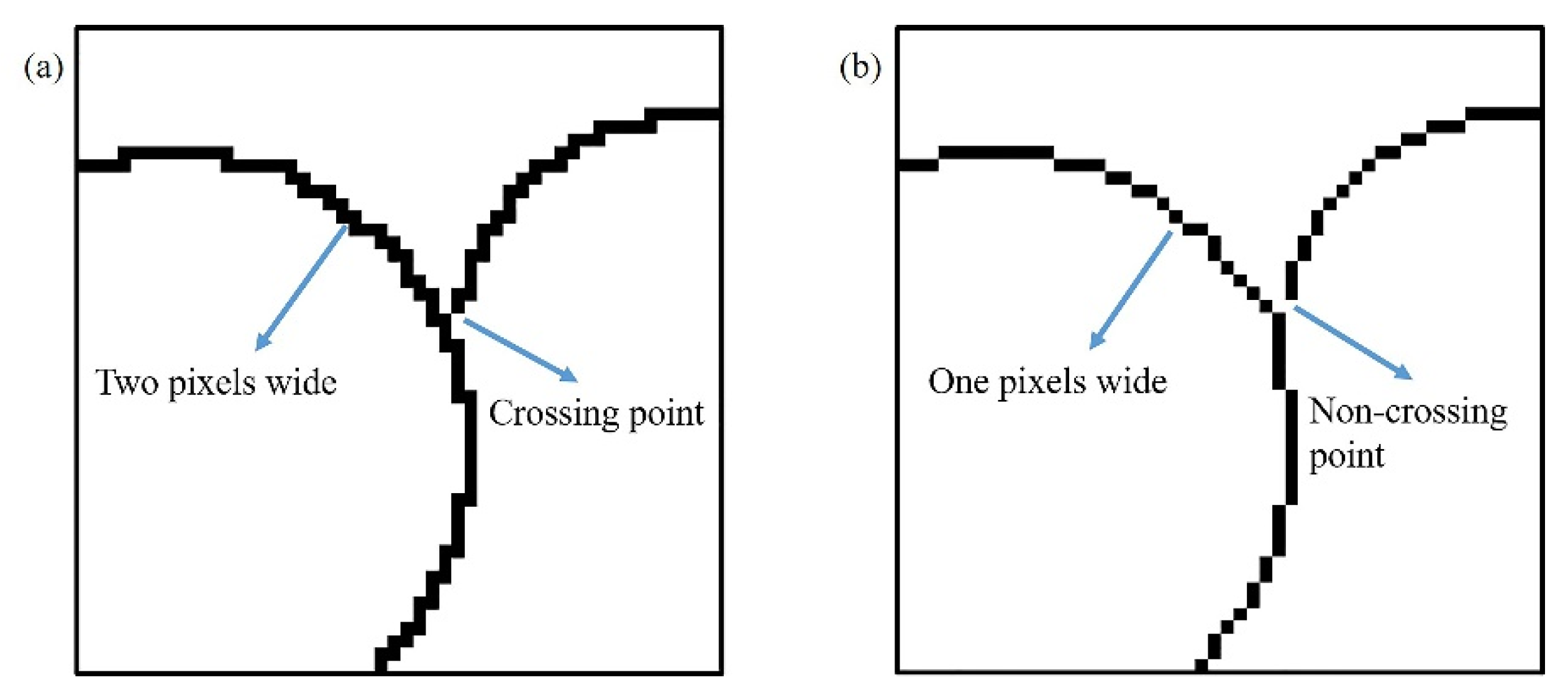

2.1.2. Contour Curve Refinement

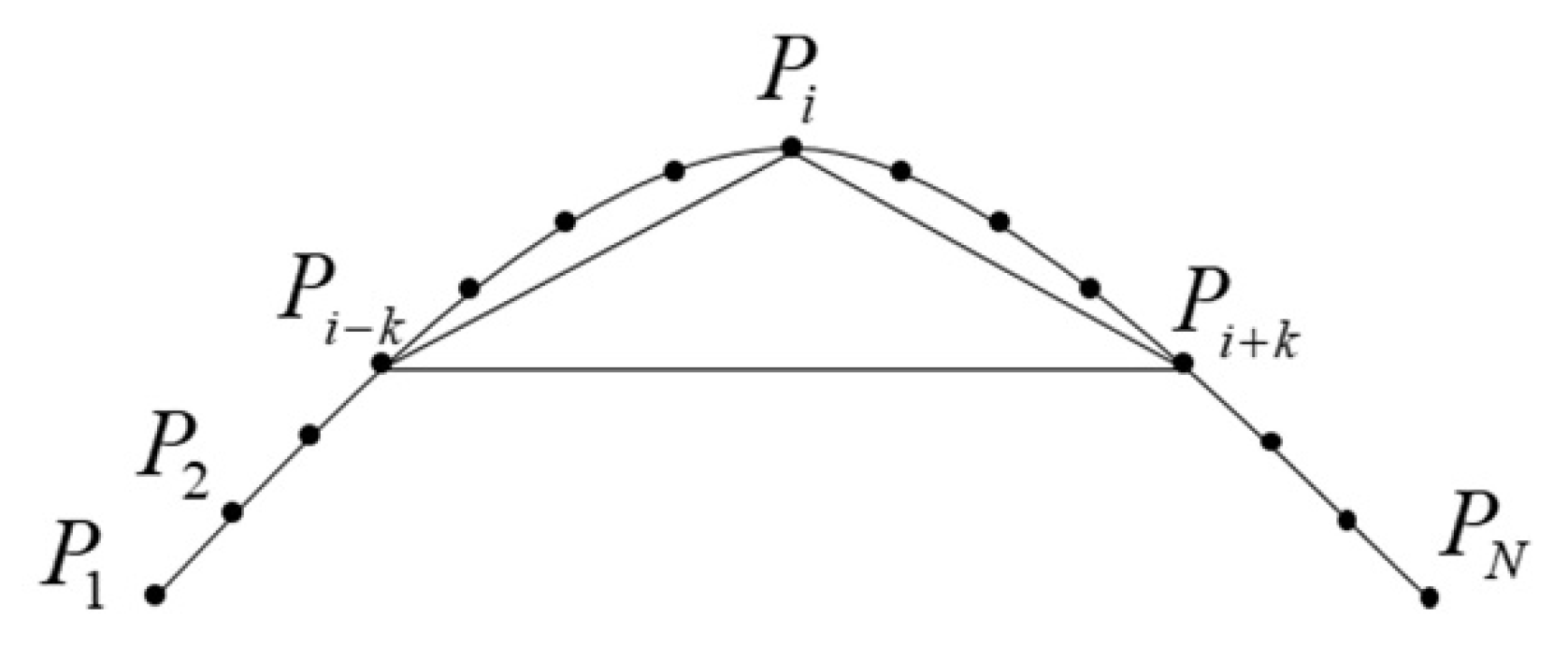

2.1.3. Contour Curve Segmentation

- (a) Calculate k according to Equation (7);

- (b) Estimate the curvature of each point on the curve;

- (c) Calculate Tc based on Equation (8);

- (d) Derive the corner set C1 based on Tc;

- (e) Detect positive–negative points, filter the positive–negative point sequence with a sliding window, and determine the support areas statistically;

- (f) Estimate the curvature with k = 3 within each support area and take the maximum-curvature point of each support area as a corner point, which yields the corner set C2;

- (g) Split the curve according to C1 and C2.
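Steps (a)–(d) compute a sampling interval k, a curvature estimate, a threshold Tc and the corner set C1. Equations (6)–(8) are not reproduced in this section, so the following is a minimal sketch under stated assumptions. The curvature is replaced by a chord-to-arms score in the spirit of CTAR [35]: 1 minus the ratio of the chord P[i−k]P[i+k] to the two triangle arms through P[i]. The exact form of Equation (6) may differ. The interval k grows with curve length and is capped at 15. The cap comes from Algorithm 1, but the growth rule `n // 10` is hypothetical. Tc is taken as the mean plus one standard deviation of the scores, which is also hypothetical. A point enters C1 when its score is a local maximum above Tc.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def adaptive_k(n: int, k_max: int = 15) -> int:
    """Hypothetical stand-in for Equation (7): k grows with curve length, capped at k_max."""
    return max(1, min(k_max, n // 10))


def sharpness(curve: Sequence[Point], i: int, k: int) -> float:
    """CTAR-style score: 1 - |P[i-k]P[i+k]| / (|P[i-k]P[i]| + |P[i]P[i+k]|).

    0 on a straight run, larger for sharper turns (assumed form, not Equation (6)).
    """
    a, p, b = curve[i - k], curve[i], curve[i + k]
    arms = math.dist(a, p) + math.dist(p, b)
    return 1.0 - math.dist(a, b) / arms if arms > 0 else 0.0


def detect_corners(curve: Sequence[Point]) -> List[int]:
    """Indices of local score maxima above an adaptive threshold (the set C1)."""
    n = len(curve)
    if n < 3:
        return []
    k = adaptive_k(n)
    # Points within k of either end lack a full support region and score 0.
    s = [sharpness(curve, i, k) if k <= i < n - k else 0.0 for i in range(n)]
    tc = mean(s) + pstdev(s)  # hypothetical stand-in for Equation (8)
    return [
        i for i in range(1, n - 1)
        if s[i] > tc and s[i] >= s[i - 1] and s[i] >= s[i + 1]
    ]
```

On an L-shaped polyline of 21 points with the bend at index 10, k = 2 and only the bend exceeds Tc, so `detect_corners` returns `[10]`. A straight run scores 0 everywhere and yields no corners.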

2.2. Circle Fitting

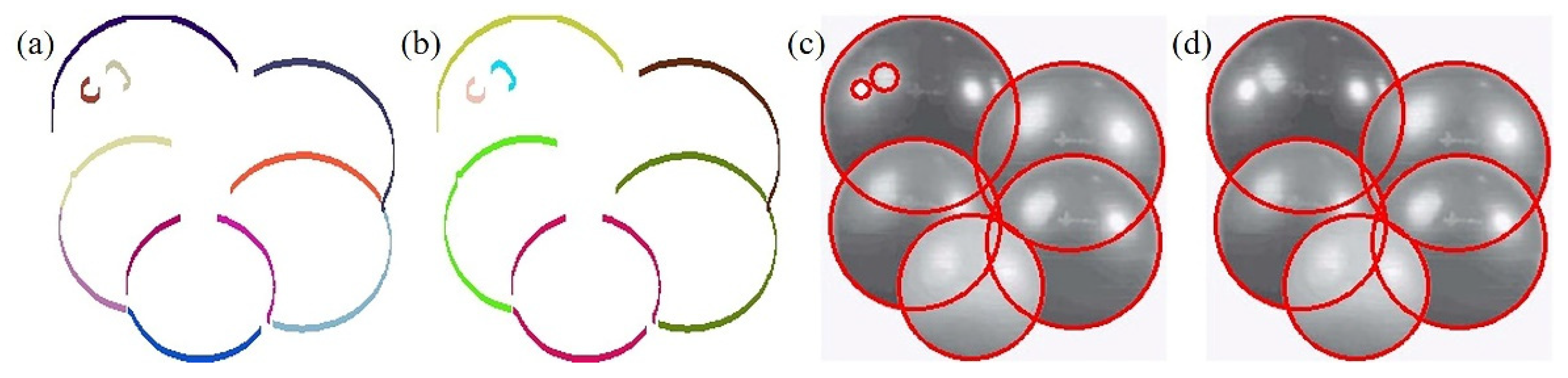
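The fitting step itself is not described in the text of this section. The reference list includes Kåsa's algebraic circle fit, so, as an assumption, the sketch below fits a circle to an arc's points by linear least squares on x² + y² + Dx + Ey + F = 0. This gives the center (−D/2, −E/2) and the radius √(D²/4 + E²/4 − F). Whether the detector uses exactly this estimator is not confirmed here, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("singular system, e.g. collinear points")
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def kasa_fit(points: Sequence[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Kåsa fit: least squares on x^2 + y^2 + D*x + E*y + F = 0, returns (cx, cy, r)."""
    ata = [[0.0] * 3 for _ in range(3)]  # A^T A with rows (x, y, 1)
    atb = [0.0] * 3                      # A^T z with z = -(x^2 + y^2)
    for x, y in points:
        row = (x, y, 1.0)
        z = -(x * x + y * y)
        for i in range(3):
            atb[i] += row[i] * z
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d, e, f = solve3(ata, atb)
    cx, cy = -d / 2, -e / 2
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)
```

The 3 × 3 normal equations are solved directly so the example needs no libraries. Collinear points make the system singular, and the code raises `ValueError` when a pivot is numerically zero. Exact points on a partial arc of the circle centered at (3, −2) with radius 5 are recovered to within floating-point error.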

2.2.1. Arc Screening

| Line | Algorithm 1 Contour Curve Segmentation |
|---|---|
| | Input: Curve set |
| | Output: Curve group set |
| 1 | Initialize parameters |
| 2 | while do |
| 3 | for do |
| 4 | Initialize curvature set |
| 5 | Initialize direction set |
| 6 | Initialize corner set |
| 7 | Calculate k by Equation (7) and then limit its maximum value to 15 |
| 8 | for do |
| 9 | Calculate R by Equation (6) and then push it into |
| 10 | Calculate by Equation (9) and then push its coordinate into |
| 11 | end for |
| 12 | Twice smooth the |
| 13 | Obtain the set of support areas from |
| 14 | Set |
| 15 | Initialize |
| 16 | for do |
| 17 | Initialize |
| 18 | for do |
| 19 | Calculate R by Equation (6) and then push it into CH |
| 20 | end for |
| 21 | Find the maximum value in CH and then push its coordinate into C2 |
| 22 | end for |
| 23 | Calculate the corner threshold Tc by Equation (8) |
| 24 | Initialize |
| 25 | for do |
| 26 | if then |
| 27 | Push the coordinate of P into C1 |
| 28 | end if |
| 29 | end for |
| 30 | Push the set of curves obtained by dividing by C1 and C2 into |
| 31 | end for |
| 32 | end while |
| 33 | Return |
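Lines 10–13 of Algorithm 1 record a turning direction for each point, smooth that sequence twice, and derive support areas from it. Line 30 then splits each curve at the detected corners. The sketch below is one possible reading under stated assumptions. The direction, a hypothetical stand-in for Equation (9), is the sign of the cross product of P[i] − P[i−k] and P[i+k] − P[i]. The smoothing is a sliding-window majority vote, and a single split index is placed in the middle of each sign change. It does not reproduce the k = 3 maximum-curvature selection of step (f), and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def turn_signs(curve: Sequence[Point], k: int = 3) -> List[int]:
    """Sign (+1/-1) of the cross product at each point, 0 if straight.

    Which sign means a left turn depends on the image axis convention.
    Points closer than k to either end are left at 0.
    """
    n = len(curve)
    signs = [0] * n
    for i in range(k, n - k):
        (ax, ay), (px, py), (bx, by) = curve[i - k], curve[i], curve[i + k]
        cross = (px - ax) * (by - py) - (py - ay) * (bx - px)
        signs[i] = (cross > 0) - (cross < 0)
    return signs


def smooth_signs(signs: List[int], half: int = 2) -> List[int]:
    """Sliding-window majority vote; a tied window keeps the original value."""
    out = []
    for i in range(len(signs)):
        total = sum(signs[max(0, i - half): i + half + 1])
        out.append((total > 0) - (total < 0) if total != 0 else signs[i])
    return out


def flip_indices(signs: List[int]) -> List[int]:
    """One split index in the middle of every change of non-zero turning direction."""
    flips, last, last_i = [], 0, -1
    for i, s in enumerate(signs):
        if s == 0:
            continue
        if last != 0 and s != last:
            flips.append((last_i + i) // 2)
        last, last_i = s, i
    return flips


def split_curve(curve: Sequence[Point], cuts: List[int]) -> List[List[Point]]:
    """Cut the curve at the given indices; each cut point ends one piece and starts the next."""
    pieces, start = [], 0
    for c in sorted(set(cuts)):
        if start < c < len(curve) - 1:
            pieces.append(list(curve[start:c + 1]))
            start = c
    pieces.append(list(curve[start:]))
    return pieces
```

On the polyline y = x·|x| for integer x from −6 to 6, the turning direction flips at x = 0. After two smoothing passes, `flip_indices` returns `[6]`, and `split_curve` yields two 7-point pieces that share the point (0, 0). In a full pipeline the cut list would be the union of C1 and C2. Splitting where the direction flips keeps every piece bending one way, which is a natural requirement for a circular-arc candidate.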

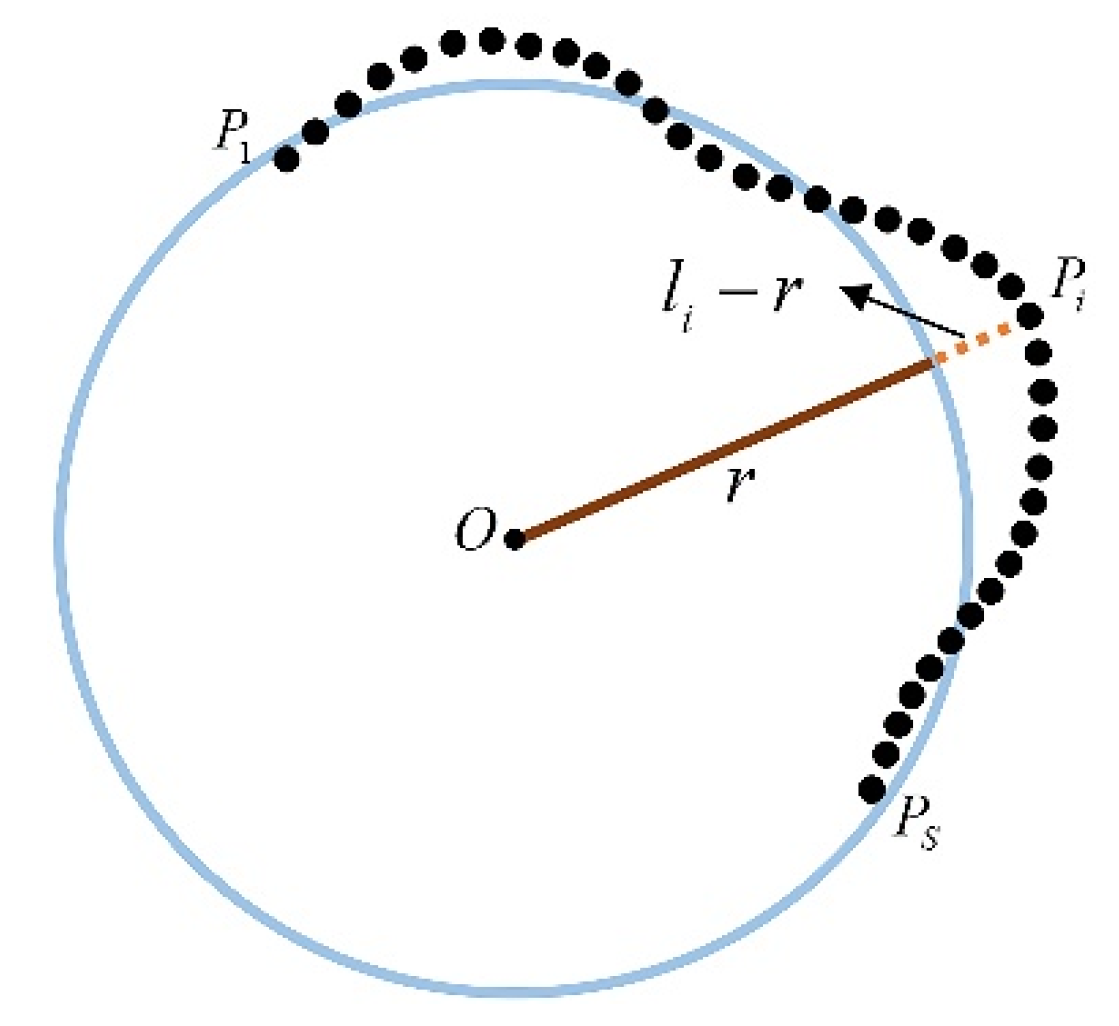

2.2.2. Arc Relative Position Constraint

2.3. Circle Validation

3. Experimental

3.1. Performance Metrics

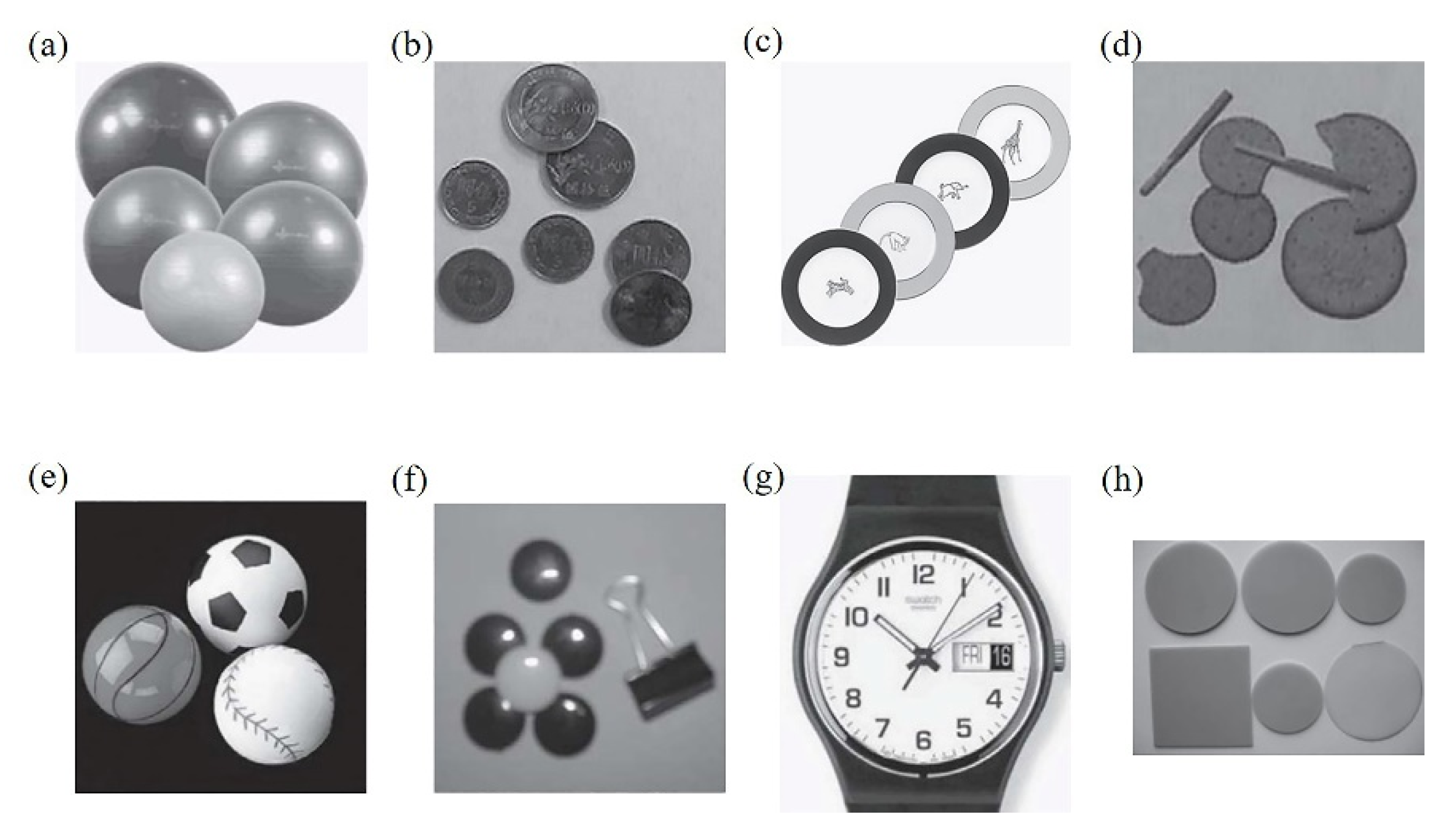

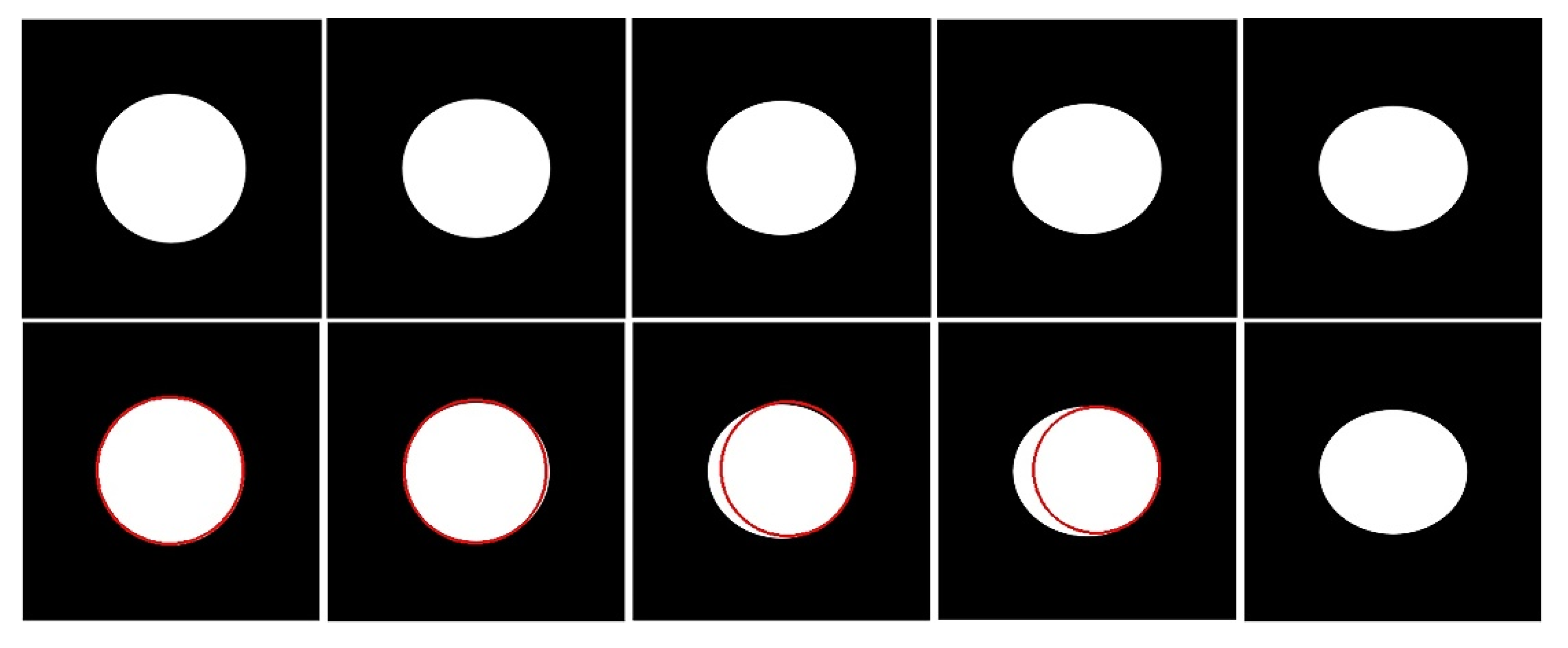
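The precision, recall and F-measure reported in the comparison tables can be illustrated with the sketch below. The matching rule is an assumption. A detected circle counts as a true positive when it matches a not-yet-matched ground-truth circle, with center distance and radius difference both within `tol` pixels. The default of 3 is a hypothetical value, and the paper's own criterion may differ.

```python
import math
from typing import List, Tuple

Circle = Tuple[float, float, float]  # (cx, cy, r)


def matches(det: Circle, gt: Circle, tol: float) -> bool:
    """Hypothetical criterion: centers and radii agree within tol pixels."""
    return math.dist(det[:2], gt[:2]) <= tol and abs(det[2] - gt[2]) <= tol


def evaluate(dets: List[Circle], gts: List[Circle], tol: float = 3.0) -> Tuple[float, float, float]:
    """Greedy one-to-one matching, then precision, recall and F-measure."""
    used = set()
    tp = 0
    for d in dets:
        for j, g in enumerate(gts):
            if j not in used and matches(d, g, tol):
                used.add(j)  # each ground-truth circle can be matched only once
                tp += 1
                break
    precision = tp / len(dets) if dets else 0.0
    recall = tp / len(gts) if gts else 0.0
    f_measure = 2 * precision * recall / (precision + recall) if tp else 0.0
    return precision, recall, f_measure
```

Averaging per-image scores and computing F from pooled counts give different numbers. For example, the harmonic mean of the "Ours" precision (0.8116) and recall (0.8569) in the first precision/recall table is about 0.834, not the reported 0.8067. This suggests, though the text does not state it, that the reported values are averaged per image.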

3.2. Dataset

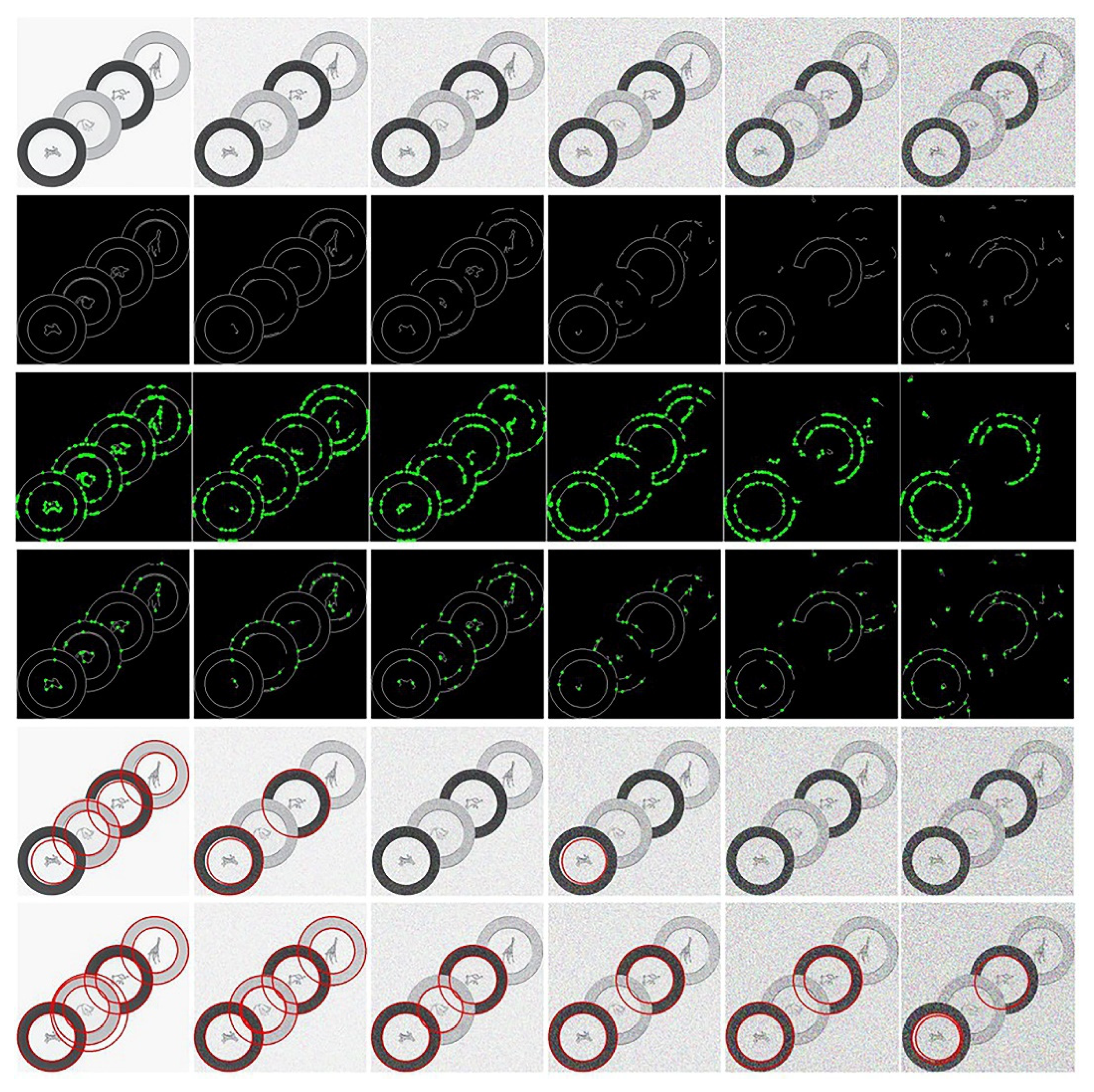

3.3. Method Analysis

3.3.1. Ablation Analysis

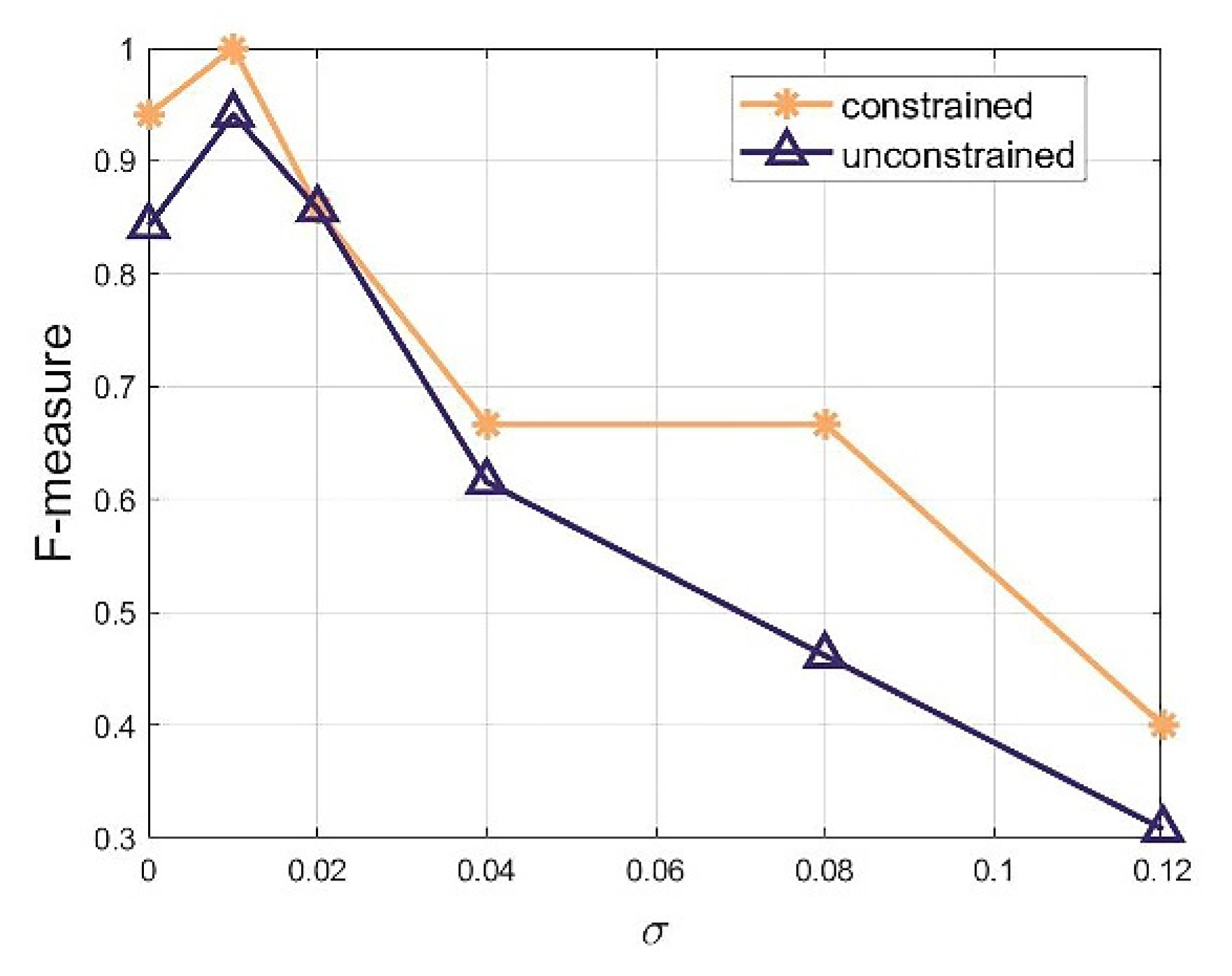

3.3.2. Threshold Analysis

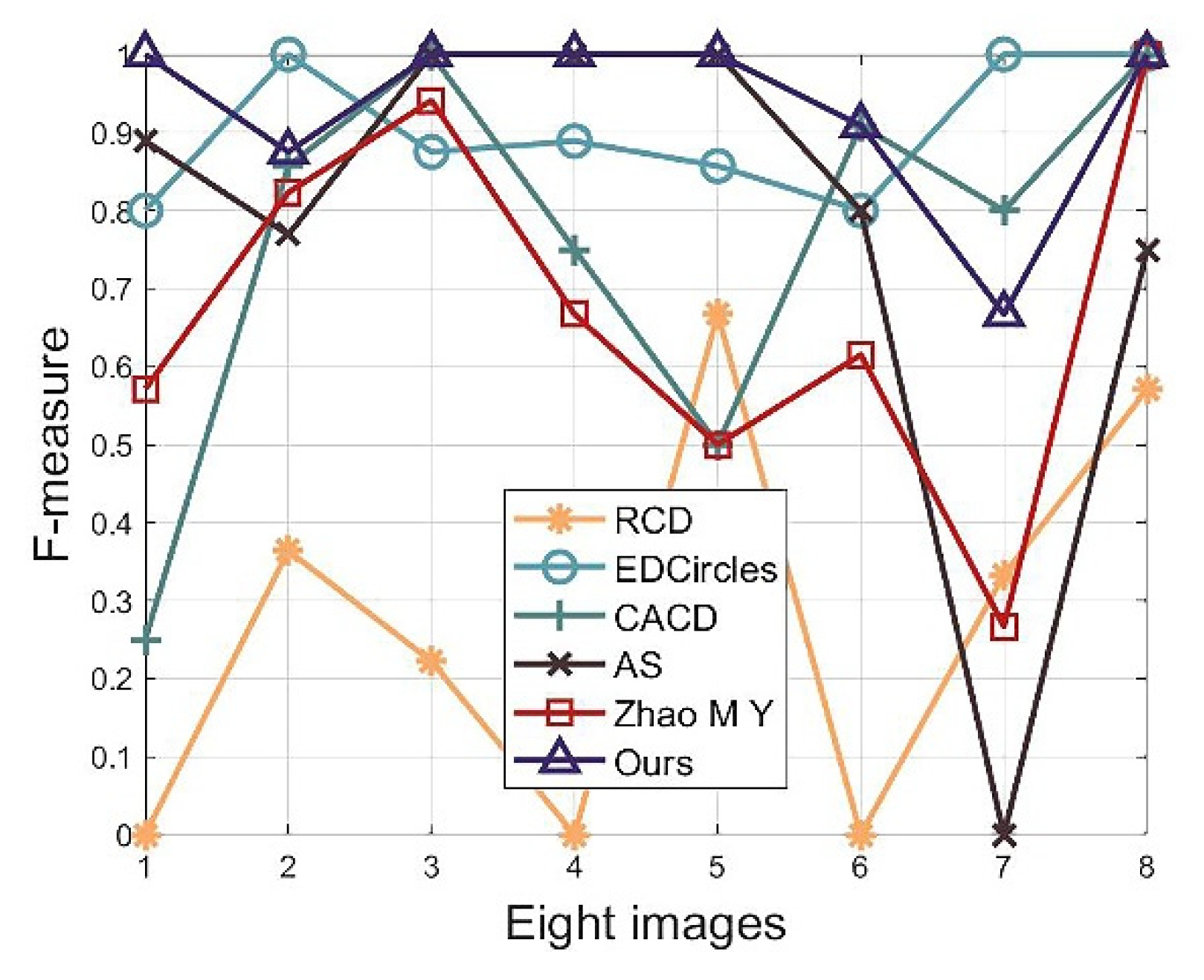

3.4. Performance Comparison

3.5. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Yang, H.; Deng, R.; Lu, Y.; Zhu, Z.; Chen, Y.; Roland, J.T.; Lu, L.; Landman, B.A.; Fogo, A.B.; Huo, Y. CircleNet: Anchor-Free Glomerulus Detection with Circle Representation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Lima, Peru, 4–8 October 2020; Springer: Cham, Switzerland, 2020; pp. 35–44.

2. Acharya, V.; Kumar, P. Identification and red blood cell automated counting from blood smear images using computer-aided system. Med. Biol. Eng. Comput. 2018, 56, 483–489.

3. Safuan, S.N.M.; Tomari, M.R.M.; Zakaria, W.N.W. White blood cell (WBC) counting analysis in blood smear images using various color segmentation methods. Measurement 2018, 116, 543–555.

4. Yu, L.; Zhang, D.; Peng, N.; Liang, X. Research on the application of binary-like coding and Hough circle detection technology in PCB traceability system. J. Ambient Intell. Humaniz. Comput. 2021, 1–11.

5. Zhu, W.B.; Gu, H.; Su, W.M. A fast PCB hole detection method based on geometric features. Meas. Sci. Technol. 2020, 31, 095402.

6. Berkaya, S.K.; Gunduz, H.; Ozsen, O.; Akinlar, C.; Gunal, S. On circular traffic sign detection and recognition. Expert Syst. Appl. 2016, 48, 67–75.

7. Fleyeh, H.; Davami, E. Eigen-based traffic sign recognition. IET Intell. Transp. Syst. 2011, 5, 190–196.

8. Wu, B.; Ye, D.; Guo, Y.; Chen, G. Multiple circle recognition and pose estimation for aerospace application. Optik 2017, 145, 148–157.

9. Xue, P.; Jiang, Y.L.; Wang, H.M.; He, H. Accurate Detection Method of Aviation Bearing Based on Local Characteristics. Symmetry 2019, 11, 1069.

10. Djekoune, A.O.; Messaoudi, K.; Amara, K. Incremental circle Hough transform: An improved method for circle detection. Optik 2017, 133, 17–31.

11. Soelistio, Y.E.; Postma, E.; Maes, A. Circle-based Eye Center Localization (CECL). In Proceedings of the 2015 14th IAPR International Conference on Machine Vision Applications (MVA), Tokyo, Japan, 18–22 May 2015; pp. 349–352.

12. Jan, F.; Usman, I.; Khan, S.A.; Malik, S.A. A dynamic non-circular iris localization technique for non-ideal data. Comput. Electr. Eng. 2014, 40, 215–226.

13. Wang, S.; Xu, Y.; Zheng, Y.; Zhu, M.; Yao, H.; Xiao, Z. Tracking a golf ball with high-speed stereo vision system. IEEE Trans. Instrum. Meas. 2018, 68, 2742–2754.

14. Cornelia, A.; Setyawan, I. Ball Detection Algorithm for Robot Soccer based on Contour and Gradient Hough Circle Transform. In Proceedings of the 2017 4th International Conference on Information Technology, Computer, and Electrical Engineering (ICITACEE), Semarang, Indonesia, 18–19 October 2017; pp. 136–141.

15. Smith, E.H.B.; Lamiroy, B. Circle Detection Performance Evaluation Revisited. In Proceedings of the International Workshop on Graphics Recognition, Sousse, Tunisia, 20–21 August 2015; Springer: Cham, Switzerland, 2015; pp. 3–18.

16. Ballard, D.H. Generalizing the Hough transform to detect arbitrary shapes. Pattern Recogn. 1981, 13, 111–122.

17. Duda, R.O.; Hart, P.E. Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM 1972, 15, 11–15.

18. Schuster, G.M.; Katsaggelos, A.K. Robust circle detection using a weighted MSE estimator. In Proceedings of the ICIP: International Conference on Image Processing, Singapore, 24–27 October 2004; Volume 1–5, pp. 2111–2114.

19. Xu, L.; Oja, E.; Kultanen, P. A new curve detection method: Randomized Hough transform (RHT). Pattern Recogn. Lett. 1990, 11, 331–338.

20. Yao, Z.J.; Yi, W.D. Curvature aided Hough transform for circle detection. Expert Syst. Appl. 2016, 51, 26–33.

21. Su, Y.Q.; Zhang, X.N.; Cuan, B.N.; Liu, Y.H.; Wang, Z.H. A sparse structure for fast circle detection. Pattern Recogn. 2020, 97, 107022.

22. De Marco, T.; Cazzato, D.; Leo, M.; Distante, C. Randomized circle detection with isophotes curvature analysis. Pattern Recogn. 2015, 48, 411–421.

23. Chung, K.L.; Huang, Y.H.; Shen, S.M.; Krylov, A.S.; Yurin, D.V.; Semeikina, E.V. Efficient sampling strategy and refinement strategy for randomized circle detection. Pattern Recogn. 2012, 45, 252–263.

24. Le, T.; Duan, Y. Circle Detection on Images by Line Segment and Circle Completeness. IEEE Image Proc. 2016, 3648–3652.

25. Von Gioi, R.G.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A Fast Line Segment Detector with a False Detection Control. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 722–732.

26. Akinlar, C.; Topal, C. EDCircles: A real-time circle detector with a false detection control. Pattern Recogn. 2013, 46, 725–740.

27. Akinlar, C.; Topal, C. EDPF: A Real-Time Parameter-Free Edge Segment Detector with a False Detection Control. Int. J. Pattern Recogn. Artif. Intell. 2012, 26, 1255002.

28. Topal, C.; Akinlar, C. Edge Drawing: A combined real-time edge and segment detector. J. Vis. Commun. Image Represent. 2012, 23, 862–872.

29. Topal, C.; Ozsen, O.; Akinlar, C. Real-time Edge Segment Detection with Edge Drawing Algorithm. In Proceedings of the 7th International Symposium on Image and Signal Processing and Analysis (ISPA), Dubrovnik, Croatia, 4–6 September 2011; pp. 313–318.

30. Zhao, M.Y.; Jia, X.H.; Yan, D.M. An occlusion-resistant circle detector using inscribed triangles. Pattern Recogn. 2021, 109, 107588.

31. Pottmann, H.; Wallner, J.; Huang, Q.X.; Yang, Y.L. Integral invariants for robust geometry processing. Comput. Aided Geom. Des. 2009, 26, 37–60.

32. Lu, C.S.; Xia, S.Y.; Huang, W.M.; Shao, M.; Fu, Y. Circle Detection by Arc-Support Line Segments. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 76–80.

33. Dasgupta, S.; Das, S.; Biswas, A.; Abraham, A. Automatic circle detection on digital images with an adaptive bacterial foraging algorithm. Soft Comput. 2010, 14, 1151–1164.

34. Ayala-Ramirez, V.; Garcia-Capulin, C.H.; Perez-Garcia, A.; Sanchez-Yanez, R.E. Circle detection on images using genetic algorithms. Pattern Recogn. Lett. 2006, 27, 652–657.

35. Teng, S.W.; Sadat, R.M.N.; Lu, G.J. Effective and efficient contour-based corner detectors. Pattern Recogn. 2015, 48, 2185–2197.

36. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.

37. Kanchanatripop, P.; Zhang, D.F. Adaptive Image Edge Extraction Based on Discrete Algorithm and Classical Canny Operator. Symmetry 2020, 12, 1749.

38. Jia, Q.; Fan, X.; Luo, Z.; Song, L.; Qiu, T. A fast ellipse detector using projective invariant pruning. IEEE Trans. Image Process. 2017, 26, 3665–3679.

39. McClelland, G.H. Nasty Data: Unruly, Ill-Mannered Observations Can Ruin Your Analysis; Cambridge University Press: Cambridge, UK, 2014.

40. Wang, B.; Wang, D.; Chen, L. Quick Locating Algorithm for Turning Points in Discrete Point Set of Curve. J. Syst. Sci. Inf. 2004, 2, 721–726.

41. Kåsa, I. A circle fitting procedure and its error analysis. IEEE Trans. Instrum. Meas. 1976, 8–14.

42. Lopez-Martinez, A.; Cuevas, F.J. Automatic circle detection on images using the Teaching Learning Based Optimization algorithm and gradient analysis. Appl. Intell. 2019, 49, 2001–2016.

43. Zhang, H.Q.; Wiklund, K.; Andersson, M. A fast and robust circle detection method using isosceles triangles sampling. Pattern Recogn. 2016, 54, 218–228.

44. Gonzalez, M.R.; Martinez, M.E.; Cosio-Leon, M.; Cervantes, H.; Brizuela, C.A. Multiple circle detection in images: A simple evolutionary algorithm approach and a new benchmark of images. Pattern Anal. Appl. 2021, 24, 1583–1603.

| Images | RCD | EDCircles | CACD | AS | Zhao MY | Ours |
|---|---|---|---|---|---|---|
| Stability-ball | 2237 | 80.80 | 59.50 | 29.40 | 97.70 | 24.67 |
| Coin | 2648 | 126.1 | 105.1 | 45.90 | 131.3 | 37.78 |
| Plates | 3276 | 234.8 | 316.7 | 68.80 | 207.3 | 73.45 |
| Cake | 2357 | 79.30 | 81.00 | 27.00 | 123.7 | 28.85 |
| Ball | 2473 | 84.40 | 58.90 | 34.20 | 112.0 | 29.45 |
| Gobang | 1921 | 70.80 | 56.60 | 17.50 | 84.30 | 30.12 |
| Swatch | 4485 | 47.70 | 33.90 | 19.10 | 66.70 | 19.97 |
| Insulator | 1442 | 95.10 | 52.40 | 28.70 | 108.3 | 27.68 |

| Method | Precision | Recall | F-Measure | Time (ms) |
|---|---|---|---|---|
| RCD | 0.2756 | 0.2075 | 0.1952 | 6.1543 |
| EDCircles | 0.8209 | 0.8313 | 0.7952 | 0.3321 |
| CACD | 0.7511 | 0.7488 | 0.7242 | 0.9485 |
| AS | 0.8364 | 0.7675 | 0.7868 | 0.0986 |
| Zhao MY | 0.7489 | 0.8497 | 0.7885 | 0.3624 |
| Ours | 0.8116 | 0.8569 | 0.8067 | 0.0859 |

| Method | Precision | Recall | F-Measure | Time (ms) |
|---|---|---|---|---|
| RCD | 0.2452 | 0.1887 | 0.1836 | 5.5795 |
| EDCircles | 0.8115 | 0.6484 | 0.6784 | 0.2836 |
| CACD | 0.6805 | 0.5016 | 0.5205 | 0.5975 |
| AS | 0.8456 | 0.6175 | 0.6899 | 0.0895 |
| Zhao MY | 0.6597 | 0.7544 | 0.6767 | 0.3495 |
| Ours | 0.7341 | 0.7759 | 0.7151 | 0.0688 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Deng, H.; Zhang, Z.; Xu, Q. A Fast Circle Detector with Efficient Arc Extraction. Symmetry 2022, 14, 734. https://doi.org/10.3390/sym14040734
