A Large-Scale Mouse Pose Dataset for Mouse Pose Estimation

Abstract

1. Introduction

- We propose a large-scale mouse pose dataset for mouse pose estimation. It addresses the shortage of uniform, standardized datasets in this area.

- We design a fast and convenient keypoint annotation tool. Because it is easy to reproduce and use, it has broad potential applications in related work.

- We propose a simple and efficient pipeline as a benchmark for evaluation on our dataset.

2. Related Work

2.1. Datasets for the Mouse Poses

2.2. Annotating Software and Hardware Devices

2.3. Algorithms and Baselines of Pose Estimation

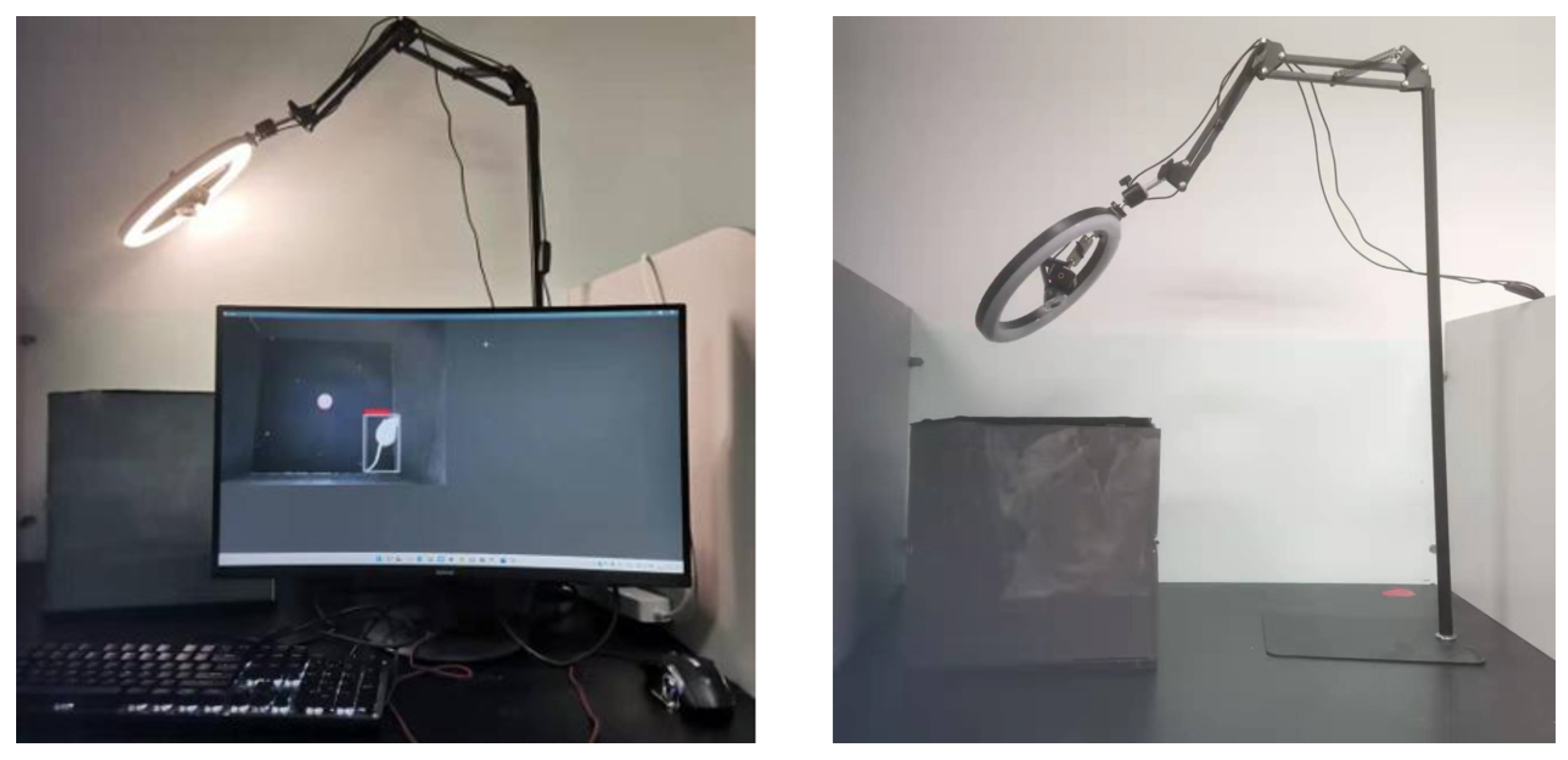

3. Capturing Device

4. Data Description

- A series of 2D RGB images of mice in the experimental setting.

- The bounding box for positioning the mouse in the image.

- Annotated mouse keypoint coordinates.
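Each sample therefore pairs an image with one bounding box and a set of named keypoints. Below is a minimal sketch of such a record in Python. It makes several assumptions:

- coordinates are in pixels;
- boxes follow an (x_min, y_min, x_max, y_max) convention;
- joint names come from the joint-definition table (Mouth, Left Ear, Right Ear, Neck, Tail Root).

The class and field names are hypothetical and do not describe the dataset's actual file format.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Joint names as listed in the joint-definition table (Tag 1 to Tag 5).
JOINT_NAMES = ("Mouth", "Left Ear", "Right Ear", "Neck", "Tail Root")


@dataclass
class MouseSample:
    """One annotated frame: image path, mouse bounding box, and 2D keypoints.

    Hypothetical structure: field names and the box convention are assumptions.
    """
    image_path: str
    bbox: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
    # Joint name -> (x, y) in pixels; a dict lets unlabelled joints simply be absent.
    keypoints: Dict[str, Tuple[float, float]] = field(default_factory=dict)

    def validate(self) -> None:
        """Reject degenerate boxes and joint names outside the defined set."""
        x_min, y_min, x_max, y_max = self.bbox
        if not (x_min < x_max and y_min < y_max):
            raise ValueError("bounding box must have positive width and height")
        unknown = set(self.keypoints) - set(JOINT_NAMES)
        if unknown:
            raise ValueError(f"unknown joint names: {sorted(unknown)}")


sample = MouseSample(
    image_path="frames/000001.png",  # hypothetical path
    bbox=(120.0, 80.0, 260.0, 190.0),
    keypoints={"Mouth": (130.0, 100.0), "Neck": (170.0, 120.0), "Tail Root": (250.0, 175.0)},
)
sample.validate()  # passes silently for a well-formed record
```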

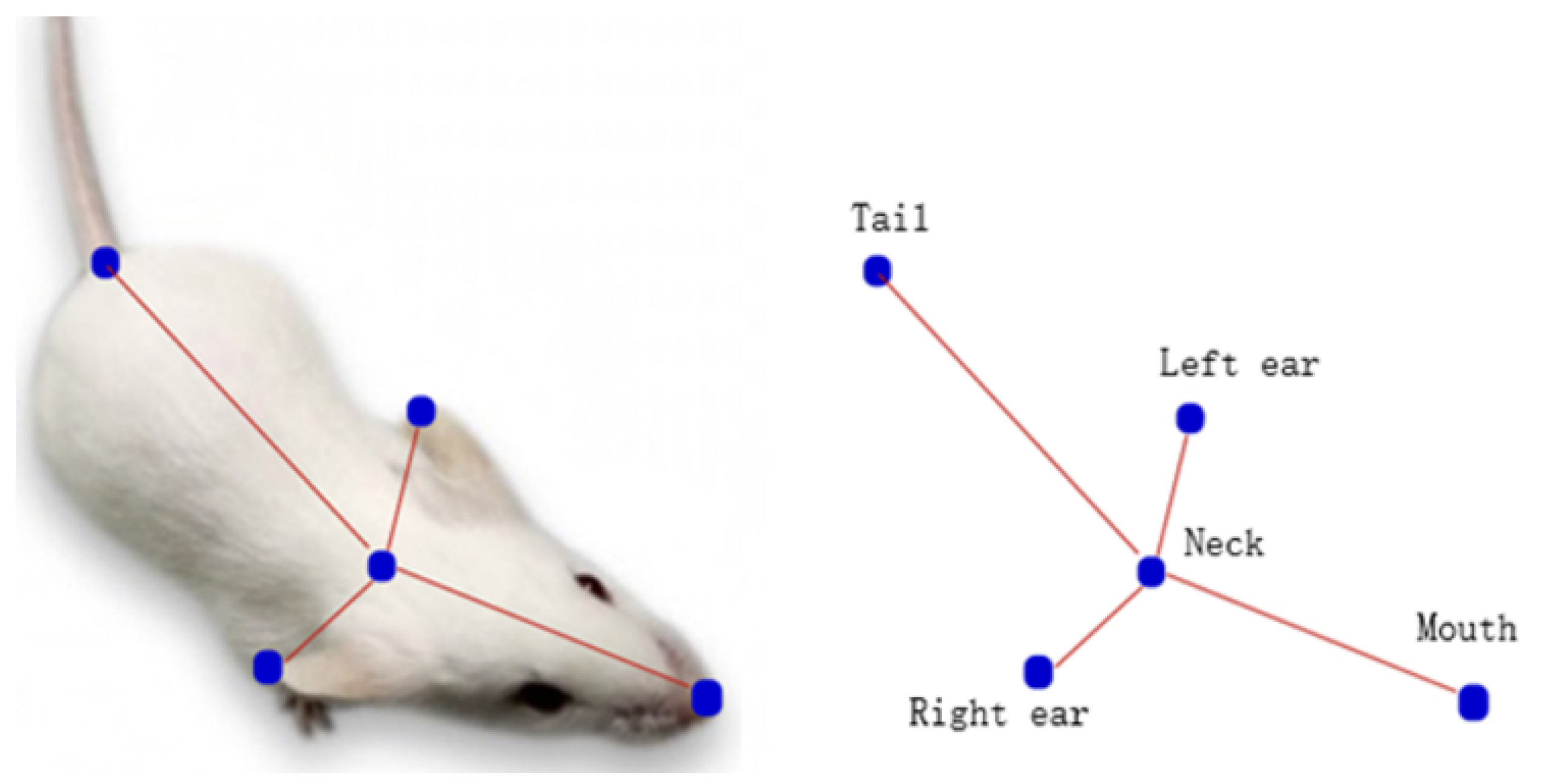

4.1. Definitions of Mouse 2D Joint Points

4.2. Color Images of a Mouse

4.3. Mouse 2D Joint Point Annotations

4.4. Variability and Generalization Capabilities

5. Benchmark—2D Keypoint Estimations

5.1. Mouse Detection

5.2. Mouse Pose Estimation
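The benchmark runs in two stages: mouse detection (Section 5.1), then pose estimation (Section 5.2). Below is a minimal sketch of how such a two-stage, top-down pipeline can connect the stages. It assumes the pose model runs on each detected crop and returns keypoints relative to that crop. `detect` and `estimate` are hypothetical placeholders, not the paper's models. Images are plain lists of pixel rows so the example has no dependencies.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max) in pixels
Point = Tuple[float, float]       # (x, y) in pixels
Image = Sequence[Sequence[int]]   # rows of pixels; stands in for an H x W array


def top_down_pose(
    image: Image,
    detect: Callable[[Image], List[Box]],
    estimate: Callable[[Image], List[Point]],
) -> List[List[Point]]:
    """Detect each mouse, crop it, estimate keypoints in the crop,
    then shift the keypoints back into full-image coordinates."""
    height, width = len(image), len(image[0])
    poses = []
    for x_min, y_min, x_max, y_max in detect(image):
        # Clamp the box to the image bounds so the crop is valid.
        x0, y0 = max(0, x_min), max(0, y_min)
        x1, y1 = min(width, x_max), min(height, y_max)
        # Equivalent to image[y0:y1, x0:x1] for a NumPy array.
        crop = [row[x0:x1] for row in image[y0:y1]]
        # The estimator returns keypoints relative to the crop's top-left corner.
        local_points = estimate(crop)
        poses.append([(x + x0, y + y0) for x, y in local_points])
    return poses


# Stand-in models for illustration only.
def fake_detect(img: Image) -> List[Box]:
    return [(100, 50, 200, 150)]  # one fixed box


def fake_estimate(crop: Image) -> List[Point]:
    return [(len(crop[0]) / 2, len(crop) / 2)]  # centre of the crop


image = [[0] * 320 for _ in range(240)]
print(top_down_pose(image, fake_detect, fake_estimate))  # [[(150.0, 100.0)]]
```

Keeping the two stages behind plain callables means either model can be swapped without touching the coordinate bookkeeping.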

5.3. Evaluation Standard

5.4. Experimental Settings

5.5. Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Sample Availability


| Joint ID | Semantic Name |
|---|---|
| Tag 1 | Mouth |
| Tag 2 | Left Ear |
| Tag 3 | Right Ear |
| Tag 4 | Neck |
| Tag 5 | Tail Root |

| Item | Object Detection |
|---|---|
| Ground Truth | 7,844 |
| Detected | 7,535 |
| Average Precision | 0.91 |
| Counting Accuracy | 0.96 |
| Frames Per Second | 30 |
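The counting accuracy in the table matches the ratio of detected to ground-truth mice (7535 / 7844 ≈ 0.96). The sketch below reproduces that arithmetic. It assumes counting accuracy is defined as detected / ground truth, a definition inferred from the table values rather than stated in the text.

```python
def counting_accuracy(detected: int, ground_truth: int) -> float:
    """Fraction of ground-truth mice that received a detection.

    Assumed definition: detected / ground_truth (inferred from the table).
    """
    if ground_truth <= 0:
        raise ValueError("ground_truth must be positive")
    return detected / ground_truth


acc = counting_accuracy(7535, 7844)
print(f"{acc:.4f}")  # 0.9606, which rounds to the reported 0.96
```

Here the detector reports fewer boxes than there are mice. In that case the ratio coincides with the common alternative 1 − |D − G| / G, so both readings give the same figure for this table.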

| Item | Pose Estimation |
|---|---|
| Ground Truth | 37,502 |
| Percentage of Correct Keypoints (PCK) | 85% |
| Frames Per Second | 27 |
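PCK counts a predicted keypoint as correct when it lies close enough to its ground-truth location. Below is a minimal sketch for a single mouse. The threshold is assumed to be `alpha` times a reference length, such as the bounding-box size, with `alpha = 0.1`. The normalisation the paper actually uses is not given in this section, so both choices are assumptions. A dataset-level score would pool the correct counts over all ground-truth keypoints.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def pck(
    predicted: Sequence[Point],
    ground_truth: Sequence[Point],
    reference_size: float,
    alpha: float = 0.1,  # assumed threshold fraction
) -> float:
    """Fraction of keypoints within alpha * reference_size of the ground truth."""
    if len(predicted) != len(ground_truth) or not ground_truth:
        raise ValueError("need equal, non-empty keypoint lists")
    threshold = alpha * reference_size
    # Each comparison yields True/False; summing counts the correct keypoints.
    correct = sum(math.dist(p, g) <= threshold for p, g in zip(predicted, ground_truth))
    return correct / len(ground_truth)


gt = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0), (40.0, 0.0)]
pred = [(3.0, 4.0), (10.0, 9.0), (20.0, 12.0), (30.0, 0.0), (55.0, 0.0)]
# Errors are 5, 9, 12, 0 and 15 px against a 10 px threshold: 3 of 5 correct.
print(pck(pred, gt, reference_size=100.0))  # 0.6
```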

| Method | Intersection over Union (IoU) | Percentage of Correct Keypoints (PCK) |
|---|---|---|
| Object Detection | 0.9 | N/A |
| Pose Estimation | N/A | 85% |
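Detection quality is summarised by Intersection over Union (IoU) between predicted and ground-truth boxes. Below is a minimal sketch of the standard IoU computation for axis-aligned boxes, assuming an (x_min, y_min, x_max, y_max) convention. The text does not say how the reported 0.9 is aggregated over the test set.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    # Clamp to zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 10 x 10 boxes overlapping by half: 50 / (100 + 100 - 50).
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # 0.3333333333333333
```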

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, J.; Wu, J.; Liao, X.; Wang, S.; Wang, M. A Large-Scale Mouse Pose Dataset for Mouse Pose Estimation. Symmetry 2022, 14, 875. https://doi.org/10.3390/sym14050875
