Learning Multifeature Correlation Filter and Saliency Redetection for Long-Term Object Tracking

Abstract

1. Introduction

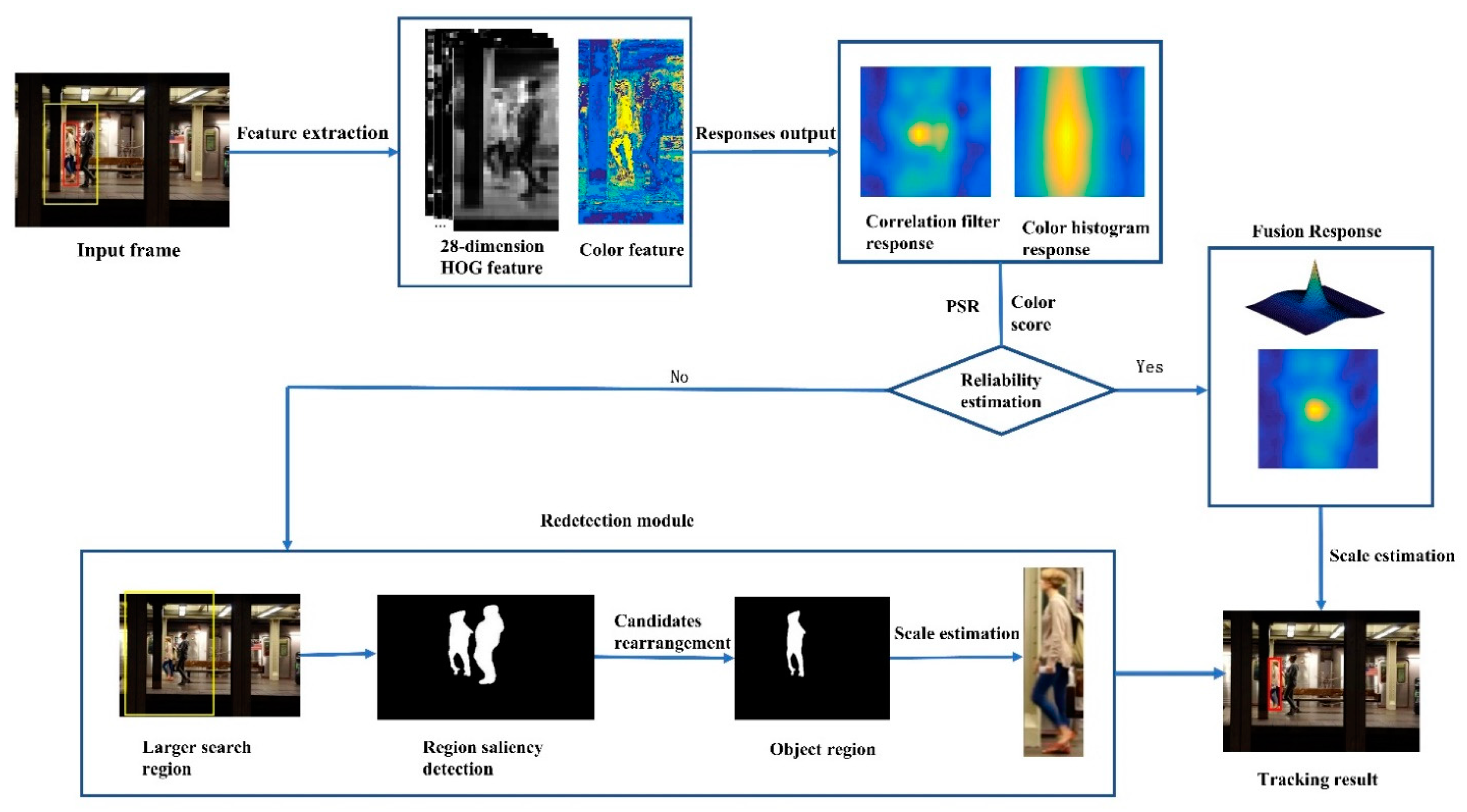

- The redetection part, a crucial component of our tracker, consists of a reliability estimation module and a redetection module. The tracking-by-detection part fuses multiple features within the correlation filter framework, combining color and HOG features for tracking.

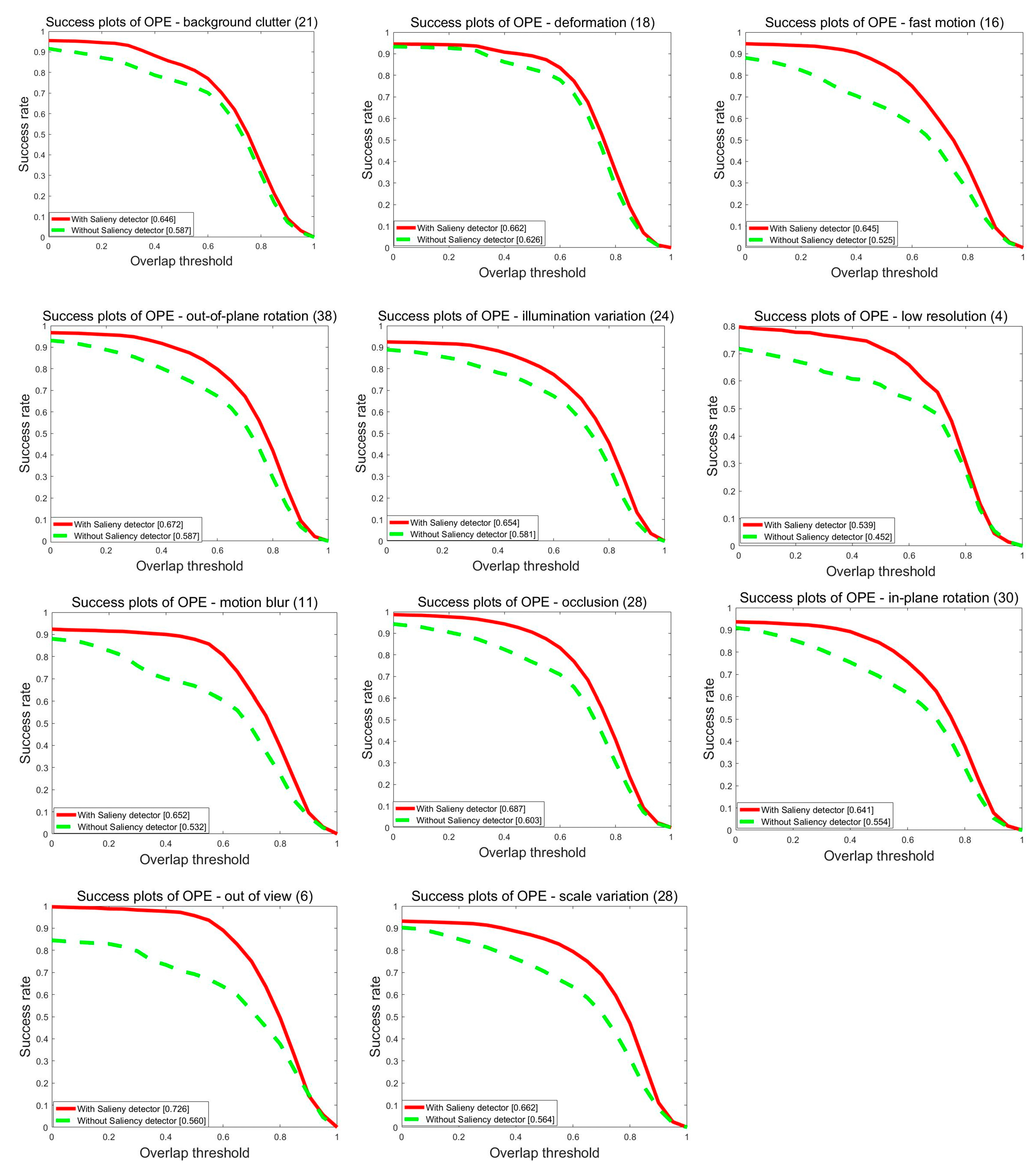

- The estimation module determines whether the previous tracking result should be replaced and whether the redetection process should start. To balance tracking speed and performance, we employ a saliency detector to redetect the tracked object; it is fast and effective for object detection in a limited region. This redetection module can relocate the object after it reappears in the image.

2. Related Work

2.1. Correlation-Filter-Based Tracking

2.2. Tracking-by-Detection

2.3. Long-Term Tracking

3. Method

3.1. Framework

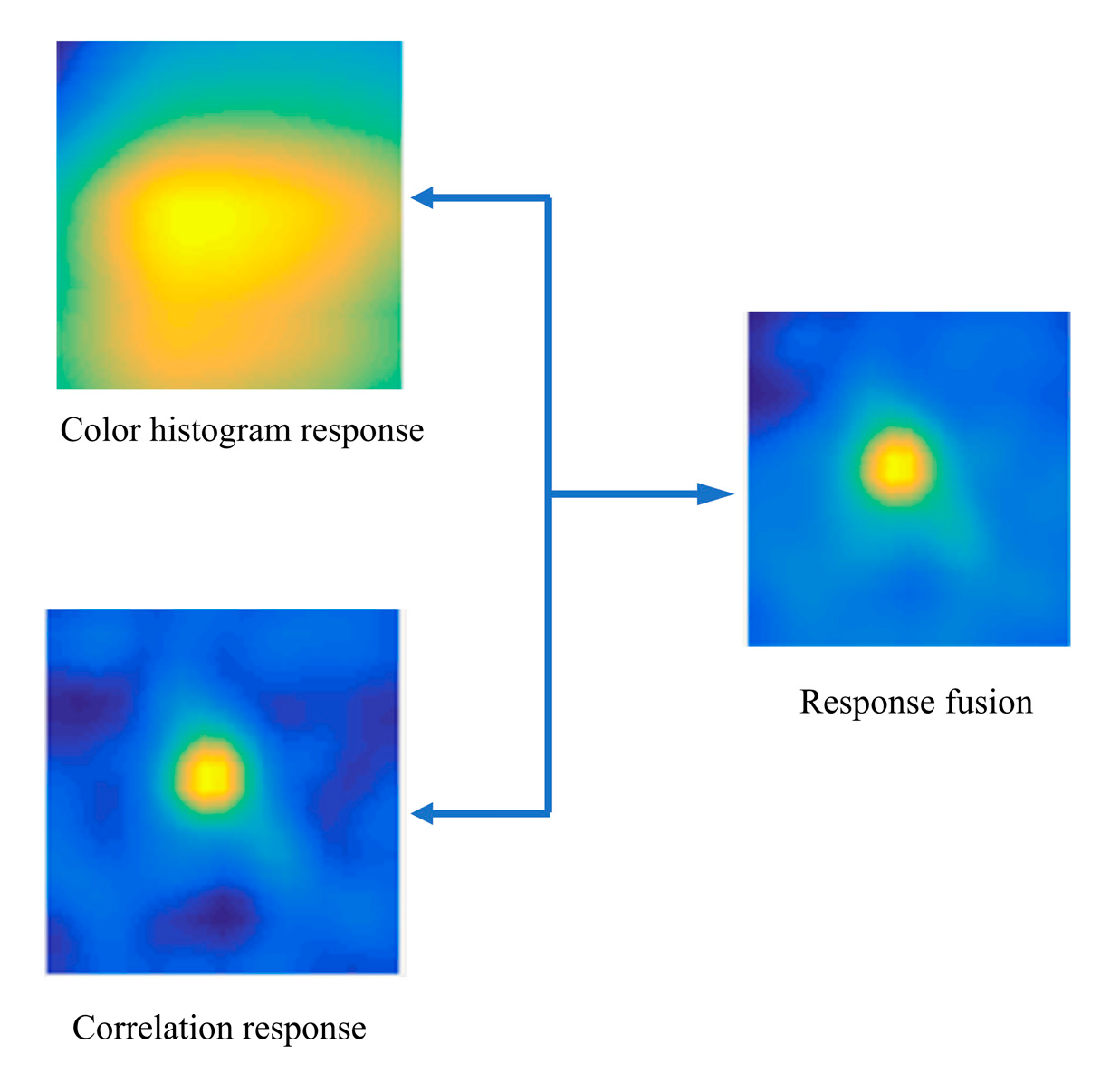

3.2. Multifeature Fusion

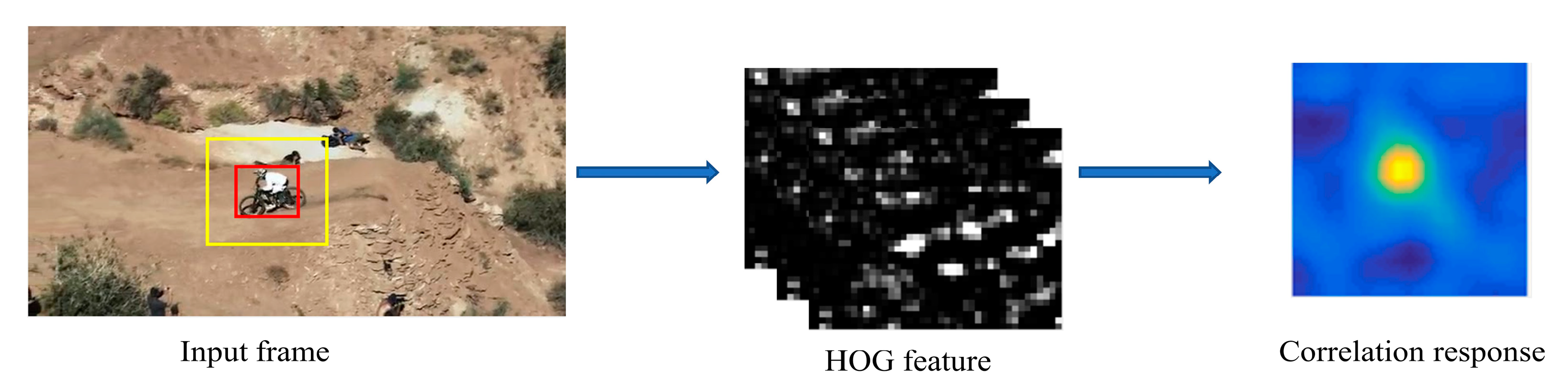

- Correlation Filter Response with HOG Features

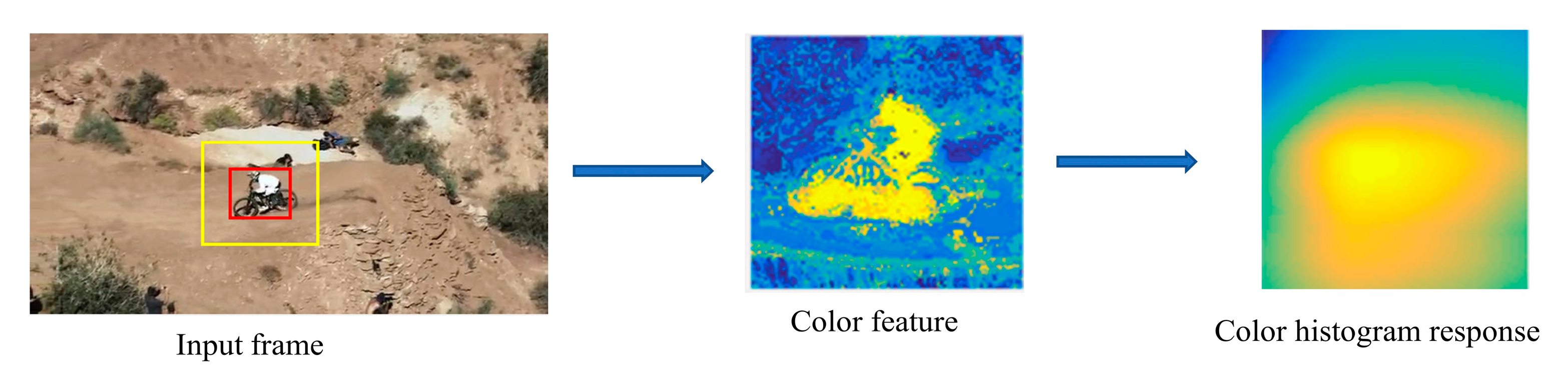

- Color Histogram Response

- Response Fusion
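As a rough illustration of response fusion, the sketch below merges a correlation filter response map and a color histogram response map with a convex combination and takes the peak of the fused map as the target position. This is a minimal sketch under stated assumptions, not the paper's Equation (12): the function name `fuse_responses` and the weight `hist_weight` (with its default of 0.3) are hypothetical, and both maps are assumed to be defined on the same grid.

```python
import numpy as np


def fuse_responses(cf_response, hist_response, hist_weight=0.3):
    """Linearly merge two response maps and locate the fused peak.

    hist_weight is an assumed merge factor in [0, 1]; it is not taken
    from the paper.
    """
    cf = np.asarray(cf_response, dtype=float)
    hist = np.asarray(hist_response, dtype=float)
    if cf.shape != hist.shape:
        raise ValueError("response maps must have the same shape")
    if not 0.0 <= hist_weight <= 1.0:
        raise ValueError("hist_weight must lie in [0, 1]")

    # Convex combination of the two responses.
    fused = (1.0 - hist_weight) * cf + hist_weight * hist

    # The maximum of the fused map gives the estimated (row, col) position.
    row, col = np.unravel_index(np.argmax(fused), fused.shape)
    return fused, (int(row), int(col))
```

With a convex combination, a location that scores moderately well in both maps can outrank a location that scores highly in only one of them, which is the usual motivation for merging complementary cues.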

3.3. Redetection Module

3.3.1. Reliability Estimation
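The peak-to-sidelobe ratio (PSR) used for reliability estimation can be illustrated with the commonly used definition: the peak value minus the sidelobe mean, divided by the sidelobe standard deviation, where the sidelobe is the response map excluding a small window around the peak. The sketch below follows that common definition and may differ in detail from the paper's Equation (13); the function name and the `exclude` half-width are assumptions.

```python
import numpy as np


def peak_to_sidelobe_ratio(response, exclude=5):
    """Return (peak - mean(sidelobe)) / std(sidelobe) for a 2-D response map.

    exclude is the assumed half-width of the square window around the peak
    that is left out of the sidelobe (5 gives an 11 x 11 window).
    """
    response = np.asarray(response, dtype=float)
    row, col = np.unravel_index(np.argmax(response), response.shape)
    peak = response[row, col]

    # Mask out the window around the peak; everything else is the sidelobe.
    mask = np.ones(response.shape, dtype=bool)
    mask[max(row - exclude, 0):row + exclude + 1,
         max(col - exclude, 0):col + exclude + 1] = False

    sidelobe = response[mask]
    if sidelobe.size == 0:
        raise ValueError("response map is too small for the exclusion window")

    # A small constant guards against division by zero on flat sidelobes.
    return (peak - sidelobe.mean()) / (sidelobe.std() + 1e-12)
```

A sharp, isolated peak yields a high PSR, while a flat or multi-modal response (for example under occlusion) yields a low one, which is why the value is useful as a confidence signal.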

3.3.2. Saliency Detection and Candidates Sort

3.4. Algorithm Description

Algorithm 1: Long-term tracker with multiple features and saliency redetection (MSLT).

Input: the initial position, and the tracked object position and scale of the (t−1)th frame.

Output: the predicted object position and scale of the tth frame.

Repeat:

1. Extract features and compute the HOG and color feature maps in the search region of the tth frame.
2. Compute the correlation filter response and the color histogram response.
3. Compute the reliability estimates, namely the PSR value and the color score, using Equations (13) and (15).
4. If the conditions on the PSR value and the color score both hold (the current result is judged unreliable), then:
   - Start saliency detection in a larger search region and obtain N salient candidates.
   - If N = 1, take this candidate as the salient object. Otherwise, compute the correlations between the salient candidates and the original correlation filter template using Equation (17), sort the responses of all candidate boxes, and take the candidate with the maximum response as the final salient object.
   - Compute the center coordinates of the salient object using Equations (19) and (20), and estimate the corresponding scale.

   Otherwise:
   - Fuse the responses using Equation (12) and take the location of the maximum fused response as the central position of the target; then estimate the scale of the tracked object to obtain the final tracking result.
5. Update the correlation filter model and the color histogram using Equations (3), (4), (9), and (10).
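The branching in step 4 of Algorithm 1 can be sketched as a small decision function. This is an illustrative sketch only: the threshold comparisons (`psr < psr_threshold` and `color_score < color_threshold`), the fallback when no candidate is found, and the use of the box center as the redetected position are assumptions, since the exact conditions and Equations (17), (19), and (20) are not reproduced here. All function and parameter names are hypothetical.

```python
from typing import Callable, Iterable, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)
Point = Tuple[float, float]


def box_center(box: Box) -> Point:
    """Center of an axis-aligned box (assumed stand-in for Eqs. (19)-(20))."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def locate_target(
    psr: float,
    color_score: float,
    fused_peak: Point,
    detect_candidates: Callable[[], Iterable[Box]],
    score_candidate: Callable[[Box], float],
    psr_threshold: float,
    color_threshold: float,
) -> Tuple[Point, bool]:
    """Return (position, redetected) for the current frame."""
    # Assumed reliability test: both scores fall below their thresholds.
    unreliable = psr < psr_threshold and color_score < color_threshold
    if not unreliable:
        # Reliable: keep the peak of the fused response.
        return fused_peak, False

    candidates = list(detect_candidates())
    if not candidates:
        # Assumed fallback, not stated in the source: keep the tracker output.
        return fused_peak, False
    if len(candidates) == 1:
        best = candidates[0]
    else:
        # Rank candidates by their response against the filter template.
        best = max(candidates, key=score_candidate)
    return box_center(best), True
```

Passing the saliency detector and the candidate scorer as callables keeps the sketch independent of any particular detector, and the expensive detection only runs on frames judged unreliable.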

4. Experiments

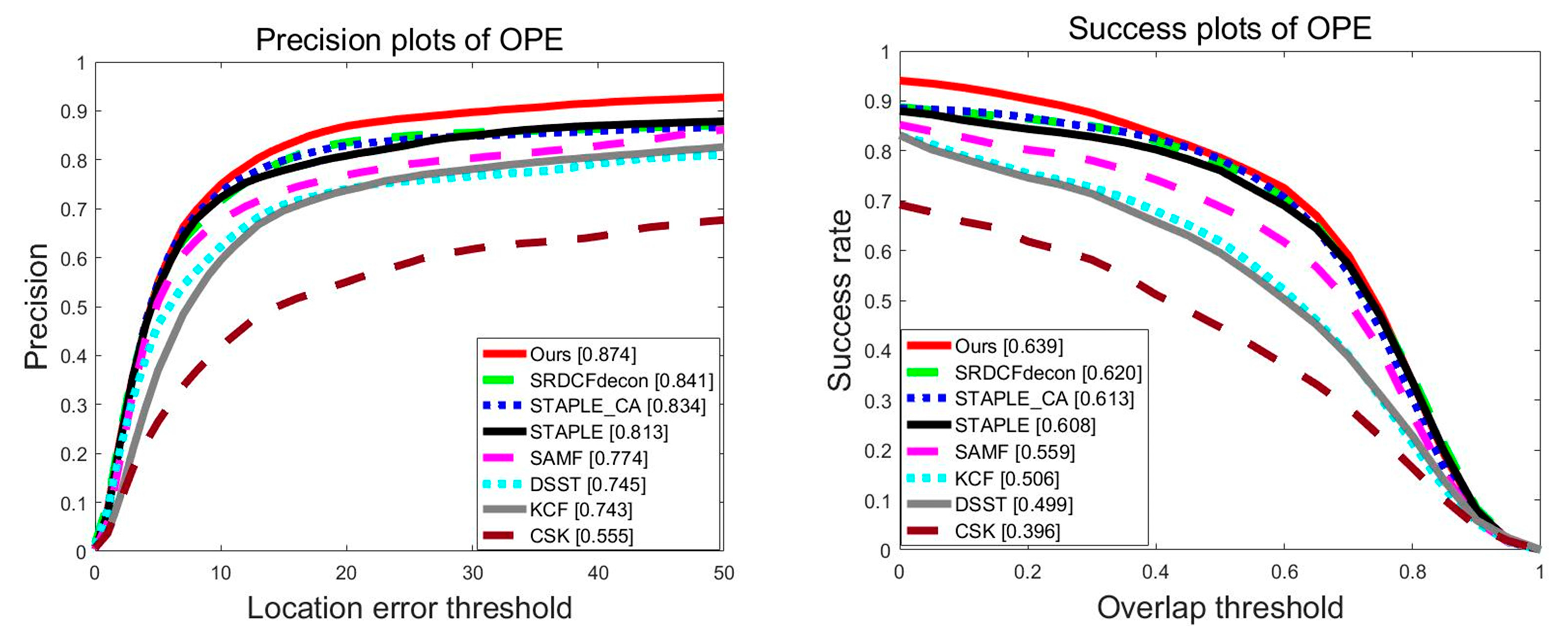

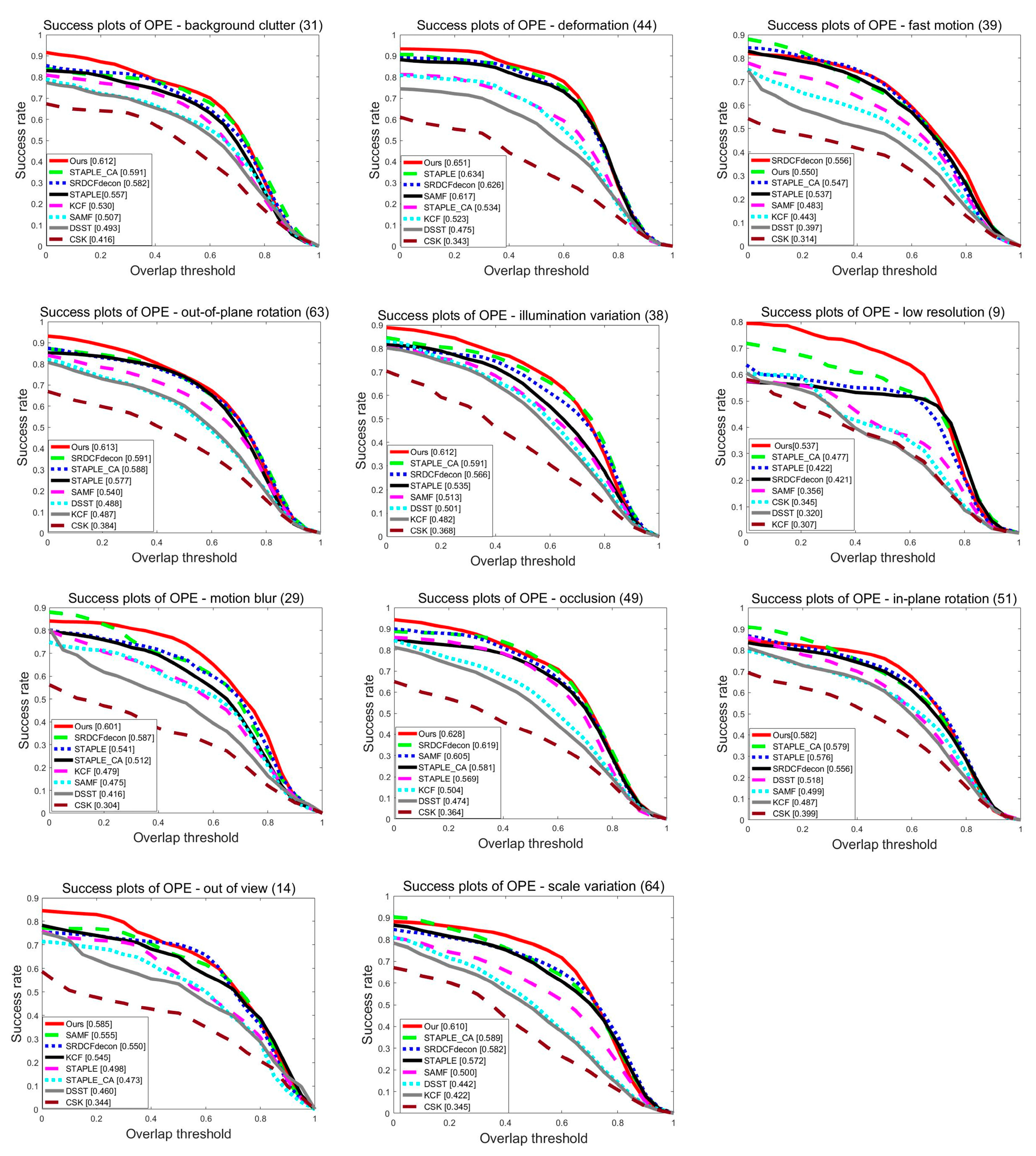

4.1. OTB2015 Dataset

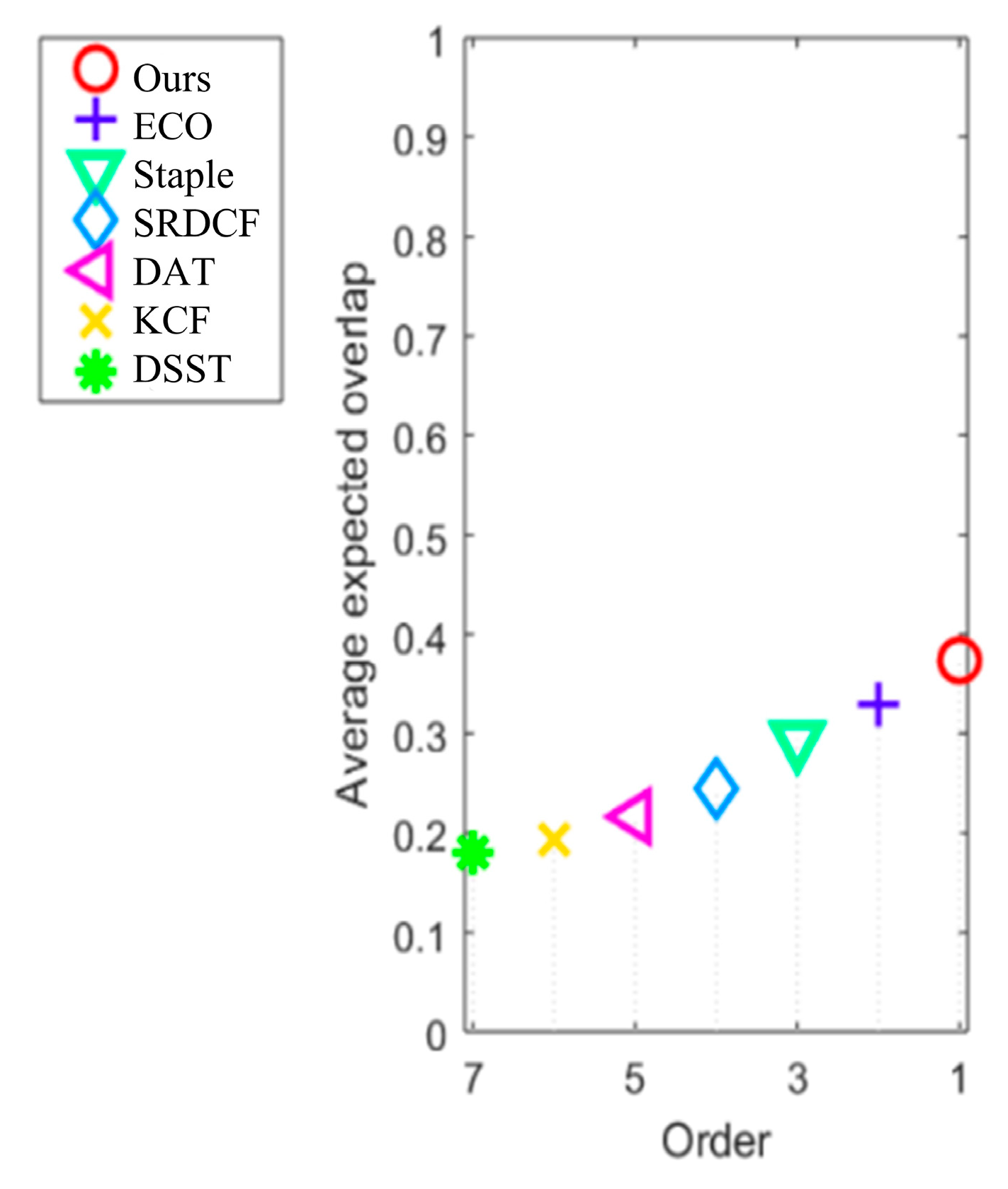

4.2. VOT2016 Dataset

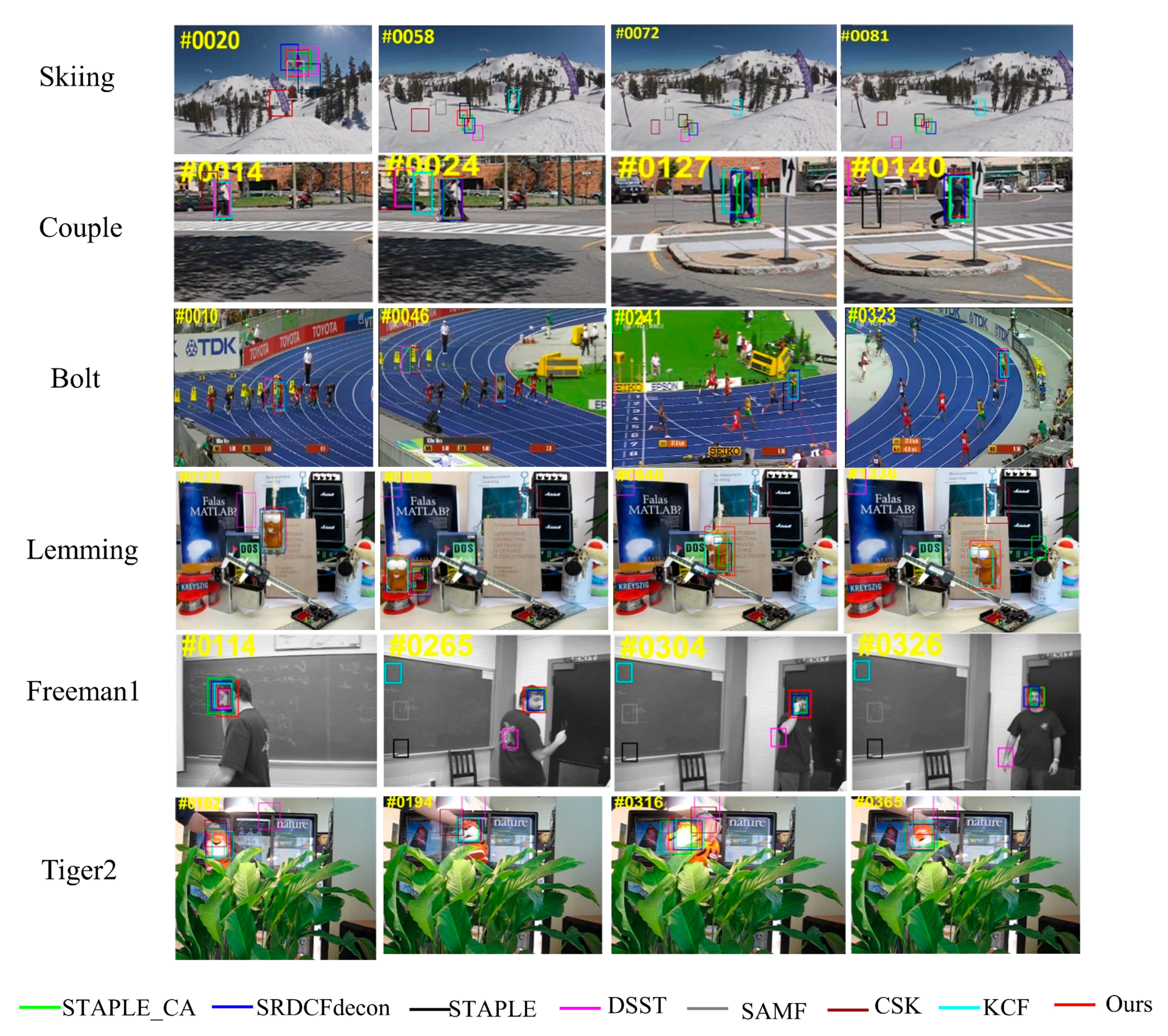

4.3. Qualitative Evaluation

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Camera Motion | Empty | Illumination Change | Motion Change | Occlusion | Size Change | Mean | Weighted Mean | Pooled |
|---|---|---|---|---|---|---|---|---|---|
| MSLT | 0.5757 | 0.5943 | 0.6975 | 0.5078 | 0.5253 | 0.4232 | 0.5338 | 0.5484 | 0.5524 |
| ECO | 0.5667 | 0.5748 | 0.7084 | 0.4997 | 0.5019 | 0.4631 | 0.5423 | 0.5395 | 0.5499 |
| Staple | 0.5513 | 0.5833 | 0.7091 | 0.5051 | 0.5110 | 0.4328 | 0.5491 | 0.5403 | 0.5400 |
| SRDCF | 0.5517 | 0.5785 | 0.6802 | 0.4846 | 0.4750 | 0.4043 | 0.5290 | 0.5258 | 0.5335 |
| DSST | 0.5306 | 0.5794 | 0.6710 | 0.4834 | 0.5036 | 0.4060 | 0.5290 | 0.5245 | 0.5318 |
| DAT | 0.4608 | 0.4978 | 0.4350 | 0.4632 | 0.3998 | 0.4507 | 0.4512 | 0.4518 | 0.4687 |
| KCF | 0.4937 | 0.5496 | 0.6872 | 0.4291 | 0.4700 | 0.4301 | 0.5058 | 0.4916 | 0.4936 |

| Method | Camera Motion | Empty | Illumination Change | Motion Change | Occlusion | Size Change | Mean | Weighted Mean | Pooled |
|---|---|---|---|---|---|---|---|---|---|
| MSLT | 15.000 | 5.000 | 1.000 | 17.000 | 10.000 | 17.000 | 10.833 | 11.673 | 43.000 |
| ECO | 15.000 | 8.000 | 2.000 | 18.000 | 13.000 | 10.000 | 11.000 | 12.582 | 44.000 |
| Staple | 34.000 | 13.000 | 7.000 | 35.000 | 15.000 | 24.000 | 21.333 | 23.895 | 81.000 |
| SRDCF | 43.000 | 16.000 | 8.000 | 36.000 | 21.000 | 22.000 | 24.333 | 28.317 | 90.000 |
| DSST | 66.000 | 31.000 | 6.000 | 60.000 | 33.000 | 22.000 | 36.333 | 44.813 | 151.000 |
| DAT | 36.000 | 25.000 | 6.000 | 30.000 | 22.000 | 22.000 | 25.000 | 28.353 | 103.000 |
| KCF | 55.000 | 24.000 | 8.000 | 52.000 | 31.000 | 20.000 | 31.667 | 38.082 | 122.000 |

| Method | All |
|---|---|
| MSLT | 0.3737 |
| CCOT | 0.3293 |
| Staple | 0.2941 |
| SRDCF | 0.2458 |
| DAT | 0.2116 |
| KCF | 0.1935 |
| DSST | 0.1805 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, L.; Feng, T.; Fu, Y. Learning Multifeature Correlation Filter and Saliency Redetection for Long-Term Object Tracking. Symmetry 2022, 14, 911. https://doi.org/10.3390/sym14050911
