An Embedding Skeleton for Fish Detection and Marine Organisms Recognition

Abstract

1. Introduction

1. Rapid and precise detection of marine organisms under various environmental conditions by the proposed YOLOv4-embedding structure.

2. We compared the proposed method with EfficientDet [28], discussed the fish detection results to verify its applicability and effectiveness, and recommended a method for marine organism detection.

2. Related Work on Fish and Marine Organism Detection

3. Architecture Design of YOLOv4-Embedding

3.1. Data and Relevant Methods

3.2. Detection Procedure

- Step 1: Feed the marine organism image into the network.

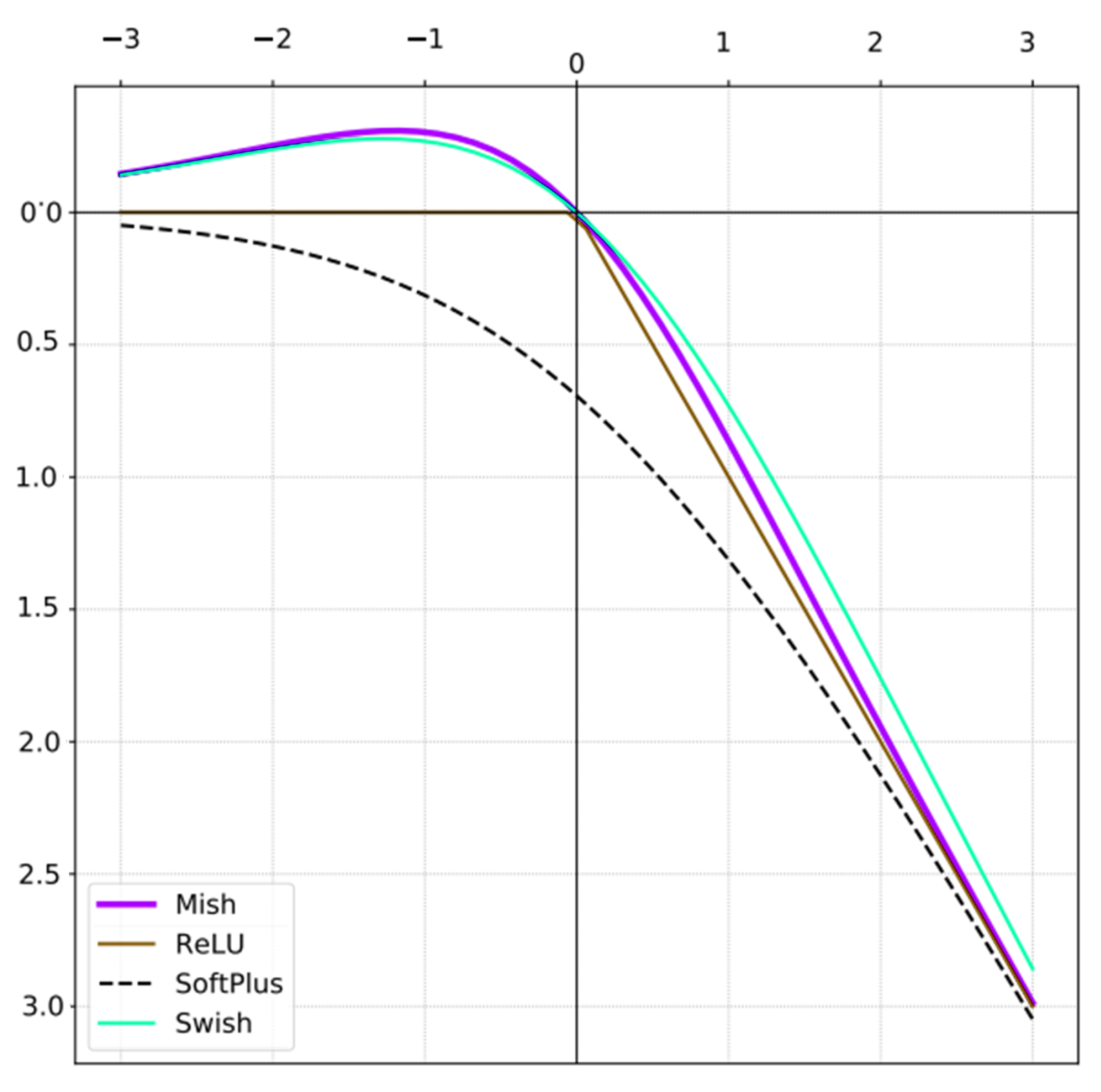

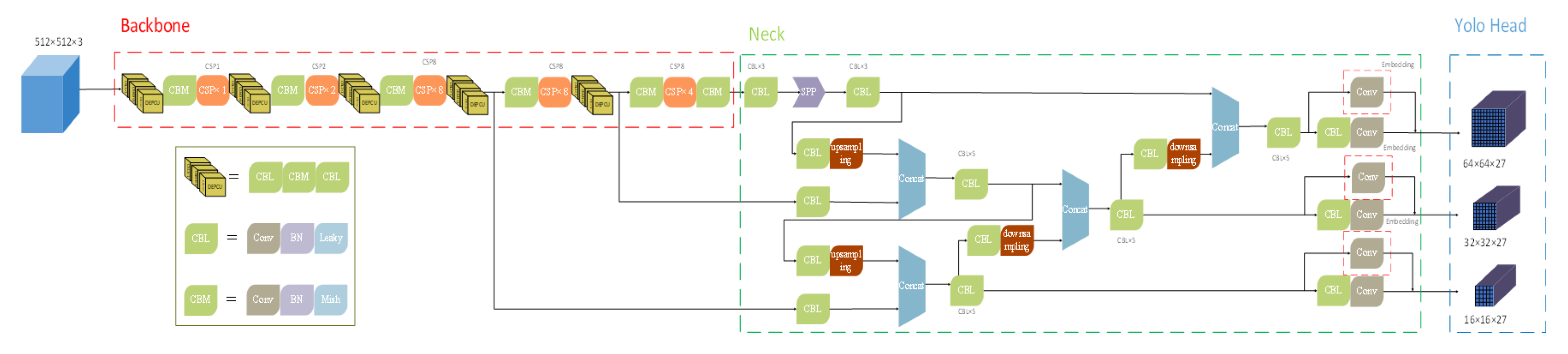

- Step 2: The CSPDarknet53 backbone retains the Darknet53 skeleton and adopts the CSP (Cross Stage Partial) structure. Image features are extracted by convolution modules that use the Leaky ReLU and Mish activation functions.

- Step 3: The SPP (Spatial Pyramid Pooling) [37] module and the FPN (Feature Pyramid Network) + PAN (Path Aggregation Network) structure are applied to the features extracted by the backbone. PAN combines path aggregation with the feature pyramid technique to ease the propagation of low-level information to the top levels [38]. Multi-scale prediction is performed for three sizes of targets: small, medium, and large.
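To make the two activation functions named in Step 2 concrete (they are also the activations of the CBM and CBL modules described in Step 5), here is a minimal scalar sketch in Python. Mish is defined as x · tanh(softplus(x)). The Leaky ReLU negative slope of 0.1 is an assumption, since the text does not state it; 0.1 is the value used by Darknet's leaky activation.

```python
import math


def softplus(x: float) -> float:
    """softplus(x) = ln(1 + e^x); for x > 20 it is approximated by x to keep exp() small."""
    return x if x > 20.0 else math.log1p(math.exp(x))


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x)). Smooth, and slightly negative for x < 0."""
    return x * math.tanh(softplus(x))


def leaky_relu(x: float, negative_slope: float = 0.1) -> float:
    """Leaky ReLU: passes positive inputs, scales non-positive inputs by negative_slope (assumed 0.1)."""
    return x if x > 0 else negative_slope * x


for v in (-2.0, 0.0, 2.0):
    print(f"x={v:+.1f}  mish={mish(v):+.4f}  leaky={leaky_relu(v):+.4f}")
```

The practical difference is on the negative side: Leaky ReLU is a straight line with a fixed slope, whereas Mish lets small negative values through smoothly and tends to zero for large negative inputs.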

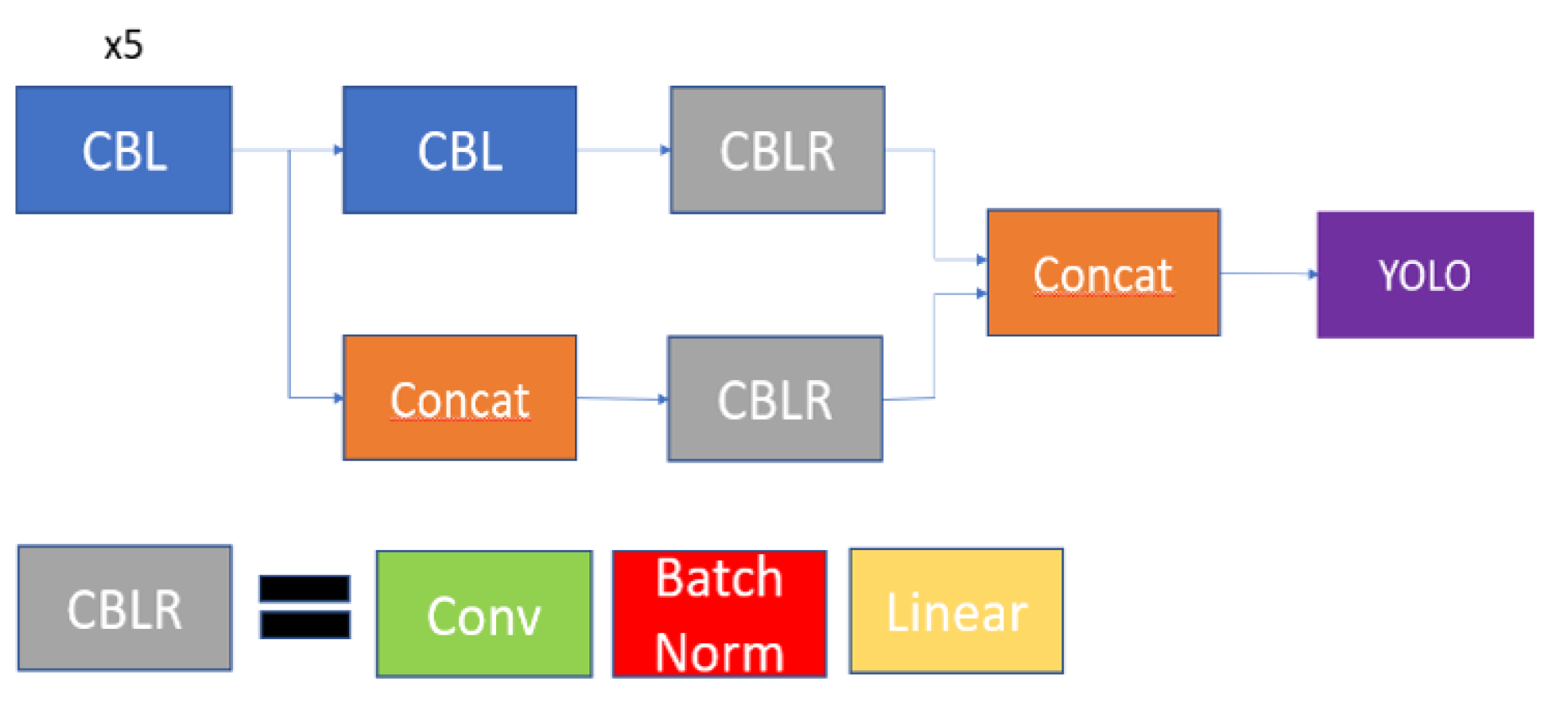
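The SPP module from Step 3 can be sketched as follows, assuming the YOLOv4-style variant: max pooling with stride 1 and "same" padding at several kernel sizes, with the pooled maps concatenated to the input along the channel axis. The kernel sizes 5, 9, and 13 are an assumption taken from the common YOLOv4 configuration. The sketch handles a single channel stored as a nested list; with C input channels, the output would have 4C channels.

```python
from typing import List, Sequence

Matrix = List[List[float]]  # one channel, indexed [row][col]


def max_pool_same(fmap: Matrix, kernel: int) -> Matrix:
    """Max pooling with stride 1 and padding kernel // 2 (odd kernel): output keeps the input size.

    Out-of-bounds positions are skipped, which is equivalent to padding with -inf.
    """
    h, w = len(fmap), len(fmap[0])
    r = kernel // 2
    out: Matrix = []
    for i in range(h):
        row = []
        for j in range(w):
            # Collect the kernel window around (i, j), clipped to the map borders.
            window = [
                fmap[y][x]
                for y in range(max(0, i - r), min(h, i + r + 1))
                for x in range(max(0, j - r), min(w, j + r + 1))
            ]
            row.append(max(window))
        out.append(row)
    return out


def spp(fmap: Matrix, kernels: Sequence[int] = (5, 9, 13)) -> List[Matrix]:
    """SPP: the input plus one max-pooled copy per kernel size, stacked as channels."""
    return [fmap] + [max_pool_same(fmap, k) for k in kernels]


fmap = [[float(6 * i + j) for j in range(6)] for i in range(6)]  # toy 6x6 feature map
channels = spp(fmap)
print(len(channels), "channels of size", len(channels[0]), "x", len(channels[0][0]))
```

Because the stride is 1 and the padding is kernel // 2, every pooled map keeps the spatial size of its input. This is what makes the channel-wise concatenation possible, while the larger kernels aggregate context from progressively wider regions.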

- Step 4: A linear activation function and a convolution layer are embedded at the end of the YOLOv4-embedding neck, forming Conv + Batch Normalization + Linear (CBLR) blocks in the network. The structure is shown in Figure 3. Concat denotes the concatenation of tensors along the channel dimension, which combines the features of the two CBLR blocks. After convolution and batch normalization, the data take new, normalized values, which are then passed to the linear activation function.

- Step 5: The YOLOv4-embedding head performs prediction and produces the final detection result. The concrete building blocks are as follows. The CSPDarknet53 backbone incorporates 5 CSP (Cross Stage Partial connections) modules, 11 Convolutional + Batch Normalization + Mish (CBM) modules, and 10 Convolutional + Batch Normalization + Leaky (CBL) modules. The CBM module performs convolution followed by Batch Normalization and the Mish activation function, whereas the CBL module performs convolution followed by Batch Normalization and the Leaky ReLU activation function.
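The CBLR block and the Concat operation from Step 4 can be sketched in plain Python as below. This is an illustration under stated assumptions, not the authors' implementation: the convolution is assumed to be 1×1 (the text does not give the kernel size), batch normalization uses the per-channel statistics of the single input (training-mode style) with γ = 1 and β = 0, and the feature map is stored as a list of channels, each a flattened list of pixels. The helper names `conv1x1`, `batch_norm`, `cblr`, and `concat` are hypothetical.

```python
import math
from typing import List, Sequence

# Hypothetical layout: feature[channel][pixel], pixels flattened row-major.
FeatureMap = List[List[float]]


def conv1x1(x: FeatureMap, weights: Sequence[Sequence[float]], bias: Sequence[float]) -> FeatureMap:
    """1x1 convolution: each output channel is a weighted sum of the input channels at every pixel."""
    n_pix = len(x[0])
    return [
        [sum(w[c] * x[c][p] for c in range(len(x))) + b for p in range(n_pix)]
        for w, b in zip(weights, bias)
    ]


def batch_norm(x: FeatureMap, gamma: float = 1.0, beta: float = 0.0, eps: float = 1e-5) -> FeatureMap:
    """Normalize each channel with its own mean and (biased) variance, then scale and shift."""
    out = []
    for ch in x:
        mean = sum(ch) / len(ch)
        var = sum((v - mean) ** 2 for v in ch) / len(ch)
        out.append([gamma * (v - mean) / math.sqrt(var + eps) + beta for v in ch])
    return out


def linear(x: FeatureMap) -> FeatureMap:
    """Linear (identity) activation: the normalized values pass through unchanged."""
    return [list(ch) for ch in x]


def cblr(x: FeatureMap, weights: Sequence[Sequence[float]], bias: Sequence[float]) -> FeatureMap:
    """Conv + Batch Normalization + Linear activation (CBLR)."""
    return linear(batch_norm(conv1x1(x, weights, bias)))


def concat(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    """Concatenate along the channel axis: channel counts add, spatial size is unchanged."""
    if len(a[0]) != len(b[0]):
        raise ValueError("feature maps must have the same spatial size")
    return a + b


# Two CBLR branches on the same 2-channel, 2x2 input, then concatenated.
x = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.0, -0.5, 1.0]]
branch_a = cblr(x, weights=[[1.0, 0.0], [0.5, 0.5]], bias=[0.0, 0.1])  # 2 output channels
branch_b = cblr(x, weights=[[0.0, 1.0]], bias=[0.0])                   # 1 output channel
y = concat(branch_a, branch_b)
print(len(y), "channels,", len(y[0]), "pixels each")
```

In a framework such as PyTorch, the same block would typically be written as `nn.Sequential(nn.Conv2d(...), nn.BatchNorm2d(...), nn.Identity())`, with `torch.cat(..., dim=1)` for the concatenation.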

4. Experimental Results

4.1. Experimental Setup

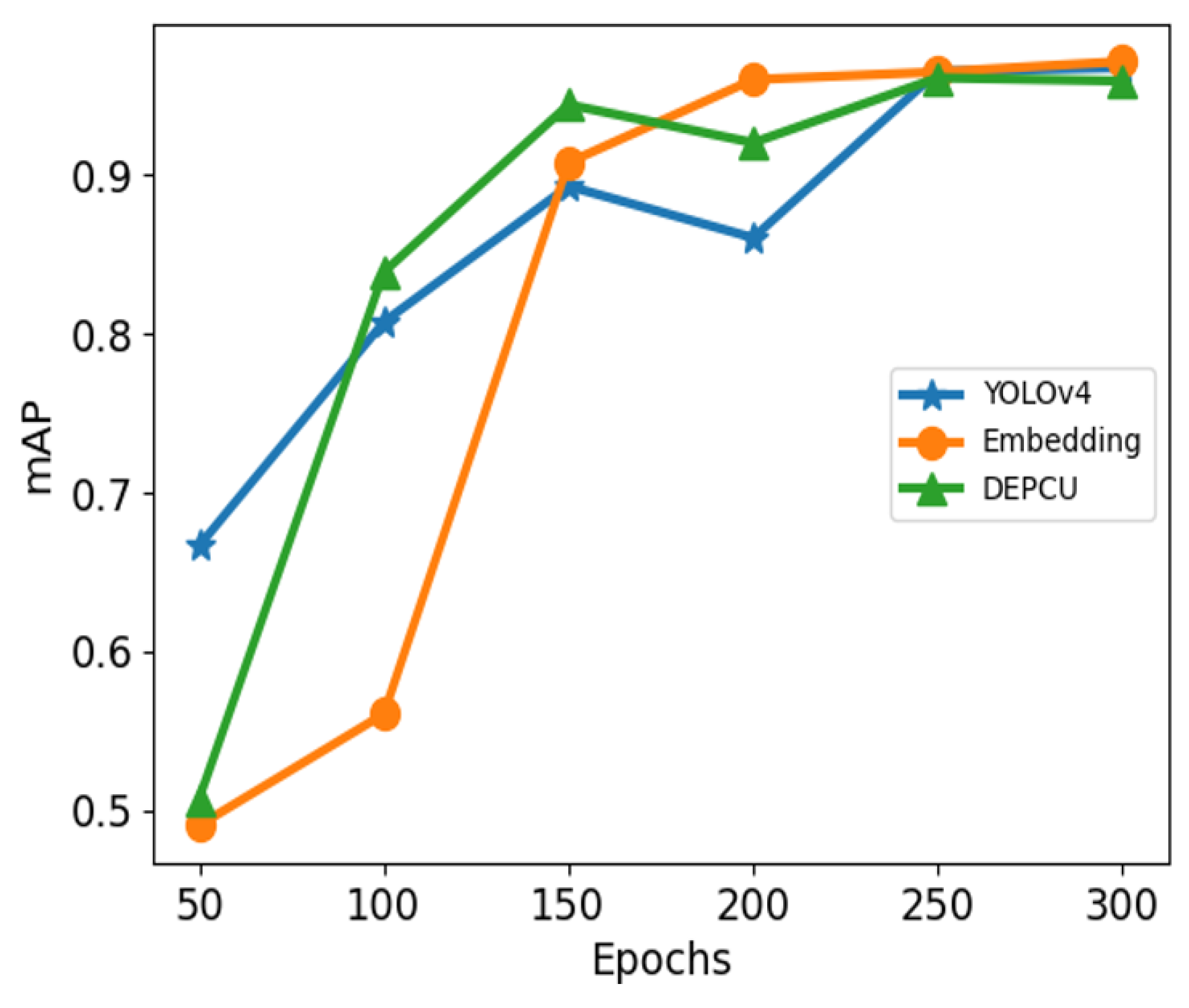

4.2. Evaluation of Trained Models

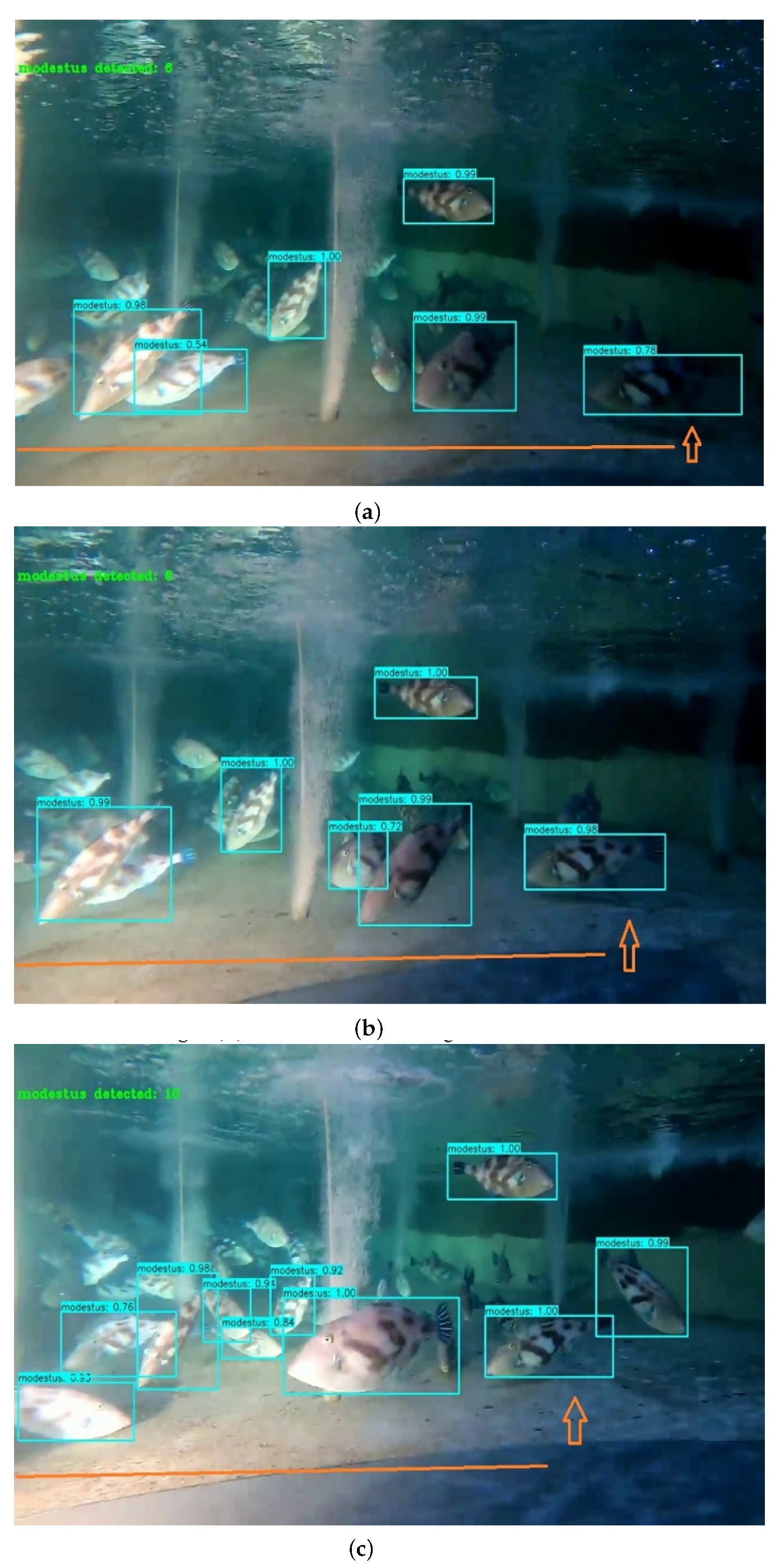

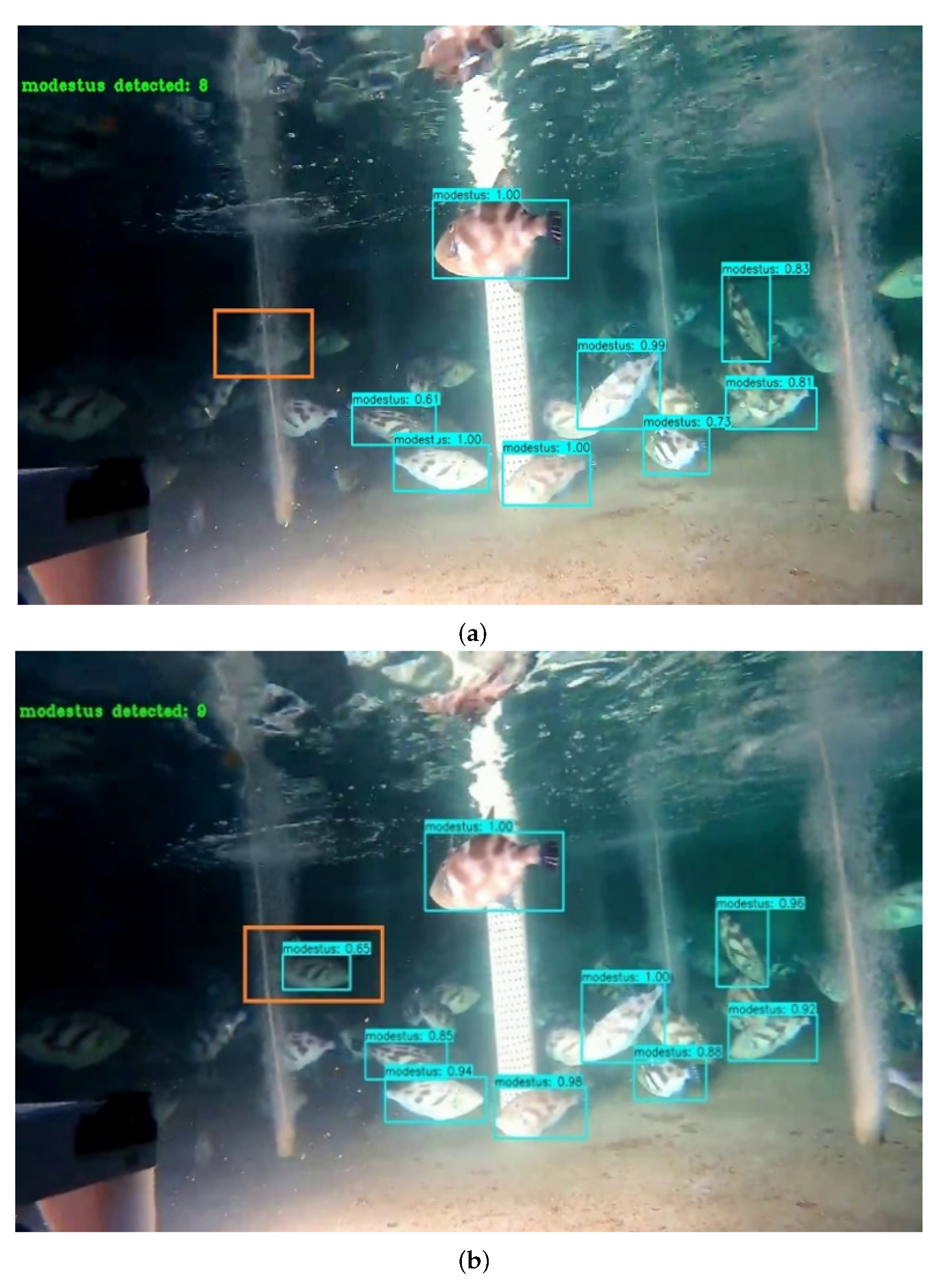

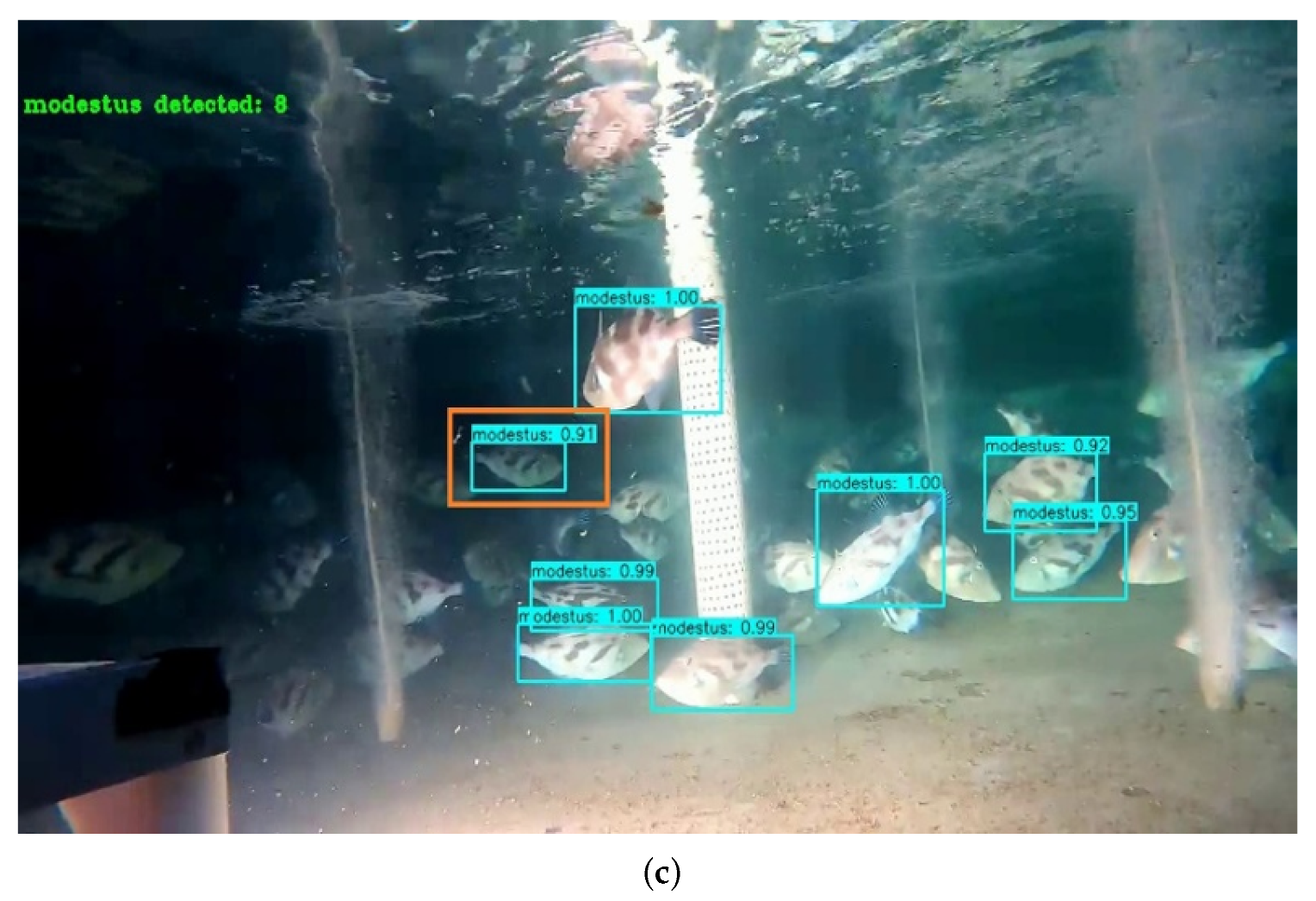

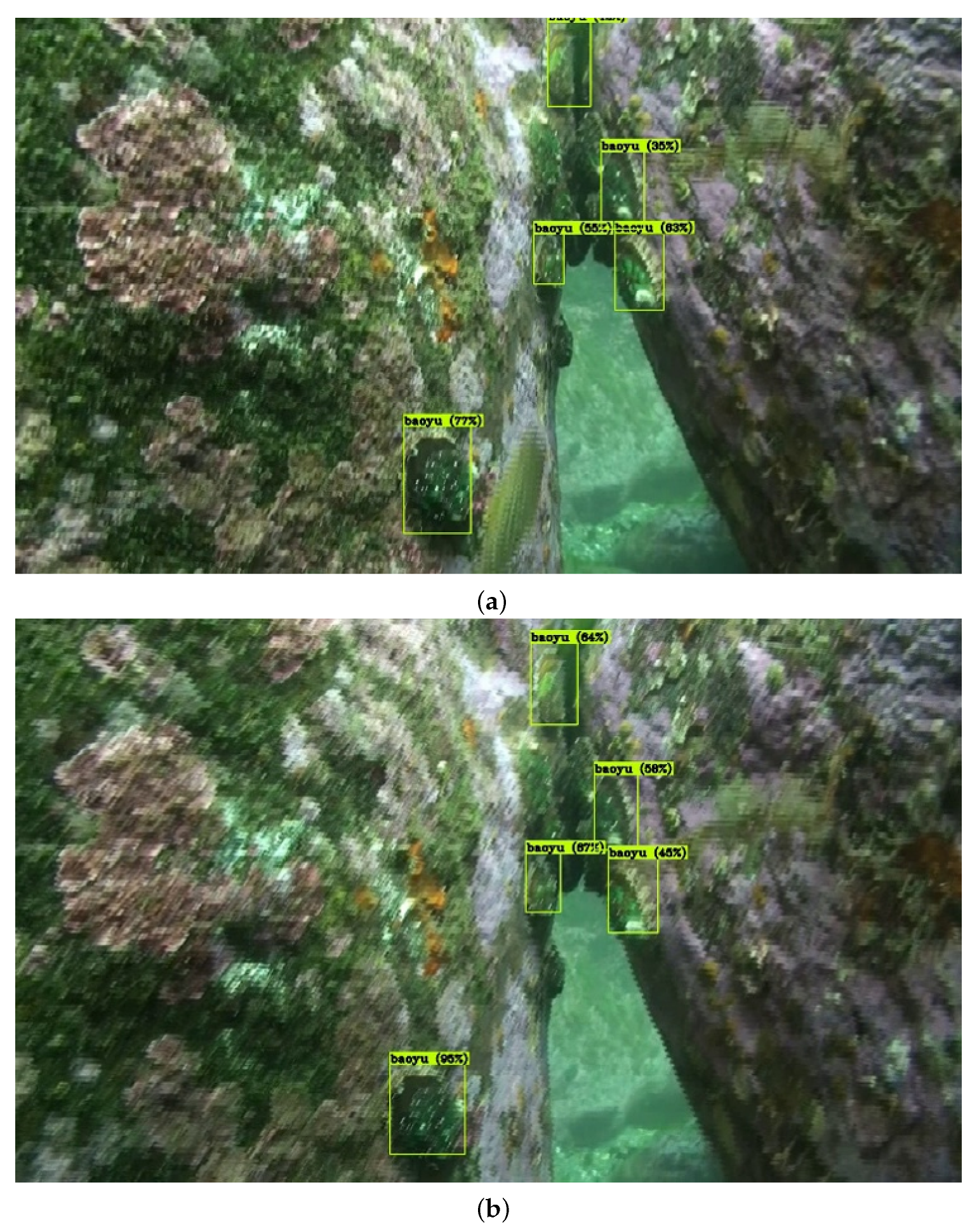

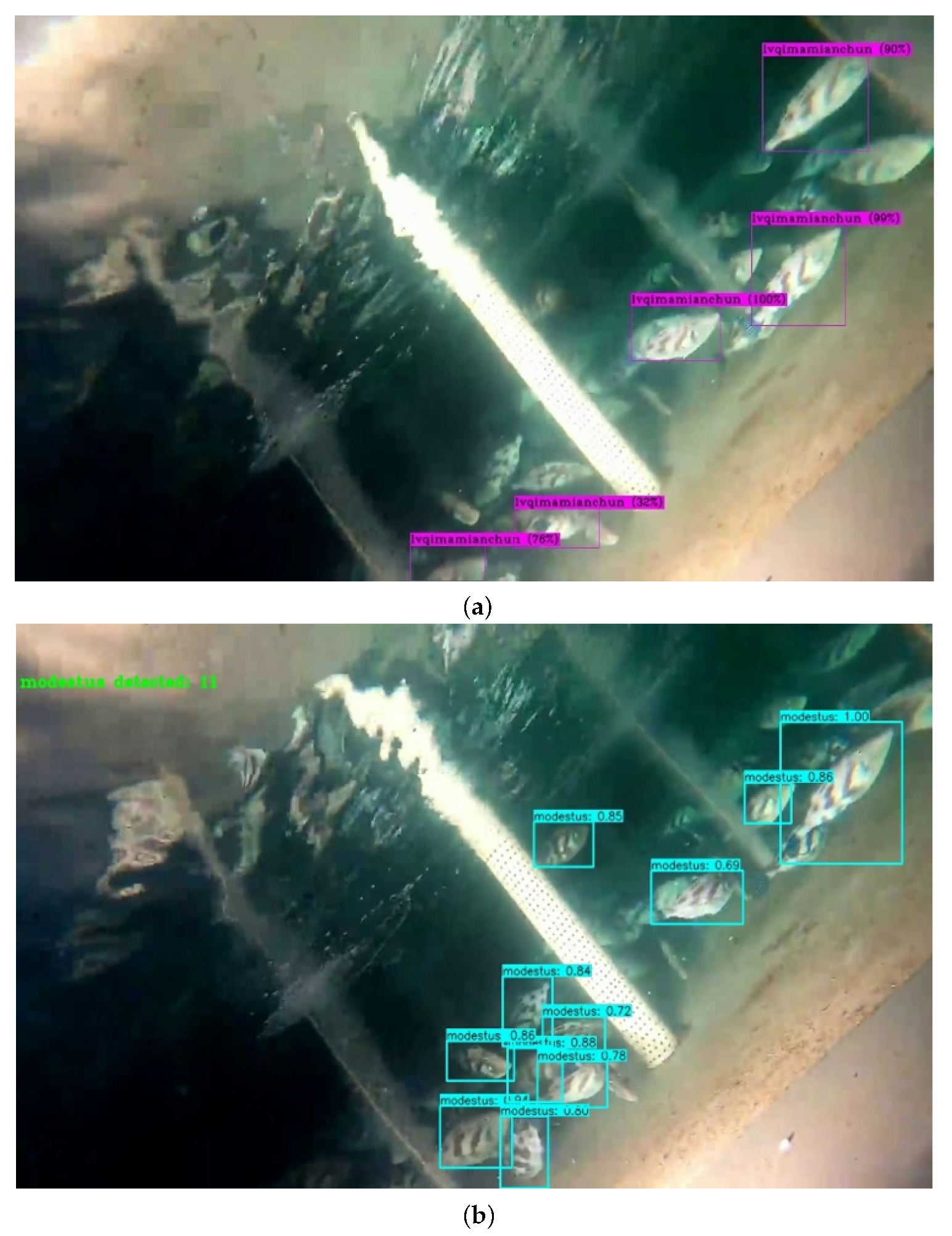

4.3. Detection Results

5. Discussion

- Training comprises 30,938 steps.

- A polynomial-decay learning-rate schedule is adopted, with an initial learning rate of 0.0013.

- The number of iteration steps is 1000.
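The polynomial-decay schedule listed above can be written as lr(t) = lr_0 · (1 − t/T)^p. The sketch below uses lr_0 = 0.0013 and T = 30,938 from the text. The power p = 0.9 is an assumption, since the exponent is not reported, and any warm-up phase is not modelled.

```python
def poly_lr(step: int, base_lr: float = 0.0013, max_steps: int = 30_938, power: float = 0.9) -> float:
    """Polynomial decay: lr = base_lr * (1 - step / max_steps) ** power.

    base_lr and max_steps follow the text; power = 0.9 is an assumed value.
    """
    step = min(max(step, 0), max_steps)  # clamp so the base of the power stays in [0, 1]
    return base_lr * (1.0 - step / max_steps) ** power


for t in (0, 10_000, 20_000, 30_938):
    print(f"step {t:>6}: lr = {poly_lr(t):.6f}")
```

The learning rate starts at 0.0013 and decays smoothly to zero at the final step. A power below 1 keeps it relatively high for most of training and drops it faster near the end.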

5.1. Comparison of YOLOv4 and YOLOv4-Embedding in Marine Organism Detection

5.2. Comparison of YOLOv4 and YOLOv4-Embedding in Marine Organism Detection

6. Conclusions and Future Works

- (i) We identified a suitable deep learning algorithm for marine organism detection in the farming pool. This paper analyzed the structural characteristics of the YOLOv4-embedding neural network and the key issues of detection in the farming pool. In the network, CSPDarknet53 deepens the network, so that deeper marine organism features can be collected and background interference is reduced, and a linear activation and a convolution layer are embedded at the end of the YOLOv4-embedding neck. The YOLOv4-embedding architecture enlarges the receptive field of the network at a small computational cost. Furthermore, the Conv + Batch Normalization + Linear (CBLR) blocks employed in the network repeatedly extract deeper semantic and positioning information and thus detect marine organisms more accurately. Therefore, accurate detection is achieved even when the sizes of the marine organisms in one image differ greatly.

- (ii) The marine organism detection algorithm based on the YOLOv4-embedding neural network achieves precise detection in the farming pool under different occlusion and illumination conditions and for different maturity levels and breeds, providing accurate breed and maturity information for the intelligent management of marine organisms and for underwater machinery.

- (iii) The YOLOv4-embedding neural network is built to achieve fast recognition of diverse marine organisms. The image features extracted by deep learning and the intermediate data of the CNN once training has stabilized are studied. This paper also discussed the effect of the embedded convolution scheme, the number of training epochs, and the number of samples on training speed and accuracy. In the detection experiments, YOLOv4-embedding achieved high confidence and high mAP. In conclusion, the proposed architecture is suitable for marine organism detection in the farming pool. Future work will mainly focus on obtaining the classification values of marine organisms in the real world, localizing marine organisms, computing the position of the picking point, deploying the marine organism detection model on small terminals, and developing mobile machinery.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Zhao, Z.; Liu, Y.; Sun, X.; Liu, J.; Yang, X.; Zhou, C. Composited FishNet: Fish Detection and Species Recognition From Low-Quality Underwater Videos. IEEE Trans. Image Process. 2021, 30, 4719–4734.

- Yang, L.; Liu, Y.; Yu, H.; Fang, X.; Song, L.; Li, D.; Chen, Y. Computer vision models in intelligent aquaculture with emphasis on fish detection and behavior analysis: A review. Arch. Comput. Methods Eng. 2021, 28, 2785–2816.

- Kim, S.; Jeong, M.; Ko, B.C. Self-supervised keypoint detection based on multi-layer random forest regressor. IEEE Access 2021, 9, 40850–40859.

- Chen, L.; Liu, Z.; Tong, L.; Jiang, Z.; Wang, S.; Dong, J.; Zhou, H. Underwater object detection using Invert Multi-Class Adaboost with deep learning. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Zhang, X.; Zhang, L.; Lou, X. A Raw Image-Based End-to-End Object Detection Accelerator Using HOG Features. IEEE Trans. Circuits Syst. I Regul. Pap. 2022, 69, 323–333.

- Odaudu, S.N.; Adedokun, E.A.; Salaudeen, A.T.; Marshall, F.F.; Ibrahim, Y.; Ikpe, D.E. Sequential feature selection using hybridized differential evolution algorithm and haar cascade for object detection framework. Covenant J. Inform. Commun. Technol. 2020, 8, 2354–3507.

- Smotherman, H.; Connolly, A.J.; Kalmbach, J.B.; Portillo, S.K.; Bektesevic, D.; Eggl, S.; Juric, M.; Moeyens, J.; Whidden, P.J. Sifting through the Static: Moving Object Detection in Difference Images. Astron. J. 2021, 162, 245.

- Pramanik, A.; Harshvardhan; Djeddi, C.; Sarkar, S.; Maiti, J. Region proposal and object detection using HoG-based CNN feature map. In Proceedings of the 2020 International Conference on Data Analytics for Business and Industry: Way Towards a Sustainable Economy (ICDABI), Sakheer, Bahrain, 26–27 October 2020; pp. 1–5.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25 (NIPS 2012); 2012; Volume 25. Available online: https://www.researchgate.net/publication/319770183_Imagenet_classification_with_deep_convolutional_neural_networks (accessed on 1 February 2022).

- He, T.; Zhang, Z.; Zhang, H.; Zhang, Z.; Xie, J.; Li, M. Bag of tricks for image classification with convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 558–567.

- Duan, K.; Xie, L.; Qi, H.; Bai, S.; Huang, Q.; Tian, Q. Corner proposal network for anchor-free, two-stage object detection. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 399–416.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning; 2015; pp. 448–456. Available online: https://proceedings.mlr.press/v37/ioffe15.html (accessed on 1 February 2022).

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence; 2017. Available online: https://ojs.aaai.org/index.php/AAAI/article/view/11231 (accessed on 1 February 2022).

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Aziz, A.; Sohail, A.; Fahad, L.; Burhan, M.; Wahab, N.; Khan, A. Channel boosted convolutional neural network for classification of mitotic nuclei using histopathological images. In Proceedings of the 2020 17th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 14–18 January 2020; pp. 277–284.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015); 2015; Volume 28. Available online: https://dl.acm.org/doi/10.5555/2969239.2969250 (accessed on 1 February 2022).

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. IEEE Int. Conf. Comput. Vis. 2017, 42, 318–327.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Niu, B.; Li, G.; Peng, F.; Wu, J.; Zhang, L.; Li, Z. Survey of fish behavior analysis by computer vision. J. Aquac. Res. Dev. 2018, 9. Available online: https://www.researchgate.net/publication/325968943_Survey_of_Fish_Behavior_Analysis_by_Computer_Vision (accessed on 1 February 2022).

- Woźniak, M.; Połap, D. Soft trees with neural components as image-processing technique for archeological excavations. Pers. Ubiquitous Comput. 2020, 24, 363–375.

- Rathi, D.; Jain, S.; Indu, S. Underwater fish species classification using convolutional neural network and deep learning. In Proceedings of the 2017 Ninth International Conference on Advances in Pattern Recognition (ICAPR), Bangalore, India, 27–30 December 2017; pp. 1–6.

- Marini, S.; Fanelli, E.; Sbragaglia, V.; Azzurro, E.; Del Rio Fernandez, J.; Aguzzi, J. Tracking fish abundance by underwater image recognition. Sci. Rep. 2018, 8, 1–12.

- Mandal, R.; Connolly, R.M.; Schlacher, T.A.; Stantic, B. Assessing fish abundance from underwater video using deep neural networks. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–6.

- Konovalov, D.A.; Saleh, A.; Bradley, M.; Sankupellay, M.; Marini, S.; Sheaves, M. Underwater fish detection with weak multi-domain supervision. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

- Uemura, T.; Lu, H.; Kim, H. Marine organisms tracking and recognizing using YOLO. In 2nd EAI International Conference on Robotic Sensor Networks; Springer: Cham, Switzerland, 2020; pp. 53–58.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

| Network | Epoch | | |
|---|---|---|---|
| YOLOv4 | 100 | 0.9676 | 0.625 |
| | 200 | 0.9717 | 0.7209 |
| | 300 | 0.9709 | 0.8268 |
| YOLOv4-embedding | 100 | 0.9673 | 0.7085 |
| | 200 | 0.9675 | 0.7651 |
| | 300 | 0.9719 | 0.856 |

| Network | Epoch | Precision | Recall |
|---|---|---|---|
| YOLOv4 | 100 | 0.87 | 0.92 |
| | 200 | 0.86 | 0.95 |
| | 300 | 0.86 | 0.95 |
| YOLOv4-embedding | 100 | 0.78 | 0.74 |
| | 200 | 0.85 | 0.76 |
| | 300 | 0.86 | 0.82 |

| Algorithm | EfficientDet-D3 | YOLOv4 | v4-Embedding | v4-DEPCU |
|---|---|---|---|---|
| | 71.20% | 97.09% | 97.19% | 95.90% |
| | 61.60% | 82.68% | 85.60% | 84.75% |
| Average detection time | 72 ms | 19.31 ms | 19.46 ms | 20.20 ms |
| Weight size | 46.33 MB | 244.22 MB | 244.55 MB | 270.90 MB |
| Training time (300 epochs) | 5.47 h | 5.98 h | 6.08 h | 8.40 h |
| FPS | 43 | 51.8 | 51.4 | 49.5 |
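When images are processed one at a time, frame rate and per-image detection time t (in milliseconds) are related by FPS = 1000 / t. The sketch below assumes exactly that sequential setting and recomputes the FPS row from the average detection times in the table. The YOLOv4, v4-Embedding, and v4-DEPCU entries agree to one decimal place. For EfficientDet-D3, however, 1000 / 72 ≈ 13.9 FPS rather than the listed 43, so those two figures were presumably measured under different conditions or one of them contains an error.

```python
# Average detection time per image (ms) and reported FPS, copied from the table above.
detection_time_ms = {
    "EfficientDet-D3": 72.0,
    "YOLOv4": 19.31,
    "v4-Embedding": 19.46,
    "v4-DEPCU": 20.20,
}
reported_fps = {"EfficientDet-D3": 43.0, "YOLOv4": 51.8, "v4-Embedding": 51.4, "v4-DEPCU": 49.5}


def fps_from_latency(ms: float) -> float:
    """Frames per second when one image is processed every `ms` milliseconds."""
    return 1000.0 / ms


for name, ms in detection_time_ms.items():
    print(f"{name}: {fps_from_latency(ms):.1f} FPS computed, {reported_fps[name]} reported")
```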


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, J.; He, W.; Weng, W.; Zhang, T.; Mao, Y.; Yuan, X.; Ma, P.; Mao, G. An Embedding Skeleton for Fish Detection and Marine Organisms Recognition. Symmetry 2022, 14, 1082. https://doi.org/10.3390/sym14061082
