Conjugate Gradient Algorithm for Least-Squares Solutions of a Generalized Sylvester-Transpose Matrix Equation

Abstract

1. Introduction

2. Auxiliary Results from Matrix Theory

3. Least-Squares Solutions via the Kronecker Linearization
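As a rough illustration of the Kronecker linearization named in this section heading, the sketch below assumes a single-pair equation of the form A X B + C Xᵀ D = E. This form, the helper names `vec`, `commutation_matrix`, and `kron_least_squares`, and the dimensions are all assumptions made for the example; the paper's own equation may contain more terms. The idea is to rewrite the matrix equation as an ordinary linear system in vec(X) and pass it to a standard least-squares solver.

```python
import numpy as np


def vec(M):
    """Stack the columns of M into one long vector (column-major order)."""
    return M.reshape(-1, order="F")


def commutation_matrix(n, p):
    """Return K of size (n*p, n*p) with K @ vec(X) == vec(X.T) for X of shape (n, p)."""
    K = np.zeros((n * p, n * p))
    for i in range(n):
        for j in range(p):
            # X[i, j] sits at index i + j*n in vec(X) and at j + i*p in vec(X.T).
            K[j + i * p, i + j * n] = 1.0
    return K


def kron_least_squares(A, B, C, D, E):
    """Least-squares X for the ASSUMED form A X B + C X^T D = E.

    Uses vec(A X B) = (B^T kron A) vec(X) and
    vec(C X^T D) = (D^T kron C) K vec(X), with K the commutation matrix.
    """
    n, p = A.shape[1], B.shape[0]
    M = np.kron(B.T, A) + np.kron(D.T, C) @ commutation_matrix(n, p)
    # lstsq returns the minimal-norm least-squares solution of M x = vec(E).
    x, *_ = np.linalg.lstsq(M, vec(E), rcond=None)
    return x.reshape((n, p), order="F")


# Hypothetical small example: X is 2 x 3, E is 4 x 4.
rng = np.random.default_rng(0)
A, B = rng.standard_normal((4, 2)), rng.standard_normal((3, 4))
C, D = rng.standard_normal((4, 3)), rng.standard_normal((2, 4))
E = rng.standard_normal((4, 4))
X_ls = kron_least_squares(A, B, C, D, E)
```

The linearized system has as many columns as X has entries, so its size grows quickly with the dimensions of X. This is the usual motivation for iterative methods that work on the matrices directly.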

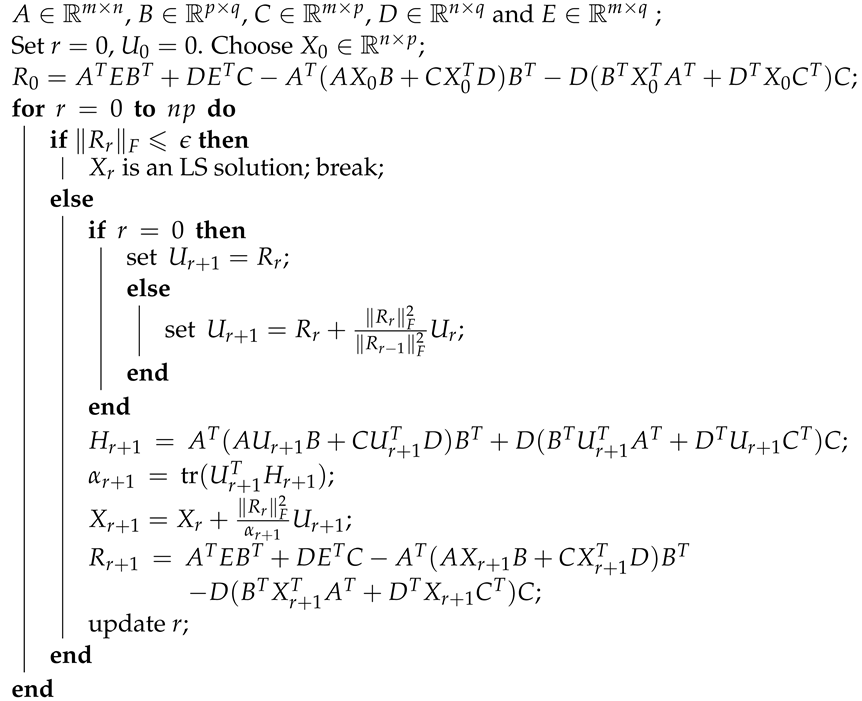

4. Least-Squares Solution via a Conjugate Gradient Algorithm

Algorithm 1: A conjugate gradient iterative algorithm for Equation (1)

Algorithm 2: A conjugate gradient iterative algorithm for Equation (2)
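The steps of Algorithms 1 and 2 are not reproduced in this text, so the following is only a generic sketch of a conjugate gradient iteration for least-squares problems (the CGLS scheme) written in matrix form. It is not a transcription of either algorithm. It assumes the single-pair form A X B + C Xᵀ D = E, and the names `apply_op`, `apply_adjoint`, and `cgls_matrix`, the tolerance, and the iteration cap are hypothetical choices for illustration.

```python
import numpy as np


def apply_op(X, A, B, C, D):
    """Linear map L(X) = A X B + C X^T D (ASSUMED form of the equation)."""
    return A @ X @ B + C @ X.T @ D


def apply_adjoint(R, A, B, C, D):
    """Adjoint of L under the trace inner product: L*(R) = A^T R B^T + D R^T C."""
    return A.T @ R @ B.T + D @ R.T @ C


def cgls_matrix(A, B, C, D, E, X0=None, tol=1e-10, max_iter=500):
    """Generic CGLS iteration for min ||E - L(X)||_F; returns (X, iterations)."""
    n, p = A.shape[1], B.shape[0]
    X = np.zeros((n, p)) if X0 is None else np.array(X0, dtype=float)
    R = E - apply_op(X, A, B, C, D)       # residual of the matrix equation
    G = apply_adjoint(R, A, B, C, D)      # negative gradient of 0.5*||R||_F^2
    P = G.copy()                          # first search direction
    gamma = np.sum(G * G)
    for k in range(max_iter):
        if np.sqrt(gamma) <= tol:         # gradient is (numerically) zero
            return X, k
        Q = apply_op(P, A, B, C, D)
        qq = np.sum(Q * Q)
        if qq == 0.0:                     # direction lies in the null space of L
            return X, k
        alpha = gamma / qq                # exact line search along P
        X = X + alpha * P
        R = R - alpha * Q
        G = apply_adjoint(R, A, B, C, D)
        gamma_new = np.sum(G * G)
        P = G + (gamma_new / gamma) * P   # conjugate direction update
        gamma = gamma_new
    return X, max_iter


# Hypothetical small example: X is 2 x 3, E is 4 x 4.
rng = np.random.default_rng(0)
A, B = rng.standard_normal((4, 2)), rng.standard_normal((3, 4))
C, D = rng.standard_normal((4, 3)), rng.standard_normal((2, 4))
E = rng.standard_normal((4, 4))
X_cg, iterations = cgls_matrix(A, B, C, D, E)
```

Each step needs only products with the coefficient matrices, so the large Kronecker matrix is never formed. Started from the zero matrix, an iteration of this type keeps every iterate in the range of the adjoint map, which is the property usually invoked to obtain the minimal-norm least-squares solution.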

5. Minimal-Norm Least-Squares Solution via Algorithm 1

6. Least-Squares Solution Closest to a Given Matrix

7. Numerical Experiments

| Y | Initial V | Iterations | CPU | | |
|---|---|---|---|---|---|
| 0 | | 18 | 0.104135 | 0.000006 | 4.3116 |
| | | 20 | 0.108153 | 0.000005 | 4.3116 |
| I | 0 | 18 | 0.113960 | 0.000009 | 0.8580 |
| | | 20 | 0.108499 | 0.000006 | 0.8580 |

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Iterations | CPU | |
|---|---|---|---|
| CG | 20 | 0.199308 | 6.407766 |
| GI | 20 | 0.129715 | 10.907665 |
| LSI | 20 | 0.179449 | 14.390460 |
| TAUOpt | 20 | 0.073866 | 7.806273 |
| Direct | − | 7.048632 | 0 |

| | Iterations | CPU | |
|---|---|---|---|
| 0 | 6 | 0.036523 | 0.000008 |
| 0.02 × ones | 11 | 0.036540 | 0.000009 |
| −0.01 | 10 | 0.038425 | 0.000009 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tansri, K.; Chansangiam, P. Conjugate Gradient Algorithm for Least-Squares Solutions of a Generalized Sylvester-Transpose Matrix Equation. Symmetry 2022, 14, 1868. https://doi.org/10.3390/sym14091868
