1. Introduction

Sylvester-type matrix equations are closely related to ordinary differential equations (ODEs), which can be adapted to several problems in control engineering and information sciences; see e.g., monographs [

1,

2,

3]. The Sylvester matrix equation

and the famous special case Lyapunov equation

have several applications in numerical methods for ODEs, control and system theory, signal processing, and image restoration; see e.g., [

4,

5,

6,

7,

8,

9]. The Sylvester-transpose equation

is utilized in eigenstructure assignment in descriptor systems [

10], pole assignment [

3], and fault identification in dynamic systems [

11]. In addition, if we require that the solution

X to be symmetric, then the Sylvester-transpose equation coincides with the Sylvester one. A generalized Sylvester equation

can be applied to implicit ODEs, and a general dynamical linear model for vibration and structural analysis, robot and spaceship controls; see e.g., [

12,

13].

The mentioned matrix equations are special cases of a generalized Sylvester-transpose matrix equation:

or more generally

A direct algebraic method to find a solution of Equation (

2) is to use the Kronecker linearization transforming the matrix equation into an equivalent linear system; see e.g., [

14] (Ch. 4). The same technique together with the notion of weighted Moore–Penrose inverse were adapted to solve a coupled inconsistent Sylvester-type matrix equations [

15] for least-squares (LS) solutions. Another algebraic method is to apply a generalized Sylvester mapping [

13], so that the solution is expressed in terms of polynomial matrices. However, when the sizes of coefficient matrices are moderate or large, it is inconvenient to use matrix factorizations or another traditional methods since they require a large memory to calculate an exact solution. Thus, the Kronecker linearization and other algebraic methods are only suitable for small matrices. That is why it is important to find solutions that are easy to compute, leading many researchers to come up with algorithms that can reduce the time and memory usage of solving large matrix equations.

In the literature, there are two notable techniques to derive iterative procedures for solving linear matrix equations; see more information in a monograph [

16]. The conjugate gradient (CG) technique aims to create an approximate sequence of solutions so that the respective residual matrix creates a perpendicular base. The desired solution will come out in the final step of iterations. In the last decade, many authors developed CG-type algorithms for Equation (

2) and its special cases, e.g., BiCG [

17], BiCR [

17], CGS [

18], GCD [

19], GPBiCG [

20], and CMRES [

21]. The second technique, known as gradient-based iterative (GI) technique, intends to construct a sequence of approximate solutions from the gradient of the associated norm-error function. If we carefully set parameters of GI algorithm, then the generated sequence would converge to the desired solution. In the last five years, many GI algorithms have been introduced; see e.g., GI [

22,

23], relaxed GI [

24], accelerated GI [

25], accelerated Jacobi GI [

26], modified Jacobi GI [

27], gradient-descent algorithm [

28], and global generalized Hessenberg algorithm [

29]. For LS solutions of Sylvester-type matrix equations, there are iterative solvers, e.g., [

30,

31].

Recently, the work [

32] developed an effective gradient-descent iterative algorithm to produce approximated LS solutions of Equation (

2). When Equation (

2) is consistent, a CG-type algorithm was derived to obtain a solution within finite steps; see [

33]. This work is a continuation of [

33], i.e., we consider Equation (

1) with rectangular coefficient matrices and a rectangular unknown matrix

X. Suppose that Equation (

1) is inconsistent. We propose a CG-type algorithm to approximate LS solutions, which will solve the following problems:

Problem 1. Find a matrix that minimizes .

Let

be the set of least-squares solutions of Equation (

1). The second problem is to find an LS solution with the minimal norm:

Problem 2. Find the matrix such that The last one is to find an LS solution closest to a given matrix:

Problem 3. Let . Find the matrix such that Moreover, we extend our studies to the matrix Equation (

2). We verify the results from theoretical and numerical points of view.

The organization of this article is as follows. In

Section 2, we recall preliminary results from matrix theory that will be used in later discussions. In

Section 3, we explain how the Kronecker linearization can transform Equation (

1) into an equivalent linear system to obtain LS solutions. In

Section 4, we propose a CG-type algorithm to solve Problem 1 and verify the theoretical capability of the algorithm. After that, Problems 2 and 3 are investigated in

Section 5 and

Section 6, respectively. To verify the theory, we provide numerical experiments in

Section 7 to show the applicability and efficiency of the algorithm, compared to the Kronecker linearization and recent iterative algorithms. We summarize the whole work in the last section.

3. Least-Squares Solutions via the Kronecker Linearization

From now on, we investigate the generalized Sylvester-transpose matrix Equation (

1), with corresponding coefficient matrices

,

,

,

,

, and a rectangular unknown matrix

. We focus our attention when Equation (

1) is inconsistent. In this case, we will seek for its LS solution, that is, a matrix

that solves the following minization problem:

A traditional algebraic way to solve a linear matrix equation is known as the Kronecker linearization–to transform the matrix equation into an equivalent linear system using the notions of vector operator and Kronecker products. Indeed, taking the vector operator to Equation (

1) and applying Lemmas 2 and 3 yield

Let us denote

,

, and

Thus, a matrix

X is an LS solution of Equation (

1) if and only if

x is an LS solution of the linear system

, or equivalently, a solution of the associated normal equation

The linear system (

14) is always consistent, i.e., Equation (

1) always has an LS solution. From the normal Equation (

14) and Lemmas 2 and 3, we can deduce:

Lemma 6 ([

34]).

Problem 1 is equivalent to the following consistent matrix equation Moreover, the normal Equation (

14) has a unique solution if and only if the matrix

M is of full-column rank, i.e.,

is invertible. In this case, the unique solution is given by

, and the LS error can be computed as follows:

If

M is of not full-column rank (i.e., the kernel of

M is nontrivial), then the system

has many solutions. In this case, the LS solutions appear in the form

where

is the Moore–Penrose inverse of

M, and

u is an arbitrary vector in the kernel of

M. Among all these solutions,

is the unique one having minimal norm.

4. Least-Squares Solution via a Conjugate Gradient Algorithm

In this section, we propose a CG-type algorithm to solve Problem 1. We do not impose any assumption on the matrix

M, so that LS solutions of Equation (

1) may not be unique.

We shall adapt the conjugate-gradient technique to solve the equivalent matrix Equation (

15). Recall that the set of LS solutions of Equation (

1) is denoted by

. From Lemma 6, observe that the residual of a matrix

according to Equation (

1) is given by

Lemma 6 states that

if and only if

. From this, we propose the following algorithm. Indeed, the next approximate solution

is equal to the current approximation

along with a search direction

of suitable step size.

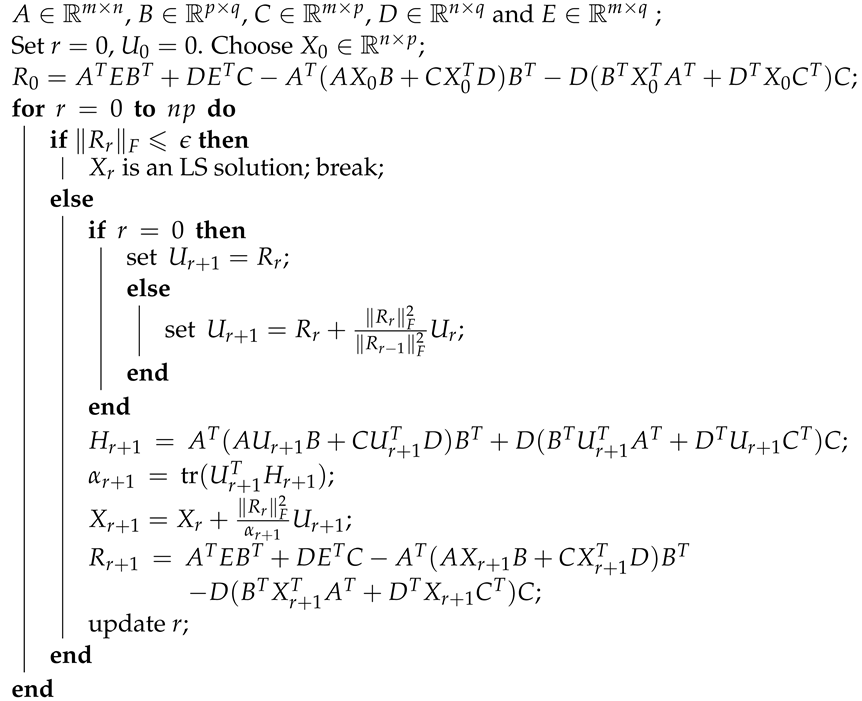

| Algorithm 1: A conjugate gradient iterative algorithm for Equation (1) |

![Symmetry 14 01868 i001]() |

Remark 1. To terminate the algorithm, one can alternatively set the stopping rule to be where is the positive square root of the LS error described in Equation (16) and is a small tolerance. For any given initial matrix

, we will show that Algorithm 1 generates a sequence of approximate solutions

of Equation (

1), so that the set of residual matrices

is orthogonal. It follows that a unique LS solution will be obtained within finite steps.

Lemma 7. Assume that the sequences and are generated by Algorithm 1. We get Proof. From Algorithm 1, we have that for any

r,

□

Lemma 8. The sequences and generated by Algorithm 1 satisfy Proof. Using the properties of the Kronecker product and the vector operator in Lemmas 1–5, we have

□

Lemma 9. The sequences , and generated by Algorithm 1 satisfy Proof. We use the induction principle to prove (

21). In order to calculate related terms, we utilize Lemmas 7 and 8. For

we get

These imply that (

21) holds for

. Now, we proceed the inductive step by assuming that

and

. Then

Hence, Equationd (

21) holds for any

r. □

Lemma 10. Suppose the sequences , and are constructed from Algorithm 1. Then Proof. The initial step

holds due to Lemma 9. Now, assume that Equation (

22) is valid for all

. From Lemmas 7 and 8, we get

and

Hence, Equation (

22) holds for any

r. □

Lemma 11. Suppose the sequences , and are constructed from Algorithm 1. Then for any integers m and n such that , we have Proof. According to Lemma 8 and the fact that

for any integers m and n, it remains to prove two equalities in (

23) for only

such that

. For

, the two equalities hold by Lemma 9. For

, we have

and, similarly, we have

Moreover,

and, similarly,

In a similar way, we can write

and

in terms of

and

, respectively. Continuing this process until the terms

and

appear. By Lemma 10, we get

and

. Similarly, we have

and

for

.

Suppose that

for

. Then for

, we have

and

Hence,

and

for any

such that

. □

Theorem 1. Algorithm 1 solves Problem 1 within finite steps. More precisely, for any given initial matrix , the sequence constructed from Algorithm 1 converges to an LS solution of Equation (1) in at most iterations. Proof. Assume that

for

. Assume that

. By Lemma 11, the set

of residual matrices is orthogonal in

with respect to the Frobenius inner product (

5). Therefore, the set

of

elements is linearly independent. This contradicts the fact that the dimension of

is

. Thus,

, and

satisfies Equation (

15) in Lemma 6. Hence

is an LS solution of Equation (

1). □

We adapt the same idea as that for Algorithm 1 to derive an algorithm for Equation (

2) as follows:

| Algorithm 2: A conjugate gradient iterative algorithm for Equation (2) |

![Symmetry 14 01868 i002]() |

The stopping rule of Algorithm 2 may be described as where is the positive square root of the associated LS error and is a small tolerance.

Theorem 2. Consider Equation (

2)

where , , , , , are given constant matrices and is an unknown matrix. Assume that the matrixis of full-column rank. Then, for any given initial matrix , the sequence constructed from Algorithm 2 converges to a unique LS solution. Proof. The proof of is similar to that of Theorem 1. □

7. Numerical Experiments

In this section, we provide numerical results to show the efficiency and effectiveness of Algorithm 2 (denoted by CG), which is an extension of Algorithm 1. We perform experiments when the coefficients in a given matrix equation are dense/sparse rectangular matrices of moderate/large sizes. We denote by the m-by-n matrix whose all entries are 1. Each random matrix has all entries belonging to the interval . Each experiment contains some comparisons of Algorithm 2 with the direct Kronecker linearization as well as well-known iterative algorithms. All iterations were performed by MATLAB R2021a, on Mac operating system (M1 chip 8C CPU/8C GPU/8GB/512GB). The performance of algorithms is investigated through the number of iterations, the norm of residual matrices, and the CPU time. The latter is measured in seconds using the functions and on MATLAB.

The next is an example of Problem 1.

Example 1. Consider a generalized Sylvester-transpose matrix equationwhere the coefficient matrices are given byIn fact, we have , i.e., the matrix equation does not have an exact solution. However, M is of full-column rank, so this equation has a unique LS solution. We run Algorithm 2 using an initial matrix and a tolerance error , where . It turns out that Algorithm 2 takes 20 iterations to get a least-square solution, consuming around s, while the direct method consumes around 7 s. Thus, Algorithm 2 takes 35 times less computational time than the direct method. We compare the performance of Algorithm 2 with other well-known iterative algorithms: GI method [31], LSI method [31], and TAUOpt method [32]. The numerical results are shown in Table 1 and Figure 1. We see that after running 20 iterations, Algorithm 2 consumes CTs slightly more than other methods, but the relative error is less than those of the others. Hence, Algorithm 2 is applicable and has a good performance. The next is an example of Problem 2.

Example 2. Consider a generalized Sylvester-transpose matrix equationwhereIn this case, Equation (29) is inconsistent and the associated matrix M is not of full-column rank. Thus, Equation (29) has many LS solutions. The direct method concerning Moore–Penrose inverse (17) takes s to get the minimal-norm LS solutions. Alternatively, MNLS method [35] can be also used to this kind of problem. However, some coefficient matrices are triangular matrices with multiple zeros, causing the MNLS algorithm diverges and cannot provide answer. Therefore, let us apply Algorithm 2 using a tolerance error . According to Theorem 4, we choose three different matrices to generate the initial matrix . The numerical results are shown in Table 2 and Figure 2. Figure 2 shows that the logarithm of the relative errors

for CG algorithms, using three initial matrices, are rapidly decreasing to zero. All of them consume around

s to arrive at the desired solution, which is 16 times less than the direct method.

The following is an example of Problem 3.

Example 3. Consider a generalized Sylvester-transpose matrix equationwhereIn fact, Equation (30) is inconsistent and has many LS solutions. The first task is to find the LS solution of Equation (30) closest to . According to Theorem 6, we apply Algorithm 2 with two different matrices V to construct the initial matrix . Algorithm 2 with and are denoted in Figure 3 by and , respectively. The second task is to solve Problem 3 when we are given . Similarly, we use two different matrices and to construct the initial matrix.

Figure 3.

The logarithm of the relative error for Example 3 with Y = 0.1 .

Figure 3.

The logarithm of the relative error for Example 3 with Y = 0.1 .

Figure 4.

The logarithm of the relative error for Example 3 with Y = I.

Figure 4.

The logarithm of the relative error for Example 3 with Y = I.

Table 3.

Relative error and computational time for Example 3.

Table 3.

Relative error and computational time for Example 3.

| Y | Initial V | Iterations | CPU | | |

|---|

| 0 | 18 | 0.104135 | 0.000006 | 4.3116 |

| 20 | 0.108153 | 0.000005 | 4.3116 |

| I | 0 | 18 | 0.113960 | 0.000009 | 0.8580 |

| 20 | 0.108499 | 0.000006 | 0.8580 |

We apply Algorithm 2 with a tolerance error

. The numerical results in

Figure 3 and

Figure 4, and

Table 3 illustrate that, in each case, the relative error converges rapidly to zero within 20 iterations, consuming around

s. Thus, Algorithm 2 performs well in both the number of iterations and computational time. Moreover, changing initial matrix and the desired matrix

Y does not siginificantly affect the performance of algorithm.