Abstract

We investigate a backward problem for the time-space fractional symmetric diffusion equation with a source term, in which the negative Laplace operator in the governing equation belongs to the class of uniformly symmetric elliptic operators. The problem is ill-posed because the solution does not depend continuously on the measured data. In this paper, we establish the existence and uniqueness of the solution and a conditional stability estimate for the inverse problem. Based on the least squares technique, we construct a Galerkin regularization method to overcome the ill-posedness of the problem. Under both a priori and a posteriori selection rules for the regularization parameter, we derive Hölder-type convergence results of optimal order for the proposed method. We also verify the regularizing effect of the method through numerical experiments in which the initial value function is either smooth or non-smooth. The numerical results show that the method works well for the backward problem of the time-space fractional parabolic equation.

1. Introduction

In thermodynamics and biology, classical integer-order parabolic equations are used to describe normal diffusion phenomena. However, with the development of technology and the in-depth study of practical scientific problems, more and more anomalous diffusion phenomena have been observed in the real world, such as diffusion in media with memory and hereditary characteristics. For this reason, mathematicians and physicists have used the theory and methods of fractional calculus to derive various time- or space-fractional parabolic equations. In such equations, the time fractional derivative can describe particle sticking and trapping phenomena, while the space fractional derivative usually describes long particle jumps. In the past few decades, direct problems for fractional parabolic equations have attracted increasing attention, and fractional calculus has been applied in many scientific fields, such as bioengineering, pharmacokinetics, tissue dynamics, economics, and epidemiology [1,2,3,4]. In recent years, driven by practical applications, more and more researchers have focused on inverse problems for fractional parabolic equations, which mainly include the inverse source problem [5], the parameter identification problem [6], the sideways problem [7], and the backward problem in time (the inverse initial value problem or final value problem) [8].

In the present paper, we consider the backward problem of the time-space fractional parabolic equation:

where , is a fixed final time, and and are the fractional orders of the time and space derivatives, respectively. The fractional derivative denotes the Caputo fractional derivative of the function with respect to the time variable, which is defined as [9]:

and is the Gamma function. The fractional Laplacian operator of order is defined via the spectral decomposition of the Laplace operator; the definition is summarized in Section 2 (see also [10]). is the exact final value data, and denotes the source term. Let be the bound of the measurement error, and denote by and the noisy final value and source term data, respectively, which satisfy:

Our task is to determine the initial value from the noisy data and .
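For readers who want to evaluate the Caputo derivative numerically, the following sketch uses the standard L1 finite-difference scheme on a uniform grid. It is a common discretization and not part of the regularization method developed in this paper. Writing α for the time order, the standard Caputo derivative for 0 < α < 1 reads $\partial_t^\alpha u(t)=\frac{1}{\Gamma(1-\alpha)}\int_0^t (t-s)^{-\alpha}u'(s)\,ds$, and the L1 scheme replaces $u'$ by a difference quotient on each subinterval. The function name `caputo_l1`, the uniform step `tau`, and the convention `d[0] = 0` are assumptions made for illustration.

```python
import math

import numpy as np


def caputo_l1(u, tau, alpha):
    """L1 approximation of the Caputo derivative of order 0 < alpha < 1.

    u     : samples u(t_0), ..., u(t_N) on the uniform grid t_n = n * tau
    tau   : grid step
    alpha : fractional order in (0, 1)
    Returns an array d with d[n] ~ D_t^alpha u(t_n); d[0] is set to 0.
    """
    u = np.asarray(u, dtype=float)
    n_steps = len(u) - 1
    j = np.arange(n_steps)
    b = (j + 1.0) ** (1.0 - alpha) - j ** (1.0 - alpha)  # L1 weights b_j
    du = np.diff(u)                                       # du[m-1] = u_m - u_{m-1}
    coef = tau ** (-alpha) / math.gamma(2.0 - alpha)
    d = np.zeros_like(u)
    for n in range(1, n_steps + 1):
        # sum_{j=0}^{n-1} b_j * (u_{n-j} - u_{n-j-1})
        d[n] = coef * np.dot(b[:n], du[n - 1::-1])
    return d


t = np.linspace(0.0, 1.0, 101)
d_linear = caputo_l1(t, 0.01, 0.5)  # u(t) = t, for which the L1 scheme is exact
```

For $u(t)=t$ the scheme reproduces $t^{1-\alpha}/\Gamma(2-\alpha)$ at every grid point; for smoother but nonlinear $u$ its error is known to decay like $\tau^{2-\alpha}$.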

Note that when , , the governing equation of (1) is the classical diffusion equation, which is usually used to describe normal diffusion; in the normal diffusion process, the diffusion flux follows Fick’s law. In physics and biology, the classical diffusion equation also describes the density distribution of particles in Brownian motion. When , , the governing equation of (1) is called the time-fractional diffusion equation, which can describe some anomalous diffusion phenomena, because many anomalous diffusion processes in nature do not follow Fick’s law but rather a time-fractional Fick’s law. In physics and biology, the time-fractional diffusion equation is also used to describe the density distribution of particles in fractional Brownian motion. When , , the governing equation of (1) is called the space-fractional diffusion equation, which often describes the diffusion of particles driven by Lévy flights. When , , as is the case in this paper, the governing equation of (1) can describe the diffusion of particles that simultaneously follow Lévy and fractional Brownian processes; see [11,12].

The backward problem for the time-fractional parabolic equation has been studied by many researchers, and several effective regularization methods have been proposed to overcome its ill-posedness, for instance, the quasi-reversibility method [13,14], total variation method [15], iterated fractional Tikhonov method [16], projection method [17], truncation method [18], simplified Tikhonov method [19], and fractional Tikhonov method [20]. For the backward problem for the space-fractional parabolic equation , several regularization methods have also been developed, such as the convolution and spectral methods [21], iteration method [22], simplified Tikhonov method [23], logarithmic, negative exponential, and fractional Tikhonov methods [24,25,26], optimal regularization method [27], modified kernel method [28], and generalized Tikhonov method [29]. For the backward problem for the time-space fractional parabolic equation, some works have recently been published in which interesting and efficient regularization methods are proposed, for instance, the fundamental kernel-based method [30], variable total variation method [31], modified iterated Lavrentiev method [32], fractional Tikhonov method [33,34], quasi-boundary value method [35], modified Tikhonov method [36], and modified quasi-boundary value method [37].

Following the above works, this paper further studies the backward problem (1). There are many examples of anomalous diffusion in physics, such as the diffusion of pollen or haze. In such a diffusion process, we often know only the solute concentration at certain positions at the current moment, whereas we wish to know the solute concentration at the initial time. This task can be formulated as the backward problem for the time-space fractional diffusion Equation (1), which is ill-posed. Based on an analysis of the ill-posedness, we state and prove results on the existence and uniqueness of the solution and on conditional stability. Then, we construct a Galerkin regularization method based on the least squares technique to restore the stability of the solution and apply it to the backward problem for the time-space fractional diffusion equation. With the help of the conditional stability result, we derive Hölder-type a priori and a posteriori convergence results for the regularization method. Finally, we solve a forward problem to construct the exact data, compute the exact and regularized solutions from their respective analytic expressions, and verify the performance of the regularization method through numerical experiments.

The Galerkin method is an effective numerical method. In its discontinuous variant, the approximation space consists of piecewise functions that are continuous inside each partitioning element but may be discontinuous across element boundaries, and such methods are often used for problems that are difficult to handle with the conventional finite element method; see [38]. Recently, various Galerkin methods have been developed and applied to inverse problems. For instance, Ref. [39] presented a local discontinuous Galerkin method to identify the space-dependent source term in a time-fractional diffusion equation. In [40], the authors used the local discontinuous Galerkin method to study a time-fractional diffusion inverse problem subject to an extra measurement. Ref. [41] constructed a spectral Galerkin method to solve a Cauchy problem for the Helmholtz equation. For other related works, see [42,43]. The method in this paper is a discrete regularization method. Its basic idea is as follows: by means of an orthogonal projection, an ill-posed operator equation in an infinite-dimensional space is transformed into a linear system in a finite-dimensional subspace, and a regularized solution of the original inverse problem is constructed with the help of the least squares technique. Here, the dimension of the finite-dimensional subspace plays the role of the regularization parameter. From the algorithmic perspective, the method has low computational complexity and is easy to implement. In addition, Galerkin methods converge with high order in weak norms and have been applied to many specific problems, such as boundary element methods for boundary value problems. For the general theory of discrete regularization methods, see [44,45].
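The basic idea of the least-squares Galerkin approach can be illustrated in a purely finite-dimensional setting. In the sketch below, which is an illustration under stated assumptions and not the implementation used in this paper, a matrix `K` stands in for the compact operator, the columns of `basis` span the trial space, and the hypothetical helper `least_squares_galerkin` minimizes the residual norm over that space. Its normal equations are exactly the Galerkin equations whose test space is the image of the trial space under K.

```python
import numpy as np


def least_squares_galerkin(K, basis, g):
    """Least-squares Galerkin solution in the trial space spanned by the columns of `basis`.

    Minimizes ||K @ (basis @ c) - g|| over the coefficient vector c, which is
    equivalent to the Galerkin equations (K v, K w) = (g, K w) for all w in the
    trial space. Returns the approximation basis @ c.
    """
    KV = K @ basis                              # images of the trial basis under K
    c, *_ = np.linalg.lstsq(KV, g, rcond=None)  # solve the small least-squares problem
    return basis @ c


# Toy self-adjoint operator K = Q diag(sigma) Q^T with rapidly decaying sigma.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.standard_normal((8, 8)))
sigma = 0.5 ** np.arange(8)
K = Q @ np.diag(sigma) @ Q.T
phi_true = Q @ (1.0 / (1.0 + np.arange(8)))
g = K @ phi_true

N = 3                                           # dimension of the trial space
phi_N = least_squares_galerkin(K, Q[:, :N], g)
```

When the trial space is spanned by the first N eigenvectors of K, the result is exactly the orthogonal projection of the true solution onto those eigenvectors, so the dimension N acts as a truncation (regularization) parameter.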

The rest of this article is organized as follows. In Section 2, we present some preliminaries. Section 3 presents and proves the existence and uniqueness results, as well as the conditional stability for the inverse problem. Section 4 describes the construction of the regularization method. Section 5 derives a priori and a posteriori convergence results for the regularization method. In Section 6, we verify the performance of our method through numerical experiments. Conclusions and discussions are presented in Section 7.

2. Preliminary Knowledge

We first need a few properties of the eigenvalues of the negative Laplace operator ; please see [9,46], etc.

Proposition 1.

1. Denote the eigenvalues of as , and suppose that , and as .
2. Let be the corresponding eigenfunctions; thus, satisfy the boundary value problem:

Let:

then, if , we define the operator by:

which maps into , with the following equivalence:
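As an illustration of the spectral definition of the fractional Laplacian, the sketch below assumes the one-dimensional model case Ω = (0, π) with homogeneous Dirichlet conditions, where the eigenpairs are λ_k = k² and X_k(x) = √(2/π) sin(kx). The domain, the boundary conditions, the exponent name `s`, and the function name `frac_laplacian_dirichlet` are assumptions for illustration; the operator in this paper may be posed on a different domain. The operator multiplies each Fourier coefficient (v, X_k) by λ_k^s, here truncated after `n_modes` terms, with the inner products approximated by the trapezoidal rule.

```python
import numpy as np


def frac_laplacian_dirichlet(v, s, n_modes):
    """Spectral fractional Laplacian (-d^2/dx^2)^s on (0, pi) with Dirichlet BCs.

    v       : samples of v on the uniform grid x_i = i*pi/M, i = 0..M (v[0] = v[M] = 0)
    s       : fractional exponent
    n_modes : number of eigenpairs lambda_k = k^2, X_k = sqrt(2/pi) sin(kx) used
    """
    v = np.asarray(v, dtype=float)
    M = len(v) - 1
    x = np.linspace(0.0, np.pi, M + 1)
    h = np.pi / M
    out = np.zeros_like(v)
    for k in range(1, n_modes + 1):
        Xk = np.sqrt(2.0 / np.pi) * np.sin(k * x)
        vk = h * np.sum(v[1:-1] * Xk[1:-1])  # trapezoidal rule; endpoint values vanish
        out += (k ** 2) ** s * vk * Xk       # lambda_k^s (v, X_k) X_k
    return out


x = np.linspace(0.0, np.pi, 65)
v = np.sin(2 * x)
w = frac_laplacian_dirichlet(v, 0.75, 10)  # should equal 2**1.5 * sin(2x)
```

Because sin(2x) is itself an eigenfunction with λ_2 = 4, the output should be 4^s sin(2x); for s = 1 this is the ordinary negative second derivative.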

In the following, we introduce some further preliminaries that are used in the proofs of the theoretical results.

Definition 1

([9]). The two-parameter Mittag–Leffler function is defined as:

where and are arbitrary constants.
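A direct way to evaluate the two-parameter Mittag–Leffler function for moderate arguments is to sum its power series $E_{\alpha,\beta}(z)=\sum_{k\ge 0} z^k/\Gamma(\alpha k+\beta)$. The sketch below does this with terms computed in log space. It assumes α > 0, β > 0, and real z of moderate size; for the large negative arguments that arise in the experiments, the alternating series suffers from cancellation, which is why a dedicated algorithm such as the one in [51] is needed there. The name `mittag_leffler` and its tolerance parameters are hypothetical.

```python
import math


def mittag_leffler(alpha, beta, z, tol=1e-16, max_terms=2000):
    """Truncated power series for E_{alpha,beta}(z) = sum_k z^k / Gamma(alpha*k + beta).

    Assumes alpha > 0, beta > 0 and real z of moderate size. For large |z| the
    alternating series loses accuracy through cancellation, so this is only a sketch.
    """
    if z == 0:
        return 1.0 / math.gamma(beta)
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(max_terms):
        # |z|^k / Gamma(alpha*k + beta), evaluated in log space to avoid overflow
        magnitude = math.exp(k * log_abs_z - math.lgamma(alpha * k + beta))
        total += magnitude if (z > 0 or k % 2 == 0) else -magnitude
        # heuristic guard: only test the tolerance once k exceeds |z| / alpha
        if k > abs(z) / alpha and magnitude < tol * max(1.0, abs(total)):
            break
    return total


value = mittag_leffler(0.5, 1.0, -2.0)  # E_{1/2,1}(-2)
```

Known special cases are useful for checking such an implementation: $E_{1,1}(z)=e^z$, $E_{2,1}(-z^2)=\cos z$, $E_{1,2}(z)=(e^z-1)/z$, and $E_{1/2,1}(-z)=e^{z^2}\operatorname{erfc}(z)$.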

Lemma 1

([47]). For , , and positive integer , we have:

Lemma 2

([48]). For and , we have . Moreover, is completely monotonic, that is:

Lemma 3

([13]). Assume that ; then, there exist constants , depending only on , such that for all , we have:

Lemma 4.

For and any positive integer k, there exist positive constants and , such that:

where and .

Proof.

The lemma can be proven by applying Lemma 3 and a method similar to that in [35]. □

Lemma 5.

Let ; then, the following estimate holds:

here, .

Proof.

This lemma can be proven by using Lemma 1 for , , Lemma 2, and a method similar to that in [16]. □

3. Existence and Uniqueness of the Solution and Conditional Stability

3.1. Existence and Uniqueness of the Solution

In order to make a mathematical formulation for the inverse problem, we first consider the forward problem:

By separation of variables and the Laplace transform of the Mittag–Leffler function, the formal solution of the forward problem (12) can be expressed as:

where , denotes the inner product in . Additionally, with when k is even, and when k is odd. It is easy to check that the eigenfunctions form an orthonormal basis in .

Let and substitute it into Expression (13); then, we obtain:

According to the Fourier series expansion, , we have:

Hence,

As , the formal solution can be written as:

We now state and prove the existence, uniqueness, and stability of the solution to the backward problem (1).

Theorem 1.

Proof.

Theorem 1 can be proven by Lemma 3 and an argument similar to that in [16]. □

Remark 1.

From Result (19), it is easy to see that Problem (1) is well-posed for all . This differs from the backward problem for the standard diffusion equation (, ). The explanation of this phenomenon is that the current state depends on all past states, which is known as the hereditary or memory property of fractional derivatives (see, e.g., [16,19,49]). However, the state at is an exception: the solution at does not depend continuously on the given data, but if it satisfies a certain a priori condition, one can establish its conditional stability at .

3.2. Conditional Stability

In this subsection, we derive the conditional stability estimate for the backward problem (1) at . Assume that there exists a constant such that the solution of the backward problem satisfies the a priori condition:

where is the norm in the Sobolev space , defined by:

Theorem 2.

Proof.

We denote , and . Because , with . By using the Hölder inequality, we obtain:

By using the Parseval equality and Lemma 5, we can derive that:

On the other hand, from Lemma 4 and a priori condition (20), we have:

Finally, we obtain that:

□

4. Regularization Method

According to the analysis and the conditional stability result in Section 3, Problem (1) is ill-posed only at the initial time . In this section, we first describe its ill-posedness in the form of an operator equation, and then construct a Galerkin regularization method to overcome the ill-posedness of Problem (1) at the initial time.

4.1. The Operator Equation and Ill-Posedness Analysis

Let us denote that:

where and ; thus, .

Based on (18), we define a linear operator as follows:

where . Note that ; then, K is a self-adjoint operator. Additionally, by a procedure similar to that in [46], it can be proven that K is a compact operator, so (24) is a Fredholm integral equation of the first kind. This means that recovering the initial value is an ill-posed problem. In the following, we construct a Galerkin regularization method based on the least squares technique to restore the stability of the solution (that is, the continuous dependence of the solution on the measured data).
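To see concretely why compactness causes instability, assume (with our own notation α and β for the time and space orders) that K acts diagonally on the eigenfunctions with singular values $\sigma_k=E_{\alpha,1}(-\lambda_k^{\beta}T^{\alpha})$, and use the two-sided bound $C_1/(1+x)\le E_{\alpha,1}(-x)\le C_2/(1+x)$ for $x\ge 0$ proved in [13]. A data perturbation of size ε in a single mode then produces an arbitrarily large error in the reconstructed initial value:

```latex
\begin{aligned}
K X_k &= \sigma_k X_k, \qquad
\sigma_k = E_{\alpha,1}\!\left(-\lambda_k^{\beta}T^{\alpha}\right)
\le \frac{C_2}{1+\lambda_k^{\beta}T^{\alpha}} \longrightarrow 0 \quad (k\to\infty),\\
\psi^{\varepsilon} &= \psi + \varepsilon X_k
\;\Longrightarrow\; \|\psi^{\varepsilon}-\psi\| = \varepsilon, \qquad
\bigl\|K^{-1}\psi^{\varepsilon}-K^{-1}\psi\bigr\| = \frac{\varepsilon}{\sigma_k}
\ge \frac{\varepsilon\,\bigl(1+\lambda_k^{\beta}T^{\alpha}\bigr)}{C_2} \longrightarrow \infty .
\end{aligned}
```

Since the amplification grows only like $\lambda_k^{\beta}$, the instability is polynomial, in contrast with the exponential amplification $e^{\lambda_k T}$ of the backward problem for the classical heat equation.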

4.2. Galerkin Regularization Method

Let be the linear self-adjoint compact operator defined in (24), let be finite-dimensional subspaces of dimension N, and let be a projection operator. The singular values of K are , and the corresponding eigenvectors are for . Assume that is dense in and that is one-to-one. Following [44,45], in the projection method, a regularized solution with parameter N satisfies the projection equation:

and the solution of Equation (25) can be represented as follows:

where is the regularization operator, and N plays the role of the regularization parameter.

Since the projection operator is an orthogonal projection, the above method is called a Galerkin method. The projection Equation (25) can be formulated as the following Galerkin equation:

In particular, choosing in (27) yields a Galerkin regularization method based on the least squares technique, whose solution is characterized by:

Since is finite-dimensional and is one-to-one, Equation (28) has a unique solution .

Let and be the basis functions of subspaces and , respectively, and we take and , ; then,

Setting:

then, is the solution of projection Equation (25) if and only if satisfies the finite-dimensional linear system:

Taking in Galerkin Equation (27), the coefficients and right-hand side terms in (31) can be written as:

i.e., Equation (31) can be expressed as:

Since is orthonormal, we have:

and therefore,

Substituting into (30), we obtain the regularized solution with exact data as:

where N is the regularization parameter. Ultimately, we define the regularization solution with the measured data as:
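To illustrate how such a truncated expansion can be evaluated, the following sketch works entirely with Fourier coefficients. For illustration only, it assumes a one-dimensional model with eigenvalues λ_k = k², time order α = 1/2 (so that $E_{1/2,1}(-z)=e^{z^2}\operatorname{erfc}(z)$ is available in closed form), space order s = 1/2, T = 1, a time-independent source, and the forward relation $g_k=\sigma_k\varphi_k+f_k(1-\sigma_k)/\lambda_k^{s}$ with $\sigma_k=E_{1/2,1}(-\lambda_k^{s}T^{1/2})$, which follows from the series solution and the identity $E_{\alpha,1}(z)=1+zE_{\alpha,\alpha+1}(z)$. The helper names `ml_half_neg` and `galerkin_initial_value` are hypothetical; other orders would require a general Mittag–Leffler routine such as that in [51].

```python
import math

import numpy as np


def ml_half_neg(z):
    """E_{1/2,1}(-z) = exp(z^2) * erfc(z), a closed form valid only for alpha = 1/2.

    Restricted to 0 <= z <= 25 so that exp(z^2) does not overflow.
    """
    if not 0.0 <= z <= 25.0:
        raise ValueError("closed form is used here only for 0 <= z <= 25")
    return math.exp(z * z) * math.erfc(z)


def galerkin_initial_value(g_coef, f_coef, lam, s, T, N):
    """Truncated reconstruction of the initial-value coefficients (sketch).

    Assumes time order alpha = 1/2, eigenvalues lam[k], space order s, and
        g_k = sigma_k * phi_k + f_k * (1 - sigma_k) / lam_k^s,
        sigma_k = E_{1/2,1}(-lam_k^s * T^{1/2}).
    Only the first N modes are inverted; the remaining coefficients are set to zero.
    """
    phi = np.zeros(len(g_coef))
    for k in range(N):
        mu = lam[k] ** s
        sigma = ml_half_neg(mu * math.sqrt(T))
        psi = g_coef[k] - f_coef[k] * (1.0 - sigma) / mu  # remove the source contribution
        phi[k] = psi / sigma
    return phi


# Model data: lam_k = k^2, s = 1/2, T = 1, fifteen modes.
K_MODES, s, T = 15, 0.5, 1.0
k = np.arange(1, K_MODES + 1)
lam = k.astype(float) ** 2
phi_exact = 1.0 / k ** 3
f_coef = 1.0 / k.astype(float) ** 2
sigma = np.array([ml_half_neg(l ** s * math.sqrt(T)) for l in lam])
g_coef = sigma * phi_exact + f_coef * (1.0 - sigma) / lam ** s
```

With exact data, inverting all modes recovers the coefficients; with perturbed data, a small N typically yields a smaller error than inverting every mode, which is the regularizing role of N.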

5. Convergence Estimates for a Priori and a Posteriori Rules

In this section, we choose the regularization parameters N by a priori and a posteriori rules, and derive the convergence estimates for the regularization method.

5.1. A Priori Convergence Estimate

To derive the convergence estimate, we need the uniform boundedness of the operator sequence .

Lemma 6

Theorem 3.

Proof.

First, from (24) and (26), we have ; then, the following expression holds:

It follows that:

Hence:

Notice that .

Denote ; thus, and . We define as follows:

By (40), (41), and the monotonicity of the Mittag–Leffler function, we have:

For every , owing to the triangle inequality and (42):

Note that is also a projection operator from ; then, the projection theorem yields:

In addition, according to Lemma 4 and (43), we have:

Combining (42), (43), and (44), we note that:

From Theorem 3.10 in [44], we know that the Galerkin method based on the least squares technique is convergent and . Furthermore, we can obtain that:

Finally, from (45), (46), we have:

then, is uniformly bounded, and the result (39) can be established. □

Theorem 4.

(A priori convergence estimate) Let given by (18) be the exact solution at for Problem (1) with the exact data and source term , and let be the Galerkin regularization solution defined by (36) with the measured data and , which satisfy (4) and (5). Suppose that the a priori condition (20) is satisfied. If the regularization parameter N is chosen as:

then, we have the convergence estimate:

where , denotes the integer part of a real number, i.e., the largest integer not exceeding it.

Proof.

From the triangle inequality, (35), and (36), we have:

First, we estimate using (28):

hence:

Note that . Denote ; then, and . We define , and it is easy to verify that , so we have:

which yields:

On the other hand, from Lemma 5 and the inequality , we can obtain:

Note that for any real number a, it holds that . Combining this with (49), (50), (4), (5), and Lemma 4, we derive:

5.2. A Posteriori Convergence Estimate

In this subsection, based on the Morozov discrepancy principle [50], we select the regularization parameter N by an a posteriori stopping rule. Here, N does not depend on the a priori bound E of the exact solution; rather, it depends only on the noise level and the measured data and . This is more practical, because such a bound E is usually unknown in applications.

In the Morozov discrepancy principle, the regularization parameter is usually chosen by the stopping rule , , where is a positive constant. However, from (36), we note that . Therefore, we choose the regularization parameter as the first integer N such that:

where is a positive constant.
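A generic discrepancy-type stopping rule for a truncated expansion can be sketched as follows. It assumes, as an illustration that is not necessarily identical to rule (54), that the residual of the N-term solution equals the norm of the discarded data coefficients ψ_k with k > N, and it returns the first N at which this residual drops below τδ. The function name `discrepancy_truncation_level` and the default τ = 1.1 are hypothetical.

```python
import numpy as np


def discrepancy_truncation_level(psi_coef, delta, tau=1.1):
    """First N with sqrt(sum_{k>N} psi_k^2) <= tau * delta (Morozov-type rule for truncation).

    For a truncated expansion, K phi_N - psi has exactly the coefficients psi_k, k > N,
    so the residual norm is the norm of the discarded tail of the data coefficients.
    """
    psi = np.asarray(psi_coef, dtype=float)
    # tails[n] = ||(psi_n, psi_{n+1}, ...)|| for n < len(psi), and tails[len(psi)] = 0
    tails = np.sqrt(np.append(np.cumsum(psi[::-1] ** 2)[::-1], 0.0))
    for N in range(len(psi) + 1):
        if tails[N] <= tau * delta:
            return N
    return len(psi)


N_star = discrepancy_truncation_level([1.0, 0.5, 0.1, 0.01, 0.001], delta=0.02)
```

In this toy call, the discarded tail after three modes has norm about 0.01, which is below τδ = 0.022, so the rule stops at N = 3; with δ = 0, all modes are kept.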

Lemma 7.

Proof.

Theorem 5.

(A posteriori convergence estimate) Let given by (18) be the exact solution at for Problem (1) with the exact data and source term , and let be the Galerkin regularization solution defined by (36) with the measured data and , which satisfy (4) and (5). Suppose that the a priori condition (20) is satisfied. If the regularization parameter N is selected by the a posteriori rule (54), we have the convergence estimate:

where .

Proof.

Below, we estimate . Using the Hölder inequality, we have:

By the Parseval equality, we can obtain that:

On the other hand, from Lemma 4 and a priori condition (20), we have:

Combined with the above estimates, we can obtain:

6. Numerical Implementations

In this section, we verify the stability and feasibility of the regularization method through numerical experiments. Since the analytic solution of Problem (1) is generally difficult to express explicitly, we solve the forward problem (12) with the initial value and source term to construct the exact final data , where the function is chosen as a smooth or a non-smooth function. On this basis, the measured data are generated by the following random perturbation:

where denotes the noise level of g, denotes the noise level of f, and the corresponding measurement error bound is computed by . The relative error between the exact and Galerkin regularization solutions is computed by:

Note that in the a priori case, the a priori bound E of the exact solution is always required during the computation. Since belongs to (), one can compute =. Moreover, is obvious. We then take , and . To compute the Mittag–Leffler function, we adopt the algorithm in [51]. In the a priori case, the regularization parameter N is chosen by (47), and in the a posteriori case, it is chosen by the stopping rule (54). In Examples 1 and 2, we compare the relative errors of the Galerkin method and the quasi-boundary value (QBV) method of [35]. For the QBV method, the regularization parameter is chosen by in the a priori case, and the stopping rule presented in this paper is adopted in the a posteriori case.
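The random perturbation and the relative error described above can be sketched in code as follows. The sketch assumes a multiplicative uniform noise model, data + ε · data · (2U − 1) with U uniform on [0, 1), which is a common choice in this literature but may differ in detail from the formula used in the paper. It also assumes a discrete L2 norm on a uniform grid. All names (`perturb`, `discrete_l2_norm`, `relative_error`) and the test function sin(x) are hypothetical.

```python
import numpy as np


def perturb(data, eps, rng):
    """Multiplicative uniform noise: data + eps * data * (2*U - 1), with U ~ Uniform[0, 1)."""
    data = np.asarray(data, dtype=float)
    return data + eps * data * (2.0 * rng.random(data.shape) - 1.0)


def discrete_l2_norm(v, h):
    """Discrete L2 norm on a uniform grid with spacing h (rectangle rule)."""
    return np.sqrt(h * np.sum(np.asarray(v, dtype=float) ** 2))


def relative_error(exact, approx):
    """Relative error ||exact - approx|| / ||exact|| (the grid factor h cancels)."""
    return np.linalg.norm(exact - approx) / np.linalg.norm(exact)


rng = np.random.default_rng(1)
x = np.linspace(0.0, np.pi, 101)
h = x[1] - x[0]
g = np.sin(x)
eps_g = 1e-3
g_delta = perturb(g, eps_g, rng)
delta_g = discrete_l2_norm(g_delta - g, h)  # measurement error bound for g
```

Because the pointwise perturbation never exceeds ε|g|, the resulting error bound satisfies δ_g ≤ ε‖g‖, and the same helpers apply unchanged to the source term f.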

Example 1.

In the forward problem (12), we chose:

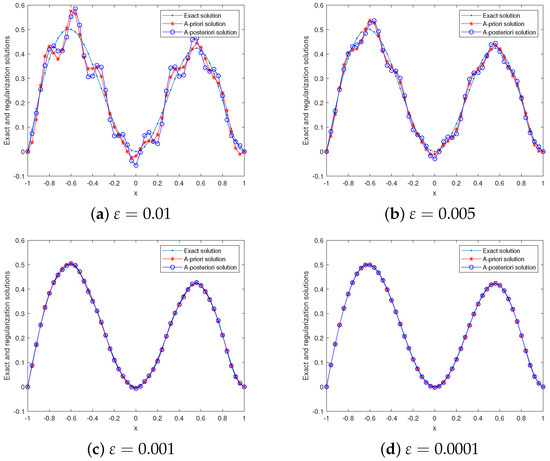

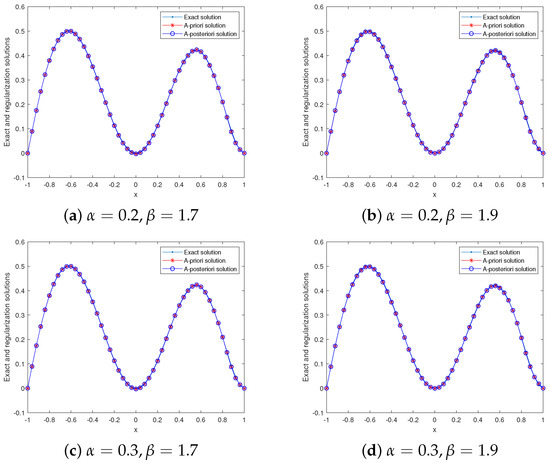

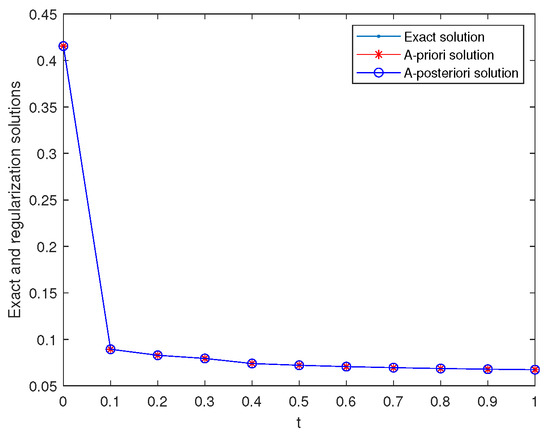

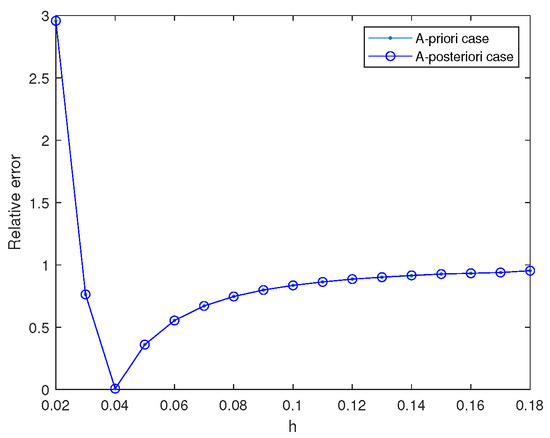

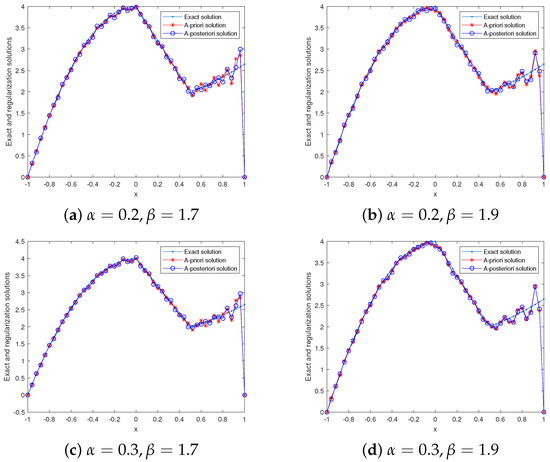

It is clear that is a smooth function and belongs to . Next, we selected to perform the numerical experiment. Since =, we took . Figure 1 compares the exact and regularized solutions for various noise levels in the case of . For , , and , and , the exact and regularized solutions are shown in Figure 2. For and , the relative errors of the Galerkin method and the QBV method are presented in Table 1. Figure 1 shows that the reconstruction improves as decreases. Figure 2 and Table 1 show that our method is stable and feasible when the solution of the backward problem is a smooth function; they also show that our method performs slightly better than the QBV method in this smooth case. To illustrate the inversion process of the backward problem on the entire time scale, Figure 3 compares the exact and regularized solutions at in the case of . From Result (19), Problem (1) is well-posed for all . Thus, when , we use Expression (36) to compute the regularized solution and Expression (18) to compute the exact solution; for , the exact and regularized solutions are both computed by Expression (17). Finally, Figure 4 compares the relative errors for different step sizes (grids); as the step size increases, the relative error first decreases and then increases.

Figure 1.

Example 1: , , , the exact and regularization solutions for various .

Figure 2.

Example 1: , , , the exact and regularization solutions for various , .

Table 1.

Example 1: , , the computation results for the relative errors by the Galerkin method and the QBV method.

Figure 3.

Example 1: , the exact and regularization solutions for various t at .

Figure 4.

Example 1: , the relative error for various step h.

Example 2.

In the forward problem (12), we take:

Note that is a continuous function, but it only belongs to , and =; thus, we chose the a priori bound . For , , and , , and , the exact and regularized solutions are shown in Figure 5. For and , the relative errors of the Galerkin method and the QBV method are presented in Table 2. Figure 5 and Table 2 show that our method is also effective when the solution of the backward problem only belongs to the space . Table 2 also shows that our method performs slightly better than the QBV method for this inverse problem whose solution belongs to the space .

Figure 5.

Example 2: , , , the exact and regularization solutions for various , .

Table 2.

Example 2: , , the computation results for the relative errors by the Galerkin method and the QBV method.

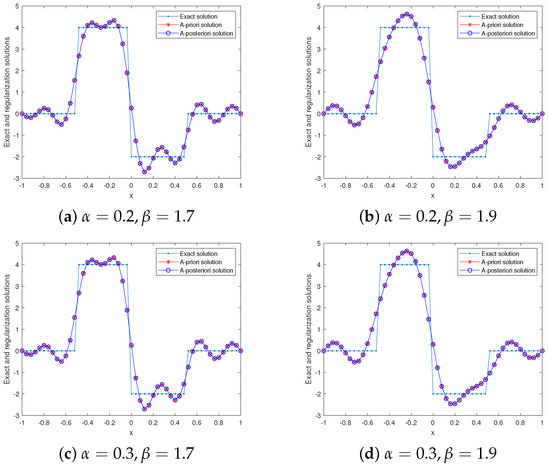

Example 3.

In the forward problem (12), is taken as a non-smooth function:

Since , we took , and selected to perform the numerical experiment. For , , and , , and , the exact and regularized solutions are shown in Figure 6. For , , and , the relative errors and the a priori and a posteriori regularization parameters are presented in Table 3. Figure 6 and Table 3 show that our method is also effective for the inverse problem whose solution is a non-smooth function.

Figure 6.

Example 3: , , , the exact and regularization solutions for various , .

Table 3.

Example 3: , , , the computation results for the relative error and regularization parameter.

7. Conclusions and Further Discussions

This article studies a backward problem for the time-space fractional parabolic equation. The problem is ill-posed in the sense that its solution is unstable with respect to the data. We prove the existence and uniqueness of the solution and the conditional stability for the inverse problem. Based on the ill-posedness analysis, we construct a Galerkin regularization method to restore the stability of the solution and derive a priori and a posteriori convergence results. Numerical experiments show that the Galerkin method is stable and feasible for the considered problem.

This paper mainly studies the Galerkin regularization theory and numerical algorithm for continuous data satisfying (4) and (5). The method can also be applied to discrete data; in that case, one first solves the discrete system (31) with the noisy data , then computes the regularized solution from (30), and a priori and a posteriori convergence results must be derived anew. We have derived an a priori convergence result similar to that for continuous data. However, for the a posteriori convergence result, we have not yet found an appropriate selection rule for the regularization parameter. We will address this difficulty in future work.

Author Contributions

Investigation, writing—original draft preparation, H.Z.; Investigation, writing —review and editing, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

The work described in this paper was supported by the NSF of Ningxia (2022AAC03234), the NSF of China (11761004), the Construction Project of First-Class Disciplines in Ningxia Higher Education (NXYLXK2017B09), and the Postgraduate Innovation Project of North Minzu University (YCX22094).

Data Availability Statement

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Acknowledgments

The authors would like to thank the reviewers for their constructive comments and valuable suggestions that improved the quality of our paper.

Conflicts of Interest

The authors declare that they have no conflict of interest.

References

- Magin, E.L. Fractional calculus models of complex dynamics in biological tissues. Comput. Math. Appl. 2009, 59, 1586–1593. [Google Scholar] [CrossRef]

- Sopasakis, P.; Sarimveis, H.; Macheras, P.; Dokoumetzidis, A. Fractional calculus in pharmacokinetics. J. Pharmacokinet. Pharmacodyn. 2018, 45, 107–125. [Google Scholar] [CrossRef] [PubMed]

- Tenreiro Machado, J.A.; Mata, M.E.; Lopes, A.M. Fractional dynamics and pseudo-phase space of country economic processes. Mathematics 2020, 8, 81. [Google Scholar] [CrossRef]

- Ionescu, C.; Lopes, A.; Copot, D.; Machado, J.T.; Bates, J.H. The role of fractional calculus in modeling biological phenomena: A review. Commun. Nonlinear Sci. Numer. Simul. 2017, 51, 141–159. [Google Scholar] [CrossRef]

- Liu, S.S.; Sun, F.Q.; Feng, L.X. Regularization of inverse source problem for fractional diffusion equation with Riemann-Liouville derivative. Comput. Appl. Math. 2021, 40, 112. [Google Scholar] [CrossRef]

- Sun, L.L.; Wei, T. Identification of the zeroth-order coefficient in a time fractional diffusion equation. Appl. Numer. Math. 2017, 111, 160–180. [Google Scholar] [CrossRef]

- Tran, B.N.; Nguyen, H.T.; Mokhtar, K. Regularization of a sideways problem for a time-fractional diffusion equation with nonlinear source. J. Inverse Ill-Posed Probl. 2020, 28, 1–29. [Google Scholar]

- Zhao, J.; Liu, S.; Liu, T. An inverse problem for space-fractional backward diffusion problem. Math. Methods Appl. Sci. 2014, 37, 1147–1158. [Google Scholar] [CrossRef]

- Podlubny, I. Fractional Differential Equations; Academic Press: San Diego, CA, USA, 1999. [Google Scholar]

- Tatar, S.; Tnaztepe, R.; Ulusoy, S. Determination of an unknown source term in a space-time fractional diffusion equation. J. Fract. Calc. Appl. 2015, 6, 83–90. [Google Scholar]

- Dipierro, S.; Lippi, E.P.; Valdinoci, E. (Non) local logistic equations with Neumann conditions. arXiv 2021, arXiv:2101.02315. [Google Scholar] [CrossRef]

- Cassani, D.; Vilasi, L.; Wang, Y.J. Local versus nonlocal elliptic equations:short-long range field interactions. Adv. Nonlinear Anal. 2021, 10, 895–921. [Google Scholar] [CrossRef]

- Liu, J.J.; Yamamoto, M. A backward problem for the time-fractional diffusion equation. Appl. Anal. 2010, 89, 1769–1788. [Google Scholar] [CrossRef]

- Yang, F.; Ren, Y.P.; Li, X.X. The quasi-reversibility method for a final value problem of the time-fractional diffusion equation with inhomogeneous source. Math. Methods Appl. Sci. 2018, 41, 1774–1795. [Google Scholar] [CrossRef]

- Wang, L.Y.; Liu, J.J. Total variation regularization for a backward time-fractional diffusion problem. Inverse Probl. 2013, 29, 1–22. [Google Scholar] [CrossRef]

- Yang, S.P.; Xiong, X.T.; Nie, Y. Iterated fractional Tikhonov regularization method for solving the spherically symmetric backward time-fractional diffusion equation. Appl. Numer. Math. 2021, 160, 217–241.

- Ren, C.; Xu, X.; Lu, S. Regularization by projection for a backward problem of the time-fractional diffusion equation. J. Inverse Ill-Posed Probl. 2014, 22, 121–139.

- Wang, L.Y.; Liu, J.J. Data regularization for a backward time-fractional diffusion problem. Comput. Math. Appl. 2012, 64, 3613–3626.

- Wang, J.G.; Wei, T.; Zhou, Y.B. Optimal error bound and simplified Tikhonov regularization method for a backward problem for the time-fractional diffusion equation. J. Comput. Appl. Math. 2015, 279, 277–292.

- Dinh, L.L.; Kim, V.H.; Duy, B.H.; Reza, S. On backward problem for fractional spherically symmetric diffusion equation with observation data of nonlocal type. Adv. Differ. Equ. 2021, 2021, 445.

- Zheng, G.H.; Wei, T. Two regularization methods for solving a Riesz-Feller space-fractional backward diffusion problem. Inverse Probl. 2010, 26, 1–23.

- Cheng, H.; Fu, C.L.; Zheng, G.H.; Gao, J. A regularization for a Riesz-Feller space-fractional backward diffusion problem. Inverse Probl. Sci. Eng. 2014, 22, 860–872.

- Yang, F.; Li, X.X.; Li, D.G.; Wang, L. The simplified Tikhonov regularization method for solving a Riesz-Feller space-fractional backward diffusion problem. Math. Comput. Sci. 2017, 11, 91–110.

- Zheng, G.H.; Zhang, Q.G. Recovering the initial distribution for space-fractional diffusion equation by a logarithmic regularization method. Appl. Math. Lett. 2016, 61, 143–148.

- Zheng, G.H.; Zhang, Q.G. Determining the initial distribution in space-fractional diffusion by a negative exponential regularization method. Inverse Probl. Sci. Eng. 2017, 25, 965–977.

- Zheng, G.H.; Zhang, Q.G. Solving the backward problem for space-fractional diffusion equation by a fractional Tikhonov regularization method. Math. Comput. Simul. 2018, 148, 37–47.

- Zhang, Z.Q.; Wei, T. An optimal regularization method for space-fractional backward diffusion problem. Math. Comput. Simul. 2013, 92, 14–27.

- Liu, S.S.; Feng, L.X. Optimal error bound and modified kernel method for a space-fractional backward diffusion problem. Adv. Differ. Equ. 2018, 2018, 268.

- Zhang, H.W.; Zhang, X.J. Solving the Riesz-Feller space-fractional backward diffusion problem by a generalized Tikhonov method. Adv. Differ. Equ. 2020, 2020, 376–384.

- Dou, F.F.; Hon, Y.C. Fundamental kernel-based method for backward space-time fractional diffusion problem. Comput. Math. Appl. 2016, 71, 356–367.

- Jia, J.X.; Peng, J.G.; Gao, J.H.; Li, Y. Backward problem for a time-space fractional diffusion equation. Inverse Probl. Imaging 2018, 12, 773–799.

- Trong, D.D.; Hai, D.N.D.; Dien, N.M. On a time-space fractional backward diffusion problem with inexact orders. Comput. Math. Appl. 2019, 78, 1572–1593.

- Wang, J.L.; Xiong, X.T.; Cao, X.X. Fractional Tikhonov regularization method for a time-fractional backward heat equation with a fractional Laplacian. J. Partial Differ. Equ. 2018, 4, 333–342.

- Djennadi, S.; Shawagfeh, N.; Abu Arqub, O. A fractional Tikhonov regularization method for an inverse backward and source problems in the time-space fractional diffusion equations. Chaos Solitons Fractals 2021, 150, 111127.

- Yang, F.; Zhang, Y.; Liu, X.; Li, X. The quasi-boundary value method for identifying the initial value of the space-time fractional diffusion equation. Acta Math. Sci. 2020, 40, 641–658.

- Feng, X.L.; Zhao, M.X.; Qian, Z. A Tikhonov regularization method for solving a backward time-space fractional diffusion problem. J. Comput. Appl. Math. 2022, 411, 114236.

- Trong, D.D.; Hai, D.N. Backward problem for time-space fractional diffusion equations in Hilbert scales. Comput. Math. Appl. 2021, 93, 253–264.

- Asadzadeh, M.; Beilina, L. A posteriori error analysis in a globally convergent numerical method for a hyperbolic coefficient inverse problem. Inverse Probl. 2010, 26, 115–127.

- Yeganeh, S.; Mokhtari, R.; Hesthaven, J.S. Space-dependent source determination in a time-fractional diffusion equation using a local discontinuous Galerkin method. BIT Numer. Math. 2017, 57, 685–707.

- Qasemi, S.; Rostamy, D.; Abdollahi, N. The time-fractional diffusion inverse problem subject to an extra measurement by a local discontinuous Galerkin method. BIT Numer. Math. 2019, 59, 183–212.

- Xiong, X.T.; Zhao, X.; Wang, J. Spectral Galerkin method and its application to a Cauchy problem of Helmholtz equation. Numer. Algorithms 2013, 63, 691–711.

- Kien, B.T.; Qin, X.; Wen, C.F.; Yao, J.C. The Galerkin Method and Regularization for Variational Inequalities in Reflexive Banach Spaces. J. Optim. Theory Appl. 2021, 189, 578–596.

- Zhao, Q.L. Regularization of Three Inverse Boundary Value Problems. Master’s Thesis, Lanzhou University, Lanzhou, China, 2008. (In Chinese).

- Kirsch, A. An Introduction to the Mathematical Theory of Inverse Problems; Springer: New York, NY, USA, 2011.

- Liu, J.J. Regularization Method for Ill-Posed Problem and Its Application; Science Press: Beijing, China, 2005. (In Chinese).

- Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and applications of fractional differential equations. North-Holl. Math. Stud. 2006, 204, 2453–2461.

- Sakamoto, K.; Yamamoto, M. Initial value/boundary value problems for fractional diffusion-wave equations and applications to some inverse problems. J. Math. Anal. Appl. 2011, 382, 426–447.

- Pollard, H. The completely monotonic character of the Mittag–Leffler function Eα(−x). Bull. Am. Math. Soc. 1948, 54, 1115–1116.

- Mishura, Y.S. Stochastic Calculus for Fractional Brownian Motion and Related Processes; Springer: Berlin, Germany, 2008.

- Morozov, V.A. Methods for Solving Incorrectly Posed Problems; Nashed, Z., Ed.; Aries, A.B., Translator; Springer: New York, NY, USA, 1984.

- Podlubny, I.; Kacenak, M. Mittag–Leffler Function. The MATLAB Routine. 2006. Available online: http://www.mathworks.com/matlabcentral/fileexchange (accessed on 26 February 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).