Abstract

This paper proposes a novel approach to decision-making based on a three-phase application of a new fuzzy logic model that embraces the principles of symmetry by balancing competing objectives in data collection and analysis. Our study, which employs a three-stage stratified random sample strategy with a randomized response technique, addresses the critical challenges of cost management and volatility reduction. Using the alpha-cut method, our model creates an effective allocation strategy that finds a balance between cost constraints and variance reduction objectives. We use numerical examples from real-world scenarios to demonstrate our approach’s durability and practicality. Our revolutionary technique maintains data quality and cost-effectiveness while offering a game-changing answer to sensitive information acquisition concerns. By combining randomized response techniques and fuzzy logic, this study establishes a new standard for decision-making models that prioritizes both data-gathering precision and privacy preservation, encapsulating the essential principle of symmetry in balancing competing aims.

1. Introduction

Within the field of statistical inference, numerous approaches can be utilized to arrive at findings, depending on the circumstances and goals of the investigation. A common method is to include the inference procedure in a probabilistic framework by utilizing models designed for enumeration-based and analytical inference. This technique is widely recognized as a fundamental component of statisticians’ arsenal, supporting the development of trustworthy inferences from observable data. It is crucial to understand, however, that enumeration inference requires a different probability structure than analytical inference. This discrepancy highlights the necessity for flexibility in statistical modeling and results from the different conditions and assumptions inherent in these approaches. A turning point in statistical inquiry was reached with the extraordinary upsurge in sampling-related research that followed World War II. Few statistical problems attracted as much attention and scientific investigation at this time as sampling theory. The main driver of this increase may be traced to the wide range of real-world uses of sampling theory, which sparked a radical change in data-gathering techniques. This revolutionary influence is demonstrated by the large number of distinguished statisticians who have devoted large amounts of their research efforts to the analysis of sample surveys. In practical applications, these surveys are vital instruments for gathering information from segments of the general public. Survey settings are noteworthy for their capacity to utilize supplementary data. Redistributing some of the survey resources makes it possible to expedite access to additional data. A vast array of repositories, such as census data, previous surveys, or pilot studies, might be included in these auxiliary data sources. These flexible data streams can take many different shapes and offer insightful information about one or more relevant variables. Specific questions are frequently asked in surveys, and answers are gathered from a carefully chosen sample of the community being studied. One such innovative survey approach that has surfaced is the randomized response (RR) technique, which was first developed by [1]. Using this method, a basic random sample, usually consisting of ‘n’ individuals, is selected with replacement from the population. The main goal is to determine the percentage of the population that possesses a sensitive characteristic, represented by the letter “G”. Every member of the sample receives an identical randomization device designed to produce results based on a predetermined probability distribution. “I do not possess the trait G” and “I possess the trait G” are both considered genuine with a predefined probability “P”. The respondent then chooses “Yes” or “No”, depending on how well the results of the randomization device match their real situation. Interested parties can consult a number of seminal articles written by [2,3,4,5,6,7,8,9], among others, for a more in-depth examination of the nuances and complexity present in these methodologies. These publications provide thorough insights and direction in this area. These scholarly works provide deeper insights into statistical inference, sampling strategies, and the changing landscape of data-gathering methodologies, making them invaluable knowledge repositories for both researchers and practitioners.

2. Some Related Models

Ref. [10] used the randomization device carrying three types of cards bearing statements: (i) “I belong to sensitive group A1”, (ii) “I belong to group A2”, and (iii) “Blank cards”, with corresponding probabilities Q1, Q2, and Q3, respectively, such that . If the blank card is drawn by the respondent, he/she will report “no.” The rest of the procedure remains as usual. The probability of a “Yes” answer is given by

where and are the true proportion of the rare sensitive attribute A1 and the rare unrelated attribute A2 in the population, respectively. From the above Equation, the estimator of is as

where the observed proportion of “yes” answers in the sample. The variance of the estimator is given as

An approach to calculating the mean number of persons possessing a rare sensitive trait using stratified sampling was proposed by Ref. [9], Bayesian by Ref. [11], and others Refs. [12,13,14,15,16,17,18,19,20,21]. In light of the aforementioned research, we have attempted in this paper to extend the unrelated randomized response model of [10] to a three-stage procedure for unrelated randomized responses in stratified and stratified random double sampling using a Poisson distribution to estimate the rare sensitive attribute in cases where the rare unrelated question’s parameter is known. The recommended model has been shown to perform better through empirical investigations. Analyses of the data are provided, along with appropriate suggestions.

3. Problem Formulation

Over time, significant advancements in electrical system size and efficiency have been observed, mostly as a result of the use of optimization techniques. By continuously modifying and optimizing parameters in real-time, these approaches are crucial for improving the performance of both linear and nonlinear systems. Whether they represent maxima or minima, optimization strategies are flexible and able to find optimal values by fine-tuning system parameters. It is important to recognize, though, that it would be premature to declare any specific electrical system design to be the best option, given how quickly technology is developing. Technology constantly reshapes what is possible, pushing the envelope and breaking preconceived notions of what is possible. A problem of optimization occurs in the context of Tarray and Singh’s two-stage stratified random sampling model with fuzzy costs. In 2015, fuzzy, nonlinear programming was used to solve this problem. This model’s optimal allocation was the main problem to be solved. The method of Lagrange multipliers, a potent optimization tool, was employed to solve the problem. Furthermore, at a predefined alpha threshold, the fuzzy numbers—which represented the ideal allocation—were transformed into discrete integers using the alpha-cut approach. For practical applications, working with integer sample sizes is essential;hence, finding an integer-based solution is quite important. Utilizing LINGO software 21.0, the researchers were able to arrive at this answer without having to round off the continuous data. By framing the issue as a fuzzy integer nonlinear programming problem, this method produced a more accurate and useful solution for electrical system design. This methodology allows for the reliable collection of data on sensitive attributes within the population while also protecting the privacy of the respondents. The challenge of optimal allocation in stratified random sampling with fuzzy costs is successfully addressed through the innovative use of fuzzy nonlinear programming and sophisticated optimization techniques, offering important insights for the design and enhancement of electrical systems.

Initially, Ω is a finite population of size N, which is composed of L strata of size Nh (h = 1, 2, 3…, L). A sample of size nh is drawn by simple random sampling with replacement (SRSWR) from the hth stratum. It is assumed that the stratum is known. The nh respondents from the hth stratum are provided with the following three-stage randomization device:

| Statements | Selection probability |

|

Statement 1: Are you a member of a rare sensitive Group A1?

Statement 2: Go to randomization device R2h |

Second-stage randomization device R2h consists of two statements

| Statements | Selection probability |

|

Statement 1: Are you a member of a rare sensitive Group A1?

Statement 2: Go to randomization device R3h |

Ph (1 − Ph) |

The randomization device R3h utilized three statements. Let Xh and Yh denotethe number of cards that the respondent drew from the first and second decks, respectively, to obtain the cards that represent their personal status. might be stated as, if is the ith respondent in the hth stratum:

with

and

Using (2)

with a sampling variance

or

where

with variance

where is the available fixed budget for the survey, is the available fixed budget for the survey, and is the overhead expense. Nonlinear programming (NLPP) problem with fixed costs

The limitations and are put in place, respectively.

4. Fuzzy Formulation

This new problem has led to the development of a field called privacy-preserving data mining (PPDM). Randomization is one of the potential techniques for privacy-preserving data mining. Before providing the actual data to data sleuths, this technique disguises it. Fuzzy numbers can be classified as triangular fuzzy numbers (TFN).

and is triangular fuzzy numbers with membership function

The following is a comparable description of the membership function for the available budget:

Moreover, we discuss the trapezoidal fuzzy number (TrFN).

and is trapezoidal fuzzy numbers with membership function.

with this

5. Lagrange Multipliers Formulation

Possibly, the Lagrangian function

with

or

Also,

which gives

or

also,

and

Convert fuzzy allocations into a crisp allocation using the cut method.

6. Procedure for Conversation of Fuzzy Numbers

An alpha-cut for ,

computed as

and

where .

Given by is the analogous crisp allocation,

Equal Disbursement. This method divides the total sample size n equally among the strata, yielding the hth stratum, so the reduced equation will be

with

n can be computed from,

And

or

and for

having

7. Numerical Illustration

Using a population size of 1000 and total survey budgets of 3500, 4000, and 4800 units, respectively, for TFNs and 3500, 4000, 4400, and 4600 units for TrFNS, the required FNLLP is calculated based on the values provided in Table 1 and Table 2 as follows:

Table 1.

The stratified population with two strata.

Table 2.

Calculated values of and .

The required optimal allocations for the issue may be obtained by entering the values from Table 1 and Table 2 in (9) at =0.5.

In a similar way, the problem of the optimal allocation will be determined by inserting values from Table 1, Table 2 and Table 3 at a value of 0.50.

Table 3.

Optimum allocation and variance values.

Case—I:

Using the above minimization problem, we obtain the optimal solution as , , and the optimal value is Minimize = 0.000777032.

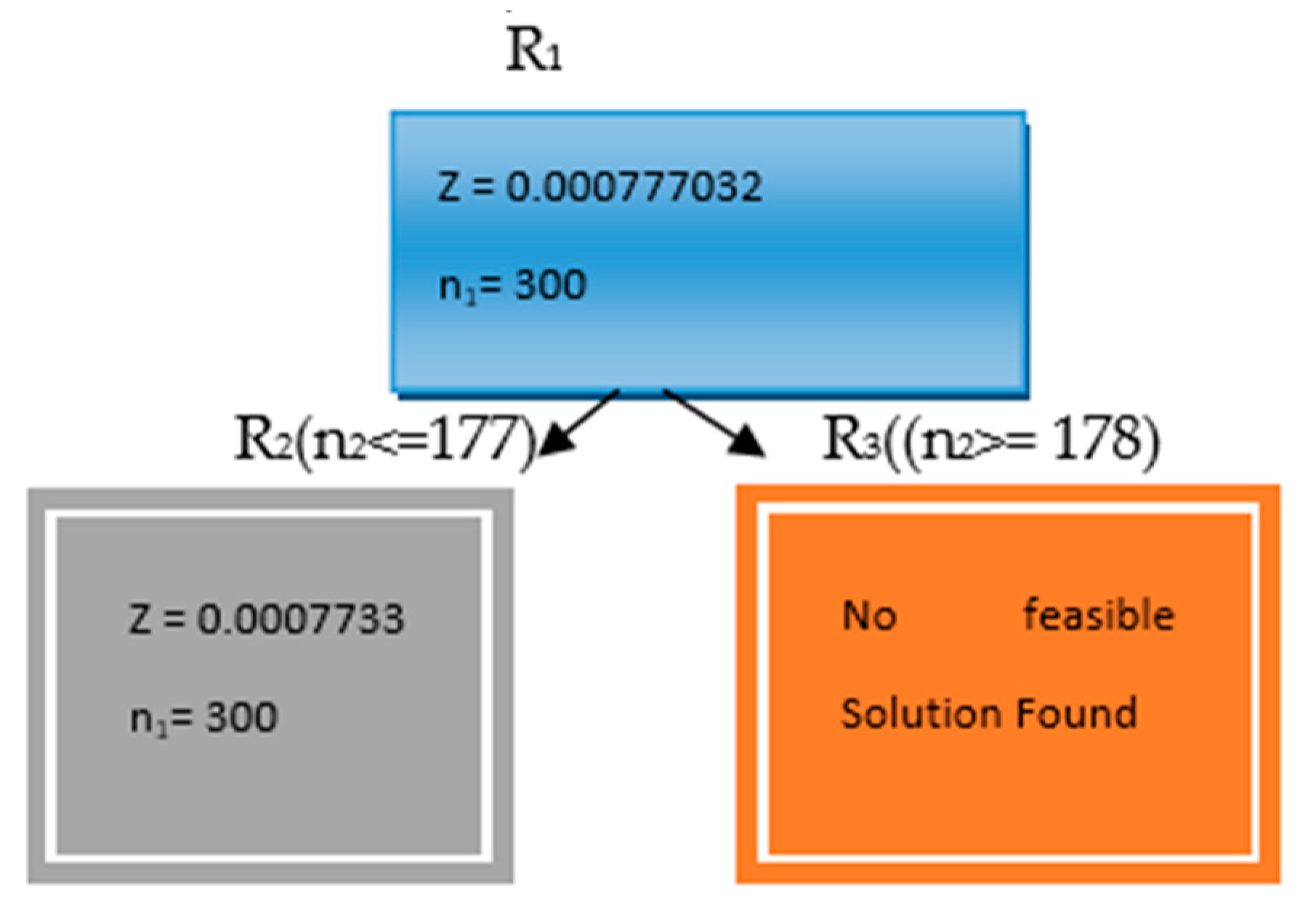

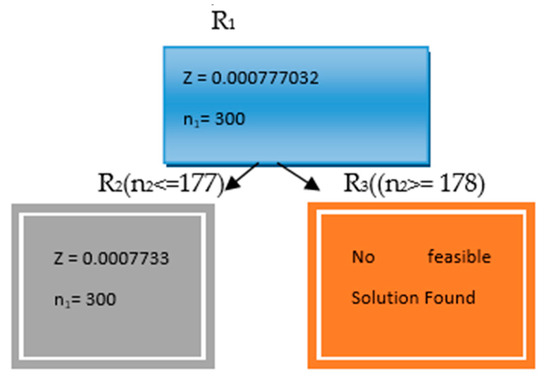

Since n1 and n2 are required to be integers, we branch problem R1 into two sub-problems, R2 and R3, by introducing the constraints n2 ≤ 177 and n2 ≥ 178, respectively, indicated by the value n1 = 300. The optimal solution is then determined as n1 = 300 and n2 = 177, minimizing V () = 0.000777032. Notably, these optimal integer values are the same as those obtained by rounding ni to the nearest integer. Assuming V () = Z, the various nodes for the NLPP using case I are illustrated in Figure 1.

Figure 1.

Various nodes of NLPP.

Case—II:

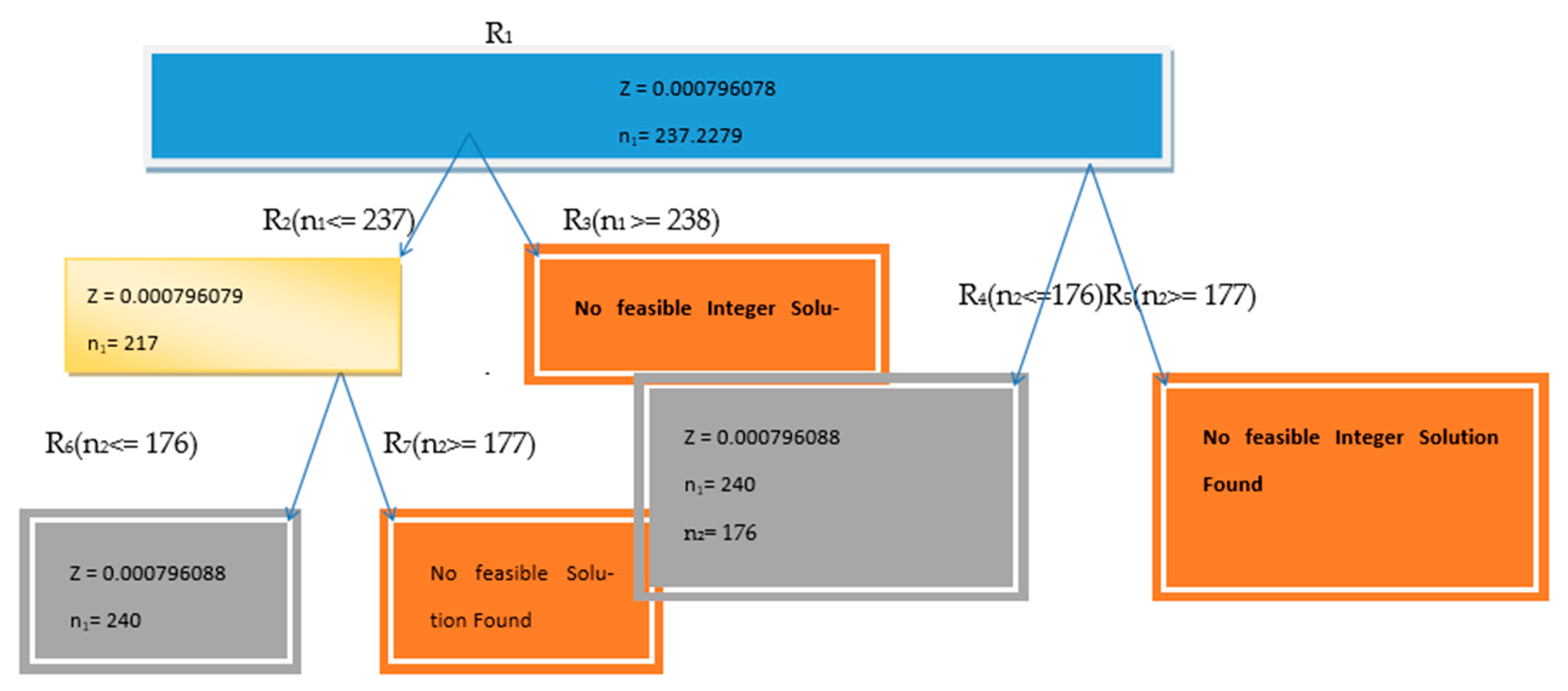

Using the above minimization problem, we obtain the optimal solution as , , and the optimal value is minimized .

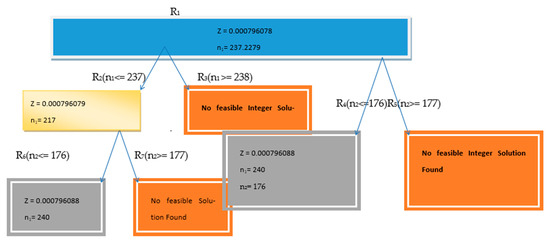

Since n1 and n2 are required to be integers, problem R1 is further branched into sub-problems R2, R3, R4, and R5 with additional constraints of n1 ≤ 237, n1 ≥ 238, n2 ≤ 176, and n2 ≥ 177, respectively. Problem R4 is resolved optimally as the solutions for n1 and n2 are integers. Problem R2 is further divided into sub-problems R6 and R7 with constraints n2 ≤ 176 and n2 ≥ 177, respectively. R6 is resolved optimally, while R7 has no feasible solution. The optimal solution is n1 = 240 and n2 = 176, minimizing V () = 0.000796088. Assuming V () = Z, the various nodes for the NLPP using case II are shown in Figure 2.

Figure 2.

Various nodes of NLPP.

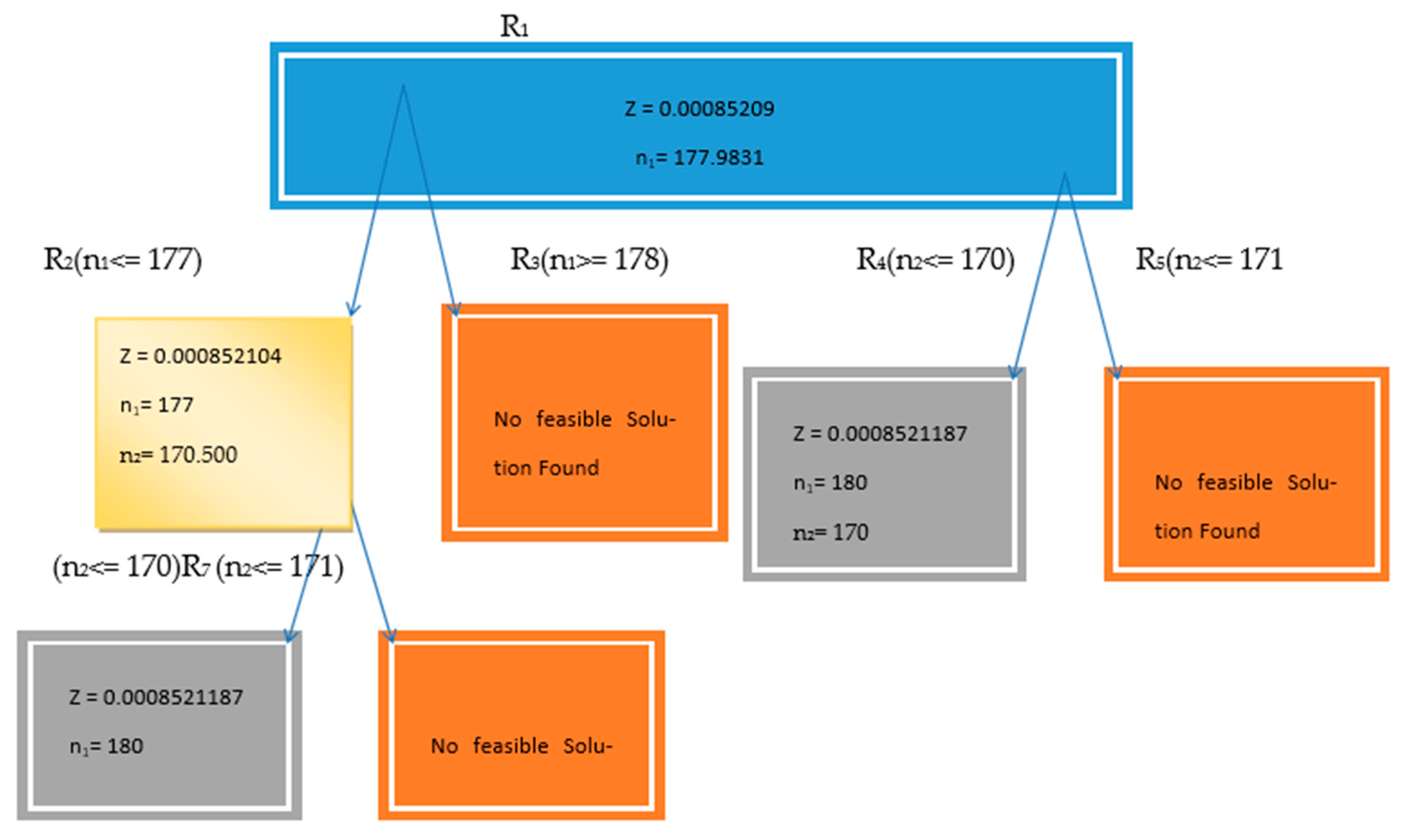

Case—III:

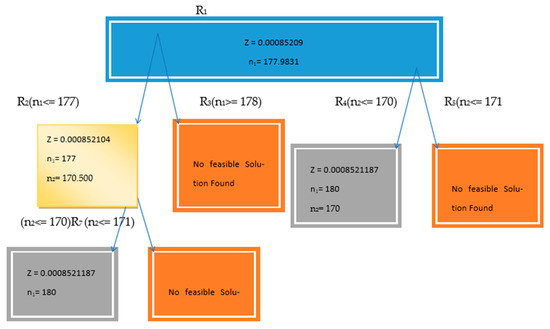

Using the above minimization problem, we obtain the optimal solution as , , and the optimal value is minimized = 0.00085209. Since n1 and n2 are required to be integers, problem R1 is further branched into sub-problems R2 and R3, R4, and R5 with additional constraints of n1 ≤ 177 and n1 ≥ 178, respectively. Problems R4 are resolved optimally with integer solutions for n1 and n2. Problem R2 is further divided into sub-problems R6 and R7 with constraints of n2 ≤ 170 and n2 ≥ 171, respectively. R6 is resolved optimally, while R7 has no feasible solution. The optimal solution is n1 = 180 and n2 = 170, minimizing V () = 0.0008521187. Assuming V() = Z, the various nodes for the NLPP using case III are shown in Figure 3.

Figure 3.

Various nodes of NLPP.

8. Discussion Section

Using a randomized response technique, we have developed a novel fuzzy logic approach to decision-making that can be implemented in three phases and is specifically designed to minimize variation while taking costs into consideration in stratified random sampling. Although our model is useful in practice and provides a thorough framework for enhancing data collection techniques, it is crucial to recognize its limitations and consider future research directions. Our method assumes fixed costs for each stratum, but additional research into adaptive models will be necessary because these costs could change as a result of logistical issues, inflation, and resource availability. Although our model allocates using the alpha-cut method, it might not accurately reflect the uncertainty in real-world data. Machine learning or other alternative fuzzy logic approaches could increase the flexibility and robustness of the model. Additionally, the main focus of our research has been the theoretical and numerical verification of the suggested model. To evaluate the practical applicability and efficacy of our technique in various circumstances, empirical validation via real-world case studies is necessary. Field research and partnerships with business partners may yield insightful information and aid in the model’s empirical, evidence-based refinement. We demonstrated the practical value of our concept by applying it to three different cases. Our approach’s effectiveness was validated in each case by the notable decrease in variance that was maintained at a reasonable cost. However, the results also pointed out several areas that needed work. These point to the need for more accurate cost estimation methods as well as more optimization of the allocation process inside the model.

9. Conclusions

A comprehensive investigation was conducted for this research project with the goal of creating and evaluating a three-stage randomized response model. The model attracted a great deal of scholarly attention for its novel features and tactical suggestions. It was painstakingly created to answer a particular difficulty. The main goal was to investigate and evaluate the efficacy of the methods used in this model. With fuzzy cost considerations, the project’s main goal was to solve the optimal allocation problem that arises in a three-stage stratified random sampling system. Fuzzy nonlinear programming techniques were the main emphasis of the resilient solution approach that was used to address this challenging challenge. The main problem was figuring out how to allocate resources inside this complex system, which was skillfully solved by applying the Lagrange multipliers approach, a powerful optimization technique. This comprehensive study’s results provided an important realization: the suggested approach routinely beats a recently created estimate. This important finding emphasizes the usefulness and effectiveness of the suggested approach, emphasizing its advantage over competing tactics. The outcomes provide insight into the model’s functionality and show how it might improve decision-making in situations where three-stage randomized response models are used. The present investigation not only confirms the effectiveness of the suggested methodologies but also underscores their significance in enhancing the precision and dependability of data gathering and examination in intricate sampling contexts.

Author Contributions

Conceptualization and data curation: Z.G.K. Formal analysis andinvestigation: Z.A.G. Supervision, validation, and writing—review and editing: T.A.T. Visualization, writing—original draft preparation: A.S. Methodology, Writing—review & editing and Visualization: F.D. Software, Validation, Resources and Project administration and funding acquisition: O.A. All authors have read and agreed to the published version of the manuscript.

Funding

The research was supported by JKST&IC/SRE/921-25 under G.O.No: 43—JK(ST) of 2023.

Data Availability Statement

No new data were created.

Acknowledgments

The research was supported by JKST&IC/SRE/921-25 under G.O.No: 43—JK(ST) of 2023.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Warner, S.L. Randomized response: A survey technique for eliminating evasive answer bias. J. Am. Stat. Assoc. 1965, 60, 63–69. [Google Scholar] [CrossRef] [PubMed]

- Ganie, A.H.; Tarray, T.A.; Ganie, Z.A.; Koka, N.A.; Alahmadi, R.A. An innovative fuzzy logic for decision-making that employs the randomized response technique. Pak. J. Stat. 2024, 40, 1–16. [Google Scholar]

- Baig, A.; Masood, S.; Tarray, T.A. Improved class of difference-type estimators for population median in survey sampling. Commun. Stat.-Theory Methods 2020, 49, 5778–5793. [Google Scholar] [CrossRef]

- Kuk, A.Y.C. Asking sensitive questions indirectly. Biomerika 1990, 77, 436–438. [Google Scholar] [CrossRef]

- Zadeh, L.A. Fuzzy sets. Inf. Comp. 1995, 8, 338–353. [Google Scholar]

- Kim, J.M.; Warde, W.D. A Mixed Randomized Response Model. J. Stat. Plan. Inference 2005, 133, 211–221. [Google Scholar] [CrossRef]

- Clark, D.A.; Beck, A.T. Personality factors in dysphoria: A psychometric refinement of Beck’s Sociotropy-Autonomy Scale. J. Psychopathol. Behav. Assess. 1986, 13, 369–388. [Google Scholar] [CrossRef]

- Waldman, I.D.; Robinson, B.F.; Rowe, D.C. A logistic regression based extension of the TDT for continuous and categorical traits. Ann. Hum. Genet. 1999, 63, 329–340. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.M.; Elam, M.E. A stratified unrelated question randomized response model. Stat. Pap. 2007, 48, 215–233. [Google Scholar] [CrossRef]

- Singh, S.; Horn, S.; Singh, R.; Mangat, N.S. On the use of modified randomization device for estimating the prevalence of a sensitive attribute. Stat. Transit. 2003, 6, 515–522. [Google Scholar]

- Ateeq, K.; Altaf, S.S.; Aslam, M. Modeling and analysis of recovery time for the COVID-19 patients: A Bayesian approach. Arab. J. Basic Appl. Sci. 2023, 30, 1–12. [Google Scholar] [CrossRef]

- Geurts, M.D. Using a randomized response research design to eliminate non-response and response biases in business research. J. Acad. Mark. Sci. 1980, 8, 83–91. [Google Scholar] [CrossRef]

- Mirhosseini, Z.; Pakdel, P.; Ebrahimi, M. Women, Sexual Harassment, and Coping Strategies: A Descriptive Analysis. Iran. Rehabil. J. 2023, 21, 117–126. [Google Scholar] [CrossRef]

- Kassa, E.T. Factors influencing taxpayers to engage in tax evasion: Evidence from Woldia City administration micro, small, and large enterprise taxpayers. J. Innov. Entrep. 2021, 10, 8. [Google Scholar] [CrossRef]

- Greenberg, B.G.; Abul-Ela AL, A.; Simmons, W.R.; Horvitz, D.G. The unrelated question randomized response model: Theoretical framework. J. Am. Stat. Assoc. 1969, 64, 520–539. [Google Scholar] [CrossRef]

- Moors, J.J.A. Optimization of the unrelated question randomized response model. J. Am. Stat. Assoc. 1971, 66, 627–629. [Google Scholar] [CrossRef]

- Christofides, T.C. A generalized randomized response technique. Metrika 2003, 57, 195–200. [Google Scholar] [CrossRef]

- Huang, K.C. A survey technique for estimating the proportion and sensitivity in a dichotomous finite population. Stat. Neerl. 2004, 58, 75–82. [Google Scholar] [CrossRef]

- Mangat, N.S.; Singh, R. An alternative randomized response procedure. Biometrika 1990, 77, 439–442. [Google Scholar] [CrossRef]

- Hsieh, S.H.; Lee, S.M.; Li, C.S. A two-stage multilevel randomized response technique with proportional odds models and missing covariates. Sociol. Methods Res. 2022, 51, 439–461. [Google Scholar] [CrossRef]

- Halim, A.; Arshad, I.A.; Haroon, S.; Shair, W. Effect of Misclassification on Test of Independence Using Different Randomized Response Techniques. J. Policy Res. 2022, 8, 427–438. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).