Abstract

In this paper, we develop an effective iterative algorithm to solve a generalized Sylvester tensor equation over quaternions, which includes several well-studied matrix/tensor equations as special cases. We show that, in the absence of round-off errors, the algorithm converges within a finite number of iterations for any initial tensor. Moreover, we show that the unique minimal Frobenius norm solution can be obtained by selecting specific types of initial tensors. Numerical examples are presented to illustrate the practicality and validity of the proposed algorithm, including its application to three-dimensional microscopic heat transport and color video restoration problems.

1. Introduction

An order N tensor over a field is a multidimensional array with entries in , where N is a positive integer [1,2]. The set of all such order N tensors is denoted by . Over the past few decades, tensors have been studied extensively, driven by their diverse applications in fields such as physics, computer vision, and data mining (see, e.g., [1,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23]).

In this paper, we examine the conditions under which certain tensor equations over quaternions have solutions. This is motivated by the recent research on tensor equations as well as a long research history of solving matrix equations, which are briefly outlined as follows. It is well known that the following Sylvester matrix equation

and its generalized forms have been widely investigated and found numerous applications in many areas.

During the past few decades, many methods have been developed for solving Sylvester-type matrix equations over the quaternion algebra. For example, Kyrchei [24] provided explicit determinantal representation formulas for the solutions to Equation (1). Heyouni et al. [25] presented the SGl-CMRH method and a preconditioned framework of this method to solve matrix Equation (1) when . Zhang [26] investigated the general system of generalized Sylvester quaternion matrix equations. Ahmadi-Asl and Beik [27,28,29] developed efficient iterative algorithms for solving various quaternion matrix equations. Song et al. [30] investigated the general solution to a system of quaternion matrix equations by Cramer’s rule. Wang et al. [31] investigated the solvability of a system of constrained two-sided coupled generalized Sylvester quaternion matrix equations. Zhang et al. [32] derived special least-squares solutions of the quaternion matrix equation AXB + CXD = E. Meanwhile, Huang and Ma [33] applied the modified conjugate gradient method to the generalized coupled Sylvester conjugate matrix equations.

Quaternions provide more versatility and flexibility than real and complex numbers, especially when dealing with multidimensional problems. This unique property has attracted growing interest among scholars, leading to numerous valuable achievements in quaternion-related research (see, e.g., [34,35,36,37,38,39,40]). The tensor equation is a natural extension of the matrix equation.

In this paper, we examine the following generalized Sylvester tensor equation over :

where the tensors , , and are given, and the tensors , are unknown. The n-mode product of a tensor with a matrix is defined as

Observe that the n-mode product can also be represented using unfolded quaternion tensors:

where is the mode-n unfolding of [1]. We address the following problems related to (2):

Problem 1.1: Given the tensor , and the matrices , find the tensors such that

Problem 1.2: Let denote the solution set of Problem 1.1. For given tensors , find the tensors and such that

It is worth emphasizing that the tensor Equation (2) includes several well-studied matrix/tensor equations as special cases. For example, if and in (2) are order 2 tensors, i.e., matrices, then Equation (2) can be reduced to the following extended Sylvester matrix equation

In the case of , Equation (2) becomes the following equation

which has been the subject of extensive research in recent years. For instance, Saberi-Movahed et al. [41,42] investigated the SGMRES-BTF method and SGCRO-BTF method to solve Equation (3) over . Wang et al. [43] proposed the conjugate gradient least-squares method to solve Equation (3) over . Zhang and Wang [44] introduced the tensor formulations of the bi-conjugate gradient (BiCG-BTF) and bi-conjugate residual (BiCR-BTF) methods for solving tensor Equation (3) in the real number field . Chen and Lu [45] explored a projection method using a Kronecker product preconditioner to solve Equation (3) over . Karimi and Dehghan [46] introduced the tensor formulation of the global least-squares method for approximating solutions to (3). Additionally, Najafi-Kalyani et al. [47] developed several iterative algorithms based on the global Hessenberg process in their tensor forms to address Equation (3). Consider Equation (3) over and , that is,

It has been shown that Equation (4) plays an important role in finite difference [48], thermal radiation [11], information retrieval [15], finite element [49], and microscopic heat transport [18] problems. Therefore, our study of Equation (2) will provide a unified treatment for these matrix/tensor equations.
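As a minimal sketch of the n-mode product underlying Equation (2), the following pure-Python code implements the standard real-valued convention of Kolda and Bader [1] and checks that, for an order 2 tensor, multiplying along both modes collapses to an ordinary two-sided matrix product. The function names, the dictionary-based tensor storage, and the zero-based mode index are illustrative assumptions, not the authors' implementation. The quaternion case additionally requires care with the order of multiplication, which this real-valued sketch does not address.

```python
from itertools import product


def tensor_from_matrix(M):
    """Store a matrix as an order-2 tensor: dict mapping index tuples to entries."""
    shape = (len(M), len(M[0]))
    entries = {(i, j): M[i][j] for i in range(shape[0]) for j in range(shape[1])}
    return entries, shape


def mode_n_product(X, shape, A, n):
    """Real n-mode product Y = X x_n A (hypothetical helper, zero-based mode n).

    X     : dict mapping index tuples to numbers
    shape : tuple with the dimensions of X
    A     : matrix (list of rows) with len(A[0]) == shape[n]
    """
    J = len(A)
    new_shape = shape[:n] + (J,) + shape[n + 1:]
    Y = {}
    for idx in product(*(range(s) for s in new_shape)):
        j = idx[n]
        # Sum over the n-th index of X, weighted by row j of A.
        Y[idx] = sum(
            A[j][i] * X[idx[:n] + (i,) + idx[n + 1:]] for i in range(shape[n])
        )
    return Y, new_shape


# Order-2 check: (X x_0 A) x_1 B has the same entries as A X B^T.
X, shape = tensor_from_matrix([[1, 2], [3, 4]])
A = [[1, 0], [1, 1]]
B = [[2, 1], [0, 1]]
Y, shape_Y = mode_n_product(X, shape, A, 0)
Y, shape_Y = mode_n_product(Y, shape_Y, B, 1)
```

For these inputs, `Y` holds the entries of the matrix product of A, X, and the transpose of B, which is the sense in which a two-mode tensor equation reduces to a matrix equation in the real case.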

The remainder of this paper is structured as follows. In Section 2, we review key definitions and notations and prove several lemmas related to transforming Equation (2). In Section 3, we develop the BiCG iterative algorithm for solving the quaternion tensor Equation (2) and prove that our algorithm is correct. We also demonstrate that the minimal Frobenius norm solution can be achieved by selecting specific types of initial tensors. Section 4 provides numerical examples to illustrate the effectiveness and applications of the proposed algorithm. Finally, we summarize our contributions in Section 5.

2. Preliminaries

First, we review some notations and definitions. For two complex matrices and , the notation represents the Kronecker product of U and V.
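To make the role of the Kronecker product concrete, here is a small pure-Python sketch that builds it for real matrices and checks the classical identity vec(AXB) = (B^T ⊗ A) vec(X), where vec stacks the columns of a matrix. The helper names are hypothetical and the example is restricted to real entries. Over the quaternions this identity does not hold in general because multiplication is not commutative, so it should be read only as an illustration of the real case.

```python
def kron(U, V):
    """Kronecker product of two matrices given as lists of rows."""
    return [[u * v for u in urow for v in vrow] for urow in U for vrow in V]


def matmul(A, B):
    """Plain matrix product of two lists-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def vec(X):
    """Stack the columns of X into one long list (column-major order)."""
    return [X[i][j] for j in range(len(X[0])) for i in range(len(X))]


def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


A = [[1, 2], [3, 4]]
X = [[0, 1], [1, 0]]
B = [[1, 1], [0, 2]]

lhs = vec(matmul(matmul(A, X), B))          # vec(A X B)
rhs = matvec(kron(transpose(B), A), vec(X))  # (B^T kron A) vec(X)
```

Both `lhs` and `rhs` evaluate to the same list, so a two-sided matrix equation can be rewritten as one linear system whose coefficient matrix is a Kronecker product.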

The operator is defined as: for a matrix A and a tensor ,

respectively, where is the kth column of A and is the mode-1 unfolding of the tensor . The inner product of two tensors is defined as follows:

where represents the quaternion conjugate of . If , then we say that tensors and are orthogonal. The Frobenius norm of tensor is of the form:

For any , it is well known that can be uniquely represented as , where . Next, we define n-mode operators for .
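The component representation of a quaternion can be sketched in code as follows. This is an illustrative example, assuming a quaternion is stored as a tuple of its four real components (real part first, followed by the coefficients of i, j, and k); the function names are hypothetical. It shows the Hamilton product, the conjugate, and the fact that multiplication is not commutative.

```python
import math


def qmul(p, q):
    """Hamilton product of quaternions stored as (a0, a1, a2, a3)."""
    a0, a1, a2, a3 = p
    b0, b1, b2, b3 = q
    return (
        a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
        a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
        a0 * b2 - a1 * b3 + a2 * b0 + a3 * b1,
        a0 * b3 + a1 * b2 - a2 * b1 + a3 * b0,
    )


def qconj(p):
    """Quaternion conjugate: negate the three imaginary components."""
    return (p[0], -p[1], -p[2], -p[3])


def qnorm(p):
    """Modulus of a quaternion, i.e. the Euclidean norm of its components."""
    return math.sqrt(sum(c * c for c in p))


I = (0, 1, 0, 0)
J = (0, 0, 1, 0)
K = (0, 0, 0, 1)

ij = qmul(I, J)  # equals K
ji = qmul(J, I)  # equals -K, so the product is not commutative
```

Multiplying a quaternion by its conjugate gives a real number equal to the squared modulus, which is why the conjugate appears in the inner product of quaternion tensors.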

Let , where . For , we define

Next, replacing in the above equations by ’s, we define the following notations:

The following lemma establishes that the quaternion tensor Equation (2) can be reduced to a system of four real tensor equations.

Lemma 1.

In Equation (2), we assume that , and . Thus, the quaternion Sylvester tensor Equation (2) can be expressed as the following system of real tensor equations

where , are defined by (5) and (6). Furthermore, the system of real tensor Equation (7) is equivalent to the following linear system

where

and denotes the identity matrix of size n.

Proof of Lemma 1.

We apply the definition of n-mode product of the quaternion tensor for (2).

By the definitions of and , Equation (7) holds. To show (8), we apply the operator “vec” to and , that is,

Similarly, we have the following results for the rest of ’s and ’s:

Combining the above equations, we obtain the system (8). □

Lemma 2

([8,50]). Assume that , , and the linear matrix equation has a solution . Then, is the unique solution with the minimum norm for the equation .

From Lemmas 1 and 2, it is straightforward to observe that the uniqueness of the solution to Equation (2) can be characterized as follows:

Theorem 1.

Given fixed matrices , we define the following linear operators

Using the property in [10], the following lemma can be easily proven.

Lemma 3.

Let . Then

where

Clearly, defined above is a linear mapping. The following lemma provides the uniqueness of the dual mapping for these kinds of linear mappings.

Lemma 4

([43]). Let be a linear mapping from tensor space to tensor space . For any tensors and , there exists a unique linear mapping from tensor space to tensor space such that

Finally, we use linear operators and to describe the inner products involving and , which we will use in the next sections.

3. An Iterative Algorithm for Solving the Problems 1.1 and 1.2

The purpose of this section is to propose an iterative algorithm for obtaining the solution of the Sylvester tensor Equation (2). It is well known that the classical bi-conjugate gradient (BiCG) methods for solving nonsymmetric linear systems of equations are feasible and efficient (see, e.g., [44,51,52,53,54]). We extend the BiCG method using the tensor format (BTF) for solving Equation (2) and discuss its convergence. By Lemma 1, the tensor Equations (2) and (8) have the same solution. However, the size of in Equation (8) is usually so large that solving (8) directly is expensive in both computation time and memory. Beik et al. [55] demonstrated that algorithms using tensor formats generally outperform their classical counterparts in terms of efficiency. Motivated by these observations, we propose the following BiCG algorithm, formulated in tensor format, for solving tensor Equation (2):

Note that and are defined by (5), (6) and (11), (12), respectively. Next, we discuss some bi-orthogonality properties of Algorithm 1.

Algorithm 1 BiCG-BTF method for solving Equation (2).

Input: , . Output: The norm and the solutions , . Initialization: ;
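For orientation, the classical BiCG iteration that Algorithm 1 extends can be sketched for an ordinary real linear system A x = b. This is the textbook matrix-vector method, not the tensor-format Algorithm 1 of this paper; the function name, the choice of the shadow residual equal to the initial residual, and the stopping tolerance are assumptions made for illustration.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def bicg(A, b, x0=None, tol=1e-10, max_iter=100):
    """Classical BiCG for a real square system A x = b (illustrative sketch)."""
    x = list(x0) if x0 is not None else [0.0] * len(b)
    At = transpose(A)
    r = [bi - yi for bi, yi in zip(b, matvec(A, x))]  # residual
    r_s = list(r)                                     # shadow residual (assumed choice)
    p, p_s = list(r), list(r_s)                       # search directions
    rho = dot(r_s, r)
    for k in range(max_iter):
        if dot(r, r) ** 0.5 <= tol:
            return x, k
        q = matvec(A, p)
        q_s = matvec(At, p_s)
        denom = dot(p_s, q)
        if denom == 0 or rho == 0:
            raise ArithmeticError("BiCG breakdown")
        alpha = rho / denom
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, q)]
        r_s = [ri - alpha * qi for ri, qi in zip(r_s, q_s)]
        rho_new = dot(r_s, r)
        beta = rho_new / rho
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        p_s = [ri + beta * pi for ri, pi in zip(r_s, p_s)]
        rho = rho_new
    return x, max_iter


# Nonsymmetric 3 x 3 test system whose exact solution is (1, 2, 3).
A = [[4.0, 1.0, 0.0], [2.0, 5.0, 1.0], [0.0, 1.0, 3.0]]
b = [6.0, 15.0, 11.0]
x, iters = bicg(A, b)
```

The residuals and shadow residuals generated this way are mutually orthogonal, which is the matrix analogue of the bi-orthogonality properties established for Algorithm 1. In the tensor format, the matrix-vector products are replaced by the linear operators built from n-mode products, so the large Kronecker-structured matrix never has to be formed.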

Theorem 2.

Assume that iterative sequences , , , and are generated by Algorithm 1. Thus, we obtain

Proof of Theorem 2.

We proceed by mathematical induction on k. Let us consider first.

When , the conclusion holds, as the following calculations show:

and

This establishes the base case. Now, assume that (13) and (14) hold for (). Then,

and

The equality (15) holds for all . Next, we will prove that Equations (13) and (14) hold for all .

and

Similarly, Equations (13) and (14) also hold for the case . Therefore, (13) and (14) are satisfied for . □

Corollary 1.

Assume the conditions in Theorem 2 are satisfied. Then,

Proof of Corollary 1.

From Algorithm 1 and Theorem 2, we have

and

□

Theorem 3.

Let tensor sequences , be generated by Algorithm 1. If Algorithm 1 does not break down, then the tensor sequences

converge to the solution of Equation (2) within a finite number of iteration steps in the absence of round-off errors.

Proof of Theorem 3.

We will prove that there exists a such that . By contradiction, assume that for all , and thus we can compute . Suppose that is a dependent sequence, then there exist real numbers , not all zero, such that

for . Then

which implies . This is a contradiction, since we cannot calculate in this case. Therefore, there must exist a such that , that is, the exact solution to the tensor Equation (2) can be determined by Algorithm 1 within a finite number of iterations, assuming no round-off errors. □

In the following theorem, we show that if we choose special kinds of initial tensor, then Algorithm 1 can yield the unique minimal Frobenius norm solution of the tensor Equation (2).

Theorem 4.

Proof of Theorem 4.

By selecting the initial tensors as specified in (18), it can be easily verified that the tensors and obtained from Algorithm 1 will have the following form:

where tensors . Now, we show that , is the unique minimal Frobenius norm solution of the tensor Equation (2). Let

where and are defined by (9). According to Theorem 1, we conclude that the solution , produced by Algorithm 1 is the unique minimal Frobenius norm solution of the tensor Equation (2). □

Now, we solve Problem 1.2. If the tensor Equation (2) is consistent, then the solution pair set for Problem 1.1 is non-empty. For given tensors , we have

Let , and , then solving the tensor nearness Problem 1.2 is equivalent to first finding the solution with the minimal Frobenius norm for the tensor equation

By applying Algorithm 1 and setting the initial tensors as , , where , where are arbitrary tensors (in particular, we take ), we can derive the unique solution with the minimal Frobenius norm of Equation (19). Once the above tensors are obtained, the unique solution of Problem 1.2 can be determined. In this situation, and can be represented as , .
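The reduction used for Problem 1.2 (shift by the given tensors, find the minimal norm solution of the shifted equation, then shift back) can be illustrated on the smallest possible case. The sketch below assumes a single real linear equation a · x = b with vector unknown x, for which the minimal Euclidean norm solution is known in closed form; all names are hypothetical and the example only mirrors the structure of the argument, not the tensor computation.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def min_norm_solution(a, c):
    """Minimal Euclidean norm solution y of the single equation a . y = c."""
    s = dot(a, a)
    return [ai * c / s for ai in a]


def nearest_solution(a, b, x0):
    """Solution of a . x = b that is closest to a given point x0."""
    c = b - dot(a, x0)            # right-hand side of the shifted equation
    y = min_norm_solution(a, c)   # minimal norm solution of a . y = c
    return [xi + yi for xi, yi in zip(x0, y)]


def dist2(u, v):
    return sum((p - q) ** 2 for p, q in zip(u, v))


a = [1.0, 2.0, 2.0]
b = 9.0
x0 = [1.0, 1.0, 1.0]

x_near = nearest_solution(a, b, x0)
x_other = [9.0, 0.0, 0.0]  # another exact solution of a . x = b
```

Here `x_near` satisfies the equation and is closer to `x0` than the alternative solution `x_other`, in the same way that the minimal Frobenius norm solution of Equation (19) yields the solution of Problem 1.2 after shifting back.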

4. Numerical Examples

In this section, we give some numerical examples to support the efficiency and applications of Algorithm 1. The codes are written in Matlab R2018a and run on a 2.3 GHz Intel(R) Core(TM) i5 processor with 8 GB of memory. Moreover, we implemented all the operations based on the tensor toolbox (version 3.2.1) proposed by Bader and Kolda [1]. For all of the examples, the iterations begin with the initial values in Algorithm 1 and stop when Res or when the number of iteration steps exceeds 2000. Table 1 describes some notations that appear in the following examples.

Table 1.

Notations used in the numerical examples.

Example 1.

We consider the tensor Equation (2) under the following conditions:

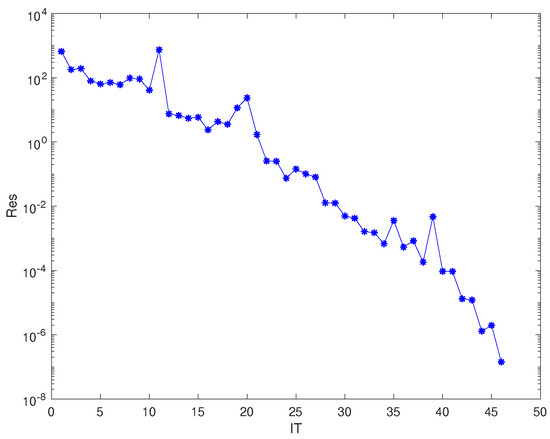

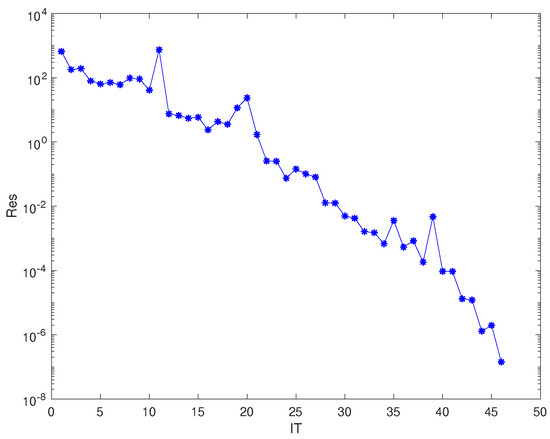

Applying Algorithm 1, the IT is 46, the CPU time is 3.9496 s, and the Res is . Figure 1 illustrates that Algorithm 1 is feasible.

Figure 1.

Convergence history of Example 1.

Example 2

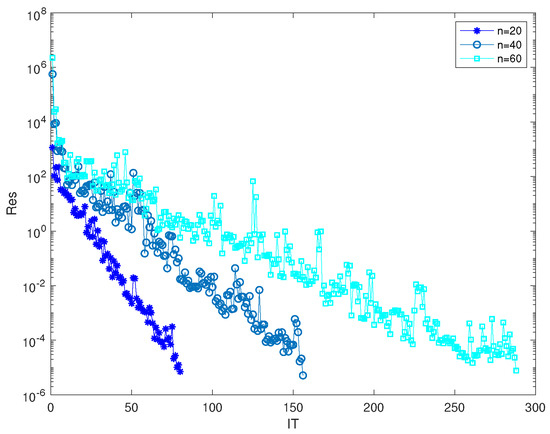

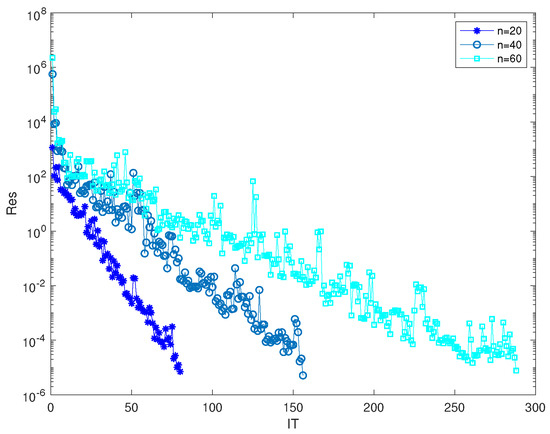

(Test matrices from [28]). We examine the quaternion tensor Equation (2) under the condition that

where

Choosing the initial tensor , we illustrate the convergence curves of Algorithm 1 for various values of n in Figure 2. In Table 2, for and , we provide the CPU time incurred, the residual norms after a finite number of steps, and the relative errors of the approximate solutions obtained using Algorithm 1.

Figure 2.

Convergence history of Example 2.

Table 2.

Numerical results for Example 2.

Example 3.

We consider the solution of the following convection–diffusion equation over the quaternion algebra [56]

Based on a standard finite difference discretization on a uniform grid for the diffusion term and a second-order convergent scheme (Fromm’s scheme) for the convection term with the mesh-size , we solve the quaternion tensor Equation (3) with

where

and . The right-hand side tensor is constructed so that the exact solution to Equation (3) is .

We consider two cases in order to compare Algorithm 1 with the CGLS algorithm in [43]. In case I, we choose different and to obtain the results. In Table 3, we set

to obtain with the same real part and imaginary part. In Table 4, we set

to obtain with different real parts and imaginary parts.

Table 3.

CPU time (IT) for Example 3 with parameter setup in (20).

Table 4.

CPU time (IT) for Example 3 with parameter setup in (21).

In case II, we set and apply Algorithm 1 and the CGLS algorithm in [43] with for grid . The relative errors of the approximate solutions computed by these methods are shown in Table 5.

Table 5.

The relative errors of the solution (IT) for Example 3.

The previous results show that Algorithm 1 has a faster convergence rate than the CGLS algorithm in [43] as p increases.

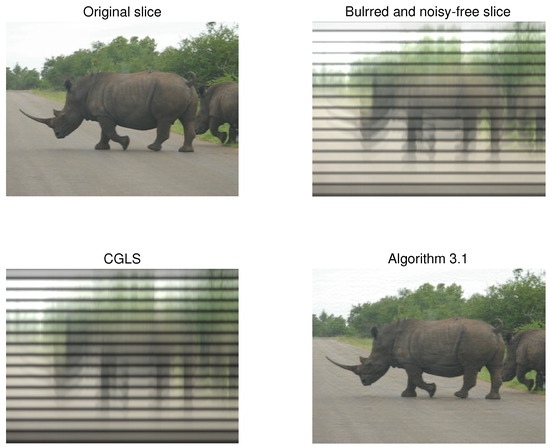

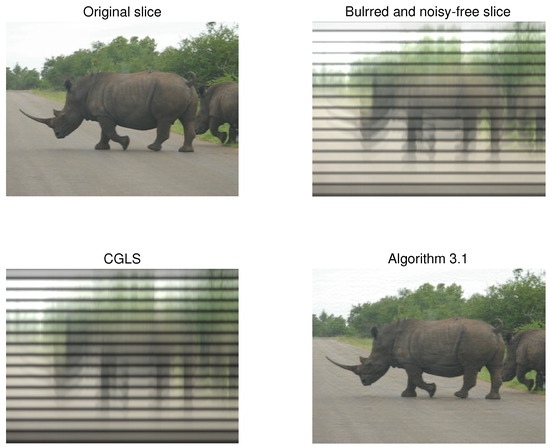

Example 4.

We compare the performance of Algorithm 1 with that of the CGLS algorithm in restoring a color video comprising a sequence of RGB images (slices). The video, titled ‘Rhinos,’ originates from Matlab and is saved in AVI format. Each frontal slice of this color video is represented by a pure quaternion matrix with dimensions of pixels. For , we consider to represent the original color video, A the blurring matrix, and a noise tensor. When , is referred to as the blurred and noise-free color video. In this scenario, we select a blurring matrix , where and are Toeplitz matrices with entries defined as follows:

We denote as the resulting restored color video. The performance of the algorithm is evaluated using the peak signal-to-noise ratio (PSNR), which is measured in decibels (dB):

where d denotes the maximum possible pixel value of the image. represents the relative error, which is defined as

In this case, we use and set the variance . Table 6 shows the peak signal-to-noise ratio (PSNR) and the relative error (RE) for Algorithm 1 and the CGLS algorithm with various parameters. As indicated, the PSNR and the relative error of our algorithm are significantly better than those of CGLS. For the case where and with slice No. 7 of the color video, Figure 3 shows the original image, the blurred image, and the images restored by CGLS and Algorithm 1. This figure demonstrates that our algorithm can effectively restore blurred and noise-free color video with high quality.
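The two quality measures used in this example can be computed as in the following sketch. It assumes the commonly used definitions, namely PSNR = 10 log10(d² N / ‖X̂ − X‖²) with N the number of entries, and RE = ‖X̂ − X‖ / ‖X‖ in the Frobenius norm; the exact normalization used by the authors may differ. Pixel data are assumed to be flattened into plain lists of real numbers (for quaternion data, the components would be flattened first), and the function names are hypothetical.

```python
import math


def frobenius_norm(values):
    """Frobenius norm of an array given as a flat list of real entries."""
    return math.sqrt(sum(v * v for v in values))


def psnr(original, restored, d=255.0):
    """Peak signal-to-noise ratio in dB; d is the maximum possible pixel value."""
    n = len(original)
    err2 = sum((a - b) ** 2 for a, b in zip(original, restored))
    if err2 == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(d * d * n / err2)


def relative_error(original, restored):
    """Relative error of the restored data with respect to the original."""
    diff = [a - b for a, b in zip(original, restored)]
    return frobenius_norm(diff) / frobenius_norm(original)


original = [0.0, 255.0]
restored = [0.0, 254.0]
quality_db = psnr(original, restored)
rel_err = relative_error(original, restored)
```

A higher PSNR and a lower relative error both indicate a restoration closer to the original video.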

Table 6.

The numerical results for Example 4.

Figure 3.

The restored color video for the case with and , using slice No. 7.

5. Conclusions

The main goal of this paper is to solve the generalized quaternion tensor Equation (2). We develop a BiCG iterative algorithm in tensor format to efficiently solve Equation (2), and we prove the convergence of the proposed method. Moreover, we demonstrate that the solution with the minimal Frobenius norm can be obtained by choosing specific types of initial tensors. We provide several numerical examples to illustrate the effectiveness of our algorithm. Furthermore, our algorithm is successfully applied to the restoration of color videos. This contribution advances the current understanding of quaternion tensor equations by introducing a practical iterative approach.

Author Contributions

Conceptualization, M.X. and Q.-W.W.; methodology, M.X.; software, M.X.; validation, M.X., Q.-W.W. and Y.Z.; formal analysis, M.X.; investigation, M.X.; resources, Q.-W.W.; data curation, M.X.; writing—original draft preparation, M.X.; writing—review and editing, Y.Z.; visualization, M.X.; supervision, Q.-W.W.; project administration, M.X., Q.-W.W. and Y.Z.; funding acquisition, M.X., Q.-W.W. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

The first author is supported by the Natural Science Foundation of China under Grant No. 12301028 and Startup Foundation for Young Teachers of Shanghai Ocean University. The second author is supported by the Natural Science Foundation of China under Grant No. 12371023. The third author is supported by the Canada NSERC under Grant No. RGPIN-2020-06746.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors would like to thank the editor and reviewers for their valuable suggestions and comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kolda, T.G.; Bader, B.W. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500.

- Qi, L.; Luo, Z. Tensor Analysis: Spectral Theory and Special Tensors; SIAM: Philadelphia, PA, USA, 2017.

- Beik, F.P.; Jbilou, K.; Najafi-Kalyani, M.; Reichel, L. Golub–Kahan bidiagonalization for ill-conditioned tensor equations with applications. Numer. Algorithms 2020, 84, 1535–1563.

- Duan, X.F.; Zhang, Y.S.; Wang, Q.W. An efficient iterative method for solving a class of constrained tensor least squares problem. Appl. Numer. Math. 2024, 196, 104–117.

- Guan, Y.; Chu, D. Numerical computation for orthogonal low-rank approximation of tensors. SIAM J. Matrix Anal. Appl. 2019, 40, 1047–1065.

- Guan, Y.; Chu, M.T.; Chu, D. Convergence analysis of an SVD-based algorithm for the best rank-1 tensor approximation. Linear Algebra Appl. 2018, 555, 53–69.

- Guan, Y.; Chu, M.T.; Chu, D. SVD-based algorithms for the best rank-1 approximation of a symmetric tensor. SIAM J. Matrix Anal. 2018, 39, 1095–1115.

- Hu, J.; Ke, Y.; Ma, C. Efficient iterative method for generalized Sylvester quaternion tensor equation. Comput. Appl. Math. 2023, 42, 237.

- Ke, Y. Finite iterative algorithm for the complex generalized Sylvester tensor equations. J. Appl. Anal. Comput. 2020, 10, 972–985.

- Kolda, T.G. Multilinear Operators for Higher-Order Decompositions; Sandia National Laboratory (SNL): Albuquerque, NM, USA; Livermore, CA, USA, 2006.

- Li, B.W.; Tian, S.; Sun, Y.S.; Hu, Z.M. Schur-decomposition for 3D matrix equations and its application in solving radiative discrete ordinates equations discretized by Chebyshev collocation spectral method. J. Comput. Phys. 2010, 229, 1198–1212.

- Li, T.; Wang, Q.-W.; Duan, X.-F. Numerical algorithms for solving discrete Lyapunov tensor equation. J. Comput. Appl. Math. 2020, 370, 112676.

- Li, T.; Wang, Q.-W.; Zhang, X.-F. Gradient based iterative methods for solving symmetric tensor equations. Numer. Linear Algebra Appl. 2022, 29, e2414.

- Li, T.; Wang, Q.-W.; Zhang, X.-F. A Modified conjugate residual method and nearest kronecker product preconditioner for the generalized coupled Sylvester tensor equations. Mathematics 2022, 10, 1730.

- Li, X.; Ng, M.K. Solving sparse non-negative tensor equations: Algorithms and applications. Front. Math. China 2015, 10, 649–680.

- Liang, Y.; Silva, S.D.; Zhang, Y. The tensor rank problem over the quaternions. Linear Algebra Appl. 2021, 620, 37–60.

- Lv, C.; Ma, C. A modified CG algorithm for solving generalized coupled Sylvester tensor equations. Appl. Math. Comput. 2020, 365, 124699.

- Malek, A.; Momeni-Masuleh, S.H. A mixed collocation–finite difference method for 3D microscopic heat transport problems. J. Comput. Appl. Math. 2008, 217, 137–147.

- Qi, L. Eigenvalues of a real supersymmetric tensor. J. Symb. Comput. 2005, 40, 1302–1324.

- Qi, L. Symmetric nonnegative tensors and copositive tensors. Linear Algebra Appl. 2013, 439, 228–238.

- Qi, L.; Chen, H.; Chen, Y. Tensor Eigenvalues and Their Applications; Springer: Berlin/Heidelberg, Germany, 2018.

- Zhang, X.-F.; Li, T.; Ou, Y.-G. Iterative solutions of generalized Sylvester quaternion tensor equations. Linear Multilinear Algebra 2024, 72, 1259–1278.

- Zhang, X.-F.; Wang, Q.-W. On RGI Algorithms for Solving Sylvester Tensor Equations. Taiwan. J. Math. 2022, 26, 501–519.

- Kyrchei, I. Cramer’s rules for Sylvester quaternion matrix equation and its special cases. Adv. Appl. Clifford Algebras 2018, 28, 1–26.

- Heyouni, M.; Saberi-Movahed, F.; Tajaddini, A. On global Hessenberg based methods for solving Sylvester matrix equations. Comput. Math. Appl. 2019, 77, 77–92.

- Zhang, X. A system of generalized Sylvester quaternion matrix equations and its applications. Appl. Math. Comput. 2016, 273, 74–81.

- Beik, F.; Ahmadi-Asl, S. An iterative algorithm for η-(anti)-Hermitian least-squares solutions of quaternion matrix equations. Electron. J. Linear Algebra 2015, 30, 372–401.

- Ahmadi-Asl, S.; Beik, F.P.A. An efficient iterative algorithm for quaternionic least-squares problems over the generalized η-(anti-)bi-Hermitian matrices. Linear Multilinear Algebra 2017, 65, 1743–1769.

- Ahmadi-Asl, S.; Beik, F.P.A. Iterative algorithms for least-squares solutions of a quaternion matrix equation. J. Appl. Math. Comput. 2017, 53, 95–127.

- Song, G.; Wang, Q.-W.; Yu, S. Cramer’s rule for a system of quaternion matrix equations with applications. Appl. Math. Comput. 2018, 336, 490–499.

- Wang, Q.-W.; He, Z.-H.; Zhang, Y. Constrained two-sided coupled Sylvester-type quaternion matrix equations. Automatica 2019, 101, 207–213.

- Zhang, F.; Mu, W.; Li, Y.; Zhao, J. Special least squares solutions of the quaternion matrix equation AXB + CXD = E. Comput. Math. Appl. 2016, 72, 1426–1435.

- Huang, N.; Ma, C.-F. Modified conjugate gradient method for obtaining the minimum-norm solution of the generalized coupled Sylvester-conjugate matrix equations. Appl. Math. Model. 2016, 40, 1260–1275.

- Gao, Z.-H.; Wang, Q.-W.; Xie, L. The (anti-)η-Hermitian solution to a novel system of matrix equations over the split quaternion algebra. Math. Meth. Appl. Sci. 2024, 1–18.

- He, Z.-H.; Wang, X.-X.; Zhao, Y.-F. Eigenvalues of quaternion tensors with applications to color video processing. J. Sci. Comput. 2023, 94, 1.

- Jia, Z.; Wei, M.; Zhao, M.X.; Chen, Y. A new real structure-preserving quaternion QR algorithm. J. Comput. Appl. Math. 2018, 343, 26–48.

- Li, Y.; Wei, M.; Zhang, F.; Zhao, J. Real structure-preserving algorithms of Householder based transformations for quaternion matrices. J. Comput. Appl. Math. 2016, 305, 82–91.

- Mehany, M.S.; Wang, Q.-W.; Liu, L. A System of Sylvester-like quaternion tensor equations with an application. Front. Math. 2024, 19, 749–768.

- Xie, M.; Wang, Q.-W. Reducible solution to a quaternion tensor equation. Front. Math. China 2020, 15, 1047–1070.

- Xie, M.Y.; Wang, Q.W.; He, Z.H.; Saad, M.M. A system of Sylvester-type quaternion matrix equations with ten variables. Acta Math. Sin. (Engl. Ser.) 2022, 38, 1399–1420.

- Saberi-Movahed, F.; Tajaddini, A.; Heyouni, M.; Elbouyahyaoui, L. Some iterative approaches for Sylvester tensor equations, Part I: A tensor format of truncated Loose Simpler GMRES. Appl. Numer. Math. 2022, 172, 428–445.

- Saberi-Movahed, F.; Tajaddini, A.; Heyouni, M.; Elbouyahyaoui, L. Some iterative approaches for Sylvester tensor equations, Part II: A tensor format of Simpler variant of GCRO-based methods. Appl. Numer. Math. 2022, 172, 413–427.

- Wang, Q.-W.; Xu, X.; Duan, X. Least squares solution of the quaternion Sylvester tensor equation. Linear Multilinear Algebra 2021, 69, 104–130.

- Zhang, X.-F.; Wang, Q.-W. Developing iterative algorithms to solve Sylvester tensor equations. Appl. Math. Comput. 2021, 409, 126403.

- Chen, Z.; Lu, L. A projection method and Kronecker product preconditioner for solving Sylvester tensor equations. Sci. China Math. 2012, 55, 1281–1292.

- Karimi, S.; Dehghan, M. Global least squares method based on tensor form to solve linear systems in Kronecker format. Trans. Inst. Measure. Control 2018, 40, 2378–2386.

- Najafi-Kalyani, M.; Beik, F.P.A.; Jbilou, K. On global iterative schemes based on Hessenberg process for (ill-posed) Sylvester tensor equations. J. Comput. Appl. Math. 2020, 373, 112216.

- Bai, Z.-Z.; Golub, G.H.; Ng, M.K. Hermitian and skew-Hermitian splitting methods for non-Hermitian positive definite linear systems. SIAM J. Matrix Anal. Appl. 2003, 24, 603–626.

- Grasedyck, L. Existence and computation of low Kronecker-rank approximations for large linear systems of tensor product structure. Computing 2004, 72, 247–265.

- Peng, Y.; Hu, X.; Zhang, L. An iteration method for the symmetric solutions and the optimal approximation solution of the matrix equation AXB = C. Appl. Math. Comput. 2005, 160, 763–777.

- Bank, R.E.; Chan, T.-F. An analysis of the composite step biconjugate gradient method. Numer. Math. 1993, 66, 295–319.

- Bank, R.E.; Chan, T.-F. A composite step bi-conjugate gradient algorithm for nonsymmetric linear systems. Numer. Algorithms 1994, 7, 1–16.

- Freund, R.W.; Golub, G.H.; Nachtigal, N.M. Iterative solution of linear systems. Acta Numer. 1992, 1, 44.

- Hajarian, M. Developing Bi-CG and Bi-CR methods to solve generalized Sylvester-transpose matrix equations. Int. J. Auto. Comput. 2014, 11, 25–29.

- Beik, F.; Panjeh, F.; Movahed, F.; Ahmadi-Asl, S. On the Krylov subspace methods based on tensor format for positive definite Sylvester tensor equations. Numer. Linear Algebra Appl. 2016, 23, 444–466.

- Ballani, J.; Grasedyck, L. A projection method to solve linear systems in tensor format. Numer. Linear Algebra Appl. 2013, 20, 27–43.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).