Abstract

The main aim of this article is to estimate the direct error of the J iteration method. Direct error estimation of iteration processes has been investigated in several recent papers. We also show that the error in the J iteration process can be controlled. Furthermore, we illustrate the convergence of the J iteration for distinct initial values.

MSC:

47H09; 47H10; 54H25

1. Introduction

Fixed point theory combines analysis, topology, and geometry in a unique way. In particular, it has applications in biology, chemistry, economics, game theory, and physics. Once the existence of a fixed point of a mapping has been established, determining the value of that fixed point is a difficult task, which is why iterative procedures are employed. Iterative algorithms are used to compute approximate solutions of stationary and evolutionary problems associated with differential equations. Many iterative processes have been established, and it is difficult to cover each one. The famous Banach contraction theorem uses Picard’s iterative procedure to approximate a fixed point. Other notable iterative methods can be found in [1,2,3,4,5,6,7,8,9,10,11,12,13,14]. Faster convergent methods can be found in [15,16,17,18,19,20,21,22,23,24,25]. Results on errors, stability, and data dependence of different iteration processes can be found in [26,27,28].

In an iteration process, the “rate of convergence”, “stability”, and “error” all play important roles. According to Rhoades [4], the Mann iterative process converges faster than the Ishikawa iterative process for decreasing functions, whereas the Ishikawa iteration is preferable for increasing functions. In addition, it appears that the Mann iteration process is unaffected by the initial guess. Liu [2] first proposed the Mann iteration process with errors in 1995. Xu [6] later pointed out that Liu’s definition, which depends on the convergence of the error terms, is incompatible with randomness because error terms occur at random. As a result, Xu introduced new types of Mann and Ishikawa iterative processes with random errors. Agarwal [3] showed that, for contraction mappings, the Agarwal iteration process converges at the same rate as the Picard iteration process and faster than the Mann iteration process. For quasi-contractive operators in Banach spaces, Chugh [7] showed that the CR iteration process is equivalent to and faster than the Picard, Mann, Ishikawa, Agarwal, Noor, and SP iterative processes. The authors in [5] demonstrated that, for the class of contraction mappings, the CR iterative process converges faster than the iterative process. The authors showed in [18] that, for the class of Suzuki generalized nonexpansive mappings, the Thakur iteration process converges faster than the Picard, Mann, Ishikawa, Agarwal, Noor, and Abbas iteration processes. Abbas [1] offers numerical examples to illustrate that their iterative process converges faster than existing iterative processes for nonexpansive mappings. In [19], it is shown that the iterative method proposed there converges faster than the iterative procedure in [1]. In [20], another iteration technique, M, is proposed, and its convergence is shown to be better than that of the Agarwal iteration and the iteration in [1].
In [11], a new iterative algorithm, known as the K iterative algorithm, was introduced and shown to converge faster than the previous iterative techniques. The study also demonstrated that the method is T-stable. In [17], the authors devised a novel iterative process termed “” and established its convergence rate and stability. Recently, in [12], a new iterative scheme, namely the “J” iterative algorithm, was developed, and its convergence rate and stability were proved.

The following question arises: Is the direct error estimate of the iterative process in [12] bounded and controllable?

In this article, we estimate the error of the “J” iteration algorithm and show that this estimate for the iteration process in [12] is bounded and controllable. Furthermore, as shown in [4], certain iterative processes converge for increasing functions, while others converge for decreasing functions, so the choice of initial value affects the convergence of these processes. We present a numerical example, with several initial values, to support the analytical finding and to demonstrate that the J iteration process converges faster than the iteration methods it is compared with.

2. Preliminaries

Definition 1

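([15]). If for each $\epsilon \in (0,2]$ there exists $\delta > 0$ such that, for $r, s \in X$ having $\|r\| \leq 1$, $\|s\| \leq 1$ and $\|r - s\| > \epsilon$, we have $\left\|\frac{r+s}{2}\right\| \leq 1 - \delta$, then X is called uniformly convex.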

Definition 2

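([17]). Let $\{t_n\}$ be an arbitrary sequence in M. An iteration process $x_{n+1} = f(F, x_n)$ that converges to a fixed point p is said to be F-stable if, for $\epsilon_n = \|t_{n+1} - f(F, t_n)\|$, $n \geq 0$, we have $\lim_{n \to \infty} \epsilon_n = 0$ if and only if $\lim_{n \to \infty} t_n = p$.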

Definition 3

([10]). Let F and $\tilde{F}$ be contraction maps on M. If, for some $\epsilon > 0$, we have $\|Fx - \tilde{F}x\| \leq \epsilon$ for all $x \in M$, then $\tilde{F}$ is called an approximate contraction for F.

Definition 4

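([10]). Let $\{u_n\}$ and $\{v_n\}$ be two different fixed point iteration methods that approach the unique fixed point p, with $\|u_n - p\| \leq j_n$ and $\|v_n - p\| \leq k_n$ for all $n \geq 0$. If the sequences $\{j_n\}$ and $\{k_n\}$ approach j and k, respectively, and $\lim_{n \to \infty} \frac{\|j_n - j\|}{\|k_n - k\|} = 0$, then $\{u_n\}$ approaches p faster than $\{v_n\}$.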
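As a small numerical illustration of this comparison, the sketch below applies the ratio test to two error-bound sequences. It assumes the usual reading of the definition, in which one method is faster when the ratio of its error bounds to the other's tends to zero. The bounds $0.5^n$ and $0.75^n$ are hypothetical and are not taken from the article.

```python
from typing import Callable, List


def bound_ratios(j: Callable[[int], float],
                 k: Callable[[int], float],
                 n_terms: int) -> List[float]:
    """Ratios j_n / k_n of two positive error-bound sequences."""
    return [j(n) / k(n) for n in range(n_terms)]


# Hypothetical error bounds (illustration only); both tend to 0.
ratios = bound_ratios(lambda n: 0.5 ** n, lambda n: 0.75 ** n, n_terms=60)

# The ratio behaves like (2/3)^n, so it shrinks towards 0 and the
# sequence bounded by 0.5^n counts as the faster one.
print(ratios[0], ratios[10], ratios[-1])
```

If the ratio instead stayed bounded away from zero, this test alone would not rank the two methods.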

3. Estimation of an Error for J Scheme

Throughout this section, we suppose that X is an arbitrary real Banach space, S is a closed convex subset of X, F: S → S is a nonexpansive mapping, and $\{\alpha_n\}$ and $\{\beta_n\} \subset [0, 1]$ are parameter sequences that satisfy specific control conditions.

Our main goal is to estimate the error of the J iteration method in X, defined in [12].
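For orientation, here is a minimal sketch of a three-step scheme of this kind. The article's display of the scheme did not survive in this text, so the form used below is an assumption, namely the one commonly attributed to [12]: $z_n = F((1-\beta_n)x_n + \beta_n F x_n)$, $y_n = F((1-\alpha_n)z_n + \alpha_n F z_n)$, $x_{n+1} = F y_n$. The constant parameters and the map `F_demo` are hypothetical choices made only for the illustration.

```python
from typing import Callable, List


def j_iteration(F: Callable[[float], float], x0: float,
                alpha: float, beta: float, steps: int) -> List[float]:
    """Return [x_0, ..., x_steps] for the assumed three-step J scheme.

    alpha and beta are held constant here (an assumption); the article
    works with parameter sequences in [0, 1].
    """
    xs = [x0]
    x = x0
    for _ in range(steps):
        z = F((1 - beta) * x + beta * F(x))    # z_n
        y = F((1 - alpha) * z + alpha * F(z))  # y_n
        x = F(y)                               # x_{n+1}
        xs.append(x)
    return xs


def F_demo(x: float) -> float:
    # Hypothetical contraction with constant 1/2 and fixed point 2.
    return 0.5 * x + 1.0


iterates = j_iteration(F_demo, x0=3.5, alpha=0.5, beta=0.5, steps=10)
print(iterates[:4], iterates[-1])
```

Each pass applies F three times, which is the main reason the scheme contracts the distance to the fixed point more strongly per step than one-step methods.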

Many researchers have approached this goal indirectly. A few publications have recently addressed direct error computation (estimation); see, for example, [15,16,28]. In [9], the authors derived a direct error estimate for the iteration process defined in [28]. In this article, we establish an approach for the direct estimation of the J iteration error in terms of accumulation. It should be emphasized that the direct error calculations for this method are significantly more involved than those for the iteration processes in [26,27].

Define the errors of , and by:

for all , where , and are the exact values of , and , respectively, that is, , and are approximate values of , and , respectively. The theory of errors implies that , and are bounded. Set:

where and are the absolute error boundaries of , and , respectively, and (1) has accumulated errors as a result of , and , hence we can set:

where , and are exact values of , and , respectively. Obviously, each iteration error will affect the next (n+1) steps. Now, for the initial step in x, y, z, we have:

Now for the z term we have:

As F is nonexpansive, we have:

from (2) and (5) we have:

Now, for the y term, we have:

As F is nonexpansive, we have:

from (2) and (6), we have:

hence:

Now, for an error in the first step of x, y, z, we have the following:

Firstly, for x, we have:

As F is nonexpansive, we have:

from (2) and (7), we have:

hence:

Now, for y, we have:

As F is nonexpansive, we have:

from (2) and (8), we have:

hence:

Now, for z, we have:

As F is nonexpansive, we have:

from (2) and (9), we have:

hence:

Now, for an error in the second step of x, y, z, we have the following:

As F is nonexpansive, we have:

from (2) and (10), we have:

hence:

As F is nonexpansive, we have:

from (2) and (11) we have:

hence:

As F is nonexpansive, we have:

from (2) and (12), we have:

hence:

Now, we calculate the error in the third step of x, y, z as follows:

As F is nonexpansive, we have:

from (2) and (13), we have:

hence:

As F is nonexpansive, we have:

Now, by using (2) and (14), we have:

hence:

As F is nonexpansive, we have:

from (2) and (15), we have:

hence:

Repeating the above process, we have:

Define:

We discovered that in the J iterative scheme, the error grew to (n + 1) iterations, defined as and .
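The accumulation of errors over the steps can also be observed numerically. The following is a minimal sketch under stated assumptions, not the article's estimate. It assumes the three-step form $z_n = F((1-\beta)x_n + \beta F x_n)$, $y_n = F((1-\alpha)z_n + \alpha F z_n)$, $x_{n+1} = F y_n$ with constant parameters, uses a hypothetical map `F_demo`, and perturbs each computed value by at most a hypothetical bound `M`. Nonexpansiveness of F alone gives the crude bound $|\tilde{x}_n - x_n| \leq 3nM$ between the perturbed and exact iterates, because each of the three substeps adds at most M.

```python
import random
from typing import Callable, List


def j_step(F: Callable[[float], float], x: float, alpha: float,
           beta: float, noise: Callable[[], float]) -> float:
    """One step of the assumed J scheme; noise() perturbs z, y and x."""
    z = F((1 - beta) * x + beta * F(x)) + noise()
    y = F((1 - alpha) * z + alpha * F(z)) + noise()
    return F(y) + noise()


def accumulated_errors(F: Callable[[float], float], x0: float, alpha: float,
                       beta: float, M: float, steps: int,
                       seed: int = 0) -> List[float]:
    """|perturbed x_n - exact x_n| for n = 0..steps, perturbations in [-M, M]."""
    rng = random.Random(seed)

    def no_noise() -> float:
        return 0.0

    def bounded_noise() -> float:
        return rng.uniform(-M, M)

    exact = approx = x0
    errors = [0.0]
    for _ in range(steps):
        exact = j_step(F, exact, alpha, beta, no_noise)
        approx = j_step(F, approx, alpha, beta, bounded_noise)
        errors.append(abs(approx - exact))
    return errors


def F_demo(x: float) -> float:
    # Hypothetical contraction (hence nonexpansive) with fixed point 2.
    return 0.5 * x + 1.0


M = 1e-3  # hypothetical absolute error bound per computed value
errors = accumulated_errors(F_demo, 3.5, 0.5, 0.5, M, steps=50)
print(max(errors), 3 * 50 * M)
```

For a strict contraction such as `F_demo`, the observed error stays far below the linear bound, since earlier perturbations are damped at every step.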

Next we present the following outcomes.

Theorem 1.

Let S, F, M, , and be as defined above and ϵ be a positive fixed real number:

- (i) If or , then the error estimate of (1) is bounded and cannot exceed the number N;

- (ii) If and , then the random errors of (1) are controllable.

Proof.

(i) It is well known that implies , by (Remark 2.1 of [18]). By using this fact and the above inequalities, we have:

which implies:

Hence, we have .

Indeed, implies the following:

Let 1-

We have:

On the other hand, the conditions and and s.t ∀, we have . Using this fact, we obtain:

Similarly, the condition = 0 and N s.t ∀ n , we have Now, we have:

Thus, we conclude that , and can be controlled for suitable choice of the parameter sequences and for all . □

Remark 1.

Theorem 1 indicates that the direct error estimation for an iterative algorithm defined in [12] is controllable and bounded, which is the actual aim of our research. The following example illustrates that not only is the direct error in the iterative algorithm defined in [12] controlled and bounded, but it is also independent of the initial value selection. The efficiency of the J iteration approach is represented in both tables and graphs.

Example 1.

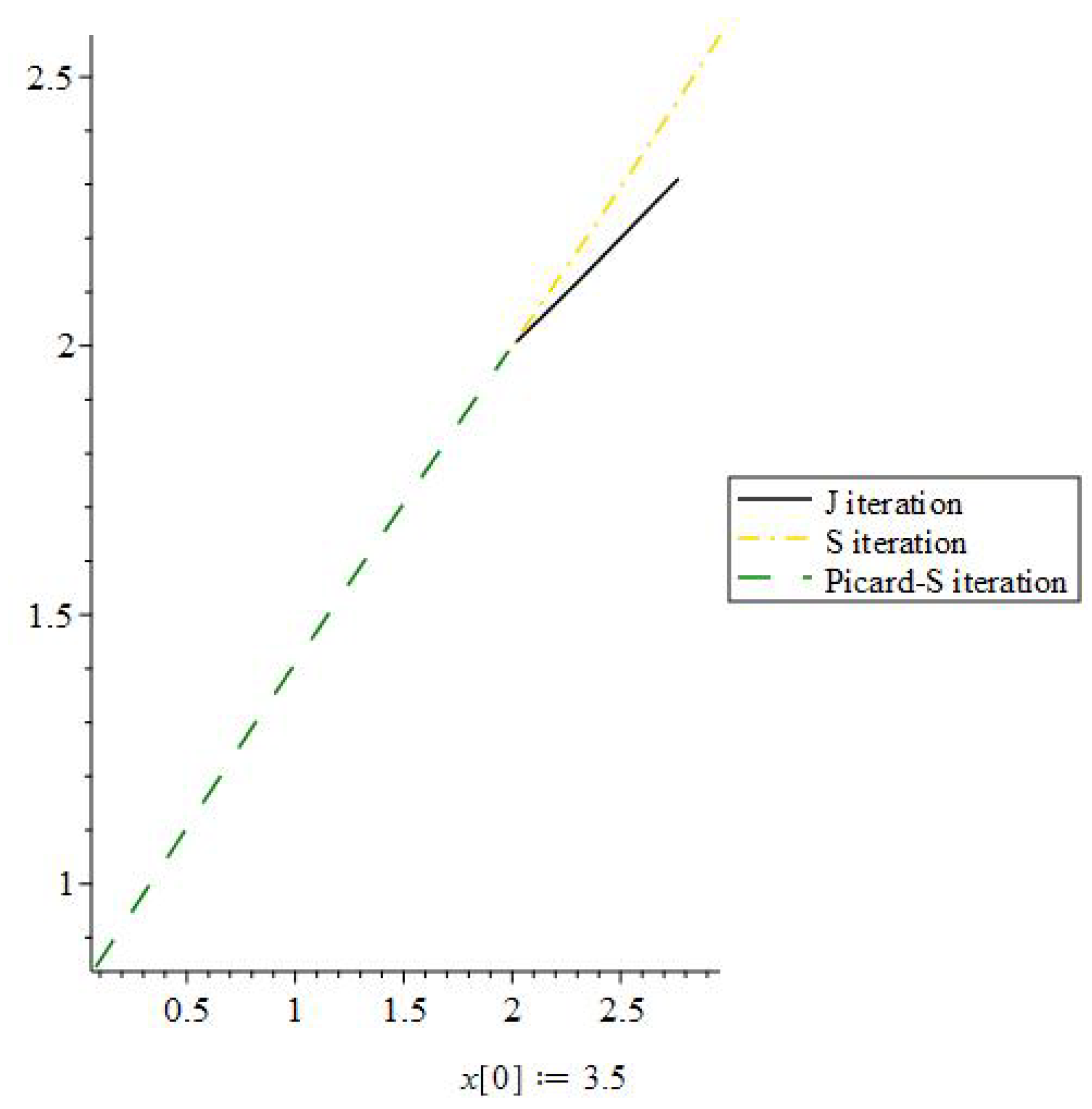

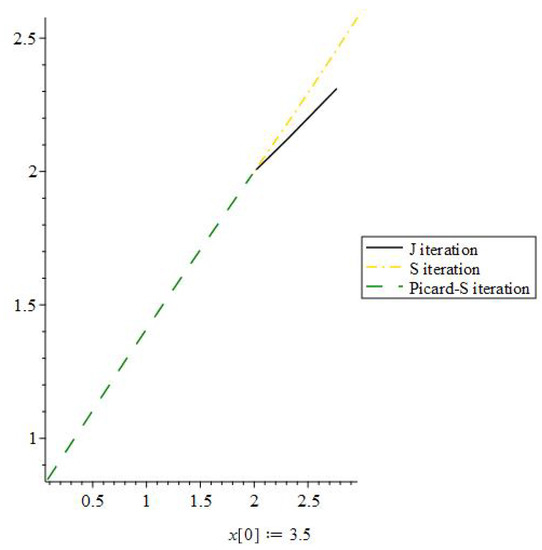

Define a function by . Then, Q is clearly a contraction mapping. Let and . The iterative values for are given in Table 1, and the convergence graph is shown in Figure 1. The graph indicates that the J iteration method is effective.

Table 1.

Sequence formed by J, Picard-S, and S Iteration methods, having initial value for contraction mapping Q of Example 1.

Figure 1.

J iteration process convergence when the initial value is 3.5.

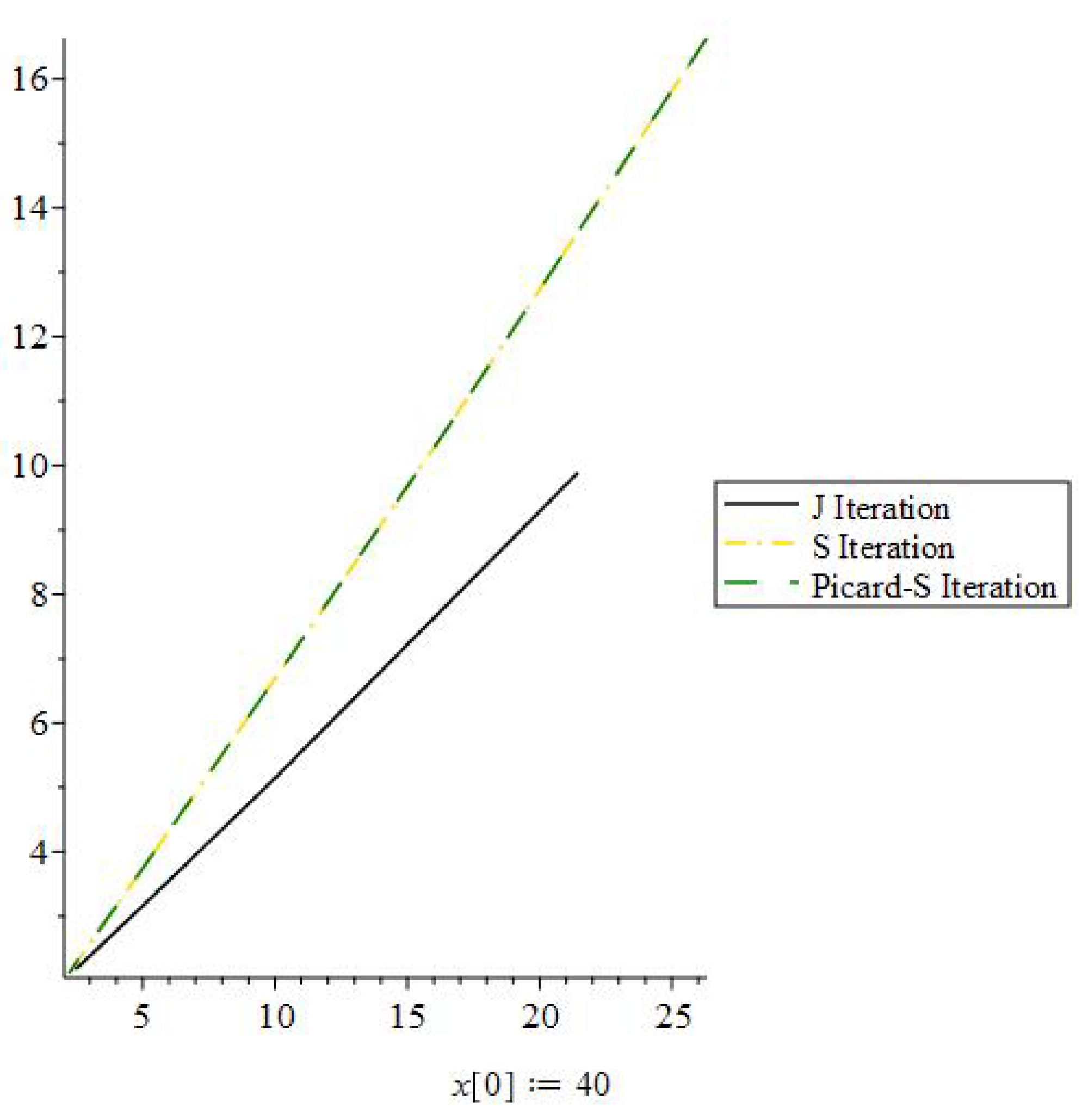

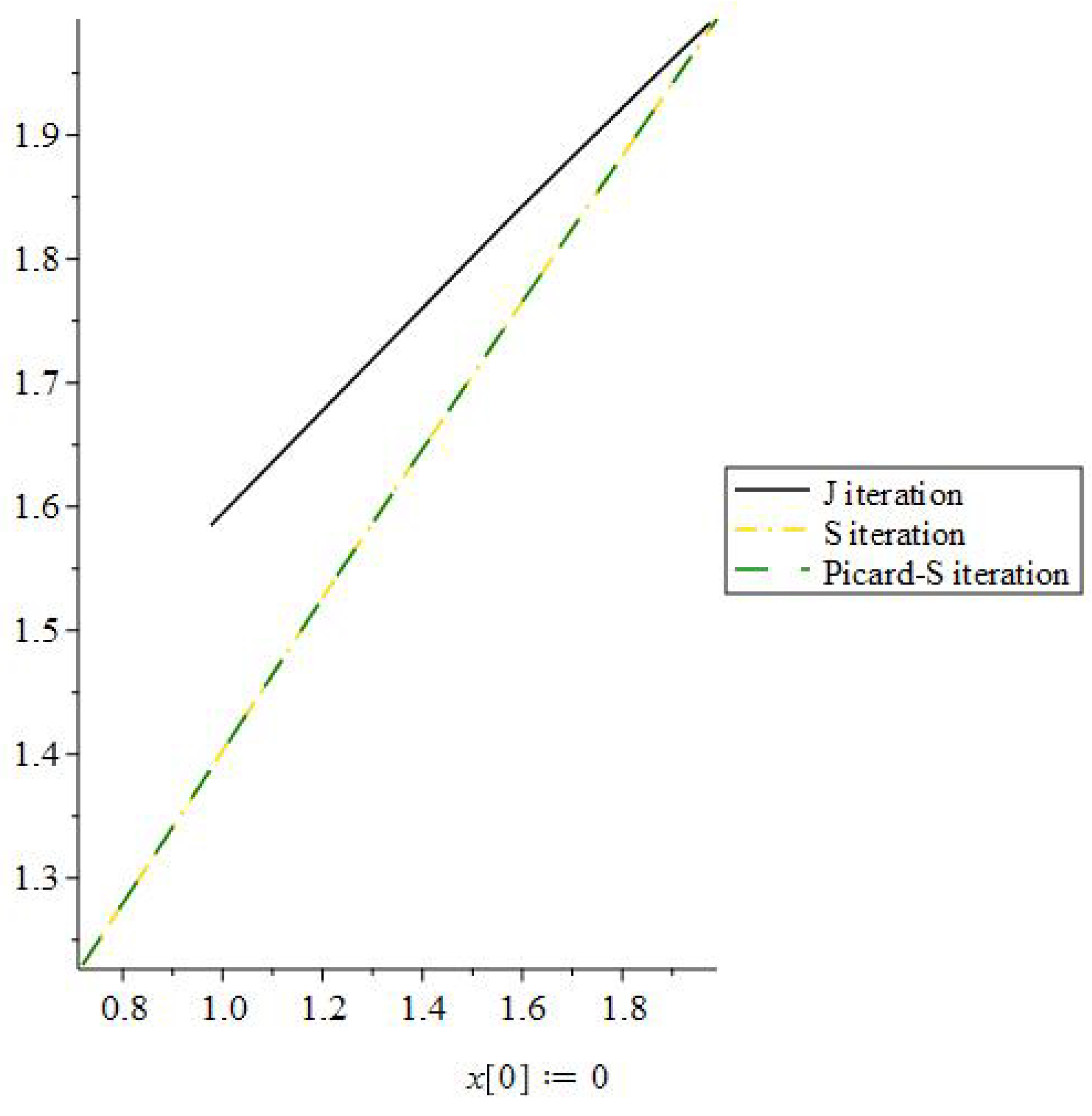

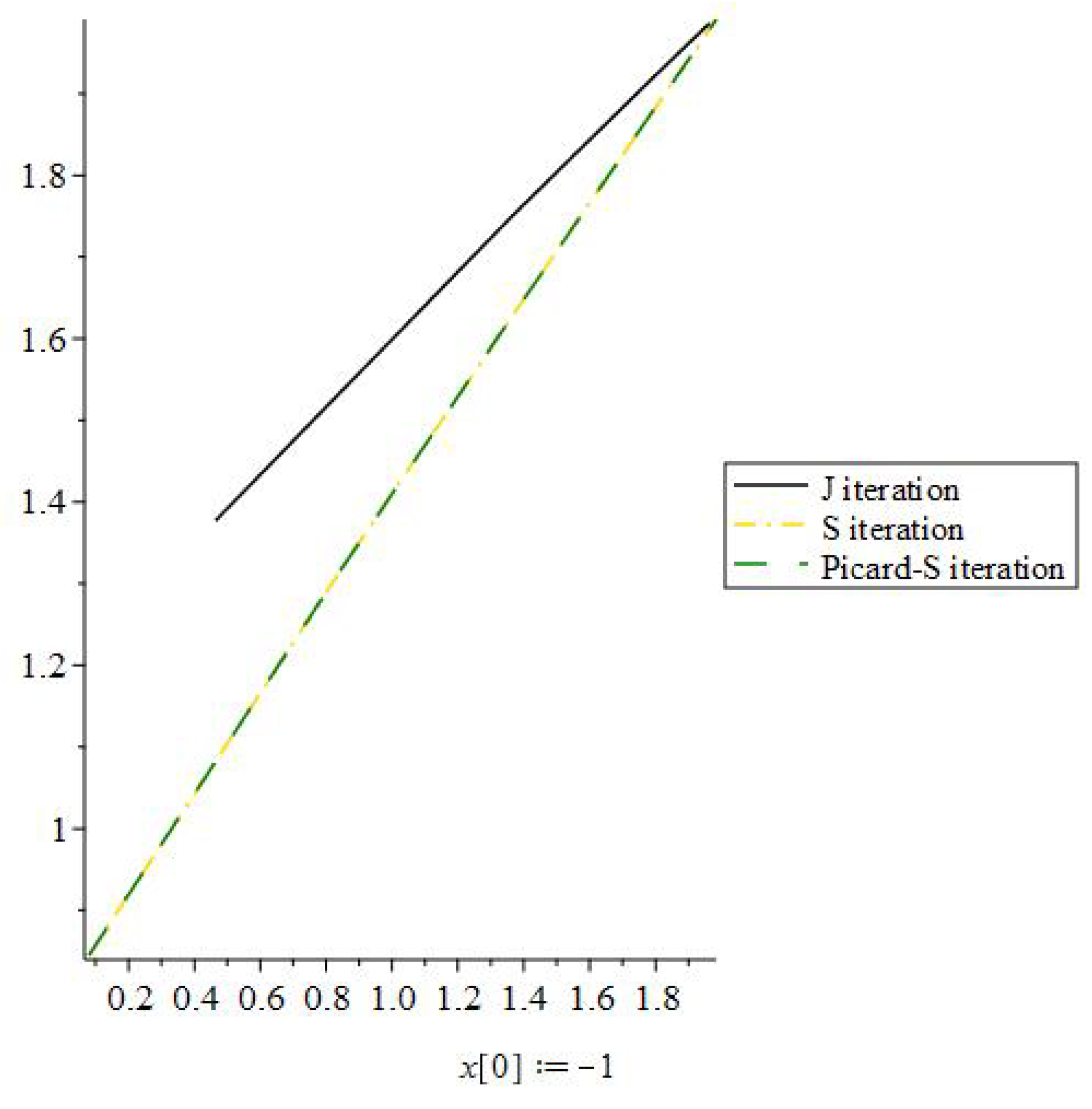

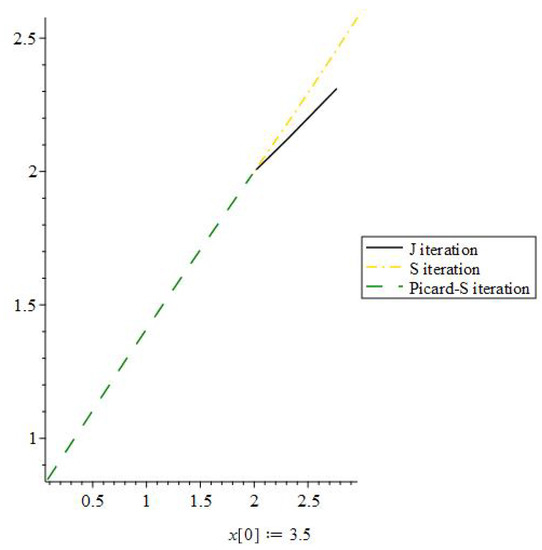

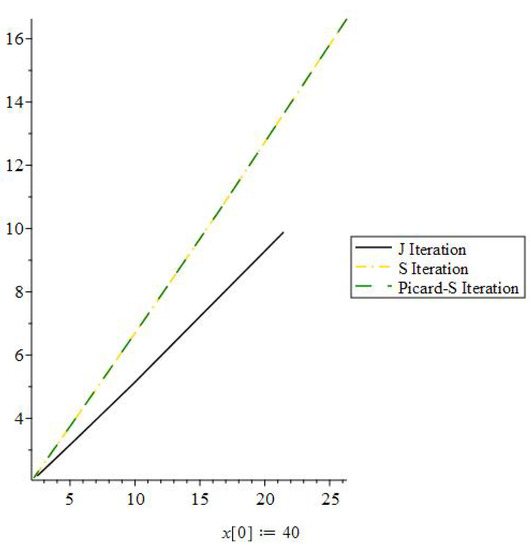

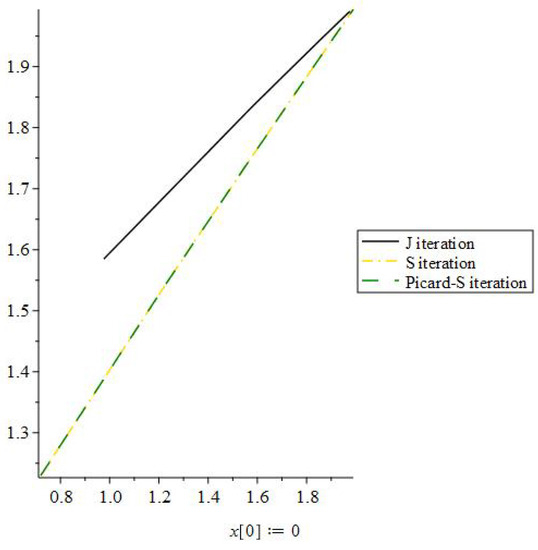

Table 1 shows that the J iterative process approaches the fixed point more quickly than the other iterative algorithms. Following that, we present graphs demonstrating that the J iteration is effective for any initial value. In Figure 1, Figure 2, Figure 3 and Figure 4, the J, Picard-S, and S iteration processes approach 2, which is the fixed point of Q, for different initial guesses for the mapping Q in Example 1.
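A comparison of this kind can be reproduced in a few lines. The sketch below is illustrative only. The article's definition of Q is not available in this text, so `Q(x) = sqrt(x + 2)` is a hypothetical stand-in, chosen because it is a contraction on [-1, ∞) with fixed point 2. The parameters are fixed at 0.5 (also an assumption), and the J scheme is taken in the three-step form commonly attributed to [12]. The S and Picard-S steps follow their standard definitions.

```python
import math
from typing import Callable, Dict, List

Map = Callable[[float], float]
Step = Callable[[Map, float, float, float], float]


def Q(x: float) -> float:
    # Hypothetical stand-in for the article's Q (fixed point 2).
    return math.sqrt(x + 2.0)


def s_step(F: Map, x: float, a: float, b: float) -> float:
    y = (1 - b) * x + b * F(x)
    return (1 - a) * F(x) + a * F(y)


def picard_s_step(F: Map, x: float, a: float, b: float) -> float:
    z = (1 - b) * x + b * F(x)
    y = (1 - a) * F(x) + a * F(z)
    return F(y)


def j_step(F: Map, x: float, a: float, b: float) -> float:
    z = F((1 - b) * x + b * F(x))
    y = F((1 - a) * z + a * F(z))
    return F(y)


def run(step: Step, F: Map, x0: float, a: float, b: float, n: int) -> List[float]:
    """Return [x_0, ..., x_n] produced by repeatedly applying one step."""
    xs = [x0]
    for _ in range(n):
        xs.append(step(F, xs[-1], a, b))
    return xs


methods = [("J", j_step), ("Picard-S", picard_s_step), ("S", s_step)]
sequences: Dict[str, List[float]] = {
    name: run(step, Q, 3.5, 0.5, 0.5, 8) for name, step in methods
}

# One row per iteration, one column per method.
for n in range(9):
    print(n, *(f"{sequences[name][n]:.10f}" for name, _ in methods))
```

With this stand-in map, the J column settles at 2 within a few rows while the S column is still changing in its later digits, which mirrors the ordering reported in Table 1.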

Figure 2.

J iteration process convergence when the initial value is 40.

Figure 3.

J iteration process convergence when the initial value is 0.

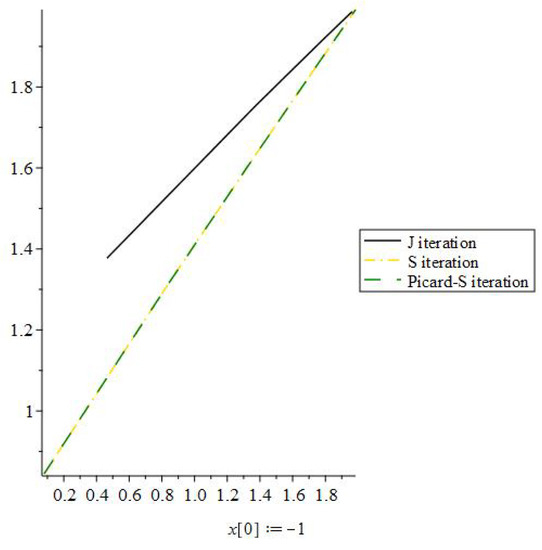

Figure 4.

J iteration process convergence when the initial value is −1.

In Figure 1, we compare the rate of convergence of the J iteration process, the S iteration process, and the Picard-S iteration process, with 3.5 as the initial value. The graph shows the efficiency of the J iteration method. Next, we take 40 as the initial value.

In Figure 2, we compare the rate of convergence of the same three iteration processes, with 40 as the initial value. Again, the graph shows the efficiency of the J iteration method. Next, we use 0 as the initial value.

In Figure 3, we use 0 as the initial value to compare the rate of convergence of the three iteration processes. The graph again shows the efficiency of the J iteration method. Finally, we choose −1 as the initial value.

In Figure 4, we use −1 as the initial value to compare the rate of convergence of the three iteration processes. The graph again shows the efficiency of the J iteration method.

All of the graphs above, as well as Table 1, show that the J iteration approach has a fast convergence rate and is not affected by the initial value selection.
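The insensitivity to the starting point can be checked with a short experiment. The sketch below is a hedged illustration: `Q(x) = sqrt(x + 2)` is a hypothetical stand-in for the article's mapping (it has fixed point 2), the parameters are fixed at 0.5, and the J scheme is assumed to have the three-step form commonly attributed to [12]. It counts the steps needed to come within a tolerance of the fixed point from the four initial values used in the figures.

```python
import math
from typing import Dict, List


def Q(x: float) -> float:
    # Hypothetical stand-in for the article's Q; contraction on [-1, inf).
    return math.sqrt(x + 2.0)


def j_iterates(x0: float, alpha: float = 0.5, beta: float = 0.5,
               tol: float = 1e-10, max_steps: int = 100) -> List[float]:
    """Iterate the assumed J scheme until |x_n - 2| <= tol or max_steps is hit."""
    xs = [x0]
    while abs(xs[-1] - 2.0) > tol and len(xs) <= max_steps:
        x = xs[-1]
        z = Q((1 - beta) * x + beta * Q(x))
        y = Q((1 - alpha) * z + alpha * Q(z))
        xs.append(Q(y))
    return xs


# Initial values taken from the figure captions: 3.5, 40, 0 and -1.
steps_needed: Dict[float, int] = {
    x0: len(j_iterates(x0)) - 1 for x0 in (3.5, 40.0, 0.0, -1.0)
}
print(steps_needed)
```

The stopping rule uses the known fixed point of the stand-in map. For a map whose fixed point is unknown, a criterion such as the distance between successive iterates would be needed instead.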

4. Conclusions

For the iteration methods described in the articles “Data dependence for Ishikawa iteration when dealing with contractive like operators”, “On estimation and control of errors of the Mann iteration process”, and “On the rate of convergence of Mann, Ishikawa, Noor and SP iterations for continuous functions on an arbitrary interval”, it is common practice to impose specific conditions on the parameter sequences, such as and and and for all n ∈ N, in order to obtain the rate of convergence, stability, and dependence on initial guesses for general iteration methods, and to estimate their errors directly. None of these conditions were employed in our corresponding results. We proved that the direct error estimate of (1) is controllable as well as bounded. Consequently, our analysis is more precise with respect to all of the preceding comparisons. Moreover, the graphical analysis shows the rate of convergence of the J iteration for different initial values chosen above or below the fixed point.

Author Contributions

All authors contributed equally and significantly in writing this article. All authors have read and agreed to the published version of the manuscript.

Funding

This paper received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank their universities.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Abbas, M.; Nazir, T. A new faster iteration process applied to constrained minimization and feasibility problems. Mat. Vesn. 2014, 66, 223–234.

2. Liu, L.S. Ishikawa and Mann iterative process with errors for nonlinear strongly accretive mappings in Banach spaces. J. Math. Anal. Appl. 1995, 194, 114–125.

3. Agarwal, R.P.; O’Regan, D.; Sahu, D.R. Iterative construction of fixed points of nearly asymptotically nonexpansive mappings. J. Nonlinear Convex Anal. 2007, 8, 61–79.

4. Rhoades, B.E. Some Fixed point iteration procedures. J. Nonlinear Convex Anal. 2007, 8, 61–79.

5. Karakaya, V.; Gursoy, F.; Erturk, M. Comparison of the speed of convergence among various iterative schemes. arXiv 2014, arXiv:1402.6080.

6. Xu, Y. Ishikawa and Mann iterative process with errors for nonlinear strongly accretive operator equations. J. Math. Anal. Appl. 1998, 224, 91–101.

7. Chugh, R.; Kumar, V.; Kumar, S. Strong Convergence of a new three step iterative scheme in Banach spaces. Am. J. Comput. Math. 2012, 2, 345–357.

8. Karahan, I.; Ozdemir, M. A general iterative method for approximation of fixed points and their applications. Adv. Fixed Point Theory 2013, 3, 510–526.

9. Khan, A.R.; Gursoy, F.; Dogan, K. Direct Estimate of Accumulated Errors for a General Iteration Method. MAPAS 2019, 2, 19–24.

10. Berinde, V. Iterative Approximation of Fixed Points; Springer: Berlin, Germany, 2007.

11. Hussain, N.; Ullah, K.; Arshad, M. Fixed point Approximation of Suzuki Generalized Nonexpansive Mappings via new Faster Iteration Process. JLTA 2018, 19, 1383–1393.

12. Bhutia, J.D.; Tiwary, K. New iteration process for approximating fixed points in Banach spaces. JLTA 2019, 4, 237–250.

13. Hussain, A.; Ali, D.; Karapinar, E. Stability data dependency and errors estimation for general iteration method. Alex. Eng. J. 2021, 60, 703–710.

14. Noor, M.A. New approximation schemes for general variational inequalities. J. Math. Anal. Appl. 2000, 251, 217–229.

15. Mann, W.R. Mean value methods in iteration. Proc. Am. Math. Soc. 1953, 4, 506–510.

16. Opial, Z. Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 595–597.

17. Ullah, K.; Arshad, M. New three-step iteration process and fixed point approximation in Banach spaces. JLTA 2018, 7, 87–100.

18. Thakur, B.S.; Thakur, D.; Postolache, M. A new iterative scheme for numerical reckoning fixed points of Suzuki’s generalized nonexpansive mappings. Appl. Math. Comput. 2016, 275, 147–155.

19. Ullah, K.; Arshad, M. New Iteration Process and numerical reckoning fixed points in Banach. U.P.B. Sci. Bull. Ser. A 2017, 79, 113–122.

20. Ullah, K.; Arshad, M. Numerical reckoning fixed points for Suzuki’s generalized nonexpansive mappings via new iteration process. Filomat 2018, 32, 187–196.

21. Alqahtani, B.; Aydi, H.; Karapinar, E.; Rakocevic, V. A Solution for Volterra Fractional Integral Equations by Hybrid Contractions. Mathematics 2019, 7, 694.

22. Goebel, K.; Kirk, W.A. Topics in Metric Fixed Point Theory; Cambridge University Press: Cambridge, UK, 1990.

23. Harder, A.M. Fixed Point Theory and Stability Results for Fixed Point Iteration Procedures. Ph.D. Thesis, University of Missouri-Rolla, Rolla, MO, USA, 1987.

24. Weng, X. Fixed point iteration for local strictly pseudocontractive mapping. Proc. Am. Math. Soc. 1991, 113, 727–731.

25. Soltuz, S.M.; Grosan, T. Data dependence for Ishikawa iteration when dealing with contractive like operators. Fixed Point Theory Appl. 2008, 2008, 1–7.

26. Xu, Y.; Liu, Z. On estimation and control of errors of the Mann iteration process. J. Math. Anal. Appl. 2003, 286, 804–806.

27. Xu, Y.; Liu, Z.; Kang, S.M. Accumulation and control of random errors in the Ishikawa iterative process in arbitrary Banach space. Comput. Math. Appl. 2011, 61, 2217–2220.

28. Suantai, S.; Phuengrattana, W. On the rate of convergence of Mann, Ishikawa, Noor and SP iterations for continuous functions on an arbitrary interval. J. Comput. Appl. Math. 2011, 235, 3006–3014.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).