Abstract

In this manuscript, we introduce a novel parametric family of multistep iterative methods designed to solve nonlinear equations. This family is derived from a damped Newton’s scheme but includes an additional Newton step with a weight function and a “frozen” derivative, that is, the same derivative as in the previous step. Initially, we develop a quad-parametric class with a first-order convergence rate. Subsequently, by restricting one of its parameters, we accelerate the convergence to achieve a third-order uni-parametric family. We thoroughly investigate the convergence properties of this final class of iterative methods, assess its stability through dynamical tools, and evaluate its performance on a set of test problems. We conclude that there exists one optimal fourth-order member of this class, in the sense of Kung–Traub’s conjecture. Our analysis includes stability surfaces and dynamical planes, revealing the intricate nature of this family. Notably, our exploration of stability surfaces enables the identification of specific family members with challenging convergence behavior on scalar functions, as they may exhibit periodic orbits and fixed points with attracting behavior in their corresponding dynamical planes. Furthermore, our dynamical study finds members of the family of iterative methods with exceptional stability. This property allows them to converge to the solution of practical problems even from initial estimates very far from the solution. We confirm our findings with various numerical tests, demonstrating the efficiency and reliability of the presented family of iterative methods.

Keywords:

nonlinear equations; optimal iterative methods; convergence analysis; dynamical study; stability

MSC:

65H05

1. Introduction

A multitude of challenges in Computational Sciences and other fields in Science and Technology can be effectively represented as nonlinear equations through mathematical modeling, see for example [1,2,3]. Finding solutions to nonlinear equations of the form $f(x) = 0$ stands as a classical yet formidable problem in Numerical Analysis, Applied Mathematics, and Engineering. Here, the function $f: I \subseteq \mathbb{R} \to \mathbb{R}$ is assumed to be sufficiently differentiable in the open interval I. Extensive overviews of iterative methods for solving nonlinear equations published in recent years can be found in [4,5,6], and their associated references.

In recent years, many iterative methods have been developed to solve nonlinear equations. The essence of these methods is as follows: if we know a sufficiently small domain that contains only one root $\xi$ of the equation $f(x) = 0$, and we select a sufficiently close initial estimate $x_0$ of the root, we generate a sequence of iterates $x_1, x_2, \ldots, x_k, \ldots$ by means of a fixed-point function $g(x)$, which, under certain conditions, converges to $\xi$. The convergence of the sequence is guaranteed, among other elements, by the appropriate choice of the function g and the initial approximation $x_0$.

The method described by an iteration function $g$ such that

$$x_{k+1} = g(x_k), \quad k = 0, 1, 2, \ldots,$$

starting from a given initial estimate $x_0$, includes a large number of iterative schemes. These differ from each other in the way the iteration function g is defined.

Among these methods, Newton’s scheme is widely acknowledged as the best-known approach for locating a solution $\xi$. This scheme is defined by the iterative formula

$$x_{k+1} = x_k - \frac{f(x_k)}{f'(x_k)}, \quad k = 0, 1, 2, \ldots,$$

where $f'(x_k)$ denotes the derivative of the function f evaluated at the k-th iterate.
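A minimal double-precision sketch of Newton’s formula in Python follows. The function name `newton`, the stopping tolerance on $|x_{k+1} - x_k|$, and the iteration cap are illustrative choices, not values taken from this paper.

```python
from typing import Callable


def newton(f: Callable[[float], float],
           df: Callable[[float], float],
           x0: float,
           tol: float = 1e-12,
           max_iter: int = 50) -> list[float]:
    """Return the Newton iterates x_0, x_1, ... until |x_{k+1} - x_k| < tol."""
    xs = [x0]
    for _ in range(max_iter):
        x = xs[-1]
        d = df(x)
        if d == 0:
            raise ZeroDivisionError(f"f'(x) vanishes at x = {x}")
        x_new = x - f(x) / d          # x_{k+1} = x_k - f(x_k) / f'(x_k)
        xs.append(x_new)
        if abs(x_new - x) < tol:      # stop when two iterates are close enough
            break
    return xs


# Usage: approximate sqrt(2) as the positive root of f(x) = x^2 - 2.
iterates = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)
print(iterates[-1])
```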

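A very important concept of iterative methods is their order of convergence, which provides a measure of the speed of convergence of the iterates. Let $\{x_k\}_{k \geq 0}$ be a sequence of real numbers such that $\lim_{k \to \infty} x_k = \xi$. The convergence is called (see [7]):

- (a) Linear, if there exist $C$, $0 < C < 1$, and $k_0 \in \mathbb{N}$ such that $|x_{k+1} - \xi| \leq C\,|x_k - \xi|$ for all $k \geq k_0$.

- (b) Of order $p$, if there exist $p > 1$, $C > 0$, and $k_0 \in \mathbb{N}$ such that $|x_{k+1} - \xi| \leq C\,|x_k - \xi|^p$ for all $k \geq k_0$.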

We denote by $e_k = x_k - \xi$ the error of the k-th iteration. Moreover, the equation

$$e_{k+1} = C e_k^p + O(e_k^{p+1})$$

is called the error equation of the iterative method, where p is its order of convergence and C is called the asymptotic error constant.
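As an illustrative check of the error equation (not part of this paper's analysis), the sketch below applies Newton’s method ($p = 2$) to the hypothetical test function $f(x) = x^2 - 2$, whose asymptotic error constant is $C = f''(\xi)/(2f'(\xi)) = 1/(2\sqrt{2}) \approx 0.35355$. Python’s standard `decimal` module is used so that the errors $e_k$ can be followed far below double precision.

```python
from decimal import Decimal, getcontext

getcontext().prec = 100           # 100 significant digits

xi = Decimal(2).sqrt()            # root of f(x) = x^2 - 2 (to 100 digits)
C_theory = 1 / (2 * xi)           # f''(xi) / (2 f'(xi)) = 2 / (4 xi)

x = Decimal("1.5")
errors = [x - xi]                 # e_0
for _ in range(4):
    x = x - (x * x - 2) / (2 * x)  # Newton step
    errors.append(x - xi)          # e_1, ..., e_4

# For order p = 2, the ratios e_{k+1} / e_k^2 should approach C_theory.
ratios = [errors[k + 1] / errors[k] ** 2 for k in range(len(errors) - 1)]
for r in ratios:
    print(f"{float(r):.10f}")
print("theory:", f"{float(C_theory):.10f}")
```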

It is known (see, for example, [4]) that if $x_{k+1} = g(x_k)$ is an iterative point-to-point method with d functional evaluations per step, then the order of convergence of the method is at most d. On the other hand, Traub proves in [4] that, to design a point-to-point method of order p, the iterative expression must contain derivatives of the nonlinear function whose zero we are looking for of order at least $p - 1$. This is why point-to-point methods are not efficient if we seek to simultaneously increase the order of convergence and the computational efficiency.

These restrictions of point-to-point methods are the starting point for the growing interest of researchers in multipoint methods, see for example [4,5,6]. In such schemes, also called predictor–corrector methods, the $(k+1)$-th iterate is obtained by using functional evaluations at the k-th iterate and also at other intermediate points. For example, a two-step multipoint method has the general form

$$y_k = \Psi(x_k), \qquad x_{k+1} = \Phi(x_k, y_k), \quad k = 0, 1, 2, \ldots$$

Thus, the main motivation for designing new iterative schemes is to increase the order of convergence without adding many functional evaluations. The first multipoint schemes were designed by Traub in [4]. At that time, the concept of optimality had not yet been defined, and designing multipoint schemes with the same order as classical schemes such as Halley’s or Chebyshev’s, but with a much simpler iterative expression and without second derivatives, was of great importance. The techniques used then were the seed of those that later allowed the appearance of higher-order methods.

In recent years, different authors have developed a large number of optimal schemes for solving nonlinear equations [6,8]. A common way to increase the convergence order of an iterative scheme is to use the composition of methods, based on the following result (see [4]).

Theorem 1.

Let $g_1(x)$ and $g_2(x)$ be fixed-point functions of orders $p_1$ and $p_2$, respectively. Then, the iterative method resulting from composing them, $x_{k+1} = g_1(g_2(x_k))$, $k = 0, 1, 2, \ldots$, has order of convergence $p_1 p_2$.
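Theorem 1 can be observed numerically. In the sketch below (an illustration under assumed choices: Newton’s method for both $g_1$ and $g_2$, and the test function $f(x) = x^2 - 2$), two Newton steps are composed, so an order of $2 \cdot 2 = 4$ is expected. The order is estimated with the computational order of convergence (COC) of Weerakoon and Fernando [14], defined later in this section, using the known root $\sqrt{2}$. Note that the composed scheme needs four functional evaluations per iteration, which is precisely the drawback discussed next.

```python
from decimal import Decimal, getcontext

getcontext().prec = 200  # enough digits to resolve errors of order 1e-98


def newton_step(x):
    """Newton step for f(x) = x^2 - 2 (a method of order p1 = p2 = 2)."""
    return x - (x * x - 2) / (2 * x)


def composed_step(x):
    """g1(g2(x)) with g1 = g2 = Newton: Theorem 1 predicts order 2 * 2 = 4."""
    return newton_step(newton_step(x))


def coc(x_prev, x_curr, x_next, root):
    """Computational order of convergence; requires the exact root."""
    return (abs((x_next - root) / (x_curr - root)).ln()
            / abs((x_curr - root) / (x_prev - root)).ln())


xi = Decimal(2).sqrt()
xs = [Decimal("1.5")]
for _ in range(3):
    xs.append(composed_step(xs[-1]))

print(float(coc(xs[1], xs[2], xs[3], xi)))
```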

However, this composition necessarily increases the number of functional evaluations. So, to preserve optimality, the number of evaluations must be reduced. There are many techniques used for this purpose by different authors, such as approximating some of the evaluations that have appeared with the composition by means of interpolation polynomials, Padé approximants, inverse interpolation, Adomian polynomials, etc. (see, for example, [6,9,10]). If after the reduction of functional evaluations the resulting method is not optimal, the weight function technique, introduced by Chun in [11], can be used to increase its order of convergence.

There are also other ways in the literature to compare different iterative methods with each other. Traub in [4] defined the information efficiency of an iterative method as

$$I_T = \frac{p}{d},$$

where p is the order of convergence and d is the number of functional evaluations per iteration. On the other hand, Ostrowski in [12] introduced the so-called efficiency index,

$$I = p^{1/d},$$

which, in turn, gives rise to the concept of optimality of an iterative method.

Regarding the order of convergence, Kung and Traub in their conjecture (see [13]) establish the highest order that a multipoint iterative scheme without memory can reach. Schemes that attain this limit are called optimal methods. The conjecture states that the order of convergence of any memoryless multistep method cannot exceed $2^{d-1}$ (called optimal order), where d is the number of functional evaluations per iteration, which corresponds to the efficiency index $2^{(d-1)/d}$ (called optimal index). In this sense, Newton’s method, with $d = 2$ and $p = 2$, is optimal.
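The following sketch tabulates these quantities for a few (order, evaluations) pairs. The pairs are assumptions chosen for illustration: Newton’s method uses $f(x_k)$ and $f'(x_k)$, while the third- and fourth-order entries assume three evaluations per iteration, the setting in which order four attains the Kung–Traub bound.

```python
def efficiency_index(p: float, d: int) -> float:
    """Ostrowski's efficiency index I = p**(1/d)."""
    return p ** (1.0 / d)


def information_efficiency(p: float, d: int) -> float:
    """Traub's information efficiency I_T = p / d."""
    return p / d


def kung_traub_bound(d: int) -> int:
    """Conjectured maximal order 2**(d-1) of a memoryless method with d evaluations."""
    return 2 ** (d - 1)


def is_optimal(p: int, d: int) -> bool:
    """True if order p attains the Kung-Traub bound for d evaluations."""
    return p == kung_traub_bound(d)


methods = {                        # name: (order p, evaluations d per iteration)
    "Newton": (2, 2),
    "third-order, 3 evals": (3, 3),
    "fourth-order, 3 evals": (4, 3),
}
for name, (p, d) in methods.items():
    print(f"{name:22s} I = {efficiency_index(p, d):.4f}  "
          f"p/d = {information_efficiency(p, d):.3f}  optimal: {is_optimal(p, d)}")
```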

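Furthermore, in order to numerically test the behavior of the different iterative methods, Weerakoon and Fernando in [14] introduced the so-called computational order of convergence (COC),

$$p \approx \mathrm{COC} = \frac{\ln \left| (x_{k+1} - \xi)/(x_k - \xi) \right|}{\ln \left| (x_k - \xi)/(x_{k-1} - \xi) \right|},$$

where $x_{k+1}$, $x_k$, and $x_{k-1}$ are three consecutive approximations of the root $\xi$ of the nonlinear equation, obtained in the iterative process. However, the value of the zero $\xi$ is not known in practice, which motivated the definition in [15] of the approximate computational order of convergence (ACOC),

$$p \approx \mathrm{ACOC} = \frac{\ln \left| (x_{k+1} - x_k)/(x_k - x_{k-1}) \right|}{\ln \left| (x_k - x_{k-1})/(x_{k-1} - x_{k-2}) \right|}.$$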
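A sketch of the ACOC computation follows, assuming the iterates are stored in a list and are pairwise distinct (otherwise a logarithm of zero occurs). Newton’s method on $f(x) = x^2 - 2$ serves as a hypothetical example; Python’s `decimal` module with 200 digits plays the role of variable-precision arithmetic, and the computed values should approach $p = 2$ without using the root.

```python
from decimal import Decimal, getcontext

getcontext().prec = 200  # variable-precision arithmetic with 200 digits


def acoc(xs):
    """ACOC for each window x_{k-2}, x_{k-1}, x_k, x_{k+1} of the iterate list.

    Assumes consecutive iterates are distinct (no zero differences).
    """
    values = []
    for k in range(2, len(xs) - 1):
        num = abs((xs[k + 1] - xs[k]) / (xs[k] - xs[k - 1])).ln()
        den = abs((xs[k] - xs[k - 1]) / (xs[k - 1] - xs[k - 2])).ln()
        values.append(num / den)
    return values


# Newton iterates for f(x) = x^2 - 2; the root itself is never used.
xs = [Decimal("1.5")]
for _ in range(5):
    x = xs[-1]
    xs.append(x - (x * x - 2) / (2 * x))

print([round(float(v), 4) for v in acoc(xs)])
```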

On the other hand, the dynamical analysis of rational operators derived from iterative schemes, particularly when applied to low-degree nonlinear polynomial equations, has emerged as a valuable tool for assessing the stability and reliability of these numerical methods. This approach is detailed, for instance, in Refs. [16,17,18,19,20] and their associated references.

Using the tools of complex discrete dynamics, it is possible to compare different algorithms in terms of their basins of attraction, the dynamical behavior of the rational functions associated with the iterative method on low-degree polynomials, etc. Varona [21], Amat et al. [22], Neta et al. [23], Cordero et al. [24], Magreñán [25], Geum et al. [26], among others, have analyzed many schemes and parametric families of methods under this point of view, obtaining interesting results about their stability and reliability.

The dynamical analysis of an iterative method focuses on the study of the asymptotic behavior of the fixed points (roots, or not, of the equation) of the operator, as well as on the basins of attraction associated with them. In the case of parametric families of iterative methods, the analysis of the free critical points (points where the derivative of the operator vanishes and that are not roots of the nonlinear function) and of the stability functions of the fixed points allows us to select the most stable members of these families. Some of the existing works in the literature related to this approach are Refs. [27,28], among others.

In this paper, we introduce a novel parametric family of multistep iterative methods tailored for solving nonlinear equations. This family is constructed by enhancing the traditional Newton’s scheme, incorporating an additional Newton step with a weight function and a frozen derivative. As a result, the family is characterized by a two-step iterative expression that relies on four arbitrary parameters.

Our approach yields a third-order uni-parametric family and a fourth-order member. However, in the course of developing these iterative schemes, we initially start with a first-order quad-parametric family. By selectively setting just one parameter, we manage to accelerate its convergence to a third-order scheme, and for a specific value of this parameter, we achieve an optimal member. To substantiate these claims, we conduct a comprehensive convergence analysis for all classes.

The stability of this newly introduced family is rigorously examined using dynamical tools. We construct stability surfaces and dynamical planes to illustrate the intricate behavior of this class. These stability surfaces help us to identify specific family members with exceptional behavior, making them well-suited for practical problem-solving applications. To further demonstrate the efficiency and reliability of these iterative schemes, we conduct several numerical tests.

The rest of the paper is organized as follows. In Section 2, we present the proposed class of iterative methods depending on several parameters, which is modified step by step in order to achieve the highest order of convergence. Section 3 is devoted to the dynamical study of the uni-parametric family; by means of this analysis, we find the most stable members, that is, those least dependent on the initial estimate. In Section 4, the previous theoretical results are checked by means of numerical tests on several nonlinear problems, using a wide variety of initial guesses and parameter values. Finally, some conclusions are presented.

2. Convergence Analysis of the Family

In this section, we conduct a convergence analysis of the newly introduced quad-parametric iterative family, with the following iterative expression:

where , , , are arbitrary parameters and .

Additionally, we present a strategy for simplifying it into a uni-parametric class with faster convergence. Therefore, even though the quad-parametric family only has a first-order convergence rate, we employ higher-order Taylor expansions in our proof, as they are instrumental in establishing the convergence rate of the uni-parametric subfamily. The Mathematica code used to check this result is available in Appendix A.

Theorem 2 (quad-parametric family).

Let $f: I \subseteq \mathbb{R} \to \mathbb{R}$ be a sufficiently differentiable function in an open interval I and $\xi \in I$ a simple root of the nonlinear equation $f(x) = 0$. Let us suppose that $f'(x)$ is continuous and nonsingular at ξ, and $x_0$ is an initial estimate close enough to ξ. Then, the sequence $\{x_k\}_{k \geq 0}$ obtained by using the expression (3) converges to ξ with order one, its error equation being

where , and α, β, γ, and δ are free parameters.

Proof.

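Let us consider $\xi$ as the simple root of the nonlinear function $f$ and $e_k = x_k - \xi$. By calculating the Taylor expansions of $f(x_k)$ and $f'(x_k)$ around the root $\xi$, we get

$$f(x_k) = f'(\xi)\left[e_k + c_2 e_k^2 + c_3 e_k^3 + c_4 e_k^4 + O(e_k^5)\right]$$

and

$$f'(x_k) = f'(\xi)\left[1 + 2c_2 e_k + 3c_3 e_k^2 + 4c_4 e_k^3 + O(e_k^4)\right],$$

where $c_j = \dfrac{f^{(j)}(\xi)}{j!\, f'(\xi)}$, $j = 2, 3, \ldots$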

Again a Taylor expansion of around allows us to get

From Theorem 2, it is evident that the newly introduced quad-parametric family has order of convergence one, irrespective of the values assigned to α, β, γ, and δ. Nevertheless, we can accelerate convergence by fixing two of the parameters, which reduces the family to a bi-parametric iterative scheme. The Mathematica code used to check this result is available in Appendix B.

Theorem 3 (bi-parametric family).

Let $f: I \subseteq \mathbb{R} \to \mathbb{R}$ be a sufficiently differentiable function in an open interval I and $\xi \in I$ a simple root of the nonlinear equation $f(x) = 0$. Let us suppose that $f'(x)$ is continuous and nonsingular at ξ, and $x_0$ is an initial estimate close enough to ξ. Then, the sequence $\{x_k\}_{k \geq 0}$ obtained by using the expression (3) converges to ξ with order three, provided that and , its error equation being

where , , , and α, δ are arbitrary parameters.

Proof.

Using the results of Theorem 2 to cancel and accompanying and in (12), respectively, it must be satisfied that

According to the findings in Theorem 3, it is evident that the newly introduced bi-parametric family

where , and , exhibits third-order convergence for all values of α and δ. Moreover, by restricting one more parameter and thus moving to a uni-parametric iterative scheme, we not only retain third-order convergence but also obtain a simpler error equation, which makes it possible to single out a member with a higher order of convergence.

Corollary 1 (uni-parametric family).

Let $f: I \subseteq \mathbb{R} \to \mathbb{R}$ be a sufficiently differentiable function in an open interval I and $\xi \in I$ a simple root of the nonlinear equation $f(x) = 0$. Let us suppose that $f'(x)$ is continuous and nonsingular at ξ, and $x_0$ is an initial estimate close enough to ξ. Then, the sequence $\{x_k\}_{k \geq 0}$ obtained by using the expression (17) converges to ξ with order three, provided that , its error equation being

where , , , and α is an arbitrary parameter. Indeed, and, therefore, provides the only member of the family with optimal fourth-order convergence.

Proof.

Using the results of Theorem 3 to reduce the expression of accompanying in (15), it must be satisfied that and/or . It is easy to show that the first equation has infinitely many solutions for

Based on the outcomes derived from Corollary 1, it becomes apparent that the recently introduced uni-parametric family, which we will call MCCTU(),

where , , and consistently exhibits a convergence order of three, regardless of the chosen value for . Nevertheless, a remarkable observation emerges when : in such a case, a member of this family attains an optimal convergence order of four.

Due to the previous results, we have chosen to concentrate our efforts solely on the MCCTU() class of iterative schemes moving forward. To pinpoint the most effective members within this family, we will utilize dynamical techniques outlined in Section 3.

3. Stability Analysis

This section delves into the examination of the dynamical characteristics of the rational operator linked to the iterative schemes within the MCCTU() family. This exploration provides crucial insights into the stability and dependence of the members of the family with respect to the initial estimations used. To shed light on the performance, we create rational operators and visualize their dynamical planes. These visualizations enable us to discern the behavior of specific methods in terms of the attraction basins of periodic orbits, fixed points, and other relevant dynamics.

Now, we introduce the basic concepts of complex dynamics used in the dynamical analysis of iterative methods. The texts [29,30], among others, provide extensive and detailed information on this topic.

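Given a rational function $R: \hat{\mathbb{C}} \to \hat{\mathbb{C}}$, where $\hat{\mathbb{C}}$ is the Riemann sphere, the orbit of a point $z_0 \in \hat{\mathbb{C}}$ is defined as

$$\{z_0, R(z_0), R^2(z_0), \ldots, R^n(z_0), \ldots\}.$$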

We are interested in the asymptotic behavior of the orbits as a function of the initial estimate $z_0$, analyzed in the dynamical plane of the rational function R associated with each iterative method.

To obtain these dynamical planes, we must first classify the fixed or periodic points of the rational operator R. A point $z_F \in \hat{\mathbb{C}}$ is called a fixed point if it satisfies $R(z_F) = z_F$. If the fixed point is not a solution of the equation, it is called a strange fixed point. A point $z_P$ is said to be a periodic point of period $p > 1$ if $R^p(z_P) = z_P$ and $R^k(z_P) \neq z_P$ for $k < p$. A critical point $z_C$ is a point where $R'(z_C) = 0$.

On the other hand, a fixed point $z_F$ is called attracting if $|R'(z_F)| < 1$, superattracting if $|R'(z_F)| = 0$, repulsive if $|R'(z_F)| > 1$, and parabolic if $|R'(z_F)| = 1$.
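These criteria translate directly into code. The sketch below, whose function name is hypothetical, classifies a finite fixed point from an exactly supplied derivative $R'$; the tolerance is an assumption needed for floating-point comparisons (notably in the parabolic case), and a fixed point at infinity is not covered (it would be studied through the change of variable $w = 1/z$). The examples use $R(z) = z^2$, which has a superattracting fixed point at 0 and a repulsive one at 1.

```python
def classify_fixed_point(R, dR, z, tol=1e-12):
    """Classify a finite fixed point z of R from |R'(z)| (tol absorbs rounding)."""
    if abs(R(z) - z) > 1e-9:
        raise ValueError(f"{z} is not a fixed point of R")
    m = abs(dR(z))
    if m < tol:
        return "superattracting"   # R'(z) = 0
    if m < 1 - tol:
        return "attracting"        # 0 < |R'(z)| < 1
    if m > 1 + tol:
        return "repulsive"         # |R'(z)| > 1
    return "parabolic"             # |R'(z)| = 1


def square(z):
    return z * z


def dsquare(z):
    return 2 * z


print(classify_fixed_point(square, dsquare, 0))  # superattracting
print(classify_fixed_point(square, dsquare, 1))  # repulsive
```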

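The basin of attraction of an attractor $z^*$ is defined as the set of its pre-images of any order:

$$\mathcal{A}(z^*) = \{ z_0 \in \hat{\mathbb{C}} : R^n(z_0) \to z^*, \ n \to \infty \}.$$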

The Fatou set $\mathcal{F}$ consists of the points whose orbits tend to an attractor (fixed point, periodic orbit, or infinity). Its complement in $\hat{\mathbb{C}}$ is the Julia set, $\mathcal{J}$. Therefore, the Julia set includes all the repulsive fixed points and periodic orbits, and also their pre-images. So, the basin of attraction of any attracting fixed point belongs to the Fatou set. Conversely, the boundaries of the basins of attraction compose the Julia set.

The following classical result, due to Fatou [31] and Julia [32], covers both periodic points (of any period) and fixed points, the latter regarded as periodic points of period one.

Theorem 4. ([31,32]).

Let R be a rational function. The immediate basin of attraction of each attracting periodic point contains at least one critical point.

By means of this key result, all the attracting behavior can be found by using the critical points as seeds.

3.1. Rational Operator

While the fixed-point operator can be formulated for any nonlinear function, our focus here lies on constructing this operator for low-degree nonlinear polynomial equations, in order to obtain a rational function. This choice stems from the fact that the stability or instability criteria applied to methods on these equations can often be extended to other cases. Therefore, we introduce the following nonlinear equation represented by $p(z)$:

$$p(z) = (z - a)(z - b) = 0,$$

where $a, b \in \mathbb{C}$ are the roots of the polynomial.

Let us remark that when MCCTU() is directly applied to $p(z)$, parameter disappears from the resulting rational expression, so no dynamical analysis can be made. However, if we use parameter appearing in Corollary 1, the same class of iterative methods can be expressed as MCCTU() and the dynamical analysis can be made depending on .

Proposition 1 (rational operator).

Proof.

Let $p(z)$ be a generic quadratic polynomial function with roots $a, b \in \mathbb{C}$. We apply the iterative scheme MCCTU() given in (20) on $p(z)$ and obtain a rational function that depends on the roots and the parameters . Then, by using a Möbius transformation (see [22,33,34]) on this rational function with

$$h(z) = \frac{z - a}{z - b},$$

satisfying $h(\infty) = 1$, $h(a) = 0$, and $h(b) = \infty$, we get

which depends on two arbitrary parameters , thus completing the proof. □
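The effect of the Möbius transformation can be checked numerically on a simpler case. The sketch below uses Newton’s method, not MCCTU, on $p(z) = (z - a)(z - b)$ with arbitrarily chosen roots; for Newton’s method, the classical result is that $h \circ N \circ h^{-1}$ equals $z^2$, independently of $a$ and $b$. For MCCTU, the same procedure leads instead to the parameter-dependent operator of Proposition 1.

```python
def newton_quadratic(z: complex, a: complex, b: complex) -> complex:
    """Newton's operator N(z) for p(z) = (z - a)(z - b)."""
    return z - (z - a) * (z - b) / (2 * z - a - b)


def mobius(z: complex, a: complex, b: complex) -> complex:
    """h(z) = (z - a)/(z - b): sends a -> 0, b -> infinity, infinity -> 1."""
    return (z - a) / (z - b)


def mobius_inv(w: complex, a: complex, b: complex) -> complex:
    """Inverse of h: z = (b w - a)/(w - 1)."""
    return (b * w - a) / (w - 1)


def conjugated(w: complex, a: complex, b: complex) -> complex:
    """(h o N o h^{-1})(w): Newton's operator in the Moebius coordinates."""
    return mobius(newton_quadratic(mobius_inv(w, a, b), a, b), a, b)


a, b = 2 + 1j, -0.5 - 3j            # arbitrary sample roots
for w in (0.3 + 0.4j, -1.7 + 0.2j, 2.5j):
    print(w, conjugated(w, a, b), w * w)  # the last two columns agree
```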

From Proposition 1, if we set , we obtain

and, then, it is easy to show that the rational operator simplifies to the expression

which does not depend on any free parameters.

3.2. Fixed Points

Now, we calculate all the fixed points of given by (22), in order to analyze their character (attracting, repulsive, neutral or parabolic) afterwards.

Proposition 2.

The fixed points of are $z = 0$, $z = \infty$, and also five strange fixed points:

- ,

- , and

- .

By using Equation (24), the strange fixed points and do not depend on any free parameter,

- , and

- .

Moreover, the strange fixed points depending on ϵ are complex conjugates, and . If , and , so the number of strange fixed points is three. Indeed, and .

From Proposition 2, we establish that there are seven fixed points. Among these, 0 and ∞ come from the roots a and b of $p(z)$. comes from the divergence of the original scheme, prior to the Möbius transformation.

Proposition 3.

The strange fixed point , , has the following character:

- (i)

- If , then is an attractor.

- (ii)

- If , then is a repulsor.

- (iii)

- If , then is parabolic.

Moreover, can be attracting but not superattracting. The superattracting fixed points of are , , and the strange fixed points for and .

In the particular case of (using Equation (24)), all the strange fixed points are repulsive.

Proof.

We prove this result by analyzing the stability of the fixed points found in Proposition 2. This is done by evaluating the modulus of the derivative of the rational operator at each fixed point: if it is lower than, equal to, or greater than one, the fixed point is called attracting, neutral, or repulsive, respectively.

The cases of $z = 0$ and ∞ are straightforward from the expression of . When is studied, then

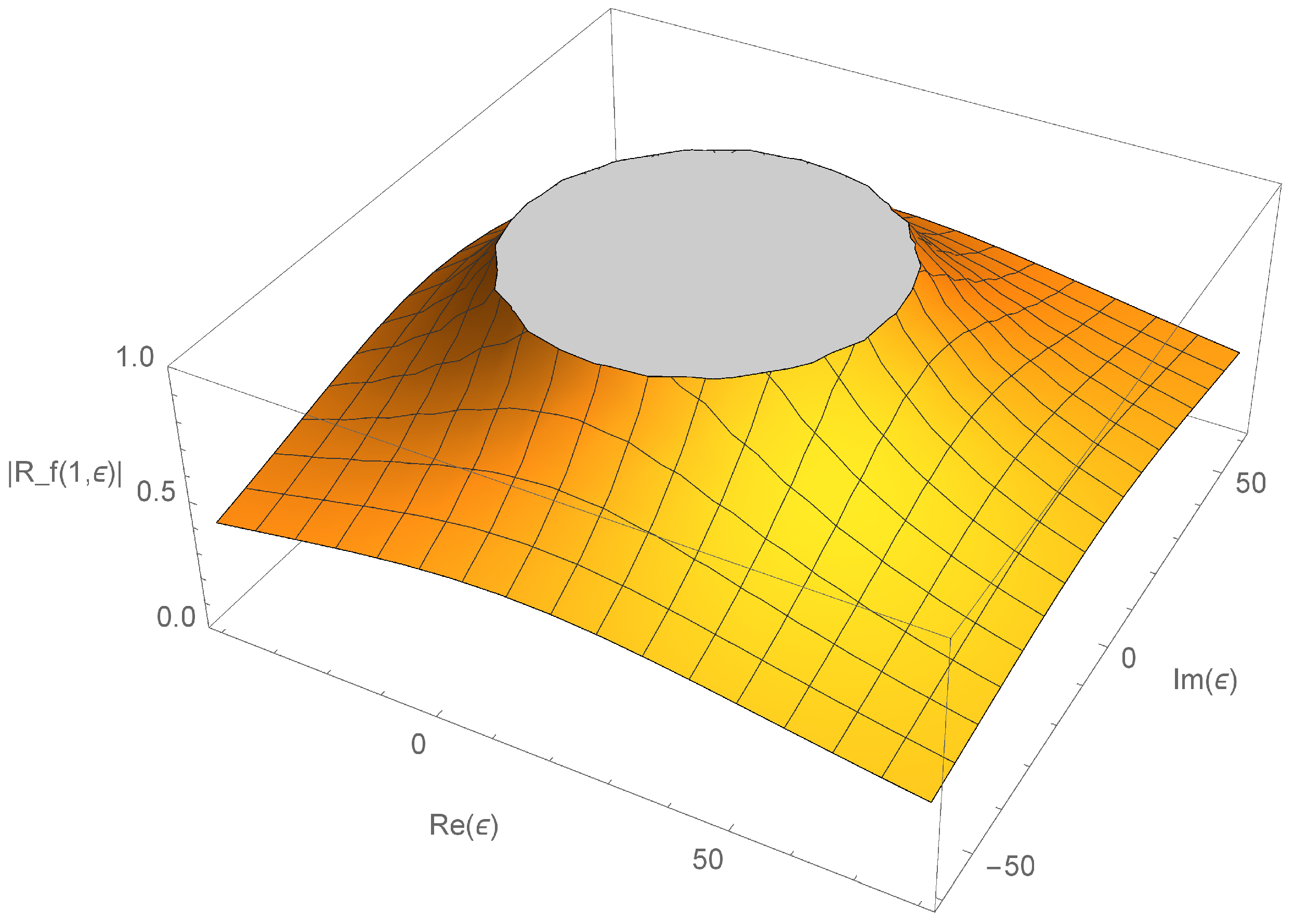

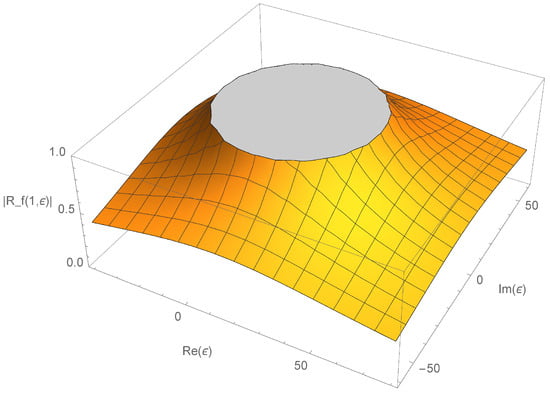

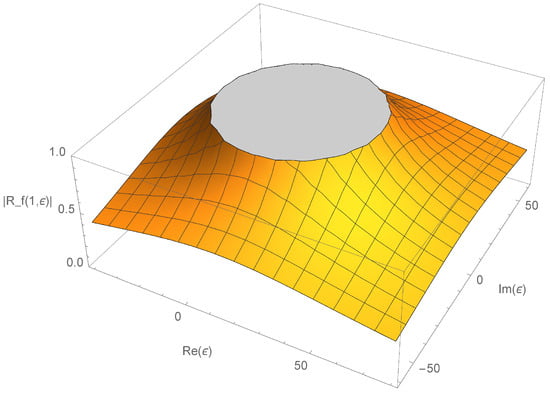

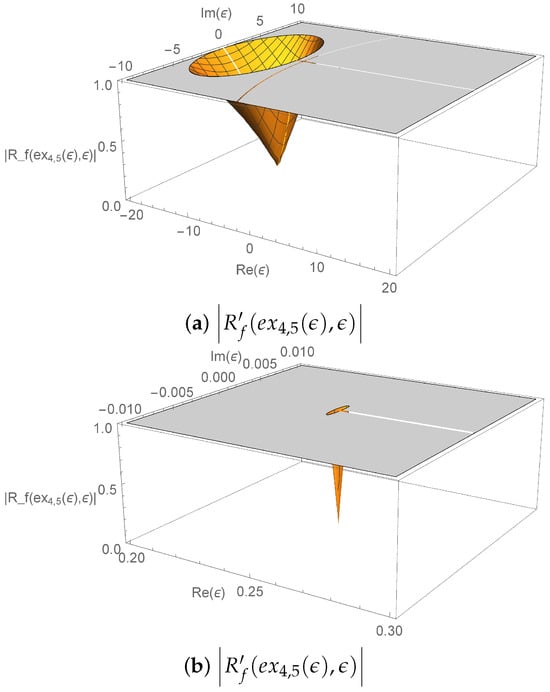

so it is attracting, repelling, or neutral if is greater than, lower than, or equal to 32, respectively. This can be seen graphically in Figure 1.

Figure 1.

Stability function of , for a complex .

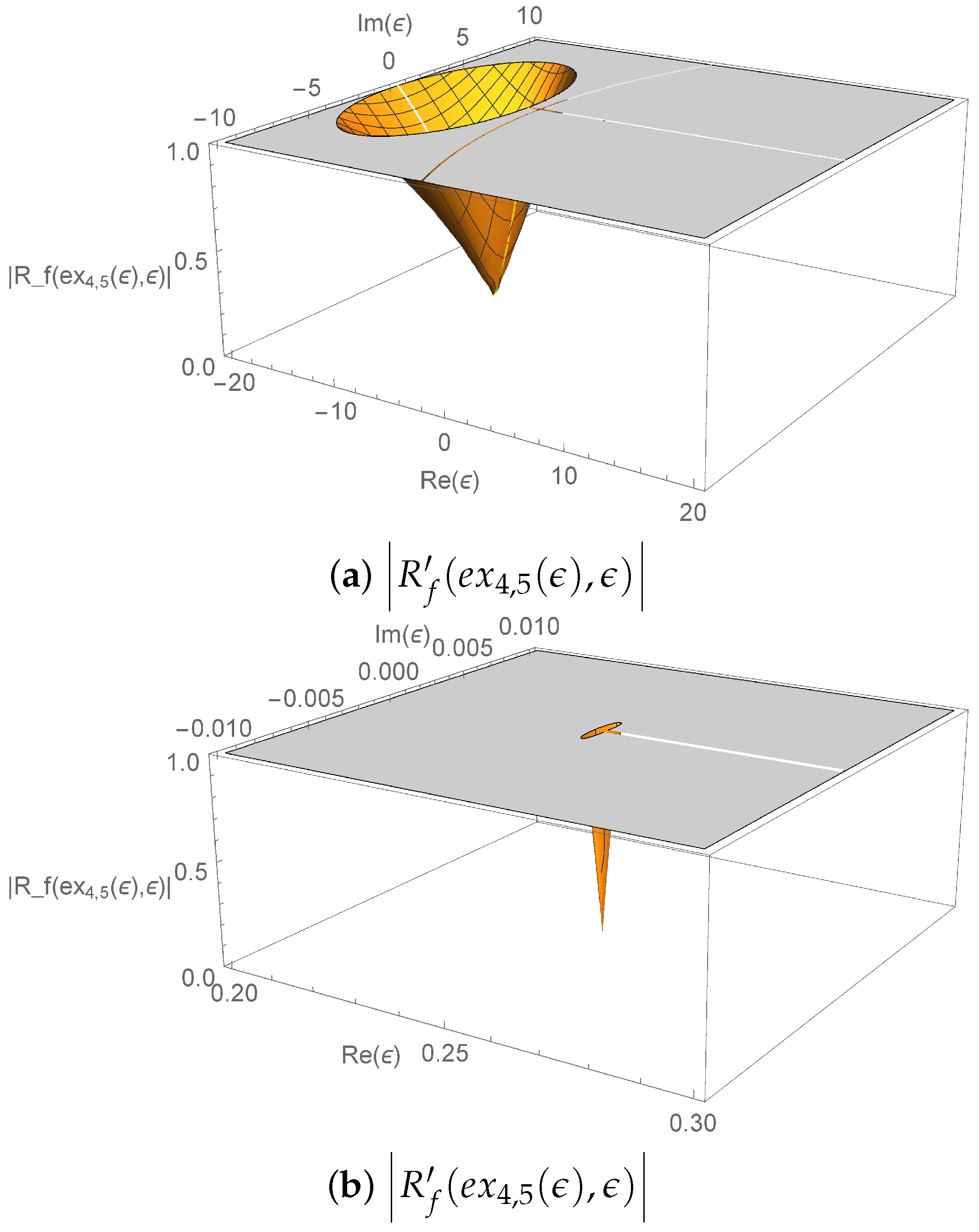

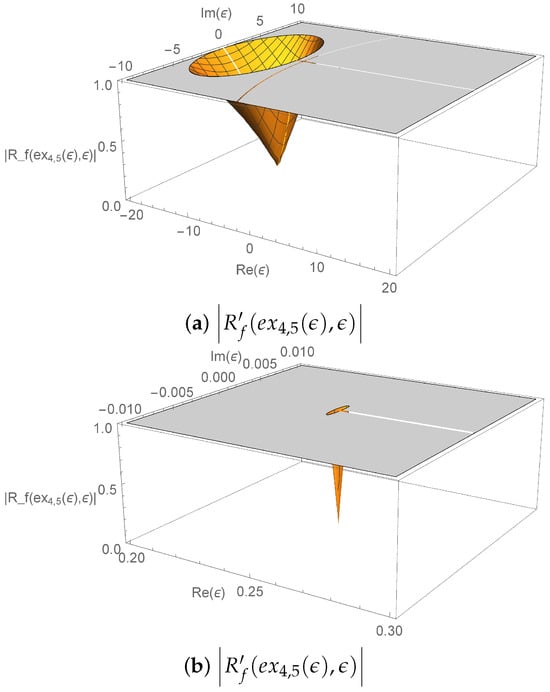

By a graphical and numerical study of , , it can be deduced that are repulsive for all , while are superattracting for or . Their stability functions are presented in Figure 2a,b. Moreover, cannot be a superattractor as . □

Figure 2.

Stability surfaces of for different complex regions.

It is clear that 0 and ∞ are always superattracting fixed points, but the stability of the remaining fixed points depends on the values of . According to Proposition 3, two strange fixed points can become superattractors. This implies that there would exist basins of attraction for them, potentially causing the method to fail to converge to the solution. Moreover, such basins of attraction exist even when these points are only attracting (as can be the case of ).

As we have stated previously, Figure 1 represents the stability function of the strange fixed point . In this figure, the attraction zone is the yellow area and the repulsion zone corresponds to the grey area. For values of within the grey disk, is repulsive, whereas for values of outside the grey disk, becomes attracting. So, it is natural to select values within the grey disk, since a repulsive fixed point associated with divergence improves the performance of the iterative scheme.

Similar conclusions can be drawn from the stability region of the strange fixed points , appearing in Figure 2. When a value of parameter is taken in the yellow area of Figure 2, both points are simultaneously attracting, so there are at least four different basins of attraction.

However, basins of attraction also appear when there exist attracting periodic orbits of any period. Critical points play a crucial role in detecting this kind of behavior.

3.3. Critical Points

Now, we obtain the critical points of .

Proposition 4.

The critical points of are , and also:

- , and

- .

Moreover, if , critical points are not free . In any other case, are complex-conjugate free critical points.

From Proposition 4, we establish that, in general, there are five critical points. The free critical point is a pre-image of the strange fixed point . Therefore, the stability of corresponds to the stability of (see Section 3.2). Note that if Equation (24) is satisfied, the only remaining free critical point is . Since is a pre-image of , it would be a repulsor.

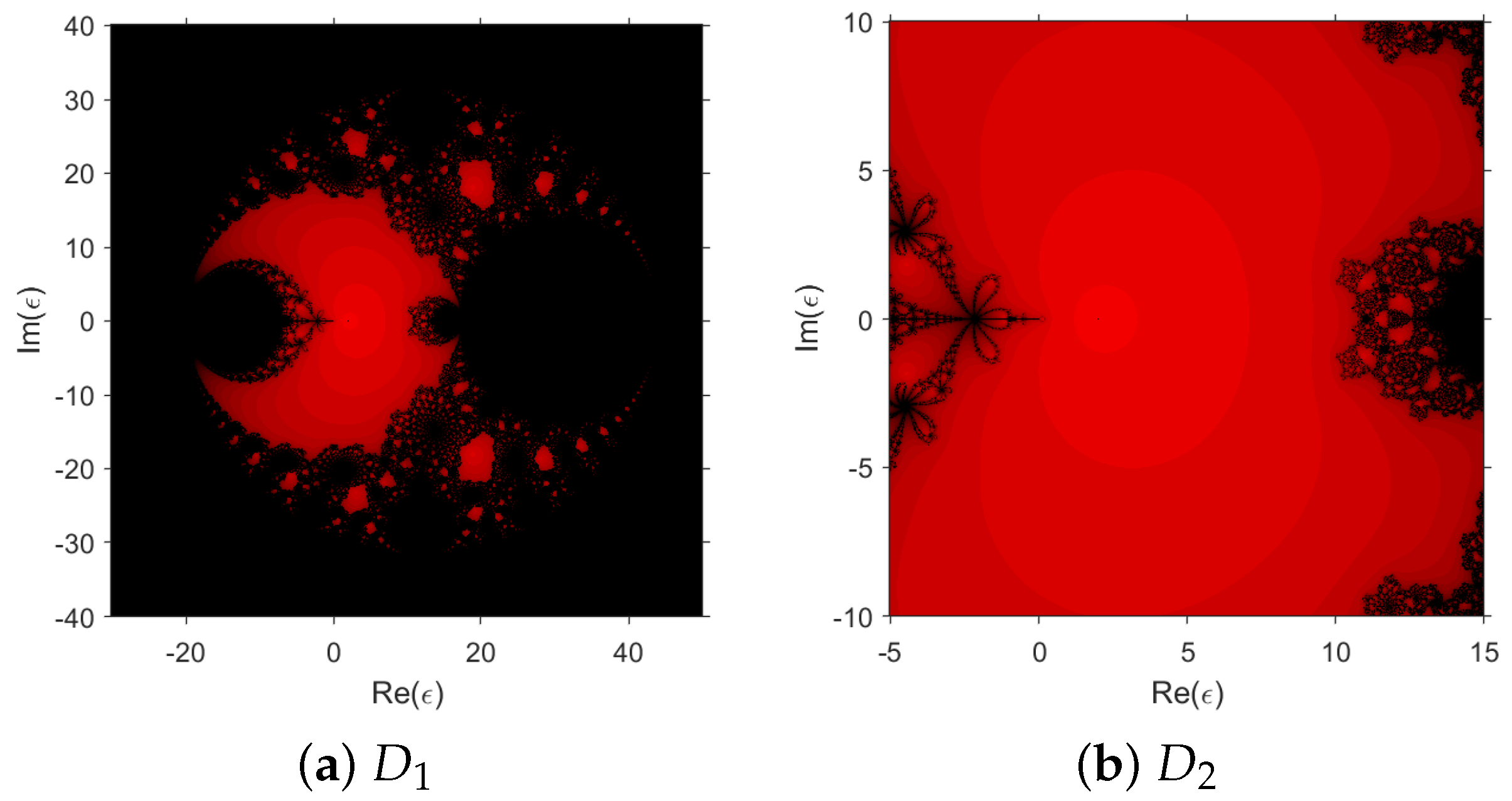

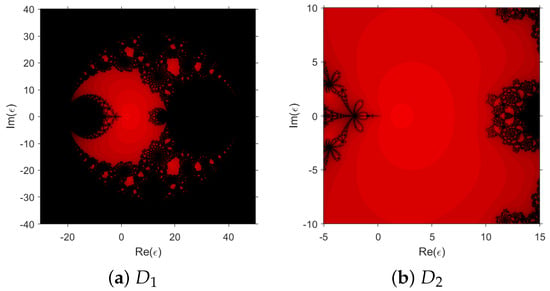

Then, we use the only independent free critical point (equivalently, , as they are conjugates) to generate the parameter plane. This is a graphical representation of the global stability performance of the members of the class of iterative methods. In a given region of the complex plane, a mesh of points is generated. Each one of these points is used as a value of parameter , that is, it defines a particular element of the family. For each one of these values, we take the critical point as our initial guess and calculate its orbit. If it converges to 0 or ∞, then the point corresponding to this value of is represented in red; otherwise, it is left in black. So, schemes that converge to the original roots of the quadratic equation appear in the red stable area, and the black area corresponds to schemes of the class that are not able to converge to them because of an attracting strange fixed point or periodic orbit. This performance can be seen in Figure 3, representing the domain , where a wide area of stable performance can be found around the origin (Figure 3b).

Figure 3.

Parameter plane of on domain and a detail on .
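The parameter-plane procedure described above can be sketched as follows. Since the MCCTU operator is not reproduced in this section, the sketch uses, as a hypothetical stand-in, the damped Newton family $z - \lambda\, p(z)/p'(z)$ on $p(z) = z^2 - 1$ (roots $\pm 1$, without Möbius transformation). Its operator $R_\lambda(z) = (1 - \lambda/2) z + \lambda/(2z)$ has free critical points $\pm\sqrt{\lambda/(2 - \lambda)}$; because $R_\lambda$ is odd, one of them suffices, mirroring the use of a single independent critical point above. The tolerance, iteration cap, and grid are assumptions.

```python
import cmath


def damped_newton_op(z: complex, lam: complex) -> complex:
    """R_lam(z) = z - lam * p(z)/p'(z) for p(z) = z^2 - 1, simplified."""
    return (1 - lam / 2) * z + lam / (2 * z)


def critical_point_converges(lam: complex, max_iter: int = 200,
                             tol: float = 1e-8) -> bool:
    """True if the orbit of the free critical point reaches the root +1 or -1."""
    if lam == 0 or lam == 2:
        return False                        # degenerate members of the family
    z = cmath.sqrt(lam / (2 - lam))         # solves R_lam'(z) = 0
    for _ in range(max_iter):
        if abs(z - 1) < tol or abs(z + 1) < tol:
            return True                     # "red": converges to a root
        if z == 0 or abs(z) > 1e12:
            return False                    # hit the pole or escaped to infinity
        z = damped_newton_op(z, lam)
    return False                            # "black": other attracting behavior


def parameter_plane(re_range, im_range, n):
    """n x n grid of booleans over the lam-plane (rows from top to bottom)."""
    (re0, re1), (im0, im1) = re_range, im_range
    grid = []
    for j in range(n):
        im = im1 - j * (im1 - im0) / (n - 1)
        grid.append([critical_point_converges(
                         complex(re0 + i * (re1 - re0) / (n - 1), im))
                     for i in range(n)])
    return grid


# Coarse text rendering: '#' = convergent member (red), '.' = not (black).
for row in parameter_plane((0.05, 3.0), (-1.5, 1.5), 21):
    print("".join("#" if ok else "." for ok in row))
```

For $\lambda = 3$, for instance, the critical point $i\sqrt{3}$ falls into a superattracting cycle of period 2, so that member would appear in black.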

3.4. Dynamical Planes

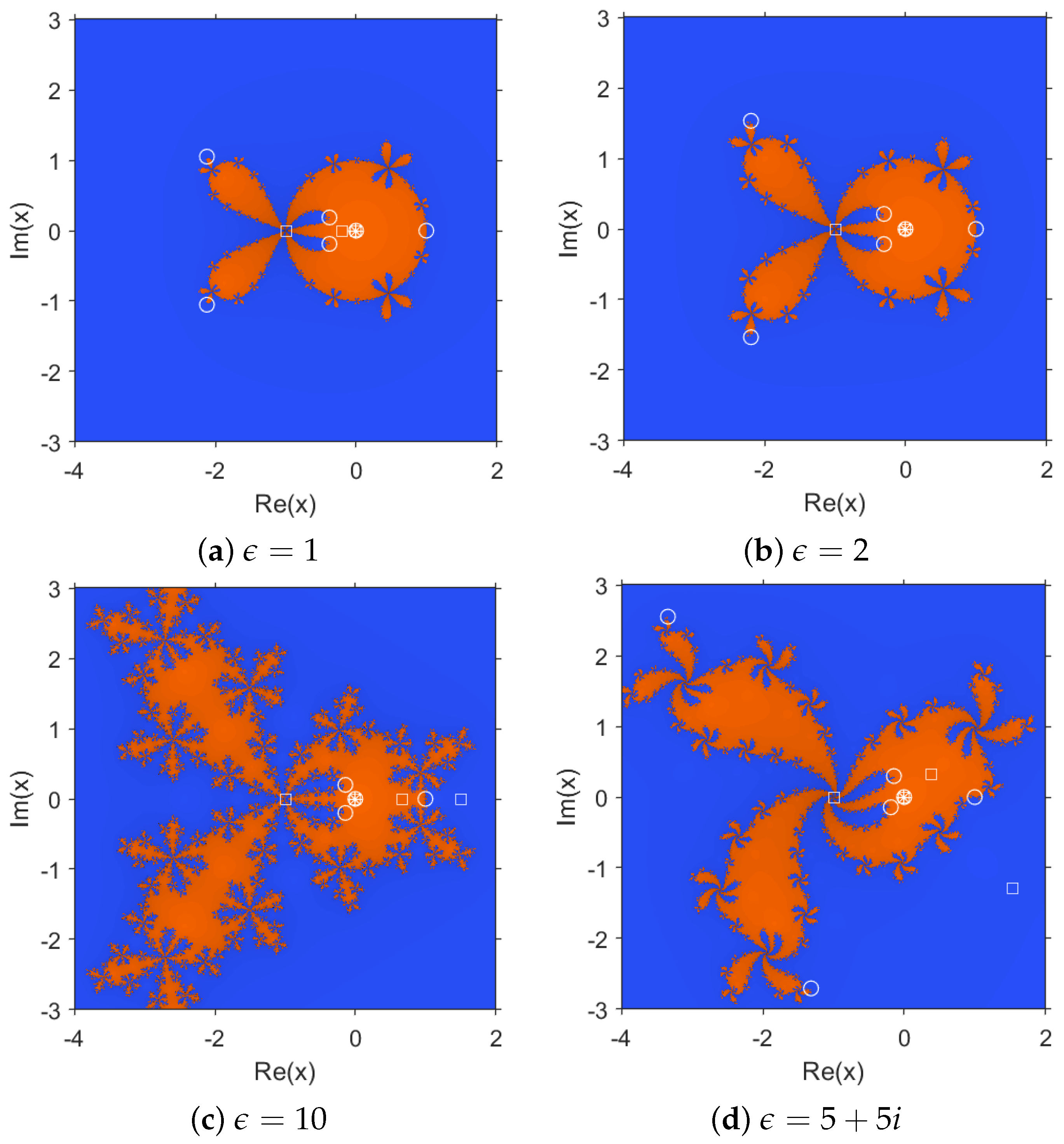

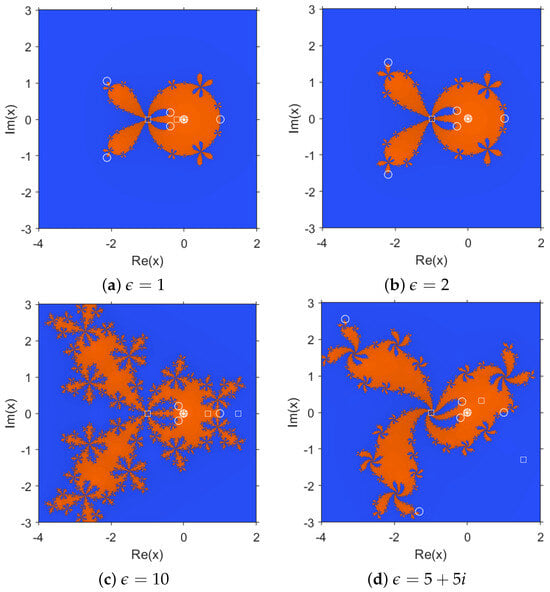

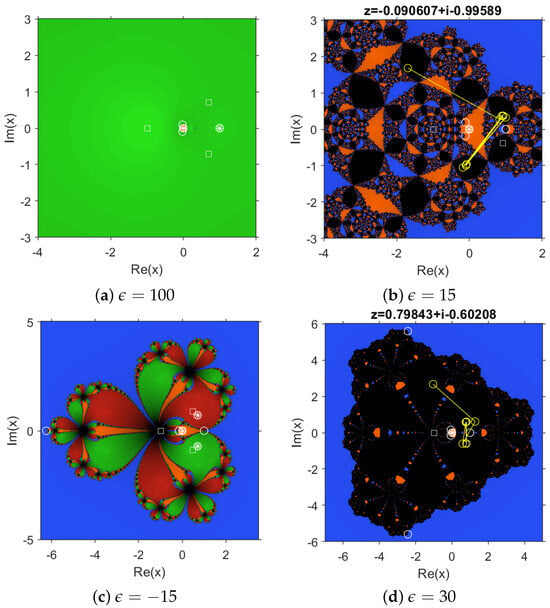

A dynamical plane is defined as a mesh in a limited domain of the complex plane, where each point corresponds to a different initial estimate . The graphical representation shows the method’s convergence starting from within a maximum of 80 iterations and with as the tolerance. Fixed points appear as a white circle ‘○’, critical points as ‘□’, and a white asterisk ‘∗’ symbolizes an attracting point. Additionally, the basins of attraction are depicted in different colors. To generate these graphs, we use MATLAB R2020b with a resolution of pixels.
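A text-only sketch of this procedure is shown below, assuming, as after the Möbius transformation, that the roots sit at 0 and ∞. For illustration it uses Newton’s conjugated operator $z^2$ rather than the MCCTU operator (22); the cap of 80 iterations follows the text, whereas the tolerance $10^{-3}$, the escape radius $10^3$, and the $41 \times 41$ mesh are hypothetical choices. Points labelled -1 reach neither root within the cap; for $z^2$ these lie on the unit circle, which is its Julia set.

```python
def dynamical_plane(R, re_range, im_range, n, max_iter=80, tol=1e-3):
    """Label each initial guess z0 of an n x n mesh by where its orbit ends.

    Label 0: orbit reaches 0; label 1: orbit reaches infinity (|z| > 1/tol);
    label -1: neither within max_iter iterations.
    """
    (re0, re1), (im0, im1) = re_range, im_range
    plane = []
    for j in range(n):                       # rows from top (im1) to bottom (im0)
        im = im1 - j * (im1 - im0) / (n - 1)
        row = []
        for i in range(n):
            z = complex(re0 + i * (re1 - re0) / (n - 1), im)
            label = -1
            for _ in range(max_iter):
                if abs(z) < tol:
                    label = 0
                    break
                if abs(z) > 1 / tol:
                    label = 1
                    break
                z = R(z)
            row.append(label)
        plane.append(row)
    return plane


# Newton's operator on quadratics, after the Moebius conjugation: O(z) = z^2.
plane = dynamical_plane(lambda z: z * z, (-2, 2), (-2, 2), 41)
for row in plane[::4]:
    print("".join({0: "o", 1: "+", -1: "."}[v] for v in row))
```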

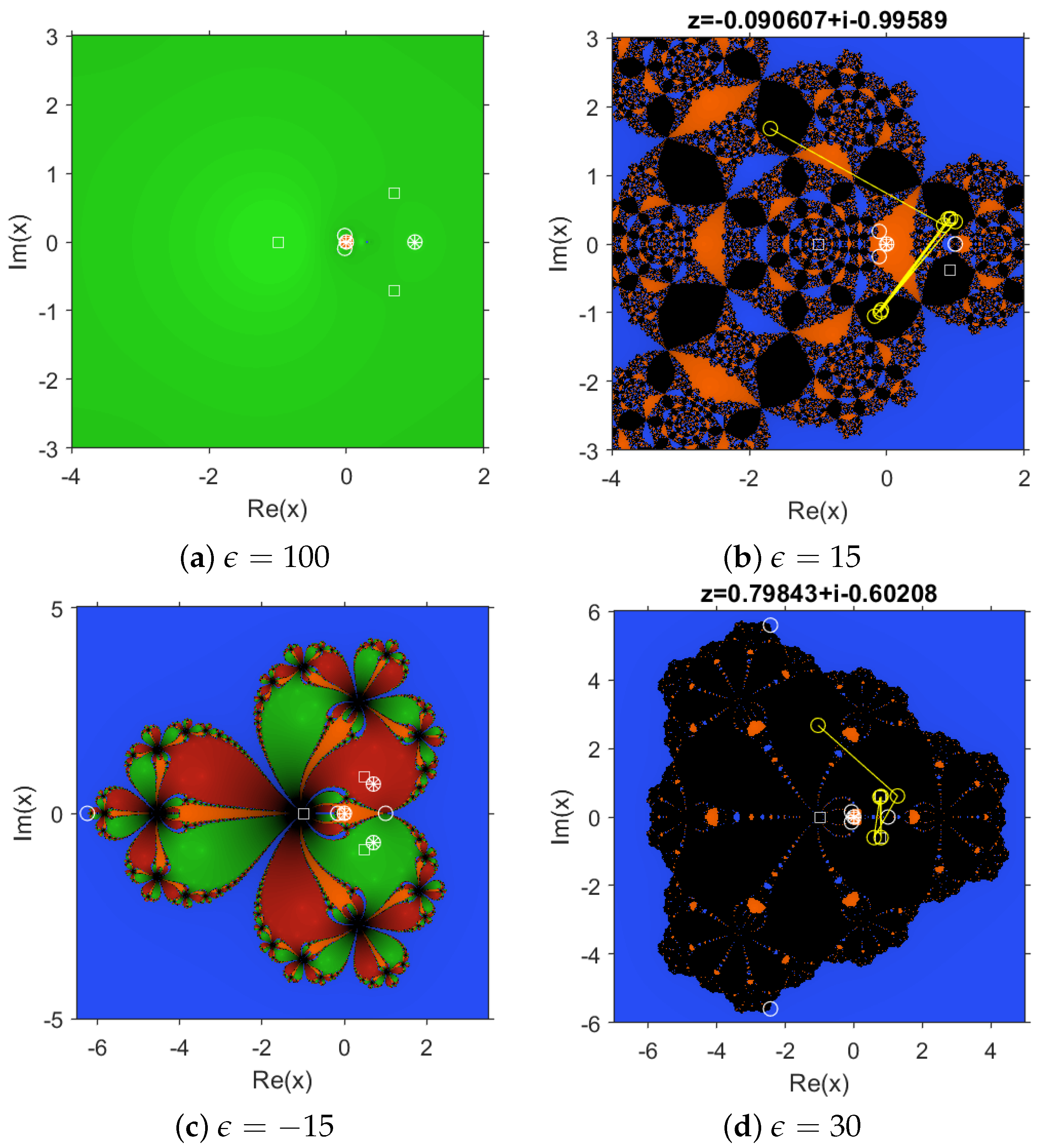

Here, we analyze the stability of various MCCTU() methods using dynamical planes. We consider methods with values both inside and outside the stability surface of , specifically, in the red and black areas of the parameter plane represented in Figure 3a.

Firstly, examples of methods within the stability region are provided for . Their dynamical planes, along with their respective basins of attraction, are shown in Figure 4. Let us remark that all selected values of lie in the red area of the parameter plane and have only two basins of attraction, corresponding to (in orange color in the figures) and (blue in the figures).

Figure 4.

Dynamical planes for some stable methods.

Secondly, some schemes outside the stability region (in black in the parameter plane) are provided for . Their dynamical planes are shown in Figure 5. Each of these members has specific characteristics: in Figure 5a, the widest basin of attraction (in green) corresponds to , which is attracting for this value of , while the basin of is a very narrow area around the point; for , we observe in Figure 5b three different basins of attraction, the third one corresponding to attracting periodic orbits of period 2 (one of them is plotted in yellow in the figure); Figure 5c corresponds to , inside the stability area of (see Figure 2), where both points are simultaneously attracting; finally, for , the widest basin of attraction corresponds to an attracting periodic orbit of period 2 (see Figure 5d).

Figure 5.

Unstable dynamical planes.

4. Numerical Results

In this section, we conduct several numerical tests to validate the theoretical convergence and stability results of the MCCTU() family obtained in previous sections. We use both stable and unstable methods from (20) and apply them to ten nonlinear test equations, with their expressions and corresponding roots provided in Table 1.

Table 1.

Nonlinear test equations and corresponding roots.

We aim to demonstrate the theoretical results by testing the MCCTU() family. Specifically, we evaluate three representative members of the family with and , , and . Therefore, in all cases, .

We conduct two experiments. In the first experiment, we analyze the stability of the MCCTU() family using two of its methods, chosen based on stable and unstable values of the parameter . In the second experiment, we perform an efficiency analysis of the MCCTU() family through a comparative study between its optimal stable member and fifteen different fourth-order methods from the literature: Ostrowski (OS) in [12,35], King (KI) in [35,36], Jarratt (JA) in [35,37], Özban and Kaya (OK1, OK2, OK3) in [8], Chun (CH) in [38], Maheshwari (MA) in [39], Behl et al. (BMM) in [40], Chun et al. (CLND1, CLND2) in [41], Artidiello et al. (ACCT1, ACCT2) in [42], Ghanbari (GH) in [43], and Kou et al. (KLW) in [44].
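To illustrate the structure shared by several of these schemes, namely a Newton predictor followed by a corrector with a frozen derivative and a weight function, the sketch below implements Ostrowski’s method in its standard form

$$y_k = x_k - \frac{f(x_k)}{f'(x_k)}, \qquad x_{k+1} = y_k - \frac{f(y_k)}{f'(x_k)}\,\frac{f(x_k)}{f(x_k) - 2f(y_k)},$$

which uses three functional evaluations per iteration and is therefore optimal of order four. The test function $x^3 + 4x^2 - 10$ is a hypothetical choice and is not claimed to be one of the equations in Table 1.

```python
from decimal import Decimal, getcontext

getcontext().prec = 200  # high precision, so that four iterations are meaningful


def ostrowski_step(f, df, x):
    """One step of Ostrowski's method (order 4; evaluates f(x), f'(x), f(y))."""
    fx = f(x)
    if fx == 0:
        return x                      # x is already a root
    dfx = df(x)
    y = x - fx / dfx                  # Newton predictor
    fy = f(y)
    # Corrector: Newton-like step at y with the frozen derivative f'(x),
    # multiplied by the weight fx / (fx - 2 fy).
    return y - (fy / dfx) * fx / (fx - 2 * fy)


def f(x):
    return x ** 3 + 4 * x ** 2 - 10   # illustrative test function, root near 1.3652


def df(x):
    return 3 * x ** 2 + 8 * x


xs = [Decimal(1)]
for _ in range(4):
    xs.append(ostrowski_step(f, df, xs[-1]))
print(f"{xs[-1]:.30f}")
```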

While performing these numerical tests, we start the iterations with different initial estimates: close (), far (), and very far () from the root . This approach allows us to evaluate how sensitive the methods are to the initial estimation when finding a solution.

The calculations are performed using the MATLAB R2020b programming package with variable precision arithmetic set to 200 digits of mantissa (in Appendix C, an example with double-precision arithmetic is included). For each method, we analyze the number of iterations (iter) required to converge to the solution, with stopping criteria defined as or . Here, represents the error estimation between two consecutive iterations, and is the residual error of the nonlinear test function.

To check the theoretical order of convergence (p), we calculate the approximate computational order of convergence (ACOC) as described by Cordero and Torregrosa in [15]. In the numerical results, if the ACOC values do not stabilize throughout the iterative process, it is marked as ‘-’; and if any method fails to converge within a maximum of 50 iterations, it is marked as ‘nc’.
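The reporting conventions above (iteration count, ACOC, ‘-’ and ‘nc’) can be sketched as a small driver. The tolerance $10^{-100}$ used in both stopping criteria is a hypothetical choice, as is the rule that declares the ACOC stable when its last two values differ by less than 0.05; Python’s `decimal` module with 200 digits stands in for MATLAB’s variable-precision arithmetic, and Newton’s method on $x^2 - 2$ is only a demonstration.

```python
from decimal import Decimal, getcontext

getcontext().prec = 200  # 200 significant digits (stand-in for MATLAB vpa)


def acoc_values(xs):
    """ACOC from each window of four consecutive iterates (root not needed)."""
    vals = []
    for k in range(2, len(xs) - 1):
        d0 = xs[k - 1] - xs[k - 2]
        d1 = xs[k] - xs[k - 1]
        d2 = xs[k + 1] - xs[k]
        if 0 in (d0, d1, d2) or abs(d1) == abs(d0):
            continue  # logarithm of 0 or zero denominator: skip this window
        vals.append(abs(d2 / d1).ln() / abs(d1 / d0).ln())
    return vals


def run_method(step, f, x0, tol=Decimal("1e-100"), max_iter=50,
               stab=Decimal("0.05")):
    """Return (iterations, ACOC or '-', last iterate), or ('nc', '-', None)."""
    xs = [x0]
    for _ in range(max_iter):
        try:
            xs.append(step(xs[-1]))
        except ArithmeticError:  # e.g. division by zero inside the step
            return "nc", "-", None
        if abs(xs[-1] - xs[-2]) < tol or abs(f(xs[-1])) < tol:
            vals = acoc_values(xs)
            stable = len(vals) >= 2 and abs(vals[-1] - vals[-2]) < stab
            return len(xs) - 1, (float(vals[-1]) if stable else "-"), xs[-1]
    return "nc", "-", None  # neither stopping criterion met within max_iter


def f(x):
    return x * x - 2


def newton_step(x):
    return x - f(x) / (2 * x)


iters, acoc, root = run_method(newton_step, f, Decimal("1.5"))
print(iters, acoc)
```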

4.1. First Experiment: Stability Analysis of MCCTU() Family

In this experiment, we conducted a stability analysis of the MCCTU() family by considering values of both within the stability regions () and outside of them (), setting . The methods analyzed are of order 3, consistent with the theoretical convergence results. A special case occurs when , where the associated method never converges to the solution because the denominator in the relation becomes zero, causing to grow indefinitely.

The numerical performance of the iterative methods MCCTU(2) and MCCTU(100) is presented in Table 2 and Table 3, using initial estimates that are close, far, and very far from the root. This approach enables us to assess the stability and reliability of the methods under various initial conditions.

Table 2.

Numerical performance of MCCTU(2) method on nonlinear equations (“nc” means non-convergence).

Table 3.

Numerical performance of MCCTU(100) method on nonlinear equations (“nc” means non-convergence).

From the analysis of the first experiment, it is evident that the MCCTU(2) method exhibits robust performance. For initial estimates close to the root (), the method consistently converges to the solution with very low errors, achieving convergence in three or four iterations, and the ACOC value stabilizes at 3. For initial estimates that are far (), the number of iterations increases, but the method still converges to the solution in nine out of ten cases. For initial estimates that are very far (), the method maintains a similar performance, converging to the solution in eight out of ten cases. As the initial estimate moves further away, the method shows slight difficulty in finding the solution. This slight dependence is understandable given the complexity of the nonlinear functions and . Nonetheless, the method proves stable and robust, with a convergence order of 3, which verifies the theoretical results.

On the other hand, the MCCTU(100) method encounters significant difficulties in finding the solution. As the initial estimates move further away, the number of iterations increases. Despite its poor stability characteristics, the method converges to the solution for initial estimates close to the root. However, for initial estimates that are far and very far from the root, it fails to converge in four out of ten cases. Additionally, the ACOC value never stabilizes in any case. These results confirm the theoretical instability of the method, as lies outside the stability surface studied in Section 3.

4.2. Second Experiment: Efficiency Analysis of MCCTU() Family

In this experiment, we compare an optimal member of the MCCTU() family with the fifteen fourth-order methods mentioned in the introduction of Section 4, in terms of their numerical performance on nonlinear equations. We take the method associated with and , denoted MCCTU(1), as the optimal stable member of the MCCTU() family with fourth-order convergence.
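The optimality notion used here can be made explicit with a short worked check (our own illustration, not taken from the paper). Kung and Traub conjectured that a multipoint method without memory using d functional evaluations per iteration has order at most 2^{d-1}. Assuming, as in the Appendix A code, that each iteration evaluates f(x_k), f'(x_k) and f(y_k), so that d = 3, the bound and the corresponding efficiency indices I = p^{1/d} are:

```latex
p \le 2^{d-1} = 2^{3-1} = 4, \qquad
I = p^{1/d}: \quad
I_{\mathrm{Newton}} = 2^{1/2} \approx 1.414, \quad
I_{p=3,\,d=3} = 3^{1/3} \approx 1.442, \quad
I_{p=4,\,d=3} = 4^{1/3} \approx 1.587.
```

Under this assumption, a fourth-order member attains the Kung–Traub bound, whereas the generic third-order members with the same cost do not.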

Table 4 to Table 15 present the numerical results of all sixteen methods on the ten test equations, for initial estimates close to, far from, and very far from the root.

Table 4.

Numerical performance of iterative methods on nonlinear equations for close to (1/4).

Table 5.

Numerical performance of iterative methods on nonlinear equations for close to (2/4).

Table 6.

Numerical performance of iterative methods on nonlinear equations for close to (3/4).

Table 7.

Numerical performance of iterative methods on nonlinear equations for close to (4/4).

Table 8.

Numerical performance of iterative methods on nonlinear equations for far from (“nc” means non-convergence) (1/4).

Table 9.

Numerical performance of iterative methods on nonlinear equations for far from (“nc” means non-convergence) (2/4).

Table 10.

Numerical performance of iterative methods on nonlinear equations for far from (“nc” means non-convergence) (3/4).

Table 11.

Numerical performance of iterative methods on nonlinear equations for far from (4/4).

Table 12.

Numerical performance of iterative methods on nonlinear equations for very far from (“nc” means non-convergence) (1/4).

Table 13.

Numerical performance of iterative methods on nonlinear equations for very far from (“nc” means non-convergence) (2/4).

Table 14.

Numerical performance of iterative methods on nonlinear equations for very far from (“nc” means non-convergence) (3/4).

Table 4, Table 5, Table 6 and Table 7 show that MCCTU(1) consistently converges to the solution for initial estimates close to the root (), with a number of iterations similar to that of the other methods on all equations. The ACOC, which is close to 4, confirms the theoretical convergence order. To assess how strongly MCCTU(1) depends on the initial estimate, we also analyze the method for initial estimates far from and very far from the solution, specifically for and , respectively. The results are shown in Table 8, Table 9, Table 10, Table 11, Table 12, Table 13, Table 14 and Table 15.

Table 15.

Numerical performance of iterative methods on nonlinear equations for very far from (“nc” means non-convergence) (4/4).

The results in Table 8, Table 9, Table 10 and Table 11 are promising. MCCTU(1) converges to the solution for nine of the ten nonlinear equations, even when the initial estimate is far from the root (), and in these cases the ACOC consistently stabilizes close to 4. It fails to converge only for the function , as do thirteen of the other fifteen methods; for this equation, only two methods approximate the root. On the remaining equations, MCCTU(1) converges with a number of iterations comparable to that of the other methods and, as seen with function , even requires fewer iterations than Ostrowski’s method. These results confirm the robustness of the method, consistent with the stability results shown in previous sections.

The results in Table 12, Table 13, Table 14 and Table 15 confirm the robustness of the MCCTU(1) method for initial estimates very far from the root (), as the method converges in eight out of ten cases. A slight dependence on the initial estimate is observed for functions and , where the method does not converge; however, in these two cases the other methods also fail to approximate the solution, except for the ACCT2 method, which reaches the root of function after 50 iterations. This indicates that the difficulty lies mainly in the complexity of these equations. When MCCTU(1) converges, it does so with a number of iterations comparable to, and often smaller than, that of the other methods, as seen with function , and the ACOC consistently stabilizes close to 4.

Therefore, based on the second experiment, we conclude that the MCCTU() family, taking the optimal stable member with as a representative, shows strong numerical performance and remains robust and efficient even from challenging initial estimates. Overall, MCCTU(1) attains low errors and requires a similar or smaller number of iterations than the other methods; on some of the more complex nonlinear equations, it outperforms Ostrowski’s method and others. Whenever MCCTU(1) converges, the ACOC is close to 4, confirming the theoretical convergence order.

5. Conclusions

The development of the parametric family of multistep iterative schemes MCCTU() based on the damped Newton scheme has proven to be an effective strategy for solving nonlinear equations. Including an additional Newton step with a weight function and a “frozen” derivative yields a quad-parametric class of first order, and restricting its parameters raises the convergence order to three, giving a uni-parametric third-order family.

The numerical results confirm the robustness of the MCCTU(2) method for initial estimates close to the root (), with very low errors and convergence in three or four iterations. As the initial estimates move further away () and (), the method still performs well, converging in nine and eight out of ten cases, respectively, which is consistent with its theoretical stability and robustness.

Through the analysis of stability surfaces and dynamical planes, specific members of the MCCTU() family with exceptional stability were identified; these members are particularly suitable for scalar functions with challenging convergence behavior. Other members exhibit attracting periodic orbits and attracting strange fixed points in their corresponding dynamical planes. The MCCTU(1) member stood out for combining optimal order with stable performance.

In the comparative analysis, MCCTU(1) was competitive with, and in many cases better than, well-established fourth-order methods such as Ostrowski’s method, requiring a similar or smaller number of iterations. Its advantage is most noticeable on the more complex nonlinear equations, where it outperforms several alternative methods.

The theoretical convergence order of the MCCTU() family was confirmed by computing the approximate computational order of convergence (ACOC), whose value stabilized close to 3 in most cases. It was also confirmed that the method associated with is optimal, with fourth-order convergence.

Finally, the analysis revealed that certain members of the MCCTU() family, particularly those with values outside the stability surface, are significantly unstable and struggle to converge to the solution, especially when the initial estimates are far or very far from the root. For instance, the method with failed to converge in four out of ten cases, and its ACOC values never stabilized, confirming its theoretical instability. This highlights the importance of selecting parameter values within the stability regions to ensure reliable performance.

Author Contributions

Conceptualization, A.C. and J.R.T.; methodology, G.U.-C. and M.M.-M.; software, M.M.-M. and G.U.-C.; validation, M.M.-M.; formal analysis, J.R.T.; investigation, A.C.; writing—original draft preparation, M.M.-M.; writing—review and editing, A.C. and F.I.C.; supervision, J.R.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ayuda a Primeros Proyectos de Investigación program (PAID-06-23) of the Vicerrectorado de Investigación, Universitat Politècnica de València (UPV).

Data Availability Statement

Data is contained within the article.

Acknowledgments

The authors would like to thank the anonymous reviewers for their useful comments and suggestions.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Detailed Computation of Theorem 2

The proof of Theorem 2, detailed step by step in Section 2, can be verified in Wolfram Mathematica v13.2 with the following code:

```mathematica
(* Taylor expansion of f(x_k) in the error e_k; dFa stands for f' at the root *)
fx = dFa SeriesData[Subscript[e, k], 0, {0, 1, Subscript[C, 2], Subscript[C, 3],
    Subscript[C, 4], Subscript[C, 5]}, 0, 5, 1];
dfx = D[fx, Subscript[e, k]];
fx/dfx // Simplify; (* Newton correction f(x_k)/f'(x_k); output suppressed *)
(* Error in the first step *)
Subscript[y, e] = Simplify[Subscript[e, k] - \[Alpha]*fx/dfx];
fy = fx /. Subscript[e, k] -> Subscript[y, e] // Simplify;
(* Error in the second step *)
Subscript[x, e] = Subscript[y, e] - (\[Beta] + \[Gamma]*fy/fx +
    \[Delta]*(fy/fx)^2)*(fx/dfx) // Simplify
```

Appendix B. Detailed Computation of Theorem 3

The proof of Theorem 3, detailed step by step in Section 2, can be verified in Wolfram Mathematica v13.2 with the following code:

```mathematica
(* Taylor expansion of f(x_k) in the error e_k; dFa stands for f' at the root *)
fx = dFa SeriesData[Subscript[e, k], 0, {0, 1, Subscript[C, 2], Subscript[C, 3],
    Subscript[C, 4], Subscript[C, 5]}, 0, 5, 1];
dfx = D[fx, Subscript[e, k]];
fx/dfx // Simplify; (* Newton correction f(x_k)/f'(x_k); output suppressed *)
(* Error in the first step *)
Subscript[y, e] = Simplify[Subscript[e, k] - \[Alpha]*fx/dfx];
fy = fx /. Subscript[e, k] -> Subscript[y, e] // Simplify;
(* Error in the second step *)
Subscript[x, e] = Subscript[y, e] - (\[Beta] + \[Gamma]*fy/fx +
    \[Delta]*(fy/fx)^2)*(fx/dfx) // Simplify;
(* Order conditions: the e_k coefficient and the e_k^2 coefficient (divided by C2)
   of the second-step error must vanish; solve them for beta and gamma *)
Solve[1 - \[Beta] - \[Gamma] - \[Delta] - \[Alpha]^2 \[Delta] + \[Alpha]
    (-1 + \[Gamma] + 2 \[Delta]) == 0 && \[Beta] + \[Gamma] + \[Delta] + 2 \[Alpha]^3
    \[Delta] - \[Alpha]^2 (\[Gamma] + \[Delta]) - \[Alpha] (-1 + \[Gamma] + 2 \[Delta])
    == 0, {\[Beta], \[Gamma]}]
(* Substitute the solution to obtain the reduced (third-order) error expression *)
Subscript[x, e] = FullSimplify[Subscript[x, e] /. {\[Beta] -> ((-1 + \[Alpha])^2
    (-1 - \[Alpha] + \[Alpha]^2 \[Delta]))/\[Alpha]^2, \[Gamma] -> (1 - 2 \[Alpha]^2
    \[Delta] + 2 \[Alpha]^3 \[Delta])/\[Alpha]^2}]
```
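As an independent spot check of the final substitution, the following Python sketch (our own addition, not part of the authors' Mathematica validation) plugs the β(α, δ) and γ(α, δ) expressions into both equations passed to Solve and confirms, in exact rational arithmetic, that both vanish on a grid of sample values. The sample grid is an arbitrary, hypothetical choice; the symbolic computation remains the actual proof.

```python
from fractions import Fraction

def reduced_coefficients(alpha, delta):
    """beta(alpha, delta) and gamma(alpha, delta) as in the final substitution
    of the Appendix B code; requires alpha != 0."""
    beta = (alpha - 1) ** 2 * (-1 - alpha + alpha ** 2 * delta) / alpha ** 2
    gamma = (1 - 2 * alpha ** 2 * delta + 2 * alpha ** 3 * delta) / alpha ** 2
    return beta, gamma

def order_conditions(alpha, beta, gamma, delta):
    """Left-hand sides of the two equations passed to Solve in Appendix B."""
    eq1 = (1 - beta - gamma - delta - alpha ** 2 * delta
           + alpha * (-1 + gamma + 2 * delta))
    eq2 = (beta + gamma + delta + 2 * alpha ** 3 * delta
           - alpha ** 2 * (gamma + delta) - alpha * (-1 + gamma + 2 * delta))
    return eq1, eq2

# Hypothetical sample grid of nonzero rationals; Fraction keeps the check exact.
samples = [Fraction(n, d) for n in (-3, -1, 1, 2, 5) for d in (1, 2, 7)]
residuals = []
for alpha in samples:
    for delta in samples:
        beta, gamma = reduced_coefficients(alpha, delta)
        residuals.append(order_conditions(alpha, beta, gamma, delta))

print(all(r == (0, 0) for r in residuals))  # True if both conditions vanish everywhere
```

Because both equations are linear in β and γ with determinant α², the substitution is the unique solution whenever α ≠ 0, which is why the grid avoids α = 0.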

Appendix C. Additional Experiment Focused on Practical Calculations

In this experiment, we analyze the efficiency of the MCCTU(1) method, set with , in practical calculations, starting from initial estimates close to the roots (). All computations are carried out in MATLAB R2020b with standard floating-point arithmetic. We record the number of iterations (iter) required to reach the solution, with the stopping criterion . We also calculate the approximate computational order of convergence (ACOC) to verify the theoretical order of convergence (p). In the table, ACOC values that fluctuate or cannot be computed are marked with ‘-’, and methods that do not converge within 50 iterations are labeled ‘nc’. Additionally, this study examines whether the convergence order is affected by the number of digits of the arithmetic used, on the same ten nonlinear test equations listed in Table 1. The numerical results are presented in Table A1.
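The protocol above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB code: it uses the two-step scheme and the β, γ substitution from the Appendix A and B code, Python's `decimal` module to set the number of working digits, a hypothetical stopping test |x_{k+1} − x_k| < tol (the paper's actual criterion is not reproduced here), the 50-iteration cap with the ‘nc’ label, and the usual ACOC estimate from the last four iterates, returning None (shown as ‘-’) when it cannot be formed. The helper names and the parameter choice α = 1, δ = 2 are illustrative; we do not claim that this choice corresponds to MCCTU(1).

```python
from decimal import Decimal, localcontext

def step(f, df, x, alpha, delta):
    """One iteration of the two-step scheme in the Appendix A code:
    y = x - alpha*f(x)/f'(x),  t = f(y)/f(x),
    x_new = y - (beta + gamma*t + delta*t**2) * f(x)/f'(x),
    with beta, gamma from the Appendix B substitution (alpha != 0)."""
    beta = (alpha - 1) ** 2 * (-1 - alpha + alpha ** 2 * delta) / alpha ** 2
    gamma = (1 - 2 * alpha ** 2 * delta + 2 * alpha ** 3 * delta) / alpha ** 2
    fx, dfx = f(x), df(x)
    y = x - alpha * fx / dfx
    t = f(y) / fx
    return y - (beta + gamma * t + delta * t ** 2) * fx / dfx

def acoc(xs):
    """Usual ACOC estimate from the last four iterates; None ('-') if unavailable."""
    if len(xs) < 4:
        return None
    d1, d2, d3 = (abs(xs[i] - xs[i - 1]) for i in (-1, -2, -3))
    if 0 in (d1, d2, d3) or d2 == d3:
        return None
    return (d1 / d2).ln() / (d2 / d3).ln()

def solve(f, df, x0, alpha, delta, digits=50, tol="1e-15", max_iter=50):
    """Return (approximate root or 'nc', iterations, ACOC or None).
    digits, tol and the test |x_{k+1} - x_k| < tol are illustrative
    assumptions, not the settings used in the paper."""
    with localcontext() as ctx:
        ctx.prec = digits                      # number of significant digits
        alpha, delta, tol = Decimal(alpha), Decimal(delta), Decimal(tol)
        xs = [Decimal(x0)]
        for k in range(1, max_iter + 1):
            if f(xs[-1]) == 0:                 # exact zero at this precision
                return xs[-1], k - 1, acoc(xs)
            xs.append(step(f, df, xs[-1], alpha, delta))
            if abs(xs[-1] - xs[-2]) < tol:
                return xs[-1], k, acoc(xs)
    return "nc", max_iter, None                # no convergence within max_iter

f = lambda x: x * x - 2                        # hypothetical test function, root sqrt(2)
df = lambda x: 2 * x
print(solve(f, df, "1.5", 1, 2))               # alpha = 1, delta = 2 (illustrative)
print(solve(f, df, "1.5", 1, 0))               # alpha = 1, delta = 0 (third-order member)
```

With α = 1 the substitution gives β = 0 and γ = 1, so δ = 0 reduces to the classical third-order scheme x_{k+1} = y_k − f(y_k)/f'(x_k), while δ = 2 gives the weight 1 + 2t, which is of fourth order. From x_0 = 1.5 the sketch should report roughly 3 iterations with an ACOC near 4 for δ = 2, and 4 iterations with an ACOC near 3 for δ = 0; changing `digits` lets one probe how the arithmetic affects these estimates.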

Table A1.

Numerical results of MCCTU(1) in practical calculations for close to .

| Function | Initial estimate | Error | Iter | ACOC | Approximate root |
|---|---|---|---|---|---|
| | −0.6 | 8.4069 | 3 | 4.0111 | −0.6367 |
| | 0.2 | 4.0915 | 3 | 3.9624 | 0.2575 |
| | 0.6 | 1.8066 | 3 | 4.0121 | 0.6392 |
| | −14.1 | 3.6467 | 2 | - | −14.1013 |
| | 1.3 | 1.2827 | 3 | 4.0226 | 1.3652 |
| | 0.1 | 1.1439 | 3 | 3.9969 | 0.1281 |
| | −1.2 | 8.1090 | 3 | 4.0025 | −1.2076 |
| | 2.3 | 3.2363 | 3 | 4.0010 | 2.3320 |
| | −1.4 | 1.2504 | 3 | 3.9982 | −1.4142 |
| | −0.9 | 1.3096 | 3 | 4.0263 | −0.9060 |

This experiment confirms that the method converges to the solution in all cases, with errors below the prescribed threshold, within 2 or 3 iterations. The ACOC stabilizes at values between 3.96 and 4.03, in agreement with the theoretical fourth order. The results also indicate that the convergence order is not affected by the number of digits of the arithmetic used. The number of digits matters mainly when higher precision is required: for very small errors, a larger number of digits prevents divisions by zero. Finally, the ACOC for function cannot be calculated because the method converges in only 2 iterations, whereas formula (2) requires at least 3 iterations to approximate the order of convergence.
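If (2) is the usual ACOC definition (an assumption here, since (2) is not reproduced in this appendix), the three-iteration requirement follows from the fact that each estimate involves four consecutive iterates:

```latex
\mathrm{ACOC} \approx p_k = \frac{\ln\left(|x_{k+1}-x_k| \,/\, |x_k-x_{k-1}|\right)}
                                 {\ln\left(|x_k-x_{k-1}| \,/\, |x_{k-1}-x_{k-2}|\right)},
\qquad k \ge 2 .
```

The first available value, p_2, needs x_0, x_1, x_2 and x_3, that is, three iterations; a run that stops at x_2 yields no ACOC value, hence the ‘-’ entry in Table A1.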

References

- Danchick, R. Gauss meets Newton again: How to make Gauss orbit determination from two position vectors more efficient and robust with Newton–Raphson iterations. Appl. Math. Comput. 2008, 195, 364–375.

- Tostado-Véliz, M.; Kamel, S.; Jurado, F.; Ruiz-Rodriguez, F.J. On the Applicability of Two Families of Cubic Techniques for Power Flow Analysis. Energies 2021, 14, 4108.

- Arroyo, V.; Cordero, A.; Torregrosa, J.R. Approximation of artificial satellites’ preliminary orbits: The efficiency challenge. Math. Comput. Model. 2011, 54, 1802–1807.

- Traub, J.F. Iterative Methods for the Solution of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

- Petković, M.; Neta, B.; Petković, L.; Džunić, J. Multipoint Methods for Solving Nonlinear Equations; Academic Press: Boston, MA, USA, 2013.

- Amat, S.; Busquier, S. Advances in Iterative Methods for Nonlinear Equations; Springer: Cham, Switzerland, 2017.

- Ostrowski, A.M. Solution of Equations in Euclidean and Banach Spaces; Academic Press: New York, NY, USA, 1973.

- Özban, A.Y.; Kaya, B. A new family of optimal fourth-order iterative methods for nonlinear equations. Results Control Optim. 2022, 8, 1–11.

- Adomian, G. Solving Frontier Problems of Physics: The Decomposition Method; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1994.

- Petković, M.; Neta, B.; Petković, L.; Džunić, J. Multipoint methods for solving nonlinear equations: A survey. Appl. Math. Comput. 2014, 226, 635–660.

- Chun, C. Some fourth-order iterative methods for solving nonlinear equations. Appl. Math. Comput. 2008, 195, 454–459.

- Ostrowski, A.M. Solution of Equations and Systems of Equations; Academic Press: New York, NY, USA, 1960.

- Kung, H.T.; Traub, J.F. Optimal Order of One-Point and Multipoint Iteration. J. Assoc. Comput. Mach. 1974, 21, 643–651.

- Weerakoon, S.; Fernando, T. A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 2000, 13, 87–93.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s Method using fifth-order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

- Kansal, M.; Cordero, A.; Bhalla, S.; Torregrosa, J.R. New fourth- and sixth-order classes of iterative methods for solving systems of nonlinear equations and their stability analysis. Numer. Algorithms 2021, 87, 1017–1060.

- Cordero, A.; Soleymani, F.; Torregrosa, J.R. Dynamical analysis of iterative methods for nonlinear systems or how to deal with the dimension? Appl. Math. Comput. 2014, 244, 398–412.

- Cordero, A.; Moscoso-Martínez, M.; Torregrosa, J.R. Chaos and Stability in a New Iterative Family for Solving Nonlinear Equations. Algorithms 2021, 14, 101.

- Husain, A.; Nanda, M.N.; Chowdary, M.S.; Sajid, M. Fractals: An Eclectic Survey, Part I. Fractal Fract. 2022, 6, 89.

- Husain, A.; Nanda, M.N.; Chowdary, M.S.; Sajid, M. Fractals: An Eclectic Survey, Part II. Fractal Fract. 2022, 6, 379.

- Varona, J.L. Graphic and numerical comparison between iterative methods. Math. Intell. 2002, 24, 37–46.

- Amat, S.; Busquier, S.; Plaza, S. Review of some iterative root-finding methods from a dynamical point of view. SCI. A Math. Sci. 2004, 10, 3–35.

- Neta, B.; Chun, C.; Scott, M. Basins of attraction for optimal eighth order methods to find simple roots of nonlinear equations. Appl. Math. Comput. 2014, 227, 567–592.

- Cordero, A.; García-Maimó, J.; Torregrosa, J.R.; Vassileva, M.P.; Vindel, P. Chaos in King’s iterative family. Appl. Math. Lett. 2013, 26, 842–848.

- Magreñán, A.; Argyros, I. A Contemporary Study of Iterative Methods; Academic Press: Cambridge, MA, USA, 2018.

- Geum, Y.H.; Kim, Y.I. Long-term orbit dynamics viewed through the yellow main component in the parameter space of a family of optimal fourth-order multiple-root finders. Discrete Contin. Dyn. Syst. B 2020, 25, 3087–3109.

- Cordero, A.; Torregrosa, J.R.; Vindel, P. Dynamics of a family of Chebyshev-Halley type method. Appl. Math. Comput. 2012, 219, 8568–8583.

- Magreñán, A. Different anomalies in a Jarratt family of iterative root-finding methods. Appl. Math. Comput. 2014, 233, 29–38.

- Devaney, R. An Introduction to Chaotic Dynamical Systems; Addison-Wesley Publishing Company: Boston, MA, USA, 1989.

- Beardon, A. Iteration of Rational Functions; Graduate Texts in Mathematics; Springer: New York, NY, USA, 1991.

- Fatou, P. Sur les équations fonctionnelles. Bull. Soc. Math. Fr. 1919, 47, 161–271.

- Julia, G. Mémoire sur l’itération des fonctions rationnelles. J. Math. Pures Appl. 1918, 8, 47–245.

- Scott, M.; Neta, B.; Chun, C. Basin attractors for various methods. Appl. Math. Comput. 2011, 218, 2584–2599.

- Blanchard, P. Complex analytic dynamics on the Riemann sphere. Bull. Am. Math. Soc. 1984, 11, 85–141.

- Hueso, J.L.; Martínez, E.; Teruel, C. Multipoint efficient iterative methods and the dynamics of Ostrowski’s method. Int. J. Comput. Math. 2019, 96, 1687–1701.

- King, R.F. A Family of Fourth Order Methods for Nonlinear Equations. SIAM J. Numer. Anal. 1973, 10, 876–879.

- Jarratt, P. Some fourth order multipoint iterative methods for solving equations. Math. Comput. 1966, 20, 434–437.

- Chun, C. Construction of Newton-like iteration methods for solving nonlinear equations. Numer. Math. 2006, 104, 297–315.

- Maheshwari, A.K. A fourth order iterative method for solving nonlinear equations. Appl. Math. Comput. 2009, 211, 383–391.

- Behl, R.; Maroju, P.; Motsa, S. A family of second derivative free fourth order continuation method for solving nonlinear equations. J. Comput. Appl. Math. 2017, 318, 38–46.

- Chun, C.; Lee, M.Y.; Neta, B.; Džunić, J. On optimal fourth-order iterative methods free from second derivative and their dynamics. Appl. Math. Comput. 2012, 218, 6427–6438.

- Artidiello, S.; Chicharro, F.; Cordero, A.; Torregrosa, J.R. Local convergence and dynamical analysis of a new family of optimal fourth-order iterative methods. Int. J. Comput. Math. 2013, 90, 2049–2060.

- Ghanbari, B. A new general fourth-order family of methods for finding simple roots of nonlinear equations. J. King Saud Univ. Sci. 2011, 23, 395–398.

- Kou, J.; Li, Y.; Wang, X. A composite fourth-order iterative method for solving non-linear equations. Appl. Math. Comput. 2007, 184, 471–475.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).