An Efficient Anti-Noise Zeroing Neural Network for Time-Varying Matrix Inverse

Abstract

1. Introduction

- •

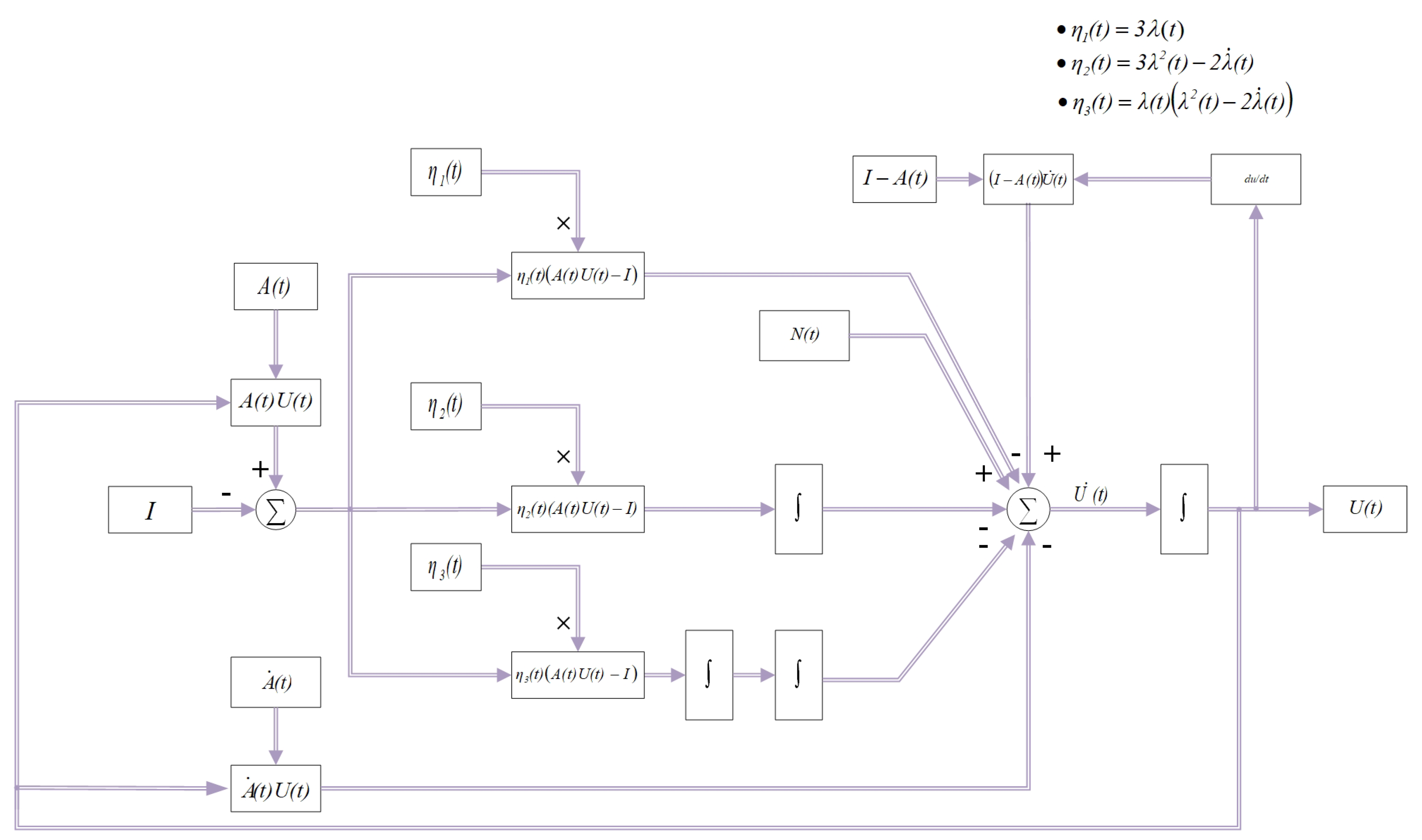

- Unlike previous ZNN models, the novel EANZNN model designed in this paper employs an innovative piecewise time-varying parameter that includes an upper bound. This design accelerates the model’s convergence speed while maintaining good convergence performance. Additionally, a double integral term is introduced to solve TVMI problems under constant and linear noise, enhancing the model’s convergence speed and noise resistance.

- Theoretical analysis based on Lyapunov stability theory rigorously demonstrates that the EANZNN model possesses excellent convergence and robustness when addressing the TVMI problem.

- •

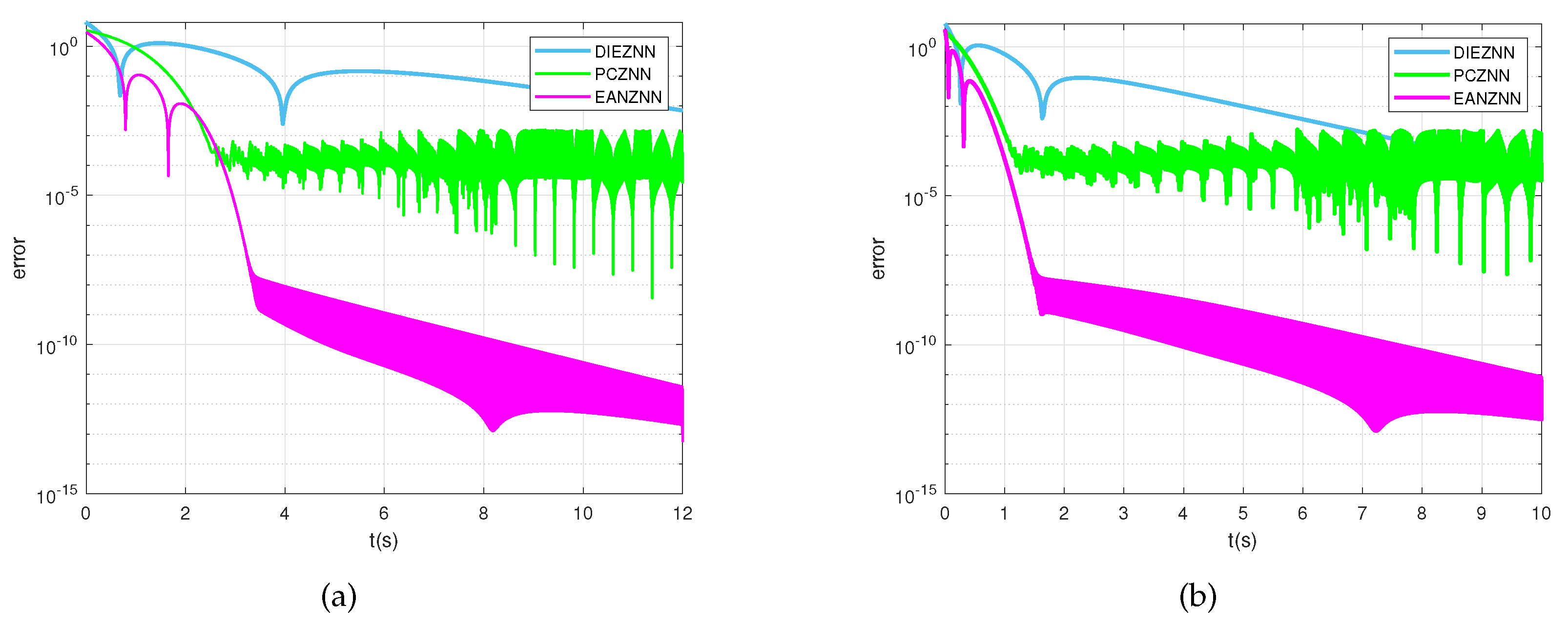

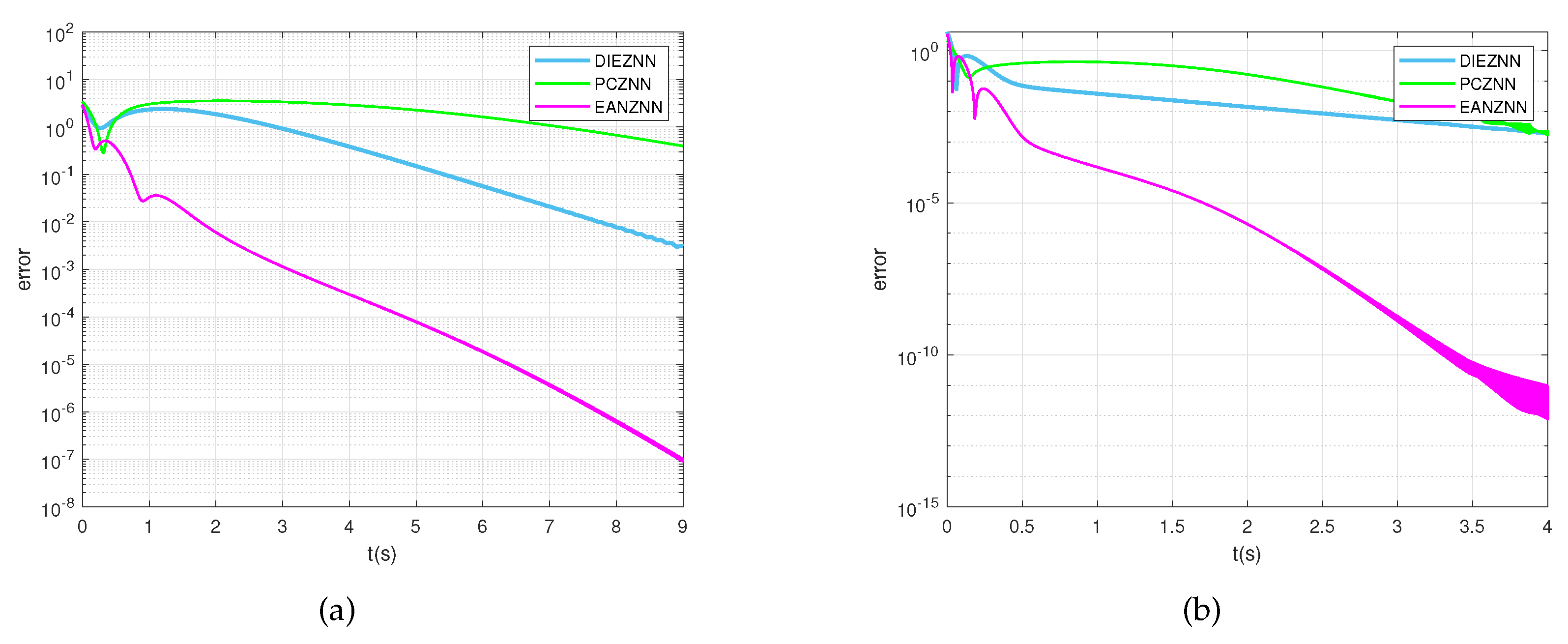

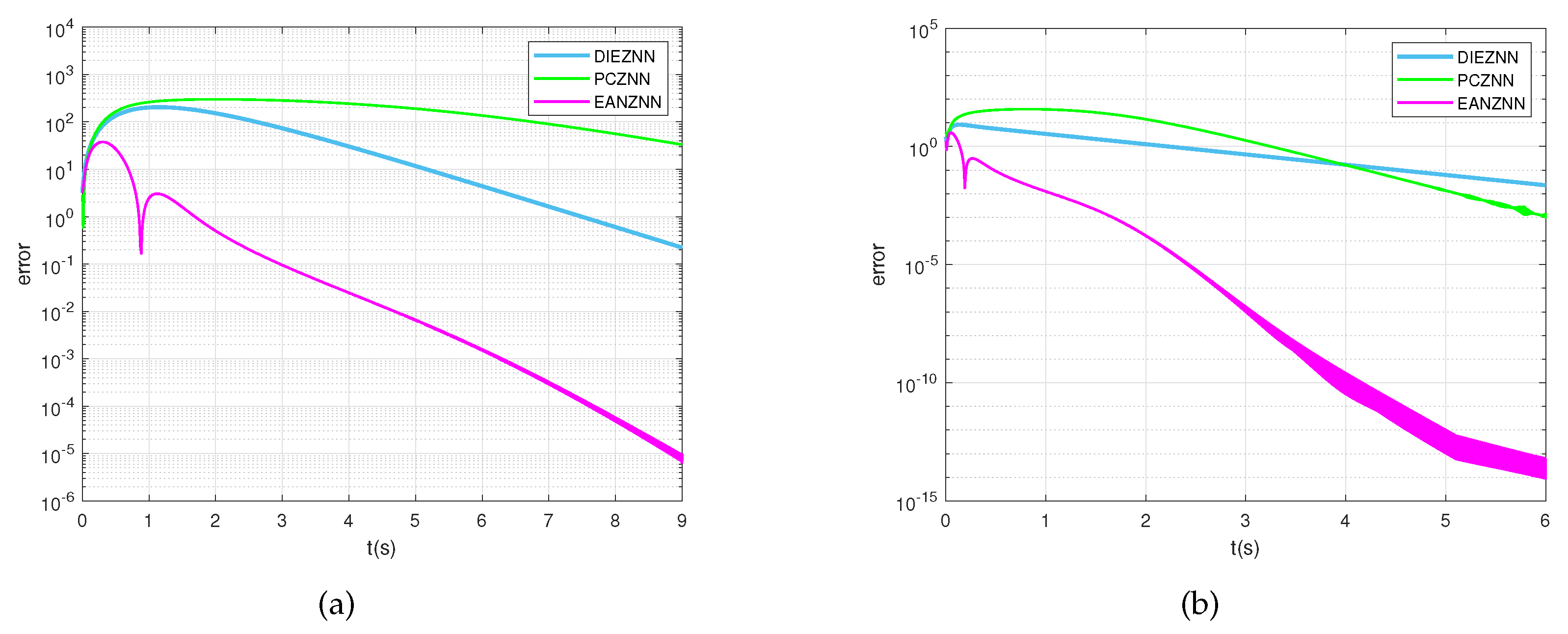

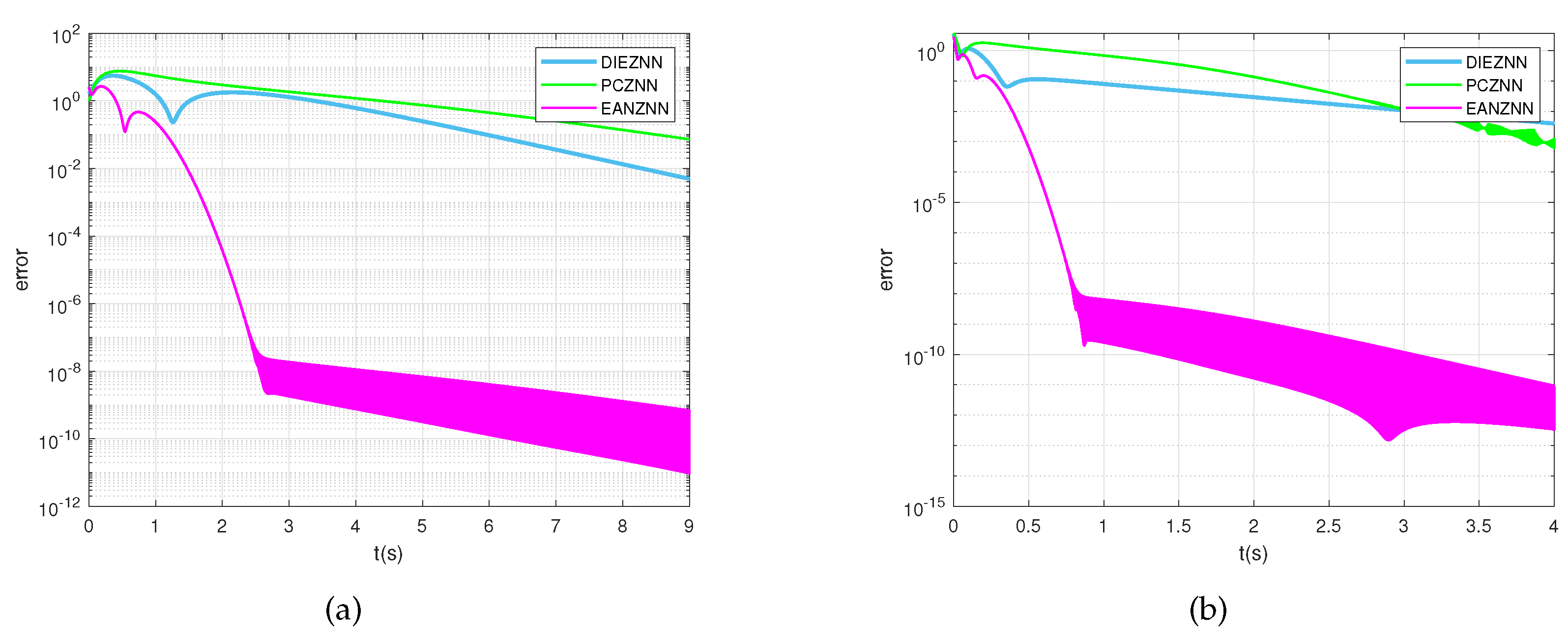

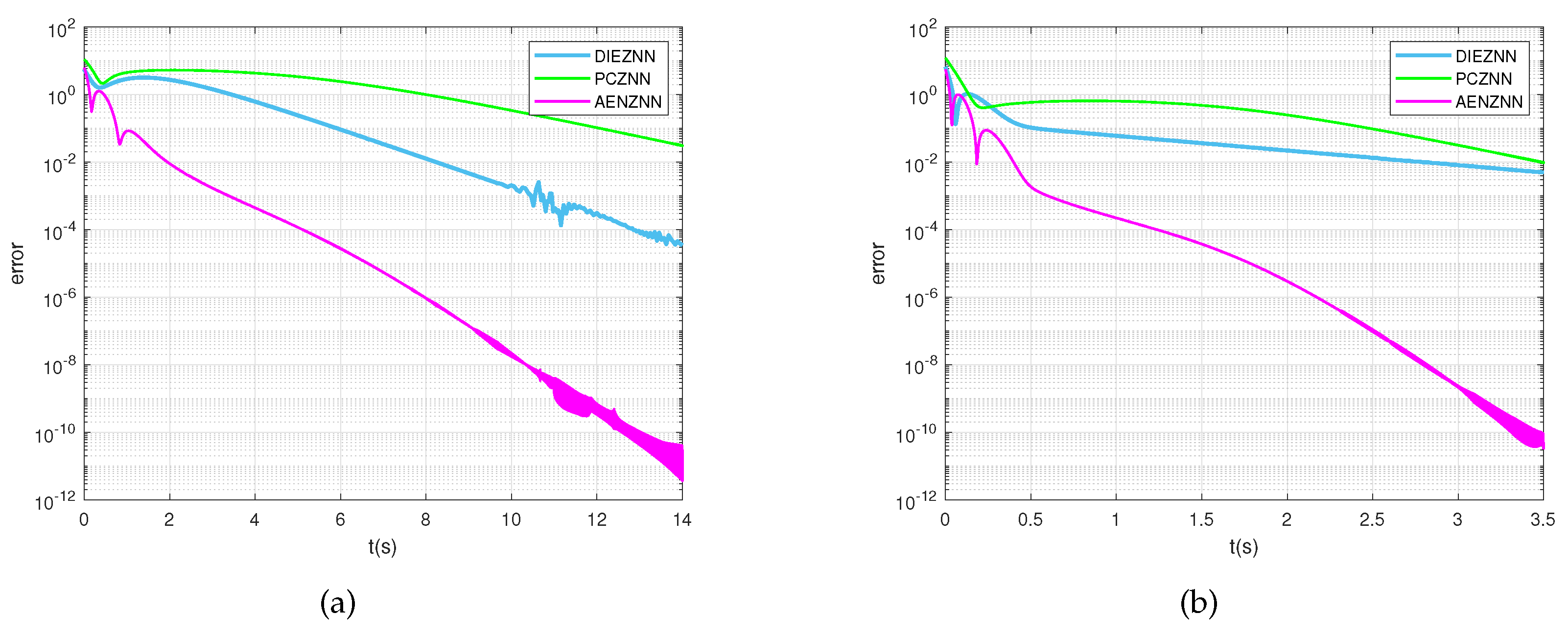

- Experimental results show that under noise-free conditions, the EANZNN model achieves a faster convergence speed in solving the TVMI issue compared to the DIEZNN and PCZNN models. Under constant and linear noise conditions, the EANZNN model not only converges faster but also demonstrates superior robustness.
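To illustrate why a double integral term helps against constant and linear noise, the following minimal sketch compares two scalar error dynamics under additive noise n(t) = a + bt. It is not the EANZNN design formula: the coefficients (chosen so that the characteristic polynomial is (s + γ)³), the constant parameter γ, the noise values, and the forward-Euler integration are all assumptions made for illustration. Differentiating the double-integral dynamics twice removes a + bt, so the error obeys a stable homogeneous equation and decays to zero, whereas the plain dynamics ė = −γe + n(t) retain a growing residual.

```python
def simulate_error(use_double_integral, gamma=5.0, dt=1e-3, t_end=10.0,
                   a=1.0, b=2.0):
    """Integrate a scalar error e(t) under noise n(t) = a + b*t (forward Euler).

    All parameter values are hypothetical and chosen only for illustration.
    """
    e = 1.0    # initial error
    z2 = 0.0   # running integral of e
    z1 = 0.0   # running double integral of e
    steps = int(round(t_end / dt))
    for k in range(steps):
        t = k * dt
        noise = a + b * t
        if use_double_integral:
            # Assumed coefficients: characteristic polynomial (s + gamma)^3.
            de = -3 * gamma * e - 3 * gamma**2 * z2 - gamma**3 * z1 + noise
        else:
            # Plain first-order dynamics without integral feedback.
            de = -gamma * e + noise
        # Simultaneous Euler update: the right-hand sides use the old values.
        z1, z2, e = z1 + dt * z2, z2 + dt * e, e + dt * de
    return e


e_plain = simulate_error(use_double_integral=False)
e_double = simulate_error(use_double_integral=True)
print(f"final |e| without integral terms: {abs(e_plain):.3e}")
print(f"final |e| with double integral:   {abs(e_double):.3e}")
```

With a single integral term only the constant part of the noise would be cancelled; the second integral is what absorbs the linearly growing part.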

2. TVMI Description and Model Design

2.1. Description of TVMI

2.2. Relevant Model Design

- Define an appropriate error function based on the specific problem to be solved;

- Design an evolution formula that ensures the error function converges to zero;

- Substitute the defined error function into the evolution formula to obtain the corresponding ZNN model.
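The three steps above can be sketched for TVMI as follows. The sketch assumes the standard choices from the ZNN literature, since the formulas themselves are not preserved in this section: the error function E(t) = A(t)X(t) − I and the linear evolution formula Ė(t) = −γE(t) with a constant γ > 0. Substituting the former into the latter gives A(t)Ẋ(t) = −Ȧ(t)X(t) − γ(A(t)X(t) − I). The test matrix, the value of γ, the step size, and the forward-Euler integration are illustrative assumptions, and the result is the basic fixed-parameter ZNN rather than the EANZNN model.

```python
import numpy as np


def A(t):
    """Hypothetical time-varying test matrix (det = 1, so always invertible)."""
    return np.array([[np.sin(t), np.cos(t)],
                     [-np.cos(t), np.sin(t)]])


def A_dot(t):
    """Analytic time derivative of A(t)."""
    return np.array([[np.cos(t), -np.sin(t)],
                     [np.sin(t), np.cos(t)]])


def znn_inverse(gamma=10.0, dt=1e-3, t_end=2.0):
    """Track A(t)^{-1} with the basic ZNN, integrated by forward Euler."""
    X = np.zeros((2, 2))          # arbitrary initial state
    I = np.eye(2)
    steps = int(round(t_end / dt))
    for k in range(steps):
        t = k * dt
        E = A(t) @ X - I                       # step 1: error function
        rhs = -A_dot(t) @ X - gamma * E        # steps 2-3: A X' = -A' X - gamma E
        X = X + dt * np.linalg.solve(A(t), rhs)
    t_final = steps * dt
    residual = np.linalg.norm(A(t_final) @ X - I)  # Frobenius norm of E(t_final)
    return X, residual


X_final, residual = znn_inverse()
print("residual ||A(t)X(t) - I||_F at t_end:", residual)
```

Solving the linear system with `np.linalg.solve` at each step is a convenience for the simulation only; it stands in for the implicit dynamics A(t)Ẋ(t) = … that a ZNN realizes.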

2.3. EANZNN Model Design

3. Theoretical Analyses

3.1. Convergence

3.2. Robustness

4. Example Verification

4.1. Experiment 1—Convergence

4.2. Experiment 2—Robustness

- Linear noise: ;

- Linear noise: ;

- Constant noise: .

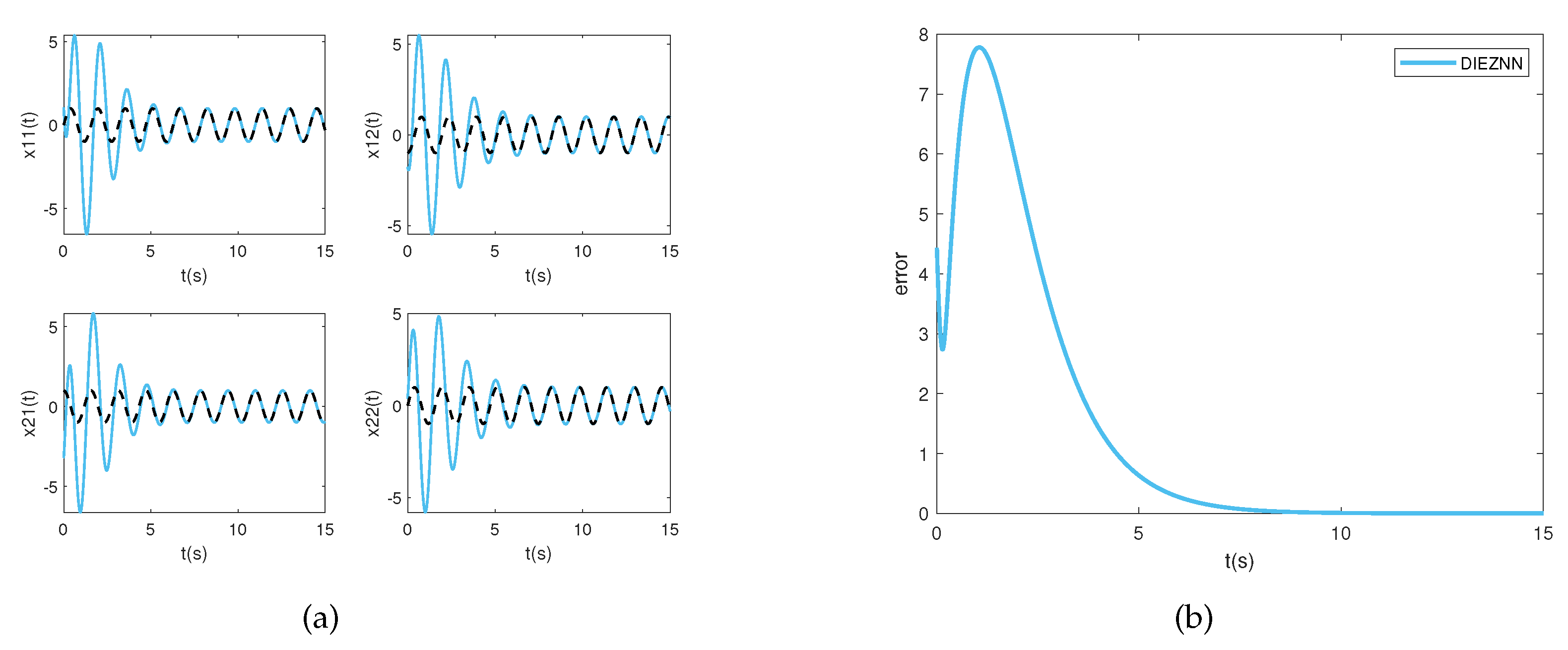

4.3. Experiment 3—High-Dimensional Matrix

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| TVMI | Time-Varying Matrix Inversion |
| ZNN | Zeroing Neural Network |
| EANZNN | Efficient Anti-Noise Zeroing Neural Network |
| DIEZNN | Double Integral-Enhanced ZNN |
| PCZNN | Parameter-Changing ZNN |
| GNN | Gradient-Based Recurrent Neural Network |
| FTZNN | Finite-Time ZNN |
| DCMI | Dynamic Complex Matrix Inversion |
| CVNTZNN | Classical Complex-Valued Noise-Tolerant ZNN |
| FTCNTZNN | Fixed-Time Convergent and Noise-Tolerant ZNN |
| EVPZNN | Exponential-Enhanced-Type Varying-Parameter ZNN |
| FPZNN | Fixed-Parameter ZNN |
| CVPZNN | Complex Varying-Parameter ZNN |
| AF | Activation Function |
| IEZNN | Integration-Enhanced ZNN |
| VAF | Versatile Activation Function |
| NAF | Novel Activation Function |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hu, J.; Yang, F.; Huang, Y. An Efficient Anti-Noise Zeroing Neural Network for Time-Varying Matrix Inverse. Axioms 2024, 13, 540. https://doi.org/10.3390/axioms13080540
