An Intelligent Inspection Robot for Underground Cable Trenches Based on Adaptive 2D-SLAM

Abstract

1. Introduction

- The hardware of an inspection robot is designed to meet the demands of underground cable trench inspection work.

- An adaptive 2D-SLAM method is established for better localization and more accurate mapping in such structured environments with uneven ground.

- A path planning method for the underground cable trench is proposed.

2. Related Work

3. Methods

3.1. System Demands

- (i) The visual module. To observe the underground cable trench and detect abnormalities using infrared thermal imaging and a high-definition camera.

- (ii) The information detection module. To detect harmful gases, temperature, and humidity using multiple sensors.

- (iii) The automatic navigation module. To perform SLAM and plan the inspection path.

- (iv) The assessment module. To assess the underground cable trench environment with a diagnosis system based on leading-edge intelligence.

3.2. System Overview

3.3. Simultaneous Localization and Mapping

3.4. Path Planning

- I. Improvement in the node search scope

- II. Improvement in collision avoidance

- III. Improvement in path smoothing

- (i) Because the trench is narrow, local planning introduces additional inflection points along straight sections of the global path, which destroys the smoothness of the global path and makes the robot's motion discontinuous.

- (ii) If dynamic obstacles appear on the global path, the dynamic window approach may be called several times, which makes the robot linger around the obstacles and lowers inspection efficiency.
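The notion of an inflection point used above can be made concrete with a short sketch. This excerpt does not describe the authors' smoothing procedure, so the code below is only a generic illustration: it treats a path as a list of grid cells, counts the waypoints where the heading changes, and prunes waypoints that lie on a straight run. The names `is_turn`, `prune_collinear`, and `count_inflection_points` are hypothetical and do not come from the paper.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def is_turn(a: Point, b: Point, c: Point) -> bool:
    """Return True if the heading changes at b when moving a -> b -> c."""
    abx, aby = b[0] - a[0], b[1] - a[1]
    bcx, bcy = c[0] - b[0], c[1] - b[1]
    cross = abx * bcy - aby * bcx  # zero when the two segments are parallel
    dot = abx * bcx + aby * bcy    # positive when they point the same way
    return cross != 0 or dot <= 0


def prune_collinear(path: List[Point]) -> List[Point]:
    """Keep the start, the goal, and every waypoint where the heading changes."""
    if len(path) < 3:
        return list(path)
    kept = [path[0]]
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        if is_turn(prev, cur, nxt):
            kept.append(cur)
    kept.append(path[-1])
    return kept


def count_inflection_points(path: List[Point]) -> int:
    """Number of interior waypoints at which the heading changes."""
    return max(len(prune_collinear(path)) - 2, 0)


# Illustrative path (assumed data): straight, then up, then diagonal.
example_path = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (3, 2), (4, 3), (5, 4)]
print(prune_collinear(example_path))
print(count_inflection_points(example_path))
```

A count like this is one way to compare planners on smoothness: fewer heading changes along a straight trench section mean fewer stop-and-turn maneuvers for the robot.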

4. Results and Analysis

- A.

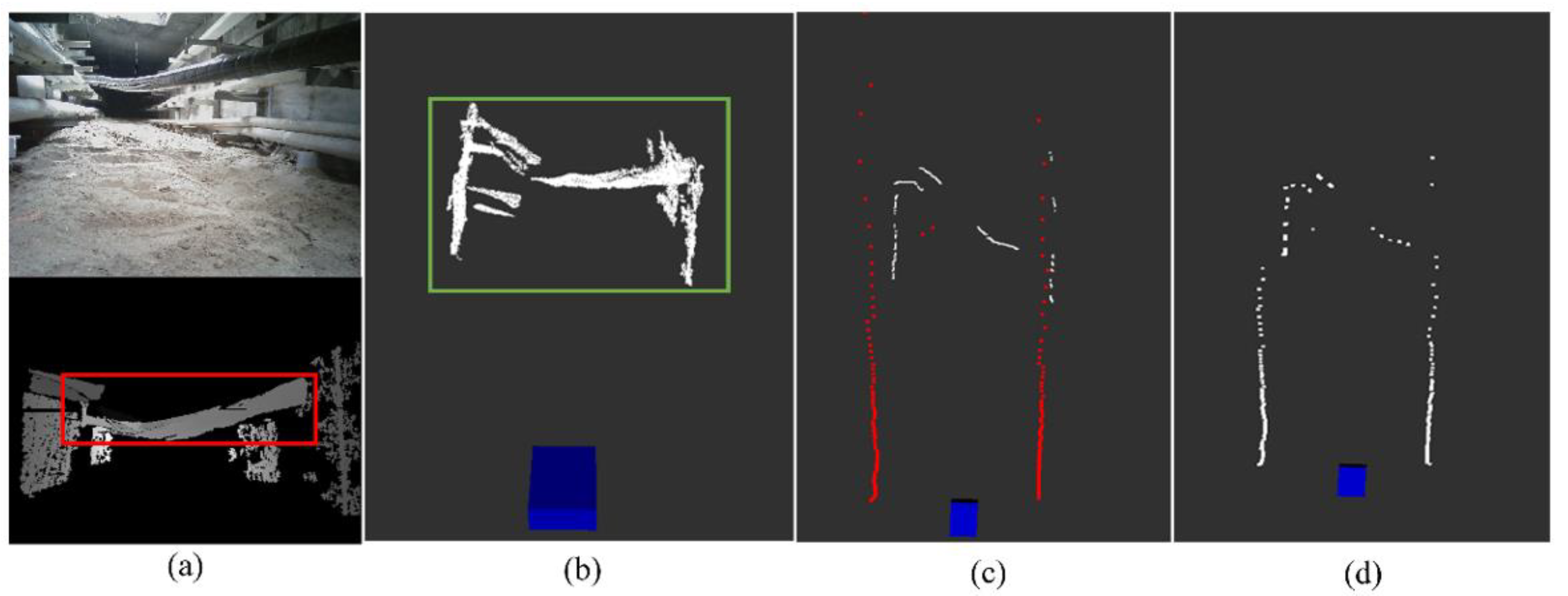

- Experiments of the fusion of LIDAR and depth camera

- B.

- Experiments of the adaptive registration method

- C.

- Experiments of our global path planning algorithm

- D.

- Experiments of the fusion of global path and local path

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Algorithm | Forward A* | | | Improved A* | | | RRT | | | Dijkstra | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Map scenario | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 |
| Average time (ms) | 11.78 | 12.12 | 12.45 | 13.57 | 13.56 | 15.01 | 6.28 | 6.96 | 8.45 | 14.28 | 14.75 | 15.46 |
| Inflection points | 3 | 3 | 4 | 5 | 6 | 5 | 11 | 9 | 16 | 7 | 8 | 6 |
| Risk of collision | low | low | low | high | high | high | high | high | high | high | high | high |

| Algorithm | Traditional Integration Method | | | Proposed Integration Method | | |
|---|---|---|---|---|---|---|
| Inspection channel length (m) | 10 | 20 | 30 | 10 | 20 | 30 |
| Average time (s) | 79.4 | 164.3 | 240.7 | 61.2 | 131.6 | 197.8 |
| Average total path length (m) | 12.2 | 24.6 | 35.7 | 11.4 | 21.8 | 32.7 |
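The gain of the proposed integration method over the traditional one can be read off the table above as a relative reduction. The sketch below simply redoes that arithmetic on the tabulated values; the helper `percent_reduction` and the variable names are illustrative and not taken from the paper.

```python
def percent_reduction(baseline: float, proposed: float) -> float:
    """Relative reduction of `proposed` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - proposed) / baseline


# Values copied from the table (inspection channel lengths of 10, 20, 30 m).
channel_lengths_m = [10, 20, 30]
traditional_time_s = [79.4, 164.3, 240.7]
proposed_time_s = [61.2, 131.6, 197.8]
traditional_path_m = [12.2, 24.6, 35.7]
proposed_path_m = [11.4, 21.8, 32.7]

time_saving = [
    percent_reduction(t, p) for t, p in zip(traditional_time_s, proposed_time_s)
]
path_saving = [
    percent_reduction(t, p) for t, p in zip(traditional_path_m, proposed_path_m)
]

for length, ts, ps in zip(channel_lengths_m, time_saving, path_saving):
    print(f"{length} m channel: time -{ts:.1f}%, path length -{ps:.1f}%")
```

On these figures the average inspection time drops by roughly 18 to 23% and the average total path length by roughly 7 to 11%, depending on the channel length.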


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jia, Z.; Liu, H.; Zheng, H.; Fan, S.; Liu, Z. An Intelligent Inspection Robot for Underground Cable Trenches Based on Adaptive 2D-SLAM. Machines 2022, 10, 1011. https://doi.org/10.3390/machines10111011