A Novel Optimistic Local Path Planner: Agoraphilic Navigation Algorithm in Dynamic Environment

Abstract

1. Introduction

2. Development of the Agoraphilic Navigation Algorithm in Dynamic Environment

- Sensory data processing (SDP) module;

- Obstacle tracking (OT) module;

- Dynamic obstacles’ position prediction (DOPP) module;

- Current and future free space histogram (FSH) generation module;

- Free space force (FSF) generation module;

- Force-shaping module;

- Instantaneous driving force component (Fc) generation module;

- Instantaneous driving force component weighting module.

- Fc generation for the current global map (CGM): CGM-Fc1;

- Fc generation for the future global maps (FGMs): Fc2, Fc3, …, FcN (prediction).

2.1. Sensory Data Processing Module

- A 360° LiDAR sensor;

- A RealSense camera.

| Symbol | Definition |
|---|---|
| | Orientation of the robot |
| | Coordinates with respect to the world reference frame |
| | Coordinates with respect to the robot’s axis system |
| | Robot’s current position with respect to the world reference frame |
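The SDP module relates coordinates in the robot’s axis system to the world reference frame through the robot’s current position and orientation. The sketch below shows that standard 2-D rigid-body transformation applied to a LiDAR scan. It assumes the scan arrives as (bearing, range) pairs with bearings measured counter-clockwise from the robot’s heading; the function name and input format are hypothetical.

```python
import math
from typing import List, Tuple


def scan_to_world(
    scan: List[Tuple[float, float]],  # (bearing_rad, range) in the robot frame (assumed format)
    robot_x: float,
    robot_y: float,
    robot_theta: float,               # robot orientation in the world frame, radians
) -> List[Tuple[float, float]]:
    """Convert a polar LiDAR scan from the robot's axis system to world coordinates."""
    c, s = math.cos(robot_theta), math.sin(robot_theta)
    points = []
    for bearing, rng in scan:
        # Point in the robot's axis system (x forward, y to the left).
        xr, yr = rng * math.cos(bearing), rng * math.sin(bearing)
        # Rotate by the robot's orientation, then translate by its position.
        xw = robot_x + c * xr - s * yr
        yw = robot_y + s * xr + c * yr
        points.append((xw, yw))
    return points
```

For example, a robot at (100, 50) facing the world +y direction (theta = pi/2) that sees a return 20 units straight ahead places that obstacle at approximately (100, 70) in the world frame.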

2.2. Obstacle Tracking Module

- (1) The current global map (CGM): this map represents the robot’s surrounding environment with respect to the global coordinate frame. The CGM contains the current locations of static and moving obstacles.

- (2) The estimated states (position and velocity) of dynamic obstacles: the tracking module’s estimates of the position and velocity of each moving obstacle.

| Symbol | Definition |
|---|---|
| | ∼ N(0, R) |
| | ∼ N(0, Q) |
| p | Error covariance |
| | Estimated position of the moving obstacle |
| | Measured position of the moving obstacle |
| | Acceleration of the moving obstacle |
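The tracking step behind these definitions can be illustrated with a standard constant-velocity Kalman filter, in which the obstacle’s unmodelled acceleration acts as process noise and only the position is measured. The sketch below is an illustration under stated assumptions, not the paper’s exact formulation: it treats each axis independently, follows the common convention that Q is the process-noise covariance and R the measurement-noise variance, and the class name and tuning values `sigma_a` and `r` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AxisKF:
    """Constant-velocity Kalman filter for one axis of a moving obstacle.

    State x = [position, velocity]; only the position is measured.
    """
    pos: float = 0.0
    vel: float = 0.0
    p00: float = 1.0      # error covariance P, stored as its three unique entries
    p01: float = 0.0
    p11: float = 1.0
    sigma_a: float = 1.0  # hypothetical std. dev. of the unmodelled acceleration
    r: float = 1.0        # hypothetical measurement-noise variance (R)

    def predict(self, dt: float) -> None:
        # State transition F = [[1, dt], [0, 1]].
        self.pos += self.vel * dt
        # Process noise Q = sigma_a^2 * G G^T with G = [dt^2 / 2, dt]^T.
        q = self.sigma_a ** 2
        q00, q01, q11 = q * dt ** 4 / 4, q * dt ** 3 / 2, q * dt ** 2
        # Covariance propagation P = F P F^T + Q.
        p00 = self.p00 + 2 * dt * self.p01 + dt * dt * self.p11 + q00
        p01 = self.p01 + dt * self.p11 + q01
        p11 = self.p11 + q11
        self.p00, self.p01, self.p11 = p00, p01, p11

    def update(self, z: float) -> None:
        # Measurement matrix H = [1, 0]: innovation y and its variance s.
        y = z - self.pos
        s = self.p00 + self.r
        k0, k1 = self.p00 / s, self.p01 / s  # Kalman gain K = P H^T / s
        self.pos += k0 * y
        self.vel += k1 * y
        # Covariance update P = (I - K H) P, using the pre-update entries.
        p00 = (1 - k0) * self.p00
        p01 = (1 - k0) * self.p01
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p11 = p00, p01, p11
```

For a planar obstacle, one such filter per axis would be run each sensor cycle: call `predict(dt)` and then `update()` with the measured coordinate, after which `pos` and `vel` hold the estimated state for that axis.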

2.3. Dynamic Obstacle Position Prediction Module

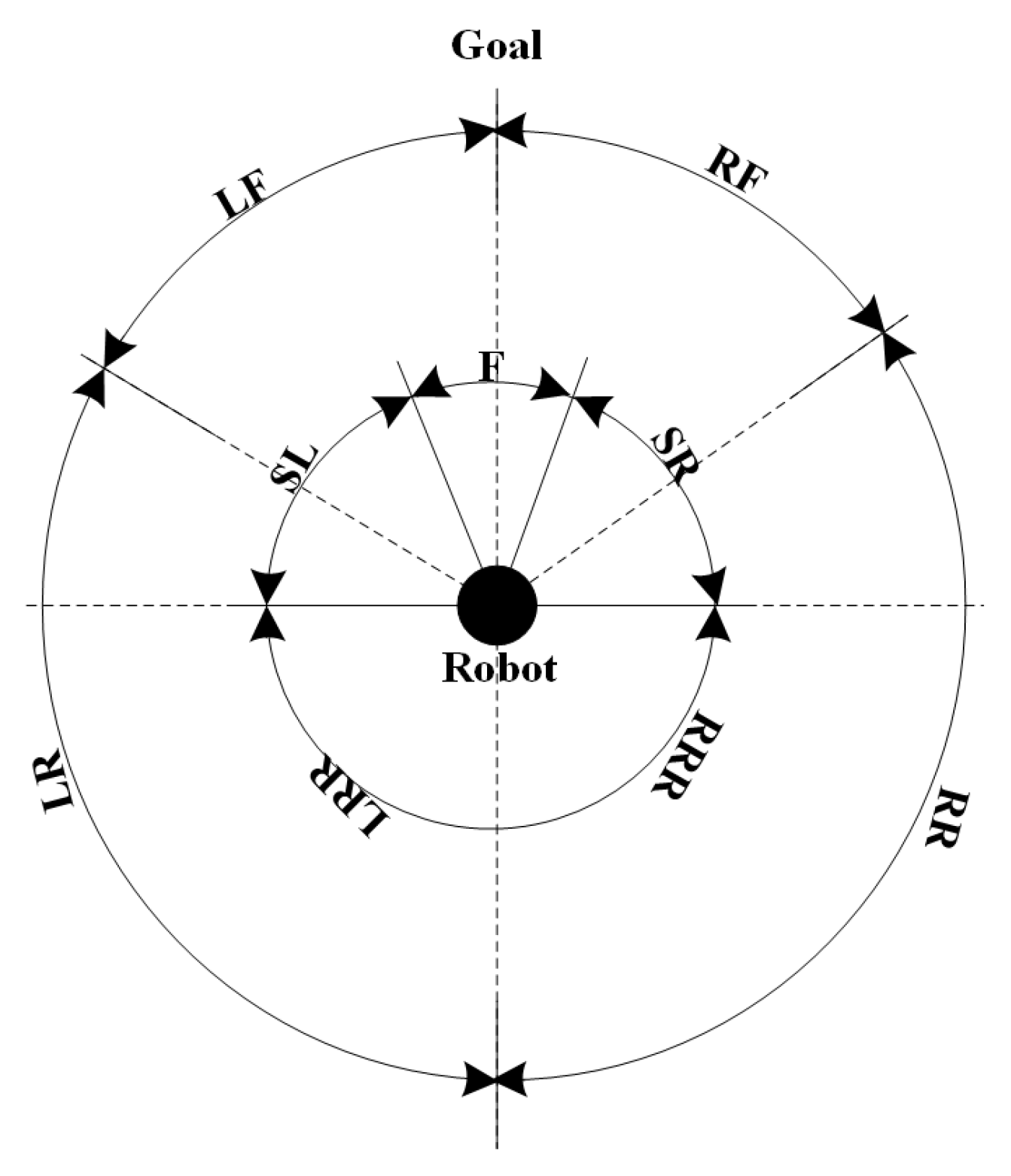
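The DOPP module supplies the obstacle positions used to build the future global maps (FGMs). A minimal sketch follows, assuming that each moving obstacle keeps its estimated velocity over the prediction horizon (a constant-velocity extrapolation; the paper’s prediction model may differ) and a hypothetical fixed time step `dt` between successive future maps.

```python
from typing import Dict, List, Tuple

# (x, y, vx, vy) of one obstacle, as estimated by the tracking module
State = Tuple[float, float, float, float]


def predict_positions(
    obstacles: Dict[str, State], n_steps: int, dt: float
) -> List[Dict[str, Tuple[float, float]]]:
    """Constant-velocity extrapolation of every obstacle over n_steps future steps.

    Element k of the result holds the predicted positions at time (k + 1) * dt
    from now, i.e. one dictionary per future global map.
    """
    horizon = []
    for k in range(1, n_steps + 1):
        t = k * dt
        horizon.append(
            {name: (x + vx * t, y + vy * t) for name, (x, y, vx, vy) in obstacles.items()}
        )
    return horizon
```

Each predicted dictionary could then be rasterised into a future map in the same way as the current obstacle positions.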

2.4. Free Space Histogram Generation Module

2.5. Free-Space Force Generation Module
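In the Agoraphilic approach, the robot is attracted towards free space rather than repelled by obstacles. The FSH and FSF steps can be sketched together under hypothetical assumptions: the surroundings are split into equal angular sectors, each sector’s histogram value is the free distance to the nearest return (capped at the sensor range), and each sector’s FSF points along the sector’s centre line with a magnitude proportional to that free distance. The function names and the linear `gain` are illustrative only.

```python
import math
from typing import List, Tuple


def free_space_histogram(
    scan: List[Tuple[float, float]], n_sectors: int, max_range: float
) -> List[float]:
    """Free distance per angular sector; sector i is centred on bearing i * 2*pi/n_sectors."""
    width = 2 * math.pi / n_sectors
    hist = [max_range] * n_sectors        # no return in a sector -> free up to max_range
    for bearing, rng in scan:             # (bearing_rad, range) in the robot frame
        idx = int(((bearing + width / 2) % (2 * math.pi)) // width) % n_sectors
        hist[idx] = min(hist[idx], rng)   # nearest obstacle bounds the free space
    return hist


def free_space_forces(hist: List[float], gain: float = 1.0) -> List[Tuple[float, float]]:
    """One FSF vector per sector, along the sector centre line, proportional to free distance."""
    width = 2 * math.pi / len(hist)
    return [
        (gain * free * math.cos(i * width), gain * free * math.sin(i * width))
        for i, free in enumerate(hist)
    ]
```

With four sectors, a 400 cm range, and a single return 50 cm straight ahead, the histogram is [50, 400, 400, 400], and the summed FSFs point backwards, away from the obstacle and towards the larger free space.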

2.6. Force-Shaping Module

2.6.1. Numerical Function

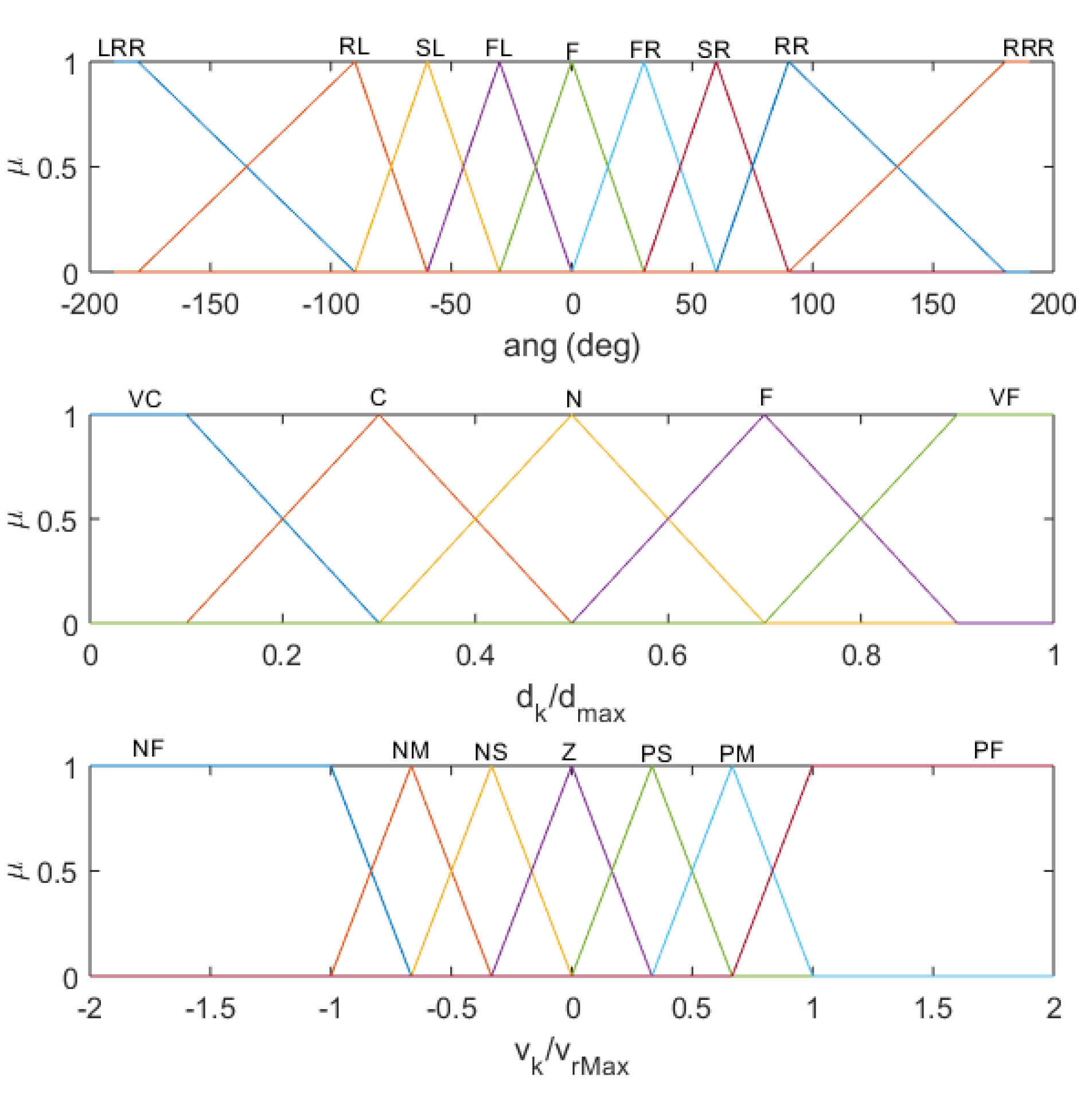

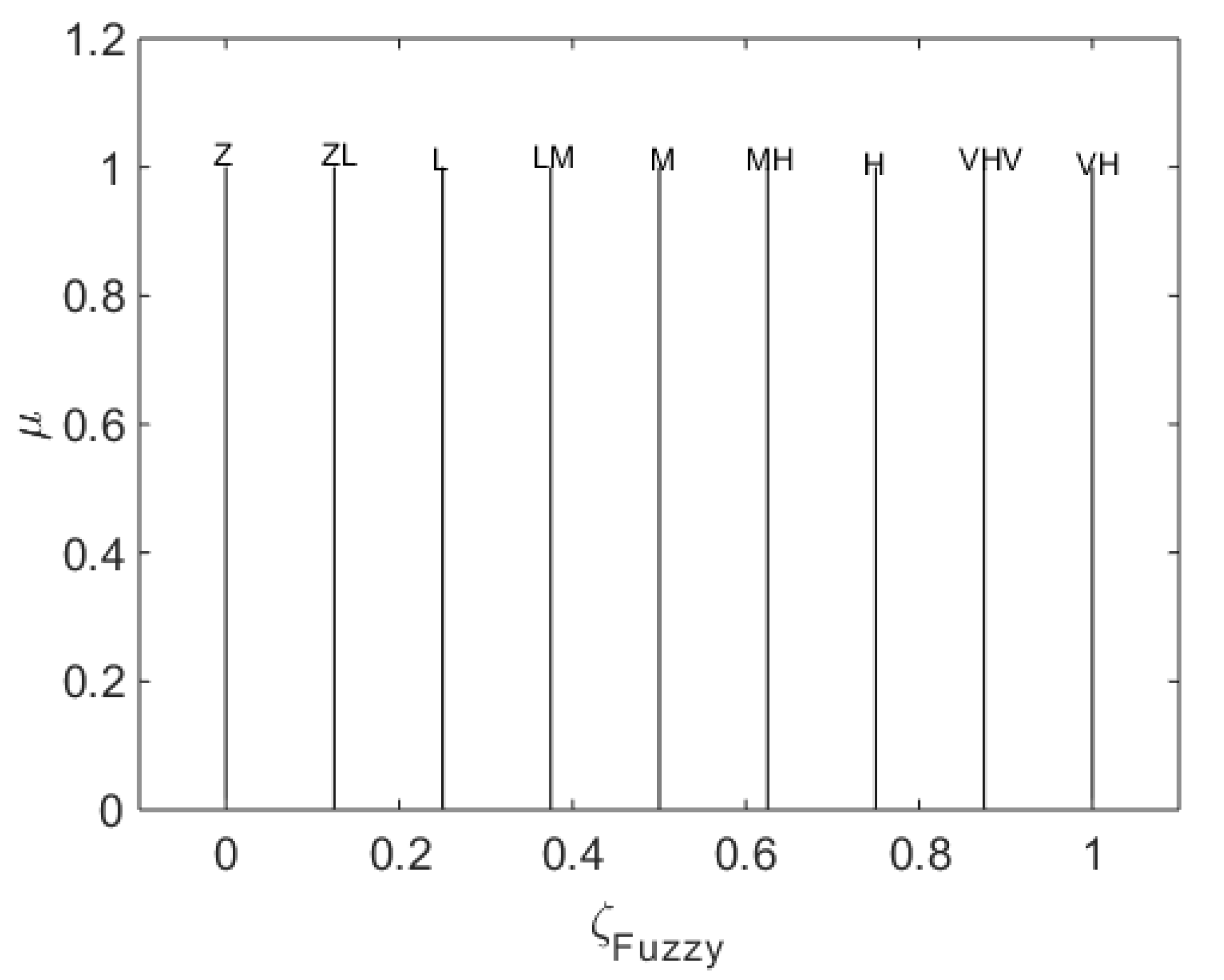
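The force-shaping step can be pictured as weighting each sector’s FSF according to how well it agrees with the goal direction, then summing the weighted forces into a driving force component. The weighting function used below, w = ((1 + cos Δ)/2)^k with Δ the angle between a sector and the goal direction, is a hypothetical stand-in chosen for illustration; it is not the numerical function used in the paper.

```python
import math
from typing import List, Tuple


def shape_and_sum(
    sector_angles: List[float],  # sector centre angles in the robot frame (rad)
    magnitudes: List[float],     # FSF magnitude per sector
    goal_angle: float,           # direction to the goal in the robot frame (rad)
    sharpness: float = 2.0,      # hypothetical exponent k: larger -> more goal-focused
) -> Tuple[float, float]:
    """Weight each sector force by its alignment with the goal and return the vector sum."""
    fx = fy = 0.0
    for angle, mag in zip(sector_angles, magnitudes):
        delta = angle - goal_angle
        weight = ((1.0 + math.cos(delta)) / 2.0) ** sharpness  # 1 towards goal, 0 opposite
        fx += weight * mag * math.cos(angle)
        fy += weight * mag * math.sin(angle)
    return fx, fy
```

With equal free space in four sectors and the goal straight ahead, the side sectors cancel, the rear sector gets zero weight, and the resultant points at the goal.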

2.6.2. Fuzzy Logic Controller

- A fuzzification module;

- A fuzzy rule base;

- A fuzzy inference engine;

- A defuzzification module.
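The four FLC components listed above can be illustrated with a small Mamdani-type controller. In this sketch, which is an assumption for illustration and not the paper’s rule base, the inputs are a moving obstacle’s distance (cm) and its closing speed towards the robot (cm/s), and the output is a hazard factor in [0, 1] that a force-shaping stage could use to reduce the weight of threatened sectors. All membership functions, ranges, and rules are hypothetical.

```python
from typing import Callable, Dict


def trap(a: float, b: float, c: float, d: float) -> Callable[[float], float]:
    """Trapezoidal membership function: 0 outside [a, d], 1 on [b, c]."""
    def mu(x: float) -> float:
        if x < a or x > d:
            return 0.0
        if b <= x <= c:
            return 1.0
        return (x - a) / (b - a) if x < b else (d - x) / (d - c)
    return mu


def hazard(distance: float, closing_speed: float) -> float:
    d = min(max(distance, 0.0), 300.0)        # clamp to the input universes
    v = min(max(closing_speed, -30.0), 30.0)
    # 1) Fuzzification
    dist = {"near": trap(0, 0, 50, 150)(d), "far": trap(50, 150, 300, 300)(d)}
    speed = {
        "receding": trap(-30, -30, -5, 5)(v),
        "slow": trap(-5, 5, 10, 20)(v),
        "fast": trap(10, 20, 30, 30)(v),
    }
    # 2) Rule base: (distance term, speed term) -> output term
    rules = [
        ("near", "fast", "high"),
        ("far", "fast", "medium"),
        ("near", "slow", "medium"),
        ("far", "slow", "low"),
        ("near", "receding", "low"),
        ("far", "receding", "low"),
    ]
    out_mfs = {
        "low": trap(0, 0, 0.2, 0.5),
        "medium": trap(0.2, 0.5, 0.5, 0.8),
        "high": trap(0.5, 0.8, 1, 1),
    }
    # 3) Inference: min for AND, max to combine rules with the same consequent
    strength: Dict[str, float] = {}
    for d_term, v_term, out in rules:
        fire = min(dist[d_term], speed[v_term])
        strength[out] = max(strength.get(out, 0.0), fire)
    # 4) Defuzzification: centroid of the clipped, max-aggregated output sets
    num = den = 0.0
    for i in range(101):
        y = i / 100
        mu = max(min(s, out_mfs[t](y)) for t, s in strength.items())
        num += y * mu
        den += mu
    return num / den if den > 0 else 0.0
```

With these hypothetical settings, a nearby obstacle closing fast yields a hazard of roughly 0.8, a distant but fast-closing one (the situation of Experiment 2) about 0.5, and a receding one (as in Experiment 1) about 0.2.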

2.7. Instantaneous Driving Force Component (Fc) Generation Module

2.8. Instantaneous Driving Force Component Weighting Module
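The weighting module combines the component Fc1 from the current global map with the components Fc2, …, FcN from the predicted future maps into one instantaneous driving force. The sketch below uses a weighted vector sum with geometrically decaying, normalised weights, so that nearer-term predictions count more; both the decay scheme and the parameter `decay` are hypothetical choices for illustration.

```python
from typing import List, Tuple


def driving_force(
    components: List[Tuple[float, float]], decay: float = 0.5
) -> Tuple[float, float]:
    """Weighted sum of [Fc1, Fc2, ..., FcN] with weights decay**k, normalised to sum to 1."""
    if not components:
        raise ValueError("need at least the current-map component Fc1")
    weights = [decay ** k for k in range(len(components))]
    total = sum(weights)
    fx = sum(w * f[0] for w, f in zip(weights, components)) / total
    fy = sum(w * f[1] for w, f in zip(weights, components)) / total
    return fx, fy
```

Setting `decay` close to 0 reduces the result to the current-map component alone, while values near 1 give the predicted maps almost equal influence.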

3. Experimental Testing and Analysis of the Algorithm

3.1. Experimentally Testing the Algorithm in Different Challenging Situations

- Section 3.1.1: a moving obstacle going to the goal from the start point.

- Section 3.1.2: a moving obstacle going to the start point from the goal.

- Section 3.1.3: a moving obstacle crosses the robot’s path perpendicularly.

- Section 3.1.4: two moving obstacles challenge the robot at the same time.

- Section 3.1.5: three moving obstacles challenge the robot at the same time and push the robot towards a trap.

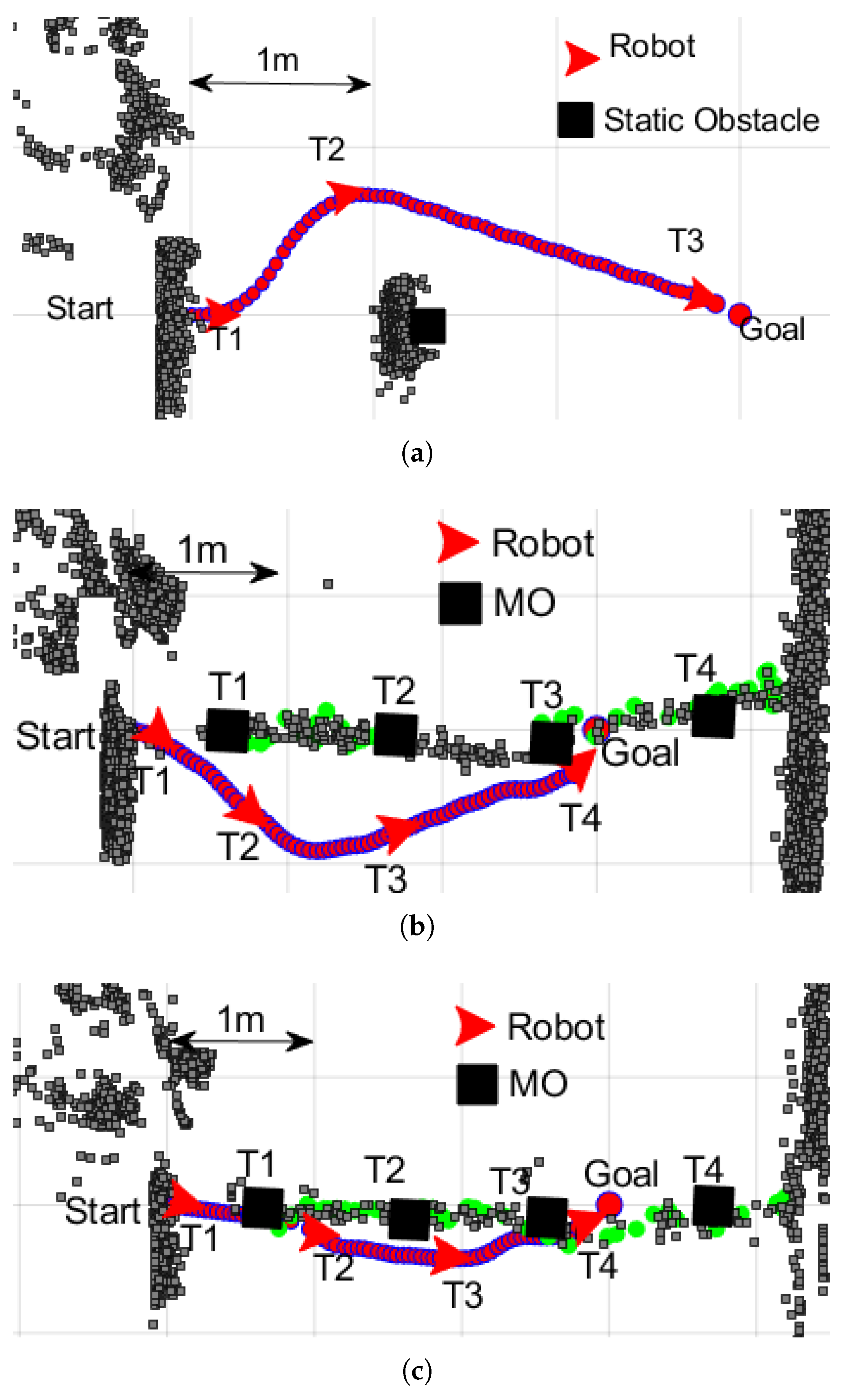

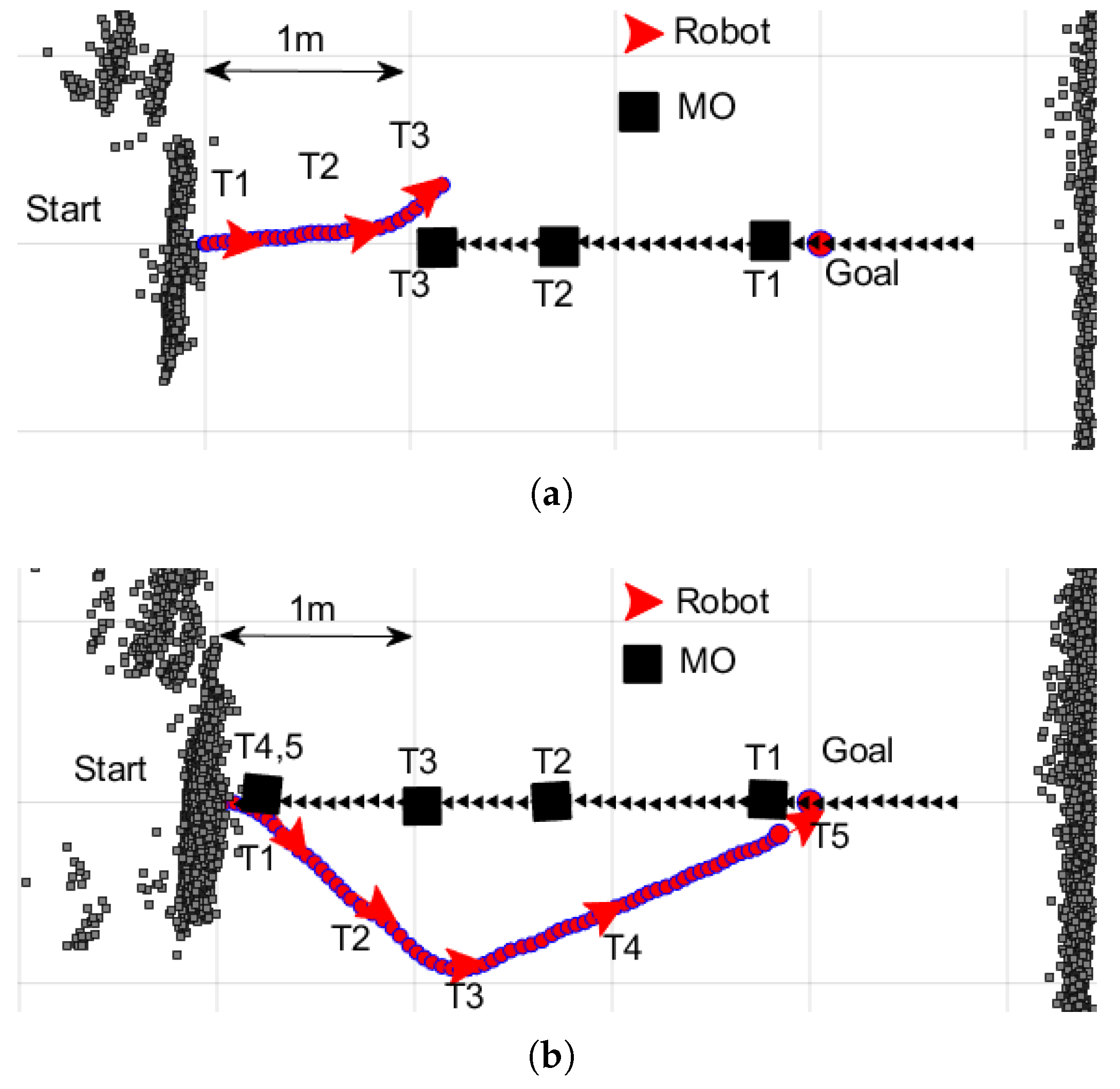

3.1.1. Experiment 1: A Moving Obstacle Going to the Goal from the Start Point

- In this experiment, the MO moved in front of the robot towards the goal at a higher speed than the robot. Therefore, the robot should not have had any difficulty moving towards the goal. However, the basic force-shaping module gave unnecessary weight to the existing free space and pushed the robot away from the MO. In contrast, the force-shaping module with the new FLC allowed the robot to move towards the goal behind the MO while maintaining a safe distance.

- The basic force-shaping module did not consider the velocity of the MO when shaping the FSFs, so the resulting path resembled one for avoiding a static obstacle (see Figure 7a).

- The FLC made decisions that increased the efficiency of the algorithm by reducing both the time taken to reach the goal and the path length.

- With the basic force-shaping module, the robot avoided the obstacle by changing its direction of motion. In contrast, the force-shaping module with the FLC did not treat the MO as a threat to the robot.

- The obstacle tracking module provided accurate estimates of the MO’s states (position and velocity), which helped the algorithm complete the navigation task successfully.

3.1.2. Experiment 2: A Moving Obstacle Going to the Start Point from the Goal Point

- In this experiment, the MO approached the robot from the goal at high speed. Therefore, the MO posed a threat to the robot even though it was not yet close. However, the basic force-shaping module kept the robot moving towards the goal. In contrast, the force-shaping module with the new FLC immediately changed the robot’s direction to move the robot’s path away from that of the MO.

- The basic force-shaping module did not consider the MO’s velocity when calculating the FSFs. Consequently, the threat from the fast MO was not identified early enough, which led the robot into a collision.

- The FLC took the velocities of the MOs into account, which allowed the robot to make important decisions at the right time to avoid collisions.

- In this experiment, the importance of the FLC was pronounced: it helped the robot make the right decision and avoid a collision with a high-speed moving obstacle.

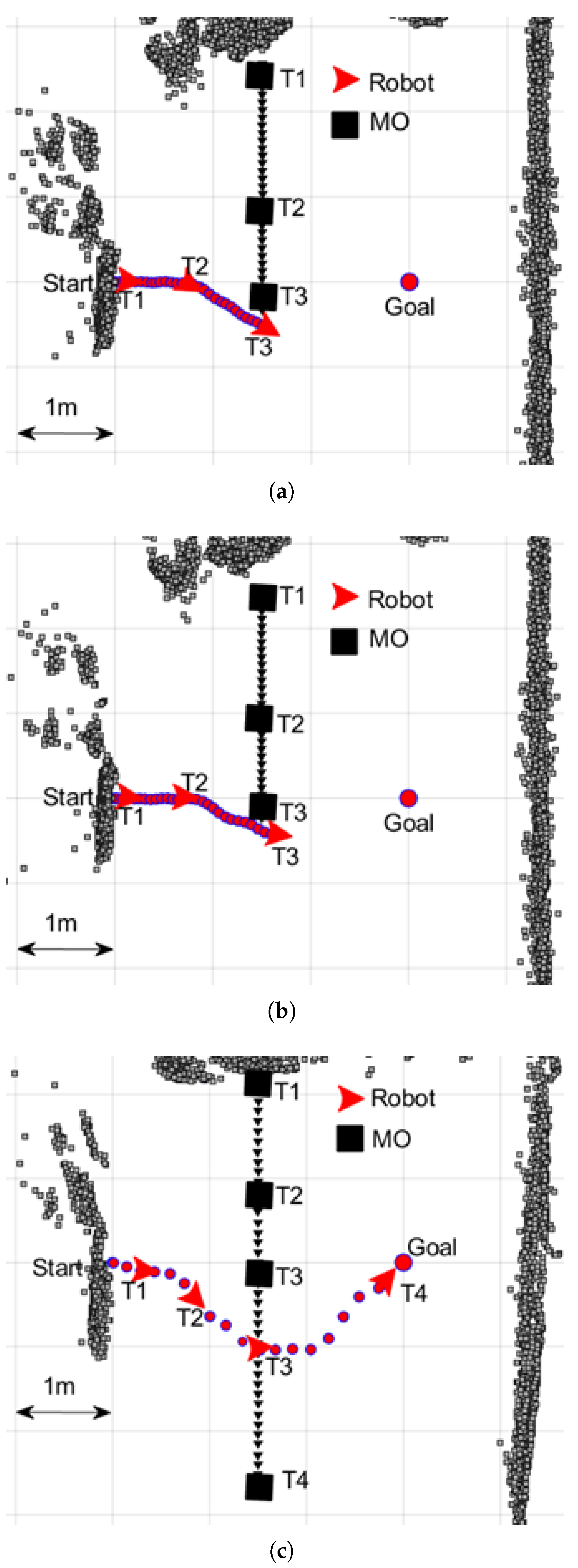

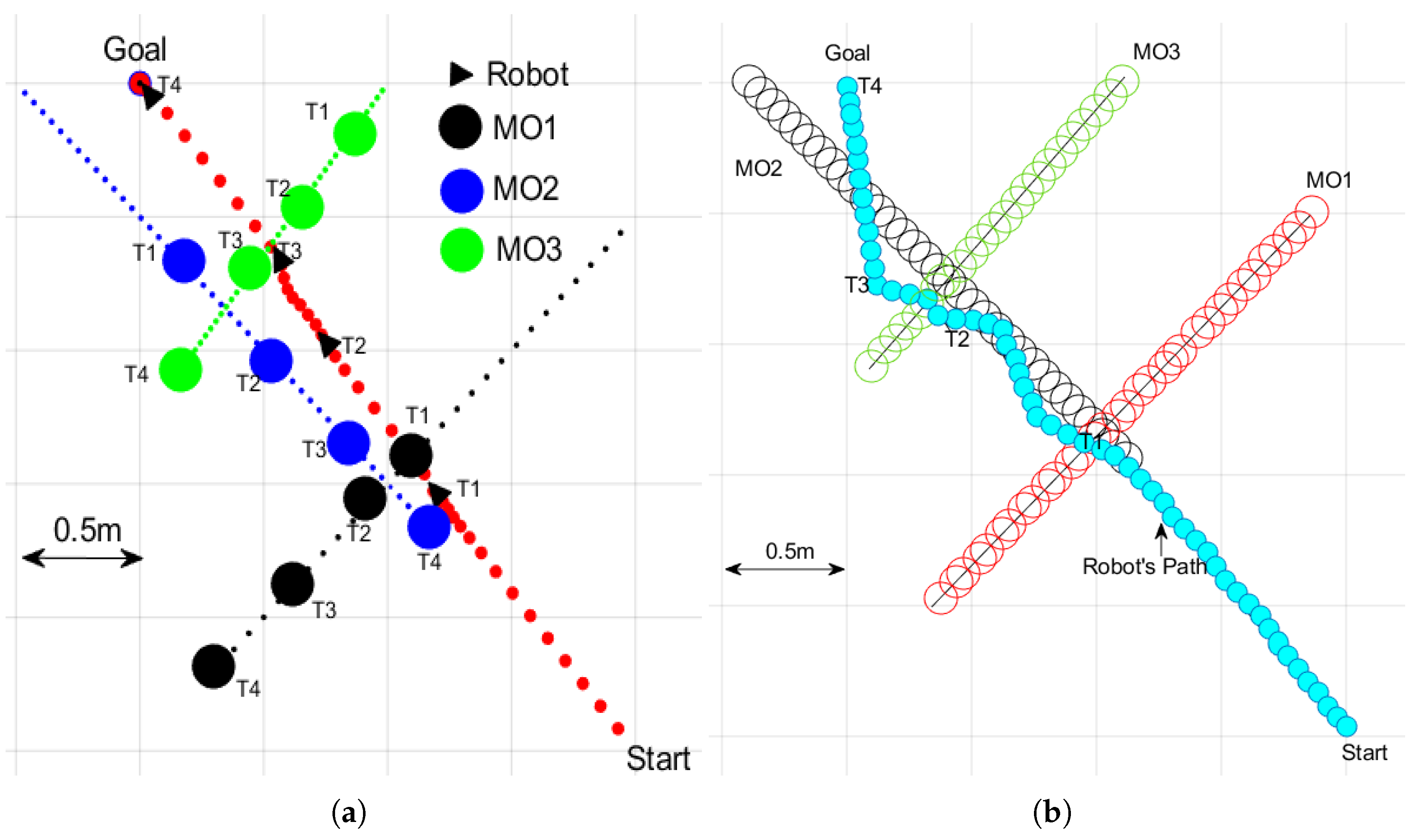

3.1.3. Experiment 3: A Moving Obstacle Crosses the Robot’s Path Perpendicularly

- In this experiment, the MO moved fast in a direction perpendicular to the robot’s initial direction of motion (the start-to-goal direction). Therefore, the force-shaping module saw neither a sector velocity component from the MO (as the MO’s velocity was perpendicular to the robot-to-goal direction) nor any obstacle between the robot and the goal.

- The algorithm without the prediction module made decisions based only on the robot’s current surroundings. Consequently, the robot did not change its direction and kept moving towards the goal at the beginning of the journey (Key Finding 1: the algorithm did not recognize the upcoming change caused by the MO). This costly decision led the robot into a collision.

- The algorithm with the prediction module made decisions based not only on the robot’s current surroundings but also on its predicted future environments. This allowed the robot to identify the future growth and shrinkage of the free space and, as a result, to make decisions in advance, which helped the robot reach the goal safely without any collision.

- In this experiment, the importance of the prediction module was verified: it enabled the robot to make early decisions when dealing with special cases such as this one.
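Why a perpendicular crossing produces no sector velocity component can be seen with a simple projection. Assuming, for illustration, that the sector velocity component is the projection of the MO’s velocity onto the unit vector of a sector direction (here the robot-to-goal direction), a velocity perpendicular to that direction projects to zero. The function name is hypothetical and the paper’s exact definition may differ.

```python
import math
from typing import Tuple


def sector_velocity_component(v: Tuple[float, float], sector_angle: float) -> float:
    """Projection of an obstacle velocity v onto the unit vector of a sector direction."""
    ux, uy = math.cos(sector_angle), math.sin(sector_angle)
    return v[0] * ux + v[1] * uy
```

With the goal direction along +x (angle 0), an MO crossing at 20 cm/s along +y contributes 0, whereas an MO coming head-on at 20 cm/s along −x, as in Experiment 2, contributes −20 cm/s.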

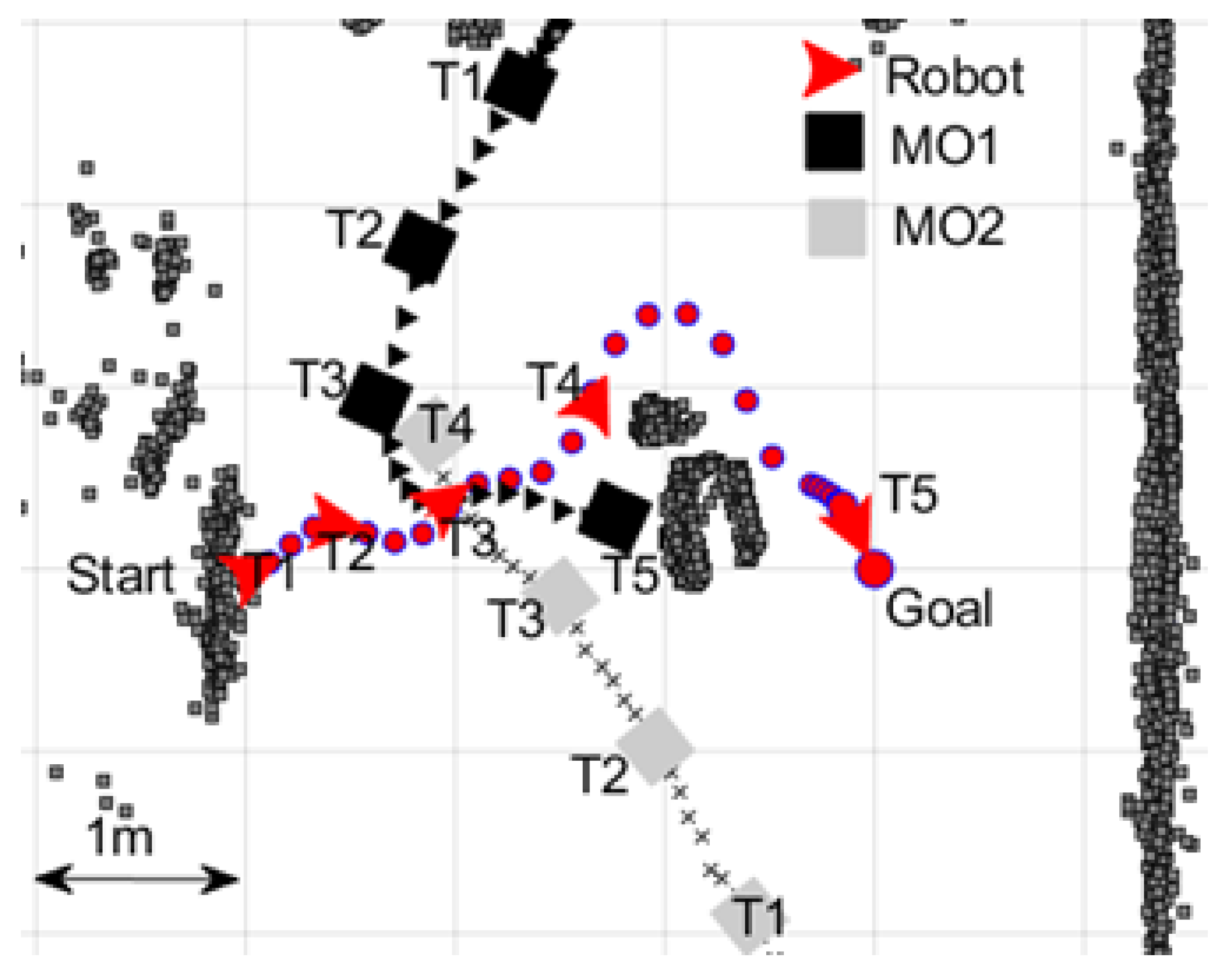

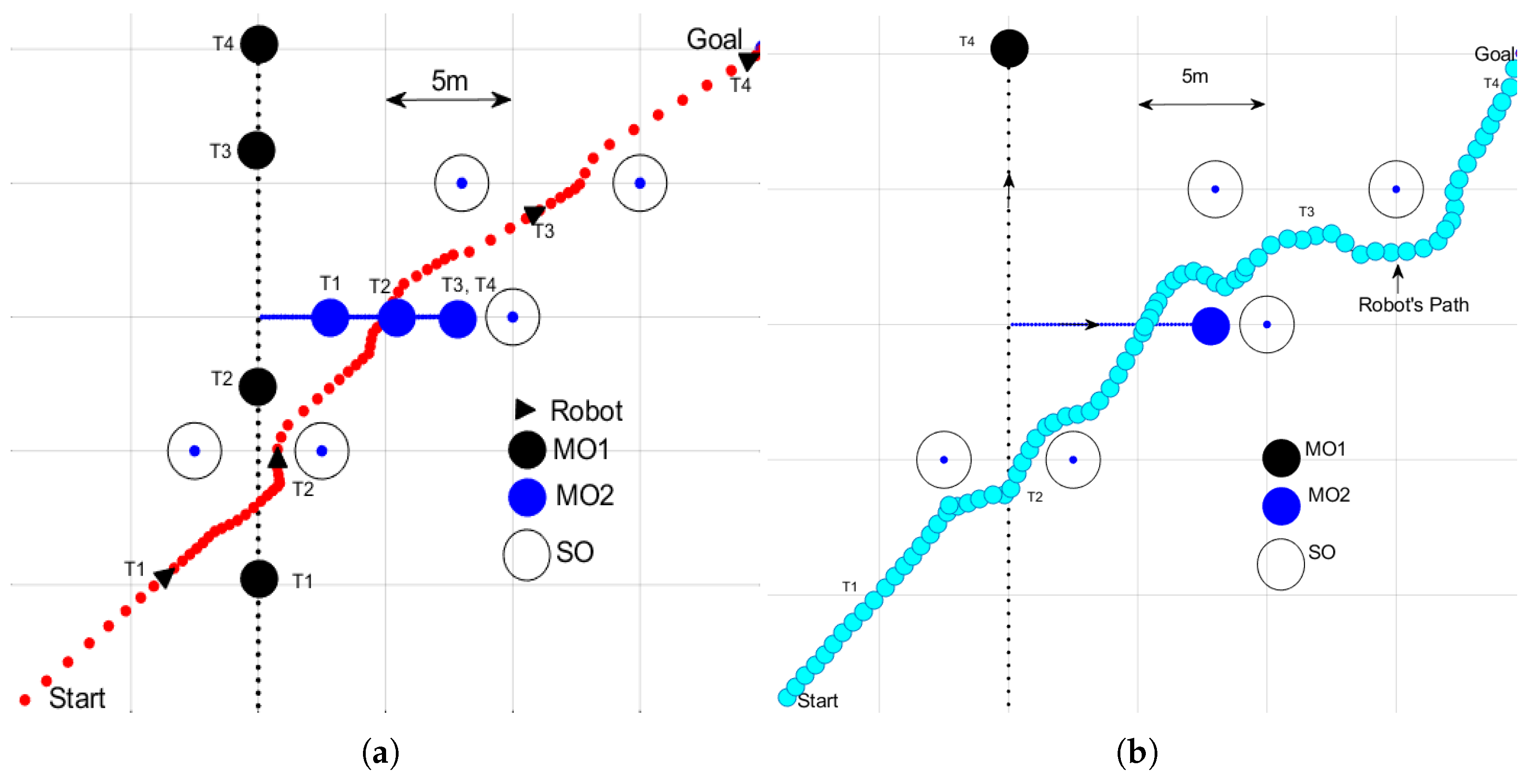

3.1.4. Experiment 4: The Robot Reaches the Goal despite Two Moving Obstacles Simultaneously Challenging the Robot

- The tracking module estimated the locations and velocities of the moving obstacles accurately.

- The prediction module could predict the future growth and shrinkage of the free space based on the predicted future locations of the moving obstacles and the robot.

- The algorithm was able to handle challenging situations in which multiple moving obstacles challenged the robot at the same time.

- The new Agoraphilic algorithm can successfully navigate robots in unknown dynamic environments.

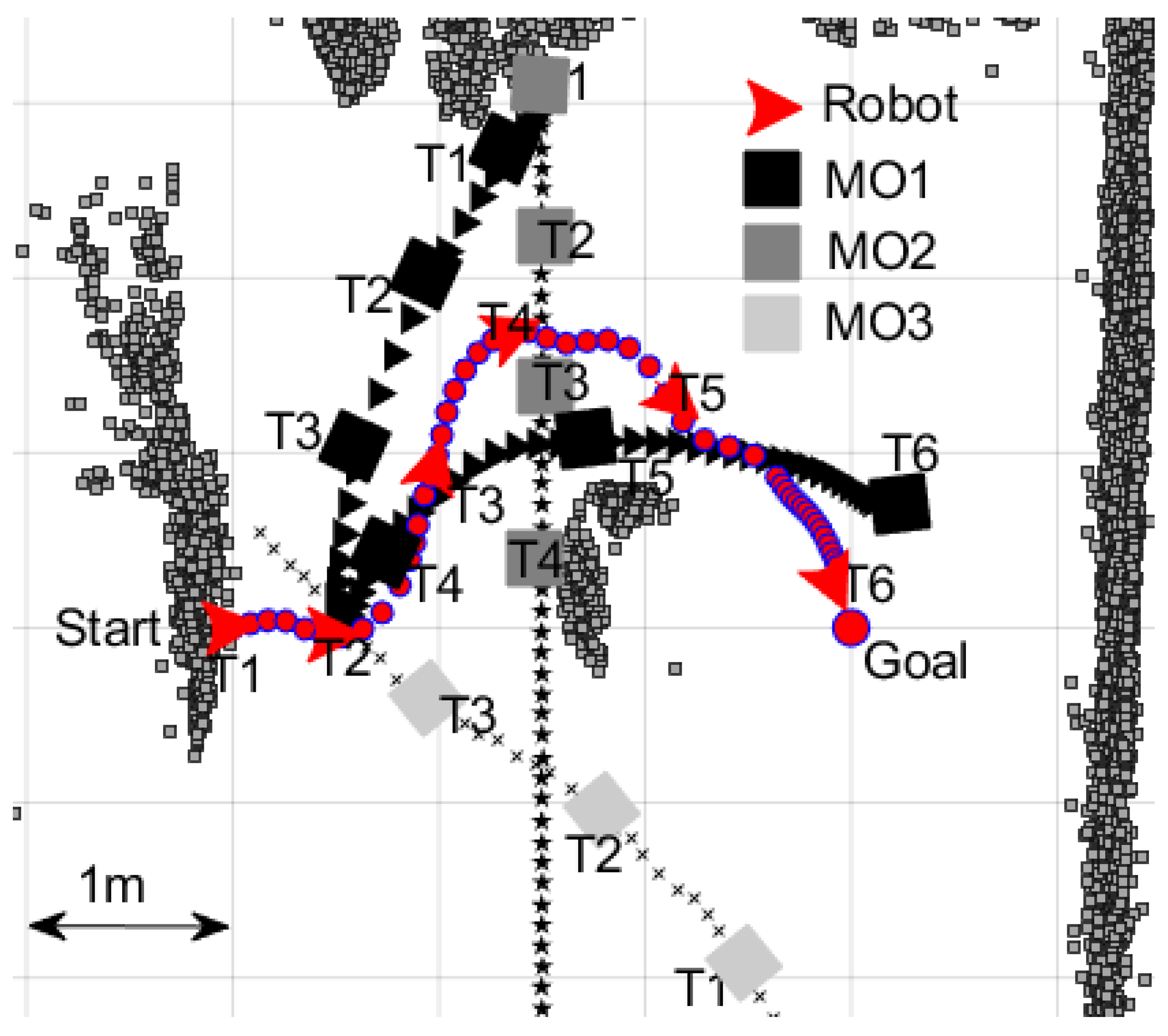

3.1.5. Experiment 5: The Robot Reaches the Goal despite Three Moving Obstacles Simultaneously Challenging the Robot and Pushing the Robot towards a Trap

- Move in the (+x, −y) direction to pass in front of MO2 and reach the goal via the shortest path.

- Reduce its speed and wait for MO2 to pass.

- Go forward towards the goal.

- Move in the (+x, +y) direction (towards the converging MO1 and MO2).

- The importance of the new FLC, even in the presence of the prediction module, was clearly shown in this experiment.

- The algorithm was able to identify and implement successful decisions in complex environments.

- The force-shaping module could select the robot’s pathway precisely to navigate through narrow time-varying free space corridors.

- The new Agoraphilic algorithm could successfully navigate robots in unknown dynamic environments with traps.

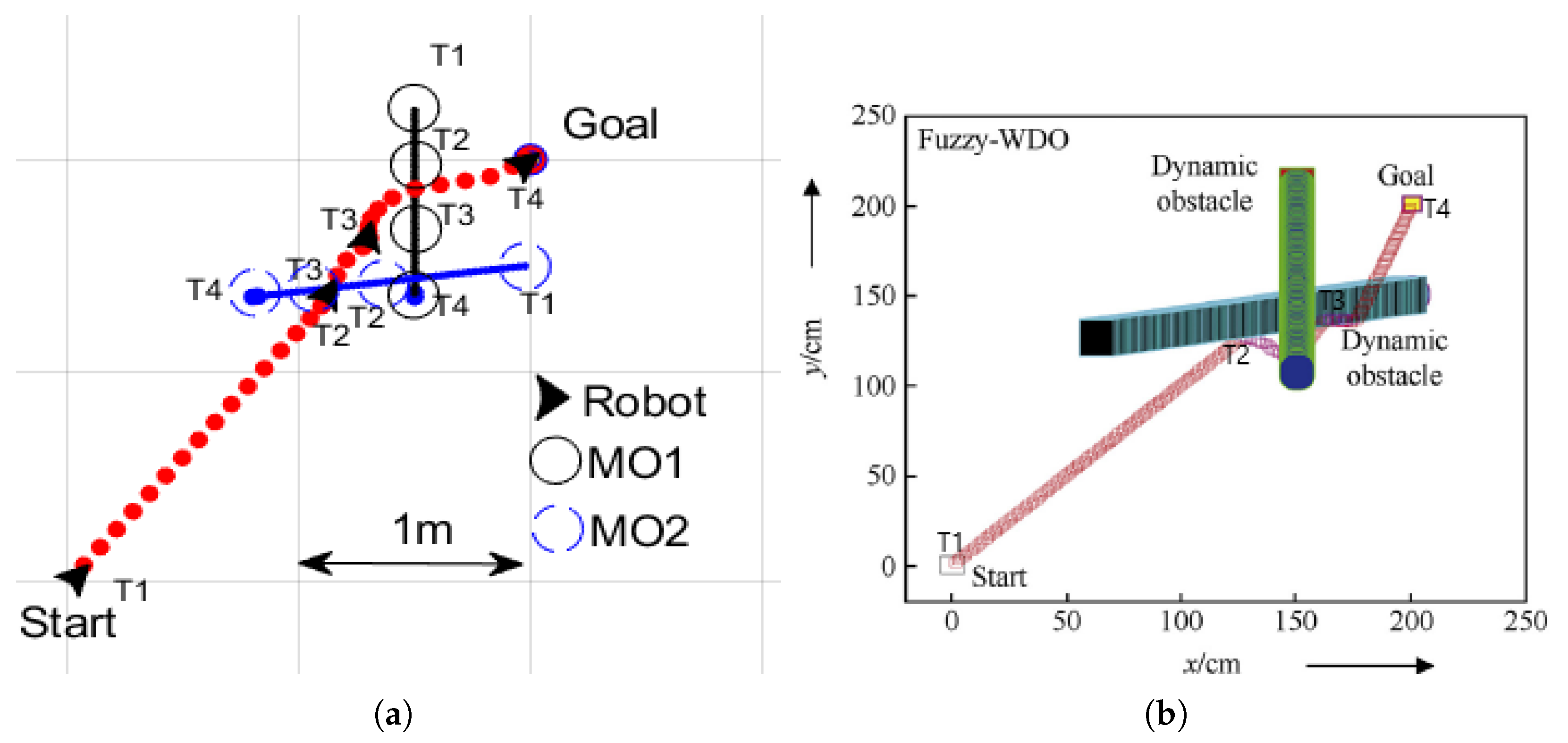

3.2. Experimental Comparison of the Proposed Algorithm with Other Recent Approaches

3.2.1. Comparison of the ANADE with the Matrix-Binary-Code-Based Genetic Algorithm

- ANADE: slowed down the robot and allowed MO1 to pass it.

- MBCGA: changed the robot’s direction of motion and passed MO1.

3.2.2. Comparison of the ANADE with the Fuzzy Wind-Driven Optimization Algorithm

- ANADE: increased the speed of the robot and passed in front of MO2.

- FWDOA: turned towards the (+x, −y) direction.

3.2.3. Comparison of the ANADE with an Improved APF Algorithm Developed Based on the Transferable Belief Model

3.3. A Qualitative Comparison between the Proposed Algorithm and Recent Navigation Approaches

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Liu, W.; Li, Z.; Sun, S.; Gupta, M.K.; Du, H.; Malekian, R.; Sotelo, M.A.; Li, W. Design a Novel Target to Improve Positioning Accuracy of Autonomous Vehicular Navigation System in GPS Denied Environments. IEEE Trans. Ind. Inform. 2021, 17, 7575–7588.

- Gao, Y.; Chien, S. Review on space robotics: Toward top-level science through space exploration. Sci. Robot. 2017, 2, eaan5074.

- Rangapur, I.; Prasad, B.S.; Suresh, R. Design and Development of Spherical Spy Robot for Surveillance Operation. Procedia Comput. Sci. 2020, 171, 1212–1220.

- Patil, D.; Ansari, M.; Tendulkar, D.; Bhatlekar, R.; Pawar, V.N.; Aswale, S. A Survey On Autonomous Military Service Robot. In Proceedings of the 2020 International Conference on Emerging Trends in Information Technology and Engineering (ic-ETITE), Vellore, India, 24–25 February 2020; pp. 1–7.

- Huang, X.; Cao, Q.; Zhu, X. Mixed path planning for multi-robots in structured hospital environment. J. Eng. 2019, 2019, 512–516.

- Kayacan, E.; Kayacan, E.; Ramon, H.; Kaynak, O.; Saeys, W. Towards Agrobots: Trajectory Control of an Autonomous Tractor Using Type-2 Fuzzy Logic Controllers. IEEE/ASME Trans. Mechatronics 2015, 20, 287–298.

- Sawadwuthikul, G.; Tothong, T.; Lodkaew, T.; Soisudarat, P.; Nutanong, S.; Manoonpong, P.; Dilokthanakul, N. Visual Goal Human-Robot Communication Framework with Few-Shot Learning: A Case Study in Robot Waiter System. IEEE Trans. Ind. Inform. 2021, 18, 1883–1891.

- Hewawasam, H.S.; Ibrahim, M.Y.; Appuhamillage, G.K. Past, Present and Future of Path-Planning Algorithms for Mobile Robot Navigation in Dynamic Environments. IEEE Open J. Ind. Electron. Soc. 2022, 3, 353–365.

- Patle, B.; Parhi, D.; Jagadeesh, A.; Kashyap, S.K. Matrix-Binary Codes based Genetic Algorithm for path planning of mobile robot. Comput. Electr. Eng. 2018, 67, 708–728.

- Engedy, I.; Horváth, G. Artificial neural network based mobile robot navigation. In Proceedings of the 2009 IEEE International Symposium on Intelligent Signal Processing, Kanazawa, Japan, 7–9 December 2009; pp. 241–246.

- Niu, H.; Ji, Z.; Arvin, F.; Lennox, B.; Yin, H.; Carrasco, J. Accelerated sim-to-real deep reinforcement learning: Learning collision avoidance from human player. In Proceedings of the 2021 IEEE/SICE International Symposium on System Integration (SII), Iwaki, Japan, 11–14 January 2021; pp. 144–149.

- Pandey, A.; Parhi, D.R. Optimum path planning of mobile robot in unknown static and dynamic environments using Fuzzy-Wind Driven Optimization algorithm. Def. Technol. 2017, 13, 47–58.

- Zhou, X.; Wang, Z.; Ye, H.; Xu, C.; Gao, F. Ego-planner: An esdf-free gradient-based local planner for quadrotors. IEEE Robot. Autom. Lett. 2020, 6, 478–485.

- Na, S.; Niu, H.; Lennox, B.; Arvin, F. Bio-Inspired Collision Avoidance in Swarm Systems via Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2022, 71, 2511–2526.

- Mohanta, J.C.; Keshari, A. A knowledge based fuzzy-probabilistic roadmap method for mobile robot navigation. Appl. Soft Comput. 2019, 79, 391–409.

- Patle, B.; Babu L, G.; Pandey, A.; Parhi, D.; Jagadeesh, A. A review: On path planning strategies for navigation of mobile robot. Def. Technol. 2019, 15, 582–606.

- Lee, D.H.; Lee, S.S.; Ahn, C.K.; Shi, P.; Lim, C. Finite Distribution Estimation-based Dynamic Window Approach to Reliable Obstacle Avoidance of Mobile Robot. IEEE Trans. Ind. Electron. 2020, 68, 9998–10006.

- Li, G.; Tamura, Y.; Yamashita, A.; Asama, H. Effective improved artificial potential field-based regression search method for autonomous mobile robot path planning. Int. J. Mechatronics Autom. 2013, 3, 141–170.

- Babinec, A.; Duchon, F.; Dekan, M.; Mikulova, Z.; Jurisica, L. Vector Field Histogram* with look-ahead tree extension dependent on time variable environment. Trans. Inst. Meas. Control 2018, 40, 1250–1264.

- Saranrittichai, P.; Niparnan, N.; Sudsang, A. Robust local obstacle avoidance for mobile robot based on Dynamic Window approach. In Proceedings of the 2013 10th International Conference on Electrical Engineering/Electronics Computer, Telecommunications and Information Technology, Krabi, Thailand, 15–17 May 2013; pp. 1–4.

- Liu, C.; Lee, S.; Varnhagen, S.; Tseng, H.E. Path planning for autonomous vehicles using model predictive control. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 174–179.

- Babic, B.; Nesic, N.; Miljkovic, Z. A review of automated feature recognition with rule-based pattern recognition. Comput. Ind. 2008, 59, 321–337.

- Kovács, B.; Szayer, G.; Tajti, F.; Burdelis, M.; Korondi, P. A novel potential field method for path planning of mobile robots by adapting animal motion attributes. Robot. Auton. Syst. 2016, 82, 24–34.

- Ibrahim, M.Y.; McFetridge, L. The Agoraphilic algorithm: A new optimistic approach for mobile robot navigation. In Proceedings of the 2001 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Proceedings (Cat. No. 01TH8556), Como, Italy, 8–12 July 2001; Volume 2, pp. 1334–1339.

- Dai, X.; Li, C.K.; Rad, A. An approach to tune fuzzy controllers based on reinforcement learning for autonomous vehicle control. IEEE Trans. Intell. Transp. Syst. 2005, 6, 285–293.

- Kamil, F.; Hong, T.S.; Khaksar, W.; Moghrabiah, M.Y.; Zulkifli, N.; Ahmad, S.A. New robot navigation algorithm for arbitrary unknown dynamic environments based on future prediction and priority behaviour. Expert Syst. Appl. 2017, 86, 274–291.

- Bahraini, M.S.; Bozorg, M.; Rad, A.B. SLAM in dynamic environments via ML-RANSAC. Mechatronics 2018, 49, 105–118.

- Hewawasam, H.S.; Ibrahim, M.Y.; Kahandawa, G.; Choudhury, T.A. Comparative Study on Object Tracking Algorithms for mobile robot Navigation in GPS-denied Environment. In Proceedings of the 2019 IEEE International Conference on Industrial Technology (ICIT), Melbourne, Australia, 13–15 February 2019; pp. 19–26.

- Elnagar, A. Prediction of moving objects in dynamic environments using Kalman filters. In Proceedings of the 2001 IEEE International Symposium on Computational Intelligence in Robotics and Automation (Cat. No. 01EX515), Banff, AB, Canada, 29 July–1 August 2001; pp. 414–419.

- Hewawasam, H.S.; Ibrahim, M.Y.; Kahandawa, G.; Choudhury, T.A. Agoraphilic navigation algorithm in dynamic environment with obstacles motion tracking and prediction. Robotica 2022, 40, 329–347.

- McFetridge, L.; Ibrahim, M.Y. Behavior fusion via free space force-shaping. In Proceedings of the IEEE International Conference on Industrial Technology, Shanghai, China, 22–25 August 2003; Volume 2, pp. 818–823.

- Hewawasam, H.S.; Ibrahim, M.Y.; Appuhamillage, G.K.; Choudhury, T.A. Agoraphilic Navigation Algorithm Under Dynamic Environment. IEEE/ASME Trans. Mechatronics 2022, 27, 1727–1737.

- Yaonan, W.; Yimin, Y.; Xiaofang, Y.; Yi, Z.; Yuanli, Z.; Feng, Y.; Lei, T. Autonomous mobile robot navigation system designed in dynamic environment based on transferable belief model. Measurement 2011, 44, 1389–1405.

- Hossain, M.A.; Ferdous, I. Autonomous robot path planning in dynamic environment using a new optimization technique inspired by bacterial foraging technique. Robot. Auton. Syst. 2015, 64, 137–141.

- Xin, J.; Jiao, X.; Yang, Y.; Liu, D. Visual navigation for mobile robot with Kinect camera in dynamic environment. In Proceedings of the 2016 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; pp. 4757–4764.

- Bodhale, D.; Afzulpurkar, N.; Thanh, N.T. Path planning for a mobile robot in a dynamic environment. In Proceedings of the 2008 IEEE International Conference on Robotics and Biomimetics, Bangkok, Thailand, 14–17 December 2009; pp. 2115–2120.

- Huang, L. Velocity planning for a mobile robot to track a moving target-a potential field approach. Robot. Auton. Syst. 2009, 57, 55–63.

- Jaradat, M.A.K.; Garibeh, M.H.; Feilat, E.A. Autonomous mobile robot dynamic motion planning using hybrid fuzzy potential field. Soft Comput. 2012, 16, 153–164.

| Parameter | Without FLC | With FLC | Performance Comparison with Respect to Case 2 |
|---|---|---|---|
| Travel time | 85 s | 74 s | 14% |
| Path length | 368 cm | 318 cm | 14% |
| Average speed | 4.3 cm/s | 4.3 cm/s | 0% |

| Parameter | Without FLC | With FLC |
|---|---|---|
| Travel time | 29 s (did not reach the goal) | 52 s |
| Path length | 127 cm (did not reach the goal) | 367 cm |
| Average speed | 4.3 cm/s | 7.0 cm/s |

| Parameter | Without FLC and DOPP | With FLC and without DOPP | With FLC and DOPP |
|---|---|---|---|
| Travel time | Did not reach the goal | Did not reach the goal | 88 s |
| Path length | Did not reach the goal | Did not reach the goal | 372 cm |
| Average speed | 4.3 cm/s | 4.3 cm/s | 4.3 cm/s |

| Algorithm [Ref.] | Base Concept | Tracking | Prediction | Velocity for Decision-Making | Unknown Dynamic Environments | Experimental Validation |
|---|---|---|---|---|---|---|
| [12] | Fuzzy Logic | No | No | No | Yes | Yes |
| [9] | Genetic Algorithm | No | No | No | Yes | Yes |
| [33] | APF | Yes | No | Yes | Yes | Yes |
| [34] | Bacterial Foraging | No | No | No | Yes | No |
| [35] | Dynamic Window Approach | No | No | No | Yes | Yes |
| [36] | APF | No | No | No | Yes | No |
| [37] | APF | No | Yes | Yes | Yes | No |
| [38] | APF | No | Yes | Yes | Yes | No |
| [13] | GDP | No | No | No | Yes (only very slow MOs; MO velocity < 0.5 cm/s) | Yes |
| ANADE | Agoraphilic (Free-Space Attraction) | Yes | Yes | Yes | Yes | Yes |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hewawasam, H.; Ibrahim, Y.; Kahandawa, G. A Novel Optimistic Local Path Planner: Agoraphilic Navigation Algorithm in Dynamic Environment. Machines 2022, 10, 1085. https://doi.org/10.3390/machines10111085

Hewawasam H, Ibrahim Y, Kahandawa G. A Novel Optimistic Local Path Planner: Agoraphilic Navigation Algorithm in Dynamic Environment. Machines. 2022; 10(11):1085. https://doi.org/10.3390/machines10111085

Chicago/Turabian Style: Hewawasam, Hasitha, Yousef Ibrahim, and Gayan Kahandawa. 2022. "A Novel Optimistic Local Path Planner: Agoraphilic Navigation Algorithm in Dynamic Environment" Machines 10, no. 11: 1085. https://doi.org/10.3390/machines10111085

APA Style: Hewawasam, H., Ibrahim, Y., & Kahandawa, G. (2022). A Novel Optimistic Local Path Planner: Agoraphilic Navigation Algorithm in Dynamic Environment. Machines, 10(11), 1085. https://doi.org/10.3390/machines10111085