1. Introduction

Production scheduling is an essential part of modern manufacturing systems, and efficient scheduling methods can improve industrial production efficiency, increase the economic profitability of enterprises and raise customer satisfaction [1,2,3]. The job shop scheduling problem (JSP) is one of the most complex problems in production scheduling and has been proven to be NP-hard [4]. The flexible job shop scheduling problem (FJSP) is an extension of JSP: besides sequencing operations, it must also assign an appropriate machine to each operation. As the FJSP is more in line with the reality of modern manufacturing enterprises, it has been widely studied in the past decades [5,6,7]. Furthermore, the problem is increasingly applied in different environments, such as crane transportation, battery packaging and printing production [8,9,10].

Brucker and Schlie, who first proposed the FJSP, used a polynomial graph algorithm to solve the problem [11]. Since then, various solution methods have been developed. The methods for solving FJSP can be divided into two main categories: exact and approximate algorithms. Exact algorithms, for example Lagrangian relaxation, branch and bound and mixed integer linear programming, have the advantage of finding the optimal solution of the FJSP [12,13,14]. However, they are only effective on small-scale FJSPs, and the required computation time becomes unaffordable as the problem size increases. Approximate algorithms have received more attention in recent studies because they can find good solutions in a shorter time. Metaheuristic algorithms, such as the genetic algorithm (GA), particle swarm optimization (PSO) and ant colony optimization (ACO), are approximate algorithms that have been successfully applied to FJSP.

Initially, research on metaheuristic algorithms for solving FJSP focused on proposing new neighborhood structures and employing tabu search (TS) or simulated annealing (SA). Brandimarte designed a hierarchical algorithm based on tabu search for solving FJSP [

15]. Based on the characteristics of FJSP, Najid et al. proposed an improved SA for solving the problem [

16]. With the objective of minimizing the maximum completion time, Mastrolilli et al. proposed two neighborhood structures (Nopt1, Nopt2) and combined them with TS, and the results validated the effectiveness of the proposed approach [

17]. Recent studies have shown that optimizing the objective of the problem by improving the neighborhood structure is an effective method. Zhao suggested a hybrid algorithm that incorporates an improved neighborhood structure. He divided the neighborhood structure into two levels: the first level for moving processes across machines, and the second level for moving processes within the same machine [

18]. As the size of FJSP grows, however, methods that rely only on improving the neighborhood structure tend to lack diversity during the search and therefore become trapped in local optima. Most current researchers solve FJSP by combining swarm intelligence algorithms with the constraint rules of the scheduling problem; the former enhance the diversity of the population, while the latter are employed to exploit the neighborhood of better solutions.

For GA, Li et al. proposed a hybrid algorithm (HA), which combined GA with tabu search (TS) for solving FJSP [

19]. The setting of parameters in GA is extremely significant, and a reasonable combination of parameters can better improve the performance of the algorithm. Therefore, Chang et al. proposed a hybrid genetic algorithm (HGA), and the Taguchi method was used to optimize the parameters of the GA [

20]. Similarly, Chen et al. suggested a self-learning genetic algorithm (SLGA) to solve the FJSP and dynamically adjusted its key parameters based on reinforcement learning (RL) [

21]. Wu et al. designed an adaptive population nondominated ranking genetic algorithm III, which combines a dual control strategy with GA to solve FJSP considering energy consumption [

22].

For ACO, Wu et al. proposed a hybrid ant colony algorithm based on a three-dimensional separation graph model for a multi-objective FJSP in which the optimization objectives are makespan, production duration, average idle time and production cost [

23]. Wang et al. presented an improved ant colony algorithm (IACO) to optimize the makespan of FJSP, which was tested by a real production example and two sets of well-known benchmark test examples to verify the effectiveness [

24]. To solve the FJSP in a dynamic environment, Zhang et al. combined Multi-Agent System (MAS) negotiation and ACO, and introduced the features of ACO into the negotiation mechanism to improve the performance of scheduling [

25]. Tian et al. introduced a PN-ACO-based metaheuristic algorithm for solving energy-efficient FJSP [

26].

For PSO, Ding et al. suggested a modified PSO for solving FJSP, and obtained useful solutions by improved encoding schemes, communication mechanisms of particles and modification rules for operating candidate machines [

27]. Fattahi et al. proposed a hybrid particle swarm optimization and parallel variable neighborhood search (HPSOPVNS) algorithm for solving a flexible job shop scheduling problem with assembly operations [

28]. In real industrial environments, unplanned and unforeseen events occur. Considering the FJSP under machine failure, Nouiri et al. proposed a two-stage particle swarm optimization algorithm (2S-PSO), and the computational results showed that the algorithm has better robustness and stability compared with methods from the literature [

29].

In recent years, an increasing number of studies have solved the FJSP using other metaheuristic algorithms. Gao et al. proposed a discrete harmony search (DHS) algorithm based on a weighting approach to solve the bi-objective FJSP, and the effectiveness of the method was demonstrated using well-known benchmark examples [

30]. Feng et al. suggested a dynamic opposite learning assisted grasshopper optimization algorithm (DOLGOA). The dynamic opposite learning (DOL) strategy is used to improve the utilization capability of the algorithm [

31]. Li et al. introduced a diversified operator imperialist competitive algorithm (DOICA) to minimize makespan, total delay, total workload and total energy consumption [

32]. Yuan et al. proposed a hybrid differential evolution algorithm (HDE) and introduced two neighborhood structures to improve the search performance [

33]. Li et al. designed an improved artificial bee colony algorithm (IABC) to solve the multi-objective low-carbon job shop scheduling problem with variable processing speed constraints [

34].

Table 1 shows a literature review of various common algorithms for solving FJSP.

The grey wolf optimization (GWO) algorithm is a population-based evolutionary metaheuristic algorithm proposed by Mirjalili in 2014, which was originally presented for solving continuous function optimization problems [

35]. In GWO, the hierarchical mechanism and hunting behaviors of the grey wolf population in nature are simulated. Compared with other metaheuristic algorithms, the GWO algorithm has the advantages of simple structure, few control parameters and the ability to achieve a balance between local and global search. It has been successfully applied to several fields in recent years, such as path planning, SVM model, image processing, power scheduling, signal processing, etc. [

36,

37,

38,

39,

40]. However, the algorithm is rarely used on FJSP. As the algorithm is continuous and FJSP is a discrete problem, it is important to consider how to match the algorithm with the problem.

At the moment, there are two mainstream solution methods. The first method adopts a transformation mechanism to interconvert continuous individual position vectors with discrete scheduling solutions, which has the advantage of being simple to implement and preserving the updated iterative formulation of the algorithm. Luan et al. suggested an improved whale optimization algorithm for solving FJSP, in which ROV rules are used to transform the operation sequence [

41]. For FJSP, Yuan et al. proposed a hybrid harmony search (HHS) algorithm and developed a transformation technique to convert a continuous harmony vector into a discrete two-vector code for FJSP [

42]. Luo et al. designed the multi-objective flexible job shop scheduling problem (MOFJSP) with variable processing speed, for which the chromosome encoding is represented by a three-vector representation corresponding to three subproblems: machine assignment, speed assignment and operation sequence. The mapping approach and the genetic operator are used to enable the algorithm to update in the discrete domain [

43]. Liu et al. proposed the multi-objective hybrid salp swarm algorithm (MHSSA) combined with Lévy flight, a random probability crossover operator and a mutation operator [

44]. Nevertheless, the transformation method has certain limitations—some excellent solutions will be missed in the process of conversion, and a lot of computation time will be wasted.

In the second approach, the discrete update operator is designed to achieve the correspondence between the algorithm and the problem. For the multi-objective flexible job shop scheduling problem, a hybrid discrete firefly algorithm (HDFA) was proposed by Karthikeyan et al. The search accuracy and information sharing ability of the algorithm are improved by discretization [

45]. Gu et al. suggested a discrete particle swarm optimization (DPSO) algorithm and designed its discrete update process using crossover and mutation operators [

46]. Gao et al. studied a flexible job shop rescheduling problem for new job insertion and discretized the update mechanism of the Jaya algorithm, and the results of extensive experiments showed that the DJaya algorithm is an effective method for solving the problem [

47]. Xiao et al. suggested a hybrid algorithm combining the chemical reaction algorithm and TS, and designed four basic operations to ensure the diversity of populations [

48]. Jiang et al. presented a discrete cat swarm optimization algorithm in order to solve a low-carbon flexible job shop scheduling problem with the objective of minimizing the sum of energy cost and delay cost, and designed discrete forms for the finding and tracking modes in the algorithm to fit the problem [

49]. Lu et al. redesigned the propagation, refraction and breaking mechanisms in the water wave optimization algorithm based on the characteristics of FJSP in order to adapt the algorithm to the scheduling problem under consideration [

50]. Although this approach discards the original update formula, the idea of the algorithm is retained, so it is essential to design a reasonable discrete update operator. A discretized GWO operator for solving FJSP already exists, but it only retains the process of the leader wolves guiding the ordinary wolves during hunting and does not further exploit the mechanisms of GWO [

51].

Table 2 contains a literature review on mapping mechanisms and discrete operators in FJSP.

In view of this, a discrete improved grey wolf optimization algorithm (DIGWO) is proposed in this paper for solving FJSP with the objectives of minimizing makespan and minimizing critical machine load. The algorithm has the following innovations. Firstly, a discrete grey wolf update operator is proposed in order to make GWO applicable to FJSP. Secondly, an initialization method incorporating a chaotic mapping strategy and heuristic rules is designed to obtain high-quality and diverse initial populations. Then, an adaptive convergence factor is employed to better balance exploitation and exploration. Finally, the effectiveness and superiority of the proposed algorithm are verified using international benchmark cases.

The contributions of this paper are in the following five aspects.

A hybrid initialization method which combines heuristic rules and random Tent chaotic mapping strategy is proposed for generating original populations with high quality and without loss of diversity.

For the characteristics of FJSP, a discrete grey wolf update operator is designed to improve the search performance of the algorithm while ensuring that the algorithm can solve the problem.

An adaptive convergence factor is proposed to improve the exploration and exploitation capability of the algorithm.

The improved algorithm is applied to solve the benchmark test problems in the existing literature, and the results show that DIGWO is competitive compared to other algorithms.

DIGWO is evaluated on 47 FJSP instances of different sizes, and the experimental results show its effectiveness in solving the problem.

The sections of this paper are organized as follows.

Section 1 is an introduction, and it gives the background of the topic as well as the motivation for the research. In Section 2, the mathematical models of the multi-objective FJSP and the original GWO are given. The specific steps of the improvement strategy are described in detail in Section 3. In Section 4, the performance of the proposed DIGWO is tested on continuous-type benchmark functions. The effectiveness of DIGWO is verified using the international standard FJSP in Section 5. Finally, the work is summarized and directions for future research are proposed.

3. Proposed Discrete Improved Grey Wolf Optimization Algorithm

3.1. The Framework of the Proposed DIGWO

In this paper, a discrete improved grey wolf optimization algorithm (DIGWO) is proposed. In order to apply GWO to FJSP and to enhance the search capability of the algorithm, DIGWO contains three improvement strategies, namely hybrid initialization (HI), the discrete grey wolf update operator (DGUO) and an adaptive convergence factor. The discretization follows the basic principles of GWO: update operators from evolutionary algorithms are used and the key parameters of GWO are retained. The flowchart of DIGWO is shown in Figure 2. The steps of the algorithm are as follows.

Step 1: Input the parameters of the algorithm, the information of the FJSP case and the termination conditions, etc.

Step 2: Initialize the population and calculate the fitness of all individuals (cf. Section 3.3).

Step 3: Determine whether the termination condition is reached—if yes, go to step 9; otherwise, go to step 4.

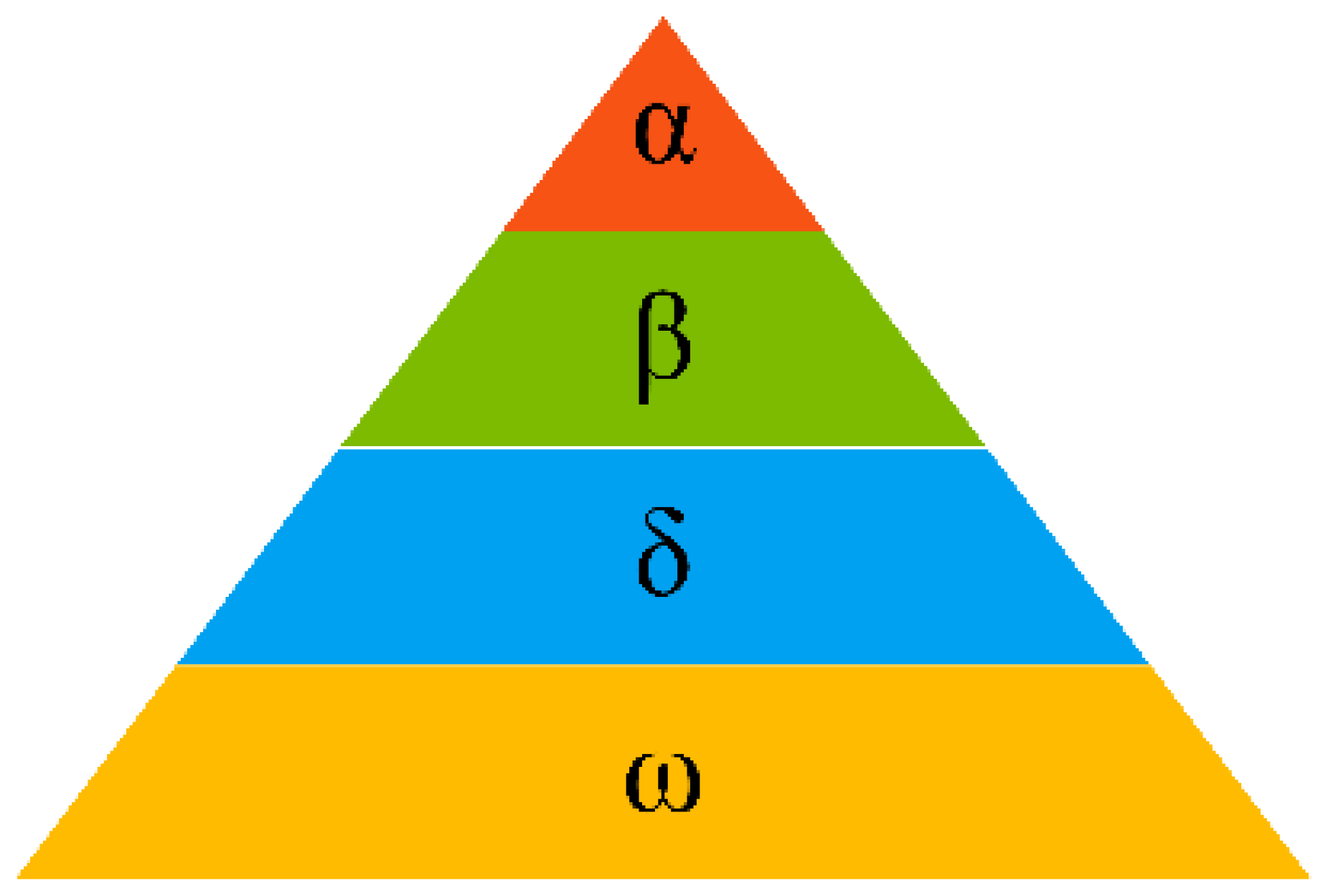

Step 4: Label the three best individuals in the population according to their fitness as Xalpha, Xbeta and Xdelta.

Step 5: Update the two control parameters (cf. Section 3.4).

Step 6: Update all individuals in the population using the DGUO, with different update mechanisms for ordinary and leader wolves (cf. Section 3.5.1 and Section 3.5.2).

Step 7: Select and preserve the offspring grey wolf individuals according to the acceptance probability (cf. Section 3.5.3).

Step 8: Determine if the termination condition is met—if yes, go to step 9; otherwise, return to step 4.

Step 9: Output the optimal solution and its coding sequence.

To elaborate on the proposed DIGWO, its key strategies and parameters are discussed below, and the algorithm is analyzed in detail in the subsequent sections. In the proposed DIGWO, two key parameters are defined: the control parameter in the adaptive convergence factor and the distance acceptance probability. These two key parameters are described in detail in Section 3.4 and Section 3.5.3.

In the initialization phase, the modified Tent chaotic mapping is used to generate the operation sequences and the heuristic rules are used to generate the machine sequences. The specific initialization steps can be found in Section 3.3. Next, in the update phase of the algorithm, the improved convergence factor is used to balance the global and local search of DIGWO. The improved formula can be found in Section 3.4. Before introducing DGUO, it is important to highlight that the encoding of DIGWO is discrete when solving FJSP. Therefore, the update formula of the original GWO is no longer used, and the discrete update operator is designed according to the characteristics of the FJSP. One update of the algorithm proceeds as follows. The population is divided into two parts, the leader wolves and the ordinary wolves. Firstly, the ordinary wolves are updated. Depending on the value of the adaptive convergence factor, either a leader wolf is selected by roulette and the update is performed with it in the discrete domain, or an individual in the population of ordinary wolves is selected for updating. Secondly, the leader wolves are updated. For the operation sequence and machine sequence of the leader wolves, a neighborhood adjustment based on the critical path is adopted. Finally, the traditional elite strategy and the distance-based acceptance criterion proposed in this paper are combined to generate the new population, and the leader wolves and ordinary wolves are merged for the next iteration. The detailed description of DGUO can be found in Section 3.5.

3.2. Solution Representation

The solution of the proposed algorithm contains two vectors: the machine selection vector and the operation sequence vector. In order to represent these two subproblems more rationally, an integer coding approach is adopted in this paper. Next, the 3 × 3 FJSP example in Table 3 is used to explain the encoding and decoding methods.

The machine selection (MS) vector is made up of an array of integers. The encoding of the machine sequence corresponds to the order of the job numbers from the smallest to the largest, as shown in

Figure 3. The machine sequence can be expressed as

. It should be noted that each element in the MS sequence represents the machine number; for example, the fourth element of the MS indicates that machine

is selected by operation 1 of job 2.

The operation sequence (OS) vector consists of an array of integers, representing the job information and operation processing order in FJSP. As shown in

Figure 3, the operation sequence can be expressed as

. It should be noted that the elements in the OS vector are job numbers. Reading from left to right, the k-th occurrence of job number j denotes the k-th operation of job j. Therefore, it can be seen from the OS vector in

Figure 3 that the decoding order is

. The decoding process is as follows. First, all operations are assigned to the corresponding machines based on the MS vector. Meanwhile, the processing order of all operations on each machine is determined by the OS vector. Then, the earliest start time of the current operation is determined according to the constraint rules of FJSP. Finally, a reasonable scheduling scheme is obtained by arranging all operations to their corresponding positions.
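The decoding steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the instance `proc` is hypothetical (it is not the data of Table 3), the function name `decode` is invented for the example, and each operation is simply appended after the last operation on its machine, without searching for idle gaps.

```python
from typing import Dict, List, Tuple

# Hypothetical 3-job instance (NOT the paper's Table 3):
# proc[job][op] maps a machine id to the processing time on that machine.
proc: List[List[Dict[int, int]]] = [
    [{1: 3, 2: 5}, {2: 4, 3: 2}],  # job 1
    [{1: 2, 3: 4}, {1: 6, 2: 3}],  # job 2
    [{2: 3, 3: 5}, {1: 4, 3: 4}],  # job 3
]

def decode(ms: List[int], os_: List[int],
           proc: List[List[Dict[int, int]]]
           ) -> Tuple[List[Tuple[int, int, int, int, int]], int]:
    """Return (schedule, makespan).

    Each schedule row is (job, operation, machine, start, end), 1-based.
    ms is ordered by job number, then by operation number.
    """
    # Position of each job's first operation inside the MS vector.
    offsets, total = [], 0
    for job_ops in proc:
        offsets.append(total)
        total += len(job_ops)

    next_op = [0] * len(proc)    # next unscheduled operation of each job
    job_ready = [0] * len(proc)  # end time of each job's last scheduled operation
    mach_ready: Dict[int, int] = {}  # end time of the last operation on each machine
    schedule = []

    for job in os_:
        j = job - 1
        k = next_op[j]                 # k-th occurrence -> k-th operation
        m = ms[offsets[j] + k]         # machine chosen by the MS vector
        start = max(job_ready[j], mach_ready.get(m, 0))
        end = start + proc[j][k][m]
        job_ready[j] = end
        mach_ready[m] = end
        next_op[j] += 1
        schedule.append((job, k + 1, m, start, end))

    return schedule, max(job_ready)

ms = [1, 3, 1, 2, 2, 1]
os_ = [1, 2, 3, 1, 2, 3]
schedule, makespan = decode(ms, os_, proc)
print(makespan)  # 9 for this hypothetical instance
```

In this encoding, any permutation of the job numbers yields a feasible schedule, because the precedence between operations of the same job is implied by their order of occurrence.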

Figure 4 shows the Gantt chart of a 3 × 3 FJSP instance.

3.3. Population Initialization

For swarm intelligence algorithms, an effective initialization method improves the quality of the original population and has a positive impact on the subsequent iterations. Currently, most studies on FJSP generate the initial population randomly. Nevertheless, it is difficult to guarantee the quality of the initial population using random methods alone. Consequently, it is important to design effective strategies for the initialization phase to improve the search performance of the algorithm.

Tent chaotic sequences, with their favorable randomness, ergodicity and regularity, are often combined with metaheuristic algorithms to improve population diversity and strengthen the algorithm's global search capability [54]. In this paper, a modified Tent chaotic sequence is introduced, and its expression is shown in Equation (15). The variables obtained by Tent chaotic mapping in the search space are shown in Equation (16).

where

is the random number within the interval [0, 1],

is the individual number,

is the number of the chaotic variable,

is the total number of individuals within the population and

and

are the upper and lower bounds of the current variable in the search space, respectively.

As shown in

Figure 5, the machines are selected for the current operation in order from left to right according to the code, and the MS is finally obtained. A string of position index codes is obtained by arranging the obtained chaotic sequence in ascending order, and then transformed into an operation sequence according to the distribution of jobs and their operations. In

Figure 5, the chaotic sequence {0.15, 2.84, 1.66, 0.54, 2.02, 1.83} is transformed into the operation codes {

.
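The conversion from a chaotic sequence to an operation sequence can be illustrated with a short Python sketch. It uses the chaotic values quoted in the text; the job layout (three jobs with two operations each) and the rule that position indices 1 and 2 belong to job 1, indices 3 and 4 to job 2, and so on, are assumptions made for this example, and `chaotic_to_os` is a hypothetical helper name.

```python
from typing import List

def chaotic_to_os(values: List[float], ops_per_job: List[int]) -> List[int]:
    """Map a continuous sequence to an operation sequence of job numbers."""
    # Rank of every value when the sequence is sorted in ascending order
    # (1-based), i.e., the position index codes.
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank

    # Assumed mapping: consecutive position indices are assigned to jobs
    # according to the number of operations of each job.
    slots = [job for job, n in enumerate(ops_per_job, start=1) for _ in range(n)]
    return [slots[r - 1] for r in ranks]

values = [0.15, 2.84, 1.66, 0.54, 2.02, 1.83]
print(chaotic_to_os(values, [2, 2, 2]))  # [1, 3, 2, 1, 3, 2] under the assumed mapping
```

Because every position index occurs exactly once, each job number appears as many times as the job has operations, so the resulting sequence is always a valid OS vector.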

Three heuristic strategies are introduced in the initialization phase of the machine sequence to improve the quality of the initial population. Combined with the chaotic mapping strategy, these three strategies are described as follows.

Random initialization: This is the earliest initialization method, and it is adopted because it guarantees a high diversity of the generated initial population. (1) Generate a sequence of operations by chaotic mapping. (2) Randomly select a machine from the set of available machines for the current operation, in left-to-right order. (3) Repeat step 2 until a complete vector of machines is generated.

Local processing time minimization rule: The purpose of this rule is to select a machine with the minimum processing time for each operation, thus reducing the corresponding processing time [55]. (1) Generate a sequence of operations by chaotic mapping. (2) Select a machine with the minimum processing time for the current operation from its corresponding set of available machines, in left-to-right order. (3) Repeat step 2 until a complete vector of machines is generated.

Minimum completion time: The purpose of this rule is to optimize the maximum completion time and to prevent over-selection of the machine with the smallest processing time, which could leave a high-performance machine overloaded with operations while a low-performance machine is left idle [56]. (1) Generate a sequence of operations by chaotic mapping. (2) In left-to-right order, if the number of selectable machines for the current operation is at least two, determine the machine with the smallest completion time based on the earliest start time and processing time. (3) Repeat step 2 until a complete vector of machines is generated.

Each of the three strategies mentioned above has been proven effective in the literature, so a hybrid initialization approach (HI) is proposed by combining the advantages of the three strategies. The strategy is described in Algorithm 1.

| Algorithm 1. Hybrid initialization (HI) strategy |

| Input: Total number of individuals |

| Output: Initial population |

| 1. | for = 1: do |

| 2. | The initial population is generated using a random initialization rule, size [] |

| 3. | The initial population is generated employing the local minimum processing time rule, size [] |

| 4. | The initial population is generated applying the minimum completion time rule, size [] |

| 5. | Combine the initial populations generated by the above three rules, denoted as |

| 6. | if size of then |

| 7. | break |

| 8. | else |

| 9. | Generate the rest with a random initialization strategy |

| 10. | end if |

| 11. | end for |
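The hybrid initialization can be sketched as follows. This is only an illustrative sketch: the instance `proc`, the function names and the shares of the population assigned to each rule (`shares`) are assumptions, since the proportions are not recoverable from the listing above, and a random shuffle stands in for the chaotic generation of the operation sequence.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical instance: proc[job][op] maps machine id -> processing time.
proc: List[List[Dict[int, int]]] = [
    [{1: 3, 2: 5}, {2: 4, 3: 2}],
    [{1: 2, 3: 4}, {1: 6, 2: 3}],
    [{2: 3, 3: 5}, {1: 4, 3: 4}],
]

def select_machines(proc, os_: List[int], rule: str, rng: random.Random) -> List[int]:
    """Build an MS vector by scanning os_ from left to right with one rule."""
    offsets, total = [], 0
    for job_ops in proc:
        offsets.append(total)
        total += len(job_ops)
    ms = [0] * total
    next_op = [0] * len(proc)
    job_ready = [0] * len(proc)
    mach_ready: Dict[int, int] = {}
    for job in os_:
        j, k = job - 1, next_op[job - 1]
        options = proc[j][k]
        if rule == "random":
            m = rng.choice(sorted(options))
        elif rule == "min_processing":
            m = min(options, key=options.get)
        else:  # "min_completion": earliest start time plus processing time
            m = min(options,
                    key=lambda c: max(job_ready[j], mach_ready.get(c, 0)) + options[c])
        end = max(job_ready[j], mach_ready.get(m, 0)) + options[m]
        job_ready[j], mach_ready[m] = end, end
        ms[offsets[j] + k] = m
        next_op[j] += 1
    return ms

def hybrid_init(proc, pop_size: int, shares=(0.5, 0.25, 0.25),
                seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return pop_size individuals (OS, MS); shares are assumed proportions."""
    rng = random.Random(seed)
    rules = ("random", "min_processing", "min_completion")
    plan = [r for r, s in zip(rules, shares) for _ in range(int(pop_size * s))]
    plan += ["random"] * (pop_size - len(plan))  # fill the rest randomly
    base = [job for job, ops in enumerate(proc, start=1) for _ in ops]
    population = []
    for rule in plan:
        os_ = base[:]
        rng.shuffle(os_)  # stand-in for the chaotic OS generation
        population.append((os_, select_machines(proc, os_, rule, rng)))
    return population

population = hybrid_init(proc, pop_size=10)
print(len(population))  # 10
```

Keeping a share of purely random individuals preserves diversity, while the two greedy rules seed the population with machine assignments that already have short processing or completion times.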

3.4. Nonlinear Convergence Factor

Needless to say, the primary consideration for metaheuristics is how to better balance the exploration and exploitation capabilities of the algorithm, and GWO is no exception. In the traditional GWO, the parameter A regulates the global and local search capability of the algorithm. When |A| < 1, the ordinary wolf moves toward the head wolf in the population, which reflects the local search of the algorithm. Otherwise, the grey wolf individuals move away from the head wolf, which corresponds to the global search ability of the algorithm. The change in parameter A is determined by the linearly decreasing parameter a. Nevertheless, since FJSP is a combinatorial optimization problem with high complexity, it is difficult to adapt to the complex nonlinear search process if only the traditional linear parameter of GWO is used to control the update of the algorithm.

Therefore, a nonlinear control parameter strategy based on exponential functions is proposed in this section. At the early stage of the algorithm update, the descent rate of the proposed parameter

is accelerated, which aims to improve the convergence rate of GWO. In the later stages, the slowdown is performed to enhance the exploitation of the algorithm. The modified convergence factor

can be defined as shown in the Equation (17):

where

is the number of current iterations,

is the maximum number of iterations and

is used to regulate the non-linear declining trend of

. To visualize the convergence trend of the proposed parameter

,

Figure 6 simulates the evolution curve of the parameter

at different

values.
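To make the idea of a nonlinear, exponentially shaped convergence factor concrete, the sketch below compares the linear schedule of the original GWO with one possible exponential schedule. The exponential form is an assumption chosen only to reproduce the described behavior (fast descent early, slow descent late); it is not the paper's Equation (17), and the names `exponential_a` and `k` are hypothetical.

```python
import math

def linear_a(t: int, t_max: int) -> float:
    """Convergence factor of the original GWO: decreases linearly from 2 to 0."""
    return 2.0 * (1.0 - t / t_max)

def exponential_a(t: int, t_max: int, k: float = 3.0) -> float:
    """Assumed exponential schedule (NOT the paper's Equation (17)).

    Starts at 2, ends at 0, and drops faster than the linear schedule in
    early iterations; a larger k > 0 gives a steeper initial descent.
    """
    decay = math.exp(-k * t / t_max)
    return 2.0 * (decay - math.exp(-k)) / (1.0 - math.exp(-k))

for t in (0, 25, 50, 75, 100):
    print(t, round(linear_a(t, 100), 3), round(exponential_a(t, 100), 3))
```

With such a schedule the factor stays small for a larger part of the run, so more iterations are spent on exploitation near the leader wolves.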

3.5. Discrete Grey Wolf Update Operator (DGUO)

GWO was originally proposed for continuous optimization problems and therefore cannot be applied directly to FJSP, a typical discrete combinatorial optimization problem. For this reason, a reasonable discretization of the coding vector of GWO is required. In this section, a discrete grey wolf update operator (DGUO) is designed, in which each solution corresponds to a grey wolf and consists of two parts, i.e., the operation part and the machine part. Its update method is shown in Algorithm 2.

The proposed DGUO has the following three characteristics. Firstly, according to the social hierarchy of GWO, the wolf pack is divided into leader wolves (α, β, δ) and ordinary wolves (ω), and the role of the leader wolves is to guide the ordinary wolves toward the prey. To distinguish the two roles, the DGUO is designed with different update methods for these two kinds of wolves. Secondly, because the movement of ordinary wolves in the original GWO depends only on the leader wolves, an intra-population information exchange strategy is introduced for the update of ordinary wolves to enhance communication between individuals. Finally, a Hamming distance-based acceptance mechanism is adopted to enhance population diversity and avoid premature convergence of the algorithm.

A two-dimensional space vector schematic is used to explain the update mechanism of DIGWO, with the legend marked in the figure. Figure 7a,b show the update method of ordinary wolves at different stages. R represents a randomly selected ordinary wolf in the population, and w represents the current ordinary wolf. If |A| < 1, the ordinary wolf is called by the leader wolf to approach it; otherwise, an ordinary wolf is randomly selected from the population to determine the direction and step length of the next movement of the current individual. Figure 7c represents the update of the head wolf, which relies only on its own experience as it moves through the search space. Figure 7d indicates that new individuals are retained not only according to their fitness but are also selected with a certain probability based on the Hamming distance.

| Algorithm 2. Overall update process of DGUO |

| Input: The OS vectors and MS vectors of all grey wolves in generation, total number of individuals |

| Output: The OS vectors and MS vectors of all grey wolves in generation |

| 1 | All grey wolf individuals of the generation are sorted in non-decreasing order of makespan |

| 2 | The three individuals with the smallest makespan |

| 3 | Remaining individual grey wolves |

| 4 | for do |

| 5 | |

| 6 | if |A| < 1 then |

| 7 | is selected from the by roulette |

| 8 | else |

| 9 | , not the th individual, is randomly selected from within the |

| 10 | end if |

| 11 | |

| 12 | |

| 13 | generate a random number |

| 14 | if then |

| 15 | the offspring individual with the smallest makespan is preserved |

| 16 | else |

| 17 | the offspring individuals further away from is preserved |

| 18 | end if |

| 19 | end for |

| 20 | for do |

| 21 | the th OS vector was updated by the swap operation based on the critical block |

| 22 | generate a random number |

| 23 | if then |

| 24 | the th MS vector was updated using multi-point mutation to randomly select a machine |

| 25 | else |

| 26 | the th MS vector was updated using multi-point mutation to select the machine with minimum processing time |

| 27 | end if |

| 28 | end for |

| 29 | merge the leader wolves and the ordinary wolves, and return the OS and MS vectors of the next generation |

3.5.1. Update Approach Based on Leader Wolf

In order for the leader wolves to better guide the population toward the optimal solution, their update method is redesigned. The update operator for the leader wolves combines the coding characteristics of the operation sequence and machine sequence in FJSP with a critical path strategy.

The critical path is described as follows. The critical path is the longest path from the start node to the end node in a feasible schedule [1]. According to the critical path method in operations research, moving critical operations on the critical path can improve the solution of FJSP. Therefore, in order to enhance the convergence of the algorithm while reducing the computational cost, the neighborhood structures used are based on moves on the critical path. As shown in

Figure 8, all the operations contained in the black line box constitute a complete critical path, where

,

,

and

are the critical operations on the critical path. When two or more consecutive critical operations are on the same machine, we call them critical blocks. As shown in

Figure 8,

and

are critical blocks.

The update method of the operation sequence is shown in Figure 9. A new operation sequence is generated by swapping two critical operations in a critical block [45]. The swap must satisfy the FJSP constraint that the two operations to be exchanged do not belong to the same job. The rules for the exchange are as follows.

The swapping operation is performed only on the critical block.

Only the first two and last two critical operations of the critical block are considered for swapping.

If there are only two critical operations in the block, the two operations are swapped.
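The three swap rules can be sketched as a small neighborhood generator. The representation of a critical block as a list of `(job, operation)` tuples in processing order and the function name `block_swaps` are assumptions made for this illustration; identifying the critical path itself is outside the sketch.

```python
from typing import List, Tuple

Operation = Tuple[int, int]  # (job number, operation number), hypothetical representation

def block_swaps(block: List[Operation]) -> List[List[Operation]]:
    """Return the neighboring orders of one critical block.

    Only the first two and the last two operations are candidates; a block
    of exactly two operations yields a single candidate swap. A swap is
    skipped when both operations belong to the same job, because their
    precedence must be preserved.
    """
    if len(block) < 2:
        return []
    if len(block) == 2:
        pairs = [(0, 1)]
    else:
        pairs = [(0, 1), (len(block) - 2, len(block) - 1)]

    neighbors = []
    for i, j in pairs:
        if block[i][0] != block[j][0]:
            candidate = block[:]
            candidate[i], candidate[j] = candidate[j], candidate[i]
            neighbors.append(candidate)
    return neighbors

block = [(1, 1), (2, 1), (3, 2), (1, 2)]
for neighbor in block_swaps(block):
    print(neighbor)
```

Restricting the swaps to the block boundaries keeps the neighborhood small, which is consistent with the stated aim of reducing the computational cost.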

The machine sequence is updated as shown in Figure 10. A new machine sequence is generated by reselecting machines for the critical operations in the critical path. The specific steps are as follows.

Determine the number of available machines in the critical operation .

If the number of selectable machines is equal to one , a new critical operation is selected; if , another machine is selected for replacement; if , a machine with the smallest processing time is selected from it for replacement.

3.5.2. Update Approach Based on Ordinary Wolf

In the proposed DGUO, the update of ordinary wolves has three features. First, a crossover operator is introduced to exchange information between the leader wolf and the ordinary wolf, which enhances the global search ability of the algorithm. Second, roulette wheel selection is used to choose one of the three leader wolves as a crossover parent, so that high-quality information is used more often. Finally, the control parameter

in GWO is retained and improved. If

, the crossover operation is performed between the current individual and the leader wolf; otherwise, an ordinary wolf is randomly selected from the population to cross over with the current individual. The improved priority operation crossover (IPOX) used to update the operation sequence is shown in

Figure 11. For machine sequences, the multi-point crossover (MPX) operation is used as shown in

Figure 12. These two crossover operators are described below.

The IPOX operator works as follows.

Step 1: The job set is randomly divided into two subsets.

Step 2: All elements of the operation sequence of the first parent (P1) that belong to the first subset are retained in the first child in their original positions. Similarly, the elements of the second parent (P2) that belong to the second subset are retained in the second child in their original positions.

Step 3: The vacant positions of the first child are filled sequentially with the elements of the operation sequence of P2 that belong to the second subset. Likewise, the elements of P1 that belong to the first subset fill the remaining positions of the second child in order.
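The IPOX steps can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: an operation sequence is a list of job identifiers in which each job appears once per operation, the job split is passed in explicitly as `jobs_1` (in practice it would be drawn at random), and the function name `ipox` is hypothetical.

```python
from typing import List, Sequence, Set, Tuple


def ipox(p1: Sequence[int], p2: Sequence[int], jobs_1: Set[int]) -> Tuple[List[int], List[int]]:
    """Improved priority operation crossover on two operation sequences."""
    jobs_2 = set(p1) - jobs_1  # the second subset is the complement of the first

    def build(keep_parent: Sequence[int], fill_parent: Sequence[int], keep_set: Set[int]) -> List[int]:
        # Step 2: genes of the kept jobs stay in their original positions.
        child = [g if g in keep_set else None for g in keep_parent]
        # Step 3: vacancies are filled, in order, with the other parent's
        # genes that belong to the other subset.
        fill = iter(g for g in fill_parent if g not in keep_set)
        return [g if g is not None else next(fill) for g in child]

    child_1 = build(p1, p2, jobs_1)
    child_2 = build(p2, p1, jobs_2)
    return child_1, child_2
```

Because every job keeps the same number of genes in each child, both children remain feasible operation sequences without any repair step.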

The MPX operator works as follows.

Step 1: A random array Ra consisting of 0s and 1s is generated, with length equal to that of the machine sequence MS.

Step 2: All positions in Ra whose element is 1 are recorded as Index; the elements of P1 and P2 at the Index positions are swapped.

Step 3: The remaining elements in P1 and P2 are not moved.
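The MPX steps can be sketched as follows. The sketch assumes that both machine sequences are indexed by the same fixed operation order, so that position i refers to the same operation in both parents and a swapped machine is always eligible. The function name `mpx` and the optional `mask` argument (standing in for Ra) are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple


def mpx(
    p1: Sequence[int],
    p2: Sequence[int],
    mask: Optional[Sequence[int]] = None,
    rng: Optional[random.Random] = None,
) -> Tuple[List[int], List[int]]:
    """Multi-point crossover on two machine sequences of equal length."""
    if len(p1) != len(p2):
        raise ValueError("machine sequences must have the same length")
    if mask is None:
        # Step 1: random 0/1 array with the same length as the machine sequence.
        rng = rng or random.Random()
        mask = [rng.randint(0, 1) for _ in p1]
    child_1, child_2 = list(p1), list(p2)
    for idx, bit in enumerate(mask):
        if bit == 1:
            # Step 2: swap the genes at every position marked with 1.
            child_1[idx], child_2[idx] = child_2[idx], child_1[idx]
    # Step 3: positions marked with 0 are left unchanged.
    return child_1, child_2
```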

3.5.3. Acceptance Criteria

To prevent the population from converging rapidly to a non-optimal region during the update process, a distance-based acceptance criterion is proposed. Similar to the role of the control parameter C in GWO, an individual farther away from the best ones in the search space also has a chance of being retained. Considering the discrete nature of the encoding, the distances are also discretized and are called Hamming distances in solving the FJSP [

45].

For machine sequences, the Hamming distance between two individuals is the number of positions at which the two sequences differ. An example is as follows: if there are two machine sequences in the FJSP solution space,

and

, and if there are three points of inconsistency between the two sequences, the Hamming distance is 3. This calculation procedure is shown in

Figure 13. For operation sequences, the Hamming distance between two individuals can be measured by the number of swaps. For example, two operation sequences in FJSP solution space are as follows:

and

; the

will need four swaps to obtain

, so the Hamming distance is 4. This process is shown in

Figure 14. The Hamming distance from each of the two individuals to the best individual is calculated, and the two distances are compared. If the acceptance probability

is satisfied, the individual to retain is chosen on the basis of Hamming distance; otherwise, the offspring with the higher fitness is retained.
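The two distance measures can be sketched as follows. The position-wise count follows the description of machine sequences directly. For operation sequences, the sketch uses a simple greedy left-to-right procedure to count swaps; this is an assumption, since the exact counting rule is only shown in Figure 14, and with repeated job identifiers the greedy count is not guaranteed to be minimal. Both function names are hypothetical.

```python
from typing import List, Sequence


def hamming_distance(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of positions at which two machine sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    return sum(x != y for x, y in zip(a, b))


def swap_distance(source: Sequence[int], target: Sequence[int]) -> int:
    """Number of swaps a greedy left-to-right pass needs to turn source into target."""
    if sorted(source) != sorted(target):
        raise ValueError("sequences must contain the same elements")
    work: List[int] = list(source)
    swaps = 0
    for i, wanted in enumerate(target):
        if work[i] == wanted:
            continue
        # The wanted element must occur later, because the prefixes already match.
        j = work.index(wanted, i + 1)
        work[i], work[j] = work[j], work[i]
        swaps += 1
    return swaps
```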

4. Numerical Analysis

In this section, to investigate the accuracy and stability of the proposed DIGWO, eight benchmark test functions are employed in the experiments with dimensions set to D = 30, 50 and 100. As shown in

Table 5, the functions are characterized by U, M, S and N, corresponding to unimodal, multimodal, separable and non-separable. The proposed DIGWO was coded in MATLAB 2016a on an Intel 3.80 GHz Pentium Gold processor with 8 GB RAM running Windows 10. The same operating environment is used in the later experiments for solving FJSP.

The unimodal function has only one optimal solution, so it is used to verify the exploitation capability of the algorithm. In contrast, the multimodal function has multiple local optima and is therefore used to test the exploration capability of the algorithm. The purpose of setting multiple dimensions is to test the stability of the algorithms’ performance on problems of different complexity. To verify the effectiveness and superiority of the proposed algorithm, six metaheuristic algorithms are used for comparison: GWO, PSO, MFO, SSA, SCA and Jaya [

35,

57,

58,

59,

60,

61]. In order to ensure fairness during the experiment, the population size was set to 30, and the maximum number of iterations was set to 3000, 5000 and 10,000 according to the order of the dimensionality (D = 30, 50, 100). All other parameters of the algorithms involved in the comparison were set according to the relevant literature. Each algorithm was run 30 times independently on each benchmark function.

Table 6,

Table 7 and

Table 8 give the results of the compared algorithms on the test functions in 30, 50 and 100 dimensions. The mean (Mean) and standard deviation (Std) of the run results are used as evaluation metrics to represent the performance of each algorithm. The best results are bolded. To test for significant differences between the algorithms, the Wilcoxon signed-rank test with a significance level of 0.05 is used. The statistical result Sig is marked as “

”, which means DIGWO is better than, equal to or inferior to the algorithms involved in the comparison.

From

Table 6,

Table 7 and

Table 8, it can be concluded that DIGWO has better convergence accuracy and stability on most problems. In particular, the proposed algorithm achieves better results on all problems compared to the original GWO. Combining the standard deviations and the Wilcoxon test results, DIGWO significantly outperforms the other algorithms on all problems except

f5 and

f8, and is robust on problems with different dimensions. The results of the statistical significance tests between the proposed DIGWO and the comparison algorithms across the three dimensions are discussed below. Compared with PSO, DIGWO has 20 results that are better, one that is not significantly different and three that are worse. Compared with MFO, DIGWO has 23 results that are better and one that is not significantly different. Compared with SSA, DIGWO has 21 results that are better, one that is not significantly different and two that are worse. Compared with SCA, all results of DIGWO are better. Compared with Jaya, DIGWO has 20 results that are better, two that are not significantly different and two that are worse. Compared with GWO, DIGWO has 14 results that are better and 10 that are not significantly different.

To further investigate the performance of DIGWO, some representative test functions are selected to analyze the convergence trends of all the algorithms involved in the comparison. The convergence curves are shown in

Figure 15,

Figure 16 and

Figure 17. The convergence curves of the multimodal benchmark functions are shown in

Figure 15 and

Figure 16, and the convergence curves of the unimodal benchmark test functions are shown in

Figure 17. It can be observed that the proposed algorithm outperforms other algorithms in terms of convergence speed and accuracy. This also shows the effectiveness of the proposed improvement strategy in DIGWO.

Figure 15 and

Figure 17 show that the results of the algorithm can still be improved even in the middle and late stages of the iteration, which further indicates the improved exploitation capability. The convergence curve in

Figure 16 shows that DIGWO can converge to the theoretical optimum in the shortest time, which also verifies the efficient global search capability of DIGWO. In conclusion, the overall search performance of DIGWO based on the chaotic mapping strategy and adaptive convergence factor is effective.

5. Simulation of FJSP Based on DIGWO

5.1. Notation

The following notations are used in this section to evaluate algorithms or problems, and the definitions of these notations are explained below.

LB: Lower bound of the makespan values found so far.

Best: Best makespan in several independent runs.

WL: Best critical machine load achieved from several independent runs.

Avg: The average makespan obtained from several independent runs.

Tcpu: Computation time required for several independent runs to obtain the best makespan (seconds).

T(AV): The average computation time obtained by the current algorithm for all problems in the test problem set.

RE: The relative error between the best makespan obtained by the current algorithm and the LB, given by Equation (18).

MRE: The average RE obtained by the current algorithm for all problems of the test problem set.

RPI: Relative percentage increase, given by Equation (19).

where

is the best makespan obtained by the

ith comparison algorithm, and

denotes the best makespan among all the algorithms involved in the comparison.
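As a worked illustration of these metrics, the sketch below computes RE, MRE and RPI in their conventional percentage forms, RE = 100 × (Best − LB) / LB and RPI = 100 × (MK_i − MK_best) / MK_best. These forms are assumed from the verbal definitions above rather than copied from Equations (18) and (19); the function names and the symbols MK_i and MK_best are hypothetical, and the numbers in the usage lines are made up for illustration.

```python
from typing import Sequence


def relative_error(best: float, lb: float) -> float:
    """RE (%): gap between an algorithm's best makespan and the lower bound."""
    return 100.0 * (best - lb) / lb


def mean_relative_error(bests: Sequence[float], lbs: Sequence[float]) -> float:
    """MRE (%): average RE over all problems of a test set."""
    if len(bests) != len(lbs) or not bests:
        raise ValueError("need one best makespan per lower bound")
    return sum(relative_error(b, lb) for b, lb in zip(bests, lbs)) / len(bests)


def relative_percentage_increase(makespan: float, best_of_all: float) -> float:
    """RPI (%): gap between one algorithm's best makespan and the best of all algorithms."""
    return 100.0 * (makespan - best_of_all) / best_of_all


# Illustrative values only, not taken from the experiments.
print(relative_error(42, 40))                   # 5.0
print(mean_relative_error([42, 50], [40, 50]))  # 2.5
print(relative_percentage_increase(66, 60))     # 10.0
```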

5.2. Description of Test Examples

To test the performance of the algorithm, internationally recognized benchmark instances are used. The well-known experimental sets include KCdata, BRdata and Fdata.

BRdata is provided by Brandimarte and includes 10 problems, MK01–MK10, ranging in size from 10 jobs to 20 jobs and from 4 machines to 15 machines, with medium flexibility (F in the range 0.15–0.35) [

15].

KCdata is provided by Kacem and contains five problems, Kacem01–Kacem05, ranging in size from 4 jobs and 5 machines to 15 jobs and 10 machines. Four of the five problems, all except Kacem02, are total flexible job shop scheduling problems [

62].

Fdata is provided by Fattahi et al. and contains 20 problems, namely SFJS01–SFJS10 and MFJS01–MFJS10. The size of the problems ranges from two jobs and two machines to nine jobs and eight machines [

63].

The existing literature does not contain test problems for large-scale FJSP; therefore, a dataset is proposed and named YAdata, which contains 12 test problems. The details of these problems are given in

Table 9, where Job denotes the number of jobs, Machine means the number of machines, Operation represents the number of operations contained in each job, F denotes the ratio of the number of machines that can be selected for each operation to the total number of machines and Time denotes the range of processing time values.

5.3. Parameter Analysis

The parameter configuration affects the performance of the algorithm in solving the problem. In the proposed DIGWO, the parameters that perform best in the same environment are obtained through experimental tests. Depending on the size of the test problem, the total population of DIGWO is set to 50 when testing three international benchmark FJSPs, namely BRdata, KCdata and Fdata. In testing the large-scale FJSP, namely YAdata, the total population is set to 50. The number of generations is set to 200.

The following sensitivity tests were performed for the key parameters used in the proposed DIGWO. MK04 was used as the test problem, and the Avg obtained from 20 independent runs was collected to evaluate the performance. The parameter levels are as follows:

and

. The experimental results obtained with different combinations of parameters are given in

Table 10. Overall, the best performance of the algorithm is obtained when

= 1.5 and

= 0.1.

5.4. Analysis of the Effectiveness of the Proposed Strategy

5.4.1. Validation of the DGUO Strategy

To verify the effectiveness of the DGUO proposed in this paper, the performance of GWO-1 and GWO-2 is compared. GWO-1 is the basic grey wolf optimization algorithm and it uses the conversion mechanism which is already available in the literature [

41]. The GWO-2 is a discrete algorithm that uses the DGUO strategy proposed in this paper. Other than that, none of the other improvement strategies proposed in this paper were used during the experiments. The performance of these two algorithms was evaluated on BRdata considering the same parameters. To ensure fairness during the experiments, the results after 20 independent runs are shown in

Table 11.

As can be seen from

Table 11, GWO-2 always outperforms or equals GWO-1 on both the makespan and the critical machine load metrics. The average computation time of GWO-2 over all BRdata instances is 14.3516, which is smaller than that of GWO-1. This advantage in computation time can be attributed to the fact that GWO-1 uses a conversion mechanism that requires additional computation at each generation. A comparative box plot of the average RPI values is given in

Figure 18, and it can be clearly seen that the box for GWO-2 is narrower and positioned lower, which indicates that the algorithm is more robust and converges better.

To further analyze the performance,

Figure 19 shows the convergence curves of the two compared algorithms on MK08. It can be found that GWO-2 converges to the optimal makespan in 61 generations, and GWO-1 converges in 163 generations. Therefore, the improved discrete update mechanism can enhance the convergence speed of the algorithm.

5.4.2. Validation of Initialization Strategy

In order to test the performance of the hybrid initialization strategy mentioned in

Section 3.3, this section includes the test of the two comparison algorithms, DIGWO-RI and DIGWO. It should be noted that the initial populations of DIGWO-RI are generated randomly. To ensure fairness during the experiments, the same components and all parameters were set identically, and the results after 20 independent runs are shown in

Table 12.

Combining

Table 12 and

Figure 20, it can be seen that the proposed DIGWO has better makespan and critical machine load than DIGWO-RI on all instances of BRdata. This demonstrates that combining heuristic rules with chaotic mapping strategies can provide suitable initial populations. The optimal convergence curves obtained by the comparison algorithms on MK02 are given in

Figure 21. It is observed that DIGWO converges to the optimal makespan in 58 generations, while DIGWO-RI converges in 63 generations. It should also be noted that DIGWO is much better than DIGWO-RI in the first generation. Consequently, the improved initialization strategy proposed in this paper can generate high-quality initial solutions and enable the algorithm to converge to the optimal solution earlier.

5.5. Comparison with Other Algorithms

In this section, DIGWO is compared with algorithms proposed in recent years, and “Best” is the primary metric considered in the process of comparison. To further analyze the overall performance of the algorithm, MRE is also used as a participating comparative performance metric. Considering the differences in programming platforms, processor speed and coding skills used by the algorithms involved in the comparison, the original programming environment and programming platform are given accordingly in the comparison process, and Tcpu and T(AV) are used as comparison metrics. In the following, KCdata, BRdata and Fdata are used as experimental test problems and the comparison results are shown.

5.5.1. Comparison Results in KCdata

In this section, KCdata is used to test the performance of the proposed algorithm, and the results of the proposed algorithm are compared with recent studies IWOA, GATS+HM, HDFA and IACO [

24,

41,

45,

64]. To ensure fairness in the experimental process, the results of 20 independent runs are shown in

Table 13. From the results in

Table 13, it can be found that the best makespan obtained by the proposed DIGWO is always better than or equal to that of the other four compared algorithms, and the average computation time is shorter. In summary, the proposed DIGWO is more competitive in terms of both search accuracy and convergence speed.

Figure 22 shows the Gantt chart of the best result (makespan = 11) obtained by the proposed algorithm on Kacem05.

5.5.2. Comparison Results in BRdata

In this section, BRdata is used to test the performance of the proposed algorithm, and the results of the proposed DIGWO are compared with those of recent studies IWOA, HGWO, PGDHS, GWO and SLGA [

21,

41,

51,

65,

66]. To eliminate randomness in the experimental process, the results of 20 independent runs are shown in

Table 14. The LB in the third column is provided by industrial solver DELMIA Quintiq [

1]. It can be clearly seen that the proposed DIGWO reaches the lower bound on six of the ten instances. The MRE values show that the proposed algorithm outperforms the other five compared algorithms in terms of solution accuracy. The T(AV) metric shows that the proposed algorithm also has the shortest average running time over all problems.

Figure 23 shows the Gantt chart of the optimal results obtained by the proposed algorithm in MK09 (makespan = 307).

5.5.3. Comparison Results in Fdata

In this section, Fdata is used to test the performance of the proposed algorithm, and the results of the proposed DIGWO are compared with those of recent studies AIA, EPSO, MILP and DOLGOA [

31,

55,

67,

68]. In order to eliminate randomness during the experiment, the results of 20 independent runs are shown in

Table 15. The LB in the third column is from the literature [

69]. As can be seen in

Table 15, the proposed DIGWO reaches the lower bound on 10 of the 20 instances. It is worth noting that MILP is an exact method, which means that the results it obtains can be regarded as optimal solutions. The results of DIGWO on Fdata are always better than or equal to those of MILP. The results in the last two columns of

Table 15 show that the proposed DIGWO is superior to the other four algorithms both in terms of solution accuracy and convergence speed.

5.6. Comparison Results in LSFJSP

In this section, 12 LSFJSP examples are used to further test the performance of the proposed DIGWO. The details of these examples are presented in

Table 9. To verify the validity of the proposed algorithm on LSFJSP, the compared algorithms include WOA, Jaya, MFO, SSA, IPSO, HGWO and SLGA [

21,

27,

58,

59,

61,

65,

70]. The first four algorithms are metaheuristics proposed in recent years, and the remaining three are algorithms developed for FJSP. To keep the experimental environment consistent, all of the algorithms listed above are run on the same device. The maximum number of iterations is set to 200. Each algorithm was executed 10 times, and the makespan and critical machine load were recorded as evaluation criteria. The results obtained from the experiments are shown in

Table 16 and

Table 17, and the best convergence curves of each instance are shown in

Figure 24,

Figure 25,

Figure 26 and

Figure 27.

To analyze the data more intuitively, the best solutions of each problem in

Table 16 and

Table 17 are shown in bold font. From these data, it is evident that the proposed DIGWO obtains the best makespan on 11 of the 12 LSFJSP instances, as well as the minimum critical machine load. Comparing the convergence curves, DIGWO converges faster than the other algorithms in most cases. Notably, after only about 20 generations, DIGWO already reaches a makespan comparable to that obtained by the other metaheuristic algorithms after 200 generations. The Friedman ranking of the compared algorithms on all problems is given in

Table 18, and the results show that DIGWO ranks first across all instances, with p-value = 4.0349 × 10⁻¹⁴ < 0.05. The generated LSFJSP instances include both low-flexibility examples (F = 0.2, F = 0.3) and high-flexibility examples (F = 0.5), and DIGWO performs consistently well on both.
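The Friedman ranking is built on per-instance ranks: on each instance the algorithms are ranked by makespan (ties share the mean of their positions), and the ranks are then averaged over all instances. The sketch below computes only these mean ranks, not the test statistic or the p-value. The function name `average_ranks` and the sample data are hypothetical.

```python
from typing import Dict, List


def average_ranks(results: List[Dict[str, float]]) -> Dict[str, float]:
    """Mean rank of each algorithm; results[k][name] is its makespan on instance k."""
    names = list(results[0].keys())
    totals = {name: 0.0 for name in names}
    for row in results:
        ordered = sorted(names, key=lambda name: row[name])  # smaller makespan is better
        i = 0
        while i < len(ordered):
            j = i
            # Extend j over the group of algorithms tied with position i.
            while j + 1 < len(ordered) and row[ordered[j + 1]] == row[ordered[i]]:
                j += 1
            shared_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
            for k in range(i, j + 1):
                totals[ordered[k]] += shared_rank
            i = j + 1
    return {name: totals[name] / len(results) for name in names}


# Illustrative makespans only, not taken from Table 16.
sample = [{"A": 10, "B": 12, "C": 12}, {"A": 9, "B": 8, "C": 11}]
print(average_ranks(sample))  # {'A': 1.5, 'B': 1.75, 'C': 2.75}
```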

Figure 28 shows the Gantt chart of the best makespan obtained by the proposed DIGWO on YA01. The operations are denoted by “Job-operation”. Because of the large number of machines, the machines on the vertical axis are not labeled one by one, and the horizontal axis indicates the processing time period of each operation. From the chart, it can be observed that the majority of machines start processing at time 0. In addition, no machine is idle for a long time or overused, which is in line with the concept of smart manufacturing and effectively saves processing time costs.

In summary, DIGWO combines the characteristics of FJSP with the idea of GWO through a discrete update mechanism, so that each grey wolf individual has simple intelligence. The success of the DIGWO design lies in the effective initialization strategy and the DGUO strategy, which together ensure the quality of the initial population and enhance the efficiency of the search during iterative updating. For FJSP, the proposed algorithm converges better than the original GWO. In conclusion, DIGWO is well suited to solving LSFJSP, and it is generalizable to FJSPs of different scales.