Identification and Detection of Biological Information on Tiny Biological Targets Based on Subtle Differences

Abstract

1. Introduction

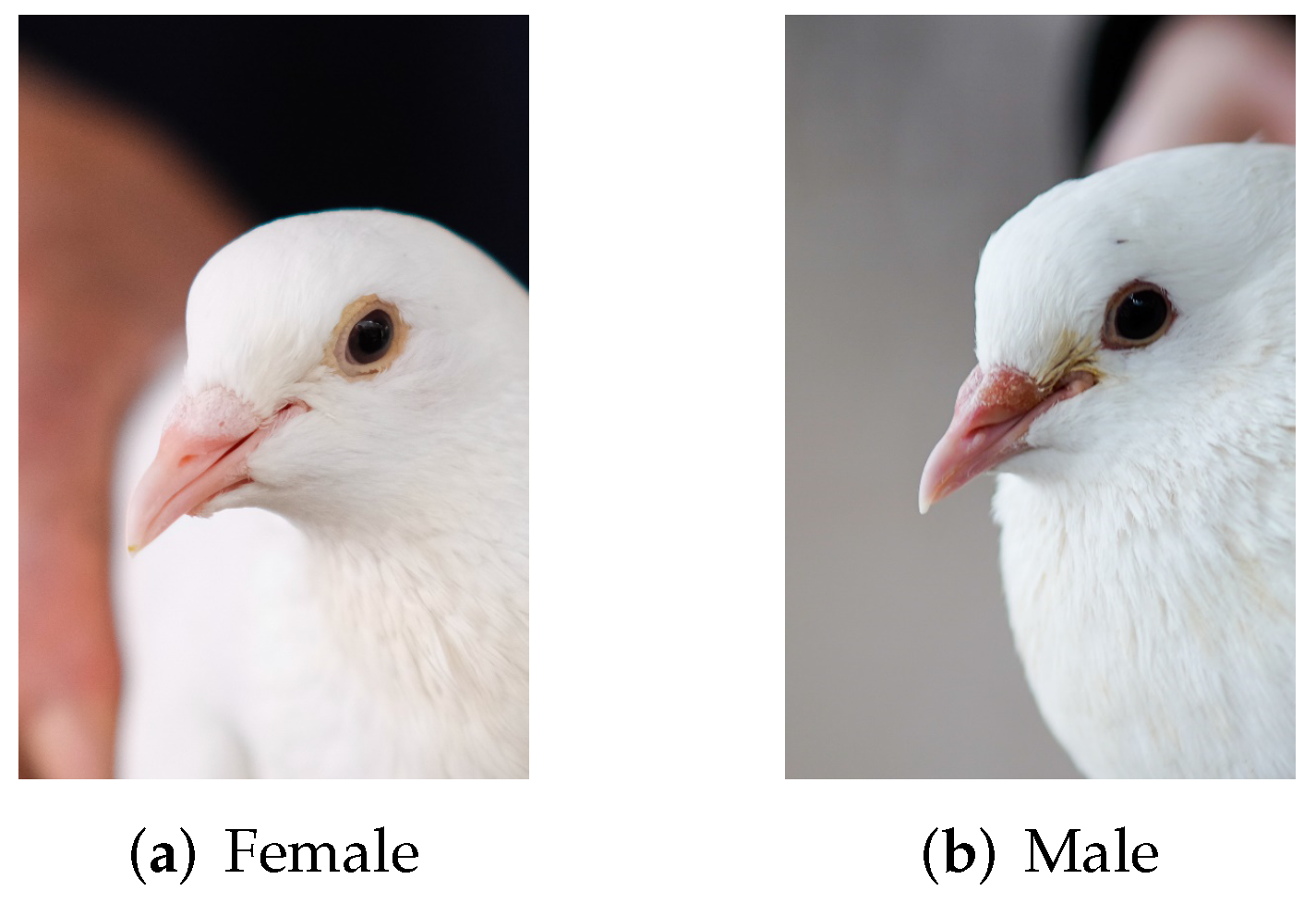

- Based on an analysis of subtle differences in biological characteristics, a detection area for pigeon sex characteristics is proposed. This area correlates strongly with the pigeon's sex, and images of it are easy to obtain during breeding;

- A feature enhancement method based on edge detection is proposed. The color distribution and edge contour information of the image are extracted by stripping the brightness information and applying edge detection, respectively, and are then fused into a new enhanced image as required.

2. Materials and Data

2.1. Experimental Equipment

2.2. Image and Data Acquisition

3. Build Enhanced Image Data Set

3.1. Image Preprocessing

3.2. Data Set Annotation Based on Eye-Beak Compound Area

3.3. Generate Enhanced Image Data Set

3.3.1. Extract Color Information

- h—hue value;

- s—saturation value;

- v—brightness value;

- R—red channel value;

- G—green channel value;

- B—blue channel value;

- max—the maximum value of R, G, and B;

- min—the minimum value of R, G, and B.
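
The symbols above are those of the standard RGB-to-HSV conversion. As a minimal sketch, assuming R, G, and B are normalized to [0, 1] and hue is expressed in degrees (the scaling used in the original equations may differ), the conversion can be written as follows. The function name `rgb_to_hsv` is illustrative only.

```python
def rgb_to_hsv(r, g, b):
    """Convert R, G, B in [0, 1] to (h, s, v).

    h is in degrees [0, 360); s and v are in [0, 1].
    Assumption: this is the textbook conversion, not necessarily
    the exact scaling used in the paper.
    """
    mx = max(r, g, b)   # "max" in the definitions above
    mn = min(r, g, b)   # "min" in the definitions above
    delta = mx - mn

    v = mx                                   # brightness
    s = 0.0 if mx == 0 else delta / mx       # saturation

    if delta == 0:
        h = 0.0                              # hue undefined for grays; 0 by convention
    elif mx == r:
        h = (60.0 * (g - b) / delta) % 360.0
    elif mx == g:
        h = 60.0 * (b - r) / delta + 120.0
    else:
        h = 60.0 * (r - g) / delta + 240.0
    return h, s, v


print(rgb_to_hsv(1.0, 0.0, 0.0))  # (0.0, 1.0, 1.0)
```

Separating v from h and s in this way is what allows the brightness information to be stripped while the color distribution is kept.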

3.3.2. Edge Information Extraction

- Gray—the brightness value of the grayscale image;

- R—the brightness value of the red channel in the color image;

- G—the brightness value of the green channel in the color image;

- B—the brightness value of the blue channel in the color image.
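
A common way to obtain Gray from R, G, and B is a weighted sum of the three channels. The sketch below assumes the widely used ITU-R BT.601 weights (0.299, 0.587, 0.114) and, purely for illustration, a 3×3 Sobel operator as the edge detector. Neither choice is confirmed by the text here, and the function names `to_gray` and `sobel_magnitude` are hypothetical.

```python
import numpy as np


def to_gray(rgb):
    """Weighted-sum grayscale of an H x W x 3 array in R, G, B order.

    Assumption: BT.601 weights; the paper's coefficients may differ.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    return 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]


def sobel_magnitude(gray):
    """Gradient magnitude from 3x3 Sobel kernels (illustrative edge detector).

    The border is handled by replicating edge pixels, so the output
    has the same shape as the input.
    """
    g = np.pad(np.asarray(gray, dtype=np.float64), 1, mode="edge")
    # Horizontal gradient: right column minus left column, weights 1-2-1.
    gx = (g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:]) - (
        g[:-2, :-2] + 2 * g[1:-1, :-2] + g[2:, :-2]
    )
    # Vertical gradient: bottom row minus top row, weights 1-2-1.
    gy = (g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:]) - (
        g[:-2, :-2] + 2 * g[:-2, 1:-1] + g[:-2, 2:]
    )
    return np.hypot(gx, gy)


# A vertical step edge: left half dark, right half bright.
step = np.zeros((4, 4))
step[:, 2:] = 1.0
edges = sobel_magnitude(step)  # non-zero only in the two columns next to the step
```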

3.3.3. Generate Enhanced Image

- Weight of color information in the enhanced image;

- Weight of edge information in the enhanced image;

- Hue channel of the color information;

- Saturation channel of the color information;

- Brightness channel of the color information;

- Brightness channel of the edge information.
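
The fusion equation itself is not reproduced in this text, so the following is only one plausible reading of the quantities listed above: hue and saturation are taken from the color information, and the brightness of the enhanced image is a weighted sum of the two brightness channels. The names `fuse_pixel`, `w_color`, and `w_edge`, and the default weights of 0.5, are hypothetical.

```python
import colorsys


def fuse_pixel(h, s, v_color, v_edge, w_color=0.5, w_edge=0.5):
    """Fuse one pixel of color and edge information (all values in [0, 1]).

    Assumption: hue and saturation pass through unchanged, and brightness
    is the weighted sum w_color * v_color + w_edge * v_edge, clipped to [0, 1].
    This is an illustrative guess, not the paper's stated formula.
    """
    v = w_color * v_color + w_edge * v_edge
    v = min(1.0, max(0.0, v))
    return h, s, v


# Pure red color information blended with a weak edge response.
h, s, v = fuse_pixel(0.0, 1.0, v_color=0.8, v_edge=0.2)
rgb = colorsys.hsv_to_rgb(h, s, v)  # back to RGB for saving or display
```

Raising `w_edge` relative to `w_color` in this sketch makes contours more prominent at the cost of the original shading, which is the trade-off the two weights are meant to control.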

4. Test and Result Analysis

4.1. Model Training

4.2. Results and Analysis

- Tp—number of male samples predicted as male;

- Tn—number of female samples predicted as female;

- Fp—number of female samples predicted as male;

- Fn—number of male samples predicted as female;

- A—accuracy of the model;

- P—precision of the model;

- R—recall rate of the model;

- F1 Score—F1 score of the model.
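
These quantities combine in the usual way for binary classification. The sketch below assumes males are the positive class (so Tp counts males predicted as males, Fp counts females predicted as males, and so on) and uses the standard definitions of accuracy, precision, recall, and F1 score. The function name is illustrative. The example uses the Group 4 counts from the results table (Tp = 88, Tn = 89, Fp = 2, Fn = 1).

```python
def classification_metrics(tp, tn, fp, fn):
    """Return (accuracy, precision, recall, f1) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


# Group 4: compound area labeled, enhanced images.
a, p, r, f1 = classification_metrics(tp=88, tn=89, fp=2, fn=1)
print(round(a, 2), round(p, 2), round(r, 2), round(f1, 2))  # 0.98 0.98 0.99 0.98
```

Applying the same function to the counts of the other three groups reproduces the rounded values reported for them as well.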

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Original images and detection results for Groups 1–4 (images not reproduced).

| Group Number | Label the Compound Area? | Is the Image Enhanced? | Total Number of Detected Targets | Tp | Tn | Fp | Fn |
|---|---|---|---|---|---|---|---|
| 1 | False | False | 204 | 59 | 76 | 36 | 33 |
| 2 | False | True | 199 | 72 | 74 | 21 | 32 |
| 3 | True | False | 180 | 84 | 86 | 6 | 4 |
| 4 | True | True | 180 | 88 | 89 | 2 | 1 |

| Group Number | Label the Compound Area? | Is the Image Enhanced? | Accuracy | Precision | Recall Rate | F1 Score |
|---|---|---|---|---|---|---|
| 1 | False | False | 0.66 | 0.62 | 0.64 | 0.63 |
| 2 | False | True | 0.73 | 0.77 | 0.69 | 0.73 |
| 3 | True | False | 0.94 | 0.93 | 0.95 | 0.94 |
| 4 | True | True | 0.98 | 0.98 | 0.99 | 0.98 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, S.; Tang, Y.; Zou, X.; Huo, H.; Hu, K.; Hu, B.; Pan, Y. Identification and Detection of Biological Information on Tiny Biological Targets Based on Subtle Differences. Machines 2022, 10, 996. https://doi.org/10.3390/machines10110996
