Velocity Estimation and Cost Map Generation for Dynamic Obstacle Avoidance of ROS Based AMR

Abstract

1. Introduction

2. Related Works

2.1. Navigation Framework via ROS

2.2. Cost Map with Multi-Layer

2.3. Global Path Planner

2.4. Local Path Planner

2.5. Velocity Obstacle

3. Obstacle Detection and Estimation

3.1. System Method Workflow

3.2. Tracking of Image Objects

3.3. Clustering by K-Means
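The clustering step named in this heading can be illustrated with plain k-means (Lloyd's iterations). This is a minimal sketch, not the authors' implementation. It assumes the input is a list of 2D obstacle points (for example, laser-scan hits) and that the number of clusters `k` is known in advance. The function name `kmeans_2d` and the deterministic initialization are hypothetical choices made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def kmeans_2d(points: List[Point], k: int, iters: int = 100) -> Tuple[List[Point], List[int]]:
    """Hypothetical helper: cluster 2D points with Lloyd's k-means.

    Returns (centroids, labels), where labels[i] is the cluster of points[i].
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    # Assumption: deterministic init that picks k points spread through the input order.
    step = len(points) / k
    centroids = [points[int(i * step)] for i in range(k)]
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: label each point with its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments unchanged, so the centroids are stable
        labels = new_labels
        # Update step: move each centroid to the mean of its member points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster becomes empty
                centroids[c] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return centroids, labels
```

Each resulting centroid would serve as an obstacle position measurement. Lloyd's method is used here only because it is short; other k-means variants converge to similar partitions on well-separated data.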

3.4. Radius Calculation of Obstacle
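One simple way to turn a cluster into a disc-shaped obstacle is sketched below. It assumes, for illustration only, that the radius is the largest distance from the cluster centroid to any member point, plus an optional safety margin. Both the definition and the name `obstacle_radius` are hypothetical and may not match the paper's formula.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def obstacle_radius(members: List[Point], centroid: Point, margin: float = 0.0) -> float:
    """Hypothetical helper: radius of the centroid-centred circle enclosing all points.

    `margin` is an assumed safety inflation (e.g. for sensor noise), not a paper value.
    """
    if not members:
        raise ValueError("cluster has no points")
    return max(math.dist(p, centroid) for p in members) + margin
```

A centroid-centred enclosing circle is slightly larger than the true minimum enclosing circle, which errs on the conservative side for collision avoidance.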

3.5. Velocity Estimation of Moving Obstacle

3.5.1. Kalman Filter Modeling

3.5.2. Linear Acceleration Model

3.5.3. Process Update of Kalman Filter

- The Kalman filter prediction estimate:

- Correction error for Kalman filter:
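The prediction and correction stages listed above can be sketched with a standard discrete Kalman filter under a linear (constant-)acceleration model. This is an illustrative sketch under stated assumptions, not the paper's filter. Each axis has the state [position, velocity, acceleration], and only position (the k-means centroid) is measured. Velocity and acceleration start at 0.0, as in the parameter table. The x and y axes are assumed to be decoupled, and the noise values `q` (with Q = qI) and `r` are hypothetical tuning parameters. The class name `AxisKalmanCA` is likewise hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def _matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product (avoids a NumPy dependency)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def _transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


class AxisKalmanCA:
    """Hypothetical one-axis Kalman filter with a constant-acceleration model.

    State is [position, velocity, acceleration]; only position is measured.
    Assumption: q (process noise, Q = q * I) and r (measurement noise) are
    illustrative tuning values, not parameters taken from the paper.
    """

    def __init__(self, pos0: float, q: float = 1e-2, r: float = 1e-2) -> None:
        # Initial state as in the parameter table: position from k-means, v = a = 0.
        self.x = [pos0, 0.0, 0.0]
        self.P = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
        self.q = q
        self.r = r

    def predict(self, dt: float) -> None:
        """Prediction: x = F x, P = F P F^T + Q."""
        F = [[1.0, dt, 0.5 * dt * dt],
             [0.0, 1.0, dt],
             [0.0, 0.0, 1.0]]
        self.x = [sum(F[i][k] * self.x[k] for k in range(3)) for i in range(3)]
        self.P = _matmul(_matmul(F, self.P), _transpose(F))
        for i in range(3):
            self.P[i][i] += self.q

    def update(self, z: float) -> None:
        """Correction with a position measurement z (H = [1, 0, 0])."""
        innovation = z - self.x[0]
        s = self.P[0][0] + self.r  # innovation variance H P H^T + R
        gain = [self.P[i][0] / s for i in range(3)]  # Kalman gain K = P H^T / S
        self.x = [self.x[i] + gain[i] * innovation for i in range(3)]
        # P = (I - K H) P: row i subtracts K[i] times row 0 of the prior P.
        row0 = list(self.P[0])
        self.P = [[self.P[i][j] - gain[i] * row0[j] for j in range(3)] for i in range(3)]
```

For a 2D obstacle, one instance per axis yields the vx and vy estimates. This is valid only under the decoupling assumption above. Because the measurement is a scalar position, the innovation variance is a scalar and no matrix inverse is needed.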

4. Velocity Obstacle Layer Creation

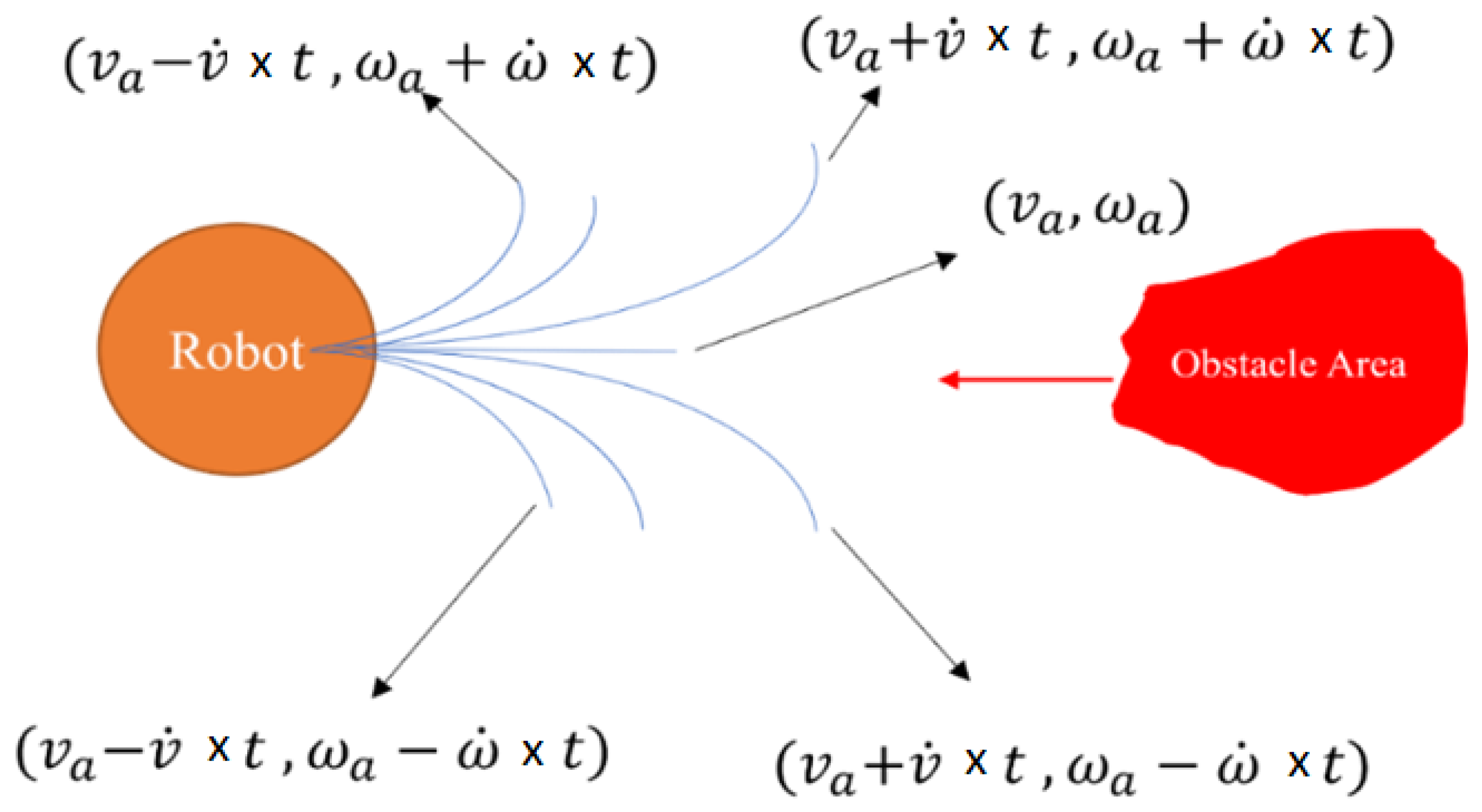

4.1. Velocity Obstacle Area
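The velocity obstacle named in this section can be illustrated with the classic collision-cone test. In that test, a robot velocity is in the VO if the relative velocity points into the cone from the robot to a disc whose radius is the sum of the robot and obstacle radii. This is a minimal sketch that assumes disc-shaped bodies and an obstacle that keeps its estimated velocity. The function name `in_velocity_obstacle` is hypothetical.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def in_velocity_obstacle(p_robot: Vec, v_robot: Vec, r_robot: float,
                         p_obs: Vec, v_obs: Vec, r_obs: float) -> bool:
    """Hypothetical helper: True if v_robot lies in the (untruncated) VO of the obstacle.

    Assumes both bodies are discs and the obstacle keeps velocity v_obs.
    """
    # Line of sight to the obstacle and robot velocity relative to the obstacle.
    px, py = p_obs[0] - p_robot[0], p_obs[1] - p_robot[1]
    vx, vy = v_robot[0] - v_obs[0], v_robot[1] - v_obs[1]
    r = r_robot + r_obs  # radius of the combined (Minkowski-sum) disc
    dist = math.hypot(px, py)
    if dist <= r:
        return True  # already overlapping: treat every velocity as colliding
    speed = math.hypot(vx, vy)
    if speed == 0.0:
        return False  # no relative motion, so the gap never closes
    half_angle = math.asin(r / dist)  # half-width of the collision cone
    cos_angle = (px * vx + py * vy) / (dist * speed)
    angle = math.acos(max(-1.0, min(1.0, cos_angle)))  # clamp for rounding
    return angle <= half_angle
```

Note that a stationary robot can still be inside the VO when the obstacle is heading toward it, because the test uses relative velocity.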

4.2. The Problem of Velocity Obstacles

4.3. Truncation Area Enhancement
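A common way to make a velocity obstacle less conservative is to truncate it with a time horizon τ, so that only collisions predicted within τ seconds count. Whether this matches the paper's truncation area is an assumption. The sketch finds the time of closest approach, clamps it to [0, τ], and checks the gap at that time against the combined radius. The function name `in_truncated_vo` and the parameter `tau` are hypothetical.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def in_truncated_vo(p_rel: Vec, v_rel: Vec, r: float, tau: float) -> bool:
    """Hypothetical helper: True if the discs come within r of each other within tau seconds.

    p_rel: obstacle position minus robot position.
    v_rel: robot velocity minus obstacle velocity.
    r:     sum of the robot and obstacle radii.
    tau:   assumed time horizon in seconds.
    """
    px, py = p_rel
    vx, vy = v_rel
    vv = vx * vx + vy * vy
    # Time of closest approach, clamped to the horizon [0, tau].
    t = 0.0 if vv == 0.0 else max(0.0, min(tau, (px * vx + py * vy) / vv))
    # Remaining gap after the robot has moved by v_rel * t relative to the obstacle.
    dx, dy = px - vx * t, py - vy * t
    return math.hypot(dx, dy) < r
```

With a large `tau`, this reduces to the untruncated collision-cone test. A small `tau` frees velocities that would collide only far in the future, which an obstacle-velocity estimate cannot predict reliably anyway.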

5. Experimental Results

5.1. Default Dynamic Obstacle Avoidance

5.2. VO for Dynamic Obstacle Avoidance

5.3. Multi-Dynamic Obstacle Avoidance with VO

5.4. Actual Dynamic Obstacle Avoidance

5.5. Performance Comparison

6. Discussion and Conclusions

6.1. Simulation and Implementation

6.2. Computation Resources

6.3. Contribution and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Initial Setting of Parameters

| Parameter | Initial Value | Parameter | Initial Value |
|---|---|---|---|
| initial position x | From k-means | initial acceleration ax | 0.0 |
| initial position y | From k-means | initial acceleration ay | 0.0 |
| initial velocity vx | 0.0 | initial velocity vy | 0.0 |

| Method | Avg. Cost (s) | Min. Cost (s) | Max. Cost (s) |
|---|---|---|---|
| VO | 31.64 | 26.8 | 40.6 |
| Non-VO | 41.2 | 31.1 | 58.6 |
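As a worked reading of the cost table, the relative reduction of VO with respect to Non-VO follows directly from the listed values, for the average case and the worst (maximum) case:

$$
\frac{41.2 - 31.64}{41.2} = \frac{9.56}{41.2} \approx 0.232, \qquad
\frac{58.6 - 40.6}{58.6} = \frac{18.0}{58.6} \approx 0.307
$$

That is, VO has roughly 23% lower average cost and roughly 31% lower maximum cost in these trials.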

| Method | Avg. Distance (m) | Min. Distance (m) | Max. Distance (m) |
|---|---|---|---|
| VO | 5.901 | 4.839 | 7.534 |
| Non-VO | 5.5087 | 4.7283 | 6.033 |

| Method | Avg. Distance (m) | Min. Distance (m) | Max. Distance (m) |
|---|---|---|---|
| VO | 0.651 | 0.463 | 0.968 |
| Non-VO | 0.284 | 0.265 | 0.319 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, C.S.; Lin, C.J.; Lai, C.C.; Lin, S.Y. Velocity Estimation and Cost Map Generation for Dynamic Obstacle Avoidance of ROS Based AMR. Machines 2022, 10, 501. https://doi.org/10.3390/machines10070501
