A Narrative Review of the Use of Artificial Intelligence in Breast, Lung, and Prostate Cancer

Abstract

1. Introduction

2. Materials and Methods

3. Breast Cancer Screening

4. Lung Cancer Screening

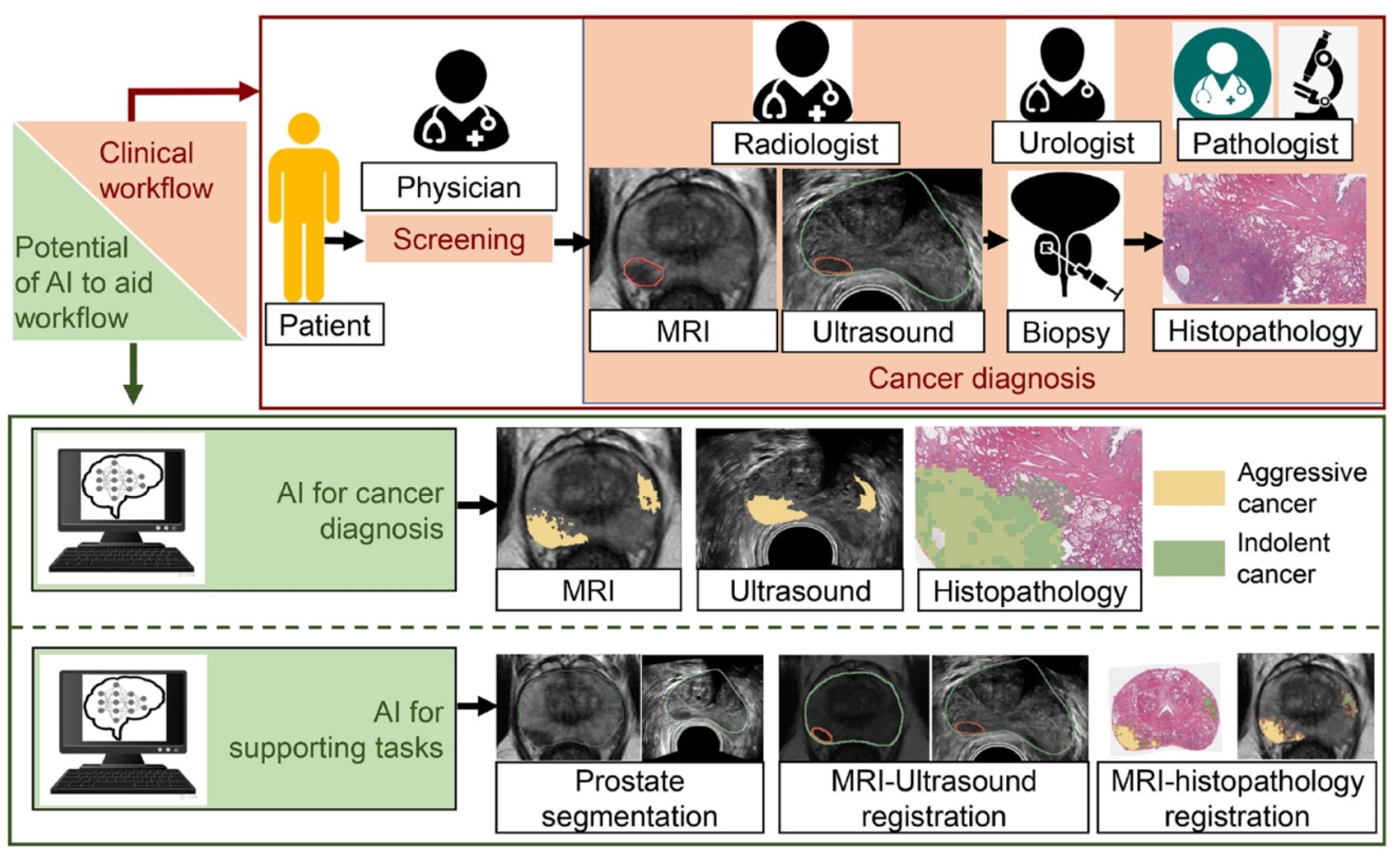

5. Prostate Cancer Screening

6. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Term | Abbreviation | Definition |
|---|---|---|
| Artificial Intelligence | AI | An overarching term referring to the ability of a machine to perform intelligent tasks such as decision-making. |
| Machine Learning | ML | A subset of artificial intelligence referring to the ability of a machine to make predictions based on trends and patterns. |
| Deep Learning | DL | A subset of machine learning referring to the use of neural networks to develop predictions. |
| Convolutional Neural Network | CNN | A type of algorithm used in deep learning that relies on a feed-forward mechanism and is used in object identification. DCNNs and RCNNs are specific types of CNNs. |
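To make the CNN entry in the table above more concrete, the following is a minimal sketch of a single feed-forward pass through one convolutional stage (convolution, ReLU activation, max pooling). It is an illustration only and is not taken from any study in this review: the function names, the 5×5 toy image, and the hand-picked edge-detecting kernel are all hypothetical, and a real CNN would learn its kernels from data and stack many such stages.

```python
# Illustrative sketch only: one feed-forward CNN stage in pure Python.
# All names, the toy image, and the kernel are hypothetical assumptions.

def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Element-wise rectified linear unit: negative responses become 0."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling over size x size windows."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in cols
        ]
        for i in rows
    ]

def forward(image, kernel):
    """Data flows in one direction only: input -> conv -> ReLU -> pool."""
    return max_pool(relu(conv2d(image, kernel)))

# Toy 5x5 "image" with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked 2x2 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

result = forward(image, kernel)
print(result)  # [[2, 0], [2, 0]]
```

The non-zero entries in the pooled output mark the region containing the edge, which is the sense in which a convolutional stage supports object identification: each kernel acts as a local pattern detector whose responses are passed forward to later layers.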

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Patel, K.; Huang, S.; Rashid, A.; Varghese, B.; Gholamrezanezhad, A. A Narrative Review of the Use of Artificial Intelligence in Breast, Lung, and Prostate Cancer. Life 2023, 13, 2011. https://doi.org/10.3390/life13102011
