Artificial Intelligence-Powered Quality Assurance: Transforming Diagnostics, Surgery, and Patient Care—Innovations, Limitations, and Future Directions

Abstract

1. Introduction

2. Methodology of This Review

3. AI in Diagnostics

3.1. Radiology

3.1.1. Vetting of Medical Imaging Referrals

3.1.2. Automated Image Quality Assessment

3.1.3. Automated Medical Image Segmentation

3.1.4. Emergency and Trauma Radiology

3.2. Endoscopy

3.2.1. Detection of Lesions and Neoplasm

3.2.2. Determining Depth of Cancer Invasion

3.2.3. Reducing Blind Spot Rate

3.2.4. Diagnostic Challenges of Misclassification in AI-Assisted Endoscopy

3.3. Pathology

3.3.1. Automated Detection of Out-of-Focus Areas

3.3.2. Automated Cell Quantification

3.3.3. Linking Morphological Features to Molecular and Genomic Profiles

3.3.4. Prognosis Prediction

3.3.5. Optimizing AI for Efficiency, Affordability, and User Experience

3.3.6. Clinical Validation of AI-Based Pathology Tool

4. AI in Treatment

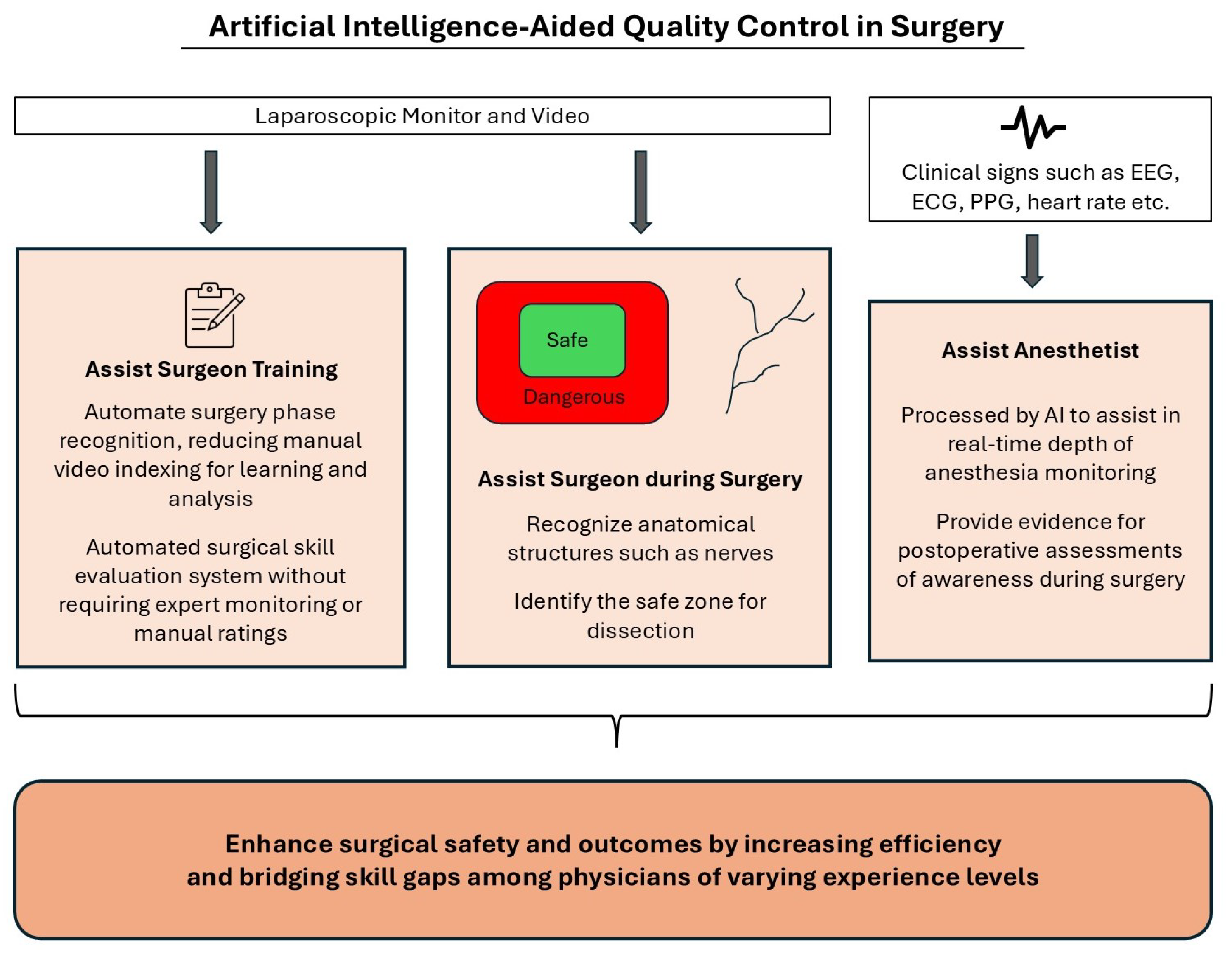

4.1. Surgery

4.1.1. Anatomical Structure Recognition

4.1.2. Surgical Workflow Recognition

4.1.3. Surgical Skill Assessment

4.1.4. Depth of Anesthesia Monitoring

5. Healthcare Applications and Devices for Personalized Treatment

6. Challenges of AI in Healthcare

7. Guidelines for AI-Driven Quality Control in the Medical Field

8. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

- Collins, F.S.; Varmus, H. A new initiative on precision medicine. N. Engl. J. Med. 2015, 372, 793–795. [Google Scholar] [CrossRef] [PubMed]

- Lee, K.Y.; Kim, J. Artificial intelligence technology trends and IBM Watson references in the medical field. Korean Med. Educ. Rev. 2016, 18, 51–57. [Google Scholar] [CrossRef]

- Underdahl, L.; Ditri, M.; Duthely, L.M. Physician burnout: Evidence-based roadmaps to prioritizing and supporting personal wellbeing. J. Healthc. Leadersh. 2024, 16, 15–27. [Google Scholar] [CrossRef]

- West, C.P.; Shanafelt, T.D.; Kolars, J.C. Quality of life, burnout, educational debt, and medical knowledge among internal medicine residents. JAMA 2011, 306, 952–960. [Google Scholar] [CrossRef]

- Kerlin, M.P.; McPeake, J.; Mikkelsen, M.E. Burnout and joy in the profession of critical care medicine. Annu. Update Intensive Care Emerg. Med. 2020, 2020, 633–642. [Google Scholar]

- Bera, K.; Schalper, K.A.; Rimm, D.L.; Velcheti, V.; Madabhushi, A. Artificial intelligence in digital pathology—New tools for diagnosis and precision oncology. Nat. Rev. Clin. Oncol. 2019, 16, 703–715. [Google Scholar] [CrossRef] [PubMed]

- Cambridge University Press. Artificial Intelligence. Cambridge English Dictionary. Available online: https://dictionary.cambridge.org/dictionary/english/artificial-intelligence (accessed on 20 February 2025).

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695. [Google Scholar] [CrossRef]

- Mintz, Y.; Brodie, R. Introduction to artificial intelligence in medicine. Minim. Invasive Ther. Allied Technol. 2019, 28, 73–81. [Google Scholar] [CrossRef]

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Networks Learn. Syst. 2021, 33, 6999–7019. [Google Scholar] [CrossRef]

- Schmidt, R.M. Recurrent neural networks (rnns): A gentle introduction and overview. arXiv 2019, arXiv:1912.05911. [Google Scholar]

- Albahra, S.; Gorbett, T.; Robertson, S.; D’Aleo, G.; Kumar, S.V.S.; Ockunzzi, S.; Lallo, D.; Hu, B.; Rashidi, H.H. Artificial intelligence and machine learning overview in pathology & laboratory medicine: A general review of data preprocessing and basic supervised concepts. In Seminars in Diagnostic Pathology; Elsevier: Amsterdam, The Netherlands, 2023; Volume 40, pp. 71–87. [Google Scholar]

- Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159. [Google Scholar] [CrossRef]

- Plevris, V.; Solorzano, G.; Bakas, N.P.; Ben Seghier, M.E.A. Investigation of performance metrics in regression analysis and machine learning-based prediction models. In Proceedings of the 8th European Congress on Computational Methods in Applied Sciences and Engineering (ECCOMAS Congress 2022), European Community on Computational Methods in Applied Sciences, Oslo, Norway, 5–9 June 2022. [Google Scholar]

- Clarke, J.; Akudjedu, T.; Salifu, Y. Vetting of medical imaging referrals: A scoping review of the radiographers’ role. Radiography 2023, 29, 767–776. [Google Scholar] [CrossRef]

- Potočnik, J.; Thomas, E.; Killeen, R.; Foley, S.; Lawlor, A.; Stowe, J. Automated vetting of radiology referrals: Exploring natural language processing and traditional machine learning approaches. Insights Imaging 2022, 13, 127. [Google Scholar] [CrossRef] [PubMed]

- Ooi, J.; Ng, S.; Khor, C.; Chong, M.; Tay, C.; Koh, H.; Tan, T. Service evaluation of radiographer-led vetting and protocoling of Computed Tomography (CT) scan requests in a Singapore public healthcare institution. Radiography 2023, 29, 139–144. [Google Scholar] [CrossRef] [PubMed]

- Bhatia, N.; Trivedi, H.; Safdar, N.; Heilbrun, M.E. Artificial intelligence in quality improvement: Reviewing uses of artificial intelligence in noninterpretative processes from clinical decision support to education and feedback. J. Am. Coll. Radiol. 2020, 17, 1382–1387. [Google Scholar] [CrossRef]

- Lehnert, B.E.; Bree, R.L. Analysis of appropriateness of outpatient CT and MRI referred from primary care clinics at an academic medical center: How critical is the need for improved decision support? J. Am. Coll. Radiol. 2010, 7, 192–197. [Google Scholar] [CrossRef]

- Alanazi, A.; Cradock, A.; Rainford, L. Development of lumbar spine MRI referrals vetting models using machine learning and deep learning algorithms: Comparison models vs healthcare professionals. Radiography 2022, 28, 674–683. [Google Scholar] [CrossRef]

- Branco, L.R.; Ger, R.B.; Mackin, D.S.; Zhou, S.; Court, L.E.; Layman, R.R. Proof of concept for radiomics-based quality assurance for computed tomography. J. Appl. Clin. Med Phys. 2019, 20, 199–205. [Google Scholar] [CrossRef]

- Adjeiwaah, M.; Garpebring, A.; Nyholm, T. Sensitivity analysis of different quality assurance methods for magnetic resonance imaging in radiotherapy. Phys. Imaging Radiat. Oncol. 2020, 13, 21–27. [Google Scholar] [CrossRef]

- Tracey, J.; Moss, L.; Ashmore, J. Application of synthetic data in the training of artificial intelligence for automated quality assurance in magnetic resonance imaging. Med Phys. 2023, 50, 5621–5629. [Google Scholar] [CrossRef]

- Ho, P.S.; Hwang, Y.S.; Tsai, H.Y. Machine learning framework for automatic image quality evaluation involving a mammographic American College of Radiology phantom. Phys. Medica 2022, 102, 1–8. [Google Scholar] [CrossRef]

- Whaley, J.S.; Pressman, B.D.; Wilson, J.R.; Bravo, L.; Sehnert, W.J.; Foos, D.H. Investigation of the variability in the assessment of digital chest X-ray image quality. J. Digit. Imaging 2013, 26, 217–226. [Google Scholar] [CrossRef] [PubMed]

- Nousiainen, K.; Mäkelä, T.; Piilonen, A.; Peltonen, J.I. Automating chest radiograph imaging quality control. Phys. Medica 2021, 83, 138–145. [Google Scholar] [CrossRef] [PubMed]

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment anything in medical images. Nat. Commun. 2024, 15, 654. [Google Scholar] [CrossRef] [PubMed]

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510. [Google Scholar] [CrossRef] [PubMed]

- Huang, L.; Han, R.; Ai, T.; Yu, P.; Kang, H.; Tao, Q.; Xia, L. Serial quantitative chest CT assessment of COVID-19: A deep learning approach. Radiol. Cardiothorac. Imaging 2020, 2, e200075. [Google Scholar] [CrossRef]

- Wadhwa, A.; Bhardwaj, A.; Verma, V.S. A review on brain tumor segmentation of MRI images. Magn. Reson. Imaging 2019, 61, 247–259. [Google Scholar] [CrossRef]

- Kline, T.L.; Korfiatis, P.; Edwards, M.E.; Blais, J.D.; Czerwiec, F.S.; Harris, P.C.; King, B.F.; Torres, V.E.; Erickson, B.J. Performance of an artificial multi-observer deep neural network for fully automated segmentation of polycystic kidneys. J. Digit. Imaging 2017, 30, 442–448. [Google Scholar] [CrossRef]

- Rosen, M.P.; Siewert, B.; Sands, D.Z.; Bromberg, R.; Edlow, J.; Raptopoulos, V. Value of abdominal CT in the emergency department for patients with abdominal pain. Eur. Radiol. 2003, 13, 418–424. [Google Scholar] [CrossRef]

- Alobeidi, F.; Aviv, R.I. Emergency imaging of intracerebral haemorrhage. New Insights Intracerebral Hemorrhage 2016, 37, 13–26. [Google Scholar]

- Lamb, L.; Kashani, P.; Ryan, J.; Hebert, G.; Sheikh, A.; Thornhill, R.; Fasih, N. Impact of an in-house emergency radiologist on report turnaround time. Can. J. Emerg. Med. 2015, 17, 21–26. [Google Scholar] [CrossRef] [PubMed]

- Chong, S.T.; Robinson, J.D.; Davis, M.A.; Bruno, M.A.; Roberge, E.A.; Reddy, S.; Pyatt, R.S., Jr.; Friedberg, E.B. Emergency radiology: Current challenges and preparing for continued growth. J. Am. Coll. Radiol. 2019, 16, 1447–1455. [Google Scholar] [CrossRef]

- Jalal, S.; Parker, W.; Ferguson, D.; Nicolaou, S. Exploring the role of artificial intelligence in an emergency and trauma radiology department. Can. Assoc. Radiol. J. 2021, 72, 167–174. [Google Scholar] [CrossRef]

- Levin, S.; Toerper, M.; Hamrock, E.; Hinson, J.S.; Barnes, S.; Gardner, H.; Dugas, A.; Linton, B.; Kirsch, T.; Kelen, G. Machine-learning-based electronic triage more accurately differentiates patients with respect to clinical outcomes compared with the emergency severity index. Ann. Emerg. Med. 2018, 71, 565–574. [Google Scholar] [CrossRef] [PubMed]

- Rava, R.A.; Seymour, S.E.; LaQue, M.E.; Peterson, B.A.; Snyder, K.V.; Mokin, M.; Waqas, M.; Hoi, Y.; Davies, J.M.; Levy, E.I.; et al. Assessment of an artificial intelligence algorithm for detection of intracranial hemorrhage. World Neurosurg. 2021, 150, e209–e217. [Google Scholar] [CrossRef]

- Matsoukas, S.; Scaggiante, J.; Schuldt, B.R.; Smith, C.J.; Chennareddy, S.; Kalagara, R.; Majidi, S.; Bederson, J.B.; Fifi, J.T.; Mocco, J.; et al. Accuracy of artificial intelligence for the detection of intracranial hemorrhage and chronic cerebral microbleeds: A systematic review and pooled analysis. La Radiol. Medica 2022, 127, 1106–1123. [Google Scholar] [CrossRef] [PubMed]

- Kim, D.; MacKinnon, T. Artificial intelligence in fracture detection: Transfer learning from deep convolutional neural networks. Clin. Radiol. 2018, 73, 439–445. [Google Scholar] [CrossRef]

- Dreizin, D.; Zhou, Y.; Fu, S.; Wang, Y.; Li, G.; Champ, K.; Siegel, E.; Wang, Z.; Chen, T.; Yuille, A.L. A multiscale deep learning method for quantitative visualization of traumatic hemoperitoneum at CT: Assessment of feasibility and comparison with subjective categorical estimation. Radiol. Artif. Intell. 2020, 2, e190220. [Google Scholar] [CrossRef]

- Kim, S.; Yoon, H.; Lee, M.J.; Kim, M.J.; Han, K.; Yoon, J.K.; Kim, H.C.; Shin, J.; Shin, H.J. Performance of deep learning-based algorithm for detection of ileocolic intussusception on abdominal radiographs of young children. Sci. Rep. 2019, 9, 19420. [Google Scholar] [CrossRef]

- Soffer, S.; Klang, E.; Shimon, O.; Barash, Y.; Cahan, N.; Greenspana, H.; Konen, E. Deep learning for pulmonary embolism detection on computed tomography pulmonary angiogram: A systematic review and meta-analysis. Sci. Rep. 2021, 11, 15814. [Google Scholar] [CrossRef]

- Katai, H.; Ishikawa, T.; Akazawa, K.; Isobe, Y.; Miyashiro, I.; Oda, I.; Tsujitani, S.; Ono, H.; Tanabe, S.; Fukagawa, T.; et al. Five-year survival analysis of surgically resected gastric cancer cases in Japan: A retrospective analysis of more than 100,000 patients from the nationwide registry of the Japanese Gastric Cancer Association (2001–2007). Gastric Cancer 2018, 21, 144–154. [Google Scholar] [CrossRef] [PubMed]

- Jeon, H.K.; Kim, G.H.; Lee, B.E.; Park, D.Y.; Song, G.A.; Kim, D.H.; Jeon, T.Y. Long-term outcome of endoscopic submucosal dissection is comparable to that of surgery for early gastric cancer: A propensity-matched analysis. Gastric Cancer 2018, 21, 133–143. [Google Scholar] [CrossRef] [PubMed]

- Menon, S.; Trudgill, N. How commonly is upper gastrointestinal cancer missed at endoscopy? A meta-analysis. Endosc. Int. Open 2014, 2, E46–E50. [Google Scholar] [CrossRef]

- Pimenta-Melo, A.R.; Monteiro-Soares, M.; Libânio, D.; Dinis-Ribeiro, M. Missing rate for gastric cancer during upper gastrointestinal endoscopy: A systematic review and meta-analysis. Eur. J. Gastroenterol. Hepatol. 2016, 28, 1041–1049. [Google Scholar] [CrossRef]

- Chadwick, G.; Groene, O.; Riley, S.; Hardwick, R.; Crosby, T.; Hoare, J.; Hanna, G.B.; Greenaway, K.; Cromwell, D.A. Gastric cancers missed during endoscopy in England. Clin. Gastroenterol. Hepatol. 2015, 13, 1264–1270. [Google Scholar] [CrossRef] [PubMed]

- Guda, N.M.; Partington, S.; Vakil, N. Inter-and intra-observer variability in the measurement of length at endoscopy: Implications for the measurement of Barrett’s esophagus. Gastrointest. Endosc. 2004, 59, 655–658. [Google Scholar] [CrossRef]

- Hyun, Y.S.; Han, D.S.; Bae, J.H.; Park, H.S.; Eun, C.S. Interobserver variability and accuracy of high-definition endoscopic diagnosis for gastric intestinal metaplasia among experienced and inexperienced endoscopists. J. Korean Med Sci. 2013, 28, 744–749. [Google Scholar] [CrossRef]

- Zhou, R.; Liu, J.; Zhang, C.; Zhao, Y.; Su, J.; Niu, Q.; Liu, C.; Guo, Z.; Cui, Z.; Zhong, X.; et al. Efficacy of a real-time intelligent quality-control system for the detection of early upper gastrointestinal neoplasms: A multicentre, single-blinded, randomised controlled trial. EClinicalMedicine 2024, 75, 102803. [Google Scholar] [CrossRef]

- Hirasawa, T.; Aoyama, K.; Tanimoto, T.; Ishihara, S.; Shichijo, S.; Ozawa, T.; Ohnishi, T.; Fujishiro, M.; Matsuo, K.; Fujisaki, J.; et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer 2018, 21, 653–660. [Google Scholar] [CrossRef]

- Ikenoyama, Y.; Hirasawa, T.; Ishioka, M.; Namikawa, K.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Yoshio, T.; Tsuchida, T.; Takeuchi, Y.; et al. Detecting early gastric cancer: Comparison between the diagnostic ability of convolutional neural networks and endoscopists. Dig. Endosc. 2021, 33, 141–150. [Google Scholar] [CrossRef]

- Choi, J.; Kim, S.G.; Im, J.P.; Kim, J.S.; Jung, H.C.; Song, I.S. Endoscopic prediction of tumor invasion depth in early gastric cancer. Gastrointest. Endosc. 2011, 73, 917–927. [Google Scholar] [CrossRef] [PubMed]

- Ikehara, H.; Saito, Y.; Matsuda, T.; Uraoka, T.; Murakami, Y. Diagnosis of depth of invasion for early colorectal cancer using magnifying colonoscopy. J. Gastroenterol. Hepatol. 2010, 25, 905–912. [Google Scholar] [CrossRef]

- Tokai, Y.; Yoshio, T.; Aoyama, K.; Horie, Y.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Tsuchida, T.; Hirasawa, T.; Sakakibara, Y.; et al. Application of artificial intelligence using convolutional neural networks in determining the invasion depth of esophageal squamous cell carcinoma. Esophagus 2020, 17, 250–256. [Google Scholar] [CrossRef] [PubMed]

- Choi, J.; Kim, S.; Im, J.; Kim, J.; Jung, H.; Song, I. Comparison of endoscopic ultrasonography and conventional endoscopy for prediction of depth of tumor invasion in early gastric cancer. Endoscopy 2010, 42, 705–713. [Google Scholar] [CrossRef]

- Luo, X.; Wang, J.; Han, Z.; Yu, Y.; Chen, Z.; Huang, F.; Xu, Y.; Cai, J.; Zhang, Q.; Qiao, W.; et al. Artificial intelligence- enhanced white-light colonoscopy with attention guidance predicts colorectal cancer invasion depth. Gastrointest. Endosc. 2021, 94, 627–638. [Google Scholar] [CrossRef]

- Zhu, Y.; Wang, Q.C.; Xu, M.D.; Zhang, Z.; Cheng, J.; Zhong, Y.S.; Zhang, Y.Q.; Chen, W.F.; Yao, L.Q.; Zhou, P.H.; et al. Application of convolutional neural network in the diagnosis of the invasion depth of gastric cancer based on conventional endoscopy. Gastrointest. Endosc. 2019, 89, 806–815. [Google Scholar] [CrossRef]

- Yao, K.; Uedo, N.; Muto, M.; Ishikawa, H. Development of an e-learning system for teaching endoscopists how to diagnose early gastric cancer: Basic principles for improving early detection. Gastric Cancer 2017, 20, 28–38. [Google Scholar] [CrossRef] [PubMed]

- Beg, S.; Ragunath, K.; Wyman, A.; Banks, M.; Trudgill, N.; Pritchard, M.D.; Riley, S.; Anderson, J.; Griffiths, H.; Bhandari, P.; et al. Quality standards in upper gastrointestinal endoscopy: A position statement of the British Society of Gastroenterology (BSG) and Association of Upper Gastrointestinal Surgeons of Great Britain and Ireland (AUGIS). Gut 2017, 66, 1886–1899. [Google Scholar] [CrossRef]

- Moon, H.S. Improving the endoscopic detection rate in patients with early gastric cancer. Clin. Endosc. 2015, 48, 291. [Google Scholar] [CrossRef]

- Takiyama, H.; Ozawa, T.; Ishihara, S.; Fujishiro, M.; Shichijo, S.; Nomura, S.; Miura, M.; Tada, T. Automatic anatomical classification of esophagogastroduodenoscopy images using deep convolutional neural networks. Sci. Rep. 2018, 8, 7497. [Google Scholar] [CrossRef]

- Wu, L.; Zhou, W.; Wan, X.; Zhang, J.; Shen, L.; Hu, S.; Ding, Q.; Mu, G.; Yin, A.; Huang, X.; et al. A deep neural network improves endoscopic detection of early gastric cancer without blind spots. Endoscopy 2019, 51, 522–531. [Google Scholar] [CrossRef] [PubMed]

- Choi, S.J.; Khan, M.A.; Choi, H.S.; Choo, J.; Lee, J.M.; Kwon, S.; Keum, B.; Chun, H.J. Development of artificial intelligence system for quality control of photo documentation in esophagogastroduodenoscopy. Surg. Endosc. 2022, 36, 57–65. [Google Scholar] [CrossRef]

- Lai, E.J.; Calderwood, A.H.; Doros, G.; Fix, O.K.; Jacobson, B.C. The Boston bowel preparation scale: A valid and reliable instrument for colonoscopy-oriented research. Gastrointest. Endosc. 2009, 69, 620–625. [Google Scholar] [CrossRef]

- Zhu, Y.; Xu, Y.; Chen, W.; Zhao, T.; Zheng, S. A CNN-based cleanliness evaluation for bowel preparation in colonoscopy. In Proceedings of the 2019 12th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Suzhou, China, 19–21 October 2019; pp. 1–5. [Google Scholar]

- Yoon, H.J.; Kim, S.; Kim, J.H.; Keum, J.S.; Oh, S.I.; Jo, J.; Chun, J.; Youn, Y.H.; Park, H.; Kwon, I.G.; et al. A lesion-based convolutional neural network improves endoscopic detection and depth prediction of early gastric cancer. J. Clin. Med. 2019, 8, 1310. [Google Scholar] [CrossRef]

- Mori, Y.; Kudo, S.E.; Mohmed, H.E.; Misawa, M.; Ogata, N.; Itoh, H.; Oda, M.; Mori, K. Artificial intelligence and upper gastrointestinal endoscopy: Current status and future perspective. Dig. Endosc. 2019, 31, 378–388. [Google Scholar] [CrossRef]

- Kohlberger, T.; Liu, Y.; Moran, M.; Chen, P.H.C.; Brown, T.; Hipp, J.D.; Mermel, C.H.; Stumpe, M.C. Whole-slide image focus quality: Automatic assessment and impact on ai cancer detection. J. Pathol. Informatics 2019, 10, 39. [Google Scholar] [CrossRef] [PubMed]

- Senaras, C.; Niazi, M.K.K.; Lozanski, G.; Gurcan, M.N. DeepFocus: Detection of out-of-focus regions in whole slide digital images using deep learning. PLoS ONE 2018, 13, e0205387. [Google Scholar] [CrossRef] [PubMed]

- Balkenhol, M.C.; Tellez, D.; Vreuls, W.; Clahsen, P.C.; Pinckaers, H.; Ciompi, F.; Bult, P.; Van Der Laak, J.A. Deep learning assisted mitotic counting for breast cancer. Lab. Investig. 2019, 99, 1596–1606. [Google Scholar] [CrossRef]

- Cireşan, D.C.; Giusti, A.; Gambardella, L.M.; Schmidhuber, J. Mitosis detection in breast cancer histology images with deep neural networks. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2013: 16th International Conference, Nagoya, Japan, 22–26 September 2013, Proceedings, Part II 16; Springer: Berlin/Heidelberg, Germany, 2013; pp. 411–418. [Google Scholar]

- Turkki, R.; Linder, N.; Kovanen, P.E.; Pellinen, T.; Lundin, J. Antibody-supervised deep learning for quantification of tumor-infiltrating immune cells in hematoxylin and eosin stained breast cancer samples. J. Pathol. Inform. 2016, 7, 38. [Google Scholar] [CrossRef]

- Kang, L.I.; Sarullo, K.; Marsh, J.N.; Lu, L.; Khonde, P.; Ma, C.; Haritunians, T.; Mujukian, A.; Mengesha, E.; McGovern, D.P.; et al. Development of a deep learning algorithm for Paneth cell density quantification for inflammatory bowel disease. EBioMedicine 2024, 110, 105440. [Google Scholar] [CrossRef]

- Dedeurwaerdere, F.; Claes, K.B.; Van Dorpe, J.; Rottiers, I.; Van der Meulen, J.; Breyne, J.; Swaerts, K.; Martens, G. Comparison of microsatellite instability detection by immunohistochemistry and molecular techniques in colorectal and endometrial cancer. Sci. Rep. 2021, 11, 12880. [Google Scholar] [CrossRef] [PubMed]

- Greenson, J.K.; Bonner, J.D.; Ben-Yzhak, O.; Cohen, H.I.; Miselevich, I.; Resnick, M.B.; Trougouboff, P.; Tomsho, L.D.; Kim, E.; Low, M.; et al. Phenotype of microsatellite unstable colorectal carcinomas: Well-differentiated and focally mucinous tumors and the absence of dirty necrosis correlate with microsatellite instability. Am. J. Surg. Pathol. 2003, 27, 563–570. [Google Scholar] [CrossRef]

- Yamashita, R.; Long, J.; Longacre, T.; Peng, L.; Berry, G.; Martin, B.; Higgins, J.; Rubin, D.L.; Shen, J. Deep learning model for the prediction of microsatellite instability in colorectal cancer: A diagnostic study. Lancet Oncol. 2021, 22, 132–141. [Google Scholar] [CrossRef]

- Kather, J.N.; Pearson, A.T.; Halama, N.; Jäger, D.; Krause, J.; Loosen, S.H.; Marx, A.; Boor, P.; Tacke, F.; Neumann, U.P.; et al. Deep learning can predict microsatellite instability directly from histology in gastrointestinal cancer. Nat. Med. 2019, 25, 1054–1056. [Google Scholar] [CrossRef] [PubMed]

- Coudray, N.; Ocampo, P.S.; Sakellaropoulos, T.; Narula, N.; Snuderl, M.; Fenyö, D.; Moreira, A.L.; Razavian, N.; Tsirigos, A. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat. Med. 2018, 24, 1559–1567. [Google Scholar] [CrossRef] [PubMed]

- Chen, M.; Zhang, B.; Topatana, W.; Cao, J.; Zhu, H.; Juengpanich, S.; Mao, Q.; Yu, H.; Cai, X. Classification and mutation prediction based on histopathology H&E images in liver cancer using deep learning. NPJ Precis. Oncol. 2020, 4, 14. [Google Scholar]

- Echle, A.; Rindtorff, N.T.; Brinker, T.J.; Luedde, T.; Pearson, A.T.; Kather, J.N. Deep learning in cancer pathology: A new generation of clinical biomarkers. Br. J. Cancer 2021, 124, 686–696. [Google Scholar] [CrossRef]

- Donnem, T.; Hald, S.M.; Paulsen, E.E.; Richardsen, E.; Al-Saad, S.; Kilvaer, T.K.; Brustugun, O.T.; Helland, A.; Lund-Iversen, M.; Poehl, M.; et al. Stromal CD8+ T-cell density—a promising supplement to TNM staging in non–small cell lung cancer. Clin. Cancer Res. 2015, 21, 2635–2643. [Google Scholar] [CrossRef]

- Kleppe, A.; Albregtsen, F.; Vlatkovic, L.; Pradhan, M.; Nielsen, B.; Hveem, T.S.; Askautrud, H.A.; Kristensen, G.B.; Nesbakken, A.; Trovik, J.; et al. Chromatin organisation and cancer prognosis: A pan-cancer study. Lancet Oncol. 2018, 19, 356–369. [Google Scholar] [CrossRef]

- Veta, M.; Kornegoor, R.; Huisman, A.; Verschuur-Maes, A.H.; Viergever, M.A.; Pluim, J.P.; Van Diest, P.J. Prognostic value of automatically extracted nuclear morphometric features in whole slide images of male breast cancer. Mod. Pathol. 2012, 25, 1559–1565. [Google Scholar] [CrossRef]

- Bychkov, D.; Linder, N.; Turkki, R.; Nordling, S.; Kovanen, P.E.; Verrill, C.; Walliander, M.; Lundin, M.; Haglund, C.; Lundin, J. Deep learning based tissue analysis predicts outcome in colorectal cancer. Sci. Rep. 2018, 8, 3395. [Google Scholar] [CrossRef]

- Kather, J.N.; Krisam, J.; Charoentong, P.; Luedde, T.; Herpel, E.; Weis, C.A.; Gaiser, T.; Marx, A.; Valous, N.A.; Ferber, D.; et al. Predicting survival from colorectal cancer histology slides using deep learning: A retrospective multicenter study. PLoS Med. 2019, 16, e1002730. [Google Scholar] [CrossRef] [PubMed]

- Yang, J.; Ju, J.; Guo, L.; Ji, B.; Shi, S.; Yang, Z.; Gao, S.; Yuan, X.; Tian, G.; Liang, Y.; et al. Prediction of HER2-positive breast cancer recurrence and metastasis risk from histopathological images and clinical information via multimodal deep learning. Comput. Struct. Biotechnol. J. 2022, 20, 333–342. [Google Scholar] [CrossRef] [PubMed]

- Yamashita, R.; Long, J.; Saleem, A.; Rubin, D.L.; Shen, J. Deep learning predicts postsurgical recurrence of hepatocellular carcinoma from digital histopathologic images. Sci. Rep. 2021, 11, 2047. [Google Scholar] [CrossRef] [PubMed]

- Wang, X.; Zhao, J.; Marostica, E.; Yuan, W.; Jin, J.; Zhang, J.; Li, R.; Tang, H.; Wang, K.; Li, Y.; et al. A pathology foundation model for cancer diagnosis and prognosis prediction. Nature 2024, 634, 970–978. [Google Scholar] [CrossRef]

- Yu, G.; Sun, K.; Xu, C.; Shi, X.H.; Wu, C.; Xie, T.; Meng, R.Q.; Meng, X.H.; Wang, K.S.; Xiao, H.M.; et al. Accurate recognition of colorectal cancer with semi-supervised deep learning on pathological images. Nat. Commun. 2021, 12, 6311. [Google Scholar] [CrossRef]

- Peikari, M.; Salama, S.; Nofech-Mozes, S.; Martel, A.L. A cluster-then-label semi-supervised learning approach for pathology image classification. Sci. Rep. 2018, 8, 7193. [Google Scholar] [CrossRef]

- Pan, Y.; Gou, F.; Xiao, C.; Liu, J.; Zhou, J. Semi-supervised recognition for artificial intelligence assisted pathology image diagnosis. Sci. Rep. 2024, 14, 21984. [Google Scholar] [CrossRef]

- Chen, P.H.C.; Gadepalli, K.; MacDonald, R.; Liu, Y.; Kadowaki, S.; Nagpal, K.; Kohlberger, T.; Dean, J.; Corrado, G.S.; Hipp, J.D.; et al. An augmented reality microscope with real-time artificial intelligence integration for cancer diagnosis. Nat. Med. 2019, 25, 1453–1457. [Google Scholar] [CrossRef]

- Lu, M.Y.; Chen, B.; Williamson, D.F.; Chen, R.J.; Zhao, M.; Chow, A.K.; Ikemura, K.; Kim, A.; Pouli, D.; Patel, A.; et al. A multimodal generative AI copilot for human pathology. Nature 2024, 634, 466–473. [Google Scholar] [CrossRef]

- Pulaski, H.; Harrison, S.A.; Mehta, S.S.; Sanyal, A.J.; Vitali, M.C.; Manigat, L.C.; Hou, H.; Madasu Christudoss, S.P.; Hoffman, S.M.; Stanford-Moore, A.; et al. Clinical validation of an AI-based pathology tool for scoring of metabolic dysfunction-associated steatohepatitis. Nat. Med. 2025, 31, 315–322. [Google Scholar] [CrossRef] [PubMed]

- Ichinose, J.; Kobayashi, N.; Fukata, K.; Kanno, K.; Suzuki, A.; Matsuura, Y.; Nakao, M.; Okumura, S.; Mun, M. Accuracy of thoracic nerves recognition for surgical support system using artificial intelligence. Sci. Rep. 2024, 14, 18329. [Google Scholar] [CrossRef]

- Sato, K.; Fujita, T.; Matsuzaki, H.; Takeshita, N.; Fujiwara, H.; Mitsunaga, S.; Kojima, T.; Mori, K.; Daiko, H. Real-time detection of the recurrent laryngeal nerve in thoracoscopic esophagectomy using artificial intelligence. Surg. Endosc. 2022, 36, 5531–5539. [Google Scholar] [CrossRef]

- Kumazu, Y.; Kobayashi, N.; Kitamura, N.; Rayan, E.; Neculoiu, P.; Misumi, T.; Hojo, Y.; Nakamura, T.; Kumamoto, T.; Kurahashi, Y.; et al. Automated segmentation by deep learning of loose connective tissue fibers to define safe dissection planes in robot-assisted gastrectomy. Sci. Rep. 2021, 11, 21198. [Google Scholar] [CrossRef] [PubMed]

- Madani, A.; Namazi, B.; Altieri, M.S.; Hashimoto, D.A.; Rivera, A.M.; Pucher, P.H.; Navarrete-Welton, A.; Sankaranarayanan, G.; Brunt, L.M.; Okrainec, A.; et al. Artificial intelligence for intraoperative guidance: Using semantic segmentation to identify surgical anatomy during laparoscopic cholecystectomy. Ann. Surg. 2022, 276, 363–369. [Google Scholar] [CrossRef]

- Jaskari, J.; Sahlsten, J.; Järnstedt, J.; Mehtonen, H.; Karhu, K.; Sundqvist, O.; Hietanen, A.; Varjonen, V.; Mattila, V.; Kaski, K. Deep learning method for mandibular canal segmentation in dental cone beam computed tomography volumes. Sci. Rep. 2020, 10, 5842. [Google Scholar] [CrossRef] [PubMed]

- Jindanil, T.; Marinho-Vieira, L.E.; de Azevedo-Vaz, S.L.; Jacobs, R. A unique artificial intelligence-based tool for automated CBCT segmentation of mandibular incisive canal. Dentomaxillofacial Radiol. 2023, 52, 20230321. [Google Scholar] [CrossRef] [PubMed]

- Ding, A.S.; Lu, A.; Li, Z.; Sahu, M.; Galaiya, D.; Siewerdsen, J.H.; Unberath, M.; Taylor, R.H.; Creighton, F.X. A self-configuring deep learning network for segmentation of temporal bone anatomy in cone-beam CT imaging. Otolaryngol.-Neck Surg. 2023, 169, 988–998. [Google Scholar] [CrossRef]

- Gillot, M.; Baquero, B.; Le, C.; Deleat-Besson, R.; Bianchi, J.; Ruellas, A.; Gurgel, M.; Yatabe, M.; Al Turkestani, N.; Najarian, K.; et al. Automatic multi-anatomical skull structure segmentation of cone-beam computed tomography scans using 3D UNETR. PLoS ONE 2022, 17, e0275033. [Google Scholar] [CrossRef]

- Pernek, I.; Ferscha, A. A survey of context recognition in surgery. Med. Biol. Eng. Comput. 2017, 55, 1719–1734. [Google Scholar] [CrossRef]

- Kitaguchi, D.; Takeshita, N.; Matsuzaki, H.; Hasegawa, H.; Igaki, T.; Oda, T.; Ito, M. Deep learning-based automatic surgical step recognition in intraoperative videos for transanal total mesorectal excision. Surg. Endosc. 2022, 36, 1143–1151. [Google Scholar] [CrossRef] [PubMed]

- Chadebecq, F.; Lovat, L.B.; Stoyanov, D. Artificial intelligence and automation in endoscopy and surgery. Nat. Rev. Gastroenterol. Hepatol. 2023, 20, 171–182. [Google Scholar] [CrossRef]

- Kitaguchi, D.; Takeshita, N.; Matsuzaki, H.; Oda, T.; Watanabe, M.; Mori, K.; Kobayashi, E.; Ito, M. Automated laparoscopic colorectal surgery workflow recognition using artificial intelligence: Experimental research. Int. J. Surg. 2020, 79, 88–94. [Google Scholar] [CrossRef]

- Cao, J.; Yip, H.C.; Chen, Y.; Scheppach, M.; Luo, X.; Yang, H.; Cheng, M.K.; Long, Y.; Jin, Y.; Chiu, P.W.Y.; et al. Intelligent surgical workflow recognition for endoscopic submucosal dissection with real-time animal study. Nat. Commun. 2023, 14, 6676. [Google Scholar] [CrossRef]

- Nakawala, H.; Bianchi, R.; Pescatori, L.E.; De Cobelli, O.; Ferrigno, G.; De Momi, E. “Deep-Onto” network for surgical workflow and context recognition. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 685–696. [Google Scholar] [CrossRef]

- Long, Y.; Wu, J.Y.; Lu, B.; Jin, Y.; Unberath, M.; Liu, Y.H.; Heng, P.A.; Dou, Q. Relational graph learning on visual and kinematics embeddings for accurate gesture recognition in robotic surgery. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13346–13353. [Google Scholar]

- Van Amsterdam, B.; Funke, I.; Edwards, E.; Speidel, S.; Collins, J.; Sridhar, A.; Kelly, J.; Clarkson, M.J.; Stoyanov, D. Gesture recognition in robotic surgery with multimodal attention. IEEE Trans. Med. Imaging 2022, 41, 1677–1687. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Majewicz Fey, A. Deep learning with convolutional neural network for objective skill evaluation in robot-assisted surgery. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 1959–1970. [Google Scholar] [CrossRef] [PubMed]

- Funke, I.; Mees, S.T.; Weitz, J.; Speidel, S. Video-based surgical skill assessment using 3D convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1217–1225. [Google Scholar] [CrossRef]

- Kitaguchi, D.; Teramura, K.; Matsuzaki, H.; Hasegawa, H.; Takeshita, N.; Ito, M. Automatic purse-string suture skill assessment in transanal total mesorectal excision using deep learning-based video analysis. Bjs Open 2023, 7, zrac176. [Google Scholar] [CrossRef]

- Chan, M.T.; Cheng, B.C.; Lee, T.M.; Gin, T.; CODA Trial Group. BIS-guided anesthesia decreases postoperative delirium and cognitive decline. J. Neurosurg. Anesthesiol. 2013, 25, 33–42. [Google Scholar] [CrossRef]

- Punjasawadwong, Y.; Phongchiewboon, A.; Bunchungmongkol, N. Bispectral index for improving anaesthetic delivery and postoperative recovery. In Cochrane Database of Systematic Reviews; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2014. [Google Scholar]

- Fritz, B.; Maybrier, H.; Avidan, M. Intraoperative electroencephalogram suppression at lower volatile anaesthetic concentrations predicts postoperative delirium occurring in the intensive care unit. Br. J. Anaesth. 2018, 121, 241–248. [Google Scholar] [CrossRef]

- Abenstein, J. Is BIS monitoring cost-effective? In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 7041–7044. [Google Scholar]

- Chowdhury, M.R.; Madanu, R.; Abbod, M.F.; Fan, S.Z.; Shieh, J.S. Deep learning via ECG and PPG signals for prediction of depth of anesthesia. Biomed. Signal Process. Control 2021, 68, 102663. [Google Scholar] [CrossRef]

- Shalbaf, A.; Saffar, M.; Sleigh, J.W.; Shalbaf, R. Monitoring the depth of anesthesia using a new adaptive neurofuzzy system. IEEE J. Biomed. Health Inform. 2017, 22, 671–677. [Google Scholar] [CrossRef] [PubMed]

- Park, Y.; Han, S.H.; Byun, W.; Kim, J.H.; Lee, H.C.; Kim, S.J. A real-time depth of anesthesia monitoring system based on deep neural network with large EDO tolerant EEG analog front-end. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 825–837. [Google Scholar] [CrossRef] [PubMed]

- Gu, Y.; Liang, Z.; Hagihira, S. Use of multiple EEG features and artificial neural network to monitor the depth of anesthesia. Sensors 2019, 19, 2499. [Google Scholar] [CrossRef] [PubMed]

- Ranta, S.O.V.; Hynynen, M.; Räsänen, J. Application of artificial neural networks as an indicator of awareness with recall during general anaesthesia. J. Clin. Monit. Comput. 2002, 17, 53–60. [Google Scholar] [CrossRef]

- Xing, Y.; Yang, K.; Lu, A.; Mackie, K.; Guo, F. Sensors and devices guided by artificial intelligence for personalized pain medicine. Cyborg Bionic Syst. 2024, 5, 160. [Google Scholar] [CrossRef]

- Liu, J.; Wang, X.; Ye, X.; Chen, D. Improved health outcomes of nasopharyngeal carcinoma patients 3 years after treatment by the AI-assisted home enteral nutrition management. Front. Nutr. 2025, 11, 1481073. [Google Scholar] [CrossRef]

- Li, C.; Bian, Y.; Zhao, Z.; Liu, Y.; Guo, Y. Advances in biointegrated wearable and implantable optoelectronic devices for cardiac healthcare. Cyborg Bionic Syst. 2024, 5, 0172. [Google Scholar] [CrossRef]

- Tikhomirov, L.; Semmler, C.; McCradden, M.; Searston, R.; Ghassemi, M.; Oakden-Rayner, L. Medical artificial intelligence for clinicians: The lost cognitive perspective. Lancet Digit. Health 2024, 6, e589–e594. [Google Scholar] [CrossRef]

- Al Kuwaiti, A.; Nazer, K.; Al-Reedy, A.; Al-Shehri, S.; Al-Muhanna, A.; Subbarayalu, A.V.; Al Muhanna, D.; Al-Muhanna, F.A. A review of the role of artificial intelligence in healthcare. J. Pers. Med. 2023, 13, 951. [Google Scholar] [CrossRef] [PubMed]

- Yu, K.H.; Healey, E.; Leong, T.Y.; Kohane, I.S.; Manrai, A.K. Medical Artificial Intelligence and Human Values. N. Engl. J. Med. 2024, 390, 1895–1904. [Google Scholar] [CrossRef] [PubMed]

- Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol. 2017, 2, 230–243. [Google Scholar] [CrossRef]

- Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56. [Google Scholar] [CrossRef]

- Williams, E.; Kienast, M.; Medawar, E.; Reinelt, J.; Merola, A.; Klopfenstein, S.A.I.; Flint, A.R.; Heeren, P.; Poncette, A.S.; Balzer, F.; et al. A standardized clinical data harmonization pipeline for scalable AI application deployment (FHIR-DHP): Validation and usability study. JMIR Med. Inform. 2023, 11, e43847. [Google Scholar] [CrossRef] [PubMed]

- Forcier, M.B.; Gallois, H.; Mullan, S.; Joly, Y. Integrating artificial intelligence into health care through data access: Can the GDPR act as a beacon for policymakers? J. Law Biosci. 2019, 6, 317–335. [Google Scholar] [CrossRef]

- de Hond, A.A.; Kant, I.M.; Fornasa, M.; Cinà, G.; Elbers, P.W.; Thoral, P.J.; Arbous, M.S.; Steyerberg, E.W. Predicting readmission or death after discharge from the ICU: External validation and retraining of a machine learning model. Crit. Care Med. 2023, 51, 291–300. [Google Scholar] [CrossRef]

- Elhaddad, M.; Hamam, S. AI-driven clinical decision support systems: An ongoing pursuit of potential. Cureus 2024, 16, e57728. [Google Scholar] [CrossRef]

- Khanna, N.N.; Maindarkar, M.A.; Viswanathan, V.; Fernandes, J.F.E.; Paul, S.; Bhagawati, M.; Ahluwalia, P.; Ruzsa, Z.; Sharma, A.; Kolluri, R.; et al. Economics of artificial intelligence in healthcare: Diagnosis vs. treatment. Healthcare 2022, 10, 2493. [Google Scholar] [CrossRef]

| Terms | Definition |

|---|---|

| Artificial neural network (ANN) | ANN is a mathematical model that analyzes complex relationships between inputs and outputs. It mimics the human brain by processing various types of data, identifying patterns, and applying them to decision-making tasks [9]. |

| Convolutional neural network (CNN) | CNN is a specialized neural network using convolution structures inspired by the human visual system. It employs local connections, weight sharing, and pooling to reduce complexity, making it particularly effective for image and pattern recognition tasks [10]. |

| Recurrent neural network (RNN) | RNNs process sequential data, such as text, time series, and speech, by using feedback loops to capture temporal dependencies. They are ideal for tasks like speech recognition and video tagging, where understanding the sequence and timing of data is crucial [11]. |

| Terms | Definition |

|---|---|

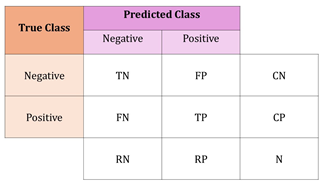

| Confusion Matrix | A tool used to assess the performance of a classification model by comparing its predicted outcomes with the actual results. For binary classification, the matrix is typically 2 × 2, displaying true positives, true negatives, false positives, and false negatives. In multi-class classification tasks, the matrix is extended to include additional rows and columns to account for all classes, showing the model’s performance in terms of these outcomes for each class [12]. |

| |

| N = total number of examples | |

| CN = number of truly negative examples | |

| CP = number of truly positive examples | |

| RN = number of predicted negative examples | |

| RP = number of predicted positive examples | |

| Recall (Sensitivity) | A test’s ability to correctly identify true positives |

| Sensitivity = (TP)/(CP) | |

| Specificity | A test’s ability to correctly identify true negatives |

| Specificity = (TN)/(CN) | |

| Accuracy | Represents the number of correct predictions over all predictions |

| Accuracy = (TN + TP)/(CP + CN) [13] | |

| Precision (positive | Accuracy of positive predictions |

| predictive value) | Precision = (TP)/(RP) |

| Negative predictive value | Accuracy of negative predictions |

| (NPV) | NPV = (TN)/(RN) |

| F1 score | Harmonic mean of precision and recall |

| F1 = 2× (precision × recall)/(precision + recall) [12] | |

| Receiver operating characteristic curve (ROC curve) | A graphical plot that illustrates the performance of a binary classifier system as its discrimination threshold is varied. It is created by plotting the true positive rate (sensitivity) against the false positive rate (1− specificity) at various threshold settings, providing a comprehensive view of the classifier’s performance across different decision thresholds [13]. |

| Area under the ROC Curve (AUROC) | Represents the overall performance of a classification model by assessing its ability to distinguish between positive and negative classes across all decision thresholds. A higher AUC indicates better model discrimination, providing a comprehensive evaluation of the system’s performance over varying operating points, rather than relying on a single sensitivity-specificity pair [13]. |

| Mean Absolute Error (MAE) | Average absolute differences between predicted and actual values. |

| Mean Squared Error (MSE) | Average squared differences between predicted and actual values, emphasizing larger errors [14]. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shin, Y.; Lee, M.; Lee, Y.; Kim, K.; Kim, T. Artificial Intelligence-Powered Quality Assurance: Transforming Diagnostics, Surgery, and Patient Care—Innovations, Limitations, and Future Directions. Life 2025, 15, 654. https://doi.org/10.3390/life15040654

Shin Y, Lee M, Lee Y, Kim K, Kim T. Artificial Intelligence-Powered Quality Assurance: Transforming Diagnostics, Surgery, and Patient Care—Innovations, Limitations, and Future Directions. Life. 2025; 15(4):654. https://doi.org/10.3390/life15040654

Chicago/Turabian StyleShin, Yoojin, Mingyu Lee, Yoonji Lee, Kyuri Kim, and Taejung Kim. 2025. "Artificial Intelligence-Powered Quality Assurance: Transforming Diagnostics, Surgery, and Patient Care—Innovations, Limitations, and Future Directions" Life 15, no. 4: 654. https://doi.org/10.3390/life15040654

APA StyleShin, Y., Lee, M., Lee, Y., Kim, K., & Kim, T. (2025). Artificial Intelligence-Powered Quality Assurance: Transforming Diagnostics, Surgery, and Patient Care—Innovations, Limitations, and Future Directions. Life, 15(4), 654. https://doi.org/10.3390/life15040654