Interrater Agreement and Reliability of PERCIST and Visual Assessment When Using 18F-FDG-PET/CT for Response Monitoring of Metastatic Breast Cancer

Abstract

1. Introduction

2. Materials and Methods

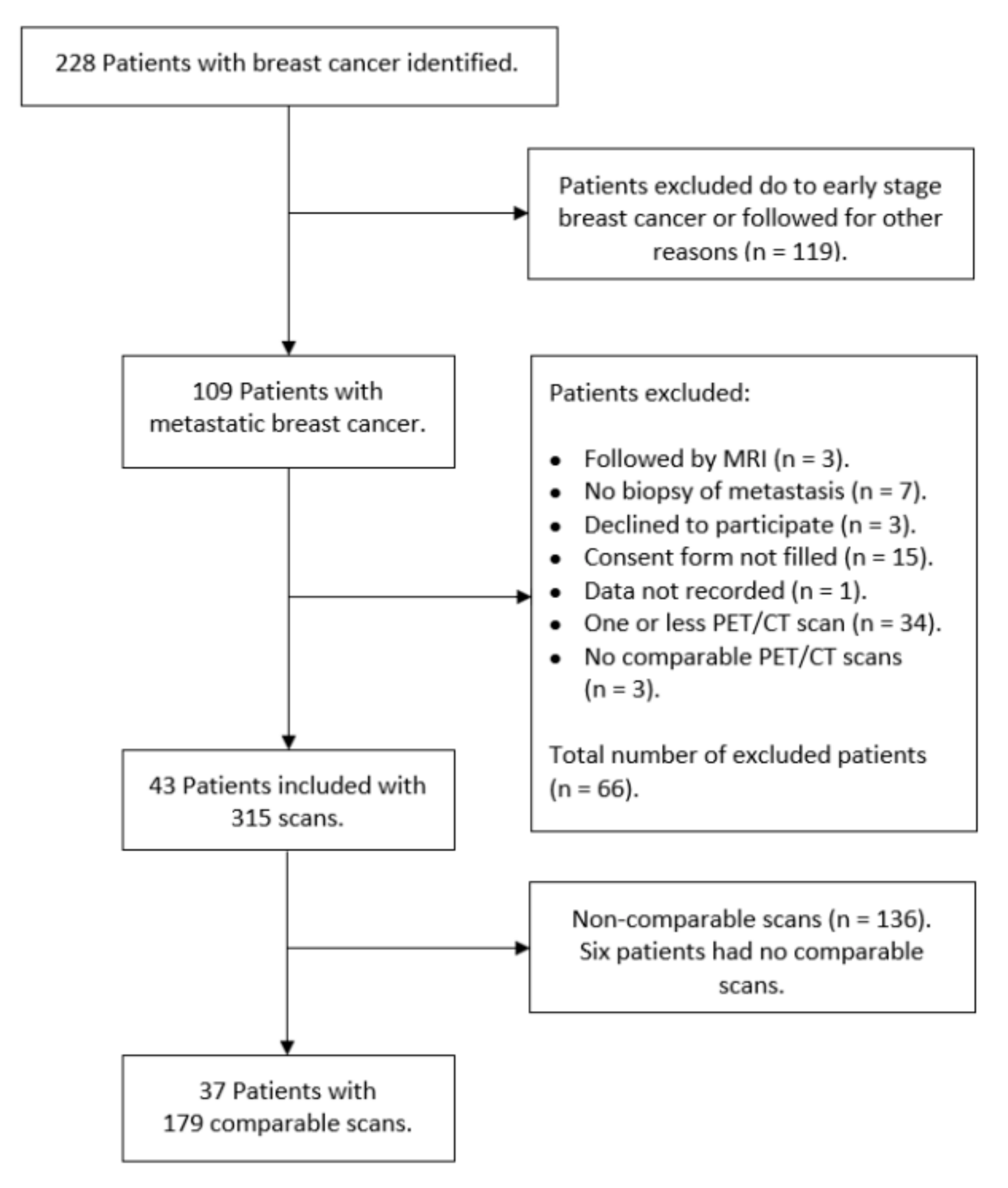

2.1. Patients

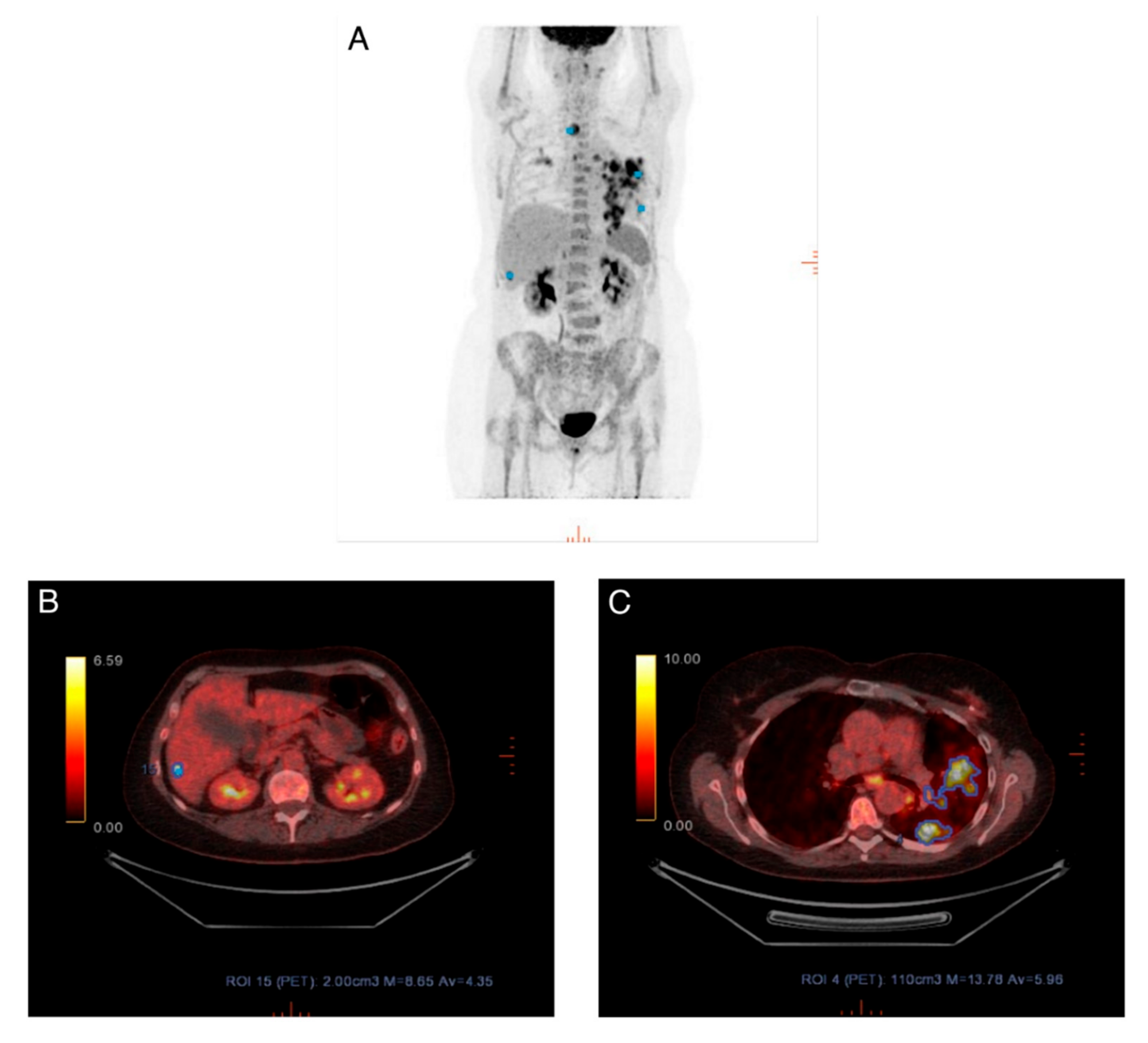

2.2. FDG-PET/CT Imaging Technique

2.3. Visual Assessment

2.4. PERCIST Protocol

2.5. PERCIST Assessment

2.6. Statistical Analyses

3. Results

3.1. Visual Assessment

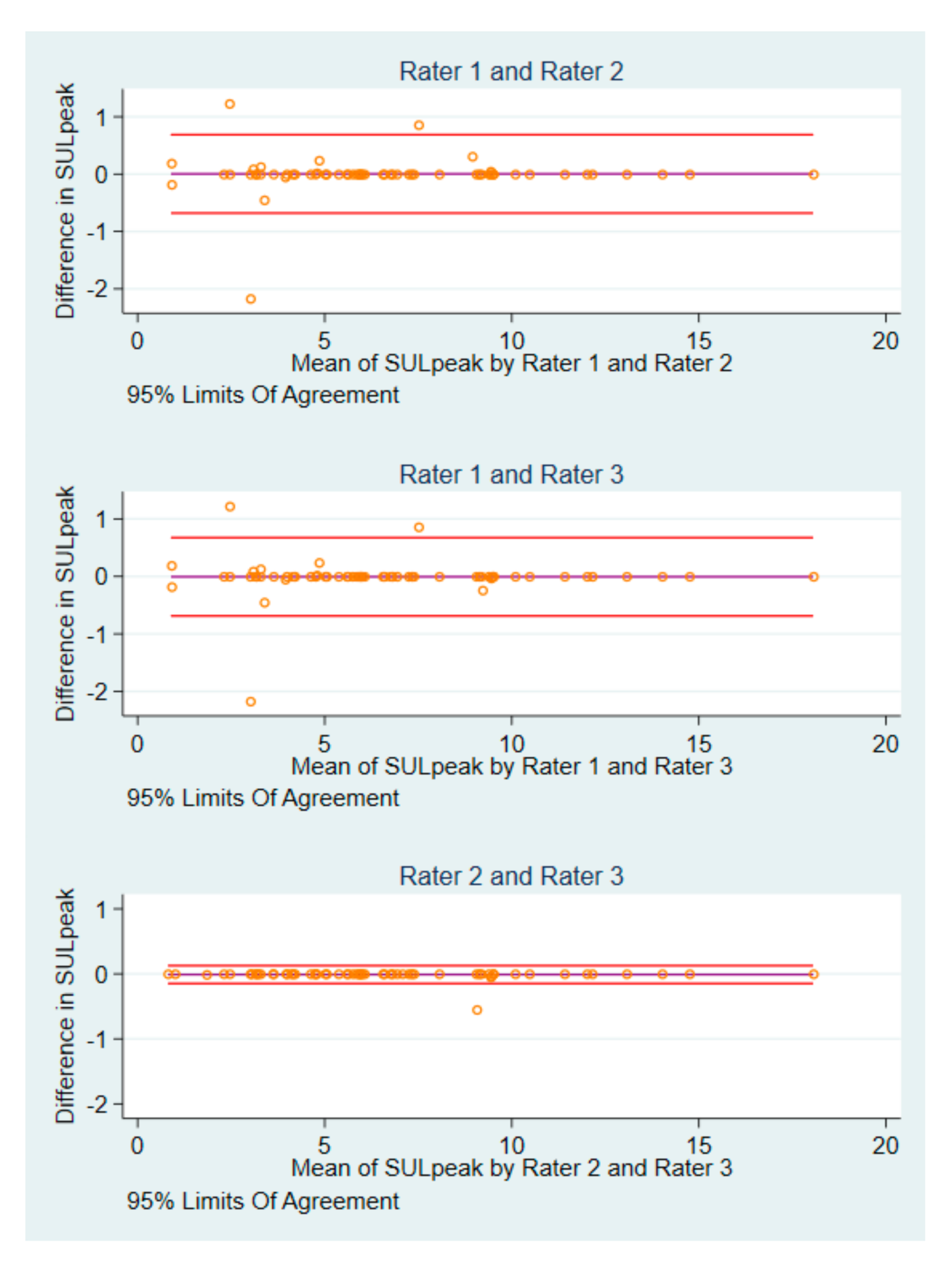

3.2. Differences in Baseline SULpeak Values and Image IDs

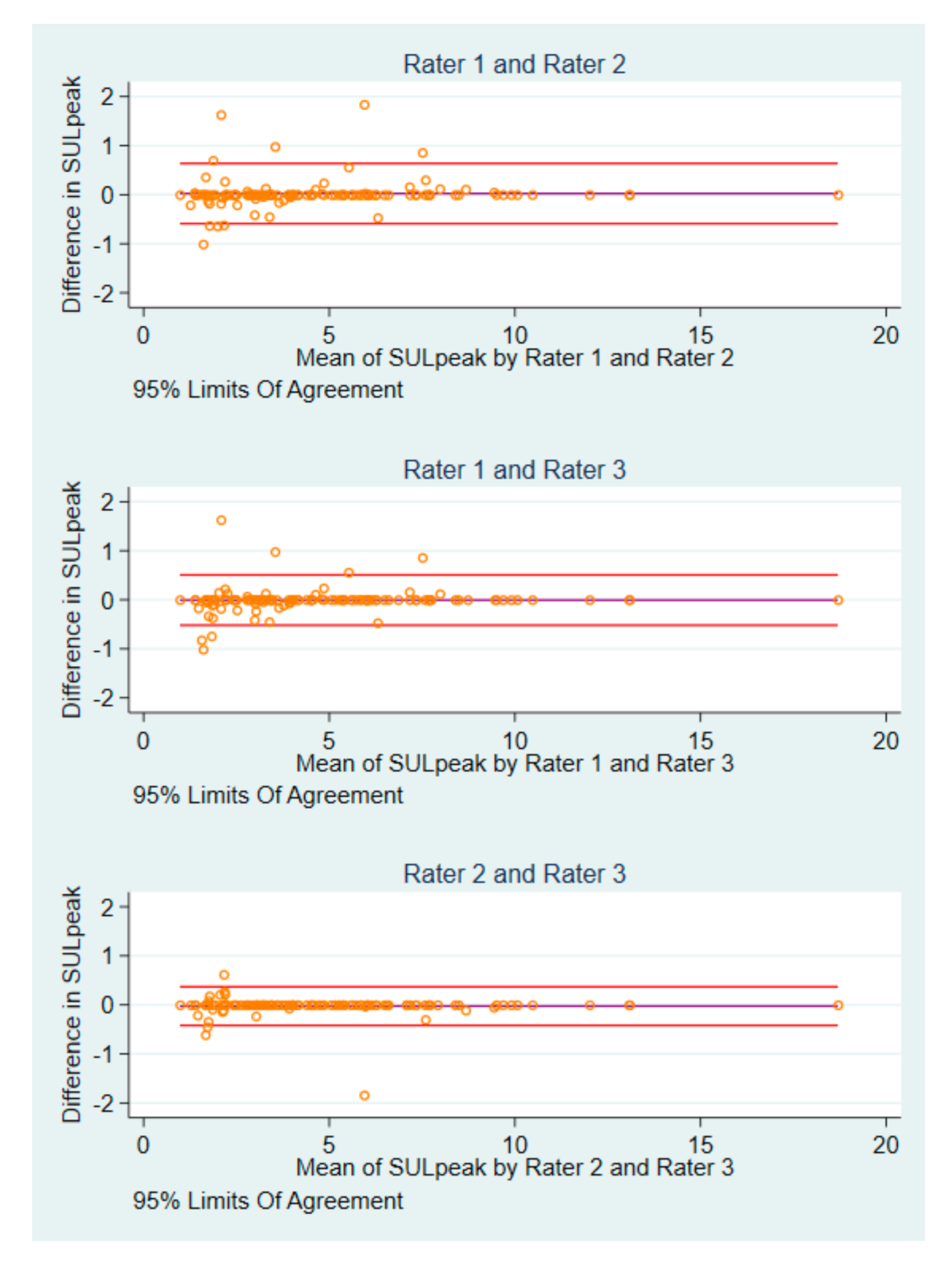

3.3. Differences in Post-Baseline SULpeak Values and Image IDs

3.4. PERCIST Assessment

3.5. Response versus Non-Response

3.6. Differences in Agreement and Reliability Measures

3.7. Intrarater Agreement

4. Discussion

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Criterion | Comparison of Raters | Proportion of Agreement: Point Estimate | Proportion of Agreement: 95% CI | Kappa: Point Estimate | Kappa: 95% CI |
|---|---|---|---|---|---|
| PERCIST | 1–2 | 0.75 | 0.67–0.82 | 0.65 | 0.55–0.75 |
| PERCIST | 1–3 | 0.77 | 0.68–0.83 | 0.69 | 0.59–0.78 |
| PERCIST | 2–3 | 0.78 | 0.70–0.84 | 0.70 | 0.60–0.79 |
| PERCIST | All | 0.65 | 0.57–0.73 | 0.68 | 0.60–0.75 |
| Visual assessment | 1–2 | 0.68 | 0.60–0.75 | 0.58 | 0.47–0.69 |
| Visual assessment | 1–3 | 0.65 | 0.57–0.73 | 0.54 | 0.43–0.65 |
| Visual assessment | 2–3 | 0.64 | 0.56–0.71 | 0.52 | 0.42–0.62 |
| Visual assessment | All | 0.52 | 0.44–0.60 | 0.54 | 0.46–0.62 |
| PERCIST vs. Visual assessment | 1–1 | 0.79 | 0.71–0.85 | 0.73 | 0.64–0.82 |
| PERCIST vs. Visual assessment | 2–2 | 0.79 | 0.72–0.85 | 0.74 | 0.65–0.82 |
| PERCIST vs. Visual assessment | 3–3 | 0.82 | 0.74–0.87 | 0.74 | 0.65–0.83 |
| PERCIST vs. Visual assessment | All | 0.80 | 0.75–0.84 | 0.74 | 0.69–0.79 |
| Response vs. Non-response: PERCIST | 1–2 | 0.79 | 0.71–0.85 | 0.55 | 0.41–0.68 |
| Response vs. Non-response: PERCIST | 1–3 | 0.85 | 0.78–0.90 | 0.66 | 0.52–0.73 |
| Response vs. Non-response: PERCIST | 2–3 | 0.84 | 0.77–0.89 | 0.64 | 0.51–0.77 |
| Response vs. Non-response: PERCIST | All | 0.74 | 0.66–0.80 | 0.61 | 0.50–0.72 |
| Response vs. Non-response: Visual assessment | 1–2 | 0.79 | 0.72–0.85 | 0.56 | 0.41–0.70 |
| Response vs. Non-response: Visual assessment | 1–3 | 0.81 | 0.74–0.87 | 0.53 | 0.37–0.68 |
| Response vs. Non-response: Visual assessment | 2–3 | 0.76 | 0.68–0.82 | 0.47 | 0.33–0.62 |
| Response vs. Non-response: Visual assessment | All | 0.68 | 0.60–0.75 | 0.51 | 0.39–0.63 |
| Response vs. Non-response: PERCIST vs. Visual | 1–1 | 0.94 | 0.89–0.97 | 0.87 | 0.78–0.96 |
| Response vs. Non-response: PERCIST vs. Visual | 2–2 | 0.92 | 0.86–0.95 | 0.84 | 0.74–0.93 |
| Response vs. Non-response: PERCIST vs. Visual | 3–3 | 0.91 | 0.86–0.95 | 0.77 | 0.65–0.89 |
| Response vs. Non-response: PERCIST vs. Visual | All | 0.92 | 0.89–0.95 | 0.82 | 0.77–0.88 |

| Rater 1 (rows) vs. Rater 2 (columns) | CMR (%) | PMR (%) | SMD (%) | PMD (%) | MR (%) | EA (%) | Total (%) |
|---|---|---|---|---|---|---|---|
| CMR (%) | 16 (11.85) | 1 (0.74) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 17 (12.59) |
| PMR (%) | 1 (0.74) | 37 (27.41) | 1 (0.74) | 9 (6.67) | 0 (0) | 2 (1.48) | 50 (37.04) |
| SMD (%) | 0 (0) | 7 (5.19) | 8 (5.93) | 7 (5.19) | 0 (0) | 1 (0.74) | 23 (17.04) |
| PMD (%) | 0 (0) | 7 (5.19) | 2 (1.48) | 34 (25.19) | 2 (1.48) | 0 (0) | 45 (33.33) |
| MR (%) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) |
| EA (%) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) |
| Total (%) | 17 (12.59) | 52 (38.52) | 11 (8.15) | 50 (37.04) | 2 (1.48) | 3 (2.22) | 135 (100) |

| Rater 1 (rows) vs. Rater 3 (columns) | CMR (%) | PMR (%) | SMD (%) | PMD (%) | MR (%) | EA (%) | Total (%) |
|---|---|---|---|---|---|---|---|
| CMR (%) | 12 (8.76) | 4 (2.92) | 0 (0) | 0 (0) | 0 (0) | 1 (0.73) | 17 (12.41) |
| PMR (%) | 2 (1.46) | 40 (29.20) | 6 (4.38) | 2 (1.46) | 0 (0) | 0 (0) | 50 (36.50) |
| SMD (%) | 0 (0) | 7 (5.11) | 16 (11.68) | 1 (0.73) | 0 (0) | 0 (0) | 24 (17.52) |
| PMD (%) | 0 (0) | 9 (6.57) | 13 (9.49) | 24 (17.52) | 0 (0) | 0 (0) | 46 (33.58) |
| MR (%) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) |
| EA (%) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) |
| Total (%) | 14 (10.22) | 60 (43.80) | 35 (25.55) | 27 (19.71) | 0 (0) | 1 (0.73) | 137 (100) |

| Rater 2 (rows) vs. Rater 3 (columns) | CMR (%) | PMR (%) | SMD (%) | PMD (%) | MR (%) | EA (%) | Total (%) |
|---|---|---|---|---|---|---|---|
| CMR (%) | 12 (8.70) | 4 (2.90) | 0 (0) | 0 (0) | 0 (0) | 1 (0.72) | 17 (12.32) |
| PMR (%) | 1 (0.72) | 43 (31.16) | 8 (5.80) | 0 (0) | 0 (0) | 0 (0) | 52 (37.68) |
| SMD (%) | 0 (0) | 2 (1.45) | 8 (5.80) | 1 (0.72) | 0 (0) | 0 (0) | 11 (7.97) |
| PMD (%) | 1 (0.72) | 9 (6.52) | 16 (11.59) | 27 (19.57) | 0 (0) | 0 (0) | 53 (38.41) |
| MR (%) | 0 (0) | 0 (0) | 2 (1.45) | 0 (0) | 0 (0) | 0 (0) | 2 (1.45) |
| EA (%) | 0 (0) | 2 (1.45) | 1 (0.72) | 0 (0) | 0 (0) | 0 (0) | 3 (2.17) |
| Total (%) | 14 (10.14) | 60 (43.48) | 35 (25.36) | 28 (20.29) | 0 (0) | 1 (0.72) | 138 (100) |

| Rater 1 (rows) vs. Rater 2 (columns) | CMR (%) | PMR (%) | SMD (%) | PMD (%) | Total (%) |
|---|---|---|---|---|---|
| CMR (%) | 17 (12.06) | 1 (0.71) | 0 (0) | 0 (0) | 18 (12.77) |
| PMR (%) | 0 (0) | 34 (24.11) | 4 (2.84) | 11 (7.80) | 49 (34.75) |
| SMD (%) | 0 (0) | 0 (0) | 17 (12.06) | 7 (4.96) | 24 (17.02) |
| PMD (%) | 0 (0) | 3 (2.13) | 9 (6.38) | 38 (26.95) | 50 (35.46) |
| Total (%) | 17 (12.06) | 38 (26.95) | 30 (21.28) | 56 (39.72) | 141 (100) |
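
As a worked illustration, the proportion of agreement and kappa can be recomputed from the counts in the four-category Rater 1 versus Rater 2 table above. The sketch below assumes an unweighted Cohen's kappa; the source does not state which kappa variant or software was used, so this is an assumption. The function name `agreement_and_kappa` is hypothetical.

```python
# Counts copied from the four-category Rater 1 (rows) vs. Rater 2 (columns) table.
# Category order: CMR, PMR, SMD, PMD.
counts = [
    [17, 1, 0, 0],
    [0, 34, 4, 11],
    [0, 0, 17, 7],
    [0, 3, 9, 38],
]


def agreement_and_kappa(table):
    """Return (observed agreement, unweighted Cohen's kappa) for a square table.

    Assumption: unweighted Cohen's kappa; the paper may have used another variant.
    """
    n = sum(sum(row) for row in table)
    k = len(table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    # Observed agreement: share of cases on the diagonal.
    p_observed = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: product of the marginal proportions, summed over categories.
    p_expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n**2
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return p_observed, kappa


p_o, kappa = agreement_and_kappa(counts)
print(f"proportion of agreement = {p_o:.2f}, kappa = {kappa:.2f}")
# prints "proportion of agreement = 0.75, kappa = 0.65"
```

These values agree with the PERCIST 1–2 row of the agreement table (0.75 and 0.65). Confidence intervals are not reproduced here, since the interval method is not given in this section.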

| Rater 1 (rows) vs. Rater 3 (columns) | CMR (%) | PMR (%) | SMD (%) | PMD (%) | Total (%) |
|---|---|---|---|---|---|
| CMR (%) | 13 (9.22) | 4 (2.84) | 1 (0.71) | 0 (0) | 18 (12.77) |
| PMR (%) | 2 (1.42) | 40 (28.37) | 4 (2.84) | 3 (2.13) | 49 (34.75) |
| SMD (%) | 0 (0) | 0 (0) | 22 (15.60) | 2 (1.42) | 24 (17.02) |
| PMD (%) | 0 (0) | 4 (2.84) | 12 (8.51) | 34 (24.11) | 50 (35.46) |
| Total (%) | 15 (10.64) | 48 (34.04) | 39 (27.66) | 39 (27.66) | 141 (100) |

| Rater 2 (rows) vs. Rater 3 (columns) | CMR (%) | PMR (%) | SMD (%) | PMD (%) | Total (%) |
|---|---|---|---|---|---|
| CMR (%) | 12 (8.51) | 4 (2.84) | 1 (0.71) | 0 (0) | 17 (12.06) |
| PMR (%) | 3 (2.13) | 34 (24.11) | 0 (0) | 1 (0.71) | 38 (26.95) |
| SMD (%) | 0 (0) | 0 (0) | 28 (19.86) | 2 (1.42) | 30 (21.28) |
| PMD (%) | 0 (0) | 10 (7.09) | 10 (7.09) | 36 (25.53) | 56 (39.72) |
| Total (%) | 15 (10.64) | 48 (34.04) | 39 (27.66) | 39 (27.66) | 141 (100) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sørensen, J.S.; Vilstrup, M.H.; Holm, J.; Vogsen, M.; Bülow, J.L.; Ljungstrøm, L.; Braad, P.-E.; Gerke, O.; Hildebrandt, M.G. Interrater Agreement and Reliability of PERCIST and Visual Assessment When Using 18F-FDG-PET/CT for Response Monitoring of Metastatic Breast Cancer. Diagnostics 2020, 10, 1001. https://doi.org/10.3390/diagnostics10121001
