Visual Evaluation of Image Quality of a Low Dose 2D/3D Slot Scanner Imaging System Compared to Two Conventional Digital Radiography X-ray Imaging Systems

Abstract

1. Introduction

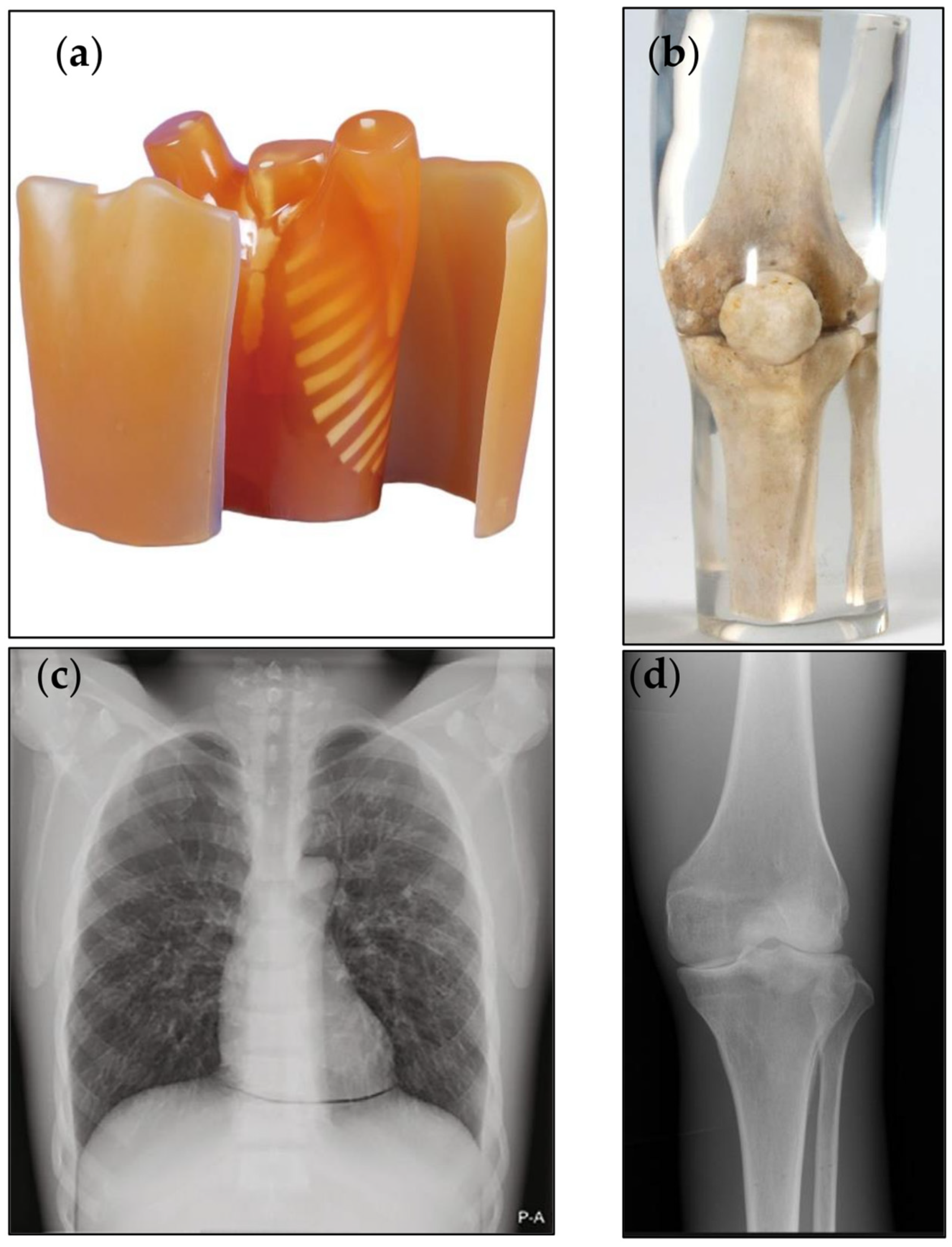

2. Materials and Methods

2.1. Clinical Examination Protocols and Technical Settings

2.2. Image Quality Assessment

2.3. Estimation of the Radiation Exposure to the Patients

2.4. Statistical Analysis

3. Results

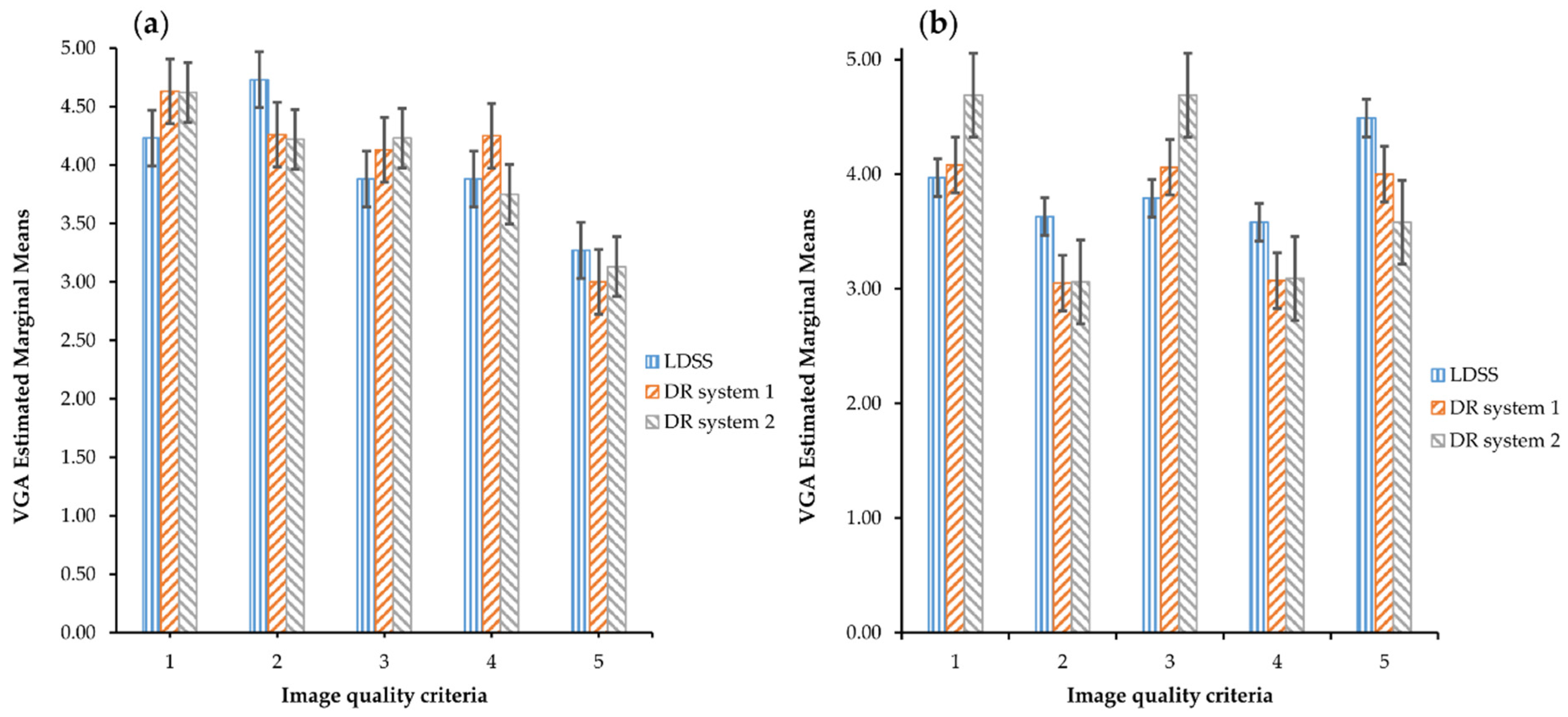

VGA Comparison across the Systems and Image Quality Criteria

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Systems | Projections | Dose Level | Protocol |
|---|---|---|---|
| DR system 1 | PA/LAT | Standard (default) | Chest (thorax) |
| DR system 2 | PA/LAT | Standard (default) | Chest (thorax) |
| LDSS | PA/LAT | Medium dose (speed 6 sec.) | Chest (thorax) |
| DR system 1 | PA/LAT | Standard (default) | Extremity (knee) |
| DR system 2 | PA/LAT | Standard (default) | Extremity (knee) |
| LDSS | PA/LAT | High dose (speed 8 sec.) | Extremity (knee) |

| Imaging Systems | Tube Voltage (kV) | SID (cm) | Tube Current (mA) | Tube Load (mAs) | Exposure Mode | AF Al/Cu (mm) | Examination Protocol |
|---|---|---|---|---|---|---|---|
| LDSS speed 6 | 90 | 130 | 280 | n/a | Manual | 0/0.1 | Chest |
| DR system 1 | 133 | 250 | n/a | 1.6 | AEC | 1/0.2 | Chest |
| DR system 2 | 145 | 300 | n/a | 1.8 | AEC | 0/0.2 | Chest |
| LDSS speed 8 | 68 | 130 | 400 | n/a | Manual | 0/0 | Knee |
| DR system 1 | 57 | 110 | n/a | 8.5 | Manual | 0/0 | Knee |
| DR system 2 | 63 | 115 | n/a | 6.3 | Manual | 0/0 | Knee |

| Score | Image Quality | Description |
|---|---|---|
| 1 | Poor image quality | Image not usable, loss of information |
| 2 | Restricted image quality | Relevant limitations for clinical use, clear loss of information |
| 3 | Sufficient image quality | Moderate limitations for clinical use, no substantial loss of information |
| 4 | Good image quality | Minimal limitations for clinical use |
| 5 | Excellent image quality | No limitations for clinical use |

| Criterion | Technical Image Quality Criterion: Visualisation of | Projection |
|---|---|---|
| 1 | Vascular pattern of lungs and peripheral vessels | PA |
| 2 | Trachea and proximal bronchi | PA |
| 3 | Borders of the heart and the aorta | PA |
| 4 | Diaphragm and lateral costophrenic angles | PA |
| 5 | Spine through the heart shadow | PA |
| 1 | Thoracic spine | LAT |
| 2 | Trachea | LAT |
| 3 | Costophrenic angles | LAT |
| 4 | Sternum | LAT |
| 5 | Diaphragm | LAT |
| 6 | Mediastinum | LAT |
| 7 | Posterior border of the heart and aorta | LAT |

| Criterion | Image Quality Criterion Definition | Technical Image Quality | Projection |
|---|---|---|---|
| 1 | Homogeneity in soft tissue, lateral to the femoral condyle | Noise | knee PA |
| 2 | Sharpness of trabeculae in the medial femoral condyle | Spatial resolution | knee PA |
| 3 | Sharpness of the demarcation between substantia spongiosa and substantia compacta in the femur above the patella | Contrast, small structures | knee PA |
| 4 | Visualization of the patella through the femur | Low-contrast resolution and noise | knee PA |
| 5 | Visualization of the lateral intra-articular femoral condyle | Contrast, large structures | knee PA |
| 1 | Homogeneity in soft tissue posterior to the knee joint | Noise | knee LAT |
| 2 | Visualization of the head of the fibula behind the tibia | Low-contrast resolution and noise | knee LAT |
| 3 | Visualization of the patella | Contrast, large structures | knee LAT |
| 4 | Sharpness of trabeculae in the tibial metaphysis | Spatial resolution | knee LAT |
| 5 | Sharpness of the demarcation between substantia spongiosa and substantia compacta in the anterior femur above the patella | Contrast, small structures | knee LAT |

| Criterion | 1 | 2 | 3 | 4 | 5 | 6 | 7 | Projection |
|---|---|---|---|---|---|---|---|---|
| Kappa | 0.60 | 0.68 | 0.73 | 0.19 | 0.78 | n/a | n/a | chest PA |
| 95% CI | 0.39–0.80 | 0.51–0.85 | 0.52–0.93 | 0.03–0.35 | 0.61–0.93 | n/a | n/a | chest PA |
| p-value | <0.001 | <0.001 | <0.001 | 0.02 | <0.001 | n/a | n/a | chest PA |
| Kappa | 0.55 | 0.36 | 0.56 | 0.47 | 0.44 | 0.63 | 0.27 | chest LAT |
| 95% CI | 0.49–0.61 | 0.19–0.52 | 0.41–0.72 | 0.30–0.63 | 0.26–0.61 | 0.45–0.81 | 0.13–0.41 | chest LAT |
| p-value | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | chest LAT |

| Criterion | 1 | 2 | 3 | 4 | 5 | Projection |
|---|---|---|---|---|---|---|
| Kappa | 0.45 | 0.29 | 0.31 | 0.39 | 0.23 | knee PA |
| 95% CI | 0.26–0.64 | 0.09–0.49 | 0.13–0.48 | 0.19–0.59 | 0.10–0.36 | knee PA |
| p-value | <0.001 | 0.004 | <0.001 | <0.001 | <0.001 | knee PA |
| Kappa | 0.24 | 0.18 | 0.20 | 0.17 | 0.26 | knee LAT |
| 95% CI | 0.06–0.42 | 0.03–0.33 | 0.01–0.33 | 0.05–0.29 | 0.07–0.44 | knee LAT |
| p-value | 0.009 | 0.016 | 0.002 | 0.007 | 0.007 | knee LAT |
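The kappa tables report observer agreement for each criterion. This excerpt does not say which kappa statistic was used or how observers were grouped. As a hedged illustration only, the sketch below computes Fleiss' kappa, a common multi-rater statistic for nominal categories, on scores from the 1–5 scoring scale. The function name `fleiss_kappa` and the input layout are assumptions, not the authors' code: one list of scores per image, with the same number of observers for every image. Fleiss' kappa ignores the ordering of the scores, so an ordinal weighted kappa would give different values for the same data.

```python
from collections import Counter

def fleiss_kappa(ratings, categories=(1, 2, 3, 4, 5)):
    """Fleiss' kappa for a list of images, each scored by the same n raters.

    ratings: one list of scores per image, e.g. [[4, 4, 5], [3, 4, 4]].
    Undefined (division by zero) if every score falls in a single category.
    """
    n = len(ratings[0])  # number of raters per image
    if n < 2 or any(len(r) != n for r in ratings):
        raise ValueError("need the same number (>= 2) of ratings per image")
    N = len(ratings)
    counts = [Counter(r) for r in ratings]  # n_ij per image and category

    # Observed agreement per image, then averaged over all images
    p_i = [(sum(c[k] ** 2 for k in categories) - n) / (n * (n - 1))
           for c in counts]
    p_bar = sum(p_i) / N

    # Chance agreement from the overall proportion of each category
    p_j = [sum(c[k] for c in counts) / (N * n) for k in categories]
    p_e = sum(p ** 2 for p in p_j)

    return (p_bar - p_e) / (1 - p_e)
```

For example, `fleiss_kappa([[1, 1, 1], [2, 2, 2], [1, 2, 2], [1, 1, 2]])` evaluates to 1/3 (about 0.33).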

| Criterion | 1 | 2 | 3 | 4 | 5 | 6 | 7 | Projection |
|---|---|---|---|---|---|---|---|---|
| Kappa | 0.25 | 0.26 | 0.28 | 0.27 | 0.63 | n/a | n/a | chest PA |
| 95% CI | 0.07–0.44 | −0.11–0.42 | −0.09–0.64 | −0.06–0.61 | 0.49–0.78 | n/a | n/a | chest PA |
| p-value | 0.02 | 0.01 | <0.001 | <0.001 | <0.001 | n/a | n/a | chest PA |
| Kappa | 0.13 | 0.31 | 0.28 | 0.26 | 0.17 | 0.14 | 0.26 | chest LAT |
| 95% CI | −0.22–0.48 | 0.11–0.51 | −0.15–0.70 | 0.05–0.46 | −0.02–0.36 | −0.10–0.29 | −0.09–0.60 | chest LAT |
| p-value | 0.10 | <0.001 | 0.04 | 0.04 | 0.05 | 0.05 | 0.02 | chest LAT |

| Criterion | 1 | 2 | 3 | 4 | 5 | Projection |
|---|---|---|---|---|---|---|
| Kappa | 0.38 | 0.13 | 0.21 | 0.21 | 1.00 | knee PA |
| 95% CI | 0.16–0.60 | −0.13–0.35 | −0.23–0.64 | −0.01–0.42 | n/a | knee PA |
| p-value | <0.001 | 0.41 | 0.07 | 0.04 | <0.001 | knee PA |
| Kappa | 0.27 | 0.19 | 0.28 | 0.21 | 0.34 | knee LAT |
| 95% CI | 0.01–0.54 | −0.05–0.44 | −0.12–0.68 | −0.10–0.53 | −0.07–0.75 | knee LAT |
| p-value | 0.03 | 0.01 | <0.001 | 0.02 | 0.01 | knee LAT |
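Kappa values such as those in the agreement tables are commonly read against the Landis and Koch (1977) benchmark bands:

- below 0: poor
- 0.00–0.20: slight
- 0.21–0.40: fair
- 0.41–0.60: moderate
- 0.61–0.80: substantial
- 0.81–1.00: almost perfect

This excerpt does not state which interpretation scale the authors applied, so the helper below is only a reading aid. The name `landis_koch_label` is hypothetical.

```python
def landis_koch_label(kappa):
    """Map a kappa value to its Landis & Koch (1977) agreement band."""
    if kappa > 1:
        raise ValueError("kappa cannot exceed 1")
    if kappa < 0:
        return "poor"
    # Upper bound of each band, checked in ascending order
    bands = [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
             (0.80, "substantial"), (1.00, "almost perfect")]
    for upper, label in bands:
        if kappa <= upper:
            return label

# Chest PA kappas (criteria 1-5) from the first agreement table above
chest_pa = [0.60, 0.68, 0.73, 0.19, 0.78]
print([landis_koch_label(k) for k in chest_pa])
```

Applied to those chest PA values, it returns moderate, substantial, substantial, slight and substantial.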

| Systems | Mean | 95% CI | Projection |
|---|---|---|---|
| LDSS | 4.73 | 4.64–4.83 | chest PA |
| DR system 1 | 3.90 | 3.80–3.99 | chest PA |
| DR system 2 | 3.69 | 3.59–3.78 | chest PA |
| LDSS | 4.33 | 4.24–4.42 | chest LAT |
| DR system 1 | 3.86 | 3.37–3.95 | chest LAT |
| DR system 2 | 3.40 | 3.31–3.49 | chest LAT |
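The tables of mean scores give a mean on the 1–5 scale with a 95% CI for each system and projection. This excerpt does not state how these intervals were derived; they may come from a statistical model rather than directly from raw per-image scores. The sketch below shows one simple way to obtain such a summary from raw ratings, using a normal approximation. It treats the ordinal scores as interval data, which is a common but debatable convention in visual grading analysis. The ratings are hypothetical, not study data, and `mean_with_ci` is an illustrative name.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def mean_with_ci(scores, level=0.95):
    """Mean score with a normal-approximation confidence interval."""
    m = mean(scores)
    se = stdev(scores) / sqrt(len(scores))       # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + level / 2)    # about 1.96 for a 95% interval
    return m, m - z * se, m + z * se

# Hypothetical ratings on the 1-5 scale (NOT data from the study)
ratings = [5, 5, 4, 5, 4, 5, 5, 4, 5, 5]
m, lo, hi = mean_with_ci(ratings)
print(f"mean {m:.2f}, 95% CI {lo:.2f}-{hi:.2f}")
```

With many images per system, a t-based interval and a normal-approximation interval differ very little. For small samples, a t quantile would be the safer choice.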

| System Comparison | Mean Difference | Std. Error | Sig.ᵇ | 95% CI | Projection |
|---|---|---|---|---|---|
| LDSS vs. DR system 1 | 0.83 | 0.07 | <0.001 | 0.67–0.99 | chest PA |
| LDSS vs. DR system 2 | 1.05 | 0.07 | <0.001 | 0.88–1.20 | chest PA |
| DR system 1 vs. DR system 2 | 0.21 | 0.07 | 0.006 | 0.05–0.38 | chest PA |
| LDSS vs. DR system 1 | 0.47 | 0.07 | <0.001 | 0.32–0.63 | chest LAT |
| LDSS vs. DR system 2 | 0.94 | 0.07 | <0.001 | 0.78–1.09 | chest LAT |
| DR system 1 vs. DR system 2 | 0.46 | 0.07 | <0.001 | 0.31–0.62 | chest LAT |
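The "Mean Difference" column is the first system's mean score minus the second system's. The sketch below reproduces it from the chest PA means in the preceding table of means, using a hypothetical helper `pairwise_differences`. Small mismatches are expected: for LDSS vs. DR system 2 it gives 1.04, whereas the table reports 1.05. The table's differences were presumably computed before the means were rounded to two decimals.

```python
from itertools import combinations

# Chest PA mean scores copied from the table of means
chest_pa_means = {"LDSS": 4.73, "DR system 1": 3.90, "DR system 2": 3.69}

def pairwise_differences(means):
    """Mean difference (first minus second) for every pair of systems."""
    return {(a, b): round(means[a] - means[b], 2)
            for a, b in combinations(means, 2)}

for (a, b), diff in pairwise_differences(chest_pa_means).items():
    print(f"{a} vs. {b}: {diff:+.2f}")
```

Pairs follow the dictionary's insertion order, so the output lists the comparisons in the same order as the table.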

| Systems | Mean | 95% CI | Projection |
|---|---|---|---|
| LDSS | 3.86 | 3.78–3.94 | knee PA |
| DR system 1 | 4.07 | 3.99–4.14 | knee PA |
| DR system 2 | 3.95 | 3.88–4.03 | knee PA |
| LDSS | 3.85 | 3.79–3.92 | knee LAT |
| DR system 1 | 3.72 | 3.65–3.79 | knee LAT |
| DR system 2 | 3.92 | 3.85–3.99 | knee LAT |

| System Comparison | Mean Difference | Std. Error | Sig.ᵇ | 95% CI | Projection |
|---|---|---|---|---|---|
| LDSS vs. DR system 1 | −0.21 | 0.069 | 0.001 | −0.34–−0.07 | knee PA |
| LDSS vs. DR system 2 | −0.09 | 0.069 | 0.27 | −0.23–0.04 | knee PA |
| DR system 1 vs. DR system 2 | 0.11 | 0.069 | 0.12 | −0.02–0.25 | knee PA |
| LDSS vs. DR system 1 | 0.13 | 0.07 | 0.016 | 0.02–0.25 | knee LAT |
| LDSS vs. DR system 2 | −0.07 | 0.07 | 0.49 | −0.18–0.05 | knee LAT |
| DR system 1 vs. DR system 2 | −0.20 | 0.07 | <0.001 | −0.31–−0.09 | knee LAT |

| Imaging Systems | ESD (mGy) | DAP (mGy·cm²) | ED (µSv) | ED Difference from LDSS (%) | Protocol | Projection |
|---|---|---|---|---|---|---|
| LDSS speed 6 | 0.14 | 142.21 | 19.76 | | chest | PA |
| LDSS speed 6 | 0.15 | 153.22 | 21.55 | | chest | LAT |
| LDSS speed 6 | 0.29 | 295.42 | 41.31 | | chest | Total |
| DR system 1 | 0.16 | 168.93 | 39.04 | | chest | PA |
| DR system 1 | 0.19 | 193.45 | 47.71 | | chest | LAT |
| DR system 1 | 0.35 | 361.38 | 86.80 | 52.4 | chest | Total |
| DR system 2 | 0.15 | 158.00 | 21.00 | | chest | PA |
| DR system 2 | 0.17 | 179.86 | 40.90 | | chest | LAT |
| DR system 2 | 0.32 | 337.86 | 61.90 | 33.3 | chest | Total |
| LDSS speed 8 | 0.17 | 54.91 | 0.0063 | | knee | PA |
| LDSS speed 8 | 0.17 | 55.40 | 0.0064 | | knee | LAT |
| LDSS speed 8 | 0.34 | 110.31 | 0.0127 | | knee | Total |
| DR system 1 | 0.18 | 58.25 | 0.0099 | | knee | PA |
| DR system 1 | 0.19 | 59.33 | 0.0089 | | knee | LAT |
| DR system 1 | 0.37 | 117.58 | 0.0197 | 35.5 | knee | Total |
| DR system 2 | 0.17 | 56.24 | 0.0086 | | knee | PA |
| DR system 2 | 0.18 | 57.05 | 0.0096 | | knee | LAT |
| DR system 2 | 0.35 | 113.29 | 0.0182 | 30.2 | knee | Total |
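The "ED Difference (%) from LDSS" values agree with the relative reduction in total effective dose (PA + LAT) of the LDSS compared with each DR system:

(ED_DR − ED_LDSS) / ED_DR × 100

This formula is inferred from the numbers rather than stated in this excerpt. The sketch below checks it against the Total rows of the dose table; `percent_reduction` is an illustrative name.

```python
def percent_reduction(reference_ed, ldss_ed):
    """Relative effective-dose reduction of the LDSS vs. a reference system (%)."""
    return 100 * (reference_ed - ldss_ed) / reference_ed

# Total ED values (PA + LAT, in µSv) copied from the dose table
totals = {
    "chest": {"LDSS": 41.31, "DR system 1": 86.80, "DR system 2": 61.90},
    "knee": {"LDSS": 0.0127, "DR system 1": 0.0197, "DR system 2": 0.0182},
}

for protocol, eds in totals.items():
    for system in ("DR system 1", "DR system 2"):
        pct = percent_reduction(eds[system], eds["LDSS"])
        print(f"{protocol}, {system}: {pct:.1f}%")
```

This prints 52.4%, 33.3%, 35.5% and 30.2%, matching the tabulated differences.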

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abdi, A.J.; Mussmann, B.; Mackenzie, A.; Gerke, O.; Jørgensen, G.M.; Bechsgaard, T.E.; Jensen, J.; Olsen, L.B.; Andersen, P.E. Visual Evaluation of Image Quality of a Low Dose 2D/3D Slot Scanner Imaging System Compared to Two Conventional Digital Radiography X-ray Imaging Systems. Diagnostics 2021, 11, 1932. https://doi.org/10.3390/diagnostics11101932
