Accuracy of New Deep Learning Model-Based Segmentation and Key-Point Multi-Detection Method for Ultrasonographic Developmental Dysplasia of the Hip (DDH) Screening

Abstract

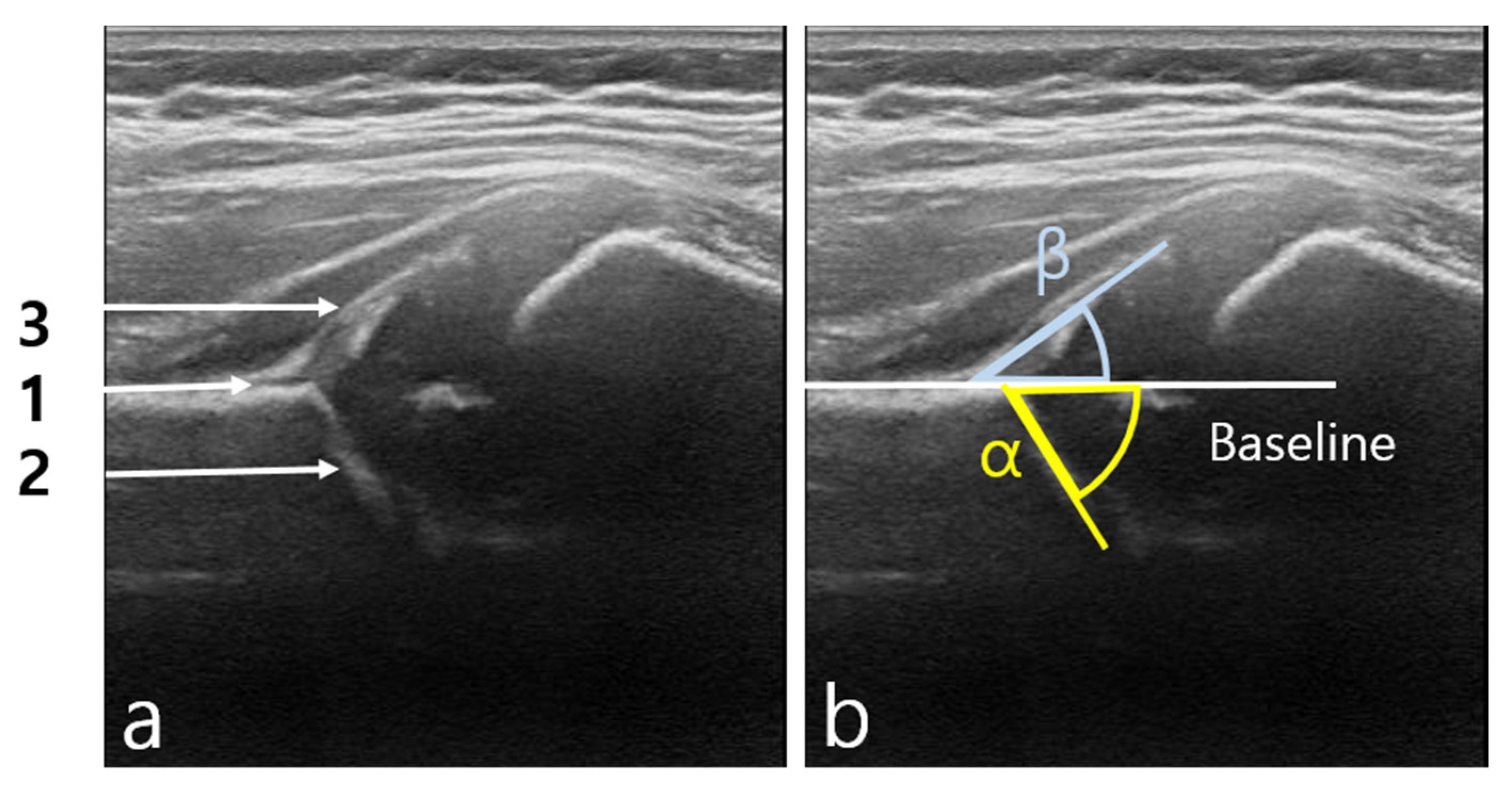

1. Introduction

2. Materials and Methods

2.1. Images

2.2. Multi-Detection Type Artificial Intelligence (AI)

2.2.1. Segmentation

2.2.2. File Conversion

2.2.3. Layer Extraction

2.2.4. First Training

2.2.5. Second Training

2.2.6. Final Result

2.3. Network Training

2.4. Statistical Analysis

3. Results

3.1. Criteria for Data Classification

3.1.1. Data Classification Based on the First Criterion

3.1.2. Data Classification Based on the Second Criterion

3.2. Statistical Result of Graf Angle Measurement

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Subgroup | n (%) |
|---|---|
| Doctor (all images) | 921 (100) |
| Detectable | 542 (58.9) |
| Undetectable | 379 (41.1) |
| Artificial intelligence (all images) | 921 (100) |
| Detectable | 555 (60.3) |
| Undetectable | 366 (39.7) |
| Doctor and artificial intelligence (all images) | 921 (100) |
| Commonly detectable | 512 (55.6) |
| Detection failure | 409 (44.4) |
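Because 512 + 409 = 921, the final row (409 images) counts every image that was not detectable by both readers, not only the images that both readers missed. A minimal inclusion-exclusion sketch, using only the counts in the table above, separates those failures into their components. The class, function and field names are illustrative and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionBreakdown:
    """Hypothetical container for how the detection failures split up."""
    only_doctor: int  # detectable by the doctor but not by the AI
    only_ai: int      # detectable by the AI but not by the doctor
    neither: int      # detectable by neither reader
    failures: int     # all images not detectable by both readers


def breakdown(total: int, doctor: int, ai: int, both: int) -> DetectionBreakdown:
    """Split the complement of the commonly detectable set via inclusion-exclusion."""
    only_doctor = doctor - both
    only_ai = ai - both
    union = doctor + ai - both  # detectable by at least one reader
    neither = total - union
    failures = total - both     # equals only_doctor + only_ai + neither
    return DetectionBreakdown(only_doctor, only_ai, neither, failures)


if __name__ == "__main__":
    # Counts from the table: 921 images, 542 (doctor), 555 (AI), 512 (both).
    print(breakdown(total=921, doctor=542, ai=555, both=512))
```

With the published counts this gives 30 doctor-only, 43 AI-only and 336 images detectable by neither reader, which together make up the 409 failures.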

| n (%) | Check angle (doctor): OK | Check angle (doctor): error |
|---|---|---|
| All detectable images | 512 (100) | |
| Check angle (AI): OK | 320 (86.7) | 49 (34.3) |
| Check angle (AI): error | 49 (13.3) | 94 (65.7) |
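The percentages in this table are column percentages: each doctor category (OK or error) is split by the AI's result, so 320 + 49 = 369 and 49 + 94 = 143 images form the two columns. A minimal sketch that reproduces them from the four cell counts, and also computes the share of the 512 images on which the AI and the doctor gave the same check result (that overall figure is derived here for illustration and is not reported in the table). The dictionary layout and function names are assumptions.

```python
# Cell counts from the table, keyed by (AI result, doctor result).
COUNTS = {
    ("ok", "ok"): 320,
    ("ok", "error"): 49,
    ("error", "ok"): 49,
    ("error", "error"): 94,
}


def column_percent(counts: dict[tuple[str, str], int], ai: str, doctor: str) -> float:
    """Percentage of images in the doctor's `doctor` column that the AI rated `ai`."""
    column_total = sum(n for (_, d), n in counts.items() if d == doctor)
    return 100.0 * counts[(ai, doctor)] / column_total


def observed_agreement(counts: dict[tuple[str, str], int]) -> float:
    """Share of all images on which the AI and doctor check results coincide."""
    total = sum(counts.values())
    agree = sum(n for (a, d), n in counts.items() if a == d)
    return agree / total


if __name__ == "__main__":
    for doctor in ("ok", "error"):
        for ai in ("ok", "error"):
            pct = column_percent(COUNTS, ai, doctor)
            print(f"doctor={doctor:5s} ai={ai:5s} {pct:.1f}%")
    print(f"observed agreement: {observed_agreement(COUNTS):.3f}")
```

Run on the published counts, it prints 86.7%, 13.3%, 34.3% and 65.7%, matching the table, and an observed agreement of 414/512 ≈ 0.809.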

| Angle | ICC | 95% confidence interval | Agreement |
|---|---|---|---|
| Alpha angle | 0.764 | 0.699–0.815 | Excellent |
| Beta angle | 0.743 | 0.689–0.788 | Good |
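The agreement labels are consistent with the ICC ranges of Cicchetti (1994): below 0.40 poor, 0.40 to 0.59 fair, 0.60 to 0.74 good, and 0.75 to 1.00 excellent. The table does not state which ICC form was used, so the sketch below assumes ICC(2,1) (two-way random effects, absolute agreement, single rater) purely for illustration. It is written in plain Python and takes a matrix with one row per image and one column per reader; the function names are hypothetical.

```python
from typing import Sequence


def icc_2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` holds one row per image and one column per reader (e.g. AI, doctor).
    """
    n = len(ratings)     # number of images (subjects)
    k = len(ratings[0])  # number of readers
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares: images, readers, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def cicchetti_label(icc: float) -> str:
    """Map an ICC value to the Cicchetti (1994) agreement category."""
    if icc < 0.40:
        return "Poor"
    if icc < 0.60:
        return "Fair"
    if icc < 0.75:
        return "Good"
    return "Excellent"


if __name__ == "__main__":
    # Toy alpha angles in degrees for three images: AI column, doctor column.
    toy = [[60.0, 62.0], [65.0, 67.0], [55.0, 57.0]]
    print(f"ICC(2,1) on toy data: {icc_2_1(toy):.3f}")
    for name, value in (("alpha", 0.764), ("beta", 0.743)):
        print(name, value, cicchetti_label(value))
```

In the toy data the two readers differ by a constant 2°, so their readings are perfectly correlated, yet the absolute-agreement ICC drops to 50/54 ≈ 0.926. This is why the ICC form matters when comparing AI and human angle measurements.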

| Angle | n (%) | Artificial intelligence (AI), mean ± SD (°) | Human, mean ± SD (°) | AI − Human, mean ± SD (°) | Mean absolute deviation, MAD (°) |
|---|---|---|---|---|---|
| Alpha angle | 320 (100) | 62.843 ± 6.514 | 64.117 ± 7.12 | −1.274 ± 4.555 | 3.470 |
| Beta angle | 320 (100) | 40.785 ± 7.69 | 40.679 ± 8.71 | 0.106 ± 5.9 | 4.501 |
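The mean in the AI–Human column equals the difference of the two column means (62.843 − 64.117 = −1.274; 40.785 − 40.679 = 0.106), but MAD cannot be recovered from these summaries and needs the per-image measurements. A minimal sketch, assuming MAD is the mean of |AI − Human| over the paired images (the table does not define it) and that the ± values are sample standard deviations, computes all three quantities. The function name and toy data are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def paired_summary(ai: Sequence[float], human: Sequence[float]) -> dict[str, float]:
    """Bias, SD of differences and mean absolute difference for paired angles.

    Assumes `ai[i]` and `human[i]` measure the same image, in degrees.
    """
    if len(ai) != len(human) or len(ai) < 2:
        raise ValueError("need at least two paired measurements of equal length")
    diffs = [a - h for a, h in zip(ai, human)]
    return {
        "bias": mean(diffs),                 # mean of AI - human
        "sd_diff": stdev(diffs),             # sample SD of the differences
        "mad": mean(abs(d) for d in diffs),  # assumed definition of MAD
    }


if __name__ == "__main__":
    # Toy alpha angles for three images (not study data).
    print(paired_summary([60.0, 62.0, 58.0], [61.0, 61.0, 60.0]))
```

Keeping the signed bias and the MAD separate is deliberate: a bias near zero (as for the beta angle) can still hide sizeable per-image disagreement, which MAD exposes.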

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, S.-W.; Ye, H.-U.; Lee, K.-J.; Jang, W.-Y.; Lee, J.-H.; Hwang, S.-M.; Heo, Y.-R. Accuracy of New Deep Learning Model-Based Segmentation and Key-Point Multi-Detection Method for Ultrasonographic Developmental Dysplasia of the Hip (DDH) Screening. Diagnostics 2021, 11, 1174. https://doi.org/10.3390/diagnostics11071174
