Diagnostic Performance of Artificial Intelligence-Based Computer-Aided Diagnosis for Breast Microcalcification on Mammography

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethics Statements

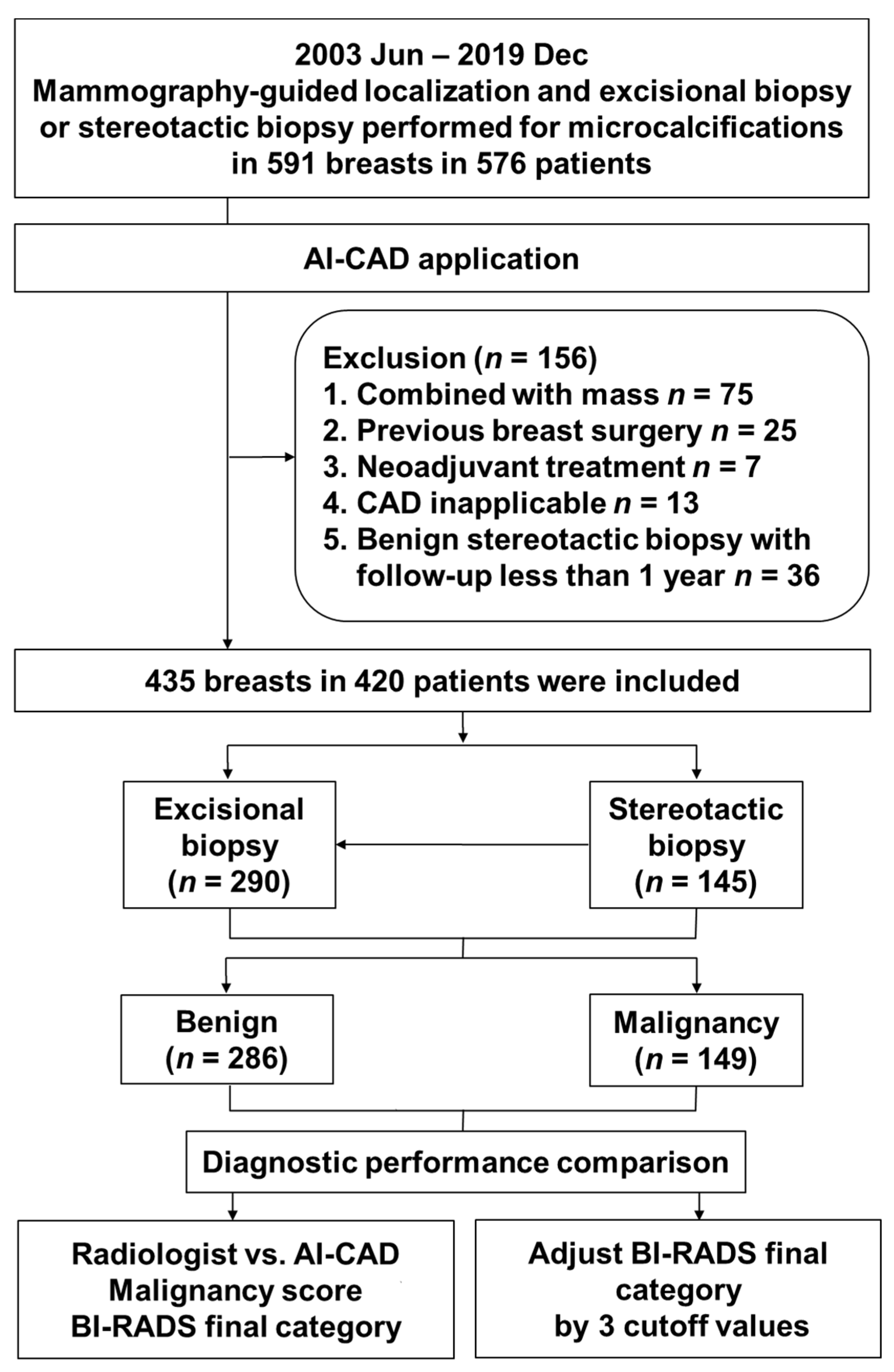

2.2. Patient Population

2.3. Electronic Medical Record Review

2.4. Image Interpretation

2.5. Computer-Aided Diagnosis Software

2.6. Adjustment of Radiologists’ Category Using AI-CAD Malignancy Score

2.7. Data and Statistical Analyses

3. Results

3.1. Patient and Lesion Characteristics

3.2. Diagnostic Performance of Radiologists and AI-CAD

3.3. Adjusted Radiologists’ Category Using AI-CAD Malignancy Score

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Broeders, M.; Moss, S.; Nyström, L.; Njor, S.; Jonsson, H.; Paap, E.; Massat, N.; Duffy, S.; Lynge, E.; Paci, E.; et al. The impact of mammographic screening on breast cancer mortality in Europe: A review of observational studies. J. Med. Screen. 2012, 19, 14–25.

- Marmot, M.G.; Altman, D.; Cameron, D.; Dewar, J.; Thompson, S.; Wilcox, M. The benefits and harms of breast cancer screening: An independent review. Br. J. Cancer 2013, 108, 2205–2240.

- Bird, R.E.; Wallace, T.W.; Yankaskas, B.C. Analysis of cancers missed at screening mammography. Radiology 1992, 184, 613–617.

- Giger, M.L. Computer-aided diagnosis in radiology. Acad. Radiol. 2002, 9, 1–3.

- Kerlikowske, K.; Carney, P.A.; Geller, B.; Mandelson, M.T.; Taplin, S.H.; Malvin, K.; Ernster, V.; Urban, N.; Cutter, G.; Rosenberg, R.; et al. Performance of screening mammography among women with and without a first-degree relative with breast cancer. Ann. Intern. Med. 2000, 133, 855–863.

- Majid, A.S.; de Paredes, E.S.; Doherty, R.D.; Sharma, N.R.; Salvador, X. Missed breast carcinoma: Pitfalls and pearls. Radiographics 2003, 23, 881–895.

- US Food and Drug Administration. Summary of Safety and Effectiveness Data: R2 Technologies; P970058; US Food and Drug Administration: Silver Spring, MD, USA, 1998.

- Yang, S.K.; Moon, W.K.; Cho, N.; Park, J.S.; Cha, J.H.; Kim, S.M.; Kim, S.J.; Im, J.G. Screening mammography-detected cancers: Sensitivity of a computer-aided detection system applied to full-field digital mammograms. Radiology 2007, 244, 104–111.

- Murakami, R.; Kumita, S.; Tani, H.; Yoshida, T.; Sugizaki, K.; Kuwako, T.; Kiriyama, T.; Hakozaki, K.; Okazaki, E.; Yanagihara, K.; et al. Detection of breast cancer with a computer-aided detection applied to full-field digital mammography. J. Digit. Imaging 2013, 26, 768–773.

- Sadaf, A.; Crystal, P.; Scaranelo, A.; Helbich, T. Performance of computer-aided detection applied to full-field digital mammography in detection of breast cancers. Eur. J. Radiol. 2011, 77, 457–461.

- Ge, J.; Sahiner, B.; Hadjiiski, L.M.; Chan, H.P.; Wei, J.; Helvie, M.A.; Zhou, C. Computer aided detection of clusters of microcalcifications on full field digital mammograms. Med. Phys. 2006, 33, 2975–2988.

- Scaranelo, A.M.; Eiada, R.; Bukhanov, K.; Crystal, P. Evaluation of breast amorphous calcifications by a computer-aided detection system in full-field digital mammography. Br. J. Radiol. 2012, 85, 517–522.

- Mayo, R.C.; Kent, D.; Sen, L.C.; Kapoor, M.; Leung, J.W.; Watanabe, A.T. Reduction of false-positive markings on mammograms: A retrospective comparison study using an artificial intelligence-based CAD. J. Digit. Imaging 2019, 32, 618–624.

- Sickles, E.; D’Orsi, C.J.; Bassett, L.W. ACR BI-RADS Atlas: Breast Imaging Reporting and Data System; Mammography, Ultrasound, Magnetic Resonance Imaging, Follow-Up and Outcome Monitoring, Data Dictionary; ACR, American College of Radiology: Reston, VA, USA, 2013.

- Park, G.E.; Kim, S.H.; Lee, J.M.; Kang, B.J.; Chae, B.J. Comparison of positive predictive values of categorization of suspicious calcifications using the 4th and 5th editions of BI-RADS. AJR Am. J. Roentgenol. 2019, 213, 710–715.

- Gao, Y.; Geras, K.J.; Lewin, A.A.; Moy, L. New frontiers: An update on computer-aided diagnosis for breast imaging in the age of artificial intelligence. AJR Am. J. Roentgenol. 2019, 212, 300–307.

- Wang, J.; Yang, X.; Cai, H.; Tan, W.; Jin, C.; Li, L. Discrimination of breast cancer with microcalcifications on mammography by deep learning. Sci. Rep. 2016, 6, 1–9.

- Cai, H.; Huang, Q.; Rong, W.; Song, Y.; Li, J.; Wang, J.; Chen, J.; Li, L. Breast microcalcification diagnosis using deep convolutional neural network from digital mammograms. Comput. Math. Methods Med. 2019.

- Lei, C.; Wei, W.; Liu, Z.; Xiong, Q.; Yang, C.; Yang, M.; Zhang, L.; Zhu, T.; Zhuang, X.; Liu, C.; et al. Mammography-based radiomic analysis for predicting benign BI-RADS category 4 calcifications. Eur. J. Radiol. 2019, 121, 108711.

- Liu, H.; Chen, Y.; Zhang, Y.; Wang, L.; Luo, R.; Wu, H.; Wu, C.; Zhang, H.; Tan, W.; Yin, H.; et al. A deep learning model integrating mammography and clinical factors facilitates the malignancy prediction of BI-RADS 4 microcalcifications in breast cancer screening. Eur. Radiol. 2021.

- Bahl, M. Detecting breast cancers with mammography: Will AI succeed where traditional CAD failed? Radiology 2019, 290, 315–316.

- Kim, H.-E.; Kim, H.H.; Han, B.-K.; Kim, K.H.; Han, K.; Nam, H.; Lee, E.H.; Kim, E.-K. Changes in cancer detection and false-positive recall in mammography using artificial intelligence: A retrospective, multireader study. Lancet Digit. Health 2020, 2, e138–e148.

- Nikitin, V.; Filatov, A.; Bagotskaya, N.; Kil, I.; Lossev, I.; Losseva, N. Improvement in ROC curves of readers with next generation of mammography. ECR 2014.

- Gülsün, M.; Demirkazık, F.B.; Arıyürek, M. Evaluation of breast microcalcifications according to Breast Imaging Reporting and Data System criteria and Le Gal’s classification. Eur. J. Radiol. 2003, 47, 227–231.

- Hofvind, S.; Iversen, B.F.; Eriksen, L.; Styr, B.M.; Kjellevold, K.; Kurz, K.D. Mammographic morphology and distribution of calcifications in ductal carcinoma in situ diagnosed in organized screening. Acta Radiol. 2011, 52, 481–487.

- Freer, T.W.; Ulissey, M.J. Screening mammography with computer-aided detection: Prospective study of 12,860 patients in a community breast center. Radiology 2001, 220, 781–786.

- Baum, F.; Fischer, U.; Obenauer, S.; Grabbe, E. Computer-aided detection in direct digital full-field mammography: Initial results. Eur. Radiol. 2002, 12, 3015–3017.

- Lehman, C.D.; Wellman, R.D.; Buist, D.S.; Kerlikowske, K.; Tosteson, A.N.; Miglioretti, D.L.; Breast Cancer Surveillance Consortium. Diagnostic accuracy of digital screening mammography with and without computer-aided detection. JAMA Intern. Med. 2015, 175, 1828–1837.

- Fenton, J.J.; Taplin, S.H.; Carney, P.A.; Abraham, L.; Sickles, E.A.; D’Orsi, C.; Berns, E.A.; Cutter, G.; Hendrick, R.E.; Barlow, W.E.; et al. Influence of computer-aided detection on performance of screening mammography. N. Engl. J. Med. 2007, 356, 1399–1409.

- Rodríguez-Ruiz, A.; Krupinski, E.; Mordang, J.J.; Schilling, K.; Heywang-Köbrunner, S.H.; Sechopoulos, I.; Mann, R.M. Detection of breast cancer with mammography: Effect of an artificial intelligence support system. Radiology 2019, 290, 305–314.

- Watanabe, A.T.; Lim, V.; Vu, H.X.; Chim, R.; Weise, E.; Liu, J.; Bradley, W.G.; Comstock, C.E. Improved cancer detection using artificial intelligence: A retrospective evaluation of missed cancers on mammography. J. Digit. Imaging 2019, 32, 625–637.

- Schönenberger, C.; Hejduk, P.; Ciritsis, A.; Marcon, M.; Rossi, C.; Boss, A. Classification of mammographic breast microcalcifications using a deep convolutional neural network: A BI-RADS–based approach. Invest. Radiol. 2021, 56, 224–231.

- Kim, M.J.; Kim, E.-K.; Kwak, J.Y.; Son, E.J.; Youk, J.H.; Choi, S.H.; Han, M.; Oh, K.K. Characterization of microcalcification: Can digital monitor zooming replace magnification mammography in full-field digital mammography? Eur. Radiol. 2009, 19, 310–317.

| Characteristic | Benign (n = 286) | Malignant (n = 149) | Total (n = 435) | p-Value |
|---|---|---|---|---|
| Age (Years), Mean ± SD | 47.8 ± 8.6 | 50.1 ± 9.6 | 48.7 ± 9.0 | 0.01 |
| Extent (cm), Mean ± SD | 1.8 ± 1.6 | 2.5 ± 2.0 | 2.0 ± 1.8 | <0.001 |
| Symptomatic | 15 (5.2) | 11 (7.4) | 26 (6.0) | 0.37 |
| Cancer risk factor | | | | |
| Menopausal | 94 (32.9) | 60 (40.3) | 154 (35.4) | 0.13 |
| Personal history of HRT | 25 (8.7) | 19 (12.8) | 44 (10.1) | 0.19 |
| History of breast cancer | 41 (14.3) | 16 (10.7) | 57 (13.1) | 0.29 |
| Family history of breast cancer | 21 (7.3) | 19 (12.8) | 40 (9.2) | 0.06 |
| Dense breast | 263 (92.0) | 123 (82.6) | 386 (88.7) | 0.003 |
| Calcification morphology | | | | <0.001 |
| Amorphous | 195 (68.2) | 43 (28.9) | 238 (54.7) | <0.001 |
| Coarse heterogeneous | 50 (17.5) | 24 (16.1) | 74 (17.0) | 0.72 |
| Fine pleomorphic | 37 (12.9) | 64 (43.0) | 101 (23.2) | <0.001 |
| Fine linear | 4 (1.4) | 18 (12.1) | 22 (5.1) | <0.001 |
| Calcification distribution | | | | 0.001 |
| Diffuse | 1 (0.4) | 1 (0.7) | 2 (0.5) | 1 |
| Regional | 54 (18.9) | 28 (18.8) | 82 (18.9) | 0.98 |
| Grouped | 194 (67.8) | 80 (53.7) | 274 (63.0) | 0.004 |
| Linear | 1 (0.4) | 5 (3.4) | 6 (1.4) | 0.02 |
| Segmental | 36 (12.6) | 35 (23.5) | 71 (16.3) | 0.004 |
| Final assessment (category) | | | | <0.001 |
| Category 4a | 243 (85.0) | 69 (46.3) | 312 (71.7) | <0.001 |
| Category 4b | 42 (14.7) | 45 (30.2) | 87 (20.0) | <0.001 |
| Category 4c | 1 (0.4) | 29 (19.5) | 30 (6.9) | <0.001 |
| Category 5 | 0 (0.0) | 6 (4.0) | 6 (1.4) | 0.002 |
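Each binary row in the table above compares a proportion between the benign and malignant groups. The statistical tests used are not described in this section, so the sketch below is an assumption: it applies Pearson's chi-square test without continuity correction to the "Symptomatic" row, using only the Python standard library. For one degree of freedom the upper-tail probability of the chi-square distribution equals erfc(√(x/2)), which avoids any third-party dependency. The helper name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for the 2x2 table

                   feature   no feature
        benign        a          b
        malignant     c          d

    Returns (statistic, p-value) with 1 degree of freedom.
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    stat = n * (a * d - b * c) ** 2 / denom
    # With 1 degree of freedom, P(X > stat) = erfc(sqrt(stat / 2)).
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


# "Symptomatic" row: 15 of 286 benign vs. 11 of 149 malignant lesions.
stat, p = chi_square_2x2(15, 286 - 15, 11, 149 - 11)
print(f"chi2 = {stat:.3f}, p = {p:.2f}")
```

For this row the sketch gives p ≈ 0.37, in line with the table. Rows with very small counts (for example, the Diffuse and Linear distribution rows) would ordinarily call for Fisher's exact test instead, so this sketch is not expected to reproduce every p-value shown.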

| Performer | AUC (95% CI) | p-Value | Cutoff Value | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|
| Malignancy score | | | | | |
| Radiologist | 0.722 (0.677–0.763) | 0.393 | 43.5 | 54.4 | 89.2 |
| AI-CAD | 0.745 (0.701–0.785) | | 38.03 | 69.1 | 69.01 |
| BI-RADS Category | | | | | |
| Radiologist | 0.710 (0.665–0.752) | 0.758 | Category 4a | 53.7 | 85 |
| AI-CAD | 0.718 (0.673–0.760) | | Category 4b | 59.1 | 78.2 |
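The radiologist's BI-RADS row can be checked against the final-assessment counts in the lesion-characteristics table. Reading "Category 4a" as the cutoff means that lesions above 4a are called positive: 80 of 149 malignant lesions (45 + 29 + 6 in categories 4b, 4c, and 5) are positive and 243 of 286 benign lesions are negative, which gives the 53.7% sensitivity and 85% specificity shown. The sketch below reproduces that arithmetic and also computes an empirical (Mann-Whitney) AUC from the same counts, counting ties as one half. That the authors computed AUC this way is an assumption, although the result agrees with the reported 0.710; the constant and function names are hypothetical.

```python
# Final-assessment counts from the lesion-characteristics table.
CATEGORIES = ["4a", "4b", "4c", "5"]  # ordered from least to most suspicious
BENIGN = [243, 42, 1, 0]
MALIGNANT = [69, 45, 29, 6]


def sens_spec_above(cutoff: str) -> tuple[float, float]:
    """Call a lesion positive when its category is above `cutoff`.

    Returns (sensitivity %, specificity %).
    """
    k = CATEGORIES.index(cutoff) + 1  # index of the first category counted as positive
    sensitivity = 100 * sum(MALIGNANT[k:]) / sum(MALIGNANT)
    specificity = 100 * sum(BENIGN[:k]) / sum(BENIGN)
    return sensitivity, specificity


def empirical_auc(benign: list[int], malignant: list[int]) -> float:
    """Mann-Whitney AUC for ordinal data: P(M > B) + 0.5 * P(M == B)."""
    wins = 0.0
    for i, m in enumerate(malignant):
        lower = sum(benign[:i])  # benign lesions in less suspicious categories
        wins += m * (lower + 0.5 * benign[i])  # ties in the same category count half
    return wins / (sum(benign) * sum(malignant))


sens, spec = sens_spec_above("4a")
print(f"sensitivity {sens:.1f}%, specificity {spec:.1f}%")
print(f"AUC {empirical_auc(BENIGN, MALIGNANT):.3f}")
```

With these counts the sketch prints a sensitivity of 53.7%, a specificity of 85.0%, and an AUC of 0.710. The AI-CAD rows cannot be checked the same way, because per-lesion AI-CAD scores are not given in this section.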

| Category | AUC (95% CI) | p-Value | Cutoff Value | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|
| Radiologist | 0.710 (0.665–0.752) | | Category 4a | 53.7 | 85 |
| Adjusted at 2% cutoff | 0.726 (0.682–0.768) | 0.026 | Category 4b | 53 | 85 |
| Adjusted at 10% cutoff | 0.744 (0.701–0.785) | 0.014 | Category 4b | 53.4 | 87.1 |
| Adjusted at 38.03% cutoff | 0.756 (0.713–0.796) | 0.013 | Category 4b | 46.33 | 92.3 |
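The table above reports radiologists' categories adjusted at AI-CAD malignancy score cutoffs of 2%, 10%, and 38.03%, but the adjustment rule itself is not described in this section. Purely to illustrate how a score cutoff can re-map ordinal BI-RADS categories, the sketch below assumes a simple hypothetical rule: move one step up the scale when the AI-CAD score is at or above the cutoff and one step down otherwise, where BI-RADS category 3 (probably benign) is the lowest step. This is not presented as the authors' method; the function name, the one-step rule, and the example lesions are all made up.

```python
# Ordered BI-RADS assessment categories, least to most suspicious.
SCALE = ["3", "4a", "4b", "4c", "5"]


def adjust_category(category: str, ai_score: float, cutoff: float) -> str:
    """Hypothetical one-step rule: upgrade if the AI-CAD score (%) is at or
    above `cutoff`, otherwise downgrade, clamped to the ends of SCALE."""
    i = SCALE.index(category)
    step = 1 if ai_score >= cutoff else -1
    return SCALE[min(max(i + step, 0), len(SCALE) - 1)]


# Made-up lesions for illustration: (radiologist category, AI-CAD score in %).
lesions = [("4a", 12.5), ("4a", 55.0), ("4b", 40.1), ("5", 91.0)]
for cat, score in lesions:
    print(cat, score, "->", adjust_category(cat, score, cutoff=38.03))
```

Lowering the cutoff (for example, from 38.03% to 2%) makes upgrades more frequent under this rule, which is one way a cutoff choice trades sensitivity against specificity; whether the study's rule behaves the same way cannot be determined from this section.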

| Study | Purpose | AI Method | Result |
|---|---|---|---|
| Mayo et al. [13] | Determine whether AI-CAD reduces false-positive marks per image | Deep learning | Significant reduction in false marks with AI-CAD for calcifications (83%) and masses (56%), with no reduction in sensitivity |
| Wang et al. [17] | Improve the diagnostic accuracy for microcalcifications with deep learning-based models | Deep learning | Accuracy increased with a combinatorial approach that detected microcalcifications and masses simultaneously |
| Cai et al. [18] | Characterize calcifications using deep learning-derived and handcrafted descriptors | CNN | Classification precision of 89.32% and sensitivity of 86.89% for microcalcifications using the filtered deep features |
| Lei et al. [19] | Develop a radiomic model for diagnosing BI-RADS category 4 calcifications | LASSO algorithm | A radiomic nomogram combining six radiomic features and menopausal status showed strong discriminative ability (AUC 0.80) |
| Liu et al. [20] | Investigate deep learning for predicting malignancy of BI-RADS category 4 microcalcifications | Deep learning | The combined model performed non-inferiorly to senior radiologists and outperformed junior radiologists |
| Kim et al. [22] | Evaluate whether an AI algorithm can improve the accuracy of breast cancer diagnosis | Deep CNN | AUC of AI (0.940) vs. average of radiologists (0.810); radiologists’ AUC improved with AI (from 0.810 to 0.881) |
| Rodríguez-Ruiz et al. [30] | Compare the performance of radiologists with and without an AI system | Deep CNN | AUC with AI (0.89) higher than without AI (0.87); sensitivity with AI (86%) higher than without AI (83%) |
| Watanabe et al. [31] | Determine the efficacy of AI-CAD in improving radiologists’ sensitivity for originally missed cancers | Deep learning | Statistically significant improvement in radiologists’ accuracy and sensitivity for detecting originally missed cancers |
| Schönenberger et al. [32] | Investigate whether a deep convolutional neural network can accurately classify microcalcifications | Deep CNN | Accuracy of 39.0% for the BI-RADS 4 cohort, 80.9% for the BI-RADS 5 cohort, and 76.6% for the BI-RADS 4 + 5 cohort |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Do, Y.A.; Jang, M.; Yun, B.L.; Shin, S.U.; Kim, B.; Kim, S.M. Diagnostic Performance of Artificial Intelligence-Based Computer-Aided Diagnosis for Breast Microcalcification on Mammography. Diagnostics 2021, 11, 1409. https://doi.org/10.3390/diagnostics11081409
