1. Introduction

The causes of metal artifacts are complex, and their appearance can vary significantly depending on the shape and density of the metal objects. In medical applications, metal objects can include metallic orthopedic hardware (e.g., surgical pins and clips) or equipment attached to the patient’s body (e.g., biopsy needles) [1]. A metal object can cause beam hardening, partial volume effects, aliasing, small-angle scatter, under-range readings in the data acquisition electronics, or overflow of the dynamic range in the reconstruction process. Previous studies on digital tomosynthesis (DT) have proposed several artifact compensation approaches to minimize metal artifacts [2,3,4].

Dual-energy DT (DE-DT) has recently become available. One of its inherent capabilities is the generation of synthesized monochromatic images at different energy levels (keV) from a single data acquisition [3,4]. Generating monochromatic images at higher energy levels (e.g., 140 keV) can suppress beam-hardening artifacts. However, DT devices capable of dual-energy acquisition are rare in clinical practice, and DT is generally performed with single-energy acquisition. Therefore, for versatility, an improved method is needed to achieve metal artifact reduction (MAR) in polychromatic radiography.

Behind highly attenuating metal objects, nearly all X-ray photons are attenuated and few reach the detector, which drives the signal below the range of the data acquisition electronics. Combined with electronic noise in the data acquisition system, this often yields near-zero or negative readings in the measured signal after offset correction. Imperfect treatment of these signals before the logarithmic operation biases the projection estimate, producing image artifacts similar to those caused by photon starvation. Combined with this bias and the beam-hardening effects caused by metals, both shading and streaking artifacts appear in the reconstructed image. The effect of dose differences on metal artifacts related to such corrupted readings has been reported [5,6].
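The bias can be illustrated with a toy conversion from detector readings to projection values. This is a minimal sketch, assuming readings are converted with p = -ln(I/I0) and that non-positive readings are simply clamped to a small floor before the logarithm; `line_integral`, `I0`, and the floor values are hypothetical and do not describe any particular scanner's correction.

```python
import math

I0 = 1.0e5  # hypothetical unattenuated (air) reading


def line_integral(reading, i0=I0, floor=1.0):
    """Return the projection value p = -ln(I / I0) for one detector channel.

    Behind metal, offset-corrected readings can be zero or negative, where
    the logarithm is undefined. This sketch clamps them to `floor` (an
    assumed, deliberately naive treatment), so the result for such channels
    is set by an arbitrary constant rather than by the object.
    """
    return -math.log(max(reading, floor) / i0)


# Soft-tissue channel: the reading is far above the floor, so the floor is irrelevant.
print(round(line_integral(2.0e4), 3))             # 1.609
# Metal channel with a negative reading: the projection value depends only on the floor.
print(round(line_integral(-3.0, floor=1.0), 3))   # 11.513
print(round(line_integral(-3.0, floor=0.1), 3))   # 13.816
```

Changing the floor by a factor of 10 shifts the projection value of the metal channel by ln 10 (about 2.3) while leaving the soft-tissue channel untouched; after reconstruction, such channel-dependent errors show up as shading and streaks.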

Many studies have been conducted to overcome metal-induced image artifacts [1,7,8,9,10,11]. For projection samples that pass through highly attenuating metal objects, the measured values are unreliable and must be replaced or significantly modified. Projection inpainting is one approach to replacing such erroneous data [8,9,10,11]. Generally, this approach first identifies the projection channels corrupted by the metallic objects and then replaces these channel readings with projection values estimated from the nonmetallic portion of the scanned object. The new projection samples allow the reconstruction of a metal-free image volume of the scanned object.
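The two inpainting steps can be sketched for a single detector row using linear interpolation, the simplest replacement estimator; the cited inpainting studies use more elaborate estimates. This is a minimal sketch, assuming the corrupted channels are already known as a Boolean metal-trace mask; `inpaint_row` is a hypothetical helper.

```python
def inpaint_row(row, metal):
    """Replace metal-corrupted samples of one projection row by linear
    interpolation between the nearest uncorrupted neighbours.

    row   -- list of projection values along one detector row
    metal -- list of bools, True where the channel lies in the metal trace
    """
    out = list(row)
    n = len(row)
    i = 0
    while i < n:
        if not metal[i]:
            i += 1
            continue
        # Find the extent [start, end) of this run of corrupted samples.
        start = i
        while i < n and metal[i]:
            i += 1
        end = i
        left = out[start - 1] if start > 0 else None
        right = out[end] if end < n else None
        for k in range(start, end):
            if left is None and right is None:
                out[k] = 0.0  # no reliable sample anywhere in the row
            elif left is None:
                out[k] = right  # constant extrapolation at the row edge
            elif right is None:
                out[k] = left
            else:
                # Straight line from the last good sample to the next good one.
                out[k] = left + (right - left) * (k - start + 1) / (end - start + 1)
    return out


print(inpaint_row([1.0, 2.0, 9.0, 9.0, 5.0, 6.0],
                  [False, False, True, True, False, False]))
# [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
```

Interpolating straight across the trace ignores any structure hidden behind the metal, which is one source of the inconsistency between synthetic and actual projections noted below.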

This approach faces several challenges [12]: inconsistency between the synthetic and actual projections, loss of low-contrast objects in the inpainted projection due to the influence of the synthetic projection, and degraded spatial resolution in the final reconstructed images. To meet these challenges, advanced image-processing techniques are often used. However, all inpainting-type algorithms have limitations: when a large amount of information is missing, the signal estimation process cannot fully restore it, which leads to residual artifacts.

The development of deep learning for medical image reconstruction has led to recent advances in MAR using neural networks. Park et al. [13] applied U-Net [14] in the sinogram domain to correct beam-hardening artifacts in polychromatic computed tomography (CT). Zhang et al. [15] used a convolutional neural network (CNN) [16] to generate a prior image with fewer artifacts, which was then used to correct the metal-corrupted regions of the sinogram. Although these methods have shown reasonable MAR results, they have limited ability to handle new artifacts remaining in the reconstructed CT image. Motivated by the success of deep learning in solving ill-posed inverse problems in image processing [17,18,19], researchers have recently formulated MAR as an image restoration problem that directly improves the quality of reconstructed CT images, using image-to-image translation networks [20,21,22,23,24] and conditional generative adversarial networks (cGAN or pix2pix) [25] to further reduce metal artifacts [26]. GANs have also improved the recognition of lesions and tissues in medical images. For example, Han et al. reported that combining noise-to-image and image-to-image GANs improves the detection accuracy of brain tumors [27], and Sandfort et al. reported that cycle-GAN [28] improves tissue segmentation accuracy on CT images [29].

In general, the metal mask and metal trace regions are small and occupy only a small portion of the whole image, so the metal trace information in the network input can be weakened by the network's down-sampling operations. Therefore, we used the mask pyramid U-Net (mask pyramid network [MPN]) [30] to retain the metal trace information at each layer, explicitly enabling the network to extract more discriminative features for restoring the missing information in the metal trace region. Because GANs have been reported to be useful for noise reduction, including MAR [31], they may also be useful for improving image quality (reducing artifacts) in DT.
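The idea of carrying the metal trace through every scale can be sketched in one dimension. This is a minimal sketch, assuming the mask is down-sampled alongside the feature maps so that a copy is available at each encoder level for concatenation; the helper names and the choice between max and average pooling are assumptions for illustration, not details of the MPN in [30].

```python
def downsample(mask, mode="max"):
    """Halve a 1-D metal mask using non-overlapping windows of two samples.

    Assumes an even length; a trailing odd sample would be dropped.
    """
    pairs = [mask[i:i + 2] for i in range(0, len(mask) - 1, 2)]
    if mode == "max":
        return [max(p) for p in pairs]  # any metal in the window marks it as metal
    return [sum(p) / len(p) for p in pairs]  # fraction of the window that is metal


def mask_pyramid(mask, levels, mode="max"):
    """Return [mask, mask/2, mask/4, ...], one entry per encoder scale."""
    pyramid = [mask]
    for _ in range(levels):
        pyramid.append(downsample(pyramid[-1], mode))
    return pyramid


# A one-sample-wide metal trace in a 16-sample row (hypothetical data).
trace = [0] * 16
trace[5] = 1
for level in mask_pyramid(trace, 3, mode="max"):
    print(level)
print(mask_pyramid(trace, 3, mode="mean")[-1])  # [0.125, 0.0]
```

With max pooling the trace survives every halving; with average pooling its weight halves at each level, which mirrors how a thin trace can fade as features are down-sampled unless the mask is re-injected at each scale.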

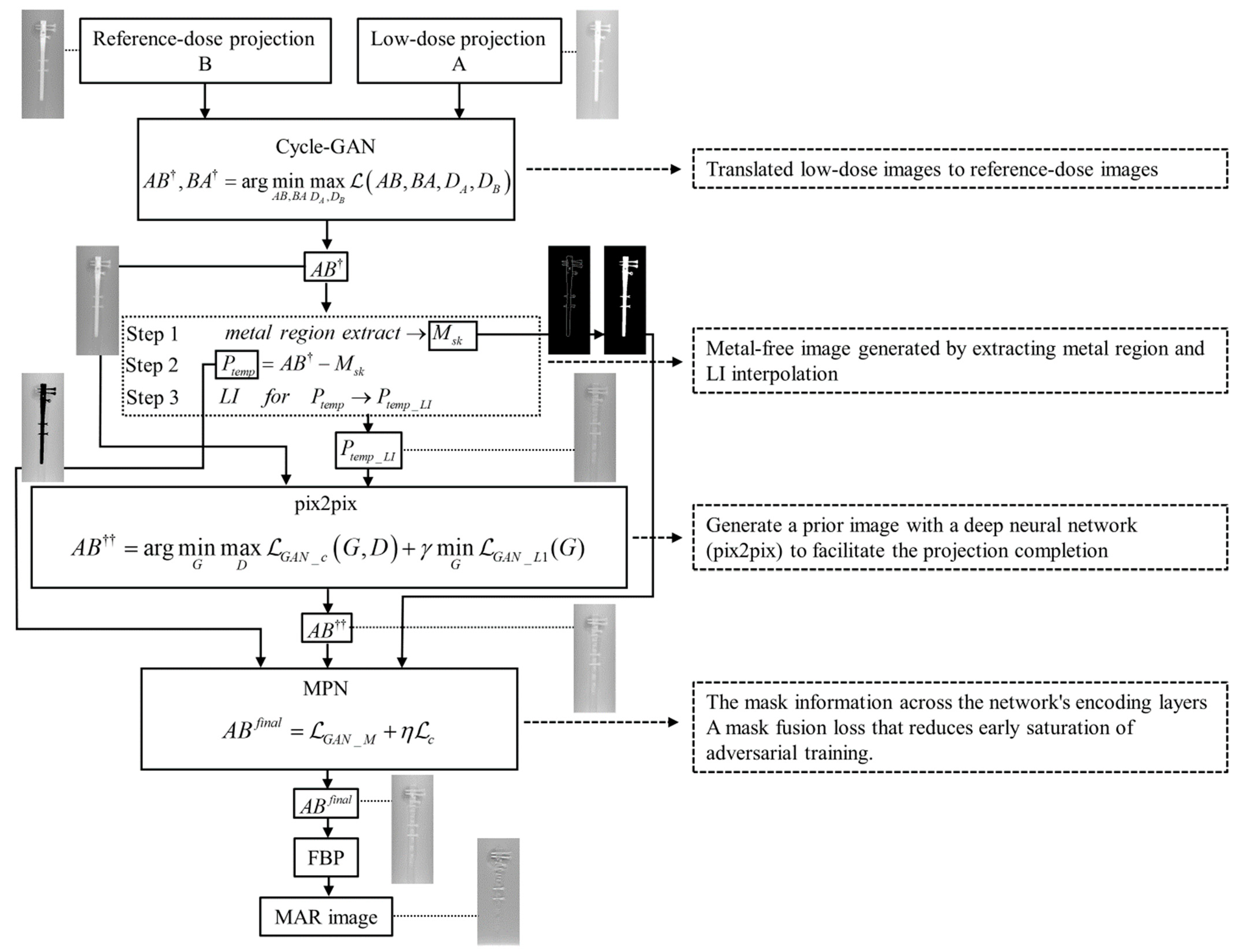

In this paper, we present a novel projection-based cross-domain learning framework for generalizable MAR. Unlike previous image restoration-based solutions, we formulated MAR as a deep learning-based projection completion task and trained a combination of multiple GANs (cycle-GAN, pix2pix, and MPN) to restore the unreliable projections within the metal region. A metal-free prior image can provide a good estimate of the missing projections [23]. To ease pix2pix learning and improve completion quality, we trained another network, cycle-GAN, to generate a good prior image with fewer metal artifacts and to guide pix2pix learning by standardizing the low-dose projection of the prior image. Moreover, we designed a novel mask pyramid projection learning strategy that fully uses the prior projection guidance to improve the continuity of projection completion, thereby alleviating new artifacts in the reconstructed DT images. The final DT image was then reconstructed from the completed projection using the conventional filtered back projection (FBP) algorithm [32]. Compared with previous MAR approaches for DT [2,3,4], the whole framework is trained efficiently so that each network learns complementarily from the prior processed images; as a result, prior image generation and deep projection completion are learned collaboratively and benefit from each other. Our proposed MAR algorithm, a hybrid GAN combining cycle-GAN, pix2pix, and MPN (cycle-GAN_pix2pix_MPN [CGpM-MAR]), is described in the Methods section.
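The completion step can be summarized as: mask out the metal trace, let a network estimate the missing values, and keep the measured data elsewhere before FBP. This is a minimal sketch, assuming the network returns a full projection row and that only metal-trace samples are replaced; `complete_projection` and `predict` are hypothetical, and the lambda below merely stands in for the trained cycle-GAN/pix2pix/MPN pipeline.

```python
def complete_projection(measured, metal, predict):
    """Assemble a completed projection row before reconstruction.

    measured -- measured projection values for one detector row
    metal    -- True where the sample lies in the metal trace
    predict  -- stand-in for a trained completion network; receives the
                projection with the metal trace zeroed out, plus the mask,
                and returns an estimate for every sample
    """
    masked = [0.0 if m else p for p, m in zip(measured, metal)]
    estimate = predict(masked, metal)
    # Only samples inside the metal trace are replaced; reliable measured
    # samples outside it are passed through unchanged.
    return [e if m else p for p, e, m in zip(measured, estimate, metal)]


# Hypothetical data: the third sample is corrupted by metal.
measured = [1.0, 2.0, 50.0, 4.0]
metal = [False, False, True, False]
# A prior projection (e.g., forward-projected from a prior image) stands in
# for the network output here.
prior_projection = [1.1, 2.1, 3.0, 3.9]
print(complete_projection(measured, metal, lambda x, m: prior_projection))
# [1.0, 2.0, 3.0, 4.0]
```

Keeping measured samples outside the trace limits the network's influence to the region where data are actually missing; the continuity at the trace boundary then depends on how well the estimate matches its neighbours.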

In addition, we investigated the relationship between dose reduction and MAR quality. Our findings suggest that the CGpM-MAR algorithm may make it possible to reduce the exposure dose while improving image quality. This study presents the development process and a basic evaluation of the method.

5. Discussion

This study showed that the CGpM-MAR algorithm performed adequately overall while reducing the radiation dose by 55%. The combination of networks trained in this algorithm yielded good results regardless of the type of metal present in the prosthesis phantom. In addition, the algorithm successfully removed both low- and high-frequency artifacts from the images, and it was particularly useful for images containing a large number of artifacts. Therefore, this algorithm is a promising new option for prosthetic imaging, as it produced artifact-reduced images at reduced radiation doses that were far superior to images processed using conventional algorithms. The flexibility of CGpM-MAR in choosing imaging parameters according to the desired final images and prosthetic imaging conditions should increase its usability.

The projection-space combination of multiple trained networks described here formulates MAR as a deep learning-based projection completion problem, which improves the generalization and robustness of the framework. Because directly regressing accurate missing projection data is difficult [23], we incorporated a prior projection image generation step and combined multiple networks with a projection completion strategy. This method can improve the continuity of the projection values at the boundary of metal traces and alleviate new artifacts, which are common drawbacks of projection completion-based MAR methods. Therefore, we believe that CGpM-MAR could effectively reduce metal artifacts in clinical practice.

The ability of CGpM-MAR to produce MAR images while reducing the radiation dose by approximately 55% (Figures 5 and 6) may be due to the benefits of its first stage, cycle-GAN. Training an image-to-image translation framework requires fully paired images, which are often difficult to obtain. Cycle-GAN allows translation between domains that are not paired and can therefore overcome this problem. Cycle-GAN uses three types of losses. First, the cycle-consistency loss measures the difference between an original image and the image obtained by translating it into the other domain and back into the original domain. Second, the adversarial loss encourages the translated image to look real. Third, the identity loss encourages each generator to leave an image that is already in its target domain unchanged, preserving pixel values. The two generators use a U-Net [14] architecture, and the two discriminators use a PatchGAN-based architecture [39]. By transferring the style of one domain to the other during translation, the low-dose projection image can be mapped to the reference-dose projection image.
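The three losses can be written compactly. This is a minimal sketch in plain Python, assuming L1 distances for the cycle-consistency and identity terms and a least-squares adversarial term averaged over PatchGAN patch scores, as in the original cycle-GAN formulation; the function names, the toy generators, and the weights `lam_cyc=10.0` and `lam_id=5.0` are assumptions, not the settings used in this study.

```python
def l1(a, b):
    """Mean absolute error between two equally long lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def lsgan_generator_term(patch_scores):
    """Least-squares adversarial term over PatchGAN outputs: pushes the
    discriminator's score for every patch of a generated image toward 1 (real)."""
    return sum((s - 1.0) ** 2 for s in patch_scores) / len(patch_scores)


def cycle_gan_generator_loss(G, F, x, y, patch_scores, lam_cyc=10.0, lam_id=5.0):
    """Combine the three cycle-GAN loss terms for the generators.

    G maps domain X (e.g., low-dose projections) to Y (reference-dose);
    F maps Y back to X. patch_scores are discriminator outputs on generated
    images, supplied directly here instead of computed by a network.
    """
    cycle = l1(F(G(x)), x) + l1(G(F(y)), y)  # X -> Y -> X and Y -> X -> Y
    identity = l1(G(y), y) + l1(F(x), x)     # target-domain images should pass unchanged
    adversarial = lsgan_generator_term(patch_scores)
    return adversarial + lam_cyc * cycle + lam_id * identity, cycle, identity


# Toy generators that are exact inverses of each other (hypothetical).
G = lambda v: [2.0 * t for t in v]
F = lambda v: [t / 2.0 for t in v]
total, cycle, identity = cycle_gan_generator_loss(
    G, F, x=[1.0, 2.0], y=[2.0, 4.0], patch_scores=[0.5, 1.0, 0.0, 1.0])
print(cycle, identity, total)  # 0.0 3.75 19.0625
```

Because the toy generators invert each other, the cycle term is zero, yet they rescale intensities, so the identity term is not; this is the behavior the identity loss is meant to penalize.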

The MPN, used as the final learning stage in CGpM-MAR, also contributed to MAR, because it introduces adversarial learning into the projection domain and thereby recovers more structurally and anatomically plausible information in the metal region. In addition, the MPN extracts geometric information at different scales, and a mask fusion loss that penalizes premature saturation was introduced; together, these make learning more robust to different shapes of metal implants.

In DT-MAR processing without deep learning [2], voxels containing artifacts tended to have higher values than neighboring artifact-free voxels, so they stood out against the background and affected the appearance of the prosthesis. Accordingly, these residual artifacts are conspicuous when images processed with the FBP method are compared with non-artifact-reduced images. Therefore, DT-MAR processing based on polychromatic radiographic imaging has a limited ability to reduce metal artifacts.

Deep learning has recently been reported to improve DT image quality by reducing noise and metal artifacts [4,31]. Although deep learning enables noise and radiation-dose reduction in breast DT, no studies have reported radiation-dose reduction in combination with MAR. MAR without deep learning achieves a radiation-dose reduction of approximately 20% [6]; applying deep learning can therefore further improve both MAR and radiation-dose reduction.

Our CGpM-MAR algorithm has some limitations. First, for versatility, this study aimed to reduce DT metal artifacts with single-energy (polychromatic) acquisition. When DE-DT acquisition becomes widespread, we would like to apply CGpM-MAR to monochromatic radiographic imaging. Second, because CGpM-MAR combines multiple deep learning processes, generating the final image takes time; faster processing hardware is therefore desirable. Third, the training data were obtained from a phantom. Image quality could be improved further by acquiring data under various clinically relevant conditions, such as different prosthesis sizes and positional relationships between the prosthesis and normal structures. Moreover, knowledge of a wider variety of structural patterns is needed to process complicated structures.

Although the results of this study are limited to a prosthesis phantom, the evaluations were performed under conditions as close as possible to the actual biological composition in vivo. Furthermore, this approach will accelerate the clinical application of radiation-dose reduction and image quality improvement, which are key issues in X-ray imaging. We believe that the CGpM-MAR algorithm will help optimize acquisition protocols and radiation-dose reduction in future X-ray imaging and improve the accuracy of medical images.

Recently, some studies have investigated GAN applications with and without MAR [40,41]. These studies applied GANs to the reconstructed image to improve the accuracy of the tomographic image in the in-plane and longitudinal directions. Although the images processed in this study were projection images, we expect that the image quality (MAR) of the reconstructed image could be improved further by applying GANs in the three-dimensional direction or by generating multiple slices from the input slice images. In addition, investigating the simultaneous use of GANs and metal artifact reduction in three-dimensional data would be an interesting direction for future research.