Review of Machine Learning Applications Using Retinal Fundus Images

Abstract

1. Introduction

2. Domain Knowledge

2.1. Fundus Image

2.2. Retinal Structure

2.3. Retinopathy

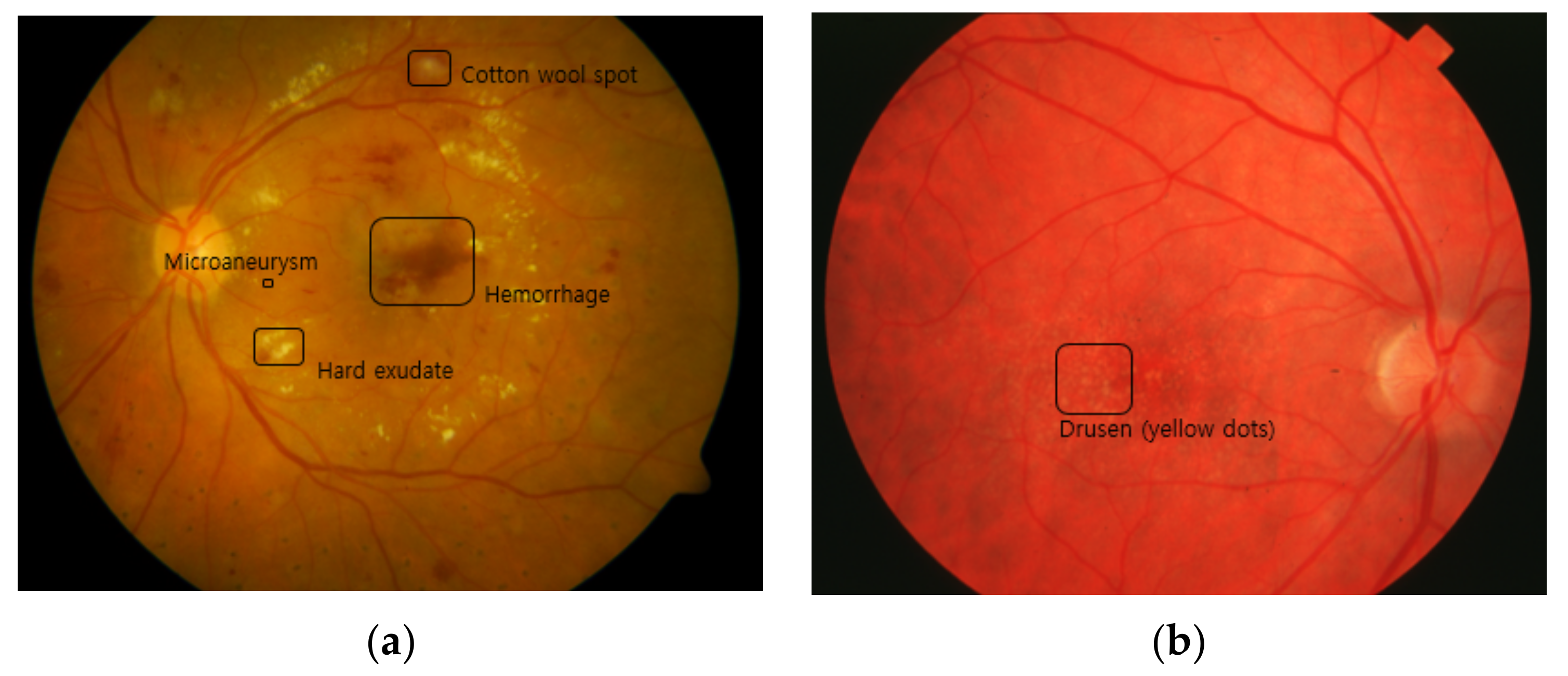

2.3.1. DR

- Microaneurysm (MA): The most typical lesion and the first visible sign of DR, caused by limited oxygen supply. It appears as small saccular structures, visible as round red spots with a diameter of 25 to 100 µm [27].

- Cotton wool spot (soft exudate): An acute sign of vascular insufficiency to an area of the retina, found in early DR. It appears as white patches on the retina that result from nerve fiber damage due to the occlusion of small arterioles, which causes axoplasmic material to accumulate within the nerve fiber layer [28].

- Hemorrhage: Retinal hemorrhage is bleeding from the blood vessels in the retina caused by high blood pressure or blockage in the arterioles. It ranges from the smallest dot to a massive sub-hyaloid hemorrhage. Its size, location, and shape provide clues about underlying systemic disorders such as DR and AMD [29].

- Hard exudate: Caused by increased vascular permeability, which lets fluid and lipoprotein leak from the blood vessels into the retina. It appears as small, sharply demarcated yellow or white patches in discrete, compact groups at the posterior pole [30].

- Neovascularization: When retinal vessel occlusion causes an oxygen shortage in a region of the retina, the vascular endothelium grows to compensate for the lack of oxygen. The new vessels can extend into the vitreous cavity and lead to vision impairment [31].

2.3.2. AMD

2.3.3. Glaucoma

3. Machine Learning Methods

3.1. Retinal Vessel Extraction Methods

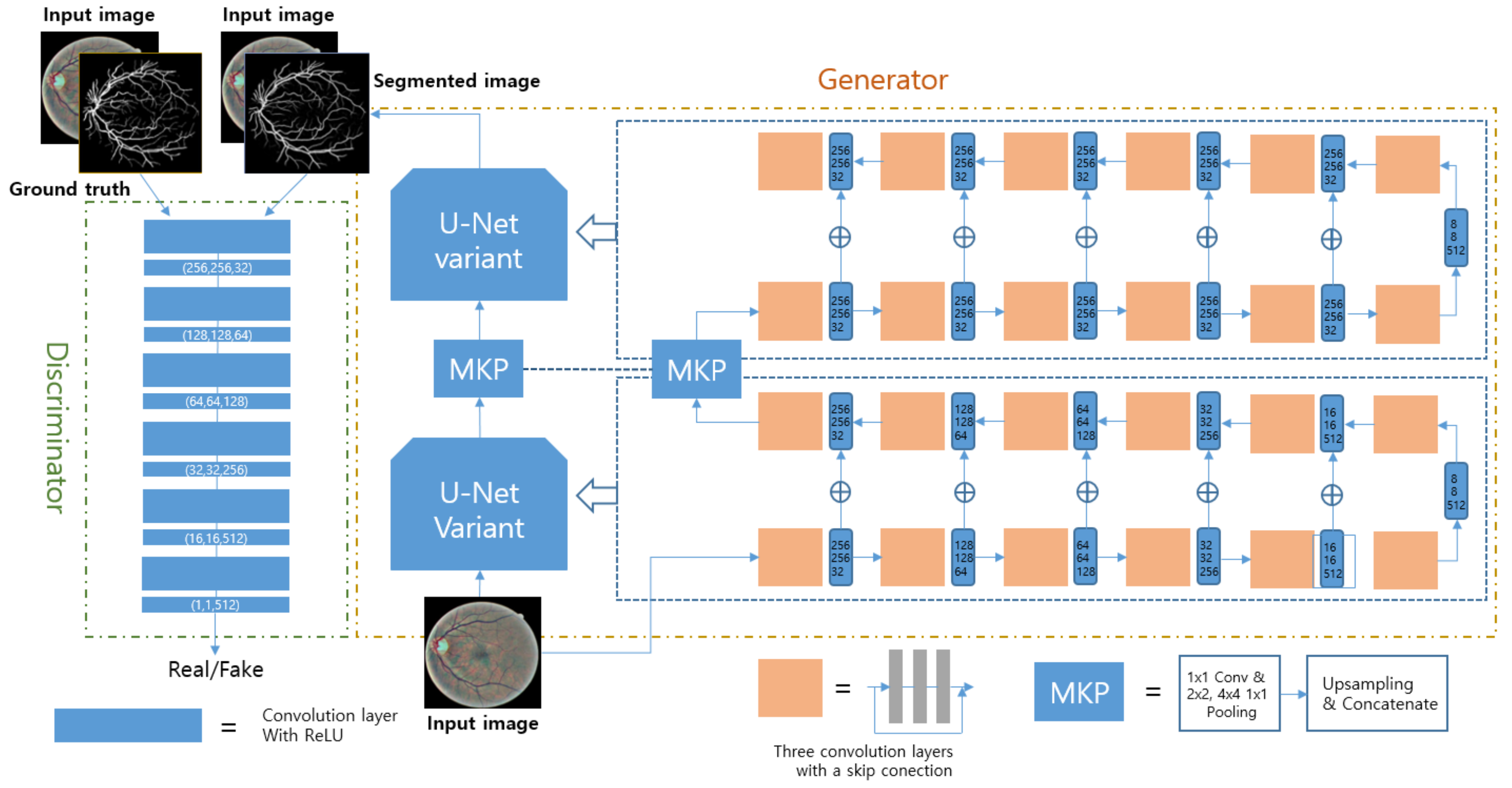

3.1.1. Deep Learning Methods for Retinal Vessel Segmentation

3.1.2. Other Machine Learning Methods for Retinal Vessel Segmentation

3.1.3. Machine Learning Methods for Retinal Vessel Classification

| Task | Reference | Dataset | Sensitivity (%) | Specificity (%) | Accuracy (%) |
|---|---|---|---|---|---|
| Vessel segmentation | [51] | DRIVE | 83.46 | 98.36 | 97.06 |
| Vessel segmentation | [51] | STARE | 83.24 | 99.38 | 98.76 |
| Vessel segmentation | [54] | DRIVE | 86.44 | 95.54 | 94.63 |
| Vessel segmentation | [54] | STARE | 82.54 | 96.47 | 95.32 |
| Vessel segmentation | [55] | DRIVE | 86.44 | 97.67 | 95.89 |
| Vessel segmentation | [55] | STARE | 83.25 | 97.46 | 95.02 |
| Vessel classification | [73] | DRIVE | 94.2 | 92.7 | 93.5 |
| Vessel classification | [73] | INSPIRE | 96.8 | 95.7 | 96.4 |
| Vessel classification | [73] | WIDE | 96.2 | 94.2 | 95.2 |
| Vessel classification | [74] | DRIVE | 95.0 | 91.5 | 93.2 |
| Vessel classification | [74] | INSPIRE | 96.9 | 96.6 | 96.8 |
| Vessel classification | [74] | WIDE | 92.3 | 88.2 | 90.2 |
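For readers less familiar with the three metrics reported in the table, the following is a minimal sketch of how sensitivity, specificity, and accuracy are commonly computed from a predicted binary vessel mask and a ground-truth mask. The function name `vessel_metrics` and the toy 4×4 masks are illustrative assumptions; they are not taken from the cited works, whose evaluation protocols (for example, restriction to the field-of-view mask) may differ.

```python
import numpy as np


def vessel_metrics(pred, truth):
    """Return (sensitivity, specificity, accuracy) in percent for two binary masks.

    Hypothetical helper for illustration only; assumes both masks contain
    at least one vessel pixel and one background pixel in the ground truth.
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    tp = np.sum(pred & truth)      # vessel pixels correctly detected
    tn = np.sum(~pred & ~truth)    # background pixels correctly rejected
    fp = np.sum(pred & ~truth)     # background pixels labeled as vessel
    fn = np.sum(~pred & truth)     # vessel pixels that were missed

    sensitivity = 100.0 * tp / (tp + fn)
    specificity = 100.0 * tn / (tn + fp)
    accuracy = 100.0 * (tp + tn) / (tp + tn + fp + fn)
    return sensitivity, specificity, accuracy


# Toy ground-truth and predicted masks (1 = vessel, 0 = background).
truth = np.array([[1, 1, 0, 0],
                  [0, 1, 0, 0],
                  [0, 0, 0, 0],
                  [0, 0, 0, 1]])
pred = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1]])

sens, spec, acc = vessel_metrics(pred, truth)
print(f"sensitivity={sens:.2f}%  specificity={spec:.2f}%  accuracy={acc:.2f}%")
```

Because vessel pixels usually make up a small fraction of a fundus image, accuracy and specificity tend to be high even for mediocre segmentations, which is one reason sensitivity is reported alongside them.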

3.2. Automation of Diagnosis and Screening Methods

3.2.1. DR

3.2.2. AMD

3.2.3. Glaucoma

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lakhani, P.; Sundaram, B. Deep Learning at Chest Radiography: Automated Classification of Pulmonary Tuberculosis by Using Convolutional Neural Networks. Radiology 2017, 284, 2.

- Lundervold, A.S.; Lundervold, A. An overview of deep learning in medical imaging focusing on MRI. Z. Med. Phys. 2019, 29, 102–127.

- Jo, T.; Nho, K.; Saykin, A.J. Deep Learning in Alzheimer’s Disease: Diagnostic Classification and Prognostic Using Neuroimaging Data. Front. Aging Neurosci. 2019, 11, 220.

- Cina, A.; Bassani, T.; Panico, M.; Luca, A.; Masharawi, Y.; Brayda-Bruno, M.; Galbusera, F. 2-step deep learning model for landmarks localization in spine radiographs. Sci. Rep. 2021, 11, 9482.

- Ranjbarzadeh, R.; Kasgari, A.B.; Ghoushchi, S.J.; Anari, S.; Naseri, M.; Bendechache, M. Brain tumor segmentation based on deep learning and an attention mechanism using MRI multi-modalities brain images. Sci. Rep. 2021, 11, 10930.

- Zeng, C.; Gu, L.; Liu, Z.; Zhao, S. Review of Deep Learning Approaches for the Segmentation of Multiple Sclerosis Lesions on Brain MRI. Front. Aging Neurosci. 2020, 14, 610967.

- Ebrahimkhani, S.; Jaward, M.H.; Cicuttini, F.M.; Dharmaratne, A.; Wang, Y.; Herrera, A.G.S. A review on segmentation of knee articular cartilage: From conventional methods towards deep learning. Artif. Intell. Med. 2020, 106, 101851.

- Cui, S.; Ming, S.; Lin, Y.; Chen, F.; Shen, Q.; Li, H.; Chen, G.; Gong, X.; Wang, H. Development and clinical application of deep learning model for lung nodules screening on CT images. Sci. Rep. 2020, 10, 13657.

- Frid-Adar, M.; Diamant, I.; Klang, E.; Amitai, M.; Goldberger, J.; Greenspan, H. GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification. Neurocomputing 2018, 321, 321–331.

- Dar, S.U.; Yurt, M.; Karacan, L.; Erdem, A.; Erdem, E.; Çukur, T. Image Synthesis in Multi-Contrast MRI with Conditional Generative Adversarial Networks. IEEE Trans. Med. Imaging 2019, 38, 2375–2388.

- Zhao, H.; Li, H.; Maurer-Stroh, S.M.; Cheng, L. Synthesizing retinal and neuronal images with generative adversarial nets. Med. Image Anal. 2018, 49, 14–26.

- Ilginis, T.; Clarke, J.; Patel, P.J. Ophthalmic imaging. Br. Med. Bull. 2014, 111, 77–88.

- Aumann, S.; Donner, S.; Fischer, J.; Müller, F. Optical Coherence Tomography (OCT): Principle and Technical Realization. In High Resolution Imaging in Microscopy and Ophthalmology; Bille, J.F., Ed.; Springer: Cham, Switzerland, 2019; pp. 59–85.

- Chu, A.; Squirrell, D.; Phillips, A.M.; Veghefi, E. Essentials of a Robust Deep Learning System for Diabetic Retinopathy Screening: A Systematic Literature Review. J. Ophthalmol. 2020, 2020, 8841927.

- Jiang, A.; Huang, Z.; Qui, B.; Meng, X.; You, Y.; Liu, X.; Liu, G.; Zhou, C.; Yang, K.; Maier, A.; et al. Comparative study of deep learning models for optical coherence tomography angiography. Biomed. Opt. Express 2020, 11, 1580–1597.

- O’Byrne, C.; Abbas, A.; Korot, E.; Keane, P.A. Automated deep learning in ophthalmology: AI that can build AI. Curr. Opin. Ophthalmol. 2021, 32, 406–412.

- Charry, O.J.P.; Gonzales, F.A. A systematic Review of Deep Learning Methods Applied to Ocular Images. Cienc. Ing. Neogranad. 2020, 30, 9–26.

- Pekala, M.; Joshi, N.; Liu, T.Y.A.; Bressler, N.M.; Debuc, D.C.; Burlina, P. OCT Segmentation via Deep Learning: A Review of Recent Work. In Proceedings of the Asian Conference on Computer Vision (ACCV), Perth, Australia, 2–6 December 2018; pp. 316–322.

- Li, T.; Bo, W.; Hu, C.; Kang, H.; Liu, H.; Wang, K.; Fu, H. Applications of deep learning in fundus images: A review. Med. Image Anal. 2021, 69, 101971.

- Badar, M.; Haris, M.; Fatima, A. Application of deep learning for retinal image analysis: A review. Comput. Sci. Rev. 2020, 35, 100203.

- Barros, D.M.S.; Moura, J.C.C.; Freire, C.R.; Taleb, A.C.; Valentim, R.A.M.; Morais, P.S.G. Machine learning applied to retinal image processing for glaucoma detection: Review and perspective. Biomed. Eng. Online 2020, 19, 20.

- Nuzzi, R.; Boscia, G.; Marolo, P.; Ficardi, F. The Impact of Artificial Intelligence and Deep Learning in Eye Diseases: A Review. Front. Med. 2021, 8, 710329.

- Chen, C.; Chuah, J.H.; Ali, R.; Wang, Y. Retinal Vessel Segmentation Using Deep Learning: A Review. IEEE Access 2021, 9, 111985–112004.

- Selvam, S.; Kumar, T.; Fruttiger, M. Retinal Vasculature development in health and disease. Prog. Retin. Eye Res. 2018, 63, 1–19.

- Kumar, V.; Abbas, A.; Aster, J. Robbins & Cotran Pathologic Basis of Disease, 8th ed.; Salamat, M.S., Ed.; Elsevier: Philadelphia, PA, USA, 2009; Volume 69, pp. 1616–1617.

- Microaneurysm. Available online: https://www.sciencedirect.com/topics/medicine-and-dentistry/microaneurysm (accessed on 1 August 2021).

- Cotton Wool Spots. Available online: https://www.sciencedirect.com/topics/medicine-and-dentistry/cotton-wool-spots (accessed on 1 August 2021).

- Retinal Hemorrhage. Available online: https://www.ncbi.nlm.nih.gov/books/NBK560777 (accessed on 1 August 2021).

- Esmann, V.; Lundbæk, L.; Madsen, P.H. Types of Exudates in Diabetic Retinopathy. J. Intern. Med. 1963, 174, 375–384.

- Neely, K.A.; Gardner, T.W. Ocular Neovascularization. Am. J. Pathol. 1998, 153, 665–670.

- Ministry of Health of New Zealand. Diabetic Retinal Screening, Grading, Monitoring and Referral Guidance; Ministry of Health: Wellington, New Zealand, 2016; pp. 9–15.

- Davis, M.D.; Gangnon, R.E.; Lee, L.Y.; Hubbard, L.D.; Klein, B.E.K.; Klein, R.; Ferris, F.L.; Bressler, S.B.; Milton, R.C. The Age-Related Eye Disease Study severity scale for age-related macular degeneration: AREDS Report No. 17. Arch. Ophthalmol. 2005, 123, 1484–1498.

- Seddon, J.M.; Sharma, S.; Adelman, R.A. Evaluation of the clinical age-related maculopathy staging system. Ophthalmology 2006, 113, 260–266.

- Thomas, R.; Loibl, K.; Parikh, R. Evaluation of a glaucoma patient. Indian J. Ophthalmol. 2011, 59, S43–S52.

- Weinreb, R.N.; Aung, T.; Medeiros, F.A. The Pathophysiology and Treatment of Glaucoma. JAMA 2014, 311, 1901–1911.

- Dong, L.; Yang, Q.; Zhang, R.H.; Wei, W.B. Artificial intelligence for the detection of age-related macular degeneration in color fundus photographs: A systematic review and meta-analysis. EClinicalMedicine 2021, 35, 100875.

- Mookiah, M.R.K.; Hogg, S.; MacGillivray, T.J.; Prathiba, V.; Pradeepa, R.; Mohan, V.; Anjana, R.M.; Doney, A.S.; Palmer, C.N.A.; Trucco, E. A review of machine learning methods for retinal blood vessel segmentation and artery/vein classification. Med. Image Anal. 2021, 68, 101905.

- Alyoubi, W.L.; Shalash, W.M.; Abulkhair, M.F. Diabetic retinopathy detection through deep learning techniques: A review. Inform. Med. Unlocked 2020, 20, 100377.

- Thompson, A.C.; Jammal, A.A.; Medeiros, F.A. A Review of Deep Learning for Screening, Diagnosis, and Detection of Glaucoma Progression. Transl. Vis. Sci. Technol. 2020, 9, 42.

- Neuroanatomy, Retina. Available online: https://www.ncbi.nlm.nih.gov/books/NBK545310 (accessed on 22 August 2021).

- Maji, D.; Santara, A.; Ghosh, S.; Sheet, D.; Mitra, P. Deep neural network and random forest hybrid architecture for learning to detect retinal vessels in fundus images. In Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3029–3032.

- Wang, S.; Yin, Y.; Cao, G.; Wei, B.; Zheng, Y.; Yang, G. Hierarchical retinal blood vessel segmentation based on feature and ensemble learning. Neurocomputing 2015, 149, 708–717.

- Fu, H.; Xu, Y.; Wong, D.W.K.; Liu, J. Retinal vessel segmentation via deep learning network and fully-connected conditional random fields. In Proceedings of the 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 698–701.

- Fu, H.; Xu, Y.; Lin, S.; Wong, D.W.K.; Liu, J. DeepVessel: Retinal Vessel Segmentation via Deep Learning and Conditional Random Field. In Proceedings of the 19th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Athens, Greece, 17–21 October 2016; pp. 132–139.

- Luo, Y.; Yang, L.; Wang, L.; Cheng, H. Efficient CNN-CRF Network for Retinal Image Segmentation. In Proceedings of the 3rd International Conference on Cognitive Systems and Signal Processing (ICCSIP), Beijing, China, 19–23 November 2016; pp. 157–165.

- Dasgupta, A.; Singh, S. A fully convolutional neural network based structured prediction approach towards the retinal vessel segmentation. In Proceedings of the 14th International Symposium on Biomedical Imaging (ISBI), Melbourne, Australia, 18–21 April 2017; pp. 248–251.

- Meyer, M.I.; Costa, P.; Galdran, A.; Mendonça, A.M.; Campilho, A. A Deep Neural Network for Vessel Segmentation of Scanning Laser Ophthalmoscopy Images. In Proceedings of the 14th International Conference Image Analysis and Recognition (ICIAR), Montreal, QC, Canada, 5–7 July 2017; pp. 507–515.

- Hu, K.; Zhang, Z.; Niu, X.; Zhang, Y.; Cao, C.; Xiao, F.; Gao, X. Retinal vessel segmentation of color fundus images using multiscale convolutional neural network with an improved cross-entropy loss function. Neurocomputing 2018, 309, 179–191.

- Yan, Z.; Yang, X.; Cheng, K. A Three-Stage Deep Learning Model for Accurate Retinal Vessel Segmentation. IEEE J. Biomed. Health Inform. 2019, 23, 1427–1436.

- Park, K.; Choi, S.H.; Lee, J.Y. M-GAN: Retinal Blood Vessel Segmentation by Balancing Losses Through Stacked Deep Fully Convolutional Networks. IEEE Access 2020, 8, 146308–146322.

- Rizzi, A.; Gatta, C.; Marini, D. A new algorithm for unsupervised global and local color correction. Pattern Recognit. Lett. 2003, 24, 1663–1677.

- Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.K.; Wang, Z.; Smolley, S.P. Least Squares Generative Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2813–2821.

- Oliveira, W.S.; Teixeira, J.V.; Ren, T.I.; Cavalcanti, G.D.C.; Sijbers, J. Unsupervised Retinal Vessel Segmentation Using Combined Filters. PLoS ONE 2016, 11, e0149943.

- Srinidhi, C.L.; Aparna, P.; Rajan, J. A visual attention guided unsupervised feature learning for robust vessel delineation in retinal images. Biomed. Signal Process. Control 2018, 44, 110–126.

- Chaudhuri, S.; Chatterjee, S.; Katz, N.; Nelson, M.; Goldbaum, M. Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans. Med. Imaging 1989, 8, 263–269.

- Frangi, A.F.; Niessen, W.J.; Vincken, K.L.; Viergever, M.A. Multiscale vessel enhancement filtering. In Proceedings of the 1st International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Cambridge, MA, USA, 11–13 October 1998; pp. 130–137.

- Soares, J.V.B.; Leandro, J.J.G.; Cesar, R.M.; Jelinek, H.F.; Cree, M.J. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans. Med. Imaging 2006, 25, 1214–1222.

- Goldberg, D.E. Genetic Algorithms in Search Optimization and Machine Learning, 1st ed.; Addison-Wesley Publishing Company: Boston, MA, USA, 1989; pp. 59–88.

- Dunn, J.C. A Fuzzy Relative of the ISODATA Process and Its Use in Detecting Compact Well-Separated Clusters. Cybern. Syst. 1973, 3, 32–57.

- Li, C.; Kao, C.; Gore, J.C.; Ding, Z. Minimization of Region-Scalable Fitting Energy for Image Segmentation. IEEE Trans. Image Process. 2008, 17, 1940–1949.

- Joshi, G.D.; Sivaswamy, J. Colour Retinal Image Enhancement Based on Domain Knowledge. In Proceedings of the 6th Indian Conference on Computer Vision, Graphics & Image Processing (ICVGIP), Bhubaneswar, India, 16–19 December 2008; pp. 591–598.

- Coates, A.; Ng, A.Y. Learning Feature Representation with K-Means. In Neural Networks: Tricks of the Trade, 2nd ed.; Montavon, G., Orr, G.B., Müller, K., Eds.; Springer: New York, NY, USA, 2012; pp. 561–580.

- Coates, A.; Ng, A.; Lee, H. An Analysis of Single-Layer Networks in Unsupervised Feature Learning. In Proceedings of the 14th International Conference on Artificial Intelligence and Statistics Conference (AISTATS), Fort Lauderdale, FL, USA, 11–13 April 2011; pp. 215–223.

- Welikala, R.A.; Foster, P.J.; Whincup, P.H.; Rudnicka, A.R.; Owen, C.G.; Strachan, D.P.; Barman, S.A. Automated arteriole and venule classification using deep learning for retinal images from the UK Biobank cohort. Comput. Biol. Med. 2017, 90, 23–32.

- Girard, F.; Kavalec, C.; Cheriet, F. Joint segmentation and classification of retinal arteries/veins from fundus images. Artif. Intell. Med. 2019, 94, 96–109.

- Yang, J.; Dong, X.; Hu, Y.; Peng, Q.; Tao, G.; Ou, Y.; Cai, H.; Yang, X. Fully Automatic Arteriovenous Segmentation in Retinal Images via Topology-Aware Generative Adversarial Networks. Interdiscip. Sci. 2020, 12, 323–324.

- Mirsharif, Q.; Tajeripour, F.; Pourreza, H. Automated characterization of blood vessels as arteries and veins in retinal images. Comput. Med. Imaging Graph. 2013, 37, 607–617.

- Hu, Q.; Abràmoff, M.; Garvin, M.K. Automated construction of arterial and venous trees in retinal images. J. Med. Imaging 2015, 2, 044001.

- Xu, X.; Ding, W.; Abràmoff, M.D.; Cao, R. An improved arteriovenous classification method for the early diagnostics of various diseases in retinal image. Comput. Methods Programs Biomed. 2017, 141, 3–9.

- Huang, F.; Dashtbozorg, B.; Romeny, B.M.H. Artery/vein classification using reflection features in retina fundus images. Mach. Vis. Appl. 2018, 29, 23–34.

- Vijayakumar, V.; Koozekanani, D.D.; White, R.; Kohler, J.; Roychowdhury, S.; Parhi, K.K. Artery/vein classification of retinal blood vessels using feature selection. In Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1320–1323.

- Zhao, Y.; Xie, J.; Zhang, H.; Zheng, Y.; Zhao, Y.; Qi, H.; Zhao, Y.; Su, P.; Liu, J.; Liu, Y. Retinal Vascular Network Topology Reconstruction and Artery/Vein Classification via Dominant Set Clustering. IEEE Trans. Med. Imaging 2020, 39, 341–356.

- Srinidhi, C.L.; Aparna, P.; Rajan, J. Automated Method for Retinal Artery/Vein Separation via Graph Search Metaheuristic Approach. IEEE Trans. Image Process. 2019, 28, 2705–2718.

- Cheng, J.; Liu, J.; Xu, Y.; Yin, F.; Wong, D.W.K.; Tan, N.; Tao, D.; Cheng, C.; Aung, T.; Wong, T.Y. Superpixel Classification Based Optic Disc and Optic Cup Segmentation for Glaucoma Screening. IEEE Trans. Med. Imaging 2013, 32, 1019–1032.

- Zhao, Y.; Rada, L.; Chen, K.; Harding, S.P.; Zheng, Y. Automated Vessel Segmentation Using Infinite Perimeter Active Contour Model with Hybrid Region Information with Application to Retinal Images. IEEE Trans. Med. Imaging 2015, 34, 1797–1807.

- Pavan, M.; Pelillo, M. Dominant sets and pairwise clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 167–172.

- Srinidhi, C.L.; Rath, P.; Sivaswamy, J. A Vessel Keypoint Detector for junction classification. In Proceedings of the IEEE 14th International Symposium on Biomedical Imaging (ISBI), Melbourne, VIC, Australia, 18–21 April 2017; pp. 882–885.

- Foracchia, M.; Grisan, E.; Ruggeri, A. Luminosity and contrast normalization in retinal images. Med. Image Anal. 2005, 9, 179–190.

- When Glaucomatous Damage Isn’t Glaucoma. Available online: https://www.reviewofophthalmology.com/article/when-glaucomatous-damage-isnt-glaucoma (accessed on 4 August 2021).

- When It’s Not Glaucoma. Available online: https://www.aao.org/eyenet/article/when-its-not-glaucoma (accessed on 4 August 2021).

- Leung, C.K.; Cheung, C.Y.; Weinreb, R.N.; Qui, Q.; Liu, S.; Li, H.; Xu, G.; Fan, N.; Huang, L.; Pang, C.; et al. Retinal nerve fiber layer imaging with spectral-domain optical coherence tomography: A variability and diagnostic performance study. Ophthalmology 2009, 116, 1257–1263.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-ResNet and the impact of residual connections on learning. In Proceedings of the 31st AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Huang, G.; Liu, Z.; Maaten, L.V.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 26th Annual Conference on Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Jiang, J.; Yang, K.; Gao, M.; Zhang, D.; Ma, H.; Qian, W. An Interpretable Ensemble Deep Learning Model for Diabetic Retinopathy Disease Classification. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 2045–2048.

- Dutta, S.; Manideep, B.C.; Basha, S.M.; Caytiles, R.D.; Iyengar, N. Classification of Diabetic Retinopathy Images by Using Deep Learning Models. Int. J. Grid Dist. Comput. 2018, 11, 89–106.

- Pires, R.; Avila, S.; Wainer, J.; Valle, E.; Abramoff, M.D.; Rocha, A. A data-driven approach to referable diabetic retinopathy detection. Artif. Intell. Med. 2019, 96, 93–106.

- Zhang, W.; Zhong, J.; Yang, S.; Gao, Z.; Hu, J.; Chen, Y.; Yi, Z. Automated identification and grading system of diabetic retinopathy using deep neural network. Knowl. Based Syst. 2019, 175, 12–25.

- Rehman, M.; Khan, S.H.; Abbas, Z.; Rizvi, S.M.D. Classification of Diabetic Retinopathy Images Based on Customised CNN Architecture. In Proceedings of the Amity International Conference on Artificial Intelligence (AICAI), Dubai, United Arab Emirates, 4–6 February 2019; pp. 244–248.

- Wang, X.; Lu, Y.; Wang, Y.; Chen, W. Diabetic Retinopathy Stage Classification Using Convolutional Neural Networks. In Proceedings of the IEEE International Conference on Information Reuse and Integration (IRI), Salt Lake City, UT, USA, 6–8 July 2018; pp. 465–471.

- Oh, K.; Kang, H.M.; Leem, D.; Lee, H.; Seo, K.Y.; Yoon, S. Early detection of diabetic retinopathy based on deep learning and ultra-wide-field fundus images. Sci. Rep. 2021, 11, 1897.

- Adem, K. Exudate detection for diabetic retinopathy with circular Hough transformation and convolutional neural networks. Expert Syst. Appl. 2018, 114, 289–295.

- Wang, H.; Yuan, G.; Zhao, X.; Peng, L.; Wang, Z.; He, Y.; Qu, C.; Peng, Z. Hard exudate detection based on deep model learned information and multi-feature joint representation for diabetic retinopathy screening. Comput. Methods Programs Biomed. 2020, 191, 105398.

- Chudzik, P.; Majumdar, S.; Calivá, F.; Al-Diri, B.; Hunter, A. Microaneurysm detection using fully convolutional neural networks. Comput. Methods Programs Biomed. 2018, 159, 185–192.

- Grinsven, M.J.J.P.; Ginneken, B.; Hoyng, C.B.; Theelen, T.; Sánchez, C.I. Fast Convolutional Neural Network Training Using Selective Data Sampling: Application to Hemorrhage Detection in Color Fundus Images. IEEE Trans. Med. Imaging 2020, 35, 105398.

- Zago, G.T.; Andreão, R.V.; Dorizzi, B.; Salles, E.O.T. Diabetic retinopathy detection using red lesion localization and convolutional neural networks. Comput. Biol. Med. 2020, 116, 103537.

- Alyoubi, W.L.; Abulkhair, M.F.; Shalash, W.M. Diabetic Retinopathy Fundus Image Classification and Lesions Localization System Using Deep Learning. Sensors 2021, 21, 3704.

- Grassmann, F.; Mengelkamp, J.; Brandl, C.; Harsch, S.; Zimmermann, M.E.; Linkohr, B.; Peters, A.; Heid, I.M.; Palm, C.; Weber, B.H. A Deep Learning Algorithm for Prediction of Age-related Eye Disease Study Severity Scale for Age-related Macular Degeneration from Color Fundus Photography. Ophthalmology 2018, 125, 1410–1420.

- Bhuiyan, A.; Wong, T.Y.; Ting, D.S.W.; Govindaiah, A.; Souied, E.H.; Smith, T.R. Artificial Intelligence to Stratify Severity of Age-Related Macular Degeneration (AMD) and Predict Risk of Progression to Late AMD. Transl. Vis. Sci. Technol. 2020, 9, 25.

- Li, F.; Yan, L.; Wang, Y.; Shi, J.; Chen, H.; Zhang, X.; Jiang, M.; Wu, Z.; Zhou, K. Deep learning-based automated detection of glaucomatous optic neuropathy on color fundus photographs. Graefes Arch. Clin. Exp. Ophthalmol. 2020, 258, 851–867.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Burlina, P.M.; Joshi, N.; Pekala, M.; Pacheco, K.D.; Freund, D.E.; Bressler, N.M. Automated Grading of Age-Related Macular Degeneration From Color Fundus Images Using Deep Convolutional Neural Networks. JAMA Ophthalmol. 2017, 135, 1170–1176. [Google Scholar] [CrossRef] [PubMed]

- González-Gonzalo, C.; Sánchez-Gutiérrez, V.; Hernández-Martínez, P.; Contreras, I.; Lechanteur, Y.T.; Domanian, A.; Ginneken, B.; Sánchez, C. Evaluation of a deep learning system for the joint automated detection of diabetic retinopathy and age-related macular degeneration. Acta Ophthalmol. 2020, 98, 368–377. [Google Scholar] [CrossRef] [PubMed]

- Keel, S.; Li, Z.; Scheetz, J.; Robman, L.; Phung, J.; Makeyeva, G.; Aung, K.; Liu, C.; Yan, X.; Meng, W.; et al. Development and validation of a deep-learning algorithm for the detection of a deep-learning algorithm for the detection of neovascular age-related macular degeneration from colour fundus photographs. Clin. Exp. Ophthalmol. 2019, 47, 1009–1018. [Google Scholar] [CrossRef] [Green Version]

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks. arXiv 2014, arXiv:1312.6229. [Google Scholar]

- Burlina, P.; Pacheeco, K.D.; Joshi, N.; Freund, D.E.; Bressler, N.M. Comparing humans and deep learning performance for grading AMD: A study in using universal deep features and transfer learning for automated AMD analysis. Comput. Biol. Med. 2017, 82, 80–86. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Peng, Y.; Dharssi, S.; Chen, Q.; Keenan, T.D.; Agrón, E.; Wong, W.T.; Chew, E.Y.; Lu, Z. DeepSeeNet: A Deep Learning Model for Automated Classification of Patient-based Age-related Macular Degeneration Severity from Color Fundus Photographs. Ophthalmology 2019, 126, 565–574. [Google Scholar] [CrossRef]

- Keenan, T.D.; Dharssi, S.; Peng, Y.; Wong, W.T.; Lu, Z.; Chew, E.Y. A Deep Learning Approach for Automated Detection of Geographic Atrophy from Color Fundus Photographs. Ophthalmology 2019, 126, 1533–1540. [Google Scholar] [CrossRef] [Green Version]

- Hussain, M.A.; Govindaiah, A.; Souied, E.; Smith, R.T.; Bhuiyan, A. Automated tracking and change detection for Age-related Macular Degeneration Progression using retinal fundus imaging. In Proceedings of the 7th International Conference on Informatics, Electronics & amp (ICIEV), Kitakyushu, Japan, 25–29 June 2018; pp. 394–398. [Google Scholar]

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710. [Google Scholar]

- Prum, B.E., Jr.; Rosenberg, L.F.; Gedde, S.J.; Mansberger, S.L.; Stein, J.D.; Moroi, S.E.; Herndon, L.W., Jr.; Lim, M.C.; Williams, R.D. Primary Open-Angle Glaucoma Preferred Practice Pattern(®) Guidelines. Ophthalmology 2016, 123, P41–P111. [Google Scholar]

- Hollands, H.; Johnson, D.; Hollands, S.; Simel, D.L.; Jinapriya, D.; Sharma, S. Do findings on routine examination identify patients at risk for primary open-angle glaucoma? The rational clinical examination systematic review. JAMA 2013, 309, 2035–2042. [Google Scholar] [CrossRef] [PubMed]

- Fingeret, M.; Medeiors, F.A.; Susanna, R., Jr.; Weinreb, R.N. Five rules to evaluate the optic disc and retinal nerve fiber layer for glaucoma. Optometry 2005, 76, 661–668. [Google Scholar] [CrossRef] [PubMed]

- Shinde, R. Glaucoma detection in retinal fundus images using U-Net and supervised machine learning algorithms. Intell. Based Med. 2021, 5, 10038. [Google Scholar] [CrossRef]

- Singh, L.K.; Garg, H.; Khanna, M.; Bhadoria, R.H. An enhanced deep image model for glaucoma diagnosis using feature-based detection in retinal fundus. Med. Biol. Eng. Comput. 2021, 59, 333–353. [Google Scholar] [CrossRef]

- Li, Z.; He, Y.; Keel, S.; Meng, W.; Chang, R.T.; He, M. Efficacy of a Deep Learning System for Detecting Glaucomatous Optic Neuropathy Based on Color Fundus Photographs. Ophthalmology 2018, 125, 1199–1206.

- The Japan Glaucoma Society Guidelines for Glaucoma (4th Edition). Available online: http://journal.nichigan.or.jp/Search?chk0=on&searchfull2=The+Japan+Glaucoma+Society+Guidelines+for+Glaucoma (accessed on 2 September 2021).

- Shibata, N.; Masaki, T.; Mitsuhashi, K.; Fujino, Y.; Matsuura, M.; Murata, H.; Asaoka, R. Development of a deep residual learning algorithm to screen for glaucoma from fundus photography. Sci. Rep. 2018, 8, 14665.

- Kim, M.; Park, H.; Zuallaert, J.; Janssens, O.; Van Hoecke, S.; De Neve, W. Computer-Aided Diagnosis and Localization of Glaucoma Using Deep Learning. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2357–2362.

- Phene, S.; Dunn, R.C.; Hammel, N.; Liu, Y.; Krause, J.; Kitade, N.; Schaekermann, M.; Sayres, R.; Wu, D.J.; Bora, A.; et al. Deep Learning and Glaucoma Specialists. Ophthalmology 2019, 126, 1627–1639.

- Deng, Z.; Li, Y.; Wang, Y.; Yue, Q.; Yang, Z.; Boumediene, D.; Carloganu, C.; Français, V.; Cho, G.; Kim, D.-W.; et al. Tracking within Hadronic Showers in the CALICE SDHCAL prototype using a Hough Transform Technique. J. Instrum. 2017, 12, P05009.

- Li, T.; Gao, Y.; Wang, K.; Guo, S.; Liu, H.; Kang, H. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 2019, 501, 511–522.

- Shanthi, T.; Sabeenian, R. Modified Alexnet architecture for classification of diabetic retinopathy images. Comput. Electr. Eng. 2019, 76, 56–64.

- Zapata, M.; Royo-Fibla, D.; Font, O.; Vela, J.; Marcantonio, I.; Moya-Sanchez, E.; Sanchez-Perez, A.; Garcia-Gasulla, D.; Cortes, U.; Ayguade, E.; et al. Artificial intelligence to identify retinal fundus images, quality validation, laterality evaluation, macular degeneration, and suspected glaucoma. Clin. Ophthalmol. 2020, 14, 419–429.

- Liu, H.; Li, L.; Wormstone, M.; Qiao, C.; Zhang, C.; Liu, P.; Li, S.; Wang, H.; Mou, D.; Pang, R. Development and Validation of a Deep Learning System to Detect Glaucomatous Optic Neuropathy Using Fundus Photographs. JAMA Ophthalmol. 2019, 137, 1353–1360.

- Christopher, M.; Belghith, A.; Bowd, C.; Proudfoot, J.A.; Goldbaum, M.H.; Weinreb, R.N.; Girkin, C.A.; Liebmann, J.M.; Zangwill, L.M. Performance of Deep Learning Architectures and Transfer Learning for Detecting Glaucomatous Optic Neuropathy in Fundus Photographs. Sci. Rep. 2018, 8, 16685.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

- Selvaraju, R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- Anowar, F.; Sadaoui, S.; Selim, B. Conceptual and empirical comparison of dimensionality reduction algorithms (PCA, KPCA, LDA, MDS, SVD, LLE, ISOMAP, LE, ICA, t-SNE). Comput. Sci. Rev. 2021, 40, 100378.

- Sisson, C.P.; Farnand, S.; Fairchild, M.; Fischer, B. Analysis of Color Consistency in Retinal Fundus Photography: Application of Color Management and Development of an Eye Model Standard. Anal. Cell. Pathol. 2014, 2014, 398462.

- Jampel, H.D.; Friedman, D.; Quigley, H.; Vitale, S.; Miller, R.; Knezevich, F.; Ding, Y. Agreement among glaucoma specialists in assessing progressive disc changes from photographs in open-angle glaucoma patients. Am. J. Ophthalmol. 2009, 147, 39–44.

- Abrams, L.S.; Scott, I.U.; Spaeth, G.L.; Quigley, H.A.; Varma, R. Agreement among optometrists, ophthalmologists, and residents in evaluating the optic disc for glaucoma. Ophthalmology 1994, 101, 1662–1667.

- Tatham, A.J.; Medeiros, F.A. Detecting Structural Progression in Glaucoma with Optical Coherence Tomography. Ophthalmology 2017, 124, S57–S65.

- Medeiros, F.A.; Jammal, A.A.; Thompson, A.C. From Machine to Machine: An OCT-Trained Deep Learning Algorithm for Objective Quantification of Glaucomatous Damage in Fundus Photographs. Ophthalmology 2019, 126, 513–521.

- Reznicek, L.; Burzer, S.; Laubichler, A.; Nasseri, A.; Lohmann, C.P.; Feucht, N.; Ulbig, M.; Maier, M. Structure-function relationship comparison between retinal nerve fibre layer and Bruch’s membrane opening-minimum rim width in glaucoma. Int. J. Ophthalmol. 2017, 10, 1534–1538.

- Thompson, A.; Jammal, A.A.; Medeiros, F.A. A Deep Learning Algorithm to Quantify Neuroretinal Rim Loss from Optic Disc Photographs. Am. J. Ophthalmol. 2019, 201, 9–18.

| Dataset | No. Images | Image Size (pixels) | FOV | Camera | Ground Truth |
|---|---|---|---|---|---|
| DRIVE | 40 | 564 × 584 | 45° | Canon CR6-45NM | A/V label |
| STARE | 50 | 605 × 700 | 35° | Topcon TRV-50 | Topology and A/V label |
| INSPIRE | 40 | 2392 × 2048 | 30° | Carl Zeiss Meditec | A/V label |
| WIDE | 30 | 1440 × 900 | 45° | Optos 200Tx | Topology and A/V label |

Diabetic Retinopathy (DR)

| Dataset | Total | Images per grade, ordered Normal, Mild DR, Moderate DR, Severe DR, Proliferative DR (rows with fewer than five counts report combined grades) |
|---|---|---|
| DIARETDB1 | 89 | 27, 7, 28, 27 |
| IDRiD | 516 | 168, 348 |
| DDR | 13,673 | 6266, 630, 4713, 913 |
| Messidor | 1200 | 540, 660 |
| APTOS 2019 | 3662 | 1805, 370, 990, 193, 295 |

Age-Related Macular Degeneration (AMD)

| Dataset | Total | Grade 1 | Grade 2 | Grade 3 | Grade 4 | Grade 5 | Grade 6 | Grade 7 | Grade 8 | Grade 9 | Grade 10 | Grade 11 | Grade 12 | UG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AREDS | 120,656 | 41,770 | 12,133 | 5070 | 8985 | 6012 | 7953 | 6916 | 6634 | 2539 | 4128 | 13,260 | 1098 | 4158 |

Glaucoma

| Dataset | Total | Normal | Early | Moderate | Deep | Ocular hypertension |
|---|---|---|---|---|---|---|
| RIM-ONE | 169 | 118 | 12 | 14 | 14 | 11 |

| Retinopathy | Reference | Task | Dataset | Reported metrics (%), ordered as AUC, Accuracy, Sensitivity, Specificity, Kappa Coefficient (unreported metrics omitted) |
|---|---|---|---|---|
| DR | [103] | Binary classification for non-severe and severe DR | DIARETDB0 | 78.6, 82.1, 50 |
| | | | IDRiD | 81.8, 84.1, 50 |
| | | | Messidor | 91.2, 94.0, 50 |
| | | Binary classification for DR detection | IDRiD | 79.6, 85.9, 50 |
| | | | Messidor | 93.6, 97.6, 50 |
| | [104] | 5 DR stage classification | DDR | 97.0, 89.0, 89.0, 97.3 |
| | | 5 DR stage classification | APTOS2019 | 97.3, 84.1, 84.1, 96.0 |
| AMD | [105] | 4 AMD stage classification | AREDS | 96.1 |
| | | 13 AMD stage classification | | 63.3, 55.47 |
| | [106] | Binary classification | | 99, 99.2, 98.9, 99.5 |
| Glaucoma | [107] | 3 glaucoma stage classification | RIM-ONE | 95.4, 96.5, 94.1, 92.7 |
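For readers less familiar with the metrics named in the table above, the following is a minimal sketch of how accuracy, sensitivity, specificity, and Cohen's kappa are conventionally computed from a binary confusion matrix. The function name `binary_metrics` and the example counts are illustrative assumptions and do not come from any of the cited studies. AUC is left out because it needs per-image scores rather than counts.

```python
def binary_metrics(tp, fp, tn, fn):
    """Return accuracy, sensitivity, specificity and Cohen's kappa as fractions.

    tp, fp, tn, fn are confusion-matrix counts for a binary classifier.
    Assumes both classes are present and chance agreement is below 1,
    so none of the denominators is zero.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)  # fraction of diseased images detected
    specificity = tn / (tn + fp)  # fraction of healthy images cleared

    # Agreement expected by chance, from the row and column marginals.
    p_pos = ((tp + fp) / total) * ((tp + fn) / total)
    p_neg = ((tn + fn) / total) * ((tn + fp) / total)
    p_chance = p_pos + p_neg
    kappa = (accuracy - p_chance) / (1 - p_chance)

    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "kappa": kappa,
    }


# Hypothetical counts, chosen only to exercise the function.
example = binary_metrics(tp=40, fp=10, tn=45, fn=5)
for name, value in example.items():
    print(f"{name}: {100 * value:.1f}%")
```

Multiplying each fraction by 100 gives percentages on the same scale as the table. Multi-class tasks such as DR staging would need a per-class or averaged variant, which this sketch does not cover.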

| Retinopathy | Reference | Task | Dataset | Reported metrics (%), ordered as AUC, Accuracy, Sensitivity, Specificity (unreported metrics omitted) |
|---|---|---|---|---|
| DR | [130] | 6 class classification | DDR | 82.84 |
| | [132] | 4 class classification | Messidor | 96.35, 92.35, 97.45 |
| AMD | [111] | Binary classification | AREDS | 94.9, 88.0 |
| | [123] | Binary classification | Optretina | 93.6, 86.3 |
| Glaucoma | [124] | Binary classification | Private dataset | 98.6, 95.6, 92.0 |
| | [126] | Binary classification | Private dataset | 96.5 |
| | [128] | Binary classification | Private dataset | 94.5 |
| | [133] | Binary classification | Private dataset | 99.6, 96.2, 97.7 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jeong, Y.; Hong, Y.-J.; Han, J.-H. Review of Machine Learning Applications Using Retinal Fundus Images. Diagnostics 2022, 12, 134. https://doi.org/10.3390/diagnostics12010134
