The Gifted Rating Scales-Preschool/Kindergarten Form (GRS-P): A Preliminary Examination of Their Psychometric Properties in Two Greek Samples

Abstract

1. Introduction

1.1. Evaluation of Giftedness

1.2. The Gifted Rating Scales

1.3. Aims of the Studies

2. Materials and Methods

2.1. Study 1

2.2. Procedure

2.3. Instruments

3. Results

3.1. Test of the GRS-P Factor Structure

3.2. Test of the GRS-P Internal Consistency Reliability

3.3. Test of the GRS-P Convergent Validity

3.4. Study 2

3.4.1. Participants

3.4.2. Procedure

3.4.3. Instruments

- Language Analogies. This subtest consists of 32 pairs of sentences. Each pair involves four concepts that are related to one another (e.g., by shape) and together form a verbal analogy. In each pair, the first sentence is complete and the second is incomplete; the participant’s task is to supply the missing word in the second sentence. Each correct answer earns 1 point. The subtest measures verbal concept formation and reflects the child’s abstract verbal classification abilities.

- Pattern Copying. The participant is presented with three geometrical patterns of increasing difficulty, so that copying each successive pattern correctly requires greater visuo-conceptual maturity. The drawings are scored on three morphological characteristics: (a) general resemblance to the standard, (b) shape orientation, and (c) equality of different parts. Each drawing receives 0–3 points.

- Vocabulary. This subtest consists of 20 words, both concrete and abstract, that the participant is asked to define. The words range from simple to more difficult. Each answer receives 0–2 points, depending on the accuracy of the definition. The subtest assesses word knowledge, language development, and long-term memory.

- Digit Span. This subtest includes 16 items: sequences of digits that gradually get longer. The sequences are presented orally, and participants are asked to repeat them as heard. A correctly repeated item earns 2 points on the first attempt and 1 point on the second. The subtest assesses short-term phonological memory.

- Memory of Common Sequences. This is a supplemental subtest of the ATHENA Test (AT). The child is asked to name parts of sequences encountered in everyday life (days of the week, months of the year, etc.). Each correct answer earns 1 point.

- “Right-Left” Perception (neuropsychological maturation). The participant carries out two items given as instructions. Performance shows whether the child can distinguish the right and left sides of the body and orient accordingly, both on their own body (direct perception) and on the examiner’s (“mirror” perception). Each correct answer earns 1 point.
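
The scoring rules described above can be sketched in code. This is a minimal illustration, not the test's official scoring procedure: the function names are hypothetical, the responses are invented, and scoring an item failed on both Digit Span attempts as 0 points is an assumption, since the description gives only the 2-point and 1-point cases.

```python
from typing import Optional, Sequence


def score_correct_items(correct: Sequence[bool]) -> int:
    """One point per correctly answered item (e.g., Language Analogies)."""
    return sum(1 for is_correct in correct if is_correct)


def score_graded_items(ratings: Sequence[int], max_points: int) -> int:
    """Sum per-item ratings that each range from 0 to max_points
    (e.g., Vocabulary with max_points=2, Pattern Copying with max_points=3)."""
    for rating in ratings:
        if not 0 <= rating <= max_points:
            raise ValueError(f"rating {rating} is outside 0..{max_points}")
    return sum(ratings)


def score_digit_span(attempts: Sequence[Optional[int]]) -> int:
    """2 points for success on the first attempt, 1 on the second.

    None marks an item failed on both attempts; scoring it as 0 is an
    assumption, not something stated in the subtest description.
    """
    points = {1: 2, 2: 1, None: 0}
    return sum(points[attempt] for attempt in attempts)


# Hypothetical responses, for illustration only.
analogies_total = score_correct_items([True, False, True])
vocabulary_total = score_graded_items([2, 0, 1], max_points=2)
digit_span_total = score_digit_span([1, 2, None, 1])
```

Under these rules, the maximum totals would be 32 for Language Analogies (32 items, 1 point each), 9 for Pattern Copying (3 drawings, 0–3 points each), 40 for Vocabulary (20 items, 0–2 points each), and 32 for Digit Span (16 items, up to 2 points each).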

4. Results

4.1. Test of the GRS-P Internal Consistency Reliability

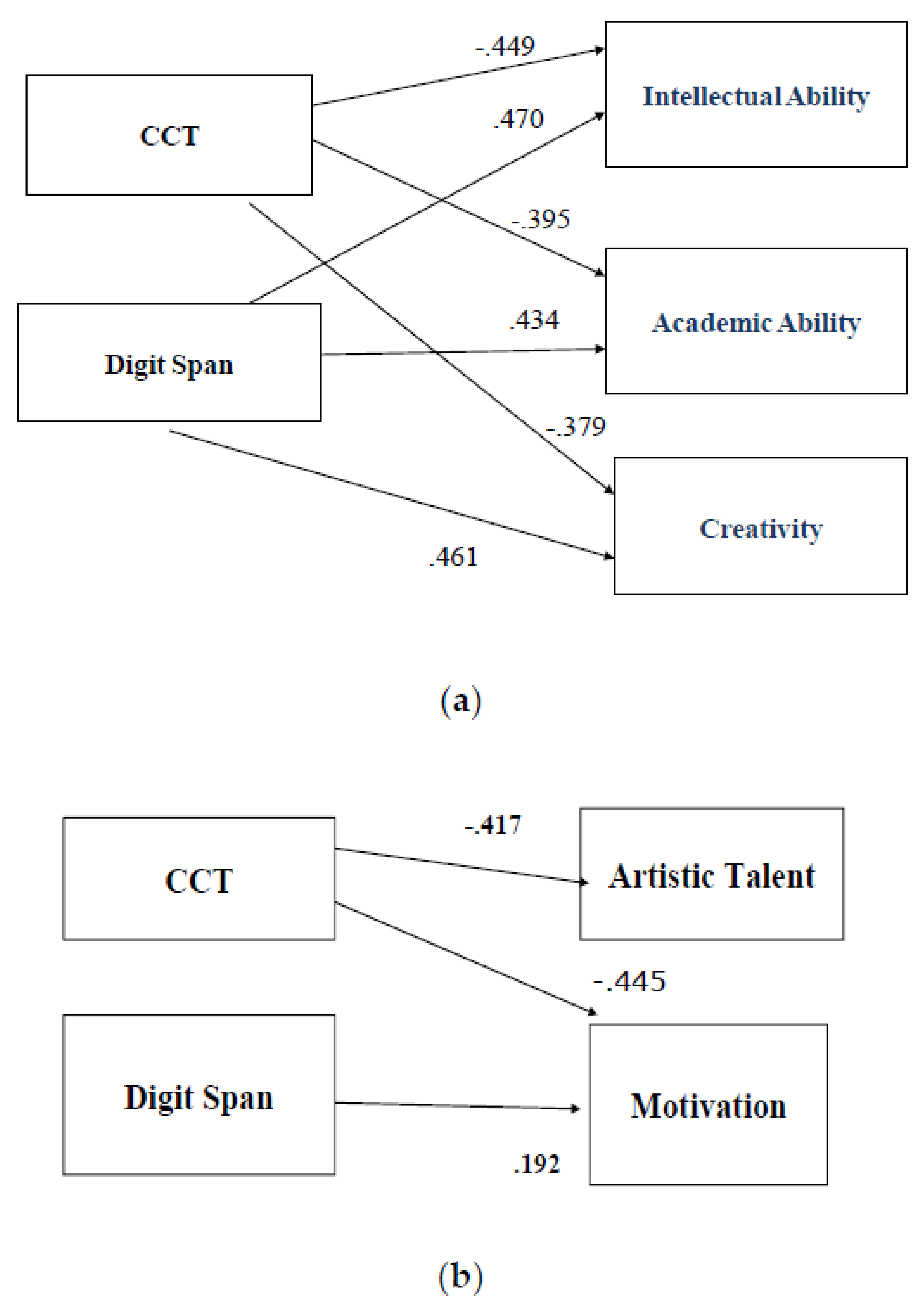

4.2. Test of the GRS-P Convergent Validity

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| GRS-P | Factor |
|---|---|
| Intellectual Ability | 0.957 |
| Academic Ability | 0.944 |
| Creativity | 0.893 |
| Artistic Talent | 0.801 |
| Motivation | 0.950 |

| GRS-P | Cronbach’s α |
|---|---|
| Intellectual Ability | 0.980 |
| Academic Ability | 0.965 |
| Creativity | 0.980 |
| Artistic Talent | 0.979 |
| Motivation | 0.983 |
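
For readers less familiar with the statistic, Cronbach’s α for a scale of k items can be written as α = k/(k − 1) × (1 − Σ item variances / variance of the total score). The following is a minimal sketch of that computation using only the Python standard library. The function name and the toy ratings are hypothetical; they are not the study's data or analysis code.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items table of ratings.

    scores[r][i] is the rating given to respondent r on item i.
    Sample variances (n - 1 denominator) are used throughout.
    """
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != n_items for row in scores):
        raise ValueError("every respondent needs a rating on every item")

    # Variance of each item across respondents.
    item_variances = [
        variance([row[i] for row in scores]) for i in range(n_items)
    ]
    # Variance of the summed scale score across respondents.
    total_variance = variance([sum(row) for row in scores])

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Toy data (hypothetical): three children rated on two items.
toy_ratings = [[1, 2], [2, 1], [3, 3]]
toy_alpha = cronbach_alpha(toy_ratings)
```

With these toy ratings each item has variance 1 and the total score has variance 3, so α = 2 × (1 − 2/3) ≈ 0.67. Values close to 1, like those in the table, indicate that the items of a scale vary together almost perfectly.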

| | Intellectual Ability | Academic Ability | Creativity | Artistic Talent | Motivation |
|---|---|---|---|---|---|
| CHEXI Working Memory | −0.738 ** | −0.739 ** | −0.596 ** | −0.573 ** | −0.736 ** |
| CHEXI Inhibition | −0.566 ** | −0.583 ** | −0.438 ** | −0.484 ** | −0.586 ** |
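
As an illustration of how a coefficient of this kind is obtained, the sketch below computes a Pearson correlation and its t statistic (with n − 2 degrees of freedom) in plain Python. The type of coefficient and the thresholds behind the asterisks are not stated alongside the table, so the use of Pearson's r here is an assumption. The function names and the data are hypothetical.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two samples of equal length, n >= 3")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [value - mean_x for value in x]
    dy = [value - mean_y for value in y]
    covariation = sum(a * b for a, b in zip(dx, dy))
    return covariation / sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


def t_statistic(r: float, n: int) -> float:
    """t statistic for testing r against zero, with n - 2 degrees of freedom.

    Undefined when |r| == 1, so that case is rejected explicitly.
    """
    if abs(r) >= 1:
        raise ValueError("t is undefined for a perfect correlation")
    return r * sqrt((n - 2) / (1 - r * r))


# Hypothetical scores for four children on two measures.
scale_a = [1, 2, 3, 4]
scale_b = [1, 3, 2, 4]
r_ab = pearson_r(scale_a, scale_b)
t_ab = t_statistic(r_ab, n=len(scale_a))
```

The t value would then be compared with the critical value of the t distribution for the chosen significance level to decide whether a coefficient is flagged.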

| GRS-P | Cronbach’s α |
|---|---|
| Intellectual Ability | 0.99 |
| Academic Ability | 0.98 |
| Creativity | 0.99 |
| Artistic Talent | 0.99 |
| Motivation | 0.99 |

| GRS-P | Colored Progressive Matrices (CPM) |
|---|---|
| Intellectual Ability | 0.245 |
| Academic Ability | 0.295 |
| Creativity | 0.308 |
| Artistic Talent | 0.120 |
| Motivation | 0.131 |

| | Intellectual Ability | Academic Ability | Creativity | Artistic Talent | Motivation |
|---|---|---|---|---|---|
| Language Analogies | 0.310 | 0.287 | 0.360 | 0.092 | 0.143 |
| Pattern Copying | 0.190 | 0.249 | 0.211 | 0.317 | 0.200 |
| Vocabulary | 0.297 | 0.223 | 0.374 | 0.133 | 0.149 |
| Digit Span | 0.472 * | 0.437 * | 0.463 * | 0.101 | 0.276 |
| Memory of Common Sequences | 0.078 | 0.137 | −0.068 | 0.014 | 0.076 |
| Right-Left Perception | 0.158 | 0.198 | 0.033 | 0.291 | 0.355 |

| GRS-P | MMSE |
|---|---|
| Intellectual Ability | 0.431 * |
| Academic Ability | 0.386 |
| Creativity | 0.327 |
| Artistic Talent | 0.273 |
| Motivation | 0.346 |

| | Intellectual Ability | Academic Ability | Creativity | Artistic Talent | Motivation |
|---|---|---|---|---|---|
| Intellectual Ability | | | | | |
| Academic Ability | 0.954 ** | | | | |
| Creativity | 0.920 ** | 0.901 ** | | | |
| Artistic Talent | 0.793 ** | 0.835 ** | 0.811 ** | | |
| Motivation | 0.848 ** | 0.919 ** | 0.822 ** | 0.907 ** | |
| CCT 1st Subtest | 0.121 | 0.120 | 0.156 | 0.096 | 0.070 |
| CCT 2nd Subtest | −0.005 | −0.003 | 0.015 | −0.062 | −0.007 |
| CCT 3rd Subtest | −0.219 | −0.222 | −0.322 | −0.243 | −0.203 |
| CCT 4th Subtest | −0.534 ** | −0.459 * | −0.401 * | −0.470 * | −0.540 ** |
| CCT 5th Subtest | −0.375 | −0.325 | −0.324 | −0.244 | −0.272 |
| CCT Total | −0.452 * | −0.397 * | −0.382 | −0.417 * | −0.439 * |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sofologi, M.; Papantoniou, G.; Avgita, T.; Lyraki, A.; Thomaidou, C.; Zaragas, H.; Ntritsos, G.; Varsamis, P.; Staikopoulos, K.; Kougioumtzis, G.; et al. The Gifted Rating Scales-Preschool/Kindergarten Form (GRS-P): A Preliminary Examination of Their Psychometric Properties in Two Greek Samples. Diagnostics 2022, 12, 2809. https://doi.org/10.3390/diagnostics12112809