1. Introduction

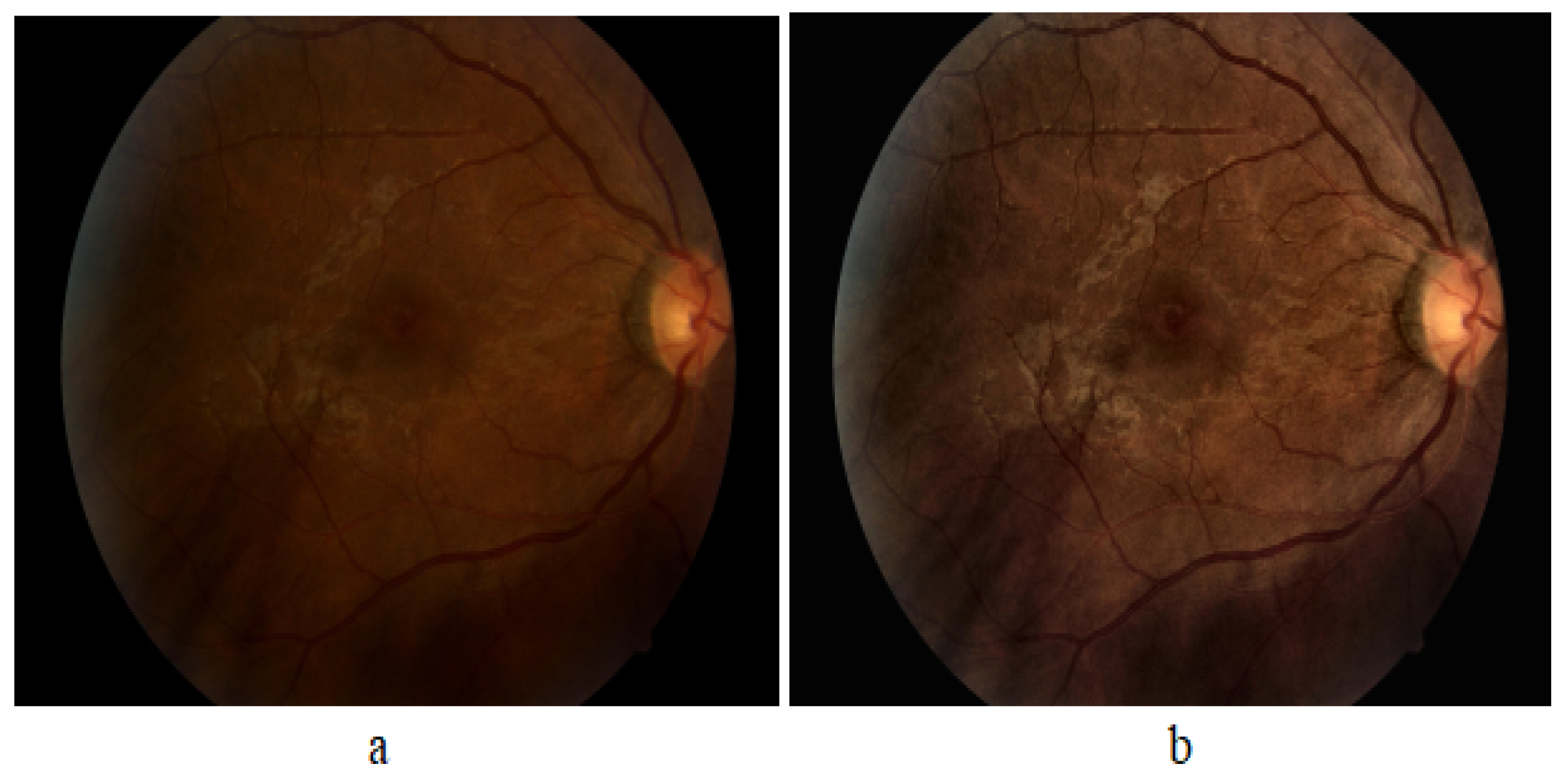

Diabetic Retinopathy (DR) is a chronic eye disease, commonly found in elderly people (age 50 or above), that can cause severe visual impairment or even blindness if not treated at an early stage [1]. The progression of DR can be categorized into four stages (as per the clinical standards), where the perceptible symptoms of the disease become visible only in the last stages, when it is nearly impossible to fully recover the lost vision. DR is caused by the rupturing of blood vessels due to high blood sugar levels. These leaky vessels produce fluid clots and oxygen deficiency, leading to severe visual impairment. Clinically, DR is classified into two types, i.e., proliferative and non-proliferative DR (NPDR). NPDR is an early DR stage in which retinal lesions such as hard exudates, microaneurysms, hemorrhages, and cotton wool spots appear on the macula. If the disease is left untreated, abnormal blood vessels from the choroid intercept the retina, resulting in choroidal neovascularization. This severe stage of DR, dubbed proliferative DR (PDR), may result in complete blindness.

Apart from this, NPDR is further graded into three stages, i.e., mild, moderate, and severe NPDR, where severe NPDR transitions into PDR in the absence of proper and timely treatment [2]. Moreover, according to a recent survey, around 333 million diabetic subjects are forecasted to have a high risk of retinal abnormalities (especially DR), thus requiring proper retinal examination by 2025 [3]. At present, 387 million people are diagnosed with diabetes mellitus (DM) worldwide, with an estimated increase to 592 million people by 2035 [4]. Among these 387 million people, 93 million have DR [5], and an alarming 28 million of those 93 million have reached the vision-threatening stage. Vision impairment and eyesight loss can be effectively reduced with early and accurate detection of DR. Overall, 5–8% of people with early-stage DR can be treated with laser technology.

According to a survey conducted by WHO, improper examination or late diagnosis might lead to permanent blindness in diabetic patients. However, the progression of the disease can be reduced if it is diagnosed at an early stage. An intelligent self-learning and decision-making system designed to process medical images for the detection and classification of DR can be used as a decision support system to detect and stop the progression of the disease at an early stage. Autonomous disease detection systems provide an efficient and timely solution with the ease of non-invasive image acquisition.

In the proposed algorithm, we have designed a deep learning framework to accurately classify a fundus image into one of the five stages of DR. The major research contributions covered in the paper are as follows:

A custom lightweight CNN is proposed to handle a complex multi-class problem.

A new pre-processing pipeline including different color spaces for fundus images is proposed to complement the CNN architecture, which lowers the burden on the CNN.

A new ensemble approach is presented to handle inter-class similarities in a robust manner.

Deep features are complemented with a random forest classifier to reduce trainable parameters.

This research article is organized as follows. Section 1 gives a brief introduction, followed by Section 2, which comprises a literature review highlighting the research gaps in this area. Section 3 explains the proposed methodology in detail, i.e., all three proposed frameworks along with the parameter details and classifier selection. Results, comparisons, and dataset details are elaborated in Section 4, followed by the conclusion in Section 5. Lastly, all of the cited references are listed.

2. Literature Review

Diabetic retinopathy has been one of the most widely studied areas in the development of computer-aided diagnostic systems over the last three decades. The developed techniques cover conventional image processing, machine learning, optimization, deep learning, and now explainable models. Wang Yu [6] proposed a framework that used advanced retinal image processing, deep learning, and a boosting algorithm for high-performance DR grading. The pre-processed images were passed through a convolutional neural network for feature extraction, and then a boosting tree algorithm was applied to make predictions. MK et al. [7] used a different approach that focused only on important features and exudates on the retina to predict the condition of the disease, since they play a significant role in detecting its severity. They used scale-invariant feature transform (SIFT) and speeded-up robust features (SURF) to extract the features of the exudate region, and then applied a support vector machine (SVM) for the detection of DR, achieving a sensitivity of 94%. P.W. et al. [8] worked on the basic CNN architecture of VGG and achieved an accuracy of 99.9% using the Messidor dataset and 98% using the Kaggle dataset. They classified input data into one of three classes, i.e., no DR, non-proliferative DR, and proliferative DR.

F. Alzami et al. [9] used fractal dimensions for retinopathy detection. Fractal dimensions are used to observe the vascular system of the retina; they detect not only the presence of DR but also the severity of the disease. The proposed algorithm, evaluated on the Messidor dataset using a random forest classifier, provided satisfactory results for the detection of DR, but the results were not very promising for classification. They further concluded that other features such as univariate, multivariate, etc., can be used for testing the proposed algorithm. Another proposed algorithm [10] used Inception-v3 for feature extraction by adaptively learning customized classification functions of Inception-v3. They used 90 retinal images for training the framework, followed by testing on 48 retinal images. A support vector machine (SVM) was used to classify these deep features. Results were classified into two classes only, i.e., normal and diabetic retinopathy images. D.K. Elswah et al. [11] first pre-processed the images using normalization and augmentation, followed by feature extraction using ResNet, and then classified the features into one of five classes: normal, mild, moderate, severe, and proliferative. They used the Indian Diabetic Retinopathy Image Dataset (IDRiD) for validation and testing.

In [12], Alexander Rakhlin presented his work on Diabetic Retinopathy Detection (DRD) by designing a deep learning framework. He trained and tested the proposed algorithm on the Kaggle dataset and achieved a sensitivity and specificity of 92% and 72%, respectively. The network was trained for binary classification and lacks the categorization of DR with respect to severity level. The architecture used in the project originated from the well-known VGG-Net family, winner of the ImageNet Challenge ILSVRC-2014, designed for large-scale natural image classification. As a pre-processing step, retinal images were normalized, scaled, centered, and cropped to 540 × 540 pixels. Pratt and Coenen [13] used a CNN approach to diagnose DR from digital fundus images and to classify its severity. They developed an architecture and applied pre-processing techniques such as data augmentation for non-biased identification of intricate features such as exudates, micro-aneurysms, and hemorrhages. They ran the architecture on a GPU and tested it on 80,000 images from the publicly available Kaggle dataset. They achieved a sensitivity of 95% and an accuracy of 75% on 5000 validation images for five-class classification of DR. Ratul and Kuntal [14] proposed a methodology using a 24-layered customized CNN architecture. In pre-processing, images were de-noised so that micro-aneurysms and hemorrhages become prominent on the retina. Images were resized, normalized, and de-noised using Non-Local Means De-noising (NLMD), and class imbalance was removed by over-sampling the data. Theano (Python) was used for development and a GPU for processing. The Kaggle dataset was used for training and testing. On the dataset of 30,000 images, an accuracy of 95% was achieved for two-class classification and 85% for five-class classification. Zhou worked to conserve the computational resources that are invariably in demand due to deep architectures and high-resolution images [15]. They used a multistage model in which the complexity of the architecture and the resolution of the images increase at every stage. Images were labeled at each stage, and the labels of different stages were related. On the Kaggle dataset, they achieved a kappa of 0.841. Romany merged two kinds of classifiers: he used a neural network for feature extraction and then performed classification on the obtained features using other algorithms [16]. Initially, image quality was improved with the help of histogram equalization. Before feature extraction, an adaptive-learning-based algorithm (Gaussian Mixture Model (GMM)) was used for region segmentation, followed by region of interest (ROI) localization using connected-component analysis. As a result, blood vessel extraction/segmentation was achieved. An AlexNet DNN was used to extract features, and a support vector machine (SVM) classifier was used for classification. Different types of features were extracted, including FC7 (features of the layer before classification in the CNN), linear discriminant analysis (LDA), and principal component analysis (PCA). The algorithm was evaluated on the Kaggle fundus image dataset, resulting in an accuracy of 97.93% using FC7 features. On the other hand, using PCA, it showed 95.26% accuracy. A comparative analysis using the Scale-Invariant Feature Transform (SIFT) algorithm showed an accuracy of 94.40%.

Guo et al. [17] used a CNN for the detection of exudates in fundus images. An auxiliary loss and a boosted training method were used for faster and improved CNN training. They used U-Net, a specialized architecture, for exudate and neural structure detection. They emphasized that images are often classified as normal because exudates are completely ignored owing to their small size. During pre-processing, Contrast Limited Adaptive Histogram Equalization (CLAHE) was applied to the green channel only, since it holds the most significant information among all planes. It enhanced the contrast between exudates and non-exudates. They proposed a boosted training method, which applied different weights to samples to vary their significance during training and used small patches as input instead of whole images. The learned weights were tested on their own dataset and three other publicly available datasets, obtaining accuracies of 0.98, 0.96, 0.94, and 0.91, respectively. In 2022, Nojood et al. [18] proposed a deep learning framework using Inception as a baseline model to grade diabetic retinopathy using fundus images. The proposed algorithm incorporated Moth Optimization to enhance its efficiency. To extract the ROI and lesions, histogram-based segmentation was applied, followed by deep feature extraction. The algorithm yielded an accuracy of 0.999 on the Messidor dataset.

Rahman et al. [19] proposed a deep learning framework to grade fundus images as mild, moderate, or severe based on the lesions and exudates. Fundus images were passed to DenseNet-169 after resizing, followed by data augmentation. The extracted deep features were used to classify the image into one of the four stages of diabetic retinopathy. Training and testing of the proposed framework were performed on Kaggle APTOS 2019. The proposed model was compared with the pre-trained models DenseNet-121 and ResNet-50 and achieved the highest accuracy of 96.54%. In 2022 [20], a novel deep learning-based algorithm was proposed to accurately classify a fundus image among different stages of diabetic retinopathy and Macular Edema using order one and two 2D Fourier–Bessel series expansion-based flexible analytic wavelet transforms. Local binary patterns and variance were extracted from the selected bands to represent the statistical properties of the extracted regions using a feature vector. Various classifiers, i.e., Random Forest, K-Nearest Neighbour, and Support Vector Machines, were used to test the accuracy patterns on Messidor and IDRiD [21], resulting in accuracies of 0.975 and 0.955, respectively. Mohamed et al. [22] devised an algorithm for diabetic retinopathy classification using DenseNet-169 as a baseline model followed by a CNN to enhance the classification capability of the model. The model was trained and tested on the APTOS dataset, yielding an accuracy of 97% for binary classification, i.e., healthy or diabetic retinopathy. The proposed framework lacks severity-based grading of diabetic retinopathy. Novel cross-disease diagnostic CNN frameworks [23,24] were proposed for diabetic retinopathy and Macular Edema using disease-specific and disease-dependent features. The IDRiD and Messidor datasets were used to evaluate the proposed algorithms, yielding accuracies of 92.6% and 92.9%, respectively. Wang et al. [25] proposed a cascaded two-phase framework. The first module of the proposed framework classifies an input fundus image as diseased or normal based on deep features. In the second phase, the diseased fundus image is further classified among four stages using a novel lesion attention module. The results of three baseline models, i.e., MobileNet, ResNet-50, and DenseNet-121, were evaluated using the DDR dataset. The highest accuracy of 80.29% was observed using the DenseNet-121 model. A CNN-based hybrid model [26] was proposed to categorize a retinal input image as healthy or non-healthy based on the lesions, exudates, etc. The proposed model utilized the ResNet and DenseNet architectures to learn multi-scale features and resolve the diminishing gradient problem. Model training and testing were done on a local dataset annotated by ophthalmologists, yielding an accuracy of 83.2%. Eman et al. [27] proposed an algorithm to detect various severity levels of diabetic retinopathy using a retinal image dataset. The proposed framework takes a retinal image and computes PCA for each channel, followed by classification of each channel using a neural network architecture. Final results were obtained by majority voting over the results of all three channels. The algorithm yielded an accuracy of 85% when tested on DRD (Kaggle Competition dataset) with retinal images belonging to all severity levels.

An ensemble-based machine learning algorithm proposed in 2021 [28] incorporated three different classifiers, i.e., Random Forest, SVM, and Neural Networks, followed by a meta classifier to reach a decision. The ensemble-based approach enhanced robustness and performance. The proposed algorithm was tested on the Messidor dataset and yielded an accuracy of 0.75. Another ensemble-based algorithm was proposed for diabetic retinopathy screening in 2021 [29]. A two-stage classifier was proposed, where the first stage comprised the outputs of six classifiers, i.e., SVM, KNN, Multilayer Perceptron, Naive Bayes, Decision Trees, and Logistic Regression, followed by the second stage, i.e., a neural network, which utilized the outputs of these classifiers to reach the final decision. The proposed algorithm resulted in a test accuracy of 76.40% when tested on the Messidor dataset. Another ensemble-based deep neural network architecture was proposed using ResNet in 2020 [30]. The proposed algorithm utilized four ResNets as binary classifiers over the five classes of DR, i.e., normal vs. mild DR, normal vs. moderate DR, normal vs. severe DR, and normal vs. proliferative DR. The results of each stage-1 classifier were then processed by an AdaBoost classifier in stage 2 to reach the final classification results. The algorithm was evaluated on the 3662 retinal images of the Kaggle APTOS dataset, resulting in an accuracy of 61.9%.

In a comprehensive review of state-of-the-art algorithms, we have identified various research gaps in autonomous diabetic retinopathy detection systems. The majority of the algorithms yielding good results address binary classification, i.e., healthy vs. non-healthy images, or three-class classification, and thus might result in the incorrect classification of severe cases, which might lead to permanent loss of sight. Keeping this in view, and because data distortions make it difficult to deal with all five classes at the same time, we tried to resolve the matter of class imbalance with our different frameworks. Finding the appropriate set of pre-processing steps to produce images that provide the best possible results is also one of the milestones achieved in this research. Focusing on the features of interest and enhancing the main features required for the targeted problem can help improve the results.

3. Methodology

The proposed architecture comprises three phases, i.e., image pre-processing, feature extraction, and classification. We have used the Kaggle dataset to train and test the proposed frameworks. The flow chart of the proposed work is given in Figure 1. To extract deep features, we trained deep convolutional networks. Heat maps extracted from the proposed framework highlight the presence of any exudates, microaneurysms, hemorrhages, cotton wool spots, or newly formed vessels, which indicates that features are extracted from the affected region, thus yielding high accuracy. The deep CNN is capable of taking unknown images as input and extracting problem-specific features, thereby generating an appropriate response. By improving its feature extraction during every iteration of backpropagation, the CNN converges closer to the optimum solution for the specific problem under consideration. Based on the decision of the CNN, the parameter weights are updated in case of false positives or retained in case of true positives. Invariably, the working capabilities of a CNN depend on the quality and nature of the images; therefore, we resorted to pre-processing instead of feeding the system with raw images. During pre-processing, we enhanced the minute details in order to make exudates and micro-aneurysms more prominent. We further carried out light equalization, as the images were taken in light conditions of varying intensities. To conserve computational resources, images were resized and the background was removed. The pre-processed images were then forwarded to our deep CNN architecture, which extracted the best-defined features to signify the objects of interest within the images. These collected features are fed to a classifier for DR classification into normal, mild, moderate, severe, or proliferative. As highlighted in Figure 1, we have proposed three different CNN-based frameworks to categorize an image among five classes. Lastly, the accuracy of all the frameworks has been compared to analyze the performance. Below are the details of each of the proposed CNN-based frameworks.

Framework 1 used a basic CNN architecture for feature extraction; these deep features are defined to represent our objects of interest within the images and are classified using a conventional Random Forest classifier [31], which showed the best accuracy among the classifiers tested. On top of this, we designed a cascaded architecture in which multiple cascaded layers are used to classify an image into one of the five classes.

Framework 2 applied multiple pre-processing techniques to the dataset to compare their results for five-class classification and created an improved ensemble result from them. We applied the CNN architecture of Framework 1 to normalized, RGB, and HSV images, categorized as CNN-1, CNN-2, and CNN-3, respectively.

Framework 3 classified images by creating a series of patches (9 patches from each image), and classification was done using LSTM-based CNN Architecture.
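As a rough illustration of the patch-based input used by Framework 3, the sketch below splits an image into nine patches. The 3 × 3 non-overlapping grid, the row-major ordering, the cropping of leftover pixels, and the function name `split_into_patches` are all assumptions; the text only states that nine patches are taken from each image.

```python
import numpy as np


def split_into_patches(image, grid=3):
    """Split an H x W x C image into grid*grid non-overlapping patches.

    Rows/columns that do not divide evenly are cropped (an assumption).
    Returns an array of shape (grid*grid, ph, pw, C) in row-major order.
    """
    h, w = image.shape[:2]
    ph, pw = h // grid, w // grid
    patches = [
        image[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw]
        for r in range(grid)
        for c in range(grid)
    ]
    return np.stack(patches)


image = np.zeros((256, 256, 3), dtype=np.uint8)
patches = split_into_patches(image)
print(patches.shape)  # (9, 85, 85, 3)
```

The resulting sequence of nine patches is the kind of ordered input that a recurrent model such as an LSTM can consume one element at a time.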

3.1. Stage 1: Pre-Processing

The first stage of the proposed architecture is the pre-processing phase. The input images are not standardized, as they have an unwanted black background, noise, different aspect ratios, varying light conditions, different color averages, and imbalanced classes. The algorithm shown in Figure 2 has been designed to pre-process the raw images and make them suitable for the CNN. The steps included:

Data Augmentation. In order to solve the issue of class imbalance, we applied data augmentation to the dataset. A huge class imbalance existed in the dataset, with class 0 representing more than 50% of it; the percentage of the dataset represented by each class is shown in Table 1. There was a great possibility of over-fitting: as the system sees normal-class images far more often than any of the other four classes, the trained parameters will ultimately learn to lean towards the normal class. We over-sampled the less dominant classes by generating new images from the existing ones with a few details changed. We applied the following augmentations (transformations) during our research:

- (a) Flipping to 90 degrees.
- (b) Rotating images by [0, 180] degrees with a step size of 15 degrees.
- (c) Translating (on scale x and y dimensions).

To up-sample Class 1 and Class 2 samples, the flip transformation was applied. Since classes 3 and 4 differ from the rest of the classes by a large percentage, both were up-sampled by applying all of the transformation steps, i.e., flip, rotation, and translation, to reduce the skew in the output of the trained model. The class-wise data distribution before and after augmentation is shown in Table 1.
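The augmentation policy described above can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the flip is taken to be a left-right mirror, rotation uses nearest-neighbour sampling about the image centre, the translation offset of 10 pixels is arbitrary, and the helper names (`flip`, `rotate`, `translate`, `augment`) are hypothetical rather than taken from the authors' code.

```python
import numpy as np


def flip(img):
    """Mirror the image left-to-right (assumed flip direction)."""
    return img[:, ::-1].copy()


def translate(img, dx, dy):
    """Shift by (dx, dy) pixels, filling uncovered pixels with zeros."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    src = img[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    out[max(0, dy):max(0, dy) + src.shape[0],
        max(0, dx):max(0, dx) + src.shape[1]] = src
    return out


def rotate(img, degrees):
    """Rotate about the image centre using nearest-neighbour sampling."""
    h, w = img.shape[:2]
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for each output pixel, locate its source pixel.
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    xi = np.rint(src_x).astype(int)
    yi = np.rint(src_y).astype(int)
    valid = (xi >= 0) & (xi < w) & (yi >= 0) & (yi < h)
    out = np.zeros_like(img)
    out[valid] = img[yi[valid], xi[valid]]
    return out


def augment(img, full=False):
    """Flip only (classes 1-2) or flip + rotations + translation (classes 3-4)."""
    samples = [flip(img)]
    if full:
        # 15..180 degrees in steps of 15; 0 degrees would duplicate the input.
        samples += [rotate(img, angle) for angle in range(15, 181, 15)]
        samples.append(translate(img, dx=10, dy=10))  # offset is an assumption
    return samples
```

With these choices, a class 1 or class 2 image yields one extra sample, while a class 3 or class 4 image yields fourteen.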

CLAHE. We used the Contrast Limited Adaptive Histogram Equalization (CLAHE) technique for image enhancement. It provides a clear and detailed contrast improvement by equalizing lighting effects. The enhancement results are remarkable even for low-contrast images (e.g., underwater images), as is evident in Figure 3. It can be seen that applying CLAHE to retinal images has enhanced the visibility of minute details. The working of CLAHE is as follows:

- (a) Step 1: Division of the image into small equal-sized partitions.
- (b) Step 2: Performing histogram equalization on every partition.
- (c) Step 3: Limiting the amplification by clipping the histogram at some specific value.
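The three steps above can be sketched in NumPy as shown below. This is a deliberately simplified version: it equalizes each tile independently and omits the bilinear interpolation between neighbouring tiles that full CLAHE implementations (such as the one in OpenCV) use to hide tile borders. The tile grid, the clip limit, and the function names are assumptions chosen for illustration.

```python
import numpy as np


def clipped_equalize_tile(tile, clip_limit, n_bins=256):
    """Equalize one uint8 tile with a clipped histogram (Steps 2 and 3)."""
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(np.float64)
    # Clip limit expressed as a multiple of the mean bin height.
    limit = max(1.0, clip_limit * tile.size / n_bins)
    excess = np.sum(np.maximum(hist - limit, 0.0))
    # Redistribute the clipped excess uniformly over all bins.
    hist = np.minimum(hist, limit) + excess / n_bins
    cdf = np.cumsum(hist)
    cdf /= cdf[-1]
    lut = np.round(cdf * (n_bins - 1)).astype(np.uint8)
    return lut[tile]


def simple_clahe(gray, tiles=(8, 8), clip_limit=2.0):
    """Tile-wise clipped histogram equalization of a uint8 grayscale image."""
    out = np.empty_like(gray)
    h, w = gray.shape
    ys = np.linspace(0, h, tiles[0] + 1, dtype=int)  # Step 1: partition rows
    xs = np.linspace(0, w, tiles[1] + 1, dtype=int)  # Step 1: partition columns
    for i in range(tiles[0]):
        for j in range(tiles[1]):
            region = (slice(ys[i], ys[i + 1]), slice(xs[j], xs[j + 1]))
            out[region] = clipped_equalize_tile(gray[region], clip_limit)
    return out
```

For a colour fundus image, one option is to apply such a routine to a single channel (for instance a luminance channel) and merge the result back; which channel the authors used is not stated here.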

Re-scaling. We have then re-scaled the images by standardizing the size of the optic region to have a common radius (300 pixels or 500 pixels).

Background Removal, Colour Normalization and Gray Scale Mapping. We then removed the background, keeping a specific radius to focus on the ROI, subtracted the local average color, thereby suppressing the unwanted details, and mapped the local average to 50% gray.

Resizing. Finally, we resized the image to 256 × 256 according to the requirement of CNN.
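The background removal and colour normalization steps can be sketched as follows. This is only an approximation under stated assumptions: a box filter stands in for whatever smoothing the authors used to estimate the local average colour, and the window size `k`, the contrast `gain`, and the mask radius fraction are illustrative values, not parameters from the paper.

```python
import numpy as np


def box_blur(channel, k):
    """Local average with a k x k box filter (separable, zero-padded)."""
    kernel = np.ones(k) / k
    rows = np.apply_along_axis(np.convolve, 1, channel, kernel, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="same")


def normalize_fundus(img, k=31, gain=4.0, radius_frac=0.9):
    """Subtract the local average colour, map it to 50% gray, mask background."""
    h, w = img.shape[:2]
    img = img.astype(np.float64)
    local_avg = np.stack(
        [box_blur(img[..., c], k) for c in range(img.shape[2])], axis=-1
    )
    # Pixels equal to their local average land on mid-gray (128).
    out = gain * (img - local_avg) + 128.0
    out = np.clip(np.rint(out), 0, 255).astype(np.uint8)
    # Keep only a centred disc of the given radius; zero out everything else.
    ys, xs = np.mgrid[0:h, 0:w]
    radius = radius_frac * min(h, w) / 2.0
    mask = (ys - (h - 1) / 2.0) ** 2 + (xs - (w - 1) / 2.0) ** 2 <= radius ** 2
    out[~mask] = 0
    return out
```

Because flat regions collapse to mid-gray, only local deviations such as lesions and vessels retain contrast, which matches the stated goal of suppressing unwanted details.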

3.2. Stage 2: CNN Architecture for Deep Feature Extraction

Accuracy can be improved by adding architectural layers only up to a certain depth, after which it drops due to inherent factors such as over-fitting. The CNN proposed in our research comprises the following layers: input map, convolution layer, activation layer, and max-pooling layer. The parameters of the CNN, i.e., the layers, the number of feature maps in each layer (activation shape), the number of features in each layer (activation size), and the weights (parameters to be trained), are shown in Table 2. The architectural diagram of the custom CNN is shown in Figure 4.

The detailed architecture of the layers is as follows:

Convolution. This layer has a set of filters (kernels); our first layer has eight filters. Each filter must have the same depth as its input; since the first-layer input image is RGB with depth 3, we have eight filters of depth 3. The input image is convolved with each filter, so the number of feature maps produced equals the number of filters, e.g., a first layer with 8 filters produces 8 feature maps. For an image of size $n \times n$ and a filter of size $f \times f$, each feature map will have size (with 2 × 2 zero padding, i.e., $p = 2$, and stride $s = 1$):

$$\left(\frac{n + 2p - f}{s} + 1\right) \times \left(\frac{n + 2p - f}{s} + 1\right)$$

For 8 filters of size $f \times f \times 3$, the trainable parameters to collect features from the image will be:

$$(f \times f \times 3 + 1) \times 8$$

where 1 is added for bias.
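As a worked example of the feature-map size and parameter count for a convolution layer, the helper functions below evaluate the standard formulas. The 3 × 3 filter size used in the example calls is an assumption made purely for illustration, since the filter size is not recoverable from the text; the padding of 2, the stride of 1, the eight filters, and the input depth of 3 follow the description above.

```python
def conv_output_size(n, f, padding=0, stride=1):
    """Spatial size of a feature map for an n x n input and f x f filter."""
    return (n + 2 * padding - f) // stride + 1


def conv_trainable_params(f, in_depth, n_filters):
    """Weights per filter (f * f * in_depth) plus one bias, times the filters."""
    return (f * f * in_depth + 1) * n_filters


# 256 x 256 RGB input, eight filters; the 3 x 3 filter size is hypothetical.
print(conv_output_size(256, 3, padding=2, stride=1))  # 258
print(conv_trainable_params(3, 3, 8))                 # 224
```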

MaxPooling Layer. Max pooling is used to reduce the size (down sample) of the coming feature maps intelligently so that most of the information remains intact. With the reduced dimensions, parameters are also reduced as we move forward in the network. Therefore, it reduces the chances of over-fitting and becomes an efficient system with less computational cost. We used a kernel of size 2 × 2 in each max-pooling layer, and it selects the maximum number from 2 × 2 frame and the frame moves with a 2 × 2 stride. This layer has no parameters that are to be trained.
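The 2 × 2 max pooling with a 2 × 2 stride described above can be illustrated with a short NumPy sketch. The function name `max_pool_2x2` and the choice to drop a trailing odd row or column are assumptions for the example.

```python
import numpy as np


def max_pool_2x2(fmap):
    """2 x 2 max pooling with stride 2 on an H x W (x C) feature map.

    A trailing odd row or column is dropped (an assumption).
    """
    h, w = fmap.shape[:2]
    h2, w2 = h // 2, w // 2
    trimmed = fmap[:h2 * 2, :w2 * 2]
    # Group pixels into non-overlapping 2 x 2 blocks, then reduce each block.
    blocks = trimmed.reshape(h2, 2, w2, 2, *fmap.shape[2:])
    return blocks.max(axis=(1, 3))


fmap = np.array([[1, 2, 5, 6],
                 [3, 4, 7, 8],
                 [9, 10, 13, 14],
                 [11, 12, 15, 16]])
print(max_pool_2x2(fmap))  # [[ 4  8] [12 16]]
```

Each output value summarizes four inputs, so the spatial size halves in both dimensions while no trainable parameters are introduced.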

Activation Layer. In order to make an effective system for complicated problem solving, an activation function has been added. We used a rectified linear unit (ReLU) after every convolutional and Fully Connected layer since it resulted in the highest accuracy among other activation functions and prevented the vanishing gradient problem effectively. We have designed ReLU within the convolution layer in our architecture, and it has improved the accuracy of classification.

Dropout Layer. We have avoided over-fitting by using a dropout layer, as it prevents the network from depending on any single node. Each node is assigned a probability that decides whether its value is kept or dropped during training.

Fully Connected (FC) Layer. After passing through many convolution, activation, and max-pooling layers, the most important features are concentrated. Feature maps of the last layer are then flattened, enabling them to be fed into the FC layer, where every feature value is connected to every neuron. We have used an FC layer consisting of 2048 neurons. For $N$ flattened input features, the number of parameters to be trained for this layer is:

$$(N + 1) \times 2048$$

where 1 is added for bias.

Feature Vector. After this FC layer, we collected these 2048 features of every image as labelled feature vectors for subsequent training of our classifier.

3.3. Stage 3: Classification Using Classifiers

We have tested our system with various classifiers, i.e., Naive Bayes, Logistic Regression, Simple Logistic, SVM, 3NN, Bagging, and Random Forest. Among all, Random Forest Tree (RFT) has proven to be the most suitable classifier for grading of DR. As suggested by its name, RFT is built on the idea of decision trees, in which a decision is made at every node for an unknown sample. The questions asked at the nodes should discriminate among the various classes. At every stage, data is routed towards its destined end-node (related class) based on the decisions.

3.4. Framework 1: Cascaded Classifier

Instead of designing one classifier for five classes, we have designed a cascaded classifier using four binary classifiers, since cascaded classifiers are computationally less expensive and require less training time [32,33]. Our Cascaded Classifier Network (CCN) is a novel deep learning architecture comprising multiple CNNs merged with classifiers, each predicting a specific feature-set to further divide the data. The division process continues until we obtain leaf nodes equal to the number of classes. Our cascaded classifier is shown in Figure 5. This design allowed us to train all the neural networks simultaneously. We were able to reduce the possibility of error and over-fitting due to the binary nature of the proposed classifier.

A coloured fundus image (256 × 256 × 3) is fed to the CCN as input.

The output map is sequentially forwarded from the previous one to the next four classifiers within the CCN.

The new test color fundus image moves node-wise and ultimately reaches one of the five leaf nodes (terminal nodes) for final decision-making.

Finally, it is classified into one of the five classes: Normal, Mild, Moderate, Severe, and Proliferative.

Cascaded Classifier Architecture

Level 0. The unknown test image is partially classified at the first stage. In Classifier 0, the image goes to class 0, representing Normal and Mild Classes, or Class1, which characterizes Moderate, Severe, and Proliferative stages of DR.

Level 1. At level 1, Classifier 1 further classifies class 0 from level 0 by labelling the images as Normal (Label 0) or Mild (Label 1).

Level 2. Using Classifier-2, class-1 can further be divided into two classes where class-0 is Label 2 (Moderate), and class 1 is Label 3 (Severe) and Label4 (Proliferative). At this level, we have acquired end nodes for the classes Normal, Mild and Moderate; however, Class 1 output from Classifier 2 needs further classification.

Level 3. During this stage, Class 1 output from Classifier 2 is further segregated and labeled as Severe (Label3) or Proliferative (Label4).

Level 4. Finally, we were able to acquire five separate classes as leaf nodes with subsequent labels as Label 0 (Normal), Label 1 (Mild), Label 2 (Moderate), Label 3 (Severe) and Label 4 (Proliferative). In our design, by making use of the simultaneous training technique, we were able to save the time originally required in case of independent classifier training.
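The routing logic of the cascade described in Levels 0 to 3 can be sketched as below. The four classifiers are represented as plain callables returning 0 or 1; in the paper each is a CNN merged with a classifier, so the stub classifiers in the usage example are purely hypothetical stand-ins used to exercise the decision path.

```python
LABELS = ["Normal", "Mild", "Moderate", "Severe", "Proliferative"]


def cascade_predict(features, clf0, clf1, clf2, clf3):
    """Route one sample through four binary classifiers to a label in 0..4."""
    if clf0(features) == 0:
        # Level 0 chose {Normal, Mild}; Level 1 separates the two.
        return 0 if clf1(features) == 0 else 1
    if clf2(features) == 0:
        # Level 2 separates Moderate from {Severe, Proliferative}.
        return 2
    # Level 3 separates Severe from Proliferative.
    return 3 if clf3(features) == 0 else 4


# Hypothetical stubs: the "features" here are just a severity score in 0..4.
stub0 = lambda s: int(s >= 2)
stub1 = lambda s: int(s >= 1)
stub2 = lambda s: int(s >= 3)
stub3 = lambda s: int(s >= 4)

predictions = [cascade_predict(s, stub0, stub1, stub2, stub3) for s in range(5)]
print([LABELS[p] for p in predictions])
# ['Normal', 'Mild', 'Moderate', 'Severe', 'Proliferative']
```

Any sample passes through at most three of the four classifiers, which is one way the cascade keeps inference cost low.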

3.5. Framework 2: Ensembled System Design

In the ensembled system, we combined different systems to get our final results. We used our CNN Architecture for the classification of three different types of pre-processed datasets, i.e., Normalized images, RGB images, and HSV images, categorized as CNN-1, CNN-2, and CNN-3, respectively.

Normalized (CNN-1): We pre-processed the images in order to suppress unwanted information. This dataset is pre-processed with the same algorithm as used in Framework 1.

RGB (CNN-2): It is additive colour representation of images. Every pixel in the image represents the saved information in the terms of color. The intensity value of the three primary colors, red, green, and blue, combined shows one color. The name RGB is also derived from the initials of the three primary colours.

HSV (CNN-3): It is a representation of an image in terms of hue, saturation, and value, with one channel for each. Hue is an angle on the color wheel; it gives the color. Saturation is a measure of how pure or vivid that color is; it is a point on the radius at the angle represented by hue. Value is a measure of the brightness or intensity of the color; it works together with saturation.

The results of these three systems were observed separately, and ensemble results were also computed in two different ways for improved accuracy, as shown in Figure 6.
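Two common ways of combining the outputs of CNN-1, CNN-2, and CNN-3 are sketched below: majority voting over predicted labels and averaging of class probabilities. These are assumptions for illustration; the text does not specify which two ensembling schemes were used, and the function names are hypothetical.

```python
import numpy as np

N_CLASSES = 5  # Normal, Mild, Moderate, Severe, Proliferative


def majority_vote(pred_labels):
    """Combine hard labels of shape (n_models, n_samples) by majority vote.

    Ties are resolved in favour of the lowest class index.
    """
    pred_labels = np.asarray(pred_labels)
    counts = np.stack(
        [(pred_labels == c).sum(axis=0) for c in range(N_CLASSES)]
    )
    return counts.argmax(axis=0)


def average_probabilities(probs):
    """Combine soft outputs of shape (n_models, n_samples, n_classes)."""
    return np.mean(probs, axis=0).argmax(axis=1)


labels = [[0, 1, 4],   # CNN-1 predictions for three images
          [0, 2, 4],   # CNN-2
          [1, 2, 3]]   # CNN-3
print(majority_vote(labels))  # [0 2 4]
```

Probability averaging lets a confident model outweigh two uncertain ones, whereas majority voting treats every model's decision equally.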

3.6. Framework 3: LSTM CNN

Long Short-Term Memory networks, usually just called LSTMs, are a special kind of RNN capable of learning long-term dependencies. LSTMs are explicitly designed to avoid the long-term dependency problem. Remembering information for long periods of time is practically their default behavior, not something they struggle to learn. The learning mechanism of an LSTM is shown in Figure 7, and the working steps of an LSTM architecture are as follows:

The first step in our LSTM [34] is to decide what information we are going to throw away from the cell state. This decision is made by a sigmoid layer called the forget gate layer. It looks at $h_{t-1}$ and $x_t$, and outputs a number between 0 and 1 for each number in the cell state $C_{t-1}$. A ‘1’ represents completely keep this, while a ‘0’ represents completely get rid of this.

The next step is to decide what new information we are going to store in the cell state. It has two parts.

First, a sigmoid layer, called the input gate layer, decides which values we will update.

Next, a tanh layer creates a vector of new candidate values, $\tilde{C}_t$, that could be added to the state. In the next step, we will combine these two to create an update to the state.

It’s now time to update the old cell state, $C_{t-1}$, into the new cell state $C_t$. The previous steps have already reached a decision; we just need to propagate it. We multiply the old state by $f_t$, forgetting the things we decided to forget earlier. Then, we add $i_t * \tilde{C}_t$ to it. This is the new candidate value, scaled by how much we decided to update each state value.

Finally, we need to decide what we are propagating to the output. This output will be based on our cell state, but will be a filtered version. First, we run a sigmoid layer, which decides what parts of the cell state we are passing to the output. Then, we put the cell state through tanh (to push the values to be between −1 and 1) and multiply it by the output of the sigmoid gate, so that we only output the parts we decided to propagate.
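The gate-by-gate steps above correspond to a single LSTM time step, which can be sketched in NumPy as follows. This is the standard LSTM cell formulation, not the authors' implementation; stacking the four gates into one weight matrix `W` and the ordering of the gates within it are layout assumptions made for compactness.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4 * hidden, hidden + input) and b has shape (4 * hidden,);
    rows are ordered as forget, input, candidate, output (assumed layout).
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f_t = sigmoid(z[0:hidden])                    # forget gate
    i_t = sigmoid(z[hidden:2 * hidden])           # input gate
    c_tilde = np.tanh(z[2 * hidden:3 * hidden])   # candidate values
    o_t = sigmoid(z[3 * hidden:4 * hidden])       # output gate
    c_t = f_t * c_prev + i_t * c_tilde            # updated cell state
    h_t = o_t * np.tanh(c_t)                      # filtered output
    return h_t, c_t


rng = np.random.default_rng(0)
hidden, n_in = 4, 3
W = rng.normal(size=(4 * hidden, hidden + n_in))
b = np.zeros(4 * hidden)
h, c = lstm_step(rng.normal(size=n_in), np.zeros(hidden), np.zeros(hidden), W, b)
print(h.shape, c.shape)  # (4,) (4,)
```

In a patch-based setting, `x_t` would be the feature vector of the t-th patch, and the final `h_t` after the last patch would summarize the whole image.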